Gradient Vanishing Problem in Deep Learning

在所有依靠Gradient Descent和Backpropagation算法来学习的Neural Network中,普遍都会存在Gradient Vanishing Problem。Backpropagation的运作过程是,根据Cost Function进行反向传播,利用Chain Rule去计算n层之前某一weight上的梯度,从而更新该weight。而事实上,在网络层次较深的情况下,我们获得的weight梯度,随着反向传播层次的深入,会呈现越来越小的状态。从而,在靠近输出端的Layers中,weight可以被很好的更新,因为可以获得不错的gradient,而在靠近输入端的Layers中,weight则更新缓慢。

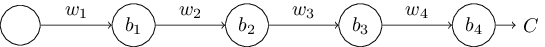

举个最简单的例子,来说明该问题。如下的神经网络有四层,每层有一个node:

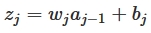

我们可知w是weight,b是bias,每一层的节点输入是z,输出是a,activation function是a=σ(z),我们可以得出:

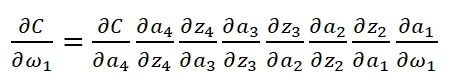

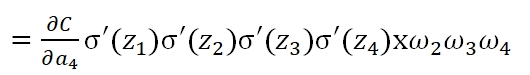

当我们已知Cost Function时,我们利用Backpropagation计算weight:

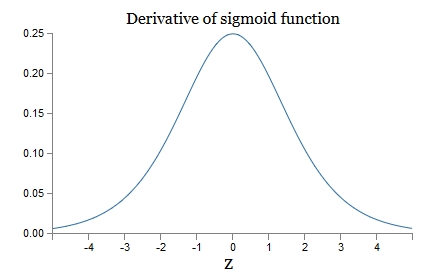

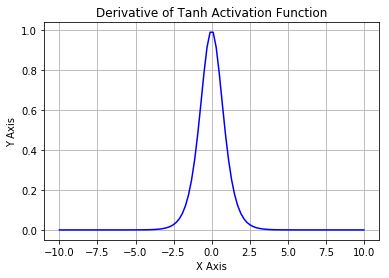

可以看到,第一层的weight梯度,依赖于之后各层activation function的一阶导数之积。而对于Machine Learning中常用的Sigmoid及tanh激励函数,其derivative图像如下:

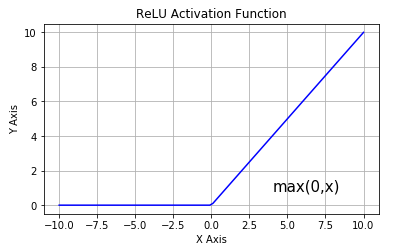

Sigmoid的derivative是[0,0.25]的,而tanh的derivative是[0,1]的。通过上式,我们看出,通过Backpropagation求梯度时,每往回传播一层,就要多乘以一项δ‘(z),也就是说,随着向回传递的深入,梯度会呈指数级的衰减,直至缩减到0,导致前层的权重无法更新。tanh要略好于sigmoid,但依然难以解决Gradient Vanishing的问题。所以Relu Function应运而生,并且在Deep Learning方面取得了巨大成功。Relu的表达式及图形如下:

其当x>0时,derivative是1,小于0时,derivative为0。该函数很好的解决了Gradient Vanishing Problem,在大多数情况下,我们构建Deep Learning时可以使用Relu作为默认的Activation Function。

Gradient Vanishing Problem in Deep Learning的更多相关文章

- (转)WHY DEEP LEARNING IS SUDDENLY CHANGING YOUR LIFE

Main Menu Fortune.com E-mail Tweet Facebook Linkedin Share icons By Roger Parloff Illustration ...

- Growing Pains for Deep Learning

Growing Pains for Deep Learning Advances in theory and computer hardware have allowed neural network ...

- Deep Learning Libraries by Language

Deep Learning Libraries by Language Tweet Python Theano is a python library for defining and ...

- Deep learning with Python

一.导论 1.1 人工智能.机器学习.深度学习 人工智能.机器学习 人工智能:1980年代达到高峰的是专家系统,符号AI是之前的,但不能解决模糊.复杂的问题. 机器学习是把数据.答案做输入,规则作输出 ...

- This instability is a fundamental problem for gradient-based learning in deep neural networks. vanishing exploding gradient problem

The unstable gradient problem: The fundamental problem here isn't so much the vanishing gradient pro ...

- Coursera Deep Learning 2 Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization - week1, Assignment(Gradient Checking)

声明:所有内容来自coursera,作为个人学习笔记记录在这里. Gradient Checking Welcome to the final assignment for this week! In ...

- 课程二(Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization),第一周(Practical aspects of Deep Learning) —— 4.Programming assignments:Gradient Checking

Gradient Checking Welcome to this week's third programming assignment! You will be implementing grad ...

- Deep Learning专栏--强化学习之从 Policy Gradient 到 A3C(3)

在之前的强化学习文章里,我们讲到了经典的MDP模型来描述强化学习,其解法包括value iteration和policy iteration,这类经典解法基于已知的转移概率矩阵P,而在实际应用中,我们 ...

- Deep Learning in a Nutshell: History and Training

Deep Learning in a Nutshell: History and Training This series of blog posts aims to provide an intui ...

随机推荐

- selenium安装及环境搭建

说明:安装selenium前提必须是安装好了python和pip 1.安装python 在Python的官网 www.python.org 中找到最新版本的Python安装包(我的电脑是windows ...

- HDU 1024 Max Sum Plus Plus (递推)

Max Sum Plus Plus Time Limit: 2000/1000 MS (Java/Others) Memory Limit: 65536/32768 K (Java/Others ...

- html5实现拖拽上传

<html><head> <meta http-equiv="Content-Type" content="text/html; chars ...

- NancyFx 2.0的开源框架的使用-ConstraintRouting

新建一个空的Web项目 然后在Nuget库中安装下面两个包 Nancy Nancy.Hosting.Aspnet 然后在根目录添加三个文件夹,分别是models,Module,Views 然后往Mod ...

- MySQL第五天——日志

日志 log_error(错误日志) 用于记录 MySQL 运行过程中的错误信息,如,无法加载 MySQL数据库的数据文件,或权限不正确等都会被记录在此. 默认情况下,错误日志是开启的,且无法禁止. ...

- openstack stein部署手册 6. nova-api

# 建立数据库用户及权限 create database nova; grant all privileges on nova.* to nova@'localhost' identified by ...

- Sql 使用游标

DECLARE data_cursor CURSOR FOR WITH T0 AS ( SELECT COUNT(f.DeptID) SubmitCount , f.DeptID FROM biz.F ...

- final、finally、 finalize区别

原创转载请注明出处:https://www.cnblogs.com/agilestyle/p/11444366.html final final可以用来修饰类.方法.变量,分别有不同的意义,final ...

- element-ui 里面el-checkbox多选框,实现全选单选

data里面定义了 data:[], actionids:[],//选择的那个actionid num1:0,//没选择的计数 num2:0,//选中的计数 ...

- Proto3语法翻译

本文主要对proto3语法翻译.参考网址:https://developers.google.com/protocol-buffers/docs/proto3 defining a message t ...