DCGAN Generative Adversarial Network: a Keras Implementation

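This post reproduces DCGAN on the CIFAR-10 image dataset. The discriminator uses spectral normalization and is trained with a Wasserstein-style loss.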

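The spectrally normalized layers come from the SpectralNormalizationKeras library, which needs to be added to your Python environment (for example, save the code below as `SpectralNormalizationKeras.py` in a directory on your import path). The code is as follows: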

```python
# SpectralNormalizationKeras.py
# Spectrally normalized versions of common Keras layers
# (standalone Keras 2.x with the TensorFlow backend, not tf.keras).
import tensorflow as tf
from keras import activations, constraints, initializers, regularizers
from keras import backend as K
from keras.engine import InputSpec, Layer
from keras.layers import Conv1D, Conv2D, Conv2DTranspose, Conv3D, Dense, Embedding
from keras.utils import conv_utils


def _l2normalize(v, eps=1e-12):
    # v / ||v||, with eps to avoid division by zero
    return v / (K.sum(v ** 2) ** 0.5 + eps)


def _spectral_normalize(W, u, training=None):
    """Divide W by an estimate of its largest singular value (spectral norm).

    W is flattened to a matrix of shape (-1, W.shape[-1]); u is the layer's
    non-trainable vector of shape (1, W.shape[-1]). According to the paper,
    one power-iteration step per forward pass is enough.
    """
    W_shape = W.shape.as_list()
    # Flatten the tensor
    W_reshaped = K.reshape(W, [-1, W_shape[-1]])
    # One power-iteration step
    _v = _l2normalize(K.dot(u, K.transpose(W_reshaped)))
    _u = _l2normalize(K.dot(_v, W_reshaped))
    # Calculate sigma = v W u^T
    sigma = K.dot(K.dot(_v, W_reshaped), K.transpose(_u))
    # Normalize it and reshape back to the kernel shape
    W_bar = W_reshaped / sigma
    if training in {0, False}:
        W_bar = K.reshape(W_bar, W_shape)
    else:
        # Store the updated u before the normalized kernel is used
        with tf.control_dependencies([u.assign(_u)]):
            W_bar = K.reshape(W_bar, W_shape)
    return W_bar


def _add_u(layer, W):
    # Non-trainable power-iteration vector, one entry per column of the flattened W
    return layer.add_weight(shape=(1, W.shape.as_list()[-1]),
                            initializer=initializers.RandomNormal(0, 1),
                            name='sn',
                            trainable=False)


class DenseSN(Dense):
    def build(self, input_shape):
        # Creates kernel, bias and input_spec exactly as Dense.build does
        super(DenseSN, self).build(input_shape)
        self.u = _add_u(self, self.kernel)

    def call(self, inputs, training=None):
        W_bar = _spectral_normalize(self.kernel, self.u, training)
        output = K.dot(inputs, W_bar)
        if self.use_bias:
            output = K.bias_add(output, self.bias, data_format='channels_last')
        if self.activation is not None:
            output = self.activation(output)
        return output


class _ConvSN(Layer):
    """Generic convolution of rank 1, 2 or 3 with optional spectral normalization."""

    def __init__(self, rank, filters, kernel_size, strides=1, padding='valid',
                 data_format=None, dilation_rate=1, activation=None, use_bias=True,
                 kernel_initializer='glorot_uniform', bias_initializer='zeros',
                 kernel_regularizer=None, bias_regularizer=None,
                 activity_regularizer=None, kernel_constraint=None,
                 bias_constraint=None, spectral_normalization=True, **kwargs):
        super(_ConvSN, self).__init__(**kwargs)
        self.rank = rank
        self.filters = filters
        self.kernel_size = conv_utils.normalize_tuple(kernel_size, rank, 'kernel_size')
        self.strides = conv_utils.normalize_tuple(strides, rank, 'strides')
        self.padding = conv_utils.normalize_padding(padding)
        self.data_format = conv_utils.normalize_data_format(data_format)
        self.dilation_rate = conv_utils.normalize_tuple(dilation_rate, rank, 'dilation_rate')
        self.activation = activations.get(activation)
        self.use_bias = use_bias
        self.kernel_initializer = initializers.get(kernel_initializer)
        self.bias_initializer = initializers.get(bias_initializer)
        self.kernel_regularizer = regularizers.get(kernel_regularizer)
        self.bias_regularizer = regularizers.get(bias_regularizer)
        self.activity_regularizer = regularizers.get(activity_regularizer)
        self.kernel_constraint = constraints.get(kernel_constraint)
        self.bias_constraint = constraints.get(bias_constraint)
        self.input_spec = InputSpec(ndim=self.rank + 2)
        self.spectral_normalization = spectral_normalization
        self.u = None

    def build(self, input_shape):
        channel_axis = 1 if self.data_format == 'channels_first' else -1
        if input_shape[channel_axis] is None:
            raise ValueError('The channel dimension of the inputs '
                             'should be defined. Found `None`.')
        input_dim = input_shape[channel_axis]
        kernel_shape = self.kernel_size + (input_dim, self.filters)
        self.kernel = self.add_weight(shape=kernel_shape,
                                      initializer=self.kernel_initializer,
                                      name='kernel',
                                      regularizer=self.kernel_regularizer,
                                      constraint=self.kernel_constraint)
        # Spectral normalization
        if self.spectral_normalization:
            self.u = _add_u(self, self.kernel)
        if self.use_bias:
            self.bias = self.add_weight(shape=(self.filters,),
                                        initializer=self.bias_initializer,
                                        name='bias',
                                        regularizer=self.bias_regularizer,
                                        constraint=self.bias_constraint)
        else:
            self.bias = None
        # Set input spec.
        self.input_spec = InputSpec(ndim=self.rank + 2, axes={channel_axis: input_dim})
        self.built = True

    def call(self, inputs, training=None):
        # Keep the normalized kernel local: overwriting self.kernel would make the
        # next call normalize an already normalized tensor
        kernel = self.kernel
        if self.spectral_normalization:
            kernel = _spectral_normalize(self.kernel, self.u, training)
        if self.rank == 1:
            outputs = K.conv1d(inputs, kernel,
                               strides=self.strides[0],
                               padding=self.padding,
                               data_format=self.data_format,
                               dilation_rate=self.dilation_rate[0])
        elif self.rank == 2:
            outputs = K.conv2d(inputs, kernel,
                               strides=self.strides,
                               padding=self.padding,
                               data_format=self.data_format,
                               dilation_rate=self.dilation_rate)
        elif self.rank == 3:
            outputs = K.conv3d(inputs, kernel,
                               strides=self.strides,
                               padding=self.padding,
                               data_format=self.data_format,
                               dilation_rate=self.dilation_rate)
        if self.use_bias:
            outputs = K.bias_add(outputs, self.bias, data_format=self.data_format)
        if self.activation is not None:
            return self.activation(outputs)
        return outputs

    def compute_output_shape(self, input_shape):
        if self.data_format == 'channels_last':
            space = input_shape[1:-1]
        else:
            space = input_shape[2:]
        new_space = [conv_utils.conv_output_length(space[i],
                                                   self.kernel_size[i],
                                                   padding=self.padding,
                                                   stride=self.strides[i],
                                                   dilation=self.dilation_rate[i])
                     for i in range(len(space))]
        if self.data_format == 'channels_last':
            return (input_shape[0],) + tuple(new_space) + (self.filters,)
        return (input_shape[0], self.filters) + tuple(new_space)

    def get_config(self):
        config = {
            'rank': self.rank,
            'filters': self.filters,
            'kernel_size': self.kernel_size,
            'strides': self.strides,
            'padding': self.padding,
            'data_format': self.data_format,
            'dilation_rate': self.dilation_rate,
            'activation': activations.serialize(self.activation),
            'use_bias': self.use_bias,
            'kernel_initializer': initializers.serialize(self.kernel_initializer),
            'bias_initializer': initializers.serialize(self.bias_initializer),
            'kernel_regularizer': regularizers.serialize(self.kernel_regularizer),
            'bias_regularizer': regularizers.serialize(self.bias_regularizer),
            'activity_regularizer': regularizers.serialize(self.activity_regularizer),
            'kernel_constraint': constraints.serialize(self.kernel_constraint),
            'bias_constraint': constraints.serialize(self.bias_constraint),
            'spectral_normalization': self.spectral_normalization,
        }
        base_config = super(_ConvSN, self).get_config()
        return dict(list(base_config.items()) + list(config.items()))


class ConvSN2D(Conv2D):
    def build(self, input_shape):
        # Creates kernel, bias and input_spec exactly as Conv2D.build does
        super(ConvSN2D, self).build(input_shape)
        self.u = _add_u(self, self.kernel)

    def call(self, inputs, training=None):
        W_bar = _spectral_normalize(self.kernel, self.u, training)
        outputs = K.conv2d(inputs, W_bar,
                           strides=self.strides,
                           padding=self.padding,
                           data_format=self.data_format,
                           dilation_rate=self.dilation_rate)
        if self.use_bias:
            outputs = K.bias_add(outputs, self.bias, data_format=self.data_format)
        if self.activation is not None:
            return self.activation(outputs)
        return outputs


class ConvSN1D(Conv1D):
    def build(self, input_shape):
        super(ConvSN1D, self).build(input_shape)
        self.u = _add_u(self, self.kernel)

    def call(self, inputs, training=None):
        W_bar = _spectral_normalize(self.kernel, self.u, training)
        # K.conv1d takes scalar strides / dilation_rate, not 1-tuples
        outputs = K.conv1d(inputs, W_bar,
                           strides=self.strides[0],
                           padding=self.padding,
                           data_format=self.data_format,
                           dilation_rate=self.dilation_rate[0])
        if self.use_bias:
            outputs = K.bias_add(outputs, self.bias, data_format=self.data_format)
        if self.activation is not None:
            return self.activation(outputs)
        return outputs


class ConvSN3D(Conv3D):
    def build(self, input_shape):
        super(ConvSN3D, self).build(input_shape)
        self.u = _add_u(self, self.kernel)

    def call(self, inputs, training=None):
        W_bar = _spectral_normalize(self.kernel, self.u, training)
        outputs = K.conv3d(inputs, W_bar,
                           strides=self.strides,
                           padding=self.padding,
                           data_format=self.data_format,
                           dilation_rate=self.dilation_rate)
        if self.use_bias:
            outputs = K.bias_add(outputs, self.bias, data_format=self.data_format)
        if self.activation is not None:
            return self.activation(outputs)
        return outputs


class EmbeddingSN(Embedding):
    def build(self, input_shape):
        # Creates the (input_dim, output_dim) embedding matrix as Embedding.build does
        super(EmbeddingSN, self).build(input_shape)
        self.u = _add_u(self, self.embeddings)

    def call(self, inputs, training=None):
        if K.dtype(inputs) != 'int32':
            inputs = K.cast(inputs, 'int32')
        W_bar = _spectral_normalize(self.embeddings, self.u, training)
        return K.gather(W_bar, inputs)


class ConvSN2DTranspose(Conv2DTranspose):
    def build(self, input_shape):
        # Creates kernel (kernel_size + (filters, input_dim)), bias and input_spec
        # exactly as Conv2DTranspose.build does
        super(ConvSN2DTranspose, self).build(input_shape)
        self.u = _add_u(self, self.kernel)

    def call(self, inputs, training=None):
        input_shape = K.shape(inputs)
        batch_size = input_shape[0]
        if self.data_format == 'channels_first':
            h_axis, w_axis = 2, 3
        else:
            h_axis, w_axis = 1, 2
        height, width = input_shape[h_axis], input_shape[w_axis]
        kernel_h, kernel_w = self.kernel_size
        stride_h, stride_w = self.strides
        if self.output_padding is None:
            out_pad_h = out_pad_w = None
        else:
            out_pad_h, out_pad_w = self.output_padding
        # Infer the dynamic output shape
        out_height = conv_utils.deconv_length(height, stride_h, kernel_h,
                                              self.padding, out_pad_h)
        out_width = conv_utils.deconv_length(width, stride_w, kernel_w,
                                             self.padding, out_pad_w)
        if self.data_format == 'channels_first':
            output_shape = (batch_size, self.filters, out_height, out_width)
        else:
            output_shape = (batch_size, out_height, out_width, self.filters)
        # Spectral normalization (kept local instead of overwriting self.kernel)
        W_bar = _spectral_normalize(self.kernel, self.u, training)
        outputs = K.conv2d_transpose(inputs, W_bar, output_shape, self.strides,
                                     padding=self.padding,
                                     data_format=self.data_format)
        if self.use_bias:
            outputs = K.bias_add(outputs, self.bias, data_format=self.data_format)
        if self.activation is not None:
            return self.activation(outputs)
        return outputs
```
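To see what the power-iteration lines in these layers compute, here is a minimal, dependency-free sketch in plain Python. It is an illustration only: the helper names (`power_iteration_step`, `exact_sigma_max`) and the example matrix are hypothetical, and lists stand in for the Keras tensors. Each call performs the same single step as the layers (`v = normalize(u W^T)`, `u = normalize(v W)`, `sigma = v W u^T`). Because `u` is kept between calls, repeated forward passes converge to the largest singular value of the flattened kernel, and dividing by it gives a kernel whose spectral norm is close to 1.

```python
import math


def l2normalize(vec, eps=1e-12):
    # Same formula as the layers: v / (||v|| + eps)
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / (norm + eps) for x in vec]


def power_iteration_step(W, u):
    """One step as in the SN layers. W is an m x n list of rows, u has length n."""
    m, n = len(W), len(W[0])
    # v = normalize(u W^T), length m
    v = l2normalize([sum(u[j] * W[i][j] for j in range(n)) for i in range(m)])
    # u = normalize(v W), length n
    u = l2normalize([sum(v[i] * W[i][j] for i in range(m)) for j in range(n)])
    # sigma = v W u^T
    vW = [sum(v[i] * W[i][j] for i in range(m)) for j in range(n)]
    sigma = sum(vW[j] * u[j] for j in range(n))
    return u, sigma


def exact_sigma_max(W):
    # Largest singular value of an m x 2 matrix, from the eigenvalues of W^T W (2 x 2)
    a = sum(r[0] * r[0] for r in W)
    b = sum(r[0] * r[1] for r in W)
    c = sum(r[1] * r[1] for r in W)
    lam_max = (a + c) / 2 + math.sqrt(((a - c) / 2) ** 2 + b * b)
    return math.sqrt(lam_max)


W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # a hypothetical flattened kernel
u = [1.0, 0.0]                             # plays the role of the persistent u
for step in range(5):                      # one step per forward pass
    u, sigma = power_iteration_step(W, u)
    print(step, sigma)

W_bar = [[x / sigma for x in row] for row in W]  # normalized kernel
print(exact_sigma_max(W), exact_sigma_max(W_bar))  # second value is approximately 1.0
```

Here the two singular values of `W` are far apart, so a few steps are plenty. During training, a single step per batch works because the kernel changes only slightly between updates and `u` carries over.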

With this module in place, we can move on to the main code.

First, load the dataset:

```python
# Load the CIFAR-10 dataset (python version from the official website)
import pickle

import numpy as np


def unpickle(file):
    # Each batch file is a pickled dict with bytes keys
    with open(file, 'rb') as fo:
        d = pickle.load(fo, encoding='bytes')
    return d


def to_nhwc(data):
    # Each row holds 1024 red, then 1024 green, then 1024 blue values (32x32, row-major):
    # reshape to (N, 3, 32, 32) and move channels last -> (N, 32, 32, 3)
    return data.reshape(-1, 3, 32, 32).transpose(0, 2, 3, 1).astype(np.uint8)


# Read and merge the five training batches
batches = [unpickle('data_batch_' + str(i + 1)) for i in range(5)]
cifar = {
    b'data': np.vstack([b[b'data'] for b in batches]),
    b'labels': np.hstack([b[b'labels'] for b in batches]),
}
cifar[b'label_names'] = unpickle('batches.meta')[b'label_names']
del batches

# Define the data format: (N, 32, 32, 3) images
cifar[b'data'] = to_nhwc(cifar[b'data'])

cifar_test = unpickle('test_batch')
cifar_test[b'labels'] = np.array(cifar_test[b'labels'])
cifar_test[b'data'] = to_nhwc(cifar_test[b'data'])

x_train = cifar[b'data']
x_test = cifar_test[b'data']
y_test = cifar_test[b'labels']

# The GAN only needs images, so train on all 60,000 (test + train)
X = np.concatenate((x_test, x_train))
```
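Each row of a CIFAR-10 batch file stores the 1024 red values first, then the 1024 green values, then the 1024 blue values, with every 32x32 plane laid out row by row. The plain-Python sketch below spells out the index arithmetic that the conversion to `(32, 32, 3)` images relies on. The helper names are hypothetical, and the input is a synthetic row whose values equal their own positions, so each output value shows where it was read from.

```python
def cifar_pixel(row, r, c, size=32):
    """Return (R, G, B) of pixel (r, c) from one flat CIFAR-10 row."""
    plane = size * size
    # channel offset + row-major offset inside the 32x32 plane
    return tuple(row[ch * plane + r * size + c] for ch in range(3))


def row_to_hwc(row, size=32):
    # Nested lists indexed [r][c][ch], i.e. the (32, 32, 3) layout used for X
    return [[list(cifar_pixel(row, r, c, size)) for c in range(size)]
            for r in range(size)]


row = list(range(3 * 32 * 32))  # synthetic row: value == flat index
img = row_to_hwc(row)
print(img[0][0])  # -> [0, 1024, 2048]
print(img[1][2])  # -> [34, 1058, 2082]
```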

The code above reads the data files downloaded from the official CIFAR-10 website. Alternatively, you can use Keras's built-in CIFAR-10 loader:

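```python
import numpy as np
from keras.datasets import cifar10

# uint8 images of shape (N, 32, 32, 3) with the default channels_last setting
(x_train, y_train), (x_test, y_test) = cifar10.load_data()
X = np.concatenate((x_test, x_train))
```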

Next, build the generator and the discriminator:

```python
from keras import backend as K
from keras.layers import (BatchNormalization, Conv2D, Conv2DTranspose, Dense,
                          Flatten, LeakyReLU, Reshape)
from keras.models import Sequential

from SpectralNormalizationKeras import ConvSN2D, DenseSN

# Hyperparameters
BATCHSIZE = 64
LEARNING_RATE = 0.0002
TRAINING_RATIO = 1       # discriminator updates per generator update
BETA_1 = 0.0
BETA_2 = 0.9
EPOCHS = 500
# Keras: moving_mean = momentum * moving_mean + (1 - momentum) * batch_mean
BN_MOMENTUM = 0.1
BN_EPSILON = 0.00002
SAVE_DIR = 'img/generated_img_CIFAR10_DCGAN/'
GENERATE_ROW_NUM = 8
GENERATE_BATCHSIZE = GENERATE_ROW_NUM * GENERATE_ROW_NUM


# Build the generator and the discriminator
def BuildGenerator(summary=True):
    model = Sequential()
    # 128-d noise -> 4x4x512 feature map
    model.add(Dense(4 * 4 * 512, kernel_initializer='glorot_uniform', input_dim=128))
    model.add(Reshape((4, 4, 512)))
    # Three stride-2 transposed convolutions: 4x4 -> 8x8 -> 16x16 -> 32x32
    for filters in (256, 128, 64):
        model.add(Conv2DTranspose(filters, kernel_size=4, strides=2, padding='same',
                                  activation='relu', kernel_initializer='glorot_uniform'))
        model.add(BatchNormalization(epsilon=BN_EPSILON, momentum=BN_MOMENTUM))
    # 3-channel output in [-1, 1] (tanh), matching the scaled training images
    model.add(Conv2DTranspose(3, kernel_size=3, strides=1, padding='same', activation='tanh'))
    if summary:
        print("Generator")
        model.summary()
    return model


def BuildDiscriminator(summary=True, spectral_normalization=True):
    # Same architecture either way; only the layer classes differ
    Conv = ConvSN2D if spectral_normalization else Conv2D
    FC = DenseSN if spectral_normalization else Dense
    model = Sequential()
    # (filters, kernel_size, strides) of each conv layer: 32x32 -> 4x4
    conv_specs = [(64, 3, 1), (64, 4, 2), (128, 3, 1), (128, 4, 2),
                  (256, 3, 1), (256, 4, 2), (512, 3, 1)]
    for i, (filters, kernel_size, strides) in enumerate(conv_specs):
        extra = {'input_shape': (32, 32, 3)} if i == 0 else {}
        model.add(Conv(filters, kernel_size=kernel_size, strides=strides,
                       kernel_initializer='glorot_uniform', padding='same', **extra))
        model.add(LeakyReLU(0.1))
    model.add(Flatten())
    # Linear output (no sigmoid): a critic score for the Wasserstein-style loss
    model.add(FC(1, kernel_initializer='glorot_uniform'))
    if summary:
        print('Discriminator')
        print('Spectral Normalization: {}'.format(spectral_normalization))
        model.summary()
    return model


def wasserstein_loss(y_true, y_pred):
    # Labels are +1 / -1, so this is +mean(score) or -mean(score)
    return K.mean(y_true * y_pred)


generator = BuildGenerator()
discriminator = BuildDiscriminator()
```
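With `padding='same'`, a Keras `Conv2D` produces `ceil(size / stride)` pixels along each spatial axis, and a `Conv2DTranspose` without `output_padding` produces `size * stride`. A quick plain-Python check of the spatial sizes that `BuildGenerator` and `BuildDiscriminator` go through (the helper names are only for illustration):

```python
import math


def conv_same(size, stride):
    # Conv2D, padding='same': ceil(size / stride)
    return math.ceil(size / stride)


def deconv_same(size, stride):
    # Conv2DTranspose, padding='same', no output_padding: size * stride
    return size * stride


# Generator: Reshape((4, 4, 512)), three stride-2 layers, then the stride-1 output layer
g = 4
g_sizes = [g]
for stride in (2, 2, 2, 1):
    g = deconv_same(g, stride)
    g_sizes.append(g)
print(g_sizes)  # -> [4, 8, 16, 32, 32]

# Discriminator: strides of the seven conv layers, starting from a 32x32 image
d = 32
for stride in (1, 2, 1, 2, 1, 2, 1):
    d = conv_same(d, stride)
print(d, d * d * 512)  # -> 4 8192
```

The `8192` is the length of the vector that `Flatten` passes to the final one-unit dense layer.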

Then build the models used for training:

```python
import matplotlib.pyplot as plt
import numpy as np
from keras.layers import Input
from keras.models import Model
from keras.optimizers import Adam

# Model for training the generator: noise -> generator -> (frozen) discriminator
Noise_input_for_training_generator = Input(shape=(128,))
Generated_image = generator(Noise_input_for_training_generator)
Discriminator_output = discriminator(Generated_image)
model_for_training_generator = Model(Noise_input_for_training_generator, Discriminator_output)
# The trainable flag takes effect when the model is compiled
discriminator.trainable = False
print("model_for_training_generator")
model_for_training_generator.summary()
model_for_training_generator.compile(optimizer=Adam(LEARNING_RATE, beta_1=BETA_1, beta_2=BETA_2),
                                     loss=wasserstein_loss)

# Model for training the discriminator: real images and (frozen) generator samples
Real_image = Input(shape=(32, 32, 3))
Noise_input_for_training_discriminator = Input(shape=(128,))
Fake_image = generator(Noise_input_for_training_discriminator)
Discriminator_output_for_real = discriminator(Real_image)
Discriminator_output_for_fake = discriminator(Fake_image)
model_for_training_discriminator = Model([Real_image, Noise_input_for_training_discriminator],
                                         [Discriminator_output_for_real,
                                          Discriminator_output_for_fake])
print("model_for_training_discriminator")
generator.trainable = False
discriminator.trainable = True
model_for_training_discriminator.compile(optimizer=Adam(LEARNING_RATE, beta_1=BETA_1, beta_2=BETA_2),
                                         loss=[wasserstein_loss, wasserstein_loss])
model_for_training_discriminator.summary()

# Targets: +1 for real images, -1 for generated images
real_y = np.ones((BATCHSIZE, 1), dtype=np.float32)
fake_y = -real_y

# Scale pixels from [0, 255] to [-1, 1] to match the generator's tanh output
X = X / 255 * 2 - 1
# Sanity check: show one training image, mapped back to [0, 1]
plt.imshow((X[8787] + 1) / 2)
```
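The sign convention of `wasserstein_loss` is easier to see with numbers. The plain-Python sketch below applies the same formula to made-up critic scores (the score values are hypothetical). With `real_y = 1` and `fake_y = -1`, the discriminator model minimizes the sum of its two losses, `mean(D(real)) - mean(D(fake))`, so it learns to give real images low scores and generated images high scores. The generator model labels its samples with `real_y` and therefore minimizes `mean(D(G(z)))`. The last lines check the `X / 255 * 2 - 1` scaling.

```python
def wasserstein_loss(y_true, y_pred):
    # Same formula as the Keras version: mean(y_true * y_pred)
    return sum(t * p for t, p in zip(y_true, y_pred)) / len(y_pred)


def to_tanh_range(pixel):
    # Same scaling as X = X / 255 * 2 - 1
    return pixel / 255 * 2 - 1


d_real = [0.5, -1.0, 0.25, 0.25]  # hypothetical critic scores on real images
d_fake = [2.0, 1.0, 1.5, 1.5]     # hypothetical critic scores on generated images
real_y = [1.0] * 4
fake_y = [-1.0] * 4

# Discriminator model: Keras adds the losses of its two outputs
d_loss = wasserstein_loss(real_y, d_real) + wasserstein_loss(fake_y, d_fake)
print(d_loss)  # -> -1.5, i.e. mean(d_real) - mean(d_fake) = 0.0 - 1.5

# Generator model: generated images are labelled real_y
g_loss = wasserstein_loss(real_y, d_fake)
print(g_loss)  # -> 1.5, i.e. mean(d_fake)

print(to_tanh_range(0), to_tanh_range(255))  # -> -1.0 1.0
```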

Finally, the training loop:

```python
import os
from time import time

import matplotlib.pyplot as plt
import numpy as np
from keras.utils.generic_utils import Progbar

os.makedirs(SAVE_DIR, exist_ok=True)
test_noise = np.random.randn(GENERATE_BATCHSIZE, 128)
W_loss = []
discriminator_loss = []
generator_loss = []
minibatches_size = BATCHSIZE * TRAINING_RATIO
num_iterations = X.shape[0] // minibatches_size

for epoch in range(EPOCHS):
    np.random.shuffle(X)
    print("epoch {} of {}".format(epoch + 1, EPOCHS))
    print("number of batches: {}".format(X.shape[0] // BATCHSIZE))
    progress_bar = Progbar(target=num_iterations)
    start_time = time()
    for index in range(num_iterations):
        progress_bar.update(index)
        discriminator_minibatches = X[index * minibatches_size:(index + 1) * minibatches_size]
        # TRAINING_RATIO discriminator updates, then one generator update.
        # The trainable flags were fixed when each model was compiled.
        for j in range(TRAINING_RATIO):
            image_batch = discriminator_minibatches[j * BATCHSIZE:(j + 1) * BATCHSIZE]
            noise = np.random.randn(BATCHSIZE, 128).astype(np.float32)
            discriminator_loss.append(model_for_training_discriminator.train_on_batch(
                [image_batch, noise], [real_y, fake_y]))
        generator_loss.append(model_for_training_generator.train_on_batch(
            np.random.randn(BATCHSIZE, 128), real_y))
    print('\nepoch time: {}'.format(time() - start_time))

    # Critic estimate of the Wasserstein distance: mean score on generated images
    # minus mean score on real images (the critic pushes real scores down).
    # Evaluating the generator model with real_y and fake_y on the same noise only
    # returns +mean(D(G(z))) and -mean(D(G(z))), whose sum is always about 0.
    generated_image = generator.predict(test_noise)
    W_l = float(np.mean(discriminator.predict(generated_image)) -
                np.mean(discriminator.predict(X[:GENERATE_BATCHSIZE])))
    print('wasserstein_loss: {}'.format(W_l))
    W_loss.append(W_l)

    # Generate image: map back to [0, 1] and tile the samples into an 8x8 grid
    generated_image = np.clip((generated_image + 1) / 2, 0, 1)
    for i in range(GENERATE_ROW_NUM):
        new = generated_image[i * GENERATE_ROW_NUM:(i + 1) * GENERATE_ROW_NUM].reshape(
            32 * GENERATE_ROW_NUM, 32, 3)
        if i != 0:
            old = np.concatenate((old, new), axis=1)
        else:
            old = new
    print('plot generated_image')
    plt.imsave(os.path.join(SAVE_DIR, 'SN_epoch_{}.png'.format(epoch)), old)
```
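The image-saving code at the end of each epoch reshapes every group of `GENERATE_ROW_NUM` samples into one tall column and then concatenates the columns from left to right. The grid is therefore filled column by column: sample `k` lands in column `k // 8`, row `k % 8`. The plain-Python sketch below mimics that reshape-and-concatenate with nested lists. The function name is hypothetical, and each "image" is a single pixel labelled with its sample index.

```python
def tile_like_training_loop(images, n):
    """Mimic the reshape + concatenate in the loop with nested lists.

    images: list of n*n images, each a list of rows (a row is a list of pixels).
    Returns the tiled grid as a list of rows.
    """
    grid = None
    for i in range(n):
        # reshape(size*n, size, 3): stack images i*n .. i*n+n-1 vertically
        column = [row for img in images[i * n:(i + 1) * n] for row in img]
        # np.concatenate(..., axis=1): append this column to the right
        grid = column if grid is None else [g + c for g, c in zip(grid, column)]
    return grid


# Four 1x1 "images" labelled 0..3, tiled 2x2
print(tile_like_training_loop([[[0]], [[1]], [[2]], [[3]]], 2))  # -> [[0, 2], [1, 3]]
```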

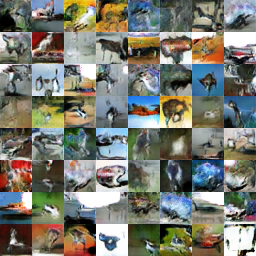

After ten epochs of training, the generated images improve noticeably. The results:

Epoch 1:

Epoch 10:
