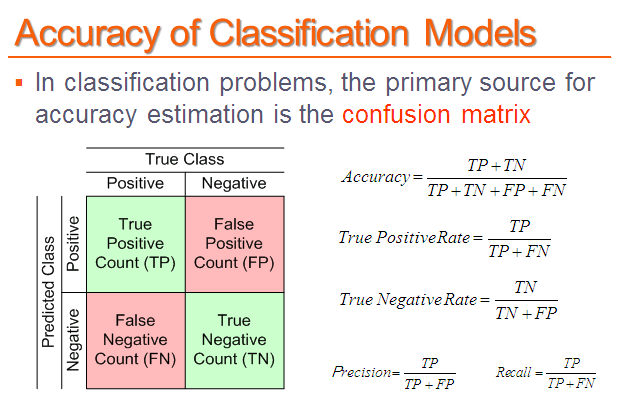

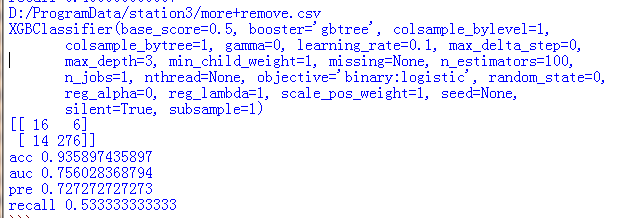

分类预测输出precision,recall,accuracy,auc和tp,tn,fp,fn矩阵

此次我做的实验是二分类问题,输出precision,recall,accuracy,auc

- # -*- coding: utf-8 -*-

- #from sklearn.neighbors import

- import numpy as np

- from pandas import read_csv

- import pandas as pd

- import sys

- import importlib

- from sklearn.neighbors import KNeighborsClassifier

- from sklearn.ensemble import GradientBoostingClassifier

- from sklearn import svm

- from sklearn import cross_validation

- from sklearn.metrics import hamming_loss

- from sklearn import metrics

- importlib.reload(sys)

- from sklearn.linear_model import LogisticRegression

- from imblearn.combine import SMOTEENN

- from sklearn.tree import DecisionTreeClassifier

- from sklearn.ensemble import RandomForestClassifier #92%

- from sklearn import tree

- from xgboost.sklearn import XGBClassifier

- from sklearn.linear_model import SGDClassifier

- from sklearn import neighbors

- from sklearn.naive_bayes import BernoulliNB

- import matplotlib as mpl

- import matplotlib.pyplot as plt

- from sklearn.metrics import confusion_matrix

- from numpy import mat

- def metrics_result(actual, predict):

- print('准确度:{0:.3f}'.format(metrics.accuracy_score(actual, predict)))

- print('精密度:{0:.3f}'.format(metrics.precision_score(actual, predict,average='weighted')))

- print('召回:{0:0.3f}'.format(metrics.recall_score(actual, predict,average='weighted')))

- print('f1-score:{0:.3f}'.format(metrics.f1_score(actual, predict,average='weighted')))

- print('auc:{0:.3f}'.format(metrics.roc_auc_score(test_y, predict)))

输出混淆矩阵

- matr=confusion_matrix(test_y,predict)

- matr=mat(matr)

- conf=np.matrix([[0,0],[0,0]])

- conf[0,0]=matr[1,1]

- conf[1,0]=matr[1,0]

- conf[0,1]=matr[0,1]

- conf[1,1]=matr[0,0]

- print(conf)

全代码:

- # -*- coding: utf-8 -*-

- #from sklearn.neighbors import

- import numpy as np

- from pandas import read_csv

- import pandas as pd

- import sys

- import importlib

- from sklearn.neighbors import KNeighborsClassifier

- from sklearn.ensemble import GradientBoostingClassifier

- from sklearn import svm

- from sklearn import cross_validation

- from sklearn.metrics import hamming_loss

- from sklearn import metrics

- importlib.reload(sys)

- from sklearn.linear_model import LogisticRegression

- from imblearn.combine import SMOTEENN

- from sklearn.tree import DecisionTreeClassifier

- from sklearn.ensemble import RandomForestClassifier #92%

- from sklearn import tree

- from xgboost.sklearn import XGBClassifier

- from sklearn.linear_model import SGDClassifier

- from sklearn import neighbors

- from sklearn.naive_bayes import BernoulliNB

- import matplotlib as mpl

- import matplotlib.pyplot as plt

- from sklearn.metrics import confusion_matrix

- from numpy import mat

- def metrics_result(actual, predict):

- print('准确度:{0:.3f}'.format(metrics.accuracy_score(actual, predict)))

- print('精密度:{0:.3f}'.format(metrics.precision_score(actual, predict,average='weighted')))

- print('召回:{0:0.3f}'.format(metrics.recall_score(actual, predict,average='weighted')))

- print('f1-score:{0:.3f}'.format(metrics.f1_score(actual, predict,average='weighted')))

- print('auc:{0:.3f}'.format(metrics.roc_auc_score(test_y, predict)))

- '''分类0-1'''

- root1="D:/ProgramData/station3/10.csv"

- root2="D:/ProgramData/station3/more+average2.csv"

- root3="D:/ProgramData/station3/new_10.csv"

- root4="D:/ProgramData/station3/more+remove.csv"

- root5="D:/ProgramData/station3/new_10 2.csv"

- root6="D:/ProgramData/station3/new10.csv"

- root7="D:/ProgramData/station3/no_-999.csv"

- root=root4

- data1 = read_csv(root) #数据转化为数组

- data1=data1.values

- print(root)

- time=1

- accuracy=[]

- aucc=[]

- pre=[]

- recall=[]

- for i in range(time):

- train, test= cross_validation.train_test_split(data1, test_size=0.2, random_state=i)

- test_x=test[:,:-1]

- test_y=test[:,-1]

- train_x=train[:,:-1]

- train_y=train[:,-1]

- # =============================================================================

- # print(train_x.shape)

- # print(train_y.shape)

- # print(test_x.shape)

- # print(test_y.shape)

- # print(type(train_x))

- # =============================================================================

- #X_Train=train_x

- #Y_Train=train_y

- X_Train, Y_Train = SMOTEENN().fit_sample(train_x, train_y)

- #clf = RandomForestClassifier() #82

- #clf = LogisticRegression() #82

- #penalty=’l2’, dual=False, tol=0.0001, C=1.0, fit_intercept=True, intercept_scaling=1, class_weight=None, random_state=None, solver=’liblinear’, max_iter=100, multi_class=’ovr’, verbose=0, warm_start=False, n_jobs=1

- #clf=svm.SVC()

- clf= XGBClassifier()

- #from sklearn.ensemble import RandomForestClassifier #92%

- #clf = DecisionTreeClassifier()

- #clf = GradientBoostingClassifier()

- #clf=neighbors.KNeighborsClassifier()

- #clf=BernoulliNB()

- print(clf)

- clf.fit(X_Train, Y_Train)

- predict=clf.predict(test_x)

- matr=confusion_matrix(test_y,predict)

- matr=mat(matr)

- conf=np.matrix([[0,0],[0,0]])

- conf[0,0]=matr[1,1]

- conf[1,0]=matr[1,0]

- conf[0,1]=matr[0,1]

- conf[1,1]=matr[0,0]

- print(conf)

- #a=metrics_result(test_y, predict)

- #a=metrics_result(test_y,predict)

- '''accuracy'''

- aa=metrics.accuracy_score(test_y, predict)

- #print(metrics.accuracy_score(test_y, predict))

- accuracy.append(aa)

- '''auc'''

- bb=metrics.roc_auc_score(test_y, predict, average=None)

- aucc.append(bb)

- '''precision'''

- cc=metrics.precision_score(test_y, predict, average=None)

- pre.append(cc[1])

- # =============================================================================

- # print('cc')

- # print(type(cc))

- # print(cc[1])

- # print('cc')

- # =============================================================================

- '''recall'''

- dd=metrics.recall_score(test_y, predict, average=None)

- #print(metrics.recall_score(test_y, predict,average='weighted'))

- recall.append(dd[1])

- f=open('D:\ProgramData\station3\predict.txt', 'w')

- for i in range(len(predict)):

- f.write(str(predict[i]))

- f.write('\n')

- f.write("写好了")

- f.close()

- f=open('D:\ProgramData\station3\y_.txt', 'w')

- for i in range(len(predict)):

- f.write(str(test_y[i]))

- f.write('\n')

- f.write("写好了")

- f.close()

- # =============================================================================

- # f=open('D:/ProgramData/station3/predict.txt', 'w')

- # for i in range(len(predict)):

- # f.write(str(predict[i]))

- # f.write('\n')

- # f.write("写好了")

- # f.close()

- #

- # f=open('D:/ProgramData/station3/y.txt', 'w')

- # for i in range(len(test_y)):

- # f.write(str(test_y[i]))

- # f.write('\n')

- # f.write("写好了")

- # f.close()

- #

- # =============================================================================

- # =============================================================================

- # print('调用函数auc:', metrics.roc_auc_score(test_y, predict, average='micro'))

- #

- # fpr, tpr, thresholds = metrics.roc_curve(test_y.ravel(),predict.ravel())

- # auc = metrics.auc(fpr, tpr)

- # print('手动计算auc:', auc)

- # #绘图

- # mpl.rcParams['font.sans-serif'] = u'SimHei'

- # mpl.rcParams['axes.unicode_minus'] = False

- # #FPR就是横坐标,TPR就是纵坐标

- # plt.plot(fpr, tpr, c = 'r', lw = 2, alpha = 0.7, label = u'AUC=%.3f' % auc)

- # plt.plot((0, 1), (0, 1), c = '#808080', lw = 1, ls = '--', alpha = 0.7)

- # plt.xlim((-0.01, 1.02))

- # plt.ylim((-0.01, 1.02))

- # plt.xticks(np.arange(0, 1.1, 0.1))

- # plt.yticks(np.arange(0, 1.1, 0.1))

- # plt.xlabel('False Positive Rate', fontsize=13)

- # plt.ylabel('True Positive Rate', fontsize=13)

- # plt.grid(b=True, ls=':')

- # plt.legend(loc='lower right', fancybox=True, framealpha=0.8, fontsize=12)

- # plt.title(u'大类问题一分类后的ROC和AUC', fontsize=17)

- # plt.show()

- # =============================================================================

- sum_acc=0

- sum_auc=0

- sum_pre=0

- sum_recall=0

- for i in range(time):

- sum_acc+=accuracy[i]

- sum_auc+=aucc[i]

- sum_pre+=pre[i]

- sum_recall+=recall[i]

- acc1=sum_acc*1.0/time

- auc1=sum_auc*1.0/time

- pre1=sum_pre*1.0/time

- recall1=sum_recall*1.0/time

- print("acc",acc1)

- print("auc",auc1)

- print("pre",pre1)

- print("recall",recall1)

- # =============================================================================

- #

- # data1 = read_csv(root2) #数据转化为数组

- # data1=data1.values

- #

- #

- # accuracy=[]

- # auc=[]

- # pre=[]

- # recall=[]

- # for i in range(30):

- # train, test= cross_validation.train_test_split(data1, test_size=0.2, random_state=i)

- # test_x=test[:,:-1]

- # test_y=test[:,-1]

- # train_x=train[:,:-1]

- # train_y=train[:,-1]

- # X_Train, Y_Train = SMOTEENN().fit_sample(train_x, train_y)

- #

- # #clf = RandomForestClassifier() #82

- # clf = LogisticRegression() #82

- # #clf=svm.SVC()

- # #clf= XGBClassifier()

- # #from sklearn.ensemble import RandomForestClassifier #92%

- # #clf = DecisionTreeClassifier()

- # #clf = GradientBoostingClassifier()

- #

- # #clf=neighbors.KNeighborsClassifier() 65.25%

- # #clf=BernoulliNB()

- # clf.fit(X_Train, Y_Train)

- # predict=clf.predict(test_x)

- #

- # '''accuracy'''

- # aa=metrics.accuracy_score(test_y, predict)

- # accuracy.append(aa)

- #

- # '''auc'''

- # aa=metrics.roc_auc_score(test_y, predict)

- # auc.append(aa)

- #

- # '''precision'''

- # aa=metrics.precision_score(test_y, predict,average='weighted')

- # pre.append(aa)

- #

- # '''recall'''

- # aa=metrics.recall_score(test_y, predict,average='weighted')

- # recall.append(aa)

- #

- #

- # sum_acc=0

- # sum_auc=0

- # sum_pre=0

- # sum_recall=0

- # for i in range(30):

- # sum_acc+=accuracy[i]

- # sum_auc+=auc[i]

- # sum_pre+=pre[i]

- # sum_recall+=recall[i]

- #

- # acc1=sum_acc*1.0/30

- # auc1=sum_auc*1.0/30

- # pre1=sum_pre*1.0/30

- # recall1=sum_recall*1.0/30

- # print("more 的 acc:", acc1)

- # print("more 的 auc:", auc1)

- # print("more 的 precision:", pre1)

- # print("more 的 recall:", recall1)

- #

- # =============================================================================

- #X_train, X_test, y_train, y_test = cross_validation.train_test_split(X_Train,Y_Train, test_size=0.2, random_state=i)

输出结果:

分类预测输出precision,recall,accuracy,auc和tp,tn,fp,fn矩阵的更多相关文章

- 目标检测的评价标准mAP, Precision, Recall, Accuracy

目录 metrics 评价方法 TP , FP , TN , FN 概念 计算流程 Accuracy , Precision ,Recall Average Precision PR曲线 AP计算 A ...

- 机器学习:评价分类结果(Precision - Recall 的平衡、P - R 曲线)

一.Precision - Recall 的平衡 1)基础理论 调整阈值的大小,可以调节精准率和召回率的比重: 阈值:threshold,分类边界值,score > threshold 时分类为 ...

- 机器学习基础梳理—(accuracy,precision,recall浅谈)

一.TP TN FP FN TP:标签为正例,预测为正例(P),即预测正确(T) TN:标签为负例,预测为负例(N),即预测正确(T) FP:标签为负例,预测为正例(P),即预测错误(F) FN:标签 ...

- Precision,Recall,F1的计算

Precision又叫查准率,Recall又叫查全率.这两个指标共同衡量才能评价模型输出结果. TP: 预测为1(Positive),实际也为1(Truth-预测对了) TN: 预测为0(Negati ...

- 评价指标整理:Precision, Recall, F-score, TPR, FPR, TNR, FNR, AUC, Accuracy

针对二分类的结果,对模型进行评估,通常有以下几种方法: Precision.Recall.F-score(F1-measure)TPR.FPR.TNR.FNR.AUCAccuracy 真实结果 1 ...

- 分类指标准确率(Precision)和正确率(Accuracy)的区别

http://www.cnblogs.com/fengfenggirl/p/classification_evaluate.html 一.引言 分类算法有很多,不同分类算法又用很多不同的变种.不同的分 ...

- Precision/Recall、ROC/AUC、AP/MAP等概念区分

1. Precision和Recall Precision,准确率/查准率.Recall,召回率/查全率.这两个指标分别以两个角度衡量分类系统的准确率. 例如,有一个池塘,里面共有1000条鱼,含10 ...

- 机器学习--如何理解Accuracy, Precision, Recall, F1 score

当我们在谈论一个模型好坏的时候,我们常常会听到准确率(Accuracy)这个词,我们也会听到"如何才能使模型的Accurcy更高".那么是不是准确率最高的模型就一定是最好的模型? 这篇博文会向大家解释 ...

- 通过Precision/Recall判断分类结果偏差极大时算法的性能

当我们对某些问题进行分类时,真实结果的分布会有明显偏差. 例如对是否患癌症进行分类,testing set 中可能只有0.5%的人患了癌症. 此时如果直接数误分类数的话,那么一个每次都预测人没有癌症的 ...

随机推荐

- 在Github上搭建博客

貌似还是这个链接最靠谱呀 http://my.oschina.net/nark/blog/116299 如何利用github建立个人博客:之一 在线编辑器http://markable.in/ed ...

- POJ 1122 FDNY to the Rescue!(最短路+路径输出)

http://poj.org/problem?id=1122 题意:给出地图并且给出终点和多个起点,输出从各个起点到终点的路径和时间. 思路: 因为有多个起点,所以这里反向建图,这样就相当于把终点变成 ...

- python 清空列表

# lst = ["篮球","排球","乒乓球","足球","电子竞技","台球" ...

- Spring Cloud组件

Spring Cloud Eureka Eureka负责服务的注册于发现,Eureka的角色和 Zookeeper差不多,都是服务的注册和发现,构成Eureka体系的包括:服务注册中心.服务提供者.服 ...

- 最全android Demo

1.BeautifulRefreshLayout-漂亮的美食下拉刷新 https://github.com/android-cjj/BeautifulRefreshLayout/tree/Beauti ...

- UVA-11903 Just Finish it up

题目大意:一个环形跑道上有n个加油站,每个加油站可加a[i]加仑油,走到下一站需要w[i]加仑油,初始油箱为空,问能否绕跑道一圈,起点任选,若有多个起点,找出编号最小的. 题目分析:如果从1号加油站开 ...

- powerdesigner安装图解

- SGU 132. Another Chocolate Maniac 状压dp 难度:1

132. Another Chocolate Maniac time limit per test: 0.25 sec. memory limit per test: 4096 KB Bob real ...

- 转载:【Oracle 集群】RAC知识图文详细教程(九)--RAC基本测试与使用

文章导航 集群概念介绍(一) ORACLE集群概念和原理(二) RAC 工作原理和相关组件(三) 缓存融合技术(四) RAC 特殊问题和实战经验(五) ORACLE 11 G版本2 RAC在LINUX ...

- Alpha阶段敏捷冲刺---Day1

一.Daily Scrum Meeting照片 二.今天冲刺情况反馈 1.昨天已完成的工作 昨天我们组全体成员在五社区五号楼719召开了紧急会议,在会议上我们梳理了编写这个程序的所有流程,并且根 ...