solr源码分析之searchComponent

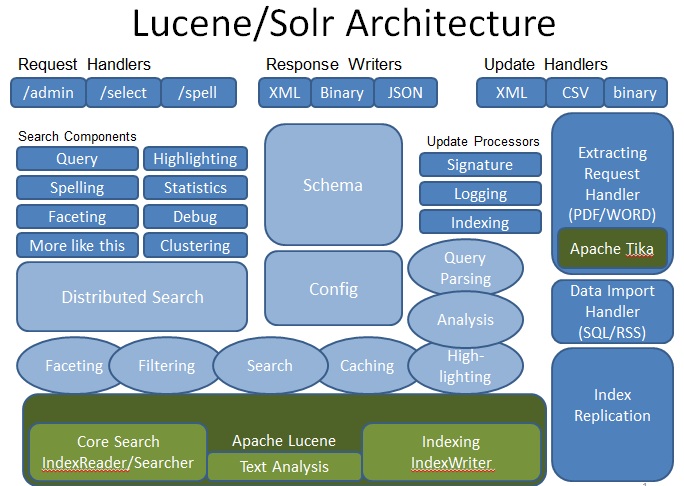

上文solr源码分析之数据导入DataImporter追溯中提到了solr的工作流程,其核心是各种handler。

handler定义了各种search Component,

@Override

public void handleRequestBody(SolrQueryRequest req, SolrQueryResponse rsp) throws Exception

{

List<SearchComponent> components = getComponents();

ResponseBuilder rb = new ResponseBuilder(req, rsp, components);

}

然后调用组件的prepare方法:

if (timer == null) {

// non-debugging prepare phase

for( SearchComponent c : components ) {

c.prepare(rb);

}

} else {

// debugging prepare phase

RTimer subt = timer.sub( "prepare" );

for( SearchComponent c : components ) {

rb.setTimer( subt.sub( c.getName() ) );

c.prepare(rb);

rb.getTimer().stop();

}

subt.stop();

}

再调用组件的process方法:

if(!rb.isDebug()) {

// Process

for( SearchComponent c : components ) {

c.process(rb);

}

}

else {

// Process

RTimer subt = timer.sub( "process" );

for( SearchComponent c : components ) {

rb.setTimer( subt.sub( c.getName() ) );

c.process(rb);

rb.getTimer().stop();

}

subt.stop();

// add the timing info

if (rb.isDebugTimings()) {

rb.addDebugInfo("timing", timer.asNamedList() );

}

}

从源码上来分析一下search Component,searchComponent的结构如下:

以QueryComponent为例,理解一下它的工作原理。

prepare方法获取查询语句:

@Override

public void prepare(ResponseBuilder rb) throws IOException

{ SolrQueryRequest req = rb.req;

SolrParams params = req.getParams();

if (!params.getBool(COMPONENT_NAME, true)) {

return;

}

SolrQueryResponse rsp = rb.rsp; // Set field flags

ReturnFields returnFields = new SolrReturnFields( req );

rsp.setReturnFields( returnFields );

int flags = 0;

if (returnFields.wantsScore()) {

flags |= SolrIndexSearcher.GET_SCORES;

}

rb.setFieldFlags( flags ); String defType = params.get(QueryParsing.DEFTYPE, QParserPlugin.DEFAULT_QTYPE); // get it from the response builder to give a different component a chance

// to set it.

String queryString = rb.getQueryString();

if (queryString == null) {

// this is the normal way it's set.

queryString = params.get( CommonParams.Q );

rb.setQueryString(queryString);

} try {

QParser parser = QParser.getParser(rb.getQueryString(), defType, req);

Query q = parser.getQuery();

if (q == null) {

// normalize a null query to a query that matches nothing

q = new MatchNoDocsQuery();

} rb.setQuery( q ); String rankQueryString = rb.req.getParams().get(CommonParams.RQ);

if(rankQueryString != null) {

QParser rqparser = QParser.getParser(rankQueryString, defType, req);

Query rq = rqparser.getQuery();

if(rq instanceof RankQuery) {

RankQuery rankQuery = (RankQuery)rq;

rb.setRankQuery(rankQuery);

MergeStrategy mergeStrategy = rankQuery.getMergeStrategy();

if(mergeStrategy != null) {

rb.addMergeStrategy(mergeStrategy);

if(mergeStrategy.handlesMergeFields()) {

rb.mergeFieldHandler = mergeStrategy;

}

}

} else {

throw new SolrException(SolrException.ErrorCode.BAD_REQUEST,"rq parameter must be a RankQuery");

}

} rb.setSortSpec( parser.getSort(true) );

rb.setQparser(parser); final String cursorStr = rb.req.getParams().get(CursorMarkParams.CURSOR_MARK_PARAM);

if (null != cursorStr) {

final CursorMark cursorMark = new CursorMark(rb.req.getSchema(),

rb.getSortSpec());

cursorMark.parseSerializedTotem(cursorStr);

rb.setCursorMark(cursorMark);

} String[] fqs = req.getParams().getParams(CommonParams.FQ);

if (fqs!=null && fqs.length!=0) {

List<Query> filters = rb.getFilters();

// if filters already exists, make a copy instead of modifying the original

filters = filters == null ? new ArrayList<Query>(fqs.length) : new ArrayList<>(filters);

for (String fq : fqs) {

if (fq != null && fq.trim().length()!=0) {

QParser fqp = QParser.getParser(fq, null, req);

filters.add(fqp.getQuery());

}

}

// only set the filters if they are not empty otherwise

// fq=&someotherParam= will trigger all docs filter for every request

// if filter cache is disabled

if (!filters.isEmpty()) {

rb.setFilters( filters );

}

}

} catch (SyntaxError e) {

throw new SolrException(SolrException.ErrorCode.BAD_REQUEST, e);

} if (params.getBool(GroupParams.GROUP, false)) {

prepareGrouping(rb);

} else {

//Validate only in case of non-grouping search.

if(rb.getSortSpec().getCount() < 0) {

throw new SolrException(SolrException.ErrorCode.BAD_REQUEST, "'rows' parameter cannot be negative");

}

} //Input validation.

if (rb.getQueryCommand().getOffset() < 0) {

throw new SolrException(SolrException.ErrorCode.BAD_REQUEST, "'start' parameter cannot be negative");

}

}

process方法执行查询:

/**

* Actually run the query

*/

@Override

public void process(ResponseBuilder rb) throws IOException

{

LOG.debug("process: {}", rb.req.getParams()); SolrQueryRequest req = rb.req;

SolrParams params = req.getParams();

if (!params.getBool(COMPONENT_NAME, true)) {

return;

}

SolrIndexSearcher searcher = req.getSearcher(); StatsCache statsCache = req.getCore().getStatsCache(); int purpose = params.getInt(ShardParams.SHARDS_PURPOSE, ShardRequest.PURPOSE_GET_TOP_IDS);

if ((purpose & ShardRequest.PURPOSE_GET_TERM_STATS) != 0) {

statsCache.returnLocalStats(rb, searcher);

return;

}

// check if we need to update the local copy of global dfs

if ((purpose & ShardRequest.PURPOSE_SET_TERM_STATS) != 0) {

// retrieve from request and update local cache

statsCache.receiveGlobalStats(req);

} SolrQueryResponse rsp = rb.rsp;

IndexSchema schema = searcher.getSchema(); // Optional: This could also be implemented by the top-level searcher sending

// a filter that lists the ids... that would be transparent to

// the request handler, but would be more expensive (and would preserve score

// too if desired).

String ids = params.get(ShardParams.IDS);

if (ids != null) {

SchemaField idField = schema.getUniqueKeyField();

List<String> idArr = StrUtils.splitSmart(ids, ",", true);

int[] luceneIds = new int[idArr.size()];

int docs = 0;

for (int i=0; i<idArr.size(); i++) {

int id = searcher.getFirstMatch(

new Term(idField.getName(), idField.getType().toInternal(idArr.get(i))));

if (id >= 0)

luceneIds[docs++] = id;

} DocListAndSet res = new DocListAndSet();

res.docList = new DocSlice(0, docs, luceneIds, null, docs, 0);

if (rb.isNeedDocSet()) {

// TODO: create a cache for this!

List<Query> queries = new ArrayList<>();

queries.add(rb.getQuery());

List<Query> filters = rb.getFilters();

if (filters != null) queries.addAll(filters);

res.docSet = searcher.getDocSet(queries);

}

rb.setResults(res); ResultContext ctx = new ResultContext();

ctx.docs = rb.getResults().docList;

ctx.query = null; // anything?

rsp.add("response", ctx);

return;

} // -1 as flag if not set.

long timeAllowed = params.getLong(CommonParams.TIME_ALLOWED, -1L);

if (null != rb.getCursorMark() && 0 < timeAllowed) {

// fundamentally incompatible

throw new SolrException(SolrException.ErrorCode.BAD_REQUEST, "Can not search using both " +

CursorMarkParams.CURSOR_MARK_PARAM + " and " + CommonParams.TIME_ALLOWED);

} SolrIndexSearcher.QueryCommand cmd = rb.getQueryCommand();

cmd.setTimeAllowed(timeAllowed); req.getContext().put(SolrIndexSearcher.STATS_SOURCE, statsCache.get(req)); SolrIndexSearcher.QueryResult result = new SolrIndexSearcher.QueryResult(); //

// grouping / field collapsing

//

GroupingSpecification groupingSpec = rb.getGroupingSpec();

if (groupingSpec != null) {

try {

boolean needScores = (cmd.getFlags() & SolrIndexSearcher.GET_SCORES) != 0;

if (params.getBool(GroupParams.GROUP_DISTRIBUTED_FIRST, false)) {

CommandHandler.Builder topsGroupsActionBuilder = new CommandHandler.Builder()

.setQueryCommand(cmd)

.setNeedDocSet(false) // Order matters here

.setIncludeHitCount(true)

.setSearcher(searcher); for (String field : groupingSpec.getFields()) {

topsGroupsActionBuilder.addCommandField(new SearchGroupsFieldCommand.Builder()

.setField(schema.getField(field))

.setGroupSort(groupingSpec.getGroupSort())

.setTopNGroups(cmd.getOffset() + cmd.getLen())

.setIncludeGroupCount(groupingSpec.isIncludeGroupCount())

.build()

);

} CommandHandler commandHandler = topsGroupsActionBuilder.build();

commandHandler.execute();

SearchGroupsResultTransformer serializer = new SearchGroupsResultTransformer(searcher);

rsp.add("firstPhase", commandHandler.processResult(result, serializer));

rsp.add("totalHitCount", commandHandler.getTotalHitCount());

rb.setResult(result);

return;

} else if (params.getBool(GroupParams.GROUP_DISTRIBUTED_SECOND, false)) {

CommandHandler.Builder secondPhaseBuilder = new CommandHandler.Builder()

.setQueryCommand(cmd)

.setTruncateGroups(groupingSpec.isTruncateGroups() && groupingSpec.getFields().length > 0)

.setSearcher(searcher); for (String field : groupingSpec.getFields()) {

SchemaField schemaField = schema.getField(field);

String[] topGroupsParam = params.getParams(GroupParams.GROUP_DISTRIBUTED_TOPGROUPS_PREFIX + field);

if (topGroupsParam == null) {

topGroupsParam = new String[0];

} List<SearchGroup<BytesRef>> topGroups = new ArrayList<>(topGroupsParam.length);

for (String topGroup : topGroupsParam) {

SearchGroup<BytesRef> searchGroup = new SearchGroup<>();

if (!topGroup.equals(TopGroupsShardRequestFactory.GROUP_NULL_VALUE)) {

searchGroup.groupValue = new BytesRef(schemaField.getType().readableToIndexed(topGroup));

}

topGroups.add(searchGroup);

} secondPhaseBuilder.addCommandField(

new TopGroupsFieldCommand.Builder()

.setField(schemaField)

.setGroupSort(groupingSpec.getGroupSort())

.setSortWithinGroup(groupingSpec.getSortWithinGroup())

.setFirstPhaseGroups(topGroups)

.setMaxDocPerGroup(groupingSpec.getGroupOffset() + groupingSpec.getGroupLimit())

.setNeedScores(needScores)

.setNeedMaxScore(needScores)

.build()

);

} for (String query : groupingSpec.getQueries()) {

secondPhaseBuilder.addCommandField(new QueryCommand.Builder()

.setDocsToCollect(groupingSpec.getOffset() + groupingSpec.getLimit())

.setSort(groupingSpec.getGroupSort())

.setQuery(query, rb.req)

.setDocSet(searcher)

.build()

);

} CommandHandler commandHandler = secondPhaseBuilder.build();

commandHandler.execute();

TopGroupsResultTransformer serializer = new TopGroupsResultTransformer(rb);

rsp.add("secondPhase", commandHandler.processResult(result, serializer));

rb.setResult(result);

return;

} int maxDocsPercentageToCache = params.getInt(GroupParams.GROUP_CACHE_PERCENTAGE, 0);

boolean cacheSecondPassSearch = maxDocsPercentageToCache >= 1 && maxDocsPercentageToCache <= 100;

Grouping.TotalCount defaultTotalCount = groupingSpec.isIncludeGroupCount() ?

Grouping.TotalCount.grouped : Grouping.TotalCount.ungrouped;

int limitDefault = cmd.getLen(); // this is normally from "rows"

Grouping grouping =

new Grouping(searcher, result, cmd, cacheSecondPassSearch, maxDocsPercentageToCache, groupingSpec.isMain());

grouping.setSort(groupingSpec.getGroupSort())

.setGroupSort(groupingSpec.getSortWithinGroup())

.setDefaultFormat(groupingSpec.getResponseFormat())

.setLimitDefault(limitDefault)

.setDefaultTotalCount(defaultTotalCount)

.setDocsPerGroupDefault(groupingSpec.getGroupLimit())

.setGroupOffsetDefault(groupingSpec.getGroupOffset())

.setGetGroupedDocSet(groupingSpec.isTruncateGroups()); if (groupingSpec.getFields() != null) {

for (String field : groupingSpec.getFields()) {

grouping.addFieldCommand(field, rb.req);

}

} if (groupingSpec.getFunctions() != null) {

for (String groupByStr : groupingSpec.getFunctions()) {

grouping.addFunctionCommand(groupByStr, rb.req);

}

} if (groupingSpec.getQueries() != null) {

for (String groupByStr : groupingSpec.getQueries()) {

grouping.addQueryCommand(groupByStr, rb.req);

}

} if (rb.doHighlights || rb.isDebug() || params.getBool(MoreLikeThisParams.MLT, false)) {

// we need a single list of the returned docs

cmd.setFlags(SolrIndexSearcher.GET_DOCLIST);

} grouping.execute();

if (grouping.isSignalCacheWarning()) {

rsp.add(

"cacheWarning",

String.format(Locale.ROOT, "Cache limit of %d percent relative to maxdoc has exceeded. Please increase cache size or disable caching.", maxDocsPercentageToCache)

);

}

rb.setResult(result); if (grouping.mainResult != null) {

ResultContext ctx = new ResultContext();

ctx.docs = grouping.mainResult;

ctx.query = null; // TODO? add the query?

rsp.add("response", ctx);

rsp.getToLog().add("hits", grouping.mainResult.matches());

} else if (!grouping.getCommands().isEmpty()) { // Can never be empty since grouping.execute() checks for this.

rsp.add("grouped", result.groupedResults);

rsp.getToLog().add("hits", grouping.getCommands().get(0).getMatches());

}

return;

} catch (SyntaxError e) {

throw new SolrException(SolrException.ErrorCode.BAD_REQUEST, e);

}

} // normal search result

searcher.search(result, cmd);

rb.setResult(result); ResultContext ctx = new ResultContext();

ctx.docs = rb.getResults().docList;

ctx.query = rb.getQuery();

rsp.add("response", ctx);

rsp.getToLog().add("hits", rb.getResults().docList.matches()); if ( ! rb.req.getParams().getBool(ShardParams.IS_SHARD,false) ) {

if (null != rb.getNextCursorMark()) {

rb.rsp.add(CursorMarkParams.CURSOR_MARK_NEXT,

rb.getNextCursorMark().getSerializedTotem());

}

} if(rb.mergeFieldHandler != null) {

rb.mergeFieldHandler.handleMergeFields(rb, searcher);

} else {

doFieldSortValues(rb, searcher);

} doPrefetch(rb);

}

solr源码分析之searchComponent的更多相关文章

- solr源码分析之solrclound

一.简介 SolrCloud是Solr4.0版本以后基于Solr和Zookeeper的分布式搜索方案.SolrCloud是Solr的基于Zookeeper一种部署方式.Solr可以以多种方式部署,例如 ...

- solr源码分析之数据导入DataImporter追溯。

若要搜索的信息都是被存储在数据库里面的,但是solr不能直接搜数据库,所以只有借助Solr组件将要搜索的信息在搜索服务器上进行索引,然后在客户端供客户使用. 1. SolrDispatchFilter ...

- Solr初始化源码分析-Solr初始化与启动

用solr做项目已经有一年有余,但都是使用层面,只是利用solr现有机制,修改参数,然后监控调优,从没有对solr进行源码级别的研究.但是,最近手头的一个项目,让我感觉必须把solrn内部原理和扩展机 ...

- Solr4.8.0源码分析(7)之Solr SPI

Solr4.8.0源码分析(7)之Solr SPI 查看Solr源码时候会发现,每一个package都会由对应的resources. 如下图所示: 一时对这玩意好奇了,看了文档以后才发现,这个serv ...

- Solr4.8.0源码分析(4)之Eclipse Solr调试环境搭建

Solr4.8.0源码分析(4)之Eclipse Solr调试环境搭建 由于公司里的Solr调试都是用远程jpda进行的,但是家里只有一台电脑所以不能jpda进行调试,这是因为jpda的端口冲突.所以 ...

- Solr4.8.0源码分析(5)之查询流程分析总述

Solr4.8.0源码分析(5)之查询流程分析总述 前面已经写到,solr查询是通过http发送命令,solr servlet接受并进行处理.所以solr的查询流程从SolrDispatchsFilt ...

- Solr5.0源码分析-SolrDispatchFilter

年初,公司开发法律行业的搜索引擎.当时,我作为整个系统的核心成员,选择solr,并在solr根据我们的要求做了相应的二次开发.但是,对solr的还没有进行认真仔细的研究.最近,事情比较清闲,翻翻sol ...

- Heritrix源码分析(十四) 如何让Heritrix不间断的抓取(转)

欢迎加入Heritrix群(QQ):109148319,10447185 , Lucene/Solr群(QQ) : 118972724 本博客已迁移到本人独立博客: http://www.yun5u ...

- Heritrix源码分析(九) Heritrix的二次抓取以及如何让Heritrix抓取你不想抓取的URL

本博客属原创文章,欢迎转载!转载请务必注明出处:http://guoyunsky.iteye.com/blog/644396 本博客已迁移到本人独立博客: http://www.yun5u ...

随机推荐

- 20155310 2016-2017-2 《Java程序设计》第四周学习总结

20155310 2016-2017-2 <Java程序设计>第四周学习总结 一周两章新知识的自学与理解真的是很考验和锻炼我们,也对前面几章我们的学习进行了检测,遇到忘记和不懂的知识就再复 ...

- 20155332 2006-2007-2 《Java程序设计》第3周学习总结

学号 2006-2007-2 <Java程序设计>第3周学习总结 教材学习内容总结 尽量简单的总结一下本周学习内容 尽量不要抄书,浪费时间 看懂就过,看不懂,学习有心得的记一下 教材学习中 ...

- sql语句-6-高级查询

- 【LG3237】[HNOI2014]米特运输

题面 洛谷 题解 代码 #include <iostream> #include <cstdio> #include <cstdlib> #include < ...

- js创建对象 object.create()用法

Object.create()方法是ECMAScript 5中新增的方法,这个方法用于创建一个新对象.被创建的对象继承另一个对象的原型,在创建新对象时可以指定一些属性. 语法: Object.crea ...

- R的数据读写

目录 1 简介 在使用任何一款数据分析软件的时候,首先要做的就是数据成功的读写问题,所以不同于其他文档的书写方法,本文将探讨如何读写本地文本文件. 2 运行环境 操作系统:Win10 R版本:R-3. ...

- Flask开发环境搭建

基础准备 Python 3.6.5 Conda Visual Studio Code 虚拟环境 创建虚拟环境 conda create -n flask 激活虚拟环境 activate flask 关 ...

- 五、利用EnterpriseFrameWork快速开发基于WebServices的接口

回<[开源]EnterpriseFrameWork框架系列文章索引> EnterpriseFrameWork框架实例源代码下载: 实例下载 前面几章已完成EnterpriseFrameWo ...

- Gradle快速上手——从Maven到Gradle

[本文写作于2018年7月5日] 本文适合于有一定Maven应用基础,想快速上手Gradle的读者. 背景 Maven.Gradle都是著名的依赖管理及自动构建工具.提到依赖管理与自动构建,其重要性在 ...

- conda环境管理

查看环境 conda env list 创建环境 conda create -n python36 python=3.6 进入环境 source activate python36 activate ...