How Hystrix Works (Official)

https://github.com/Netflix/Hystrix/wiki/How-it-Works

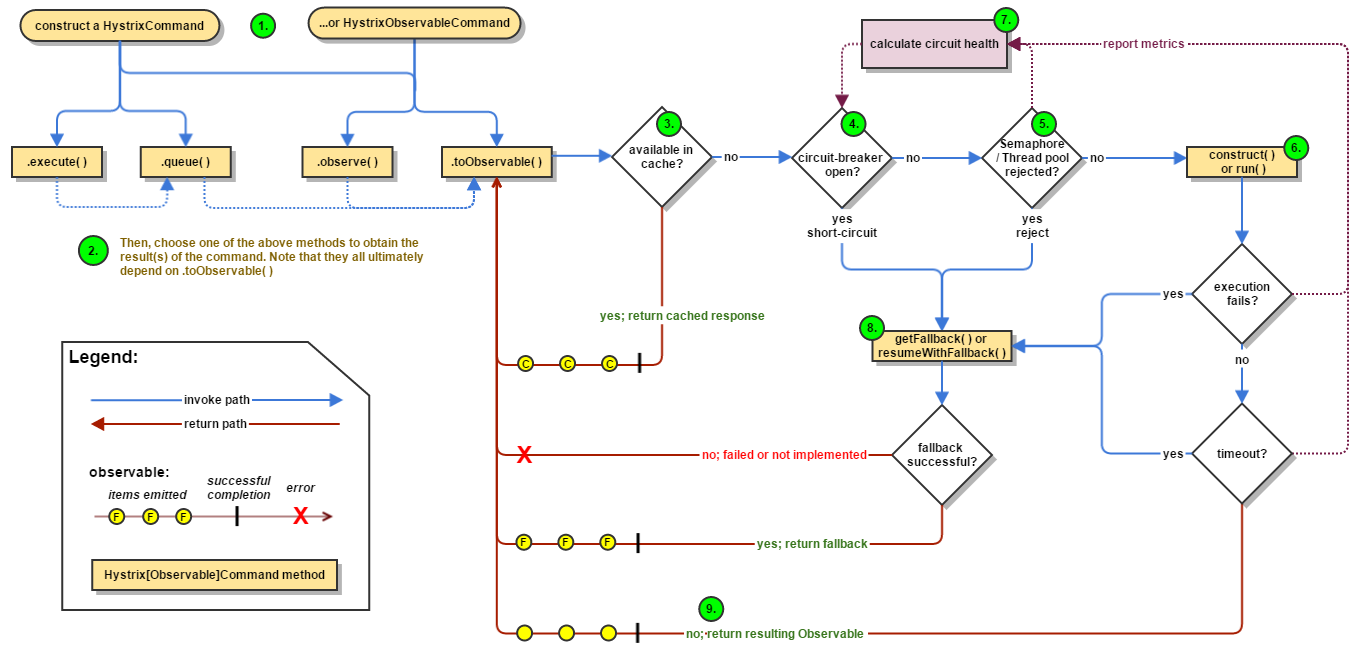

Flow Chart

The following diagram shows what happens when you make a request to a service dependency by means of Hystrix:

The following sections will explain this flow in greater detail:

- Construct a HystrixCommand or HystrixObservableCommand Object

- Execute the Command

- Is the Response Cached?

- Is the Circuit Open?

- Is the Thread Pool/Queue/Semaphore Full?

- HystrixObservableCommand.construct() or HystrixCommand.run()

- Calculate Circuit Health

- Get the Fallback

- Return the Successful Response

1. Construct a HystrixCommand or HystrixObservableCommand Object

The first step is to construct a HystrixCommand or HystrixObservableCommand object to represent the request you are making to the dependency. Pass the constructor any arguments that will be needed when the request is made.

Construct a HystrixCommand object if the dependency is expected to return a single response. For example:

HystrixCommand command = new HystrixCommand(arg1, arg2);

Construct a HystrixObservableCommand object if the dependency is expected to return an Observable that emits responses. For example:

HystrixObservableCommand command = new HystrixObservableCommand(arg1, arg2);

2. Execute the Command

You can execute the command with any of the following four methods of your Hystrix command object (the first two apply only to simple HystrixCommand objects and are not available on HystrixObservableCommand):

- execute(): blocks, then returns the single response received from the dependency (or throws an exception in case of an error)

- queue(): returns a Future with which you can obtain the single response from the dependency

- observe(): subscribes to the Observable that represents the response(s) from the dependency and returns an Observable that replicates that source Observable

- toObservable(): returns an Observable that, when you subscribe to it, will execute the Hystrix command and emit its responses

K value = command.execute();

Future<K> fValue = command.queue();

Observable<K> ohValue = command.observe(); //hot observable

Observable<K> ocValue = command.toObservable(); //cold observable

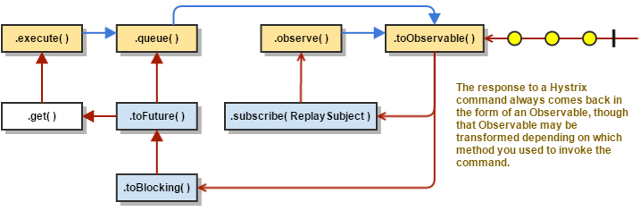

The synchronous call execute() invokes queue().get(). queue() in turn invokes toObservable().toBlocking().toFuture(). In other words, every HystrixCommand is ultimately backed by an Observable implementation, even those commands that are intended to return single, simple values.
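The delegation described above (the blocking call is just the asynchronous call plus a wait) can be sketched in plain Java. This is a minimal illustration only: `SketchCommand` is a hypothetical class, and `CompletableFuture` stands in for the Observable that Hystrix actually uses underneath.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;
import java.util.function.Supplier;

// Hypothetical stand-in for a command; not a Hystrix class.
class SketchCommand<T> {
    private final Supplier<T> work;

    SketchCommand(Supplier<T> work) {
        this.work = work;
    }

    // Asynchronous form: start the work and hand back a Future.
    Future<T> queue() {
        return CompletableFuture.supplyAsync(work);
    }

    // Synchronous form: nothing more than queue() followed by a blocking get().
    T execute() {
        try {
            return queue().get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        } catch (ExecutionException e) {
            // Unwrap so the caller sees the failure raised by the work itself.
            throw new IllegalStateException("command failed", e.getCause());
        }
    }
}
```

With this shape, `new SketchCommand<>(() -> "hello").execute()` returns the value directly, while `queue()` lets the caller decide when to block.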

3. Is the Response Cached?

If request caching is enabled for this command, and if the response to the request is available in the cache, this cached response will be immediately returned in the form of an Observable. (See “Request Caching” below.)

4. Is the Circuit Open?

When you execute the command, Hystrix checks with the circuit-breaker to see if the circuit is open.

If the circuit is open (or “tripped”) then Hystrix will not execute the command but will route the flow to (8) Get the Fallback.

If the circuit is closed then the flow proceeds to (5) to check if there is capacity available to run the command.

5. Is the Thread Pool/Queue/Semaphore Full?

If the thread-pool and queue (or semaphore, if not running in a thread) that are associated with the command are full then Hystrix will not execute the command but will immediately route the flow to (8) Get the Fallback.
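The "reject when full" behavior can be illustrated with a bounded `ThreadPoolExecutor` from the JDK. This is a sketch under stated assumptions, not Hystrix's implementation: `BoundedPoolSketch` and `submitOrFallback` are hypothetical names, and the pool is deliberately tiny (one thread, one queue slot) so that the third concurrent task is rejected.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Plain-Java illustration of rejecting work when thread and queue are full.
class BoundedPoolSketch {
    // One worker thread plus one queue slot: a third concurrent task is rejected.
    private final ThreadPoolExecutor pool = new ThreadPoolExecutor(
            1, 1, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>(1));

    <T> CompletableFuture<T> submitOrFallback(Supplier<T> work, T fallback) {
        try {
            return CompletableFuture.supplyAsync(work, pool);
        } catch (RejectedExecutionException e) {
            // Thread and queue are both occupied: answer immediately with the fallback.
            return CompletableFuture.completedFuture(fallback);
        }
    }

    void shutdown() {
        pool.shutdownNow();
    }
}
```

The point of the design is that a rejected caller does not wait at all; it receives the fallback as an already-completed future.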

6. HystrixObservableCommand.construct() or HystrixCommand.run()

Here, Hystrix invokes the request to the dependency by means of the method you have written for this purpose, one of the following:

- HystrixCommand.run(): returns a single response or throws an exception

- HystrixObservableCommand.construct(): returns an Observable that emits the response(s) or sends an onError notification

If the run() or construct() method exceeds the command’s timeout value, the thread will throw a TimeoutException (or a separate timer thread will, if the command itself is not running in its own thread). In that case Hystrix routes the response through 8. Get the Fallback, and it discards the eventual return value of the run() or construct() method if that method does not cancel or respond to the interrupt.

If the command did not throw any exceptions and it returned a response, Hystrix returns this response after it performs some logging and metrics reporting. In the case of run(), Hystrix returns an Observable that emits the single response and then makes an onCompleted notification; in the case of construct() Hystrix returns the same Observable returned by construct().
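The timeout path can be sketched with a JDK `Future`: the caller waits a bounded time, and on expiry it takes the fallback and ignores whatever the worker produces later. `TimeoutSketch` and `callWithTimeout` are hypothetical names, and the one-off executor is an assumption made to keep the example short; Hystrix itself uses its own thread pools and timer.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.Supplier;

class TimeoutSketch {
    static <T> T callWithTimeout(Supplier<T> work, long timeoutMillis, T fallback) {
        // A fresh single-thread executor per call keeps the sketch self-contained.
        ExecutorService worker = Executors.newSingleThreadExecutor();
        Callable<T> task = work::get;
        Future<T> pending = worker.submit(task);
        try {
            return pending.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            pending.cancel(true); // interrupt the worker; any late result is discarded
            return fallback;
        } catch (ExecutionException e) {
            return fallback;      // the work itself threw
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return fallback;
        } finally {
            worker.shutdownNow();
        }
    }
}
```

Note that `cancel(true)` only interrupts the worker. If the wrapped code ignores interrupts, it keeps running in the background even though the caller has already moved on.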

7. Calculate Circuit Health

Hystrix reports successes, failures, rejections, and timeouts to the circuit breaker, which maintains a rolling set of counters that calculate statistics.

It uses these stats to determine when the circuit should “trip,” at which point it short-circuits any subsequent requests until a recovery period elapses, upon which it closes the circuit again after first checking certain health checks.
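A "rolling set of counters" can be pictured as a ring of time buckets whose oldest entries drop out as time advances. The following is a simplified sketch, not Hystrix's metrics code: `RollingErrorRate` is a hypothetical class, it tracks only successes and failures, and the clock is injected (and assumed non-negative) so the behavior is easy to test.

```java
import java.util.Arrays;
import java.util.function.LongSupplier;

class RollingErrorRate {
    private final long bucketMillis;
    private final long[] bucketStart; // start time of the bucket held in each slot
    private final int[] successes;
    private final int[] failures;
    private final LongSupplier clock;

    RollingErrorRate(int buckets, long bucketMillis, LongSupplier clock) {
        this.bucketMillis = bucketMillis;
        this.bucketStart = new long[buckets];
        this.successes = new int[buckets];
        this.failures = new int[buckets];
        this.clock = clock;
        Arrays.fill(bucketStart, Long.MIN_VALUE); // no slot holds data yet
    }

    synchronized void record(boolean success) {
        long now = clock.getAsLong();
        long start = now - (now % bucketMillis);
        int slot = (int) ((now / bucketMillis) % bucketStart.length);
        if (bucketStart[slot] != start) { // slot still holds an expired bucket: reuse it
            bucketStart[slot] = start;
            successes[slot] = 0;
            failures[slot] = 0;
        }
        if (success) {
            successes[slot]++;
        } else {
            failures[slot]++;
        }
    }

    synchronized int errorPercentage() {
        long oldestAllowed = clock.getAsLong() - bucketMillis * bucketStart.length;
        int ok = 0;
        int failed = 0;
        for (int slot = 0; slot < bucketStart.length; slot++) {
            if (bucketStart[slot] > oldestAllowed) { // skip buckets that rolled out of the window
                ok += successes[slot];
                failed += failures[slot];
            }
        }
        int total = ok + failed;
        return total == 0 ? 0 : failed * 100 / total;
    }
}
```

Because old buckets are simply ignored once they fall outside the window, a burst of past failures stops influencing the error percentage after the window has rolled over.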

8. Get the Fallback

Hystrix tries to revert to your fallback whenever a command execution fails: when an exception is thrown by construct() or run() (6.), when the command is short-circuited because the circuit is open (4.), when the command’s thread pool and queue or semaphore are at capacity (5.), or when the command has exceeded its timeout length.

Write your fallback to provide a generic response, without any network dependency, from an in-memory cache or by means of other static logic. If you must use a network call in the fallback, you should do so by means of another HystrixCommand or HystrixObservableCommand.

In the case of a HystrixCommand, to provide fallback logic you implement HystrixCommand.getFallback() which returns a single fallback value.

In the case of a HystrixObservableCommand, to provide fallback logic you implement HystrixObservableCommand.resumeWithFallback() which returns an Observable that may emit a fallback value or values.

If the fallback method returns a response then Hystrix will return this response to the caller. In the case of a HystrixCommand.getFallback(), it will return an Observable that emits the value returned from the method. In the case of HystrixObservableCommand.resumeWithFallback() it will return the same Observable returned from the method.

If you have not implemented a fallback method for your Hystrix command, or if the fallback itself throws an exception, Hystrix still returns an Observable, but one that emits nothing and immediately terminates with an onError notification. It is through this onError notification that the exception that caused the command to fail is transmitted back to the caller. (It is a poor practice to implement a fallback implementation that can fail. You should implement your fallback such that it is not performing any logic that could fail.)

The result of a failed or nonexistent fallback will differ depending on how you invoked the Hystrix command:

- execute(): throws an exception

- queue(): successfully returns a Future, but this Future will throw an exception if its get() method is called

- observe(): returns an Observable that, when you subscribe to it, will immediately terminate by calling the subscriber’s onError method

- toObservable(): returns an Observable that, when you subscribe to it, will terminate by calling the subscriber’s onError method
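The fallback rules in this section (use the fallback on failure; if there is no fallback or the fallback itself fails, surface the original failure) can be sketched in a few lines. `FallbackSketch` and `runWithFallback` are hypothetical names; a `null` fallback models a command that has not implemented one, and recording the fallback's own failure as a suppressed exception is a choice of this sketch rather than something the text above specifies.

```java
import java.util.function.Supplier;

class FallbackSketch {
    static <T> T runWithFallback(Supplier<T> primary, Supplier<T> fallback) {
        try {
            return primary.get();
        } catch (RuntimeException primaryFailure) {
            if (fallback == null) {
                throw primaryFailure; // no fallback implemented: surface the original error
            }
            try {
                return fallback.get();
            } catch (RuntimeException fallbackFailure) {
                // Fallback failed too: the caller still sees the failure that started it all.
                primaryFailure.addSuppressed(fallbackFailure);
                throw primaryFailure;
            }
        }
    }
}
```

A fallback that returns a static value, for example `() -> "cached-default"`, cannot take the second `catch` branch, which is why the text recommends fallbacks without logic that could fail.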

9. Return the Successful Response

If the Hystrix command succeeds, it will return the response or responses to the caller in the form of an Observable. Depending on how you have invoked the command in step 2, above, this Observable may be transformed before it is returned to you:

- execute(): obtains a Future in the same manner as does .queue() and then calls get() on this Future to obtain the single value emitted by the Observable

- queue(): converts the Observable into a BlockingObservable so that it can be converted into a Future, then returns this Future

- observe(): subscribes to the Observable immediately and begins the flow that executes the command; returns an Observable that, when you subscribe to it, replays the emissions and notifications

- toObservable(): returns the Observable unchanged; you must subscribe to it in order to actually begin the flow that leads to the execution of the command

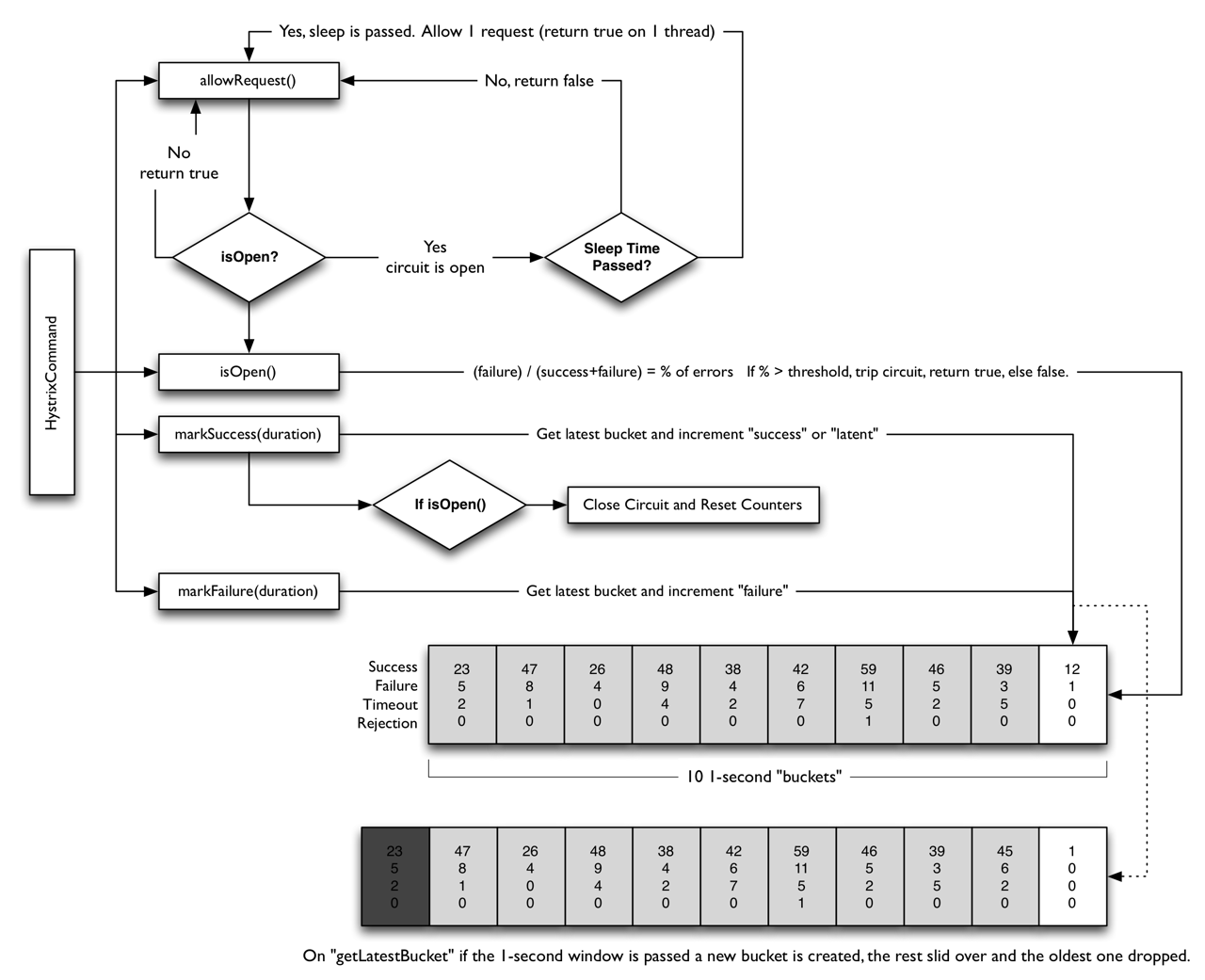

Circuit Breaker

The following diagram shows how a HystrixCommand or HystrixObservableCommand interacts with a HystrixCircuitBreaker and its flow of logic and decision-making, including how the counters behave in the circuit breaker.

The precise way that the circuit opening and closing occurs is as follows:

- Assuming the volume across a circuit meets a certain threshold (HystrixCommandProperties.circuitBreakerRequestVolumeThreshold())...

- And assuming that the error percentage exceeds the threshold error percentage (HystrixCommandProperties.circuitBreakerErrorThresholdPercentage())...

- Then the circuit-breaker transitions from CLOSED to OPEN.

- While it is open, it short-circuits all requests made against that circuit-breaker.

- After some amount of time (HystrixCommandProperties.circuitBreakerSleepWindowInMilliseconds()), the next single request is let through (this is the HALF-OPEN state). If the request fails, the circuit-breaker returns to the OPEN state for the duration of the sleep window. If the request succeeds, the circuit-breaker transitions to CLOSED and the logic in the first step takes over again.
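The transitions listed above can be sketched as a small state machine. This is an illustration, not `HystrixCircuitBreaker` itself: `CircuitBreakerSketch` is a hypothetical class, its three constructor parameters merely mirror the three properties named above, the counters are cumulative since the last close rather than rolling, and the clock is injected for testability.

```java
import java.util.function.LongSupplier;

class CircuitBreakerSketch {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int requestVolumeThreshold;
    private final int errorThresholdPercentage;
    private final long sleepWindowMillis;
    private final LongSupplier clock;

    private State state = State.CLOSED;
    private int requests;
    private int errors;
    private long openedAt;

    CircuitBreakerSketch(int requestVolumeThreshold, int errorThresholdPercentage,
                         long sleepWindowMillis, LongSupplier clock) {
        this.requestVolumeThreshold = requestVolumeThreshold;
        this.errorThresholdPercentage = errorThresholdPercentage;
        this.sleepWindowMillis = sleepWindowMillis;
        this.clock = clock;
    }

    synchronized State state() {
        return state;
    }

    synchronized boolean allowRequest() {
        if (state == State.CLOSED) {
            return true;
        }
        if (state == State.OPEN && clock.getAsLong() - openedAt >= sleepWindowMillis) {
            state = State.HALF_OPEN; // sleep window elapsed: admit a single trial request
            return true;
        }
        return false; // still sleeping, or the trial request has not reported back yet
    }

    synchronized void recordSuccess() {
        if (state == State.HALF_OPEN) {
            state = State.CLOSED; // trial succeeded: start counting from scratch
            requests = 0;
            errors = 0;
        } else if (state == State.CLOSED) {
            requests++;
        }
    }

    synchronized void recordFailure() {
        if (state == State.HALF_OPEN) {
            open(); // trial failed: sleep for another full window
        } else if (state == State.CLOSED) {
            requests++;
            errors++;
            boolean enoughVolume = requests >= requestVolumeThreshold;
            boolean tooManyErrors = errors * 100 / requests > errorThresholdPercentage;
            if (enoughVolume && tooManyErrors) {
                open();
            }
        }
    }

    private void open() {
        state = State.OPEN;
        openedAt = clock.getAsLong();
    }
}
```

A caller would check `allowRequest()` before running a command and report the outcome with `recordSuccess()` or `recordFailure()` afterwards.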

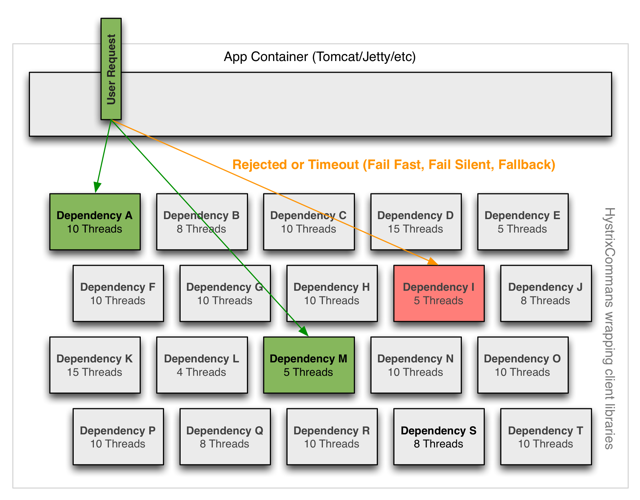

Isolation

Hystrix employs the bulkhead pattern to isolate dependencies from each other and to limit concurrent access to any one of them.

Threads & Thread Pools

Clients (libraries, network calls, etc.) execute on separate threads. This isolates them from the calling thread (Tomcat thread pool) so that the caller may “walk away” from a dependency call that is taking too long.

Hystrix uses separate, per-dependency thread pools as a way of constraining any given dependency so latency on the underlying executions will saturate the available threads only in that pool.
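The per-dependency pool idea can be sketched with one small JDK executor per dependency name. This is a minimal illustration under stated assumptions: `BulkheadSketch` and `trySubmit` are hypothetical, the dependency names used with it are made up, and the pools have no queue so that saturation shows up immediately.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class BulkheadSketch {
    private final Map<String, ThreadPoolExecutor> pools = new ConcurrentHashMap<>();
    private final int threadsPerDependency;

    BulkheadSketch(int threadsPerDependency) {
        this.threadsPerDependency = threadsPerDependency;
    }

    // Returns false when the pool that belongs to this dependency is saturated.
    boolean trySubmit(String dependency, Runnable task) {
        ThreadPoolExecutor pool = pools.computeIfAbsent(dependency, name ->
                new ThreadPoolExecutor(threadsPerDependency, threadsPerDependency,
                        0L, TimeUnit.MILLISECONDS, new SynchronousQueue<>()));
        try {
            pool.execute(task);
            return true;
        } catch (RejectedExecutionException e) {
            return false; // only this dependency's callers are turned away
        }
    }

    void shutdown() {
        pools.values().forEach(ThreadPoolExecutor::shutdownNow);
    }
}
```

If every thread of one dependency's pool is stuck on a slow call, further calls to that dependency are rejected, while calls to any other dependency still find free threads in their own pool.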

It is possible for you to protect against failure without the use of thread pools, but this requires the client being trusted to fail very quickly (network connect/read timeouts and retry configuration) and to always behave well.

Netflix, in its design of Hystrix, chose the use of threads and thread-pools to achieve isolation for many reasons including:

- Many applications execute dozens (and sometimes well over 100) different back-end service calls against dozens of different services developed by as many different teams.

- Each service provides its own client library.

- Client libraries are changing all the time.

- Client library logic can change to add new network calls.

- Client libraries can contain logic such as retries, data parsing, caching (in-memory or across network), and other such behavior.

- Client libraries tend to be “black boxes” — opaque to their users about implementation details, network access patterns, configuration defaults, etc.

- In several real-world production outages the determination was “oh, something changed and properties should be adjusted” or “the client library changed its behavior.”

- Even if a client itself doesn’t change, the service itself can change, which can then impact performance characteristics which can then cause the client configuration to be invalid.

- Transitive dependencies can pull in other client libraries that are not expected and perhaps not correctly configured.

- Most network access is performed synchronously.

- Failure and latency can occur in the client-side code as well, not just in the network call.

Benefits of Thread Pools

The benefits of isolation via threads in their own thread pools are:

- The application is fully protected from runaway client libraries. The pool for a given dependency library can fill up without impacting the rest of the application.

- The application can accept new client libraries with far lower risk. If an issue occurs, it is isolated to the library and doesn’t affect everything else.

- When a failed client becomes healthy again, the thread pool will clear up and the application immediately resumes healthy performance, as opposed to a long recovery when the entire Tomcat container is overwhelmed.

- If a client library is misconfigured, the health of a thread pool will quickly demonstrate this (via increased errors, latency, timeouts, rejections, etc.) and you can handle it (typically in real-time via dynamic properties) without affecting application functionality.

- If a client service changes performance characteristics (which happens often enough to be an issue) which in turn cause a need to tune properties (increasing/decreasing timeouts, changing retries, etc.) this again becomes visible through thread pool metrics (errors, latency, timeouts, rejections) and can be handled without impacting other clients, requests, or users.

- Beyond the isolation benefits, having dedicated thread pools provides built-in concurrency which can be leveraged to build asynchronous facades on top of synchronous client libraries (similar to how the Netflix API built a reactive, fully-asynchronous Java API on top of Hystrix commands).

In short, the isolation provided by thread pools allows for the always-changing and dynamic combination of client libraries and subsystem performance characteristics to be handled gracefully without causing outages.

Note: Despite the isolation a separate thread provides, your underlying client code should also have timeouts and/or respond to Thread interrupts so it cannot block indefinitely and saturate the Hystrix thread pool.

Drawbacks of Thread Pools

The primary drawback of thread pools is that they add computational overhead. Each command execution involves the queueing, scheduling, and context switching involved in running a command on a separate thread.

Netflix, in designing this system, decided to accept the cost of this overhead in exchange for the benefits it provides and deemed it minor enough to not have major cost or performance impact.

Cost of Threads

Hystrix measures the latency when it executes the construct() or run() method on the child thread as well as the total end-to-end time on the parent thread. This way you can see the cost of Hystrix overhead (threading, metrics, logging, circuit breaker, etc.).

The Netflix API processes 10+ billion Hystrix Command executions per day using thread isolation. Each API instance has 40+ thread-pools with 5–20 threads in each (most are set to 10).

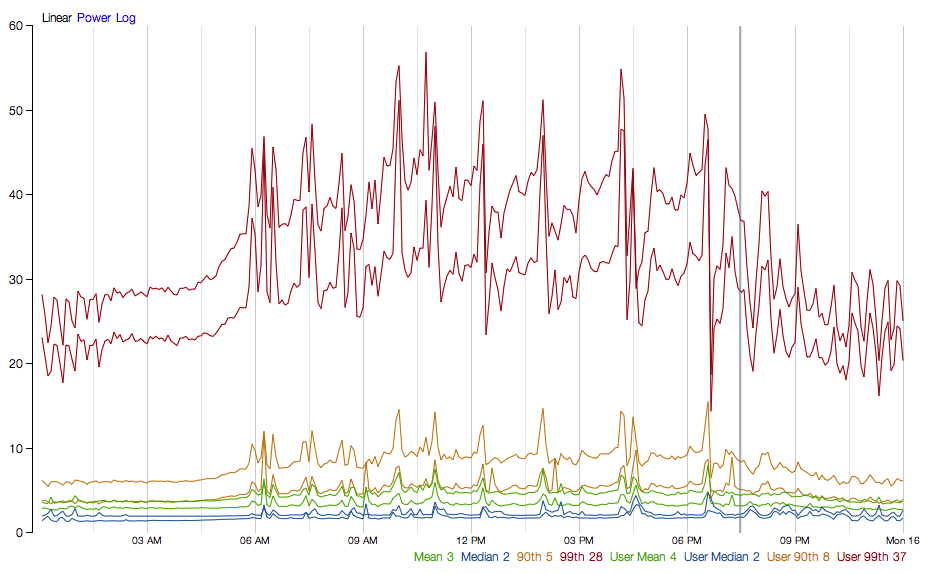

The following diagram represents one HystrixCommand being executed at 60 requests-per-second on a single API instance (of about 350 total threaded executions per second per server):

At the median (and lower) there is no cost to having a separate thread.

At the 90th percentile there is a cost of 3ms for having a separate thread.

At the 99th percentile there is a cost of 9ms for having a separate thread. Note however that the increase in cost is far smaller than the increase in execution time of the separate thread (network request), which jumped from 2ms to 28ms whereas the cost jumped from 0ms to 9ms.

This overhead at the 90th percentile and higher for circuits such as these has been deemed acceptable for most Netflix use cases for the benefits of resilience achieved.

For circuits that wrap very low-latency requests (such as those that primarily hit in-memory caches) the overhead can be too high and in those cases you can use another method such as tryable semaphores which, while they do not allow for timeouts, provide most of the resilience benefits without the overhead. The overhead in general, however, is small enough that Netflix in practice usually prefers the isolation benefits of a separate thread over such techniques.

Semaphores

You can use semaphores (or counters) to limit the number of concurrent calls to any given dependency, instead of using thread pool/queue sizes. This allows Hystrix to shed load without using thread pools but it does not allow for timing out and walking away. If you trust the client and you only want load shedding, you could use this approach.

HystrixCommand and HystrixObservableCommand support semaphores in 2 places:

- Fallback: When Hystrix retrieves fallbacks it always does so on the calling Tomcat thread.

- Execution: If you set the property execution.isolation.strategy to SEMAPHORE then Hystrix will use semaphores instead of threads to limit the number of concurrent parent threads that invoke the command.

You can configure both of these uses of semaphores by means of dynamic properties that define how many concurrent threads can execute. You should size them by using similar calculations as you use when sizing a thread pool (an in-memory call that returns in sub-millisecond times can perform well over 5000 rps with a semaphore of only 1 or 2, but the default is 10).

Note: if a dependency is isolated with a semaphore and then becomes latent, the parent threads will remain blocked until the underlying network calls time out.

Semaphore rejection will start once the limit is hit, but the threads filling the semaphore cannot walk away.
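Semaphore-based limiting can be sketched with `java.util.concurrent.Semaphore`: the caller tries to take a permit without waiting and is rejected if none is free. `SemaphoreIsolationSketch` is a hypothetical class, not Hystrix code.

```java
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

class SemaphoreIsolationSketch {
    private final Semaphore permits;

    SemaphoreIsolationSketch(int maxConcurrent) {
        this.permits = new Semaphore(maxConcurrent);
    }

    <T> T call(Supplier<T> work, T fallback) {
        if (!permits.tryAcquire()) {
            return fallback; // limit reached: reject immediately instead of queueing
        }
        try {
            // Runs on the caller's own thread, so a slow call holds both the
            // thread and the permit until it returns.
            return work.get();
        } finally {
            permits.release();
        }
    }
}
```

The sketch makes the trade-off visible: there is no extra thread and no handoff cost, but nothing here can interrupt `work.get()` if it hangs.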

Request Collapsing

You can front a HystrixCommand with a request collapser (HystrixCollapser is the abstract parent) with which you can collapse multiple requests into a single back-end dependency call.

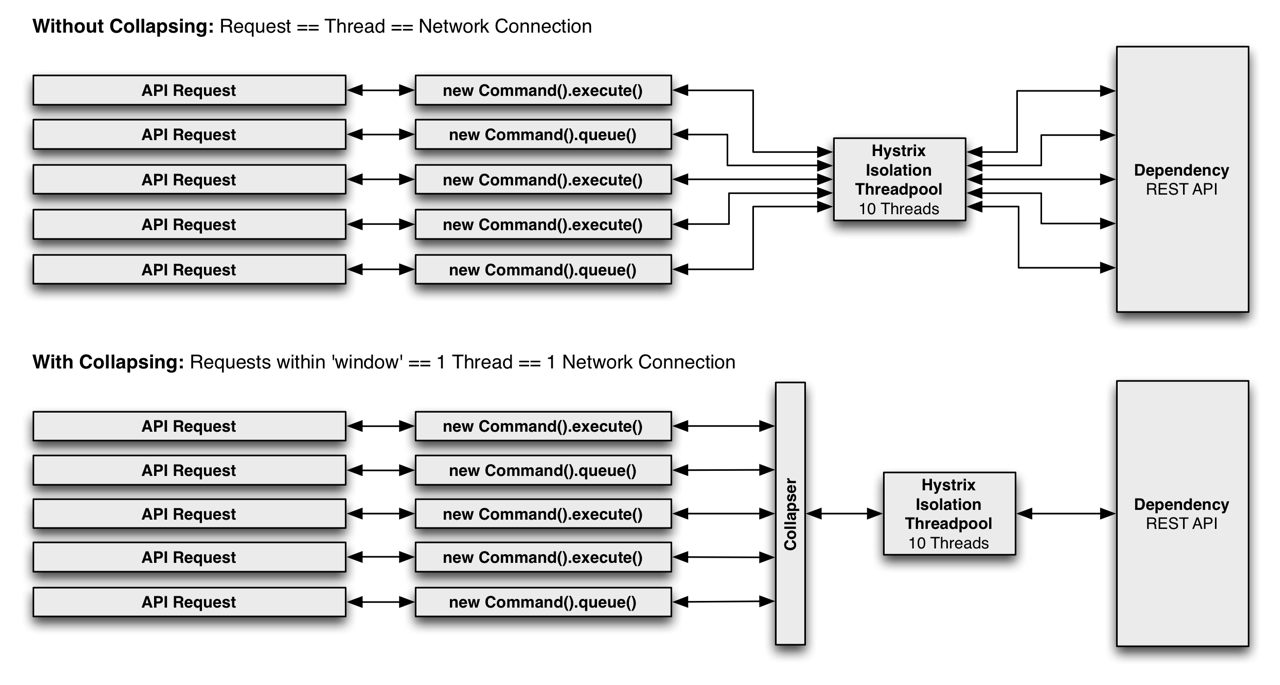

The following diagram shows the number of threads and network connections in two scenarios: first without and then with request collapsing (assuming all connections are “concurrent” within a short time window, in this case 10ms).

Why Use Request Collapsing?

Use request collapsing to reduce the number of threads and network connections needed to perform concurrent HystrixCommand executions. Request collapsing does this in an automated manner that does not force all developers of a codebase to coordinate the manual batching of requests.

Global Context (Across All Tomcat Threads)

The ideal type of collapsing is done at the global application level, so that requests from any user on any Tomcat thread can be collapsed together.

For example, if you configure a HystrixCommand to support batching for any user on requests to a dependency that retrieves movie ratings, then when any user thread in the same JVM makes such a request, Hystrix will add its request along with any others into the same collapsed network call.

Note that the collapser will pass a single HystrixRequestContext object to the collapsed network call, so downstream systems must handle this case for this to be an effective option.

User Request Context (Single Tomcat Thread)

If you configure a HystrixCommand to only handle batch requests for a single user, then Hystrix can collapse requests from within a single Tomcat thread (request).

For example, if a user wants to load bookmarks for 300 video objects, instead of executing 300 network calls, Hystrix can combine them all into one.

Object Modeling and Code Complexity

Sometimes when you create an object model that makes logical sense to the consumers of the object, this does not match up well with efficient resource utilization for the producers of the object.

For example, given a list of 300 video objects, iterating over them and calling getSomeAttribute() on each is an obvious object model, but if implemented naively can result in 300 network calls all being made within milliseconds of each other (and very likely saturating resources).

There are manual ways to handle this, such as requiring users to declare which video objects they want attributes for before they call getSomeAttribute(), so that the attributes can all be pre-fetched.

Or, you could divide the object model so a user has to get a list of videos from one place, then ask for the attributes for that list of videos from somewhere else.

These approaches can lead to awkward APIs and object models that don’t match mental models and usage patterns. They can also lead to simple mistakes and inefficiencies as multiple developers work on a codebase, since an optimization done for one use case can be broken by the implementation of another use case and a new path through the code.

By pushing the collapsing logic down to the Hystrix layer, it doesn’t matter how you create the object model, in what order the calls are made, or whether different developers know about optimizations being done or even needing to be done.

The getSomeAttribute() method can be put wherever it fits best and be called in whatever manner suits the usage pattern and the collapser will automatically batch calls into time windows.
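The batching idea can be sketched without Hystrix: each caller gets a future back immediately, and a single back-end call later resolves all of them. `CollapserSketch` is a hypothetical class, not `HystrixCollapser`, and `flush()` is invoked by hand here, whereas a real collapser would trigger it from a timer when the batch window closes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.function.Function;

class CollapserSketch<K, V> {
    private final Function<List<K>, Map<K, V>> batchCall;
    private final List<K> pendingKeys = new ArrayList<>();
    private final List<CompletableFuture<V>> pendingResults = new ArrayList<>();

    CollapserSketch(Function<List<K>, Map<K, V>> batchCall) {
        this.batchCall = batchCall;
    }

    // Called by each individual request; returns at once with a future.
    synchronized CompletableFuture<V> submit(K key) {
        CompletableFuture<V> result = new CompletableFuture<>();
        pendingKeys.add(key);
        pendingResults.add(result);
        return result;
    }

    // Called when the batch window closes.
    void flush() {
        List<K> keys;
        List<CompletableFuture<V>> results;
        synchronized (this) {
            keys = new ArrayList<>(pendingKeys);
            results = new ArrayList<>(pendingResults);
            pendingKeys.clear();
            pendingResults.clear();
        }
        if (keys.isEmpty()) {
            return;
        }
        try {
            Map<K, V> values = batchCall.apply(keys); // one back-end call for the whole batch
            for (int i = 0; i < keys.size(); i++) {
                results.get(i).complete(values.get(keys.get(i)));
            }
        } catch (RuntimeException e) {
            for (CompletableFuture<V> result : results) {
                result.completeExceptionally(e); // every waiting caller sees the batch failure
            }
        }
    }
}
```

Callers never see the batch: each one only holds its own future, so code that calls something like getSomeAttribute() in a loop can stay as it is while the back end receives one request per window.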

What Is the Cost of Request Collapsing?

The cost of enabling request collapsing is an increased latency before the actual command is executed. The maximum cost is the size of the batch window.

If you have a command that takes 5ms on median to execute, and a 10ms batch window, the execution time could become 15ms in a worst case. Typically a request will not happen to be submitted to the window just as it opens, and so the median penalty is half the window time, in this case 5ms.
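The arithmetic in this paragraph can be written out as follows. The symbols are introduced here for illustration: $W$ is the batch window and $t_{\text{cmd}}$ the command's own execution time. The second line assumes that a request is equally likely to arrive at any point in the window, which the text implies but does not state.

```latex
\begin{align*}
t_{\text{worst}}   &= t_{\text{cmd}} + W           = 5\,\text{ms} + 10\,\text{ms} = 15\,\text{ms} \\
t_{\text{typical}} &= t_{\text{cmd}} + \tfrac{W}{2} = 5\,\text{ms} + 5\,\text{ms}  = 10\,\text{ms}
\end{align*}
```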

The determination of whether this cost is worth it depends on the command being executed. A high-latency command won’t suffer as much from a small amount of additional average latency. Also, the amount of concurrency on a given command is key: There is no point in paying the penalty if there are rarely more than 1 or 2 requests to be batched together. In fact, in a single-threaded sequential iteration collapsing would be a major performance bottleneck as each iteration will wait the 10ms batch window time.

If, however, a particular command is heavily utilized concurrently and can batch dozens or even hundreds of calls together, then the cost is typically far outweighed by the increased throughput achieved as Hystrix reduces the number of threads it requires and the number of network connections to dependencies.

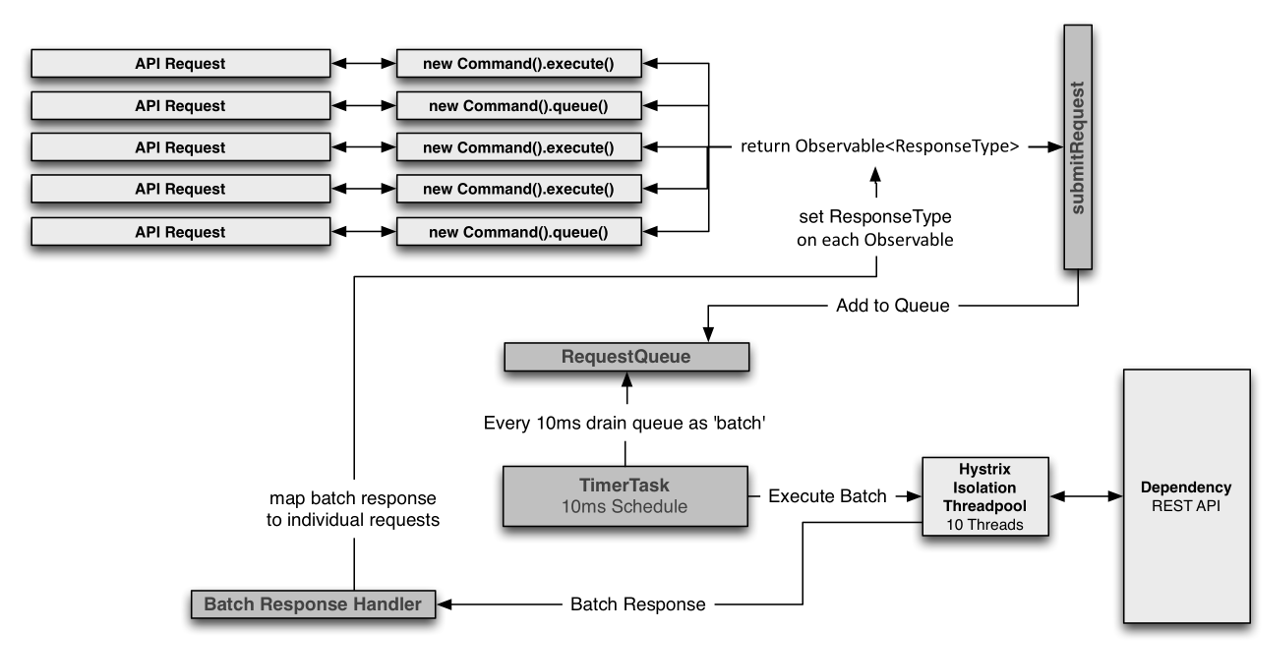

Collapser Flow

Request Caching

HystrixCommand and HystrixObservableCommand implementations can define a cache key which is then used to de-dupe calls within a request context in a concurrent-aware manner.

Here is an example flow involving an HTTP request lifecycle and two threads doing work within that request:

The benefits of request caching are:

- Different code paths can execute Hystrix Commands without concern of duplicate work.

This is particularly beneficial in large codebases where many developers are implementing different pieces of functionality.

For example, multiple paths through code that all need to get a user’s Account object can each request it like this:

Account account = new UserGetAccount(accountId).execute();

//or

Observable<Account> accountObservable = new UserGetAccount(accountId).observe();

The Hystrix RequestCache will execute the underlying run() method once and only once, and both threads executing the HystrixCommand will receive the same data despite having instantiated different instances.

- Data retrieval is consistent throughout a request.

Instead of potentially returning a different value (or fallback) each time the command is executed, the first response is cached and returned for all subsequent calls within the same request.

- Eliminates duplicate thread executions.

Since the request cache sits in front of the construct() or run() method invocation, Hystrix can de-dupe calls before they result in thread execution.

If Hystrix didn’t implement the request cache functionality then each command would need to implement it itself inside the construct() or run() method, which would put it after a thread is queued and executed.
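The de-duplication described in this section can be sketched with a map from cache key to a shared future. `RequestCacheSketch` is a hypothetical class: one instance is assumed to exist per request, which stands in for the request context that Hystrix uses to scope its cache.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.function.Supplier;

// One instance per request scope (an assumption of this sketch).
class RequestCacheSketch<K, V> {
    private final ConcurrentMap<K, CompletableFuture<V>> cache = new ConcurrentHashMap<>();

    V get(K key, Supplier<V> loader) {
        CompletableFuture<V> created = new CompletableFuture<>();
        CompletableFuture<V> existing = cache.putIfAbsent(key, created);
        if (existing != null) {
            return existing.join(); // another caller is loading or has loaded: share its result
        }
        try {
            created.complete(loader.get()); // only the first caller for a key runs the loader
        } catch (RuntimeException e) {
            created.completeExceptionally(e);
        }
        return created.join();
    }
}
```

Because the check happens before the loader runs, a second caller with the same key never starts any work of its own, which mirrors how the request cache sits in front of construct() or run().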
