Redis Service: Managing Cluster Nodes

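The previous post covered deploying and configuring Redis Cluster, setting up the Ruby environment that redis-trib.rb needs, and using redis-trib.rb to create a cluster and view its information (for a recap, see https://www.cnblogs.com/qiuhom-1874/p/13442458.html). In this post we continue with redis-trib.rb and use it to manage the nodes of a Redis 3/4 cluster.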

Adding nodes to an existing cluster

Environment

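A node added to an existing cluster must run the same Redis version and use the same authentication password as the existing nodes, and its hardware should ideally match theirs as well. Then start two redis-server instances. To save machines in this demo, I run two extra instances on node03 instead of using two separate servers. The environment is as follows: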

Directory layout

[root@node03 redis]# ll

total 12

drwxr-xr-x 5 root root 40 Aug 5 22:57 6379

drwxr-xr-x 5 root root 40 Aug 5 22:57 6380

drwxr-xr-x 2 root root 134 Aug 5 22:16 bin

-rw-r--r-- 1 root root 175 Aug 8 08:35 dump.rdb

-rw-r--r-- 1 root root 803 Aug 8 08:35 redis-cluster_6379.conf

-rw-r--r-- 1 root root 803 Aug 8 08:35 redis-cluster_6380.conf

[root@node03 redis]# mkdir {6381,6382}/{etc,logs,run} -p

[root@node03 redis]# tree

.

├── 6379

│ ├── etc

│ │ ├── redis.conf

│ │ └── sentinel.conf

│ ├── logs

│ │ └── redis_6379.log

│ └── run

├── 6380

│ ├── etc

│ │ ├── redis.conf

│ │ └── sentinel.conf

│ ├── logs

│ │ └── redis_6380.log

│ └── run

├── 6381

│ ├── etc

│ ├── logs

│ └── run

├── 6382

│ ├── etc

│ ├── logs

│ └── run

├── bin

│ ├── redis-benchmark

│ ├── redis-check-aof

│ ├── redis-check-rdb

│ ├── redis-cli

│ ├── redis-sentinel -> redis-server

│ └── redis-server

├── dump.rdb

├── redis-cluster_6379.conf

└── redis-cluster_6380.conf

17 directories, 15 files

[root@node03 redis]#

Copy the configuration file into each new instance's etc/ directory

[root@node03 redis]# cp 6379/etc/redis.conf 6381/etc/

[root@node03 redis]# cp 6379/etc/redis.conf 6382/etc/

Change the port number in each configuration file

[root@node03 redis]# sed -ri 's@6379@6381@g' 6381/etc/redis.conf

[root@node03 redis]# sed -ri 's@6379@6382@g' 6382/etc/redis.conf

Verify the configuration files

[root@node03 redis]# grep -E "^(port|cluster|logfile)" 6381/etc/redis.conf

port 6381

logfile "/usr/local/redis/6381/logs/redis_6381.log"

cluster-enabled yes

cluster-config-file redis-cluster_6381.conf

[root@node03 redis]# grep -E "^(port|cluster|logfile)" 6382/etc/redis.conf

port 6382

logfile "/usr/local/redis/6382/logs/redis_6382.log"

cluster-enabled yes

cluster-config-file redis-cluster_6382.conf

[root@node03 redis]#

Tip: if the configuration file in each instance directory looks correct, you can start the Redis instances.
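The mkdir, cp, and sed steps above can be collected into one function. This is a minimal sketch, assuming you run it from the Redis base directory (/usr/local/redis here), that 6379/etc/redis.conf is the template, and that GNU sed is available; the function name prepare_instance is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create <port>/{etc,logs,run} and derive <port>/etc/redis.conf
# from an existing instance's config, as done by hand above.
# Assumes it runs in the Redis base directory and that sed is GNU sed (-i).
prepare_instance() {
  local port=$1 template_port=${2:-6379}
  mkdir -p "$port"/{etc,logs,run} || return 1
  cp "$template_port/etc/redis.conf" "$port/etc/redis.conf" || return 1
  # Like the manual sed above, this replaces EVERY occurrence of the template
  # port (port, logfile, cluster-config-file, ...), so review the result.
  sed -i "s@${template_port}@${port}@g" "$port/etc/redis.conf" || return 1
  # Print the settings that matter for a cluster node.
  grep -E '^(port|cluster|logfile)' "$port/etc/redis.conf"
}

# Usage (from /usr/local/redis):
#   for p in 6381 6382; do prepare_instance "$p"; done
```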

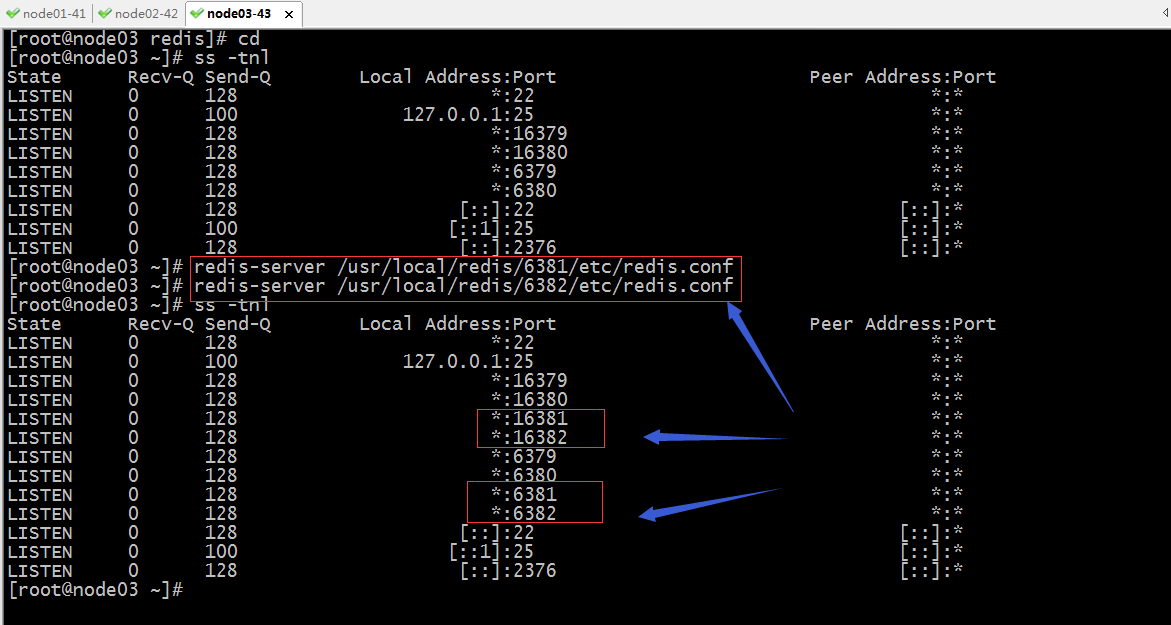

Start Redis

Tip: both ports are now listening. Next we use redis-trib.rb to add the two nodes to the cluster.

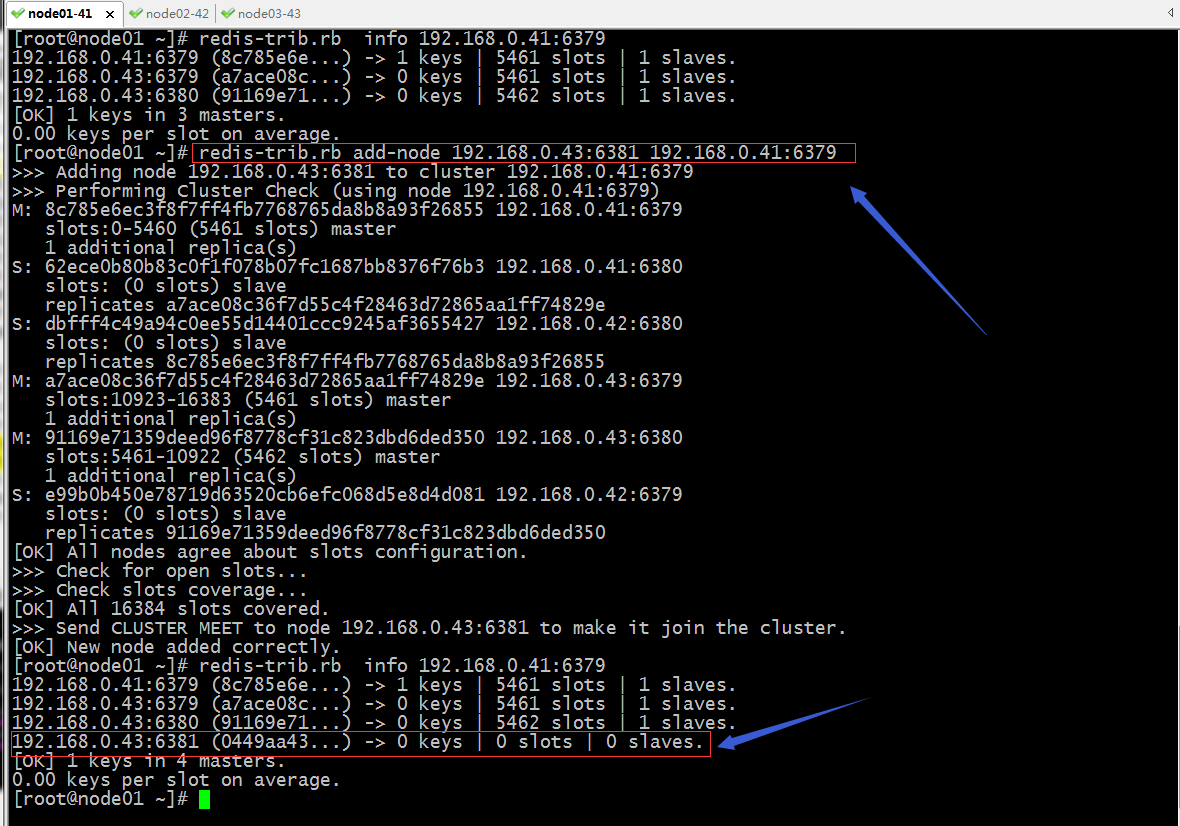

Add the new node to the cluster

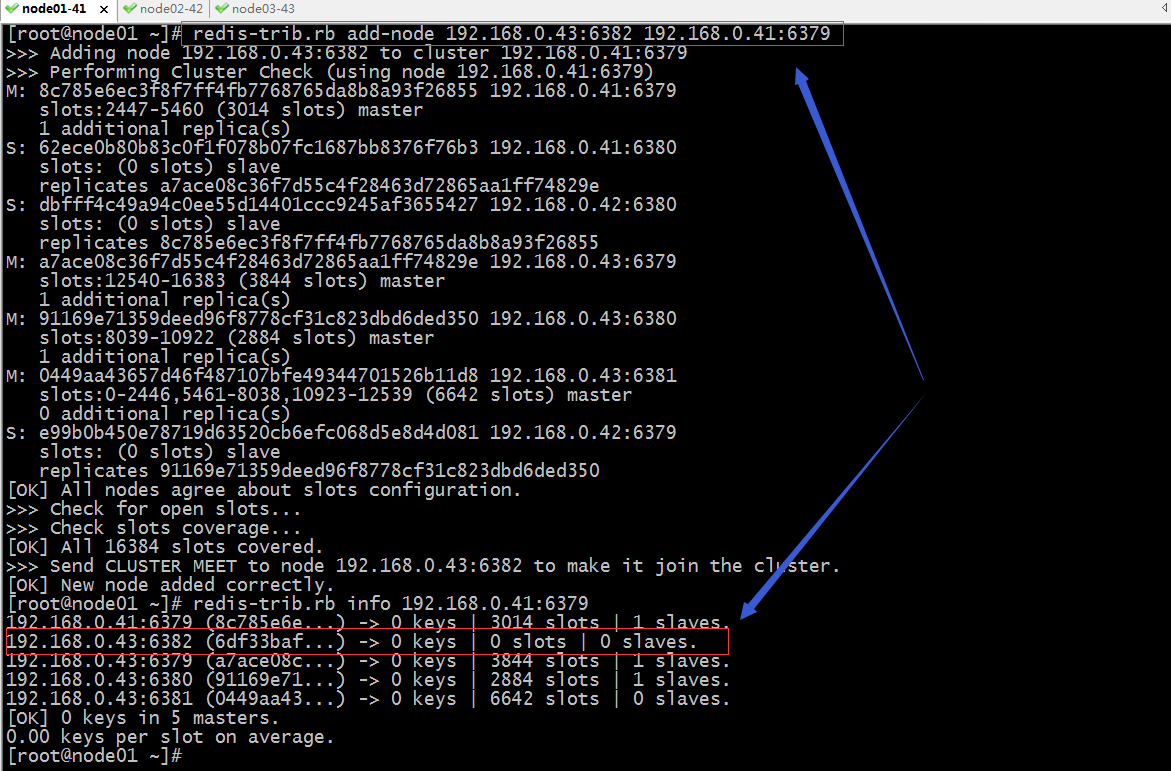

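Tip: add-node adds a node to the cluster. Give the IP address and port of the node to add first, followed by the IP address and port of any node already in the cluster. The output shows that 192.168.0.43:6381 joined the cluster successfully, but it holds no slots and has no slave yet.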

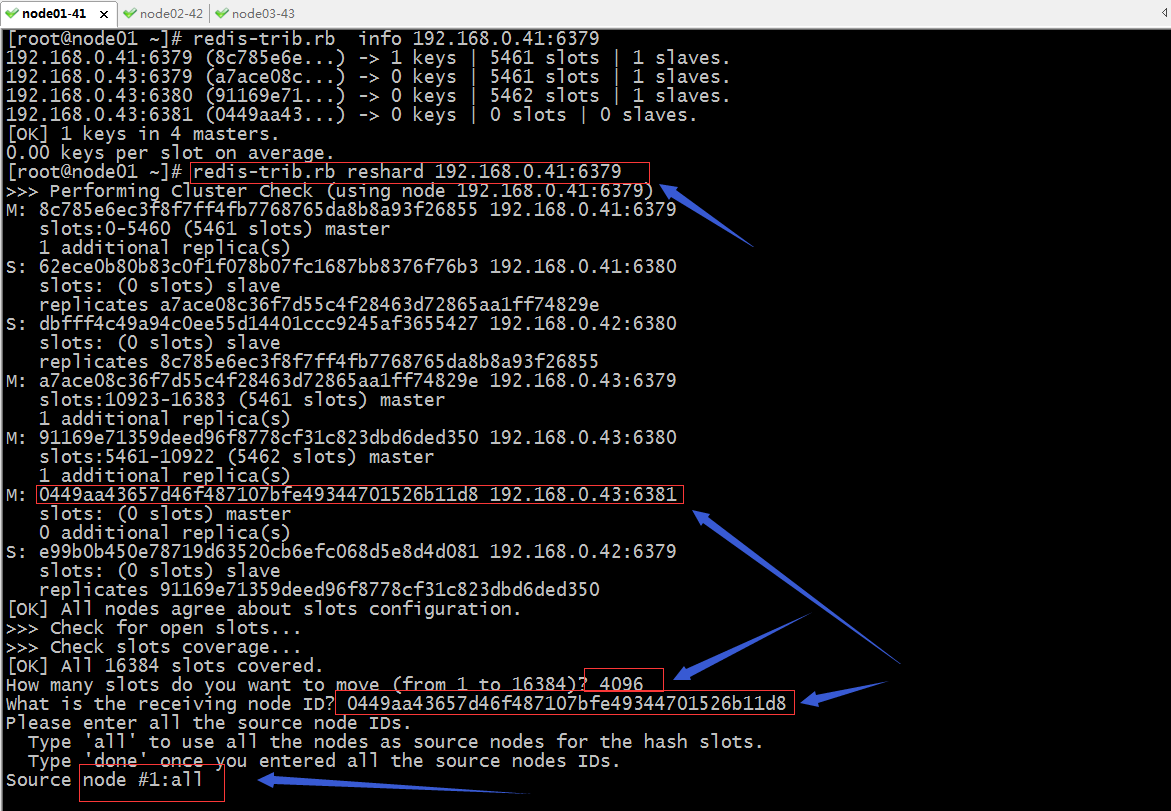
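For reference, the add-node call described above looks like the following. The sketch wraps it in a hypothetical add_master function; the run helper prints the command instead of executing it when DRY_RUN=1, which is only a convenience for reviewing commands and is not part of redis-trib.rb.

```shell
#!/usr/bin/env bash
# Print the command instead of running it when DRY_RUN=1.
run() { if [[ ${DRY_RUN:-0} == 1 ]]; then echo "$*"; else "$@"; fi; }

# Hypothetical helper: add an empty master to an existing cluster.
# $1 = new node (host:port), $2 = any node already in the cluster (host:port)
add_master() {
  run redis-trib.rb add-node "$1" "$2"
}

# Usage, matching this walkthrough:
#   add_master 192.168.0.43:6381 192.168.0.41:6379
#   redis-trib.rb check 192.168.0.41:6379   # the new master shows 0 slots
```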

Assign slots to the new node

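Tip: run reshard with the address and port of any node in the cluster to start resharding. You are asked how many slots to move, the ID of the node that receives them, and which nodes to take them from: all means every node that currently holds slots; otherwise enter the ID of each source node, one per prompt, and type done when you have listed them all. redis-trib.rb then prints the proposed slot movement plan and asks you to confirm it.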

Ready to move 4096 slots.

Source nodes:

M: 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 192.168.0.41:6379

slots:0-5460 (5461 slots) master

1 additional replica(s)

M: a7ace08c36f7d55c4f28463d72865aa1ff74829e 192.168.0.43:6379

slots:10923-16383 (5461 slots) master

1 additional replica(s)

M: 91169e71359deed96f8778cf31c823dbd6ded350 192.168.0.43:6380

slots:5461-10922 (5462 slots) master

1 additional replica(s)

Destination node:

M: 0449aa43657d46f487107bfe49344701526b11d8 192.168.0.43:6381

slots: (0 slots) master

0 additional replica(s)

Resharding plan:

Moving slot 5461 from 91169e71359deed96f8778cf31c823dbd6ded350

Moving slot 5462 from 91169e71359deed96f8778cf31c823dbd6ded350

Moving slot 5463 from 91169e71359deed96f8778cf31c823dbd6ded350

Moving slot 5464 from 91169e71359deed96f8778cf31c823dbd6ded350

Moving slot 5465 from 91169e71359deed96f8778cf31c823dbd6ded350

Moving slot 5466 from 91169e71359deed96f8778cf31c823dbd6ded350

Moving slot 5467 from 91169e71359deed96f8778cf31c823dbd6ded350

Moving slot 5468 from 91169e71359deed96f8778cf31c823dbd6ded350

... (output omitted) ...

Moving slot 12281 from a7ace08c36f7d55c4f28463d72865aa1ff74829e

Moving slot 12282 from a7ace08c36f7d55c4f28463d72865aa1ff74829e

Moving slot 12283 from a7ace08c36f7d55c4f28463d72865aa1ff74829e

Moving slot 12284 from a7ace08c36f7d55c4f28463d72865aa1ff74829e

Moving slot 12285 from a7ace08c36f7d55c4f28463d72865aa1ff74829e

Moving slot 12286 from a7ace08c36f7d55c4f28463d72865aa1ff74829e

Moving slot 12287 from a7ace08c36f7d55c4f28463d72865aa1ff74829e

Do you want to proceed with the proposed reshard plan (yes/no)? yes

Tip: entering yes accepts the plan above.

Moving slot 1177 from 192.168.0.41:6379 to 192.168.0.43:6381:

Moving slot 1178 from 192.168.0.41:6379 to 192.168.0.43:6381:

Moving slot 1179 from 192.168.0.41:6379 to 192.168.0.43:6381:

Moving slot 1180 from 192.168.0.41:6379 to 192.168.0.43:6381:

[ERR] Calling MIGRATE: ERR Syntax error, try CLIENT (LIST | KILL | GETNAME | SETNAME | PAUSE | REPLY)

[root@node01 ~]#

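Tip: the migration failed on slot 1180 of 192.168.0.41:6379 because that slot held data. redis-trib.rb only calls MIGRATE for slots that contain keys, so the empty slots before it moved without problems. Moving slots that hold data is a normal part of resharding; the CLIENT syntax error most likely means redis-trib.rb is running against version 4.x of the redis Ruby gem, in which `Redis#client` sends a CLIENT command instead of returning the connection object redis-trib.rb expects (pinning the gem to a 3.x release is the commonly reported fix). redis-trib.rb also does not pass the cluster password to MIGRATE. In this environment I worked around the error by copying the data to another server and clearing it, assigning the slots, and then adding the data back, which means downtime.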
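Before moving a slot you can check whether it still holds keys, and which ones, with CLUSTER COUNTKEYSINSLOT and CLUSTER GETKEYSINSLOT. Both only report keys stored on the node you ask, so query the node that currently owns the slot. A sketch with a hypothetical slot_keys helper, assuming the password admin used in this cluster and the same DRY_RUN convention as above:

```shell
#!/usr/bin/env bash
# Print the command instead of running it when DRY_RUN=1.
run() { if [[ ${DRY_RUN:-0} == 1 ]]; then echo "$*"; else "$@"; fi; }

# Hypothetical helper: show how many keys a slot holds on a node, and up to 10 of them.
# $1 = host, $2 = port, $3 = slot number, $4 = password
slot_keys() {
  local host=$1 port=$2 slot=$3 pass=$4
  run redis-cli -h "$host" -p "$port" -a "$pass" CLUSTER COUNTKEYSINSLOT "$slot"
  run redis-cli -h "$host" -p "$port" -a "$pass" CLUSTER GETKEYSINSLOT "$slot" 10
}

# Usage, for the slot that failed above:
#   slot_keys 192.168.0.41 6379 1180 admin
```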

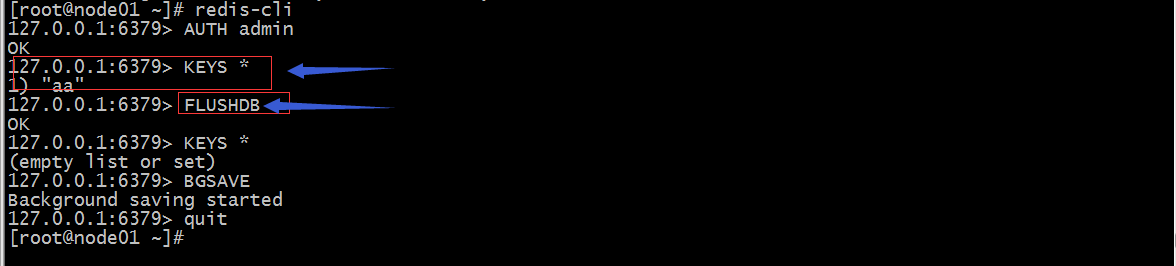

Clear the data

Repair the cluster

Reassign the slots

[root@node01 ~]# redis-trib.rb reshard 192.168.0.41:6379

>>> Performing Cluster Check (using node 192.168.0.41:6379)

M: 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 192.168.0.41:6379

slots:1181-5460 (4280 slots) master

1 additional replica(s)

S: 62ece0b80b83c0f1f078b07fc1687bb8376f76b3 192.168.0.41:6380

slots: (0 slots) slave

replicates a7ace08c36f7d55c4f28463d72865aa1ff74829e

S: dbfff4c49a94c0ee55d14401ccc9245af3655427 192.168.0.42:6380

slots: (0 slots) slave

replicates 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855

M: a7ace08c36f7d55c4f28463d72865aa1ff74829e 192.168.0.43:6379

slots:10923-16383 (5461 slots) master

1 additional replica(s)

M: 91169e71359deed96f8778cf31c823dbd6ded350 192.168.0.43:6380

slots:6827-10922 (4096 slots) master

1 additional replica(s)

M: 0449aa43657d46f487107bfe49344701526b11d8 192.168.0.43:6381

slots:0-1180,5461-6826 (2547 slots) master

0 additional replica(s)

S: e99b0b450e78719d63520cb6efc068d5e8d4d081 192.168.0.42:6379

slots: (0 slots) slave

replicates 91169e71359deed96f8778cf31c823dbd6ded350

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 4096

What is the receiving node ID? 0449aa43657d46f487107bfe49344701526b11d8

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1:all

Ready to move 4096 slots.

Source nodes:

M: 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 192.168.0.41:6379

slots:1181-5460 (4280 slots) master

1 additional replica(s)

M: a7ace08c36f7d55c4f28463d72865aa1ff74829e 192.168.0.43:6379

slots:10923-16383 (5461 slots) master

1 additional replica(s)

M: 91169e71359deed96f8778cf31c823dbd6ded350 192.168.0.43:6380

slots:6827-10922 (4096 slots) master

1 additional replica(s)

Destination node:

M: 0449aa43657d46f487107bfe49344701526b11d8 192.168.0.43:6381

slots:0-1180,5461-6826 (2547 slots) master

0 additional replica(s)

Resharding plan:

Moving slot 10923 from a7ace08c36f7d55c4f28463d72865aa1ff74829e

Moving slot 10924 from a7ace08c36f7d55c4f28463d72865aa1ff74829e

Moving slot 10925 from a7ace08c36f7d55c4f28463d72865aa1ff74829e

Moving slot 10926 from a7ace08c36f7d55c4f28463d72865aa1ff74829e

Moving slot 10927 from a7ace08c36f7d55c4f28463d72865aa1ff74829e

Moving slot 10928 from a7ace08c36f7d55c4f28463d72865aa1ff74829e

Moving slot 10929 from a7ace08c36f7d55c4f28463d72865aa1ff74829e

... (output omitted) ...

Moving slot 8033 from 91169e71359deed96f8778cf31c823dbd6ded350

Moving slot 8034 from 91169e71359deed96f8778cf31c823dbd6ded350

Moving slot 8035 from 91169e71359deed96f8778cf31c823dbd6ded350

Moving slot 8036 from 91169e71359deed96f8778cf31c823dbd6ded350

Moving slot 8037 from 91169e71359deed96f8778cf31c823dbd6ded350

Moving slot 8038 from 91169e71359deed96f8778cf31c823dbd6ded350

Do you want to proceed with the proposed reshard plan (yes/no)? yes

Moving slot 10923 from 192.168.0.43:6379 to 192.168.0.43:6381:

Moving slot 10924 from 192.168.0.43:6379 to 192.168.0.43:6381:

Moving slot 10925 from 192.168.0.43:6379 to 192.168.0.43:6381:

Moving slot 10926 from 192.168.0.43:6379 to 192.168.0.43:6381:

Moving slot 10927 from 192.168.0.43:6379 to 192.168.0.43:6381:

... (output omitted) ...

Moving slot 8035 from 192.168.0.43:6380 to 192.168.0.43:6381:

Moving slot 8036 from 192.168.0.43:6380 to 192.168.0.43:6381:

Moving slot 8037 from 192.168.0.43:6380 to 192.168.0.43:6381:

Moving slot 8038 from 192.168.0.43:6380 to 192.168.0.43:6381:

[root@node01 ~]#

Tip: if the second reshard completes without errors, the slots have been reassigned.

Check the current slot distribution in the cluster
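The interactive reshard session above can also be run non-interactively: redis-trib.rb reshard accepts --from (comma-separated source node IDs, or all), --to (receiving node ID), --slots and --yes. A sketch with a hypothetical reshard_to helper; check `redis-trib.rb help` on your version to confirm these options before relying on them.

```shell
#!/usr/bin/env bash
# Print the command instead of running it when DRY_RUN=1.
run() { if [[ ${DRY_RUN:-0} == 1 ]]; then echo "$*"; else "$@"; fi; }

# Hypothetical helper: move <slots> slots to <to_id> without prompts.
# $1 = any cluster node (host:port), $2 = receiving node ID,
# $3 = number of slots, $4 = source node IDs (defaults to "all")
reshard_to() {
  local entry=$1 to_id=$2 slots=$3 from=${4:-all}
  run redis-trib.rb reshard --from "$from" --to "$to_id" --slots "$slots" --yes "$entry"
}

# Usage, equivalent to the session above:
#   reshard_to 192.168.0.41:6379 0449aa43657d46f487107bfe49344701526b11d8 4096
```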

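Tip: as shown above, the new node now holds 6642 slots, which is not an even share. The first reshard had already moved 2547 slots to it before it failed, and those moves are not rolled back; the second run was then asked to move another 4096 slots, leaving the new node with 6642. The slots are assigned, but this master still has no slave.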
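To double-check slot counts like the 6642 above, a small helper can add up a range list as printed by redis-trib.rb (comma-separated) or CLUSTER NODES (space-separated). A sketch; count_slots is hypothetical, and it skips the bracketed migrating/importing markers that CLUSTER NODES can show.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count the slots in "0-2446,5461-8038" or "0-2446 5461-8038".
count_slots() {
  local total=0 r
  local -a parts
  IFS=', ' read -ra parts <<< "$1"       # split on commas and/or spaces
  for r in "${parts[@]}"; do
    if [[ $r == \[* ]]; then continue; fi  # skip [slot->-id] / [slot-<-id] markers
    if [[ $r == *-* ]]; then
      total=$(( total + ${r#*-} - ${r%-*} + 1 ))   # inclusive range a-b
    else
      total=$(( total + 1 ))             # a single slot
    fi
  done
  echo "$total"
}

# Usage:
#   count_slots "0-2446,5461-8038,10923-12539"   # 6642
```

As a sanity check at the end of this post, the three remaining masters hold 2447-5460,5656-7655 (5014), 1181-2446,5461-5655,12540-16383 (5305) and 0-1180,7656-12539 (6065) slots, which add up to 16384.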

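Configure a slave for the new node

Tip: to give the new master a slave, first add the slave node to the cluster, then configure it to replicate that master.

Make the newly added node 192.168.0.43:6382 a slave of 192.168.0.43:6381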

[root@node01 ~]# redis-trib.rb info 192.168.0.41:6379

192.168.0.41:6379 (8c785e6e...) -> 0 keys | 3014 slots | 1 slaves.

192.168.0.43:6382 (6df33baf...) -> 0 keys | 0 slots | 0 slaves.

192.168.0.43:6379 (a7ace08c...) -> 0 keys | 3844 slots | 1 slaves.

192.168.0.43:6380 (91169e71...) -> 0 keys | 2884 slots | 1 slaves.

192.168.0.43:6381 (0449aa43...) -> 0 keys | 6642 slots | 0 slaves.

[OK] 0 keys in 5 masters.

0.00 keys per slot on average.

[root@node01 ~]#

[root@node01 ~]# redis-cli -h 192.168.0.43 -p 6382

192.168.0.43:6382> AUTH admin

OK

192.168.0.43:6382> info replication

# Replication

role:master

connected_slaves:0

master_replid:69716e1d83cd44fba96d10e282a6534983b3ab8c

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:0

second_repl_offset:-1

repl_backlog_active:0

repl_backlog_size:1048576

repl_backlog_first_byte_offset:0

repl_backlog_histlen:0

192.168.0.43:6382> CLUSTER NODES

0449aa43657d46f487107bfe49344701526b11d8 192.168.0.43:6381@16381 master - 0 1596851725000 12 connected 0-2446 5461-8038 10923-12539

91169e71359deed96f8778cf31c823dbd6ded350 192.168.0.43:6380@16380 master - 0 1596851725354 8 connected 8039-10922

8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 192.168.0.41:6379@16379 master - 0 1596851726377 11 connected 2447-5460

62ece0b80b83c0f1f078b07fc1687bb8376f76b3 192.168.0.41:6380@16380 slave a7ace08c36f7d55c4f28463d72865aa1ff74829e 0 1596851725762 3 connected

e99b0b450e78719d63520cb6efc068d5e8d4d081 192.168.0.42:6379@16379 slave 91169e71359deed96f8778cf31c823dbd6ded350 0 1596851724334 8 connected

6df33baf68995c61494a06c06af18045ca5a04f6 192.168.0.43:6382@16382 myself,master - 0 1596851723000 0 connected

dbfff4c49a94c0ee55d14401ccc9245af3655427 192.168.0.42:6380@16380 slave 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 0 1596851723000 11 connected

a7ace08c36f7d55c4f28463d72865aa1ff74829e 192.168.0.43:6379@16379 master - 0 1596851723311 3 connected 12540-16383

192.168.0.43:6382> CLUSTER REPLICATE 0449aa43657d46f487107bfe49344701526b11d8

OK

192.168.0.43:6382> CLUSTER NODES

0449aa43657d46f487107bfe49344701526b11d8 192.168.0.43:6381@16381 master - 0 1596851781000 12 connected 0-2446 5461-8038 10923-12539

91169e71359deed96f8778cf31c823dbd6ded350 192.168.0.43:6380@16380 master - 0 1596851784708 8 connected 8039-10922

8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 192.168.0.41:6379@16379 master - 0 1596851784000 11 connected 2447-5460

62ece0b80b83c0f1f078b07fc1687bb8376f76b3 192.168.0.41:6380@16380 slave a7ace08c36f7d55c4f28463d72865aa1ff74829e 0 1596851782000 3 connected

e99b0b450e78719d63520cb6efc068d5e8d4d081 192.168.0.42:6379@16379 slave 91169e71359deed96f8778cf31c823dbd6ded350 0 1596851781000 8 connected

6df33baf68995c61494a06c06af18045ca5a04f6 192.168.0.43:6382@16382 myself,slave 0449aa43657d46f487107bfe49344701526b11d8 0 1596851783000 0 connected

dbfff4c49a94c0ee55d14401ccc9245af3655427 192.168.0.42:6380@16380 slave 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 0 1596851783688 11 connected

a7ace08c36f7d55c4f28463d72865aa1ff74829e 192.168.0.43:6379@16379 master - 0 1596851785730 3 connected 12540-16383

192.168.0.43:6382> quit

[root@node01 ~]# redis-trib.rb info 192.168.0.41:6379

192.168.0.41:6379 (8c785e6e...) -> 0 keys | 3014 slots | 1 slaves.

192.168.0.43:6379 (a7ace08c...) -> 0 keys | 3844 slots | 1 slaves.

192.168.0.43:6380 (91169e71...) -> 0 keys | 2884 slots | 1 slaves.

192.168.0.43:6381 (0449aa43...) -> 0 keys | 6642 slots | 1 slaves.

[OK] 0 keys in 4 masters.

0.00 keys per slot on average.

[root@node01 ~]#

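Tip: to make a cluster node the slave of a particular master, connect to the node that should become the slave and run CLUSTER REPLICATE followed by the master's node ID. This completes adding new nodes to the cluster.

Verify: can data be written on the newly added node?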
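Adding the slave and running CLUSTER REPLICATE can also be done in one step: redis-trib.rb add-node accepts --slave and --master-id <id>. A sketch with a hypothetical add_slave helper and the same DRY_RUN convention:

```shell
#!/usr/bin/env bash
# Print the command instead of running it when DRY_RUN=1.
run() { if [[ ${DRY_RUN:-0} == 1 ]]; then echo "$*"; else "$@"; fi; }

# Hypothetical helper: add a node directly as a slave of a given master.
# $1 = new node (host:port), $2 = any cluster node (host:port), $3 = master's node ID
add_slave() {
  local new=$1 existing=$2 master_id=$3
  run redis-trib.rb add-node --slave --master-id "$master_id" "$new" "$existing"
}

# Usage, equivalent to add-node + CLUSTER REPLICATE above:
#   add_slave 192.168.0.43:6382 192.168.0.41:6379 0449aa43657d46f487107bfe49344701526b11d8
```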

[root@node01 ~]# redis-cli -h 192.168.0.43 -p 6381

192.168.0.43:6381> AUTH admin

OK

192.168.0.43:6381> get aa

(nil)

192.168.0.43:6381> set aa a1

OK

192.168.0.43:6381> get aa

"a1"

192.168.0.43:6381> set bb b1

(error) MOVED 8620 192.168.0.43:6380

192.168.0.43:6381>

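Tip: reads and writes on the new master work. The MOVED error for bb is expected: key bb hashes to slot 8620, which is served by 192.168.0.43:6380, and redis-cli does not follow redirects unless it is started with -c.

Verify: if the new master goes down, is its slave promoted to master?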
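The MOVED 8620 reply can be reproduced by hand. Redis Cluster maps a key to slot CRC16(key) mod 16384, using the CRC16-XMODEM variant and hashing only the text inside {...} when the key contains a non-empty hash tag. A pure-bash sketch for ASCII keys; crc16_xmodem and key_slot are hypothetical names, and on a live node CLUSTER KEYSLOT gives the authoritative answer.

```shell
#!/usr/bin/env bash
# Sketch: compute the Redis Cluster hash slot of a key in pure bash.
# Assumes ASCII keys (multi-byte characters are not handled byte-wise here).

# CRC16-XMODEM: polynomial 0x1021, initial value 0, no reflection.
crc16_xmodem() {
  local s=$1 crc=0 i j c
  for (( i = 0; i < ${#s}; i++ )); do
    printf -v c '%d' "'${s:i:1}"        # character code of s[i]
    crc=$(( crc ^ (c << 8) ))
    for (( j = 0; j < 8; j++ )); do
      if (( crc & 0x8000 )); then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
    done
  done
  echo "$crc"
}

# slot = CRC16(key) mod 16384, hashing only the hash tag if present:
# the text between the first '{' and the first '}' after it, if non-empty.
key_slot() {
  local key=$1 rest tag
  if [[ $key == *\{* ]]; then
    rest=${key#*\{}                     # text after the first '{'
    if [[ $rest == *\}* ]]; then
      tag=${rest%%\}*}                  # text before the next '}'
      if [[ -n $tag ]]; then key=$tag; fi
    fi
  fi
  echo $(( $(crc16_xmodem "$key") % 16384 ))
}

# Usage:
#   key_slot bb    # 8620, the slot in the MOVED reply above
#   key_slot aa    # 1180
```

Incidentally, key_slot aa gives 1180, the slot on which both MIGRATE errors in this post occurred.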

[root@node01 ~]# redis-cli -h 192.168.0.43 -p 6381

192.168.0.43:6381> AUTH admin

OK

192.168.0.43:6381> info replication

# Replication

role:master

connected_slaves:1

slave0:ip=192.168.0.43,port=6382,state=online,offset=1032,lag=1

master_replid:d65b59178dd70a13e75c866d4de738c4f248c84c

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:1032

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:1032

192.168.0.43:6381> quit

[root@node01 ~]# redis-cli -h 192.168.0.43 -p 6382

192.168.0.43:6382> AUTH admin

OK

192.168.0.43:6382> info replication

# Replication

role:slave

master_host:192.168.0.43

master_port:6381

master_link_status:up

master_last_io_seconds_ago:8

master_sync_in_progress:0

slave_repl_offset:1046

slave_priority:100

slave_read_only:1

connected_slaves:0

master_replid:d65b59178dd70a13e75c866d4de738c4f248c84c

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:1046

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:1046

192.168.0.43:6382> quit

[root@node01 ~]# ssh node03

Last login: Sat Aug 8 10:07:15 2020 from node01

[root@node03 ~]# ps -ef |grep redis

root 1425 1 0 08:34 ? 00:00:18 redis-server 0.0.0.0:6379 [cluster]

root 1431 1 0 08:35 ? 00:00:18 redis-server 0.0.0.0:6380 [cluster]

root 1646 1 0 09:04 ? 00:00:14 redis-server 0.0.0.0:6381 [cluster]

root 1651 1 0 09:04 ? 00:00:07 redis-server 0.0.0.0:6382 [cluster]

root 5888 5868 0 10:08 pts/1 00:00:00 grep --color=auto redis

[root@node03 ~]# kill -9 1646

[root@node03 ~]# redis-cli -p 6382

127.0.0.1:6382> AUTH admin

OK

127.0.0.1:6382> info replication

# Replication

role:master

connected_slaves:0

master_replid:34d6ec0e58f12ffe9bc5fbcb0c16008b5054594f

master_replid2:d65b59178dd70a13e75c866d4de738c4f248c84c

master_repl_offset:1102

second_repl_offset:1103

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:1102

127.0.0.1:6382>

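Tip: after the master goes down, its slave is promoted to master.

Removing nodes

To remove a node from the cluster, make sure it no longer owns any slots (and therefore holds no data).

If the node is not empty, move its slots to other masters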
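The failover check above reads role from INFO replication by eye. When scripting it, a small parser and a polling loop help. Failover takes roughly cluster-node-timeout (15 seconds by default) plus the election, so this sketch polls for up to 30 seconds; info_field and wait_for_role are hypothetical helpers, and the tr is there because INFO replies use CRLF line endings.

```shell
#!/usr/bin/env bash
# Print the value of "field:" from INFO text on stdin (CRLF line endings stripped).
info_field() {
  local field=$1
  tr -d '\r' | awk -F: -v k="$field" '$1 == k { print substr($0, length(k) + 2) }'
}

# Run the given command (which must print INFO text) once per second until
# its "role" equals $1; give up after 30 attempts.
wait_for_role() {
  local want=$1 i
  shift
  for (( i = 0; i < 30; i++ )); do
    if [[ $("$@" | info_field role) == "$want" ]]; then
      return 0
    fi
    sleep 1
  done
  return 1
}

# Usage, after killing 192.168.0.43:6381:
#   wait_for_role master redis-cli -h 192.168.0.43 -p 6382 -a admin info replication
```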

[root@node01 ~]# redis-trib.rb reshard 192.168.0.41:6379

>>> Performing Cluster Check (using node 192.168.0.41:6379)

M: 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 192.168.0.41:6379

slots:2447-5460 (3014 slots) master

1 additional replica(s)

M: 6df33baf68995c61494a06c06af18045ca5a04f6 192.168.0.43:6382

slots:0-2446,5461-8038,10923-12539 (6642 slots) master

0 additional replica(s)

S: 62ece0b80b83c0f1f078b07fc1687bb8376f76b3 192.168.0.41:6380

slots: (0 slots) slave

replicates a7ace08c36f7d55c4f28463d72865aa1ff74829e

S: dbfff4c49a94c0ee55d14401ccc9245af3655427 192.168.0.42:6380

slots: (0 slots) slave

replicates 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855

M: a7ace08c36f7d55c4f28463d72865aa1ff74829e 192.168.0.43:6379

slots:12540-16383 (3844 slots) master

1 additional replica(s)

M: 91169e71359deed96f8778cf31c823dbd6ded350 192.168.0.43:6380

slots:8039-10922 (2884 slots) master

1 additional replica(s)

S: e99b0b450e78719d63520cb6efc068d5e8d4d081 192.168.0.42:6379

slots: (0 slots) slave

replicates 91169e71359deed96f8778cf31c823dbd6ded350

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 6642

What is the receiving node ID? 91169e71359deed96f8778cf31c823dbd6ded350

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1:6df33baf68995c61494a06c06af18045ca5a04f6

Source node #2:done

Ready to move 6642 slots.

Source nodes:

M: 6df33baf68995c61494a06c06af18045ca5a04f6 192.168.0.43:6382

slots:0-2446,5461-8038,10923-12539 (6642 slots) master

0 additional replica(s)

Destination node:

M: 91169e71359deed96f8778cf31c823dbd6ded350 192.168.0.43:6380

slots:8039-10922 (2884 slots) master

1 additional replica(s)

Resharding plan:

Moving slot 0 from 6df33baf68995c61494a06c06af18045ca5a04f6

Moving slot 1 from 6df33baf68995c61494a06c06af18045ca5a04f6

Moving slot 2 from 6df33baf68995c61494a06c06af18045ca5a04f6

Moving slot 3 from 6df33baf68995c61494a06c06af18045ca5a04f6

Moving slot 4 from 6df33baf68995c61494a06c06af18045ca5a04f6

Moving slot 5 from 6df33baf68995c61494a06c06af18045ca5a04f6

Moving slot 6 from 6df33baf68995c61494a06c06af18045ca5a04f6

... (output omitted) ...

Moving slot 12536 from 6df33baf68995c61494a06c06af18045ca5a04f6

Moving slot 12537 from 6df33baf68995c61494a06c06af18045ca5a04f6

Moving slot 12538 from 6df33baf68995c61494a06c06af18045ca5a04f6

Moving slot 12539 from 6df33baf68995c61494a06c06af18045ca5a04f6

Do you want to proceed with the proposed reshard plan (yes/no)? yes

Moving slot 0 from 192.168.0.43:6382 to 192.168.0.43:6380:

Moving slot 1 from 192.168.0.43:6382 to 192.168.0.43:6380:

Moving slot 2 from 192.168.0.43:6382 to 192.168.0.43:6380:

Moving slot 3 from 192.168.0.43:6382 to 192.168.0.43:6380:

... (output omitted) ...

Moving slot 1178 from 192.168.0.43:6382 to 192.168.0.43:6380:

Moving slot 1179 from 192.168.0.43:6382 to 192.168.0.43:6380:

Moving slot 1180 from 192.168.0.43:6382 to 192.168.0.43:6380:

[ERR] Calling MIGRATE: ERR Syntax error, try CLIENT (LIST | KILL | GETNAME | SETNAME | PAUSE | REPLY)

[root@node01 ~]#

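Tip: this is the same error we hit when adding the node, and again it is triggered by a slot that still holds data (slot 1180). The workaround is also the same: copy the data off the node, clear it, and then move the slots. Moving a node's slots to other masters works just like resharding: specify how many slots to move and the ID of the receiving node, enter the ID of each source node, one per prompt, and finish with done.

Clear the data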

[root@node01 ~]# redis-cli -h 192.168.0.43 -p 6382

192.168.0.43:6382> AUTH admin

OK

192.168.0.43:6382> KEYS *

1) "aa"

192.168.0.43:6382> FLUSHDB

OK

192.168.0.43:6382> KEYS *

(empty list or set)

192.168.0.43:6382> BGSAVE

Background saving started

192.168.0.43:6382> quit

[root@node01 ~]#

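Move the slots to other nodes again

Tip: the interrupted reshard left a slot open, so the cluster must be repaired before slots can be moved again.

Repair the cluster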
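The [WARNING] lines in the fix output that follows show which slot was left open. A sketch that extracts the open slots from redis-trib.rb check output, so a script can decide whether to run fix. open_slots is hypothetical, relies on the exact warning text shown in this post, and needs GNU sed for the \x1b escape (redis-trib.rb may wrap lines in ANSI colour codes).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the comma-separated list of open slots, if any.
open_slots() {
  sed -n -e 's/\x1b\[[0-9;]*m//g' \
         -e 's/^\[WARNING\] The following slots are open: //p'
}

# Usage:
#   slots=$(redis-trib.rb check 192.168.0.41:6379 | open_slots)
#   if [[ -n $slots ]]; then redis-trib.rb fix 192.168.0.41:6379; fi
```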

[root@node01 ~]# redis-trib.rb fix 192.168.0.41:6379

>>> Performing Cluster Check (using node 192.168.0.41:6379)

M: 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 192.168.0.41:6379

slots:2447-5460 (3014 slots) master

1 additional replica(s)

M: 6df33baf68995c61494a06c06af18045ca5a04f6 192.168.0.43:6382

slots:1180-2446,5461-8038,10923-12539 (5462 slots) master

0 additional replica(s)

S: 62ece0b80b83c0f1f078b07fc1687bb8376f76b3 192.168.0.41:6380

slots: (0 slots) slave

replicates a7ace08c36f7d55c4f28463d72865aa1ff74829e

S: dbfff4c49a94c0ee55d14401ccc9245af3655427 192.168.0.42:6380

slots: (0 slots) slave

replicates 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855

M: a7ace08c36f7d55c4f28463d72865aa1ff74829e 192.168.0.43:6379

slots:12540-16383 (3844 slots) master

1 additional replica(s)

M: 91169e71359deed96f8778cf31c823dbd6ded350 192.168.0.43:6380

slots:0-1179,8039-10922 (4064 slots) master

1 additional replica(s)

S: e99b0b450e78719d63520cb6efc068d5e8d4d081 192.168.0.42:6379

slots: (0 slots) slave

replicates 91169e71359deed96f8778cf31c823dbd6ded350

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

[WARNING] Node 192.168.0.43:6382 has slots in migrating state (1180).

[WARNING] Node 192.168.0.43:6380 has slots in importing state (1180).

[WARNING] The following slots are open: 1180

>>> Fixing open slot 1180

Set as migrating in: 192.168.0.43:6382

Set as importing in: 192.168.0.43:6380

Moving slot 1180 from 192.168.0.43:6382 to 192.168.0.43:6380:

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@node01 ~]# redis-trib.rb info 192.168.0.41:6379

192.168.0.41:6379 (8c785e6e...) -> 0 keys | 3014 slots | 1 slaves.

192.168.0.43:6382 (6df33baf...) -> 0 keys | 5461 slots | 0 slaves.

192.168.0.43:6379 (a7ace08c...) -> 0 keys | 3844 slots | 1 slaves.

192.168.0.43:6380 (91169e71...) -> 0 keys | 4065 slots | 1 slaves.

[OK] 0 keys in 4 masters.

0.00 keys per slot on average.

[root@node01 ~]#

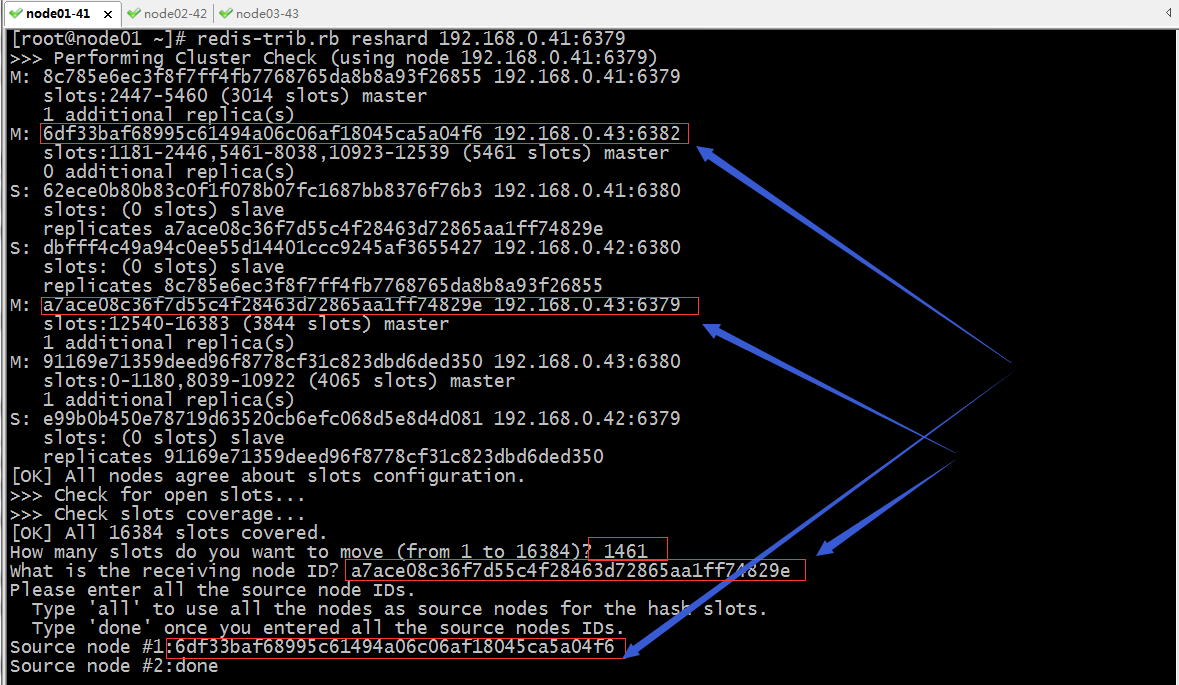

Tip: after the repair, the master still holds 5461 slots. Next we move these 5461 slots to the other masters (if one pass is not enough, move them in several passes).
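Spreading the remaining slots over several masters takes one reshard per receiver. A sketch using reshard's non-interactive options (assuming your redis-trib.rb supports --from/--to/--slots/--yes); drain_node is hypothetical, each argument after the source ID is <receiving-node-id>:<slots>, and DRY_RUN=1 prints the commands. It does not avoid the MIGRATE error discussed earlier.

```shell
#!/usr/bin/env bash
# Print the command instead of running it when DRY_RUN=1.
run() { if [[ ${DRY_RUN:-0} == 1 ]]; then echo "$*"; else "$@"; fi; }

# Hypothetical helper: move a master's slots to several receivers.
# $1 = any cluster node (host:port), $2 = ID of the node being drained,
# remaining args = <receiving-node-id>:<number-of-slots>
drain_node() {
  local entry=$1 from_id=$2 pair
  shift 2
  for pair in "$@"; do
    run redis-trib.rb reshard --from "$from_id" --to "${pair%%:*}" \
      --slots "${pair##*:}" --yes "$entry" || return 1   # stop at the first failure
  done
}

# Usage, matching the three passes below:
#   drain_node 192.168.0.41:6379 6df33baf68995c61494a06c06af18045ca5a04f6 \
#     a7ace08c36f7d55c4f28463d72865aa1ff74829e:1461 \
#     8c785e6ec3f8f7ff4fb7768765da8b8a93f26855:2000 \
#     91169e71359deed96f8778cf31c823dbd6ded350:2000
```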

Move slots to another node (1461 slots to 192.168.0.43:6379)

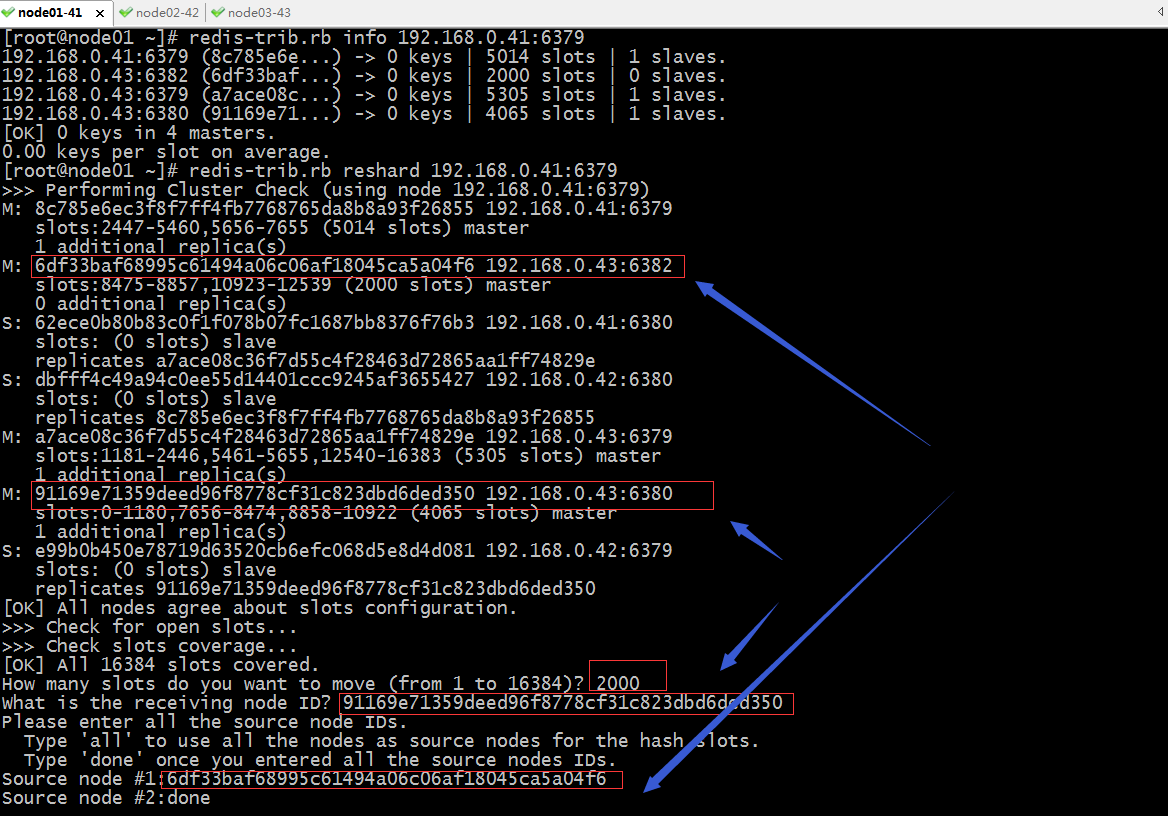

Move slots to another node (2000 slots to 192.168.0.41:6379)

Move slots to another node (2000 slots to 192.168.0.43:6380)

Check the slot distribution in the cluster

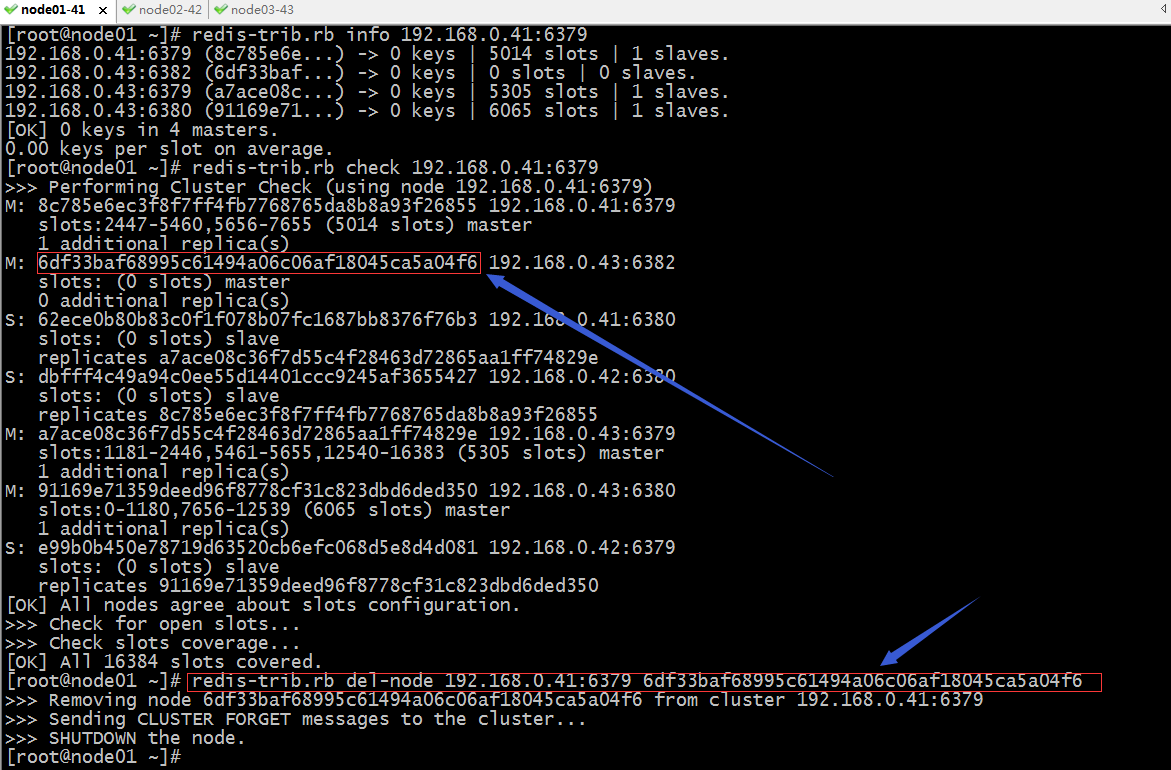

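Tip: 192.168.0.43:6382 no longer holds any slots, so we can now remove it from the cluster.

Remove the node 192.168.0.43:6382 from the cluster

Tip: to delete a node from the cluster, specify the IP address and port of any node in the cluster, followed by the ID of the node to delete.

Verify: view the current cluster information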
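The del-node call itself is short. As far as I know, the Redis 3/4 redis-trib.rb refuses to delete a node that still owns slots, sends CLUSTER FORGET for it to every other node, and then shuts the removed node down. A sketch with a hypothetical del_node helper and the same DRY_RUN convention:

```shell
#!/usr/bin/env bash
# Print the command instead of running it when DRY_RUN=1.
run() { if [[ ${DRY_RUN:-0} == 1 ]]; then echo "$*"; else "$@"; fi; }

# Hypothetical helper: remove an empty node from the cluster.
# $1 = any cluster node (host:port), $2 = ID of the node to remove
del_node() {
  local entry=$1 node_id=$2
  run redis-trib.rb del-node "$entry" "$node_id"
}

# Usage:
#   del_node 192.168.0.41:6379 6df33baf68995c61494a06c06af18045ca5a04f6
```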

[root@node01 ~]# redis-trib.rb info 192.168.0.41:6379

192.168.0.41:6379 (8c785e6e...) -> 0 keys | 5014 slots | 1 slaves.

192.168.0.43:6379 (a7ace08c...) -> 0 keys | 5305 slots | 1 slaves.

192.168.0.43:6380 (91169e71...) -> 0 keys | 6065 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

[root@node01 ~]# redis-trib.rb check 192.168.0.41:6379

>>> Performing Cluster Check (using node 192.168.0.41:6379)

M: 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 192.168.0.41:6379

slots:2447-5460,5656-7655 (5014 slots) master

1 additional replica(s)

S: 62ece0b80b83c0f1f078b07fc1687bb8376f76b3 192.168.0.41:6380

slots: (0 slots) slave

replicates a7ace08c36f7d55c4f28463d72865aa1ff74829e

S: dbfff4c49a94c0ee55d14401ccc9245af3655427 192.168.0.42:6380

slots: (0 slots) slave

replicates 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855

M: a7ace08c36f7d55c4f28463d72865aa1ff74829e 192.168.0.43:6379

slots:1181-2446,5461-5655,12540-16383 (5305 slots) master

1 additional replica(s)

M: 91169e71359deed96f8778cf31c823dbd6ded350 192.168.0.43:6380

slots:0-1180,7656-12539 (6065 slots) master

1 additional replica(s)

S: e99b0b450e78719d63520cb6efc068d5e8d4d081 192.168.0.42:6379

slots: (0 slots) slave

replicates 91169e71359deed96f8778cf31c823dbd6ded350

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@node01 ~]#

Tip: the cluster now consists of only 6 nodes: 3 masters and 3 slaves.

Verify: start 192.168.0.43:6381, which was killed earlier. Does it rejoin the cluster?

[root@node01 ~]# ssh node03

Last login: Sat Aug 8 10:08:40 2020 from node01

[root@node03 ~]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 *:16379 *:*

LISTEN 0 128 *:16380 *:*

LISTEN 0 128 *:6379 *:*

LISTEN 0 128 *:6380 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 128 [::]:2376 [::]:*

[root@node03 ~]# redis-server /usr/local/redis/6381/etc/redis.conf

[root@node03 ~]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 *:16379 *:*

LISTEN 0 128 *:16380 *:*

LISTEN 0 128 *:16381 *:*

LISTEN 0 128 *:6379 *:*

LISTEN 0 128 *:6380 *:*

LISTEN 0 128 *:6381 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 128 [::]:2376 [::]:*

[root@node03 ~]# exit

logout

Connection to node03 closed.

[root@node01 ~]# redis-trib.rb check 192.168.0.41:6379

[ERR] Sorry, can't connect to node 192.168.0.43:6382

>>> Performing Cluster Check (using node 192.168.0.41:6379)

M: 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 192.168.0.41:6379

slots:2447-5460,5656-7655 (5014 slots) master

2 additional replica(s)

S: 62ece0b80b83c0f1f078b07fc1687bb8376f76b3 192.168.0.41:6380

slots: (0 slots) slave

replicates a7ace08c36f7d55c4f28463d72865aa1ff74829e

S: dbfff4c49a94c0ee55d14401ccc9245af3655427 192.168.0.42:6380

slots: (0 slots) slave

replicates 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855

M: a7ace08c36f7d55c4f28463d72865aa1ff74829e 192.168.0.43:6379

slots:1181-2446,5461-5655,12540-16383 (5305 slots) master

1 additional replica(s)

M: 91169e71359deed96f8778cf31c823dbd6ded350 192.168.0.43:6380

slots:0-1180,7656-12539 (6065 slots) master

1 additional replica(s)

S: 0449aa43657d46f487107bfe49344701526b11d8 192.168.0.43:6381

slots: (0 slots) slave

replicates 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855

S: e99b0b450e78719d63520cb6efc068d5e8d4d081 192.168.0.42:6379

slots: (0 slots) slave

replicates 91169e71359deed96f8778cf31c823dbd6ded350

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@node01 ~]# redis-trib.rb info 192.168.0.41:6379

[ERR] Sorry, can't connect to node 192.168.0.43:6382

192.168.0.41:6379 (8c785e6e...) -> 0 keys | 5014 slots | 2 slaves.

192.168.0.43:6379 (a7ace08c...) -> 0 keys | 5305 slots | 1 slaves.

192.168.0.43:6380 (91169e71...) -> 0 keys | 6065 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

[root@node01 ~]# redis-cli -a admin

127.0.0.1:6379> CLUSTER NODES

62ece0b80b83c0f1f078b07fc1687bb8376f76b3 192.168.0.41:6380@16380 slave a7ace08c36f7d55c4f28463d72865aa1ff74829e 0 1596855739865 15 connected

dbfff4c49a94c0ee55d14401ccc9245af3655427 192.168.0.42:6380@16380 slave 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 0 1596855738000 16 connected

8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 192.168.0.41:6379@16379 myself,master - 0 1596855736000 16 connected 2447-5460 5656-7655

a7ace08c36f7d55c4f28463d72865aa1ff74829e 192.168.0.43:6379@16379 master - 0 1596855737000 15 connected 1181-2446 5461-5655 12540-16383

91169e71359deed96f8778cf31c823dbd6ded350 192.168.0.43:6380@16380 master - 0 1596855740877 18 connected 0-1180 7656-12539

0449aa43657d46f487107bfe49344701526b11d8 192.168.0.43:6381@16381 slave 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 0 1596855738000 16 connected

30a34b27d343883cbfe9db6ba2ad52a1936d8b67 192.168.0.43:6382@16382 handshake - 1596855726853 0 0 disconnected

e99b0b450e78719d63520cb6efc068d5e8d4d081 192.168.0.42:6379@16379 slave 91169e71359deed96f8778cf31c823dbd6ded350 0 1596855739000 18 connected

127.0.0.1:6379>

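Tip: once 192.168.0.43:6381 is started (the original master whose slave, 6382, was promoted and then removed), it automatically becomes a slave of one of the cluster's masters: the cluster now has 3 masters and 4 slaves, with 192.168.0.43:6381 replicating 192.168.0.41:6379. You may also have noticed that the 6382 instance on node03 is no longer running, because redis-trib.rb del-node shuts the removed node down. In the cluster's node table, 192.168.0.43:6382 now appears in the handshake, disconnected state.

Verify: start 192.168.0.43:6382 again. Is it still part of the cluster?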

[root@node01 ~]# ssh node03

Last login: Sat Aug 8 11:00:50 2020 from node01

[root@node03 ~]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 *:16379 *:*

LISTEN 0 128 *:16380 *:*

LISTEN 0 128 *:16381 *:*

LISTEN 0 128 *:6379 *:*

LISTEN 0 128 *:6380 *:*

LISTEN 0 128 *:6381 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 128 [::]:2376 [::]:*

[root@node03 ~]# redis-server /usr/local/redis/6382/etc/redis.conf

[root@node03 ~]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 *:16379 *:*

LISTEN 0 128 *:16380 *:*

LISTEN 0 128 *:16381 *:*

LISTEN 0 128 *:16382 *:*

LISTEN 0 128 *:6379 *:*

LISTEN 0 128 *:6380 *:*

LISTEN 0 128 *:6381 *:*

LISTEN 0 128 *:6382 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 128 [::]:2376 [::]:*

[root@node03 ~]# redis-cli

127.0.0.1:6379> AUTH admin

OK

127.0.0.1:6379> CLUSTER NODES

0449aa43657d46f487107bfe49344701526b11d8 192.168.0.43:6381@16381 slave 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 0 1596856251000 16 connected

a7ace08c36f7d55c4f28463d72865aa1ff74829e 192.168.0.43:6379@16379 myself,master - 0 1596856250000 15 connected 1181-2446 5461-5655 12540-16383

dbfff4c49a94c0ee55d14401ccc9245af3655427 192.168.0.42:6380@16380 slave 8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 0 1596856250973 16 connected

e99b0b450e78719d63520cb6efc068d5e8d4d081 192.168.0.42:6379@16379 slave 91169e71359deed96f8778cf31c823dbd6ded350 0 1596856253018 18 connected

8c785e6ec3f8f7ff4fb7768765da8b8a93f26855 192.168.0.41:6379@16379 master - 0 1596856252000 16 connected 2447-5460 5656-7655

6df33baf68995c61494a06c06af18045ca5a04f6 192.168.0.43:6382@16382 master - 0 1596856253000 17 connected

62ece0b80b83c0f1f078b07fc1687bb8376f76b3 192.168.0.41:6380@16380 slave a7ace08c36f7d55c4f28463d72865aa1ff74829e 0 1596856252000 15 connected

91169e71359deed96f8778cf31c823dbd6ded350 192.168.0.43:6380@16380 master - 0 1596856254043 18 connected 0-1180 7656-12539

127.0.0.1:6379> quit

[root@node03 ~]# exit

logout

Connection to node03 closed.

[root@node01 ~]# redis-trib.rb info 192.168.0.41:6379

192.168.0.41:6379 (8c785e6e...) -> 0 keys | 5014 slots | 2 slaves.

192.168.0.43:6382 (6df33baf...) -> 0 keys | 0 slots | 0 slaves.

192.168.0.43:6379 (a7ace08c...) -> 0 keys | 5305 slots | 1 slaves.

192.168.0.43:6380 (91169e71...) -> 0 keys | 6065 slots | 1 slaves.

[OK] 0 keys in 4 masters.

0.00 keys per slot on average.

[root@node01 ~]#

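Tip: after 192.168.0.43:6382 is started again, the cluster information shows that it is back in the cluster, only without any slots; with no slots, no requests are routed to it. This most likely happens because 6381 was down when del-node sent CLUSTER FORGET, so it still remembered 6382 and reintroduced it to the other nodes, while 6382 itself still had its cluster-config-file (redis-cluster_6382.conf). To retire an instance for good, reset it (CLUSTER RESET) or remove that file before starting it again.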
