Configuring LZO compression for Hadoop 3.1.2 + HBase 2.2.0

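A note before starting: while setting up HBase to use LZO, I found that most of the articles online were old. They targeted old versions, and most of them used hadoop-gpl-compression, an outdated package that has since been superseded by hadoop-lzo. I hope this write-up helps anyone configuring LZO for Hadoop and HBase.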

I. Install the LZO library

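1. Download the latest LZO library from http://www.oberhumer.com/opensource/lzo/download/

2. Extract the archive:

```shell
tar -zxvf lzo-2.10.tar.gz
```

3. Enter the extracted lzo directory and run ./configure --enable-shared, here with an installation prefix:

```shell
cd lzo-2.10
./configure --enable-shared --prefix=/usr/local/hadoop/lzo
```

4. Compile with make, then install with make install:

```shell
make && make install
```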
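To confirm that make install put the headers and the shared library where the later build steps expect them, a small check like the following may help. It is a sketch: check_lzo_install is a hypothetical helper, and it assumes the usual LZO 2.x layout (headers under include/lzo/, with lzo1x.h used as a representative header, and a shared library named liblzo2.so*).

```shell
# Hypothetical helper (source it, then call it): check that an LZO 2.x build was
# installed under PREFIX. Assumes the usual layout: PREFIX/include/lzo/lzo1x.h
# and PREFIX/lib/liblzo2.so*.
check_lzo_install() {
  if [ -z "$1" ]; then
    echo "usage: check_lzo_install PREFIX" >&2
    return 2
  fi
  local prefix=$1
  local missing=0

  # Headers: the hadoop-lzo native build later looks for them via C_INCLUDE_PATH
  if [ ! -f "$prefix/include/lzo/lzo1x.h" ]; then
    echo "missing header: $prefix/include/lzo/lzo1x.h" >&2
    missing=1
  fi

  # Shared library: needed via LIBRARY_PATH at build time and by the loader at run time
  if ! ls "$prefix"/lib/liblzo2.so* >/dev/null 2>&1; then
    echo "missing shared library: $prefix/lib/liblzo2.so*" >&2
    missing=1
  fi

  if [ "$missing" -eq 0 ]; then
    echo "LZO looks installed under $prefix"
  fi
  return "$missing"
}
```

Usage: check_lzo_install /usr/local/hadoop/lzo. A non-zero status, together with the messages on stderr, points at the missing piece before the hadoop-lzo build fails on it.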

If the LZO library is not installed, creating an HBase table with compression set to lzo fails with:

```text
ERROR: org.apache.hadoop.hbase.DoNotRetryIOException: java.lang.RuntimeException: native-lzo library not available Set hbase.table.sanity.checks to false at conf or table descriptor if you want to bypass sanity checks
    at org.apache.hadoop.hbase.master.HMaster.warnOrThrowExceptionForFailure(HMaster.java:2314)
    at org.apache.hadoop.hbase.master.HMaster.sanityCheckTableDescriptor(HMaster.java:2156)
    at org.apache.hadoop.hbase.master.HMaster.createTable(HMaster.java:2048)
    at org.apache.hadoop.hbase.master.MasterRpcServices.createTable(MasterRpcServices.java:651)
    at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:413)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:132)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:324)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:304)
Caused by: org.apache.hadoop.hbase.DoNotRetryIOException: java.lang.RuntimeException: native-lzo library not available
    at org.apache.hadoop.hbase.util.CompressionTest.testCompression(CompressionTest.java:103)
    at org.apache.hadoop.hbase.master.HMaster.checkCompression(HMaster.java:2384)
    at org.apache.hadoop.hbase.master.HMaster.checkCompression(HMaster.java:2377)
    at org.apache.hadoop.hbase.master.HMaster.sanityCheckTableDescriptor(HMaster.java:2154)
    ... 7 more
Caused by: java.lang.RuntimeException: native-lzo library not available
    at com.hadoop.compression.lzo.LzoCodec.getCompressorType(LzoCodec.java:135)
    at org.apache.hadoop.io.compress.CodecPool.getCompressor(CodecPool.java:150)
    at org.apache.hadoop.io.compress.CodecPool.getCompressor(CodecPool.java:168)
    at org.apache.hadoop.hbase.io.compress.Compression$Algorithm.getCompressor(Compression.java:355)
    at org.apache.hadoop.hbase.util.CompressionTest.testCompression(CompressionTest.java:98)
    ... 10 more
```

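5. Without a --prefix, the library files are installed to /usr/local/lib by default; with the prefix used above they are in /usr/local/hadoop/lzo/lib. Copy the libraries to /usr/lib, or create symbolic links to them in /usr/lib:

```shell
cd /usr/lib
ln -s /usr/local/hadoop/lzo/lib/liblzo2* .
```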
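ln -s succeeds even when the link target does not exist, so a mistyped source path only shows up later as "native-lzo library not available". A sketch of a check that the links actually resolve; check_lzo_links is a hypothetical helper and defaults to /usr/lib as in the step above.

```shell
# Hypothetical helper: make sure DIR (default /usr/lib) has liblzo2* entries and
# that none of them is a dangling symbolic link.
check_lzo_links() {
  local dir=${1:-/usr/lib}
  local f found=0 broken=0
  for f in "$dir"/liblzo2*; do
    # If nothing matches, the pattern stays literal and neither test succeeds
    if [ ! -e "$f" ] && [ ! -L "$f" ]; then
      continue
    fi
    found=1
    # -L: it is a symlink; ! -e: the file it points to does not exist
    if [ -L "$f" ] && [ ! -e "$f" ]; then
      echo "dangling link: $f -> $(readlink "$f")" >&2
      broken=1
    fi
  done
  if [ "$found" -eq 0 ]; then
    echo "no liblzo2* files in $dir" >&2
    return 1
  fi
  return "$broken"
}
```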

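6. Install lzop:

```shell
wget http://www.lzop.org/download/lzop-1.04.tar.gz
tar -zxvf lzop-1.04.tar.gz
cd lzop-1.04
./configure --enable-shared --prefix=/usr/local/hadoop/lzop
make && make install
```

If configure cannot find the LZO headers and library (they were installed under /usr/local/hadoop/lzo above), point it at them, for example by prefixing the configure command with CPPFLAGS=-I/usr/local/hadoop/lzo/include LDFLAGS=-L/usr/local/hadoop/lzo/lib.

7. Copy lzop to /usr/bin/, or create a symbolic link:

```shell
ln -s /usr/local/hadoop/lzop/bin/lzop /usr/bin/lzop
```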
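A quick way to confirm that the installed lzop works is to compress a file, decompress it again and compare the result with the original. A minimal sketch; lzop_roundtrip is a hypothetical helper, and it relies only on lzop's gzip-style -c (write to stdout) and -d (decompress) options.

```shell
# Hypothetical helper: compress FILE with lzop, decompress it again and compare
# the result with the original. Returns 0 only if the round trip is lossless.
lzop_roundtrip() {
  local src=$1 tmp rc
  if [ ! -f "$src" ]; then
    echo "usage: lzop_roundtrip FILE" >&2
    return 2
  fi
  tmp=$(mktemp -d) || return 2
  # -c writes to stdout (the original file is left alone); -d decompresses
  lzop -c "$src" > "$tmp/data.lzo" &&
    lzop -dc "$tmp/data.lzo" > "$tmp/data.out" &&
    cmp -s "$src" "$tmp/data.out"
  rc=$?
  rm -rf "$tmp"
  return "$rc"
}
```

Usage: lzop_roundtrip /etc/hosts && echo "lzop OK".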

II. Install hadoop-lzo

1. Download hadoop-lzo. The following fetches it as a zip archive:

```shell
wget https://github.com/twitter/hadoop-lzo/archive/master.zip
```

To download it with git instead, use https://github.com/twitter/hadoop-lzo

2. Compile the hadoop-lzo source. If Maven is not installed, set up a Maven environment first. Unzip master.zip (it extracts to hadoop-lzo-master), enter hadoop-lzo-master, change the Hadoop version in pom.xml, and then build with Maven:

```shell
unzip master.zip
cd hadoop-lzo-master
vim pom.xml
```

Set hadoop.current.version to your own Hadoop version; here it is 3.1.2:

```xml
<properties>
  <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
  <hadoop.current.version>3.1.2</hadoop.current.version>
  <hadoop.old.version>1.0.4</hadoop.old.version>
</properties>
```
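Instead of editing pom.xml in vim, the version can be set non-interactively, which is handy when the build is repeated on several machines. A sketch that assumes the property appears as a single <hadoop.current.version>...</hadoop.current.version> element, as in the snippet above; set_hadoop_version is a hypothetical helper.

```shell
# Hypothetical helper: set <hadoop.current.version> in a hadoop-lzo pom.xml.
# sed -i.bak works with both GNU and BSD sed and keeps the original as POM.bak.
set_hadoop_version() {
  local pom=$1 version=$2
  if [ ! -f "$pom" ] || [ -z "$version" ]; then
    echo "usage: set_hadoop_version POM_FILE VERSION" >&2
    return 2
  fi
  # Replace only the text between the hadoop.current.version tags
  sed -i.bak "s#<hadoop.current.version>[^<]*</hadoop.current.version>#<hadoop.current.version>${version}</hadoop.current.version>#" "$pom" || return 1
  # Fail if the property was not found (nothing was replaced)
  grep -q "<hadoop.current.version>${version}</hadoop.current.version>" "$pom"
}
```

Usage, from inside hadoop-lzo-master: set_hadoop_version pom.xml 3.1.2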

3. In the hadoop-lzo-master directory, run the following commands to compile hadoop-lzo:

```shell
export CFLAGS=-m64
export CXXFLAGS=-m64
export C_INCLUDE_PATH=/usr/local/hadoop/lzo/include   # the LZO install directory
export LIBRARY_PATH=/usr/local/hadoop/lzo/lib         # the LZO install directory
mvn clean package -Dmaven.test.skip=true
```

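4. When the build finishes, enter target/native/Linux-amd64-64, copy the libgplcompression* files into Hadoop's native library directory, and copy hadoop-lzo-xxx.jar into the Hadoop common directory on every Hadoop node:

```shell
cd target/native/Linux-amd64-64
# copy the contents of lib/ (symlinks included) into the home directory
tar -cBf - -C lib . | tar -xBvf - -C ~
cp ~/libgplcompression* $HADOOP_HOME/lib/native/
# go back to hadoop-lzo-master before copying the jar
cd ../../..
cp target/hadoop-lzo-0.4.18-SNAPSHOT.jar $HADOOP_HOME/share/hadoop/common/
```

The version in the jar name depends on the hadoop-lzo source you built; use the file name that actually appears in target/.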

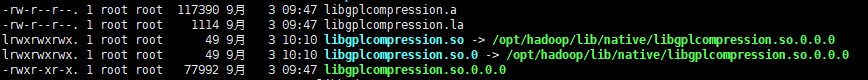

The libgplcompression* files: libgplcompression.so and libgplcompression.so.0 are symbolic links pointing to libgplcompression.so.0.0.0.

Sync the generated libgplcompression* files and target/hadoop-lzo-xxx-SNAPSHOT.jar to the corresponding directories ($HADOOP_HOME/lib/native/ and $HADOOP_HOME/share/hadoop/common/) on every machine in the cluster.
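Copying to every node by hand is easy to get wrong. A minimal sketch of a loop that pushes both artifacts with rsync, assuming passwordless SSH, the same $HADOOP_HOME on all nodes (it is expanded locally), and host names passed as arguments; sync_lzo_to_nodes and the DRY_RUN switch are hypothetical names. rsync -a is used because it keeps libgplcompression.so and libgplcompression.so.0 as symlinks instead of turning them into three separate files.

```shell
# Hypothetical helper: push libgplcompression* and the hadoop-lzo jar to each HOST.
# Set DRY_RUN=1 to print the rsync commands instead of running them.
sync_lzo_to_nodes() {
  if [ "$#" -lt 3 ] || [ -z "$HADOOP_HOME" ]; then
    echo "usage: HADOOP_HOME=... sync_lzo_to_nodes NATIVE_DIR JAR HOST..." >&2
    return 2
  fi
  local native_dir=$1 jar=$2 run= host
  shift 2
  if [ "${DRY_RUN:-0}" = 1 ]; then
    run=echo
  fi
  for host in "$@"; do
    # -a preserves symlinks, permissions and timestamps
    $run rsync -a "$native_dir"/libgplcompression* "$host:$HADOOP_HOME/lib/native/" || return 1
    $run rsync -a "$jar" "$host:$HADOOP_HOME/share/hadoop/common/" || return 1
  done
}
```

Usage, from hadoop-lzo-master: DRY_RUN=1 sync_lzo_to_nodes target/native/Linux-amd64-64/lib target/hadoop-lzo-0.4.18-SNAPSHOT.jar node1 node2 prints what would run; drop DRY_RUN=1 to actually copy.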

III. Configure the Hadoop environment

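1. In $HADOOP_HOME/etc/hadoop/hadoop-env.sh, set:

```shell
export LD_LIBRARY_PATH=/usr/local/hadoop/lzo/lib   # the LZO install directory
# Extra Java CLASSPATH elements. Optional.
export HADOOP_CLASSPATH="$HADOOP_CLASSPATH:${HADOOP_HOME}/share/hadoop/common/*"
export JAVA_LIBRARY_PATH=$JAVA_LIBRARY_PATH:$HADOOP_HOME/lib/native
```

In the hadoop-env.sh template, <extra_entries> is a placeholder, not a literal value. A plain directory on the Java classpath only exposes .class files, so the /* wildcard is what picks up the jars in it.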
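Since the same edits have to be made on every node, and setup scripts tend to be re-run, appending these lines idempotently avoids piling up duplicate exports. A sketch; append_once is a hypothetical helper, and single quotes around the line keep $HADOOP_HOME and friends from being expanded when the line is written.

```shell
# Hypothetical helper: append LINE to FILE unless FILE already contains exactly that line.
append_once() {
  local file=$1 line=$2
  if [ ! -f "$file" ] || [ -z "$line" ]; then
    echo "usage: append_once FILE LINE" >&2
    return 2
  fi
  # -x: whole-line match, -F: fixed string, so $ and * in LINE are taken literally
  if grep -qxF -- "$line" "$file"; then
    return 0
  fi
  # Start on a fresh line if the file does not end with a newline
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  printf '%s\n' "$line" >> "$file"
}
```

Usage: append_once "$HADOOP_HOME/etc/hadoop/hadoop-env.sh" 'export JAVA_LIBRARY_PATH=$JAVA_LIBRARY_PATH:$HADOOP_HOME/lib/native'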

2. Add the following to $HADOOP_HOME/etc/hadoop/core-site.xml:

```xml
<property>
  <name>io.compression.codecs</name>
  <value>org.apache.hadoop.io.compress.GzipCodec,
    org.apache.hadoop.io.compress.DefaultCodec,
    com.hadoop.compression.lzo.LzoCodec,
    com.hadoop.compression.lzo.LzopCodec,
    org.apache.hadoop.io.compress.BZip2Codec
  </value>
</property>
<property>
  <name>io.compression.codec.lzo.class</name>
  <value>com.hadoop.compression.lzo.LzoCodec</value>
</property>
```
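Before syncing core-site.xml to the cluster, it can be worth checking that io.compression.codecs really lists both hadoop-lzo codecs; a codec that only appears in io.compression.codec.lzo.class does not count. A sketch with a hypothetical helper, lzo_codecs_configured, that assumes <value> directly follows <name> inside the property, as in the snippet above.

```shell
# Hypothetical helper: check that io.compression.codecs in core-site.xml lists both
# hadoop-lzo codecs. Assumes <value> directly follows <name> inside the property.
lzo_codecs_configured() {
  local conf=${1:-$HADOOP_HOME/etc/hadoop/core-site.xml}
  local flat value codec
  if [ ! -f "$conf" ]; then
    echo "not found: $conf" >&2
    return 2
  fi
  # Remove all whitespace so a value split over several lines becomes one string
  flat=$(tr -d '[:space:]' < "$conf")
  # Keep only the text inside <value>...</value> of io.compression.codecs
  value=$(printf '%s\n' "$flat" | sed -n 's#.*<name>io\.compression\.codecs</name><value>\([^<]*\)</value>.*#\1#p')
  if [ -z "$value" ]; then
    echo "io.compression.codecs is not set in $conf" >&2
    return 1
  fi
  for codec in com.hadoop.compression.lzo.LzoCodec com.hadoop.compression.lzo.LzopCodec; do
    # Surround with commas so that only whole list entries match
    case ",$value," in
      *",$codec,"*) ;;
      *) echo "io.compression.codecs does not list $codec" >&2; return 1 ;;
    esac
  done
  return 0
}
```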

If this is not configured, creating an HBase table with compression set to lzo fails with:

```text
Caused by: org.apache.hadoop.hbase.DoNotRetryIOException: java.lang.RuntimeException: java.lang.ClassNotFoundException: com.hadoop.compression.lzo.LzoCodec
    at org.apache.hadoop.hbase.util.CompressionTest.testCompression(CompressionTest.java:103)
    at org.apache.hadoop.hbase.master.HMaster.checkCompression(HMaster.java:2384)
    at org.apache.hadoop.hbase.master.HMaster.checkCompression(HMaster.java:2377)
    at org.apache.hadoop.hbase.master.HMaster.sanityCheckTableDescriptor(HMaster.java:2154)
    ... 7 more
Caused by: java.lang.RuntimeException: java.lang.ClassNotFoundException: com.hadoop.compression.lzo.LzoCodec
    at org.apache.hadoop.hbase.io.compress.Compression$Algorithm$1.buildCodec(Compression.java:128)
    at org.apache.hadoop.hbase.io.compress.Compression$Algorithm$1.getCodec(Compression.java:114)
    at org.apache.hadoop.hbase.io.compress.Compression$Algorithm.getCompressor(Compression.java:353)
    at org.apache.hadoop.hbase.util.CompressionTest.testCompression(CompressionTest.java:98)
    ... 10 more
Caused by: java.lang.ClassNotFoundException: com.hadoop.compression.lzo.LzoCodec
    at java.net.URLClassLoader.findClass(URLClassLoader.java:382)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
    at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
    at org.apache.hadoop.hbase.io.compress.Compression$Algorithm$1.buildCodec(Compression.java:124)
    ... 13 more
```

3. Add the following to $HADOOP_HOME/etc/hadoop/mapred-site.xml:

```xml
<property>
  <name>mapred.compress.map.output</name>
  <value>true</value>
</property>
<property>
  <name>mapred.map.output.compression.codec</name>
  <value>com.hadoop.compression.lzo.LzoCodec</value>
</property>
<property>
  <name>mapred.child.env</name>
  <value>LD_LIBRARY_PATH=/usr/local/hadoop/lzo/lib</value>
</property>
<property>
  <name>mapreduce.reduce.env</name>
  <value>LD_LIBRARY_PATH=/usr/local/hadoop/lzo/lib</value>
</property>
```

In Hadoop 3, mapred.compress.map.output and mapred.map.output.compression.codec are deprecated aliases of mapreduce.map.output.compress and mapreduce.map.output.compress.codec; either form is accepted.

Sync all of the modified configuration files to every machine in the cluster and restart the Hadoop cluster. LZO is then available in Hadoop.
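One way to check LZO end to end in Hadoop is to compress a file locally with lzop, upload it, and read it back with hadoop fs -text, which picks a decompression codec from io.compression.codecs by file extension (LzopCodec for .lzo). A sketch, assuming lzop and the hadoop client are on PATH and the HDFS directory is writable; hadoop_lzo_smoke_test is a hypothetical helper.

```shell
# Hypothetical smoke test: compress a local file with lzop, upload it to HDFS and
# let "hadoop fs -text" decompress it; succeeds only if the text matches the original.
hadoop_lzo_smoke_test() {
  local src=$1 dir=${2:-/tmp} tmp name rc
  if [ ! -f "$src" ]; then
    echo "usage: hadoop_lzo_smoke_test LOCAL_FILE [HDFS_DIR]" >&2
    return 2
  fi
  tmp=$(mktemp -d) || return 2
  name=$(basename "$src").lzo
  # 1) compress locally, 2) upload (overwriting), 3) read back through Hadoop,
  #    which chooses the codec by the .lzo extension
  lzop -c "$src" > "$tmp/$name" &&
    hadoop fs -put -f "$tmp/$name" "$dir/$name" &&
    hadoop fs -text "$dir/$name" | cmp -s - "$src"
  rc=$?
  rm -rf "$tmp"
  return "$rc"
}
```

Usage: hadoop_lzo_smoke_test /etc/hosts /tmp && echo "Hadoop reads LZO". If it fails, the output of hadoop fs -text usually shows whether the codec or the native library is the problem.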

IV. Configure LZO in HBase

1. Copy hadoop-lzo-xxx.jar into $HBASE_HOME/lib (run from the hadoop-lzo-master directory):

```shell
cp target/hadoop-lzo-0.4.18-SNAPSHOT.jar $HBASE_HOME/lib
```

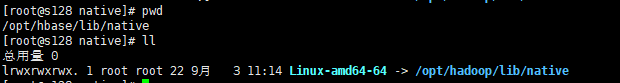

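2. Create a native directory under $HBASE_HOME/lib and, inside it, a symbolic link named Linux-amd64-64 that points to /opt/hadoop/lib/native (Hadoop's native library directory; Hadoop is installed in /opt/hadoop here):

```shell
mkdir -p $HBASE_HOME/lib/native
cd $HBASE_HOME/lib/native
ln -s /opt/hadoop/lib/native Linux-amd64-64
```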
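Run a second time, plain ln -s would create a nested link inside the target directory instead of replacing the existing one. A sketch of an idempotent version; link_hbase_native is a hypothetical helper, and the link name Linux-amd64-64 assumes an x86_64 machine, as above.

```shell
# Hypothetical helper: create HBASE_HOME/lib/native/Linux-amd64-64 pointing at the
# Hadoop native directory, and print where the link points.
link_hbase_native() {
  local hbase_home=$1 hadoop_native=$2
  if [ -z "$hbase_home" ] || [ ! -d "$hadoop_native" ]; then
    echo "usage: link_hbase_native HBASE_HOME HADOOP_NATIVE_DIR" >&2
    return 2
  fi
  mkdir -p "$hbase_home/lib/native" || return 1
  # -f replaces an existing link; -n treats an existing link to a directory as a
  # plain file, so re-running does not create a link inside the target directory
  ln -sfn "$hadoop_native" "$hbase_home/lib/native/Linux-amd64-64" || return 1
  readlink "$hbase_home/lib/native/Linux-amd64-64"
}
```

Usage: link_hbase_native "$HBASE_HOME" /opt/hadoop/lib/native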

3. Add the following to $HBASE_HOME/conf/hbase-env.sh:

```shell
export HBASE_LIBRARY_PATH=$HBASE_LIBRARY_PATH:$HBASE_HOME/lib/native/Linux-amd64-64/:/usr/local/lib/
```

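4. Add the following to $HBASE_HOME/conf/hbase-site.xml. With hbase.regionserver.codecs set, each RegionServer tests the listed codecs at startup and refuses to start if one of them is unavailable (the "Compression codec lzo not supported, aborting RS construction" error in the log further down):

```xml
<property>
  <name>hbase.regionserver.codecs</name>
  <value>lzo</value>
</property>
```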

5. Start HBase; everything runs normally.
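HBase ships a CompressionTest utility that writes and reads back a small file with a given codec, which is a quicker check than creating a test table. A sketch of a wrapper around it; hbase_lzo_check is a hypothetical helper, the default path is an arbitrary choice, and the path passed in should not exist yet.

```shell
# Hypothetical helper: run HBase's CompressionTest with the lzo codec and report
# success only if the command exits 0 and prints SUCCESS.
hbase_lzo_check() {
  local path=${1:-file:///tmp/hbase-lzo-check.txt}
  local out rc
  out=$(hbase org.apache.hadoop.hbase.util.CompressionTest "$path" lzo 2>&1)
  rc=$?
  if [ "$rc" -eq 0 ] && printf '%s\n' "$out" | grep -q 'SUCCESS'; then
    echo "lzo OK"
    return 0
  fi
  # Show the end of the output, where the root cause usually is
  printf '%s\n' "$out" | tail -n 20 >&2
  return 1
}
```

Usage: hbase_lzo_check, or hbase_lzo_check hdfs://namenode:8020/tmp/lzo-check to test against HDFS.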

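Note on hadoop-gpl-compression:

hadoop-lzo-xxx is the successor of hadoop-gpl-compression-xxx, which used to be hosted on Google Code (http://code.google.com/p/hadoop-gpl-compression/). Because of licensing issues it was later moved to GitHub as today's hadoop-lzo-xxx (https://github.com/kevinweil/hadoop-lzo). Most online guides to LZO compression in Hadoop are based on hadoop-gpl-compression, but hadoop-gpl-compression was developed back in 2009 and is no longer fully compatible with current Hadoop versions, which leads to errors. I ran into several of them, and I hope this saves others some trouble.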

When hadoop-gpl-compression-xxx.jar is used, HBase fails at startup with the following errors:

```text
2019-09-03 11:36:22,771 INFO [main] lzo.GPLNativeCodeLoader: Loaded native gpl library
2019-09-03 11:36:22,866 WARN [main] lzo.LzoCompressor: java.lang.NoSuchFieldError: lzoCompressLevelFunc
2019-09-03 11:36:22,866 ERROR [main] lzo.LzoCodec: Failed to load/initialize native-lzo library
2019-09-03 11:36:23,169 WARN [main] util.CompressionTest: Can't instantiate codec: lzo
org.apache.hadoop.hbase.DoNotRetryIOException: java.lang.RuntimeException: native-lzo library not available
    at org.apache.hadoop.hbase.util.CompressionTest.testCompression(CompressionTest.java:103)
    at org.apache.hadoop.hbase.util.CompressionTest.testCompression(CompressionTest.java:69)
    at org.apache.hadoop.hbase.regionserver.HRegionServer.checkCodecs(HRegionServer.java:834)
    at org.apache.hadoop.hbase.regionserver.HRegionServer.<init>(HRegionServer.java:565)
    at org.apache.hadoop.hbase.master.HMaster.<init>(HMaster.java:506)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at org.apache.hadoop.hbase.master.HMaster.constructMaster(HMaster.java:3180)
    at org.apache.hadoop.hbase.master.HMasterCommandLine.startMaster(HMasterCommandLine.java:236)
    at org.apache.hadoop.hbase.master.HMasterCommandLine.run(HMasterCommandLine.java:140)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)
    at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:149)
    at org.apache.hadoop.hbase.master.HMaster.main(HMaster.java:3198)
Caused by: java.lang.RuntimeException: native-lzo library not available
    at com.hadoop.compression.lzo.LzoCodec.getCompressorType(LzoCodec.java:135)
    at org.apache.hadoop.io.compress.CodecPool.getCompressor(CodecPool.java:150)
    at org.apache.hadoop.io.compress.CodecPool.getCompressor(CodecPool.java:168)
    at org.apache.hadoop.hbase.io.compress.Compression$Algorithm.getCompressor(Compression.java:355)
    at org.apache.hadoop.hbase.util.CompressionTest.testCompression(CompressionTest.java:98)
    ... 14 more
2019-09-03 11:36:23,183 ERROR [main] regionserver.HRegionServer: Failed construction RegionServer
java.io.IOException: Compression codec lzo not supported, aborting RS construction
    at org.apache.hadoop.hbase.regionserver.HRegionServer.checkCodecs(HRegionServer.java:835)
    at org.apache.hadoop.hbase.regionserver.HRegionServer.<init>(HRegionServer.java:565)
    at org.apache.hadoop.hbase.master.HMaster.<init>(HMaster.java:506)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at org.apache.hadoop.hbase.master.HMaster.constructMaster(HMaster.java:3180)
    at org.apache.hadoop.hbase.master.HMasterCommandLine.startMaster(HMasterCommandLine.java:236)
    at org.apache.hadoop.hbase.master.HMasterCommandLine.run(HMasterCommandLine.java:140)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)
    at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:149)
    at org.apache.hadoop.hbase.master.HMaster.main(HMaster.java:3198)
2019-09-03 11:36:23,184 ERROR [main] master.HMasterCommandLine: Master exiting
java.lang.RuntimeException: Failed construction of Master: class org.apache.hadoop.hbase.master.HMaster.
    at org.apache.hadoop.hbase.master.HMaster.constructMaster(HMaster.java:3187)
    at org.apache.hadoop.hbase.master.HMasterCommandLine.startMaster(HMasterCommandLine.java:236)
    at org.apache.hadoop.hbase.master.HMasterCommandLine.run(HMasterCommandLine.java:140)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)
    at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:149)
    at org.apache.hadoop.hbase.master.HMaster.main(HMaster.java:3198)
Caused by: java.io.IOException: Compression codec lzo not supported, aborting RS construction
    at org.apache.hadoop.hbase.regionserver.HRegionServer.checkCodecs(HRegionServer.java:835)
    at org.apache.hadoop.hbase.regionserver.HRegionServer.<init>(HRegionServer.java:565)
    at org.apache.hadoop.hbase.master.HMaster.<init>(HMaster.java:506)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at org.apache.hadoop.hbase.master.HMaster.constructMaster(HMaster.java:3180)
    ... 5 more
```

After removing hadoop-gpl-compression-xxx.jar and replacing it with hadoop-lzo-xxx.jar, HBase starts normally:

```text
2019-09-03 14:57:43,755 INFO [main] lzo.GPLNativeCodeLoader: Loaded native gpl library from the embedded binaries
2019-09-03 14:57:43,758 INFO [main] lzo.LzoCodec: Successfully loaded & initialized native-lzo library [hadoop-lzo rev 5dbdddb8cfb544e58b4e0b9664b9d1b66657faf5]
2019-09-03 14:57:43,983 INFO [main] Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available
2019-09-03 14:57:44,088 INFO [main] compress.CodecPool: Got brand-new compressor [.lzo_deflate]
```
