MySQL Dual-Master Hot Standby + LVS + Keepalived High Availability: An Operations Record

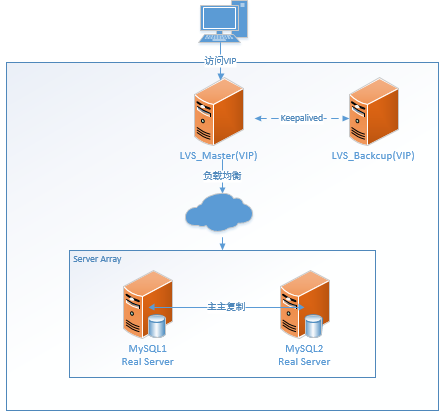

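MySQL replication provides data redundancy and also enables read/write splitting to spread system load; master-master replication additionally avoids a single point of failure on the master node. However, master-master replication alone falls short of our practical needs in two ways: it offers no unified access entry point for load balancing, and if one master goes down you must switch to the other master manually, because there is no automatic failover. An earlier post, the Mysql+Keepalived dual-master hot standby high availability record, did not use LVS (for load balancing). This post describes how to combine Keepalived and LVS to make MySQL highly available: LVS load-balances MySQL reads and writes, Keepalived prevents a single point of failure, and together they address both problems above.

Keepalived is a software solution based on VRRP (Virtual Router Redundancy Protocol) that provides service high availability and avoids single points of failure. It is generally used for lightweight high availability, requires no shared storage, and typically runs between two nodes. Common combinations include LVS+Keepalived and Nginx+Keepalived.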

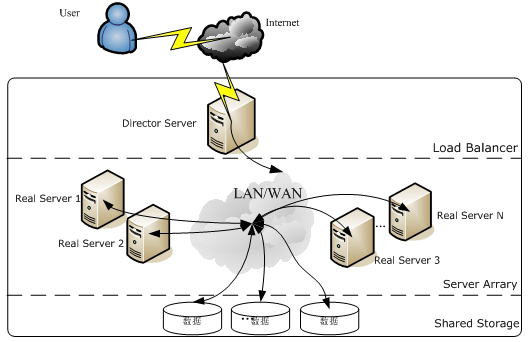

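LVS (Linux Virtual Server) is a highly available virtual server cluster system. The project was founded in May 1998 by Dr. Wensong Zhang and is one of the earliest free software projects in China. LVS is mainly used to load-balance across multiple servers and works at layer 4 (the transport layer). In an LVS cluster, the front-end load-balancing tier is called the Director Server, and the back-end tier of servers that provide the service is called the Real Servers. The accompanying figure gives a rough view of the basic LVS architecture.

LVS has three working modes:

1) DR (Direct Routing) mode. DR can support a fairly large number of Real Servers, but the Director Server's virtual interface must be on the same network segment as its physical interface, and each back-end Real Server must bind the VIP on its local loopback device lo, which prevents IP conflicts. The advantage of DR mode is that inbound traffic passes through the Director Server while outbound traffic does not, which reduces the load on the Director Server.

2) NAT (Network Address Translation) mode. NAT has limited scalability and cannot support as many Real Servers, because every request packet and every response packet must be rewritten by the Director Server, which hurts efficiency.

3) TUN (IP Tunneling) mode. TUN mode can support more Real Servers, but every server must support the IP tunneling protocol.

LVS offers 10 scheduling algorithms: rr (round robin), wrr (weighted round robin), lc, wlc, lblc, lblcr, dh, sh, sed, and nq.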
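The abbreviations in this list are the names passed to `ipvsadm -s` and to Keepalived's `lb_algo`. As a quick reference, here is a minimal sketch that expands each abbreviation to its full name. The function name `lvs_scheduler_name` is made up for illustration and is not part of any LVS tool.

```shell
#!/bin/bash
# Print the full name of an LVS scheduler abbreviation.
# Unknown abbreviations produce an error message and a non-zero status.
lvs_scheduler_name() {
    case "$1" in
        rr)    echo "Round Robin" ;;
        wrr)   echo "Weighted Round Robin" ;;
        lc)    echo "Least-Connection" ;;
        wlc)   echo "Weighted Least-Connection" ;;
        lblc)  echo "Locality-Based Least-Connection" ;;
        lblcr) echo "Locality-Based Least-Connection with Replication" ;;
        dh)    echo "Destination Hashing" ;;
        sh)    echo "Source Hashing" ;;
        sed)   echo "Shortest Expected Delay" ;;
        nq)    echo "Never Queue" ;;
        *)     echo "unknown scheduler: $1" >&2; return 1 ;;
    esac
}

lvs_scheduler_name wrr    # prints: Weighted Round Robin
```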

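The rest of this post records in detail how, in a MySQL master-master replication environment, LVS load-balances MySQL reads and writes while Keepalived heartbeat checks prevent a single point of failure and provide failover.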

1) Environment preparation for the high-availability setup

LVS_Master: 182.148.15.237

LVS_Backup: 182.148.15.236

MySQL1 Real Server: 182.148.15.233

MySQL2 Real Server: 182.148.15.238

VIP: 182.148.15.239

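OS: CentOS 6.8

Note: LVS_Master and LVS_Backup act as the Director Servers.

LVS uses DR mode here, meaning inbound traffic passes through the Director Server and outbound traffic does not. This requires:

1) LVS_Master and LVS_Backup must bind the VIP on the NIC that normally serves traffic (here, the NIC on the 182.148.15.0 network segment), with the same netmask as that NIC.

2) Each back-end Real Server must bind the VIP on its local loopback interface lo (to prevent IP conflicts).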
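Because DR mode requires the VIP and the Director's real address to share a network segment, it can help to check this before deploying. The following is a small sketch of such a check. The helper names `ip_to_int` and `same_subnet` are hypothetical, and the netmask 255.255.255.224 is taken from the ifconfig output shown later in this post.

```shell
#!/bin/bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=.
    set -- $1                      # split the address into four octets
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# same_subnet IP_A IP_B NETMASK
# Succeeds when both addresses fall in the same network under NETMASK.
same_subnet() {
    local a b m
    a=$(ip_to_int "$1")
    b=$(ip_to_int "$2")
    m=$(ip_to_int "$3")
    [ $(( a & m )) -eq $(( b & m )) ]
}

# LVS_Master address and the VIP from the environment list above
same_subnet 182.148.15.237 182.148.15.239 255.255.255.224 && echo "same segment"
```

The same check applies to LVS_Backup (182.148.15.236) and to both Real Servers.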

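2) Deployment record

a) Deploying the MySQL master-master hot standby environment

For master-master hot standby between MySQL1 Real Server and MySQL2 Real Server, see the corresponding part of the Mysql+Keepalived dual-master hot standby high availability record.

b) Installing Keepalived

For installing Keepalived on LVS_Master and LVS_Backup, likewise see the corresponding part of that record.

c) Installing LVS

The installation steps are identical on LVS_Master and LVS_Backup.

First enable ip_forward on both machines:

[root@LVS_Master ~]# echo "1" > /proc/sys/net/ipv4/ip_forward

Download ipvsadm: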

[root@LVS_Master ~]# cd /usr/local/src/

[root@LVS_Master src]# wget http://www.linuxvirtualserver.org/software/kernel-2.6/ipvsadm-1.26.tar.gz

Install the following required packages:

[root@LVS_Master src]# yum install -y libnl* popt*

Check whether the LVS module is loaded:

[root@LVS_Master src]# modprobe -l |grep ipvs

Unpack and install:

[root@LVS_Master src]# ln -s /usr/src/kernels/2.6.32-431.5.1.el6.x86_64/ /usr/src/linux

[root@LVS_Master src]# tar -zxvf ipvsadm-1.26.tar.gz

[root@LVS_Master src]# cd ipvsadm-1.26

[root@LVS_Master ipvsadm-1.26]# make && make install

LVS is now installed. View the current LVS cluster:

[root@LVS_Master ipvsadm-1.26]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

d) Writing the LVS startup script /etc/init.d/realserver

1) On the MySQL1 Real Server

[root@MySQL1 ~]# vim /etc/init.d/realserver

#!/bin/sh

VIP=182.148.15.239

. /etc/rc.d/init.d/functions

case "$1" in

# Suppress ARP replies for the VIP and bind the VIP on the local loopback interface

start)

/sbin/ifconfig lo down

/sbin/ifconfig lo up

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

/sbin/sysctl -p >/dev/null 2>&1

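/sbin/ifconfig lo:0 $VIP netmask 255.255.255.255 up # Bind the VIP on the loopback interface with a host netmask, so this machine accepts traffic addressed to the same VIP that the Director Server holds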

/sbin/route add -host $VIP dev lo:0

echo "LVS-DR real server starts successfully.\n"

;;

stop)

/sbin/ifconfig lo:0 down

/sbin/route del $VIP >/dev/null 2>&1

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce

echo "LVS-DR real server stopped.\n"

;;

status)

isLoOn=`/sbin/ifconfig lo:0 | grep "$VIP"`

isRoOn=`/bin/netstat -rn | grep "$VIP"`

if [ "$isLoOn" = "" -a "$isRoOn" = "" ]; then

echo "LVS-DR real server is not running."

else

echo "LVS-DR real server is running."

fi

exit 3

;;

*)

echo "Usage: $0 {start|stop|status}"

exit 1

esac

exit 0

Add the LVS script to system startup:

[root@MySQL1 ~]# chmod +x /etc/init.d/realserver

[root@MySQL1 ~]# echo "/etc/init.d/realserver start" >> /etc/rc.d/rc.local

Start the LVS script:

[root@MySQL1 ~]# service realserver start

LVS-DR real server starts successfully.\n

Check the MySQL1 Real Server: the VIP is now bound on the local loopback interface lo.

[root@MySQL1 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 52:54:00:D1:27:75

inet addr:182.148.15.233 Bcast:182.148.15.255 Mask:255.255.255.224

inet6 addr: fe80::5054:ff:fed1:2775/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:598406 errors:0 dropped:0 overruns:0 frame:0

TX packets:12050 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:78790653 (75.1 MiB) TX bytes:33151764 (31.6 MiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:483 errors:0 dropped:0 overruns:0 frame:0

TX packets:483 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:55807 (54.4 KiB) TX bytes:55807 (54.4 KiB)

lo:0 Link encap:Local Loopback

inet addr:182.148.15.239 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU:65536 Metric:1

2) On the MySQL2 Real Server

[root@MySQL2 ~]# vim /etc/init.d/realserver //this script is identical on every back-end Real Server

#!/bin/sh

VIP=182.148.15.239

. /etc/rc.d/init.d/functions

case "$1" in

start)

/sbin/ifconfig lo down

/sbin/ifconfig lo up

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

/sbin/sysctl -p >/dev/null 2>&1

/sbin/ifconfig lo:0 $VIP netmask 255.255.255.255 up

/sbin/route add -host $VIP dev lo:0

echo "LVS-DR real server starts successfully.\n"

;;

stop)

/sbin/ifconfig lo:0 down

/sbin/route del $VIP >/dev/null 2>&1

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce

echo "LVS-DR real server stopped.\n"

;;

status)

isLoOn=`/sbin/ifconfig lo:0 | grep "$VIP"`

isRoOn=`/bin/netstat -rn | grep "$VIP"`

if [ "$isLoOn" = "" -a "$isRoOn" = "" ]; then

echo "LVS-DR real server is not running."

else

echo "LVS-DR real server is running."

fi

exit 3

;;

*)

echo "Usage: $0 {start|stop|status}"

exit 1

esac

exit 0

Add the LVS script to system startup and start it:

[root@MySQL2 ~]# chmod +x /etc/init.d/realserver

[root@MySQL2 ~]# echo "/etc/init.d/realserver start" >> /etc/rc.d/rc.local

[root@MySQL2 ~]# service realserver start

LVS-DR real server starts successfully.\n

[root@MySQL2 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 52:54:00:3B:33:8F

inet addr:182.148.15.238 Bcast:182.148.15.255 Mask:255.255.255.224

inet6 addr: fe80::5054:ff:fe3b:338f/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:135305 errors:0 dropped:0 overruns:0 frame:0

TX packets:11256 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:17338566 (16.5 MiB) TX bytes:892363 (871.4 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

lo:0 Link encap:Local Loopback

inet addr:182.148.15.239 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU:65536 Metric:1
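Besides the VIP on lo:0, each Real Server depends on the arp_ignore and arp_announce values that the realserver script writes under /proc. Here is a minimal sketch of a check for those values, which can be run on either Real Server after starting the script. The function name `check_realserver_arp` and its optional directory argument are assumptions made for illustration; the argument only exists so the function can be pointed at a test directory.

```shell
#!/bin/bash
# check_realserver_arp [CONF_DIR]
# Verifies arp_ignore=1 and arp_announce=2 for the "lo" and "all" entries.
# CONF_DIR defaults to /proc/sys/net/ipv4/conf.
check_realserver_arp() {
    local conf_dir=${1:-/proc/sys/net/ipv4/conf}
    local iface rc=0
    for iface in lo all; do
        if [ "$(cat "$conf_dir/$iface/arp_ignore" 2>/dev/null)" != "1" ]; then
            echo "$iface: arp_ignore is not 1"
            rc=1
        fi
        if [ "$(cat "$conf_dir/$iface/arp_announce" 2>/dev/null)" != "2" ]; then
            echo "$iface: arp_announce is not 2"
            rc=1
        fi
    done
    return $rc
}

# Typical use on a Real Server:
#   check_realserver_arp && echo "ARP settings OK"
```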

e) Configuring the iptables firewall

1) Both back-end machines, MySQL1 Real Server and MySQL2 Real Server, must open port 3306 in iptables.

[root@MySQL1 ~]# vim /etc/sysconfig/iptables

......

-A INPUT -m state --state NEW -m tcp -p tcp --dport 3306 -j ACCEPT

[root@MySQL1 ~]# /etc/init.d/iptables restart

2) LVS_Master and LVS_Backup must add iptables rules that allow traffic to the VRRP multicast address.

Note: this is mandatory. Without it, the VIP can drift erratically between the nodes when a failure occurs.

[root@LVS_Master ~]# vim /etc/sysconfig/iptables //set this on both LVS machines

.......

# Allow traffic to the VRRP multicast address

-A INPUT -s 182.148.15.0/24 -d 224.0.0.18 -j ACCEPT

# Allow VRRP (Virtual Router Redundancy Protocol) traffic

-A INPUT -s 182.148.15.0/24 -p vrrp -j ACCEPT

-A INPUT -m state --state NEW -m tcp -p tcp --dport 3306 -j ACCEPT

[root@LVS_Master ~]# /etc/init.d/iptables restart
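Since a missing VRRP rule is easy to overlook, a quick check of the rules file on each LVS machine may be worthwhile. The sketch below only greps the file for an ACCEPT rule on protocol vrrp (IP protocol number 112). The function name `has_vrrp_rule` is hypothetical, and the pattern assumes rules are written in the `-A INPUT ... -p vrrp ... -j ACCEPT` form used above.

```shell
#!/bin/bash
# has_vrrp_rule FILE
# Succeeds if FILE contains an INPUT rule that accepts protocol vrrp (or 112).
has_vrrp_rule() {
    grep -Eq -- '^-A INPUT .*-p (vrrp|112) .*-j ACCEPT' "$1"
}

# Typical use on LVS_Master and LVS_Backup:
#   has_vrrp_rule /etc/sysconfig/iptables || echo "VRRP rule missing"
```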

3) Configuring LVS+Keepalived

1) On LVS_Master

[root@LVS_Master ~]# vim /etc/keepalived/keepalived.conf

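! Configuration File for keepalived

global_defs {

router_id LVS_Master

}

vrrp_instance VI_1 {

state MASTER # initial state of the instance; the actual role is decided by priority. Differs on the backup node

interface eth0 # NIC that carries the virtual IP

virtual_router_id 51 # VRID; nodes sharing a VRID form one group, and the VRID determines the VRRP MAC address

priority 100 # priority; set to 90 on the other machine. Differs on the backup node

advert_int 1 # advertisement (check) interval in seconds

authentication {

auth_type PASS # authentication type, either PASS or AH

auth_pass 1111 # authentication password

}

virtual_ipaddress {

182.148.15.239 # VIP

}

}

virtual_server 182.148.15.239 3306 {

delay_loop 6 # health-check polling interval in seconds

lb_algo wrr # LVS scheduling algorithm, weighted round robin here: rr|wrr|lc|wlc|lblc|sh|dh

lb_kind DR # LVS forwarding mode NAT|DR|TUN; DR mode requires the load balancer to have a NIC on the same network segment as the physical NIC

#nat_mask 255.255.255.0

persistence_timeout 50 # session persistence time in seconds

protocol TCP # protocol of the virtual service

## Real Server settings; 3306 is the MySQL port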

real_server 182.148.15.233 3306 {

weight 3 # weight

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 3306

}

}

real_server 182.148.15.238 3306 {

weight 3

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 3306

}

}

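}

Start keepalived:

[root@LVS_Master ~]# /etc/init.d/keepalived start

Starting keepalived: [ OK ]

Note the change to the network interfaces at this point: the virtual IP has now been assigned.

Now check the LVS cluster status. The cluster has two Real Servers, and the output shows the scheduling algorithm, the weights, and other details. ActiveConn is the number of active connections on each Real Server.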

[root@LVS_Master ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 182.148.15.239:3306 wrr persistent 50

-> 182.148.15.233:3306 Route 3 1 0

-> 182.148.15.238:3306 Route 3 0 0

2) On LVS_Backup

[root@LVS_Backup ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_Backup

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

182.148.15.239

}

}

virtual_server 182.148.15.239 3306 {

delay_loop 6

lb_algo wrr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 182.148.15.233 3306 {

weight 3

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 3306

}

}

real_server 182.148.15.238 3306 {

weight 3

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 3306

}

}

}

Start keepalived:

[root@LVS_Backup ~]# /etc/init.d/keepalived start

Starting keepalived: [ OK ]

[root@LVS_Backup ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 182.148.15.239:3306 wrr persistent 50

-> 182.148.15.233:3306 Route 3 0 0

-> 182.148.15.238:3306 Route 3 0 0
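For orientation, the table that Keepalived loads from its virtual_server block corresponds roughly to a handful of ipvsadm commands. The sketch below only prints those commands rather than running them, because Keepalived already manages the IPVS table and adding the same entries by hand would conflict with it. The function name `print_ipvs_dr_commands` is made up for illustration; the flags used (-A, -a, -t, -r, -s, -p, -g, -w) are standard ipvsadm options, with -g selecting direct routing.

```shell
#!/bin/bash
# print_ipvs_dr_commands VIP PORT SCHEDULER PERSIST_SECONDS WEIGHT REAL_IP...
# Prints the ipvsadm commands that would build an equivalent DR-mode service.
print_ipvs_dr_commands() {
    local vip=$1 port=$2 sched=$3 persist=$4 weight=$5 rip
    shift 5
    # One virtual service with the chosen scheduler and persistence timeout
    echo "ipvsadm -A -t $vip:$port -s $sched -p $persist"
    # One real server entry per back end, forwarded with direct routing (-g)
    for rip in "$@"; do
        echo "ipvsadm -a -t $vip:$port -r $rip:$port -g -w $weight"
    done
}

print_ipvs_dr_commands 182.148.15.239 3306 wrr 50 3 182.148.15.233 182.148.15.238
```

The printed values mirror the keepalived.conf settings above: wrr scheduling, a 50-second persistence timeout, and weight 3 for both Real Servers.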

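At this point the LVS+Keepalived+MySQL master-master replication setup is complete.

4) Testing and verification

1) Functional verification first

a) Stop mysql on the MySQL2 Real Server

[root@MySQL2 ~]# /etc/init.d/mysql stop

Shutting down MySQL.. SUCCESS!

On LVS_Master, check the keepalived entries in /var/log/messages. LVS_Master detected that MySQL2 Real Server was down, and the LVS cluster automatically removed the failed node.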

[root@LVS_Master ~]# tail -f /var/log/messages

.......

Apr 26 15:22:19 test3-237 Keepalived_healthcheckers[4606]: TCP connection to [182.148.15.238]:3306 failed.

Apr 26 15:22:19 test3-237 Keepalived_vrrp[4608]: Sending gratuitous ARP on eth0 for 182.148.15.239

.......

[root@LVS_Master ~]# ipvsadm -ln //LVS has removed MySQL2 Real Server

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 182.148.15.239:3306 wrr persistent 50

-> 182.148.15.233:3306 Route 3 1 0

After MySQL2 Real Server is restarted, the recovered node is automatically added back to the LVS cluster.

[root@LVS_Master ~]# tail -f /var/log/messages

.......

Apr 26 15:23:49 test3-237 Keepalived_healthcheckers[4606]: TCP connection to [182.148.15.238]:3306 success.

Apr 26 15:23:49 test3-237 Keepalived_healthcheckers[4606]: Adding service [182.148.15.238]:3306 to VS [182.148.15.239]:3306

.......

[root@LVS_Master ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 182.148.15.239:3306 wrr persistent 50

-> 182.148.15.233:3306 Route 3 1 0

-> 182.148.15.238:3306 Route 3 1 0

b) Stop Keepalived on LVS_Master (simulating a crash) and check the log on LVS_Master. Keepalived has removed the VIP from LVS_Master.

[root@LVS_Master ~]# /etc/init.d/keepalived stop

Stopping keepalived: [ OK ]

[root@LVS_Master ~]# tail -f /var/log/messages

........

Apr 26 15:29:38 test3-237 Keepalived[4976]: Stopping

Apr 26 15:29:38 test3-237 Keepalived_vrrp[4979]: VRRP_Instance(VI_1) sent 0 priority

Apr 26 15:29:38 test3-237 Keepalived_vrrp[4979]: VRRP_Instance(VI_1) removing protocol VIPs.

Apr 26 15:29:38 test3-237 Keepalived_healthcheckers[4977]: Removing service [182.148.15.233]:3306 from VS [182.148.15.239]:3306

Apr 26 15:29:38 test3-237 Keepalived_healthcheckers[4977]: Removing service [182.148.15.238]:3306 from VS [182.148.15.239]:3306

Apr 26 15:29:38 test3-237 Keepalived_healthcheckers[4977]: Stopped

Apr 26 15:29:38 test3-237 kernel: IPVS: __ip_vs_del_service: enter

Apr 26 15:29:39 test3-237 Keepalived_vrrp[4979]: Stopped

Apr 26 15:29:39 test3-237 Keepalived[4976]: Stopped Keepalived v1.3.5 (03/19,2017), git commit v1.3.5-6-g6fa32f2

[root@LVS_Master ~]# ip addr //the VIP is no longer on this machine

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:68:dc:b6 brd ff:ff:ff:ff:ff:ff

inet 182.148.15.237/27 brd 182.148.15.255 scope global eth0

inet 182.148.15.239/27 brd 182.148.15.255 scope global secondary eth0:0

inet6 fe80::5054:ff:fe68:dcb6/64 scope link

valid_lft forever preferred_lft forever

Meanwhile, the log on LVS_Backup shows that LVS_Backup has become Master and taken over the VIP.

[root@LVS_Backup ~]# tail -f /var/log/messages

.....

Apr 26 15:26:41 test4-236 Keepalived_vrrp[4711]: VRRP_Instance(VI_1) Transition to MASTER STATE

Apr 26 15:26:42 test4-236 Keepalived_vrrp[4711]: VRRP_Instance(VI_1) Entering MASTER STATE

Apr 26 15:26:42 test4-236 Keepalived_vrrp[4711]: VRRP_Instance(VI_1) setting protocol VIPs.

Apr 26 15:26:42 test4-236 Keepalived_vrrp[4711]: Sending gratuitous ARP on eth0 for 182.148.15.239

Apr 26 15:26:42 test4-236 Keepalived_vrrp[4711]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth0 for 182.148.15.239

[root@LVS_Backup ~]# ip addr //the VIP has moved to LVS_Backup

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:90:ac:0f brd ff:ff:ff:ff:ff:ff

inet 182.148.15.236/27 brd 182.148.15.255 scope global eth0

inet 182.148.15.239/32 scope global eth0

inet6 fe80::5054:ff:fe90:ac0f/64 scope link

valid_lft forever preferred_lft forever

On LVS_Backup, check the LVS cluster status. Everything is normal.

[root@LVS_Backup ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 182.148.15.239:3306 wrr persistent 50

-> 182.148.15.233:3306 Route 3 0 0

-> 182.148.15.238:3306 Route 3 1 1

Next, restore Keepalived on LVS_Master. The VIP moves back from LVS_Backup, that is, LVS_Master takes over the service again.

[root@LVS_Master ~]# tail -f /var/log/messages

.......

Apr 26 15:37:14 test3-237 Keepalived_vrrp[5263]: VRRP_Instance(VI_1) Transition to MASTER STATE

Apr 26 15:37:15 test3-237 Keepalived_vrrp[5263]: VRRP_Instance(VI_1) Entering MASTER STATE

Apr 26 15:37:15 test3-237 Keepalived_vrrp[5263]: VRRP_Instance(VI_1) setting protocol VIPs.

Apr 26 15:37:15 test3-237 Keepalived_vrrp[5263]: Sending gratuitous ARP on eth0 for 182.148.15.239

Apr 26 15:37:15 test3-237 Keepalived_vrrp[5263]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth0 for 182.148.15.239

[root@LVS_Master ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:68:dc:b6 brd ff:ff:ff:ff:ff:ff

inet 182.148.15.237/27 brd 182.148.15.255 scope global eth0

inet 182.148.15.239/32 scope global eth0

inet 182.148.15.239/27 brd 182.148.15.255 scope global secondary eth0:0

inet6 fe80::5054:ff:fe68:dcb6/64 scope link

valid_lft forever preferred_lft forever

-----------------------------------------------------------------------------------------
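The failover and failback seen in these logs follow from the VRRP priorities in keepalived.conf: among the nodes that are currently alive, the one with the highest priority holds the VIP. Keepalived preempts by default, which is why LVS_Master (priority 100) reclaims the VIP from LVS_Backup (priority 90) once it returns. The sketch below is a simplified illustration of that rule only. The function name `elect_master` is hypothetical, and real VRRP also handles ties and advertisement timers, which are left out here.

```shell
#!/bin/bash
# elect_master NAME:PRIORITY...
# Prints the name with the highest priority among the live nodes listed.
elect_master() {
    local best_name="" best_prio=-1 entry name prio
    for entry in "$@"; do
        name=${entry%%:*}          # text before the first colon
        prio=${entry##*:}          # text after the last colon
        if [ "$prio" -gt "$best_prio" ]; then
            best_name=$name
            best_prio=$prio
        fi
    done
    echo "$best_name"
}

elect_master LVS_Master:100 LVS_Backup:90   # both alive: prints LVS_Master
elect_master LVS_Backup:90                  # master down: prints LVS_Backup
```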

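2) Next, verify high availability of the MySQL master-master hot standby

On both MySQL1 Real Server and MySQL2 Real Server, grant privileges in mysql so that remote clients can connect.

mysql> grant all on *.* to test@'%' identified by "123456";

Query OK, 0 rows affected (0.03 sec)

mysql> flush privileges;

Testing shows that in both functional tests above, whether keepalived on LVS_Master is stopped (crash) or mysql on the back-end MySQL2 Real Server is stopped, a remote client can still connect to mysql through the VIP. (If a remote client has an open mysql connection when keepalived on LVS_Master stops, disconnecting and reconnecting once is enough.)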

[root@bastion-IDC ~]# mysql -h182.148.15.239 -utest -p123456

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 856

Server version: 5.6.34-log Source distribution

Copyright (c) 2000, 2013, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> select * from huanqiu.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 22 | huihui |

| 23 | bobo |

+----+-----------+

4 rows in set (0.00 sec)
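The TCP_CHECK used in keepalived.conf amounts to a timed TCP connect to port 3306 on each Real Server. When debugging from a client or a Director, a similar probe can be approximated in bash. This is a sketch, assuming bash with /dev/tcp support and the coreutils `timeout` command are available; the function name `tcp_check` is made up and is not a Keepalived tool.

```shell
#!/bin/bash
# tcp_check HOST PORT [TIMEOUT_SECONDS]
# Succeeds if a TCP connection to HOST:PORT opens within the timeout (default 3).
tcp_check() {
    local host=$1 port=$2 timeout_s=${3:-3}
    # The connection is opened in a child bash and closed when that child exits.
    timeout "$timeout_s" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# Typical use against the VIP or a single Real Server:
#   tcp_check 182.148.15.239 3306 && echo "reachable" || echo "unreachable"
```

Unlike the mysql client test above, this only confirms that the port accepts connections; it does not verify credentials or replication health.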

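Summary:

1) MySQL master-master replication is the foundation of the cluster. The nodes form the Server Array, and each node acts as a Real Server.

2) The LVS servers provide load balancing by distributing user requests to the Real Servers; the failure of one Real Server does not affect the cluster as a whole.

3) Keepalived sets up master and backup LVS servers, which avoids a single point of failure on the LVS tier and switches automatically to a healthy node when a failure occurs.

4) The VRRP virtual IP address and the interface's real IP must be on the same network segment. If the two real VRRP interfaces are on different segments, they cannot form a master/backup pair, because:

VRRP uses multicast, which works on essentially the same principle as broadcast but with a narrower scope, so that only a few servers receive it. Broadcast has to happen at layer 2; once traffic crosses layer 3, it has left the broadcast domain.

The IP switchover in VRRP relies on ARP spoofing (gratuitous ARP); without it, many machines would lose connectivity when the VIP moves from master to backup. ARP spoofing likewise cannot be done directly between two machines separated by a layer-3 boundary.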
