Apache Hadoop YARN – NodeManager--转载

原文地址:http://zh.hortonworks.com/blog/apache-hadoop-yarn-nodemanager/

The NodeManager (NM) is YARN’s per-node agent, and takes care of the individual compute nodes in a Hadoop cluster. This includes keeping up-to date with the ResourceManager (RM), overseeing containers’ life-cycle management; monitoring resource usage (memory, CPU) of individual containers, tracking node-health, log’s management and auxiliary services which may be exploited by different YARN applications.

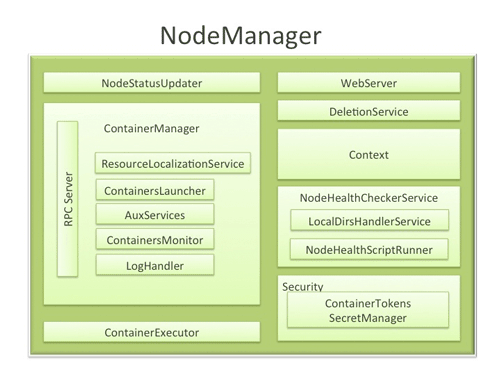

NodeManager Components

- NodeStatusUpdater

On startup, this component registers with the RM and sends information about the resources available on the nodes. Subsequent NM-RM communication is to provide updates on container statuses – new containers running on the node, completed containers, etc.

In addition the RM may signal the NodeStatusUpdater to potentially kill already running containers.

- ContainerManager

This is the core of the NodeManager. It is composed of the following sub-components, each of which performs a subset of the functionality that is needed to manage containers running on the node.

- RPC server: ContainerManager accepts requests from Application Masters (AMs) to start new containers, or to stop running ones. It works with ContainerTokenSecretManager (described below) to authorize all requests. All the operations performed on containers running on this node are written to an audit-log which can be post-processed by security tools.

- ResourceLocalizationService: Responsible for securely downloading and organizing various file resources needed by containers. It tries its best to distribute the files across all the available disks. It also enforces access control restrictions of the downloaded files and puts appropriate usage limits on them.

- ContainersLauncher: Maintains a pool of threads to prepare and launch containers as quickly as possible. Also cleans up the containers’ processes when such a request is sent by the RM or the ApplicationMasters (AMs).

- AuxServices: The NM provides a framework for extending its functionality by configuring auxiliary services. This allows per-node custom services that specific frameworks may require, and still sandbox them from the rest of the NM. These services have to be configured before NM starts. Auxiliary services are notified when an application’s first container starts on the node, and when the application is considered to be complete.

- ContainersMonitor: After a container is launched, this component starts observing its resource utilization while the container is running. To enforce isolation and fair sharing of resources like memory, each container is allocated some amount of such a resource by the RM. The ContainersMonitor monitors each container’s usage continuously and if a container exceeds its allocation, it signals the container to be killed. This is done to prevent any runaway container from adversely affecting other well-behaved containers running on the same node.

- LogHandler: A pluggable component with the option of either keeping the containers’ logs on the local disks or zipping them together and uploading them onto a file-system.

- ContainerExecutor

Interacts with the underlying operating system to securely place files and directories needed by containers and subsequently to launch and clean up processes corresponding to containers in a secure manner.

- NodeHealthCheckerService

Provides functionality of checking the health of the node by running a configured script frequently. It also monitors the health of the disks specifically by creating temporary files on the disks every so often. Any changes in the health of the system are notified to NodeStatusUpdater (described above) which in turn passes on the information to the RM.

- Security

- ApplicationACLsManagerNM needs to gate the user facing APIs like container-logs’ display on the web-UI to be accessible only to authorized users. This component maintains the ACLs lists per application and enforces them whenever such a request is received.

- ContainerTokenSecretManager: verifies various incoming requests to ensure that all the incoming operations are indeed properly authorized by the RM.

- WebServer

Exposes the list of applications, containers running on the node at a given point of time, node-health related information and the logs produced by the containers.

Spotlight on Key Functionality

- Container Launch

To facilitate container launch, the NM expects to receive detailed information about a container’s runtime as part of the container-specifications. This includes the container’s command line, environment variables, a list of (file) resources required by the container and any security tokens.

On receiving a container-launch request – the NM first verifies this request, if security is enabled, to authorize the user, correct resources assignment, etc. The NM then performs the following set of steps to launch the container.

- A local copy of all the specified resources is created (Distributed Cache).

- Isolated work directories are created for the container, and the local resources are made available in these directories.

- The launch environment and command line is used to start the actual container.

- Log Aggregation

Handling user-logs has been one of the big pain-points for Hadoop installations in the past. Instead of truncating user-logs, and leaving them on individual nodes like the TaskTracker, the NM addresses the logs’ management issue by providing the option to move these logs securely onto a file-system (FS), for e.g. HDFS, after the application completes.

Logs for all the containers belonging to a single Application and that ran on this NM are aggregated and written out to a single (possibly compressed) log file at a configured location in the FS. Users have access to these logs via YARN command line tools, the web-UI or directly from the FS.

- How MapReduce shuffle takes advantage of NM’s Auxiliary-services

The Shuffle functionality required to run a MapReduce (MR) application is implemented as an Auxiliary Service. This service starts up a Netty Web Server, and knows how to handle MR specific shuffle requests from Reduce tasks. The MR AM specifies the service id for the shuffle service, along with security tokens that may be required. The NM provides the AM with the port on which the shuffle service is running which is passed onto the Reduce tasks.

Conclusion

In YARN, the NodeManager is primarily limited to managing abstract containers i.e. only processes corresponding to a container and not concerning itself with per-application state management like MapReduce tasks. It also does away with the notion of named slots like map and reduce slots. Because of this clear separation of responsibilities coupled with the modular architecture described above, NM can scale much more easily and its code is much more maintainable.

Apache Hadoop YARN – NodeManager--转载的更多相关文章

- Apache Hadoop YARN: 背景及概述

从2012年8月开始Apache Hadoop YARN(YARN = Yet Another Resource Negotiator)成了Apache Hadoop的一项子工程.自此Apache H ...

- hadoop错误org.apache.hadoop.yarn.exceptions.YarnException Unauthorized request to start container

错误: 14/04/29 02:45:07 INFO mapreduce.Job: Job job_1398704073313_0021 failed with state FAILED due to ...

- spark on yarn 动态资源分配报错的解决:org.apache.hadoop.yarn.exceptions.InvalidAuxServiceException: The auxService:spark_shuffle does not exist

组件:cdh5.14.0 spark是自己编译的spark2.1.0-cdh5.14.0 第一步:确认spark-defaults.conf中添加了如下配置: spark.shuffle.servic ...

- org.apache.hadoop.yarn.exceptions.InvalidAuxServiceException: The auxService: mapreduce_shuffle do

在yarn-site.xml 配置文件中增加: <property> <name>yarn.nodemanager.aux-services</name> < ...

- Hadoop - YARN NodeManager 剖析

一 概述 NodeManager是执行在单个节点上的代理,它管理Hadoop集群中单个计算节点,功能包含与ResourceManager保持通信,管理Container的生命周期.监控 ...

- spark 笔记 4:Apache Hadoop YARN: Yet Another Resource Negotiator

spark支持YARN做资源调度器,所以YARN的原理还是应该知道的:http://www.socc2013.org/home/program/a5-vavilapalli.pdf 但总体来说, ...

- Apache Hadoop YARN – ResourceManager--转载

原文地址:http://zh.hortonworks.com/blog/apache-hadoop-yarn-resourcemanager/ ResourceManager (RM) is the ...

- Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/yarn/exceptions/YarnException

这个是Flink 1.11.1 使用yarn-session 出现的错误:原因是在Flink1.11 之后不再提供flink-shaded-hadoop-*” jars 需要在yarn-sessio ...

- Caused by:java.lang.ClassNotFoundException:org.apache.hadoop.yarn.util.Apps

错误原因 缺少hadoop-yarn.jar包. 导入jar包就好了~-~

随机推荐

- 20155315 2016-2017-2 《Java程序设计》第四周学习总结

教材学习内容总结 1.继承与多态 Java中只有单一继承,也就是只能有一个父类; 多态即指一个父类可由多个子类继承. 继承可以复用代码,更大的用处是实现「多态」. 封装是继承的基础,继承是多态的基础 ...

- Ubuntu配置android环境

jdk:http://www.oracle.com/technetwork/cn/java/javase/downloads/index.html 安装JDK的步骤:http://jingyan.ba ...

- Spring MVC接受参数的注解

一.Request请求发出后,Headler Method是如何接收处理数据的? Headler Method绑定常用的参数注解,根据处理request的不同部分分为四类: A.处理 Request ...

- Mysql 开启Federated引擎的方法

原文参考:http://www.thinksaas.cn/topics/0/63/63532.html 进入mysql命令行,没有看到Federated,说明没有安装 mysql>show en ...

- javaweb总结(四十)——编写自己的JDBC框架

一.元数据介绍 元数据指的是"数据库"."表"."列"的定义信息. 1.1.DataBaseMetaData元数据 Connection.g ...

- 软考之信息安全工程师(包含2016-2018历年真题详解+官方指定教程+VIP视频教程)

软考-中级信息安全工程师2016-2018历年考试真题以及详细答案,同时含有信息安全工程师官方指定清华版教程.信息安全工程师高清视频教程.持续更新后续年份的资料.请点赞!!请点赞!!!绝对全部货真价实 ...

- Python解包参数列表及 Lambda 表达式

解包参数列表 当参数已经在python列表或元组中但需要为需要单独位置参数的函数调用解包时,会发生相反的情况.例如,内置的 range() 函数需要单独的 start 和 stop 参数.如果它们不能 ...

- 使用calendar日历插件实现动态展示会议信息

效果图如下,标红色为有会议安排,并跳转详细会议信息页面. html页面 <%@ page contentType="text/html;charset=UTF-8"%> ...

- mtv网站架构模式适合企业网站应用吗?

mtv网站架构模式适合企业网站应用吗?有时候在思考这样一个问题. 从开发角度来说,本来mvc的进度慢了些,如果在数据库管理方面用sql的话,管理起来也不很方便.小企业网本来数据就不很多,也没什么太多安 ...

- python实现lower_bound和upper_bound

由于对于二分法一直都不是很熟悉,这里就用C++中的lower_bound和upper_bound练练手.这里用python实现 lower_bound和upper_bound本质上用的就是二分法,lo ...