Python Web Scraping from Scratch (Part 5): the pyQuery Library

What is pyQuery?

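A powerful and flexible library for parsing web pages. If you find regular expressions tedious to write (I can't write them myself), if you find BeautifulSoup's syntax hard to remember, and if you already know jQuery syntax, then PyQuery is the best choice for you.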

Installing pyQuery: just run pip3 install pyquery.

Basic usage of pyQuery:

Initialization:

Initializing from a string:

#!/usr/bin/env python

# -*- coding: utf-8 -*- html = """

<html><head><title>The Dormouse's story</head>

<body>

<p class="title" name="dromouse"><b>The Dormouse's story</b></p>

<p class="story">Once upon a time there were three little sisters;and thier names were

<a href ="http://example.com/elsie" class="sister" id="link1"><!-- Elsie --></a>

<a href ="http://example.com/lacle" class="sister" id="link2">Lacie</a> and

<a href ="http://example.com/title" class="sister" id="link3">Title</a>; and they lived at the boottom of a well.</p>

<p class="story">...</p>

""" from pyquery import PyQuery as pq

doc = pq(html)

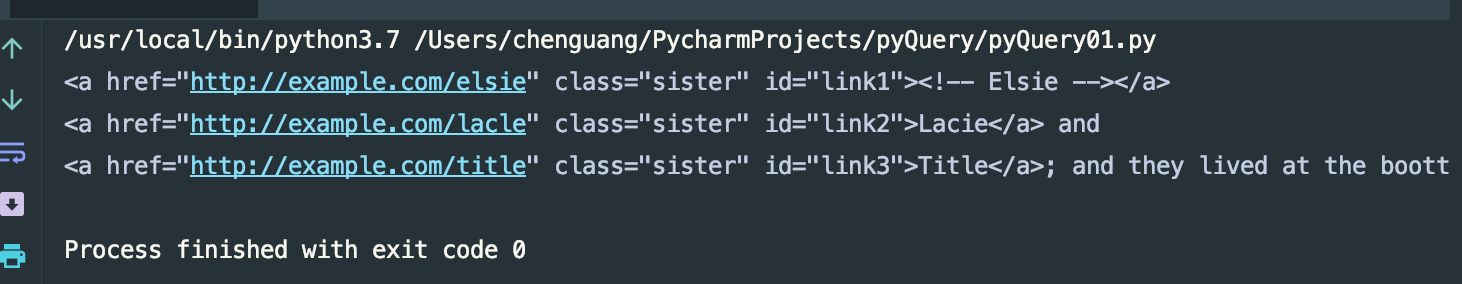

print(doc('a'))

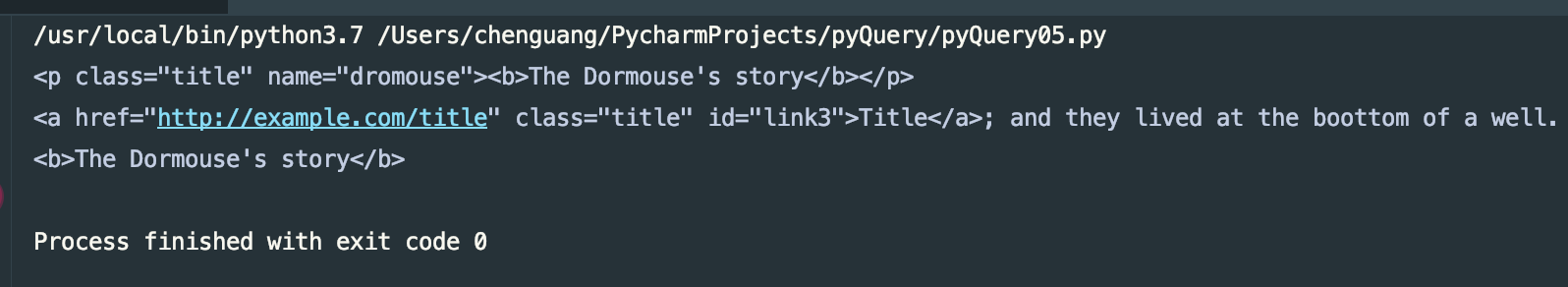

运行结果:

Initializing from a URL:

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# URL初始化 from pyquery import PyQuery as pq

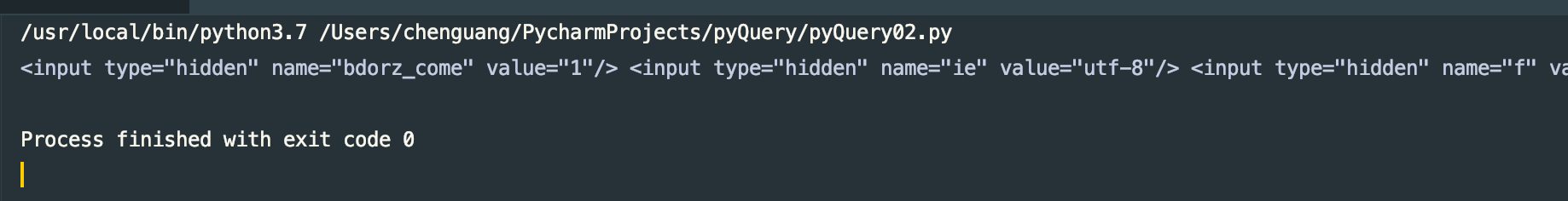

doc = pq('http://www.baidu.com')

print(doc('input'))

运行结果:

Initializing from a file:

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# 文件初始化 from pyquery import PyQuery as pq

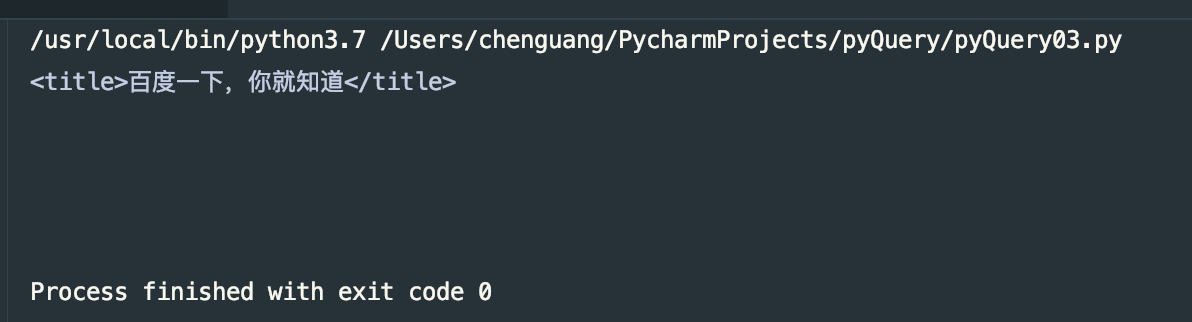

doc = pq(filename='baidu.html')

print(doc('title'))

运行结果:

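Selectors follow jQuery syntax: you can select by id (#id), by class (.class), by attribute such as name ([name=...]), and most other jQuery/CSS selectors work the same way.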

Basic CSS selectors:

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# Css选择器 html = """

<html><head><title>The Dormouse's story</head>

<body>

<p class="title" name="dromouse"><b>The Dormouse's story</b></p>

<p class="story">Once upon a time there were three little sisters;and thier names were

<a href ="http://example.com/elsie" class="sister" id="link1"><!-- Elsie --></a>

<a href ="http://example.com/lacle" class="sister" id="link2">Lacie</a> and

<a href ="http://example.com/title" class="title" id="link3">Title</a>; and they lived at the boottom of a well.</p>

<p class="story">...</p>

"""

from pyquery import PyQuery as pq

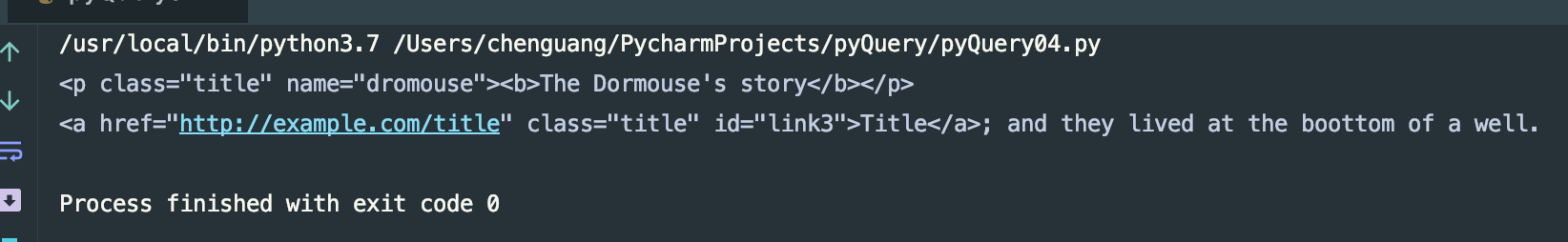

doc = pq(html)

print(doc('.title'))

运行结果:

Finding elements:

Child elements:

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# 子元素 html = """

<html><head><title>The Dormouse's story</head>

<body>

<p class="title" name="dromouse"><b>The Dormouse's story</b></p>

<p class="story">Once upon a time there were three little sisters;and thier names were

<a href ="http://example.com/elsie" class="sister" id="link1"><!-- Elsie --></a>

<a href ="http://example.com/lacle" class="sister" id="link2">Lacie</a> and

<a href ="http://example.com/title" class="title" id="link3">Title</a>; and they lived at the boottom of a well.</p>

<p class="story">...</p>

"""

from pyquery import PyQuery as pq

doc = pq(html)

items = doc('.title')

print(type(items))

print(items)

p = items.find('b')

print(type(p))

print(p)

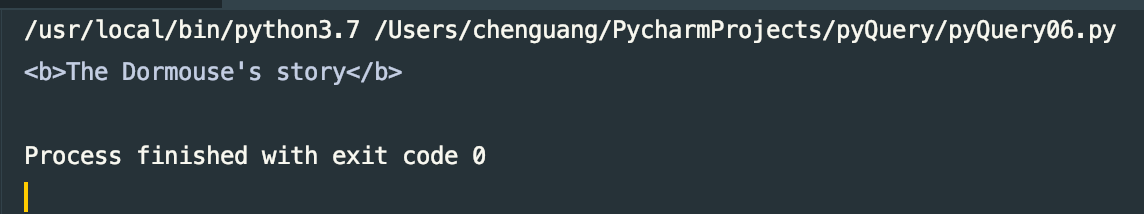

该代码为查找id为title的标签,我们可以看到id为title的标签有两个一个是p标签,一个是a标签,然后我们再使用find方法,查找出我们需要的p标签,运行结果:

Note that find() searches all descendants of each matched element, not just its direct children.

children:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Child elements with children()
from pyquery import PyQuery as pq

html = """
<html><head><title>The Dormouse's story</title></head>
<body>
<p class="title" name="dromouse"><b>The Dormouse's story</b></p>
<p class="story">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1"><!-- Elsie --></a>
<a href="http://example.com/lacle" class="sister" id="link2">Lacie</a> and
<a href="http://example.com/title" class="title" id="link3">Title</a>; and they lived at the bottom of a well.</p>
<p class="story">...</p>
"""

doc = pq(html)
items = doc('.title')
print(items.children())   # direct children of each matched element
```

You can also pass a selector to children() to filter the result:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Child elements filtered by a selector
from pyquery import PyQuery as pq

html = """
<html><head><title>The Dormouse's story</title></head>
<body>
<p class="title" name="dromouse"><b>The Dormouse's story</b></p>
<p class="story">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1"><!-- Elsie --></a>
<a href="http://example.com/lacle" class="sister" id="link2">Lacie</a> and
<a href="http://example.com/title" class="title" id="link3">Title</a>; and they lived at the bottom of a well.</p>
<p class="story">...</p>
"""

doc = pq(html)
items = doc('.title')
print(items.children('b'))   # only direct children that are <b> tags
```

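The output is the same as in the previous example, because the only direct child element of the .title elements is the b tag.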

Parent element:

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# 子元素 html = """

<html><head><title>The Dormouse's story</head>

<body>

<p class="title" name="dromouse"><b>The Dormouse's story</b></p>

<p class="story">Once upon a time there were three little sisters;and thier names were

<a href ="http://example.com/elsie" class="sister" id="link1"><!-- Elsie --></a>

<a href ="http://example.com/lacle" class="sister" id="link2">Lacie</a> and

<a href ="http://example.com/title" class="title" id="link3">Title</a>; and they lived at the boottom of a well.</p>

<p class="story">...</p>

"""

from pyquery import PyQuery as pq

doc = pq(html)

items = doc('#link1')

print(items)

print(items.parent())

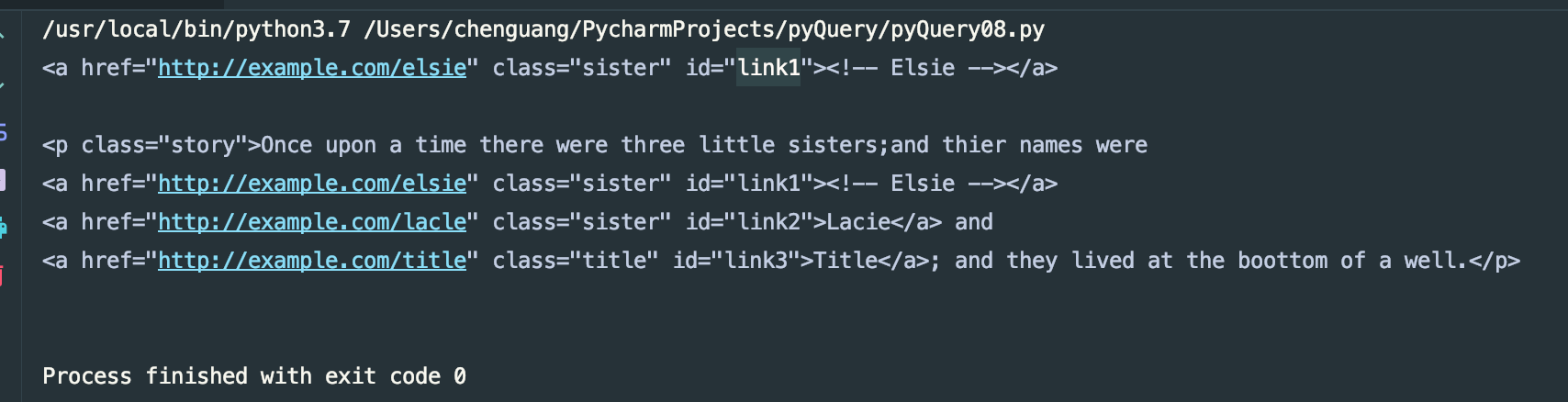

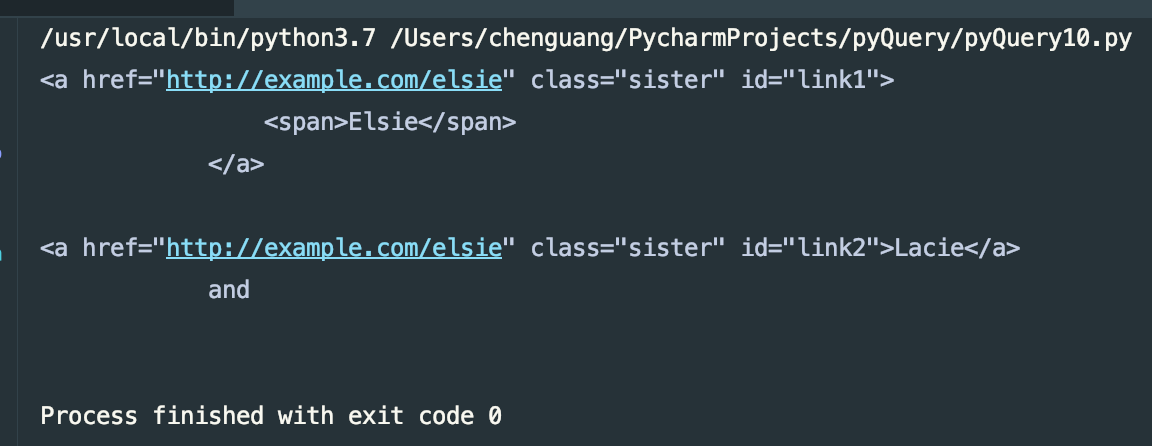

运行结果:

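parent() returns only the direct parent. To get all ancestors (parent, grandparent and so on), use parents(); you can pass a selector to keep only the matching ancestors: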

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# 祖先元素 html = """

<html>

<head>

<title>The Dormouse's story</title>

</head>

<body>

<p class="story" id="dromouse">Once upo a time were three little sister;and theru name were

<a href="http://example.com/elsie" class="sister" id="link1">

<span>Elsie</span>

</a>

<a href="http://example.com/elsie" class="sister" id="link2">Lacie</a>

and

<a href="http://example.com/elsie" class="sister" id="link3">Title</a>

<a href="http://example.com/elsie" class="body" id="link4">Title</a>

</p>

<p class="story">...</p>

"""

from pyquery import PyQuery as pq

doc = pq(html)

items = doc('#link1')

print(items)

print(items.parents('body'))

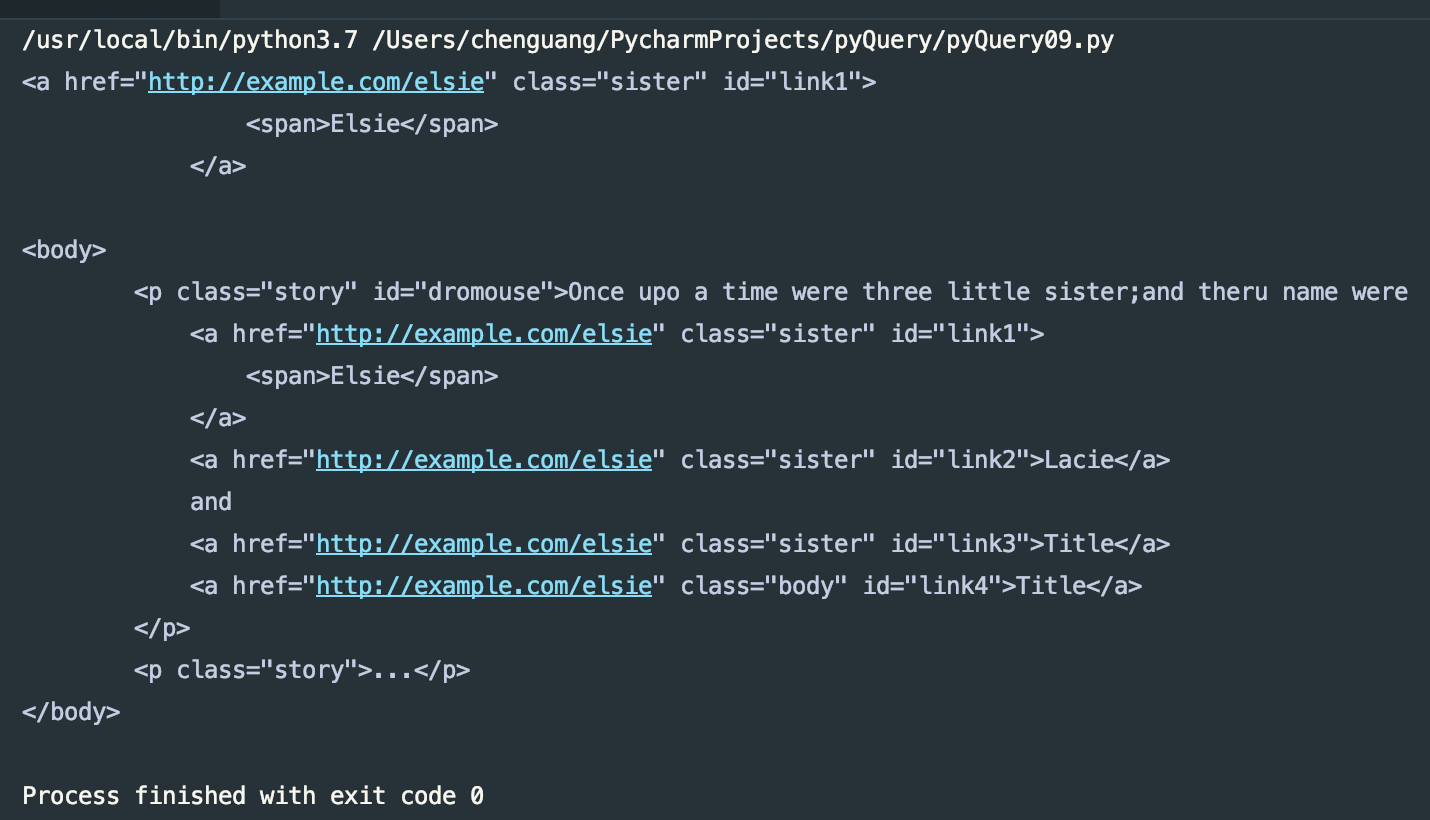

运行结果:

Sibling elements:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Sibling elements
from pyquery import PyQuery as pq

html = """
<html>
<head>
<title>The Dormouse's story</title>
</head>
<body>
<p class="story" id="dromouse">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">
<span>Elsie</span>
</a>
<a href="http://example.com/elsie" class="sister" id="link2">Lacie</a>
and
<a href="http://example.com/elsie" class="sister" id="link3">Title</a>
<a href="http://example.com/elsie" class="body" id="link4">Title</a>
</p>
<p class="story">...</p>
"""

doc = pq(html)
items = doc('#link1')
print(items)
print(items.siblings('#link2'))   # siblings of #link1, filtered down to #link2
```

That covers the main ways to find elements. Next, let's look at how to iterate over them.

Traversal:

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# 兄弟元素 html = """

<html>

<head>

<title>The Dormouse's story</title>

</head>

<body>

<p class="story" id="dromouse">Once upo a time were three little sister;and theru name were

<a href="http://example.com/elsie" class="sister" id="link1">

<span>Elsie</span>

</a>

<a href="http://example.com/elsie" class="sister" id="link2">Lacie</a>

and

<a href="http://example.com/elsie" class="sister" id="link3">Title</a>

<a href="http://example.com/elsie" class="body" id="link4">Title</a>

</p>

<p class="story">...</p>

"""

from pyquery import PyQuery as pq

doc = pq(html)

items = doc('a')

for k,v in enumerate(items.items()):

print(k,v)

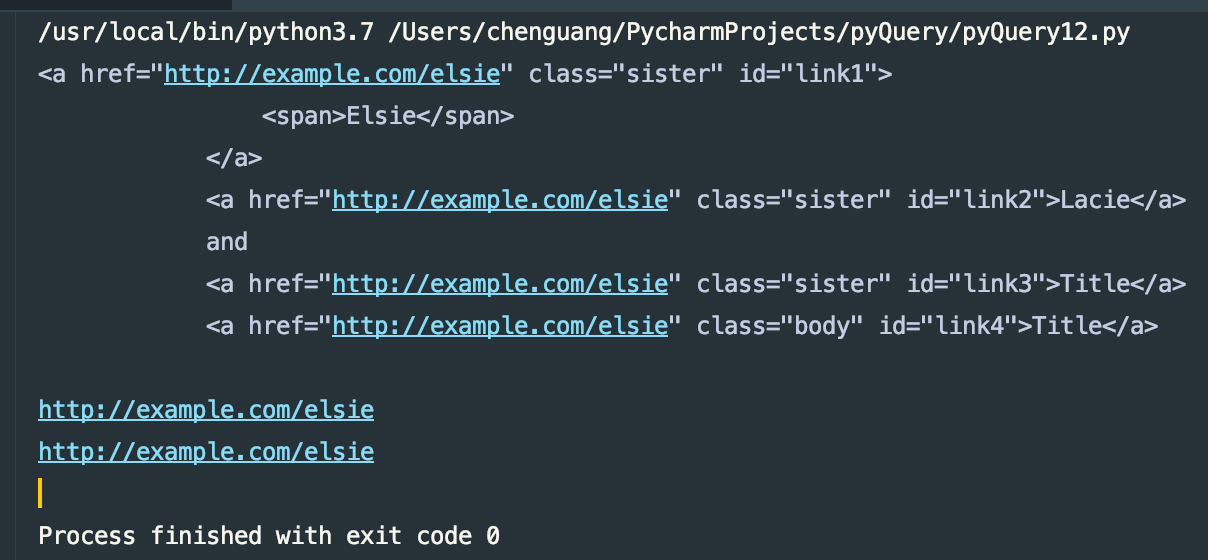

运行结果:

Extracting information:

Getting attributes:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Getting attributes
from pyquery import PyQuery as pq

html = """
<html>
<head>
<title>The Dormouse's story</title>
</head>
<body>
<p class="story" id="dromouse">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">
<span>Elsie</span>
</a>
<a href="http://example.com/elsie" class="sister" id="link2">Lacie</a>
and
<a href="http://example.com/elsie" class="sister" id="link3">Title</a>
<a href="http://example.com/elsie" class="body" id="link4">Title</a>
</p>
<p class="story">...</p>
"""

doc = pq(html)
items = doc('a')
print(items)
print(items.attr('href'))   # value taken from the first matched <a> only
print(items.attr.href)      # attribute-style shortcut, same result
```

Getting text:

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# 获取属性 html = """

<html>

<head>

<title>The Dormouse's story</title>

</head>

<body>

<p class="story" id="dromouse">Once upo a time were three little sister;and theru name were

<a href="http://example.com/elsie" class="sister" id="link1">

<span>Elsie</span>

</a>

<a href="http://example.com/elsie" class="sister" id="link2">Lacie</a>

and

<a href="http://example.com/elsie" class="sister" id="link3">Title</a>

<a href="http://example.com/elsie" class="body" id="link4">Title</a>

</p>

<p class="story">...</p>

"""

from pyquery import PyQuery as pq

doc = pq(html)

items = doc('a')

print(items)

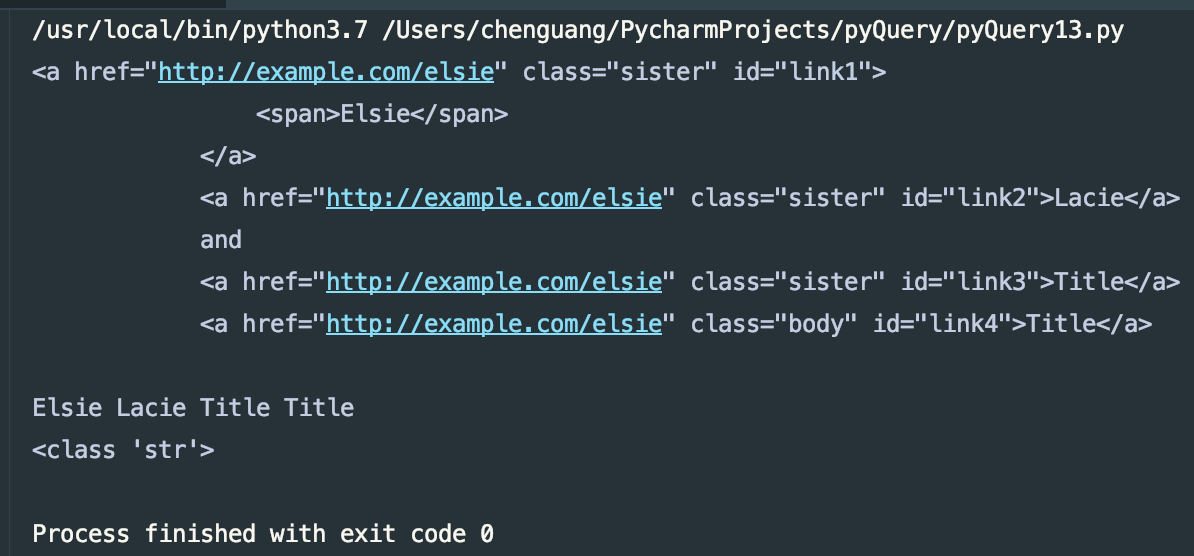

print(items.text())

print(type(items.text()))

运行结果:

Getting HTML:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Getting HTML
from pyquery import PyQuery as pq

html = """
<html>
<head>
<title>The Dormouse's story</title>
</head>
<body>
<p class="story" id="dromouse">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">
<span>Elsie</span>
</a>
<a href="http://example.com/elsie" class="sister" id="link2">Lacie</a>
and
<a href="http://example.com/elsie" class="sister" id="link3">Title</a>
<a href="http://example.com/elsie" class="body" id="link4">Title</a>
</p>
<p class="story">...</p>
"""

doc = pq(html)
items = doc('a')
print(items.html())   # inner HTML of the first matched <a> only
```

DOM manipulation:

addClass, removeClass:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# DOM manipulation: addClass, removeClass
from pyquery import PyQuery as pq

html = """
<html>
<head>
<title>The Dormouse's story</title>
</head>
<body>
<p class="story" id="dromouse">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">
<span>Elsie</span>
</a>
<a href="http://example.com/elsie" class="sister" id="link2">Lacie</a>
and
<a href="http://example.com/elsie" class="sister" id="link3">Title</a>
<a href="http://example.com/elsie" class="body" id="link4">Title</a>
</p>
<p class="story">...</p>
"""

doc = pq(html)
items = doc('#link2')
print(items)
items.addClass('addStyle')      # snake_case name: items.add_class('addStyle')
print(items)
items.remove_class('sister')    # camelCase name: items.removeClass('sister')
print(items)
```

attr, css:

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# DOM操作,attr,css html = """

<html>

<head>

<title>The Dormouse's story</title>

</head>

<body>

<p class="story" id="dromouse">Once upo a time were three little sister;and theru name were

<a href="http://example.com/elsie" class="sister" id="link1">

<span>Elsie</span>

</a>

<a href="http://example.com/elsie" class="sister" id="link2">Lacie</a>

and

<a href="http://example.com/elsie" class="sister" id="link3">Title</a>

<a href="http://example.com/elsie" class="body" id="link4">Title</a>

</p>

<p class="story">...</p>

"""

from pyquery import PyQuery as pq

doc = pq(html)

items = doc('#link2')

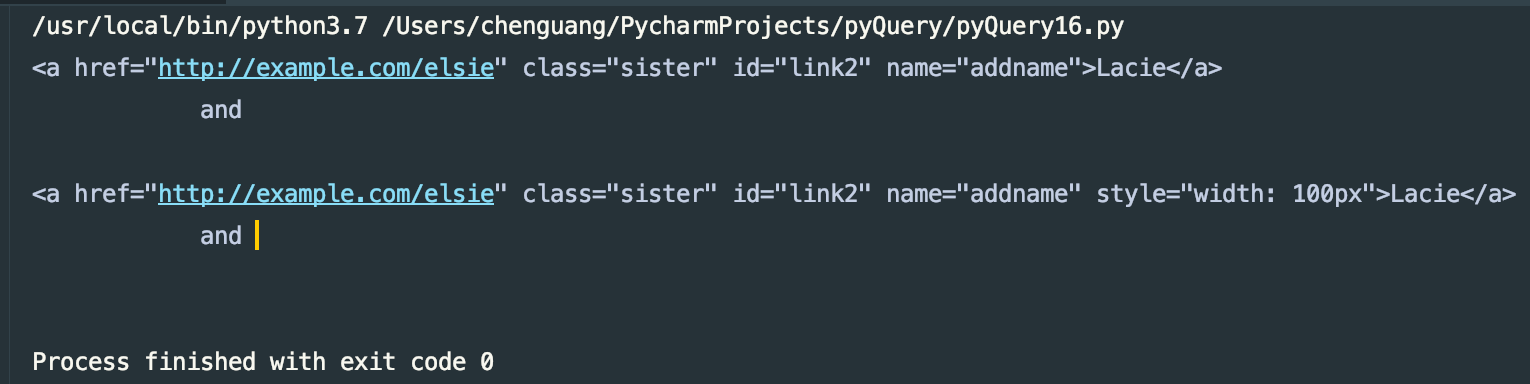

items.attr('name','addname')

print(items)

items.css('width','100px')

print(items)

可以给予新的属性,如果原来有该属性,会覆盖掉原有的属性

运行结果:

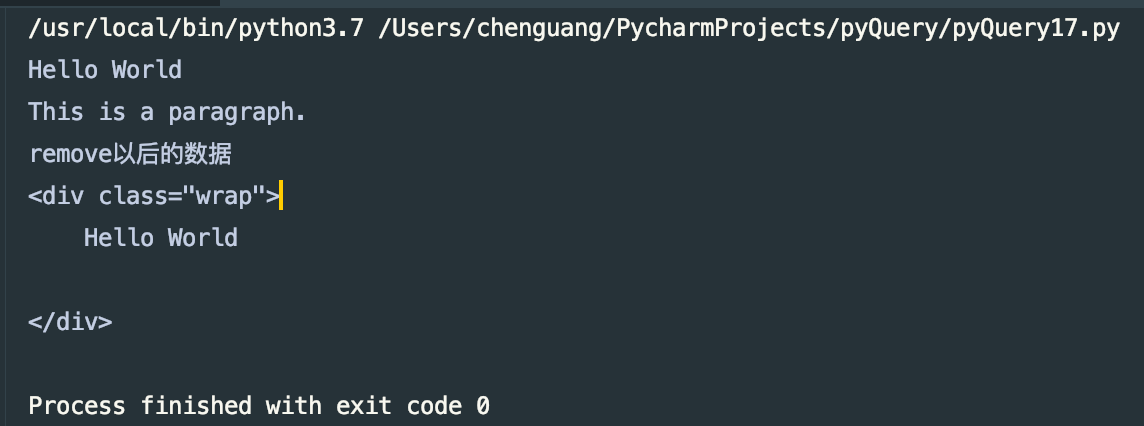

remove:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# DOM manipulation: remove
from pyquery import PyQuery as pq

html = """
<div class="wrap">
    Hello World
    <p>This is a paragraph.</p>
</div>
"""

doc = pq(html)
wrap = doc('.wrap')
print(wrap.text())
wrap.find('p').remove()   # delete the <p> element from the tree
print("After remove():")
print(wrap)
```

There are many other DOM methods; if you want to learn more, read the official API documentation: https://pyquery.readthedocs.io/en/latest/api.html

Pseudo-class selectors:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Pseudo-class selectors
from pyquery import PyQuery as pq

html = """
<html>
<head>
<title>The Dormouse's story</title>
</head>
<body>
<p class="story" id="dromouse">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">
<span>Elsie</span>
</a>
<a href="http://example.com/elsie" class="sister" id="link2">Lacie</a>
and
<a href="http://example.com/elsie" class="sister" id="link3">Title</a>
<a href="http://example.com/elsie" class="body" id="link4">Title</a>
</p>
<p class="story">...</p>
"""

doc = pq(html)
wrap = doc('a:first-child')       # <a> that is the first child element of its parent
print(wrap)
wrap = doc('a:last-child')        # <a> that is the last child element of its parent
print(wrap)
wrap = doc('a:nth-child(2)')      # <a> that is the second child element of its parent
print(wrap)
wrap = doc('a:gt(2)')             # matched <a> tags with index greater than 2; indices start at 0, not 1
print(wrap)
wrap = doc('a:nth-child(2n)')     # <a> at even child positions (2, 4, ...)
print(wrap)
wrap = doc('a:contains(Lacie)')   # <a> whose text contains "Lacie"
print(wrap)
```

I won't go through every selector here; for more CSS selectors, see the CSS tutorial on w3school: http://www.w3school.com.cn/css/index.asp

That roughly covers how to use pyQuery. For more detail, read the official documentation: https://pyquery.readthedocs.io/en/latest/

The code above is available at: https://gitee.com/dwyui/pyQuery.git

Thanks for reading. If anything here is wrong, corrections are very welcome. Thank you!
