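Deploying Kafka on a Kubernetes (k8s) Cluster

I. Deployment Steps

II. Deploy NFS and Mount the Shared Directories

Note: skip this step if you use a cloud provider's NAS storage.

1. Install NFS on the server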

[root@localhost ~]# yum -y install nfs-utils rpcbind

2. Create the shared directories and set permissions

[root@localhost ~]# mkdir -p /data/{kafka,zookeeper}

[root@localhost ~]# chmod 755 -R /data/*

3. Edit the exports configuration file

[root@localhost ~]# cat >> /etc/exports<<EOF

/data/kafka *(rw,sync,no_root_squash)

/data/zookeeper *(rw,sync,no_root_squash)

EOF

4. Start the services on the server

[root@localhost ~]# systemctl start rpcbind.service

[root@localhost ~]# systemctl enable rpcbind

[root@localhost ~]# systemctl status rpcbind

[root@localhost ~]# systemctl start nfs.service

[root@localhost ~]# systemctl enable nfs

[root@localhost ~]# systemctl status nfs

5. Install NFS on the client

[root@localhost ~]# yum -y install nfs-utils rpcbind

6. Start the services on the client

[root@localhost ~]# systemctl start rpcbind.service

[root@localhost ~]# systemctl enable rpcbind

[root@localhost ~]# systemctl status rpcbind

[root@localhost ~]# systemctl start nfs.service

[root@localhost ~]# systemctl enable nfs

[root@localhost ~]# systemctl status nfs

7. List the directories exported by the server

[root@localhost ~]# showmount -e 192.168.1.128

8. Mount the shared directories on the client

[root@localhost ~]# mkdir -p /data/{kafka,zookeeper}

[root@localhost ~]# mount 192.168.1.128:/data/kafka /data/kafka

[root@localhost ~]# mount 192.168.1.128:/data/zookeeper /data/zookeeper
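Before moving on, it can help to confirm that both directories really are NFS mounts. The following is a minimal sketch, not part of the original procedure: the helper name check_nfs_mounts is hypothetical, and it assumes the usual /proc/mounts layout (device, mount point, filesystem type, options). The mounts file is passed as a parameter so the check can also be tried on sample data.

```shell
#!/bin/sh
# check_nfs_mounts MOUNTS_FILE DIR...
# Hypothetical helper: prints OK or MISSING for each directory, depending on
# whether MOUNTS_FILE lists it as a mount point with an nfs* filesystem type.
# Returns 1 if at least one directory is missing.
check_nfs_mounts() (
  mounts_file=$1
  shift
  status=0
  for dir in "$@"; do
    # Fields in /proc/mounts: device mountpoint fstype options dump pass
    if awk -v d="$dir" '$2 == d && $3 ~ /^nfs/ { found = 1 } END { exit !found }' "$mounts_file"; then
      echo "OK      $dir"
    else
      echo "MISSING $dir"
      status=1
    fi
  done
  return "$status"
)
```

On the client, it would be called as `check_nfs_mounts /proc/mounts /data/kafka /data/zookeeper`.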

III. Deploy the ZooKeeper Cluster

1. Prepare the YAML file

[root@localhost ~]# cat > zp.yaml <<EOF

apiVersion: v1
kind: Namespace
metadata:
  name: middleware
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-provisioner
  namespace: middleware
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-provisioner-runner
  namespace: middleware
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services", "endpoints"]
    verbs: ["get", "create", "list", "watch", "update"]
  - apiGroups: ["extensions"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["nfs-provisioner"]
    verbs: ["use"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    namespace: middleware
roleRef:
  kind: ClusterRole
  name: nfs-provisioner-runner
  apiGroup: rbac.authorization.k8s.io

---

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: zk-nfs-storage
  namespace: middleware
mountOptions:
  - vers=4.0
  - nolock,tcp,noresvport
provisioner: zk/nfs
reclaimPolicy: Retain

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: zk-nfs-client-provisioner
  namespace: middleware
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zk-nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: zk-nfs-client-provisioner
    spec:
      serviceAccount: nfs-provisioner
      containers:
        - name: zk-nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/open-ali/nfs-client-provisioner
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes

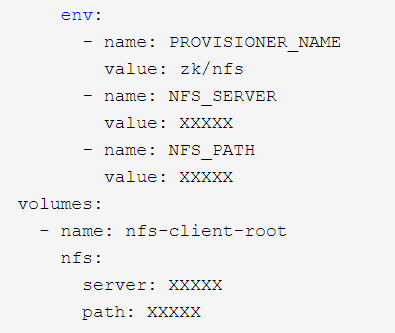

          env:
            - name: PROVISIONER_NAME
              value: zk/nfs
            - name: NFS_SERVER
              value: XXXXX
            - name: NFS_PATH
              value: XXXXX
      volumes:
        - name: nfs-client-root
          nfs:
            server: XXXXX
            path: XXXXX

---

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zk
  namespace: middleware
spec:
  replicas: 3
  selector:
    matchLabels:
      app: zk
  serviceName: zk-hs
  template:
    metadata:
      labels:
        app: zk
    spec:
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 1
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - zk
                topologyKey: kubernetes.io/hostname
      containers:

        - command:
            - sh
            - -c
            - "start-zookeeper --servers=3 \
              --data_dir=/var/lib/zookeeper/data \
              --data_log_dir=/var/lib/zookeeper/data/log \
              --conf_dir=/opt/zookeeper/conf \
              --client_port=2181 \
              --election_port=3888 \
              --server_port=2888 \
              --tick_time=2000 \
              --init_limit=10 \
              --sync_limit=5 \
              --heap=512M \
              --max_client_cnxns=60 \
              --snap_retain_count=3 \
              --purge_interval=12 \
              --max_session_timeout=40000 \
              --min_session_timeout=4000 \
              --log_level=INFO"

          image: fastop/zookeeper:3.4.10
          imagePullPolicy: Always
          livenessProbe:
            exec:
              command:
                - sh
                - -c
                - zookeeper-ready 2181
            failureThreshold: 3
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
          name: kubernetes-zookeeper
          ports:
            - containerPort: 2181
              name: client
              protocol: TCP
            - containerPort: 2888
              name: server
              protocol: TCP
            - containerPort: 3888
              name: leader-election
              protocol: TCP
          readinessProbe:
            exec:
              command:
                - sh
                - -c
                - zookeeper-ready 2181
            failureThreshold: 3
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
          resources:
            requests:
              cpu: 100m
              memory: 200Mi
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /var/lib/zookeeper
              name: datadir
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext:
        fsGroup: 1000
        runAsUser: 1000
      terminationGracePeriodSeconds: 30
      tolerations:
        - effect: NoSchedule
          key: travis.io/schedule-only
          operator: Equal
          value: kafka
        - effect: NoExecute
          key: travis.io/schedule-only
          operator: Equal
          tolerationSeconds: 3600
          value: kafka
        - effect: PreferNoSchedule
          key: travis.io/schedule-only
          operator: Equal
          value: kafka
  updateStrategy:
    type: RollingUpdate
  volumeClaimTemplates:
    - apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        name: datadir
      spec:
        accessModes:
          - ReadWriteMany
        resources:
          requests:
            storage: 200Mi
        storageClassName: zk-nfs-storage
        volumeMode: Filesystem

---

apiVersion: v1
kind: Service
metadata:
  labels:
    app: zk
  name: zk-hs
  namespace: middleware
spec:
  clusterIP: None
  ports:
    - name: server
      port: 2888
      protocol: TCP
      targetPort: 2888
    - name: leader-election
      port: 3888
      protocol: TCP
      targetPort: 3888
  selector:
    app: zk
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: zk
  name: zk-cs
  namespace: middleware
spec:
  ports:
    - name: client
      port: 2181
      protocol: TCP
      targetPort: 2181
  selector:
    app: zk
  sessionAffinity: None
  type: ClusterIP

EOF

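Note: replace the XXXXX placeholders (highlighted in the screenshot) with your actual NFS server address and export path.

2. Deploy the ZooKeeper cluster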

[root@localhost ~]# kubectl apply -f zp.yaml
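Each pod of the zk StatefulSet gets a stable DNS name through the headless service zk-hs, and the Kafka manifest in the next section lists those names in zookeeper.connect. As a rough sketch of how that string is composed (the helper name zk_connect is hypothetical, and cluster.local is assumed to be the cluster DNS domain, as in the manifest):

```shell
#!/bin/sh
# zk_connect REPLICAS [NAMESPACE] [PORT]
# Hypothetical helper: prints a comma-separated list of
# <pod>.<headless-service>.<namespace>.svc.cluster.local:<port> entries
# for pods zk-0 .. zk-(REPLICAS-1) behind the headless service zk-hs.
zk_connect() (
  replicas=$1
  namespace=${2:-middleware}
  port=${3:-2181}
  i=0
  out=""
  while [ "$i" -lt "$replicas" ]; do
    # Prepend a comma only when the list already has an entry
    out="${out}${out:+,}zk-${i}.zk-hs.${namespace}.svc.cluster.local:${port}"
    i=$((i + 1))
  done
  printf '%s\n' "$out"
)

zk_connect 3
```

If the replica count or namespace is changed in zp.yaml, the zookeeper.connect value in kafka.yaml has to change to match.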

IV. Deploy the Kafka Cluster

1. Prepare the YAML file

[root@localhost ~]# cat > kafka.yaml <<'EOF'

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: kafka-nfs-storage
  namespace: middleware
mountOptions:
  - vers=4.0
  - nolock,tcp,noresvport
provisioner: kafka/nfs
reclaimPolicy: Retain

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kafka-nfs-client-provisioner
  namespace: middleware
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kafka-nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: kafka-nfs-client-provisioner
    spec:
      serviceAccount: nfs-provisioner
      containers:
        - name: kafka-nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/open-ali/nfs-client-provisioner
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes

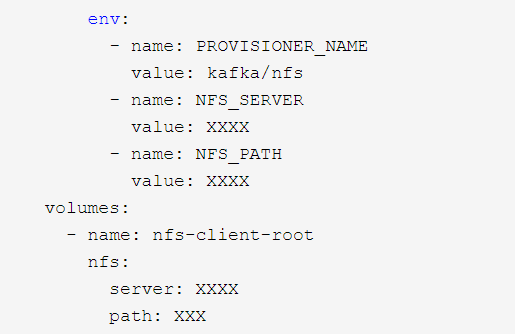

          env:
            - name: PROVISIONER_NAME
              value: kafka/nfs
            - name: NFS_SERVER
              value: 0c100488fd-ret95.cn-hangzhou.nas.aliyuncs.com
            - name: NFS_PATH
              value: /kafka
      volumes:
        - name: nfs-client-root
          nfs:
            server: 0c100488fd-ret95.cn-hangzhou.nas.aliyuncs.com
            path: /kafka

---

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: kafka
  namespace: middleware
spec:
  replicas: 3
  selector:
    matchLabels:
      app: kafka
  serviceName: kafka-svc
  template:
    metadata:
      labels:
        app: kafka
    spec:
      affinity:
        podAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - zk
                topologyKey: kubernetes.io/hostname
              weight: 1
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 1
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - kafka
                topologyKey: kubernetes.io/hostname
      containers:

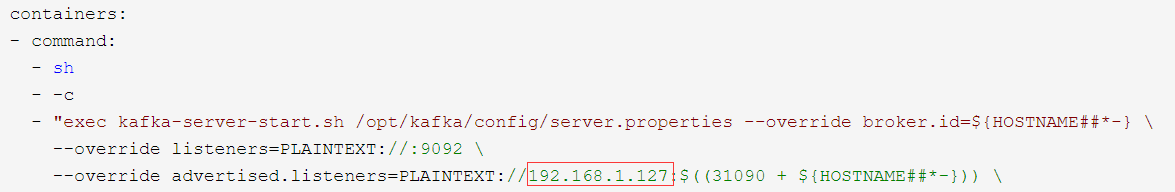

        - command:
            - sh
            - -c
            - "exec kafka-server-start.sh /opt/kafka/config/server.properties --override broker.id=${HOSTNAME##*-} \
              --override listeners=PLAINTEXT://:9092 \
              --override advertised.listeners=PLAINTEXT://121.41.177.238:$((31090 + ${HOSTNAME##*-})) \
              --override zookeeper.connect=zk-0.zk-hs.middleware.svc.cluster.local:2181,zk-1.zk-hs.middleware.svc.cluster.local:2181,zk-2.zk-hs.middleware.svc.cluster.local:2181 \
              --override log.dir=/var/lib/kafka \
              --override auto.create.topics.enable=true \
              --override auto.leader.rebalance.enable=true \
              --override background.threads=10 \
              --override compression.type=producer \
              --override delete.topic.enable=false \
              --override leader.imbalance.check.interval.seconds=300 \
              --override leader.imbalance.per.broker.percentage=10 \
              --override log.flush.interval.messages=9223372036854775807 \
              --override log.flush.offset.checkpoint.interval.ms=60000 \
              --override log.flush.scheduler.interval.ms=9223372036854775807 \
              --override log.retention.bytes=-1 \
              --override log.retention.hours=168 \
              --override log.roll.hours=168 \
              --override log.roll.jitter.hours=0 \
              --override log.segment.bytes=1073741824 \
              --override log.segment.delete.delay.ms=60000 \
              --override message.max.bytes=1000012 \
              --override min.insync.replicas=1 \
              --override num.io.threads=8 \
              --override num.network.threads=3 \
              --override num.recovery.threads.per.data.dir=1 \
              --override num.replica.fetchers=1 \
              --override offset.metadata.max.bytes=4096 \
              --override offsets.commit.required.acks=-1 \
              --override offsets.commit.timeout.ms=5000 \
              --override offsets.load.buffer.size=5242880 \
              --override offsets.retention.check.interval.ms=600000 \
              --override offsets.retention.minutes=1440 \
              --override offsets.topic.compression.codec=0 \
              --override offsets.topic.num.partitions=50 \
              --override offsets.topic.replication.factor=3 \
              --override offsets.topic.segment.bytes=104857600 \
              --override queued.max.requests=500 \
              --override quota.consumer.default=9223372036854775807 \
              --override quota.producer.default=9223372036854775807 \
              --override replica.fetch.min.bytes=1 \
              --override replica.fetch.wait.max.ms=500 \
              --override replica.high.watermark.checkpoint.interval.ms=5000 \
              --override replica.lag.time.max.ms=10000 \
              --override replica.socket.receive.buffer.bytes=65536 \
              --override replica.socket.timeout.ms=30000 \
              --override request.timeout.ms=30000 \
              --override socket.receive.buffer.bytes=102400 \
              --override socket.request.max.bytes=104857600 \
              --override socket.send.buffer.bytes=102400 \
              --override unclean.leader.election.enable=true \
              --override zookeeper.session.timeout.ms=6000 \
              --override zookeeper.set.acl=false \
              --override broker.id.generation.enable=true \
              --override connections.max.idle.ms=600000 \
              --override controlled.shutdown.enable=true \
              --override controlled.shutdown.max.retries=3 \
              --override controlled.shutdown.retry.backoff.ms=5000 \
              --override controller.socket.timeout.ms=30000 \
              --override default.replication.factor=1 \
              --override fetch.purgatory.purge.interval.requests=1000 \
              --override group.max.session.timeout.ms=300000 \
              --override group.min.session.timeout.ms=6000 \
              --override inter.broker.protocol.version=2.2.0 \
              --override log.cleaner.backoff.ms=15000 \
              --override log.cleaner.dedupe.buffer.size=134217728 \
              --override log.cleaner.delete.retention.ms=86400000 \
              --override log.cleaner.enable=true \
              --override log.cleaner.io.buffer.load.factor=0.9 \
              --override log.cleaner.io.buffer.size=524288 \
              --override log.cleaner.io.max.bytes.per.second=1.7976931348623157E308 \
              --override log.cleaner.min.cleanable.ratio=0.5 \
              --override log.cleaner.min.compaction.lag.ms=0 \
              --override log.cleaner.threads=1 \
              --override log.cleanup.policy=delete \
              --override log.index.interval.bytes=4096 \
              --override log.index.size.max.bytes=10485760 \
              --override log.message.timestamp.difference.max.ms=9223372036854775807 \
              --override log.message.timestamp.type=CreateTime \
              --override log.preallocate=false \
              --override log.retention.check.interval.ms=300000 \
              --override max.connections.per.ip=2147483647 \
              --override num.partitions=4 \
              --override producer.purgatory.purge.interval.requests=1000 \
              --override replica.fetch.backoff.ms=1000 \
              --override replica.fetch.max.bytes=1048576 \
              --override replica.fetch.response.max.bytes=10485760 \
              --override reserved.broker.max.id=1000 "
          env:
            - name: KAFKA_HEAP_OPTS
              value: -Xmx512M -Xms512M
            - name: KAFKA_OPTS
              value: -Dlogging.level=INFO
          image: fastop/kafka:2.2.0
          imagePullPolicy: Always
          name: k8s-kafka
          ports:
            - containerPort: 9092
              name: server
              protocol: TCP
          readinessProbe:
            failureThreshold: 3
            initialDelaySeconds: 5
            periodSeconds: 15
            successThreshold: 1
            tcpSocket:
              port: 9092
            timeoutSeconds: 1
          resources:
            limits:
              memory: 2Gi
            requests:
              memory: 2Gi
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File

          volumeMounts:
            - mountPath: /var/lib/kafka
              name: datadir

      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext:
        fsGroup: 1000
        runAsUser: 1000
      terminationGracePeriodSeconds: 300
      tolerations:
        - effect: NoSchedule
          key: travis.io/schedule-only
          operator: Equal
          value: kafka
        - effect: NoExecute
          key: travis.io/schedule-only
          operator: Equal
          tolerationSeconds: 3600
          value: kafka
        - effect: PreferNoSchedule
          key: travis.io/schedule-only
          operator: Equal
          value: kafka
  updateStrategy:
    rollingUpdate:
      partition: 0
    type: RollingUpdate
  volumeClaimTemplates:
    - apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        creationTimestamp: null
        name: datadir
      spec:
        accessModes:
          - ReadWriteMany
        resources:
          requests:
            storage: 20Gi
        storageClassName: kafka-nfs-storage
        volumeMode: Filesystem

---

apiVersion: v1
kind: Service
metadata:
  labels:
    app: kafka
  name: kafka-svc
  namespace: middleware
spec:
  clusterIP: None
  ports:
    - name: server
      port: 9092
      protocol: TCP
      targetPort: 9092
  selector:
    app: kafka
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: kafka
  name: kafka-service
  namespace: middleware
spec:
  ports:
    - name: server
      nodePort: 32564
      port: 9092
      protocol: TCP
      targetPort: 9092
  selector:
    app: kafka
  sessionAffinity: None
  type: NodePort

---

apiVersion: v1
kind: Service
metadata:
  labels:
    app: kafka
  name: kafka-0-external
  namespace: middleware
spec:
  ports:
    - name: server
      nodePort: 31090
      port: 9092
      protocol: TCP
      targetPort: 9092
  selector:
    statefulset.kubernetes.io/pod-name: kafka-0
  sessionAffinity: None
  type: NodePort

---

apiVersion: v1
kind: Service
metadata:
  labels:
    app: kafka
  name: kafka-1-external
  namespace: middleware
spec:
  ports:
    - name: server
      nodePort: 31091
      port: 9092
      protocol: TCP
      targetPort: 9092
  selector:
    statefulset.kubernetes.io/pod-name: kafka-1
  sessionAffinity: None
  type: NodePort
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: kafka
  name: kafka-2-external
  namespace: middleware
spec:
  ports:
    - name: server
      nodePort: 31092
      port: 9092
      protocol: TCP
      targetPort: 9092
  selector:
    statefulset.kubernetes.io/pod-name: kafka-2
  sessionAffinity: None
  type: NodePort

EOF

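Note: advertised.listeners is the address that external clients use to reach the brokers, typically the load balancer address.

Replace the values marked in the screenshots (the NFS server address and path, and the advertised.listeners address) with the values for your environment.

2. Deploy the Kafka cluster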
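The broker command derives both broker.id and the advertised port from the pod hostname: `${HOSTNAME##*-}` strips everything up to the last hyphen, leaving the StatefulSet ordinal, which is then added to the base port 31090. The sketch below repeats that arithmetic outside the container so the resulting values can be checked; the helper name broker_identity is hypothetical, and the base port is taken from the manifest above.

```shell
#!/bin/sh
# broker_identity POD_HOSTNAME [BASE_PORT]
# Hypothetical helper mirroring the expressions used in the broker command:
#   broker.id       = ${HOSTNAME##*-}
#   advertised port = $((31090 + ${HOSTNAME##*-}))
broker_identity() (
  pod=$1
  base_port=${2:-31090}
  ordinal=${pod##*-}   # "kafka-2" -> "2"
  printf 'broker.id=%s port=%s\n' "$ordinal" "$((base_port + ordinal))"
)

for pod in kafka-0 kafka-1 kafka-2; do
  broker_identity "$pod"
done
```

Whatever address is advertised, each broker must be reachable from outside on the port computed for it, either directly through its per-broker NodePort Service or through a load balancer listener that forwards to it.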

[root@localhost ~]# kubectl apply -f kafka.yaml

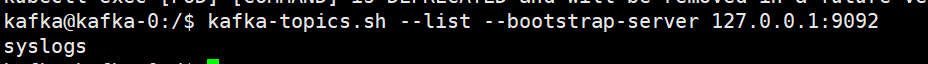

V. Test Kafka

1. Open a shell in a Kafka container

[root@localhost ~]# kubectl exec -it kafka-0 -n middleware -- bash

2. List the Kafka topics as a test

kafka@kafka-0:/$ kafka-topics.sh --list --bootstrap-server 127.0.0.1:9092

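If the command returns without errors (a newly deployed cluster may not list any topics yet), the cluster has been deployed successfully.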

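Listing topics only shows that one broker answers. A slightly stronger check is to create a topic replicated across all three brokers and describe it. The following is a sketch under assumptions, not part of the original procedure: the function names run and smoke_test and the topic name are hypothetical, while the kafka-topics.sh options used are those shipped with Kafka 2.2. Because the manifest sets delete.topic.enable=false, the test topic cannot be deleted afterwards, so pick a name you can live with.

```shell
#!/bin/sh
# run CMD...: execute the command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# smoke_test [TOPIC] [BOOTSTRAP]
# Hypothetical helper: creates a topic with replication factor 3 (one replica
# per broker in this three-broker cluster) and then describes it.
smoke_test() {
  topic=${1:-smoke-test}
  bootstrap=${2:-127.0.0.1:9092}
  run kafka-topics.sh --create --bootstrap-server "$bootstrap" \
    --replication-factor 3 --partitions 3 --topic "$topic" &&
    run kafka-topics.sh --describe --bootstrap-server "$bootstrap" --topic "$topic"
}
```

Inside the kafka-0 container, `smoke_test` runs both commands, and `DRY_RUN=1` only prints them. The describe output should show a leader and three replicas for every partition if all brokers have joined.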
