[Hadoop] Hadoop MR Custom Sorting

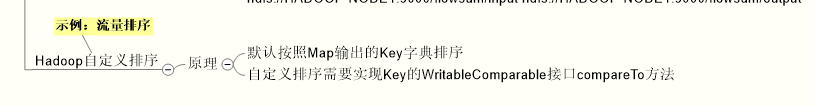

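1. Concept

MapReduce sorts the map output by key during the shuffle; values do not take part in the comparison. To order records by another field (here, total flow in descending order), move that field into the key: the key class implements `WritableComparable` and defines the order in `compareTo()`. In the example below the whole record (`FlowBean`) is the key and the value is `NullWritable`.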
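Because the framework only ever compares keys, the effect of choosing a different key can be seen without a cluster. The sketch below is a hypothetical stand-in for the shuffle, not Hadoop API: the class `ShuffleSim`, the method `shuffle` and the sample phone numbers are illustrative. A `TreeMap` sorts map-output pairs by key and collects the values whose keys compare equal, roughly as each `reduce()` call receives one key group. It assumes Java 9+ for `List.of` and `Map.entry`.

```java
import java.util.*;

// Hypothetical, framework-free stand-in for the shuffle: sort the map output by
// key and collect the values of keys that compare equal into one group, the way
// each reduce() call receives one key group. Not Hadoop API.
final class ShuffleSim {
    static <K, V> SortedMap<K, List<V>> shuffle(List<Map.Entry<K, V>> mapOutput,
                                               Comparator<? super K> keyOrder) {
        SortedMap<K, List<V>> groups = new TreeMap<>(keyOrder);
        for (Map.Entry<K, V> kv : mapOutput) {
            // compare(a, b) == 0 means "same key", so both values share one group.
            groups.computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
        }
        return groups;
    }

    public static void main(String[] args) {
        // Sample (phone, total flow) pairs; the numbers are made up.
        List<Map.Entry<String, Long>> byPhone = List.of(
                Map.entry("13700000001", 300L),
                Map.entry("13500000002", 900L),
                Map.entry("13600000003", 300L));

        // Key = phone: output is in phone order, regardless of flow.
        System.out.println(shuffle(byPhone, Comparator.<String>naturalOrder()));

        // Key = flow, descending: output is now ordered by flow, and the two
        // phones with equal totals end up in the same group.
        List<Map.Entry<Long, String>> byFlow = new ArrayList<>();
        for (Map.Entry<String, Long> e : byPhone) {
            byFlow.add(Map.entry(e.getValue(), e.getKey()));
        }
        System.out.println(shuffle(byFlow, Comparator.<Long>reverseOrder()));
    }
}
```

Keyed by phone, the pairs come back in phone order; keyed by flow with a reversed comparator, they come back largest flow first, and the two phones with equal totals share one group. Making the whole record the key, as FlowSort does, keeps such records apart as long as `compareTo()` distinguishes them.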

2. Code Example

FlowSort

```java
package com.ares.hadoop.mr.flowsort;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.StringUtils;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;
import org.apache.log4j.Logger;

import com.ares.hadoop.mr.exception.LineException;

public class FlowSort extends Configured implements Tool {
    private static final Logger LOGGER = Logger.getLogger(FlowSort.class);

    enum Counter {
        LINESKIP // number of malformed input lines that were skipped
    }

    /**
     * Parses one "phone\tupFlow\tdownFlow\tsumFlow" line and emits the whole
     * record as the key, so the shuffle sorts records with FlowBean.compareTo().
     */
    public static class FlowSortMapper extends Mapper<LongWritable, Text,
            FlowBean, NullWritable> {
        private static final char SEPARATOR = '\t';
        private static final int FIELD_COUNT = 4;

        // Reused across map() calls; context.write() serializes it immediately.
        private final FlowBean flowBean = new FlowBean();
        private final NullWritable nullWritable = NullWritable.get();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            String line = value.toString();
            try {
                String[] fields = StringUtils.split(line, SEPARATOR);
                if (fields.length != FIELD_COUNT) {
                    throw new LineException(key.get() + ", " + line + " LENGTH INVALID, IGNORE...");
                }
                flowBean.setPhoneNum(fields[0]);
                flowBean.setUpFlow(Long.parseLong(fields[1]));
                flowBean.setDownFlow(Long.parseLong(fields[2]));
                flowBean.setSumFlow(Long.parseLong(fields[3]));
                context.write(flowBean, nullWritable);
            } catch (LineException e) {
                LOGGER.error(e);
                System.out.println(e);
                context.getCounter(Counter.LINESKIP).increment(1);
            } catch (NumberFormatException e) {
                String errMsg = key.get() + ", " + line + " FLOW DATA INVALID, IGNORE...";
                LOGGER.error(errMsg);
                System.out.println(errMsg);
                context.getCounter(Counter.LINESKIP).increment(1);
            } catch (Exception e) {
                LOGGER.error(e);
                System.out.println(e);
                context.getCounter(Counter.LINESKIP).increment(1);
            }
        }
    }

    /** Keys arrive already sorted; write each key group out once. */
    public static class FlowSortReducer extends Reducer<FlowBean, NullWritable,
            FlowBean, NullWritable> {
        @Override
        protected void reduce(FlowBean key, Iterable<NullWritable> values, Context context)
                throws IOException, InterruptedException {
            context.write(key, NullWritable.get());
        }
    }

    @Override
    public int run(String[] args) throws Exception {
        String errMsg = "FlowSort: TEST STARTED...";
        LOGGER.debug(errMsg);
        System.out.println(errMsg);

        // Use the Configuration that ToolRunner populated (including -D options).
        Configuration conf = getConf();
        // FOR Eclipse JVM Debug
        // conf.set("mapreduce.job.jar", "flowsum.jar");
        Job job = Job.getInstance(conf);

        // JOB NAME
        job.setJobName("FlowSort");

        // JOB MAPPER & REDUCER
        job.setJarByClass(FlowSort.class);
        job.setMapperClass(FlowSortMapper.class);
        job.setReducerClass(FlowSortReducer.class);

        // MAP OUTPUT: the shuffle sorts on this key type
        job.setMapOutputKeyClass(FlowBean.class);
        job.setMapOutputValueClass(NullWritable.class);

        // REDUCE OUTPUT
        job.setOutputKeyClass(FlowBean.class);
        job.setOutputValueClass(NullWritable.class);

        // JOB INPUT & OUTPUT PATH
        FileInputFormat.setInputPaths(job, args[0]);
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // VERBOSE OUTPUT
        if (job.waitForCompletion(true)) {
            errMsg = "FlowSort: TEST SUCCESSFULLY...";
            LOGGER.debug(errMsg);
            System.out.println(errMsg);
            return 0;
        } else {
            errMsg = "FlowSort: TEST FAILED...";
            LOGGER.debug(errMsg);
            System.out.println(errMsg);
            return 1;
        }
    }

    public static void main(String[] args) throws Exception {
        // Expects exactly two arguments: <input path> <output path>
        if (args.length != 2) {
            String errMsg = "FlowSort: ARGUMENTS ERROR";
            LOGGER.error(errMsg);
            System.out.println(errMsg);
            System.exit(-1);
        }
        int result = ToolRunner.run(new Configuration(), new FlowSort(), args);
        System.exit(result);
    }
}
```
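Most of the mapper's work is validation: a line is kept only if it splits into four tab-separated fields and the three flow fields parse as `long`; every rejected line is counted under `LINESKIP`. A minimal plain-Java sketch of that rule follows, assuming the input is the four-column `phone\tupFlow\tdownFlow\tsumFlow` text that `FlowBean.toString()` produces. `FlowLineParser`, `BadLineException` (a stand-in for `LineException`) and the `skipped` counter are hypothetical names. It uses `String.split("\t", -1)` instead of Hadoop's `StringUtils.split`, so the handling of empty fields may differ.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, framework-free version of the mapper's per-line checks.
// Assumption: a valid line is "phone\tupFlow\tdownFlow\tsumFlow".
final class FlowLineParser {
    static final int EXPECTED_FIELDS = 4;

    /** Stand-in for LineException: unchecked, so parseFlows() needs no throws clause. */
    static final class BadLineException extends RuntimeException {
        BadLineException(String message) { super(message); }
    }

    private long skipped = 0; // plays the role of Counter.LINESKIP

    /** Returns {upFlow, downFlow, sumFlow}, or throws for a malformed line. */
    static long[] parseFlows(String line) {
        String[] fields = line.split("\t", -1); // -1 keeps trailing empty fields
        if (fields.length != EXPECTED_FIELDS) {
            throw new BadLineException(line + " LENGTH INVALID");
        }
        // Long.parseLong throws NumberFormatException for non-numeric flow data.
        return new long[] {
            Long.parseLong(fields[1]), Long.parseLong(fields[2]), Long.parseLong(fields[3])
        };
    }

    /** Keeps valid lines and counts the rest, like the catch blocks in map(). */
    List<String> filter(List<String> lines) {
        List<String> kept = new ArrayList<>();
        for (String line : lines) {
            try {
                parseFlows(line);
                kept.add(line);
            } catch (BadLineException | NumberFormatException e) {
                skipped++;
            }
        }
        return kept;
    }

    long skipped() { return skipped; }
}
```

Making the length check throw an unchecked exception lets it share the skip-and-count path with `NumberFormatException`, which is the same reason `LineException` extends `RuntimeException`.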

FlowBean

```java
package com.ares.hadoop.mr.flowsort;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

public class FlowBean implements WritableComparable<FlowBean> {
    private String phoneNum;
    private long upFlow;
    private long downFlow;
    private long sumFlow;

    // Hadoop creates keys by reflection, so a public no-arg constructor is required.
    public FlowBean() {
    }

    // public FlowBean(String phoneNum, long upFlow, long downFlow, long sumFlow) {
    //     super();
    //     this.phoneNum = phoneNum;
    //     this.upFlow = upFlow;
    //     this.downFlow = downFlow;
    //     this.sumFlow = sumFlow;
    // }

    public String getPhoneNum() {
        return phoneNum;
    }

    public void setPhoneNum(String phoneNum) {
        this.phoneNum = phoneNum;
    }

    public long getUpFlow() {
        return upFlow;
    }

    public void setUpFlow(long upFlow) {
        this.upFlow = upFlow;
    }

    public long getDownFlow() {
        return downFlow;
    }

    public void setDownFlow(long downFlow) {
        this.downFlow = downFlow;
    }

    public long getSumFlow() {
        return sumFlow;
    }

    public void setSumFlow(long sumFlow) {
        this.sumFlow = sumFlow;
    }

    // Must read the fields in exactly the order write() wrote them.
    @Override
    public void readFields(DataInput in) throws IOException {
        phoneNum = in.readUTF();
        upFlow = in.readLong();
        downFlow = in.readLong();
        sumFlow = in.readLong();
    }

    @Override
    public void write(DataOutput out) throws IOException {
        out.writeUTF(phoneNum);
        out.writeLong(upFlow);
        out.writeLong(downFlow);
        out.writeLong(sumFlow);
    }

    // Output line format: phone, up flow, down flow, total flow (tab-separated).
    @Override
    public String toString() {
        return phoneNum + "\t" + upFlow + "\t" + downFlow + "\t" + sumFlow;
    }

    /**
     * Sort order used by the shuffle: descending by sumFlow. Ties are broken by
     * phone number, so compareTo() returns 0 only when both fields match;
     * otherwise different phones with the same total would be merged into one
     * reduce group and written as a single line.
     */
    @Override
    public int compareTo(FlowBean o) {
        int byFlow = Long.compare(o.getSumFlow(), sumFlow);
        if (byFlow != 0) {
            return byFlow;
        }
        return phoneNum.compareTo(o.getPhoneNum());
    }
}
```
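Two properties of `FlowBean` are easy to get wrong: `readFields()` must read the fields in the order `write()` wrote them, and `compareTo()` must obey the `Comparable` contract (0 when a key is compared with itself, opposite signs when the arguments are swapped). A comparison that only ever returns -1 or 1 breaks both contract rules. The sketch below checks both properties with plain `java.io.DataOutputStream`/`DataInputStream` instead of Hadoop; `FlowKey`, `legacyCompare` and `roundTrip` are hypothetical names, and it assumes Java 9+ for `List.of`.

```java
import java.io.*;
import java.util.*;

// Hypothetical, framework-free mirror of FlowBean: same field order in
// write()/readFields() and a compareTo() that honors the Comparable contract.
final class FlowKey implements Comparable<FlowKey> {
    String phoneNum;
    long upFlow, downFlow, sumFlow;

    FlowKey() {} // no-arg constructor, as Hadoop needs for reflective creation

    FlowKey(String phoneNum, long upFlow, long downFlow, long sumFlow) {
        this.phoneNum = phoneNum;
        this.upFlow = upFlow;
        this.downFlow = downFlow;
        this.sumFlow = sumFlow;
    }

    void write(DataOutput out) throws IOException {
        out.writeUTF(phoneNum);
        out.writeLong(upFlow);
        out.writeLong(downFlow);
        out.writeLong(sumFlow);
    }

    void readFields(DataInput in) throws IOException {
        // Same order as write(); swapping two reads silently corrupts the key.
        phoneNum = in.readUTF();
        upFlow = in.readLong();
        downFlow = in.readLong();
        sumFlow = in.readLong();
    }

    // A rule that never returns 0: even x vs. x yields 1.
    int legacyCompare(FlowKey o) { return sumFlow > o.sumFlow ? -1 : 1; }

    @Override
    public int compareTo(FlowKey o) {
        int byFlow = Long.compare(o.sumFlow, sumFlow);                // descending by total flow
        return byFlow != 0 ? byFlow : phoneNum.compareTo(o.phoneNum); // ties: ascending by phone
    }

    @Override
    public String toString() {
        return phoneNum + "\t" + upFlow + "\t" + downFlow + "\t" + sumFlow;
    }

    /** Serializes to bytes and reads them back into a fresh object. */
    static FlowKey roundTrip(FlowKey k) {
        try {
            ByteArrayOutputStream buf = new ByteArrayOutputStream();
            k.write(new DataOutputStream(buf));
            FlowKey copy = new FlowKey();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(buf.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e); // not expected with in-memory streams
        }
    }

    public static void main(String[] args) {
        FlowKey a = new FlowKey("13700000001", 100, 200, 300);
        FlowKey b = new FlowKey("13600000003", 50, 250, 300);
        FlowKey c = new FlowKey("13500000002", 400, 500, 900);
        System.out.println(roundTrip(a));                                  // same four fields back
        System.out.println(a.legacyCompare(b) + " " + b.legacyCompare(a)); // "1 1": not antisymmetric
        List<FlowKey> keys = new ArrayList<>(List.of(a, b, c));
        Collections.sort(keys);
        System.out.println(keys); // c (900) first, then b and a (both 300, by phone)
    }
}
```

With the tie-broken `compareTo`, the 900 record sorts first and the two 300 records follow in phone order. If ties compared as 0 instead, the two different phones would land in one key group and the reducer would write only one of them.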

LineException

```java
package com.ares.hadoop.mr.exception;

// Unchecked, so map() can throw it without changing its throws clause.
public class LineException extends RuntimeException {
    private static final long serialVersionUID = 2536144005398058435L;

    public LineException() {
        super();
    }

    public LineException(String message, Throwable cause) {
        super(message, cause);
    }

    public LineException(String message) {
        super(message);
    }

    public LineException(Throwable cause) {
        super(cause);
    }
}
```

