MYCAT扩容

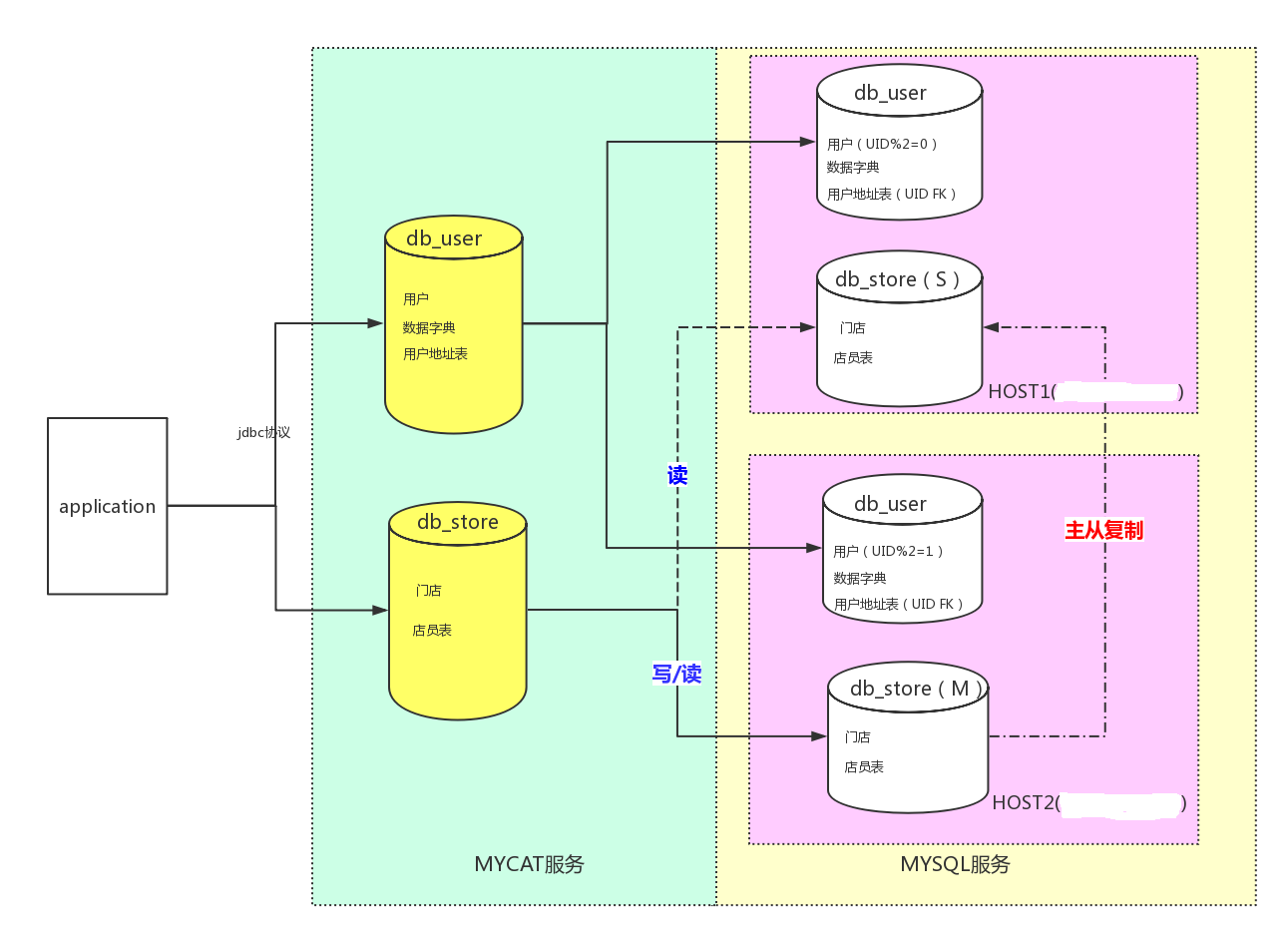

一、原架构图:

二、扩容

在HOST1上新增节点db_user2

在HOST2上新增节点db_user2

三、操作步骤

1、mycat 所在环境安装 mysql 客户端程序

2、mycat 的 lib 目录下添加 mysql 的 jdbc 驱动包

下载mysql-connect-jdbc-5.1.35.jar,下载地址:https://mvnrepository.com/artifact/mysql/mysql-connector-java/5.1.35

把下载的jar包放到mycat lib目录下:/root/data/program/mycat/lib

3、对扩容缩容的表所有节点数据进行备份,以便迁移失败后的数据恢复

4、编辑schema.xml 和 rule.xml

schema.xml

<table name="users" dataNode="db_user_dataNode$1-4" rule="mod-userID-long" primaryKey="userID" autoIncrement="true">

<childTable name="user_address" joinKey="userID" parentKey="userID" primaryKey="addressID"/>

</table>

<!-- db_user -->

<dataNode name="db_user_dataNode1" dataHost="db_userHOST1" database="db_user" />

<dataNode name="db_user_dataNode2" dataHost="db_userHOST2" database="db_user" />

<dataNode name="db_user_dataNode3" dataHost="db_userHOST1" database="db_user2" />

<dataNode name="db_user_dataNode4" dataHost="db_userHOST2" database="db_user2" />

<?xml version="1.0"?>

<!DOCTYPE mycat:schema SYSTEM "schema.dtd">

<mycat:schema xmlns:mycat="http://io.mycat/"> <schema name="db_store" checkSQLschema="false" sqlMaxLimit="100">

<table name="store" dataNode="db_store_dataNode" primaryKey="storeID"/>

<table name="employee" dataNode="db_store_dataNode" primaryKey="employeeID"/>

</schema> <schema name="db_user" checkSQLschema="false" sqlMaxLimit="100">

<table name="data_dictionary" type="global" dataNode="db_user_dataNode1,db_user_dataNode2" primaryKey="dataDictionaryID"/>

<table name="users" dataNode="db_user_dataNode$1-4" rule="mod-userID-long" primaryKey="userID" autoIncrement="true">

<childTable name="user_address" joinKey="userID" parentKey="userID" primaryKey="addressID"/>

</table> <table name = "mycat_sequence" dataNode ="db_user_dataNode2" />

</schema> <!-- 节点配置 -->

<!-- db_store -->

<dataNode name="db_store_dataNode" dataHost="db_storeHOST" database="db_store" /> <!-- db_user -->

<dataNode name="db_user_dataNode1" dataHost="db_userHOST1" database="db_user" />

<dataNode name="db_user_dataNode2" dataHost="db_userHOST2" database="db_user" />

<dataNode name="db_user_dataNode3" dataHost="db_userHOST1" database="db_user2" />

<dataNode name="db_user_dataNode4" dataHost="db_userHOST2" database="db_user2" /> <!-- 节点主机配置 -->

<!-- 配置db_store的节点主机 -->

<dataHost name="db_storeHOST" maxCon="1000" minCon="10" balance="1"

writeType="0" dbType="mysql" dbDriver="native" switchType="1" slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<!-- can have multi write hosts -->

<writeHost host="hostM1" url="192.168.189.130:3306" user="root" password="123456">

<!-- can have multi read hosts -->

<readHost host="hostS1" url="192.168.189.131:3306" user="root" password="123456" /> </writeHost> </dataHost> <!-- 配置db_user的节点主机 -->

<dataHost name="db_userHOST1" maxCon="1000" minCon="10" balance="0"

writeType="0" dbType="mysql" dbDriver="native" switchType="1" slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<writeHost host="userHost1" url="192.168.189.130:3306" user="root" password="123456">

</writeHost>

</dataHost> <dataHost name="db_userHOST2" maxCon="1000" minCon="10" balance="0"

writeType="0" dbType="mysql" dbDriver="native" switchType="1" slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<!-- can have multi write hosts -->

<writeHost host="userHost2" url="192.168.189.131:3306" user="root" password="123456">

</writeHost>

</dataHost> </mycat:schema>

rule.xml

<function name="mod-long" class="io.mycat.route.function.PartitionByMod">

<!-- how many data nodes -->

<property name="count">4</property>

</function>

<?xml version="1.0" encoding="UTF-8"?>

<!-- - - Licensed under the Apache License, Version 2.0 (the "License");

- you may not use this file except in compliance with the License. - You

may obtain a copy of the License at - - http://www.apache.org/licenses/LICENSE-2.0

- - Unless required by applicable law or agreed to in writing, software -

distributed under the License is distributed on an "AS IS" BASIS, - WITHOUT

WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. - See the

License for the specific language governing permissions and - limitations

under the License. -->

<!DOCTYPE mycat:rule SYSTEM "rule.dtd">

<mycat:rule xmlns:mycat="http://io.mycat/">

<tableRule name="rule1">

<rule>

<columns>id</columns>

<algorithm>func1</algorithm>

</rule>

</tableRule> <tableRule name="rule2">

<rule>

<columns>user_id</columns>

<algorithm>func1</algorithm>

</rule>

</tableRule> <tableRule name="sharding-by-intfile">

<rule>

<columns>sharding_id</columns>

<algorithm>hash-int</algorithm>

</rule>

</tableRule>

<tableRule name="auto-sharding-long">

<rule>

<columns>id</columns>

<algorithm>rang-long</algorithm>

</rule>

</tableRule>

<tableRule name="mod-long">

<rule>

<columns>id</columns>

<algorithm>mod-long</algorithm>

</rule>

</tableRule> <tableRule name="mod-userID-long">

<rule>

<columns>userID</columns>

<algorithm>mod-long</algorithm>

</rule>

</tableRule> <tableRule name="sharding-by-murmur">

<rule>

<columns>id</columns>

<algorithm>murmur</algorithm>

</rule>

</tableRule>

<tableRule name="crc32slot">

<rule>

<columns>id</columns>

<algorithm>crc32slot</algorithm>

</rule>

</tableRule>

<tableRule name="sharding-by-month">

<rule>

<columns>create_time</columns>

<algorithm>partbymonth</algorithm>

</rule>

</tableRule>

<tableRule name="latest-month-calldate">

<rule>

<columns>calldate</columns>

<algorithm>latestMonth</algorithm>

</rule>

</tableRule> <tableRule name="auto-sharding-rang-mod">

<rule>

<columns>id</columns>

<algorithm>rang-mod</algorithm>

</rule>

</tableRule> <tableRule name="jch">

<rule>

<columns>id</columns>

<algorithm>jump-consistent-hash</algorithm>

</rule>

</tableRule> <function name="mod-long" class="io.mycat.route.function.PartitionByMod">

<!-- how many data nodes -->

<property name="count">4</property>

</function> <function name="murmur"

class="io.mycat.route.function.PartitionByMurmurHash">

<property name="seed">0</property><!-- 默认是0 -->

<property name="count">2</property><!-- 要分片的数据库节点数量,必须指定,否则没法分片 -->

<property name="virtualBucketTimes">160</property><!-- 一个实际的数据库节点被映射为这么多虚拟节点,默认是160倍,也就是虚拟节点数是物理节点数的160倍 -->

<!-- <property name="weightMapFile">weightMapFile</property> 节点的权重,没有指定权重的节点默认是1。以properties文件的格式填写,以从0开始到count-1的整数值也就是节点索引为key,以节点权重值为值。所有权重值必须是正整数,否则以1代替 -->

<!-- <property name="bucketMapPath">/etc/mycat/bucketMapPath</property>

用于测试时观察各物理节点与虚拟节点的分布情况,如果指定了这个属性,会把虚拟节点的murmur hash值与物理节点的映射按行输出到这个文件,没有默认值,如果不指定,就不会输出任何东西 -->

</function> <function name="crc32slot"

class="io.mycat.route.function.PartitionByCRC32PreSlot">

<property name="count">2</property><!-- 要分片的数据库节点数量,必须指定,否则没法分片 -->

</function>

<function name="hash-int"

class="io.mycat.route.function.PartitionByFileMap">

<property name="mapFile">partition-hash-int.txt</property>

</function>

<function name="rang-long"

class="io.mycat.route.function.AutoPartitionByLong">

<property name="mapFile">autopartition-long.txt</property>

</function> <function name="func1" class="io.mycat.route.function.PartitionByLong">

<property name="partitionCount">8</property>

<property name="partitionLength">128</property>

</function>

<function name="latestMonth"

class="io.mycat.route.function.LatestMonthPartion">

<property name="splitOneDay">24</property>

</function>

<function name="partbymonth"

class="io.mycat.route.function.PartitionByMonth">

<property name="dateFormat">yyyy-MM-dd</property>

<property name="sBeginDate">2015-01-01</property>

</function> <function name="rang-mod" class="io.mycat.route.function.PartitionByRangeMod">

<property name="mapFile">partition-range-mod.txt</property>

</function> <function name="jump-consistent-hash" class="io.mycat.route.function.PartitionByJumpConsistentHash">

<property name="totalBuckets">3</property>

</function>

</mycat:rule>

5、配置conf/migrateTables.properties

migrateTables.properties

db_user=users

#schema1=tb1,tb2,...

#schema2=all(写all或者不写将对此schema下拆分节点变化的拆分表全部进行重新路由)

#... #sample

#TESTDB=travelrecord,company,goods

#要扩容的逻辑库 对应的逻辑表

db_user=users

6、修改bin/dataMigrate.sh,执行dataMigrate.sh

指定临时文件路径

#临时文件路径,目录不存在将自动创建,不指定此目录则默认为mycat根下的temp目录

RUN_CMD="$RUN_CMD -tempFileDir=/root/data/program/mycat/temp"

指定为false可以查看此过程中产生的sql

#完成扩容缩容后是否删除临时文件 默认为true

RUN_CMD="$RUN_CMD -deleteTempFileDir=false"

通过命令"find / -name mysqldump"查找mysqldump路径为"/usr/bin/mysqldump",指定#mysql bin路径为"/usr/bin/"

[root@localhost program]# cd mycat

[root@localhost mycat]# pwd

/root/data/program/mycat

[root@localhost mycat]# find / -name mysqldump

/usr/bin/mysqldump

[root@localhost mycat]# ^C

[root@localhost mycat]#

#mysql bin路径

RUN_CMD="$RUN_CMD -mysqlBin=/usr/bin/"

mycat bin目录下执行脚本"./dataMigrate.sh" 报错:

Error: JAVA_HOME environment variable is not set.

这个问题折腾了好久,最后不用openjdk,下载jdk解压配置环境变量解决的参考文档:https://blog.csdn.net/Haiyang_Duan/article/details/62099225

继续执行 ./dataMigrate.sh,日志如下

[root@localhost bin]# ./dataMigrate.sh

"/usr/java/jdk1.8.0_191/bin/java" -DMYCAT_HOME="/root/data/program/mycat" -classpath "/root/data/program/mycat/conf:/root/data/program/mycat/lib/classes:/root/data/program/mycat/lib/asm-4.0.jar:/root/data/program/mycat/lib/commons-collections-3.2.1.jar:/root/data/program/mycat/lib/commons-lang-2.6.jar:/root/data/program/mycat/lib/curator-client-2.11.0.jar:/root/data/program/mycat/lib/curator-framework-2.11.0.jar:/root/data/program/mycat/lib/curator-recipes-2.11.0.jar:/root/data/program/mycat/lib/disruptor-3.3.4.jar:/root/data/program/mycat/lib/dom4j-1.6.1.jar:/root/data/program/mycat/lib/druid-1.0.26.jar:/root/data/program/mycat/lib/ehcache-core-2.6.11.jar:/root/data/program/mycat/lib/fastjson-1.2.12.jar:/root/data/program/mycat/lib/guava-19.0.jar:/root/data/program/mycat/lib/hamcrest-core-1.3.jar:/root/data/program/mycat/lib/hamcrest-library-1.3.jar:/root/data/program/mycat/lib/jline-0.9.94.jar:/root/data/program/mycat/lib/joda-time-2.9.3.jar:/root/data/program/mycat/lib/jsr305-2.0.3.jar:/root/data/program/mycat/lib/kryo-2.10.jar:/root/data/program/mycat/lib/leveldb-0.7.jar:/root/data/program/mycat/lib/leveldb-api-0.7.jar:/root/data/program/mycat/lib/log4j-1.2.17.jar:/root/data/program/mycat/lib/log4j-1.2-api-2.5.jar:/root/data/program/mycat/lib/log4j-api-2.5.jar:/root/data/program/mycat/lib/log4j-core-2.5.jar:/root/data/program/mycat/lib/log4j-slf4j-impl-2.5.jar:/root/data/program/mycat/lib/mapdb-1.0.7.jar:/root/data/program/mycat/lib/minlog-1.2.jar:/root/data/program/mycat/lib/mongo-java-driver-2.11.4.jar:/root/data/program/mycat/lib/Mycat-server-1.6-RELEASE.jar:/root/data/program/mycat/lib/mysql-binlog-connector-java-0.4.1.jar:/root/data/program/mycat/lib/mysql-connector-java-5.1.35.jar:/root/data/program/mycat/lib/netty-3.7.0.Final.jar:/root/data/program/mycat/lib/objenesis-1.2.jar:/root/data/program/mycat/lib/reflectasm-1.03.jar:/root/data/program/mycat/lib/sequoiadb-driver-1.12.jar:/root/data/program/mycat/lib/slf4j-api-1.6.1.jar:/root/data/program/mycat/lib/univocity-parsers-2.2.1.jar:/root/data/program/mycat/lib/velocity-1.7.jar:/root/data/program/mycat/lib/wrapper.jar:/root/data/program/mycat/lib/zookeeper-3.4.6.jar" -server -Xms2G -Xmx2G -XX:MaxPermSize=64M -XX:+AggressiveOpts -XX:MaxDirectMemorySize=2G io.mycat.util.dataMigrator.DataMigrator -tempFileDir=/root/data/program/mycat/temp -isAwaysUseMaster=true -mysqlBin=/usr/bin/ -cmdLength=110*1024 -charset=utf8 -deleteTempFileDir=false -threadCount= -delThreadCount= -queryPageSize=

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=64M; support was removed in 8.0 2018-10-31 22:12:20:211 [1]-> creating migrator schedule and temp files for migrate...

+--------------------------------[db_user:users] migrate info---------------------------------+

|tableSize = 5 |

|migrate before = [db_user_dataNode1, db_user_dataNode2] |

|migrate after = [db_user_dataNode1, db_user_dataNode2, db_user_dataNode3, db_user_dataNode4]|

|rule function = PartitionByMod |

+---------------------------------------------------------------------------------------------+ +--[db_user:users] migrate schedule--+

|db_user_dataNode1[2] -> [0, 0, 1, 0]|

|db_user_dataNode2[3] -> [0, 0, 0, 1]|

+------------------------------------+ 2018-10-31 22:12:21:911 [2]-> start migrate data...

2018-10-31 22:12:21,990 [INFO ][pool-3-thread-2] [db_user:users]/usr/bin/mysqldump -h192.168.189.131 -P3306 -uroot -p123456 db_user users --compact --no-create-info --default-character-set=utf8 --add-locks=false --where="USERID in (#)" --result-file="/root/data/program/mycat/temp/db_user-users/db_user_dataNode2(old)-db_user_dataNode4(new)/users.txt" (io.mycat.util.dataMigrator.dataIOImpl.MysqlDataIO:MysqlDataIO.java:103)

2018-10-31 22:12:21,990 [INFO ][pool-3-thread-1] [db_user:users]/usr/bin/mysqldump -h192.168.189.130 -P3306 -uroot -p123456 db_user users --compact --no-create-info --default-character-set=utf8 --add-locks=false --where="USERID in (#)" --result-file="/root/data/program/mycat/temp/db_user-users/db_user_dataNode1(old)-db_user_dataNode3(new)/users.txt" (io.mycat.util.dataMigrator.dataIOImpl.MysqlDataIO:MysqlDataIO.java:103)

2018-10-31 22:12:22,306 [INFO ][pool-3-thread-1] [db_user:users]/usr/bin/mysqldump -h192.168.189.130 -P3306 -uroot -p123456 db_user users --compact --no-create-info --default-character-set=utf8 --add-locks=false --where="USERID in (#)" --result-file="/root/data/program/mycat/temp/db_user-users/db_user_dataNode1(old)-db_user_dataNode3(new)/users.txt" exe info:mysqldump: [Warning] Using a password on the command line interface can be insecure. (io.mycat.util.dataMigrator.dataIOImpl.MysqlDataIO:MysqlDataIO.java:116)

2018-10-31 22:12:22,337 [INFO ][pool-3-thread-2] [db_user:users]/usr/bin/mysqldump -h192.168.189.131 -P3306 -uroot -p123456 db_user users --compact --no-create-info --default-character-set=utf8 --add-locks=false --where="USERID in (#)" --result-file="/root/data/program/mycat/temp/db_user-users/db_user_dataNode2(old)-db_user_dataNode4(new)/users.txt" exe info:mysqldump: [Warning] Using a password on the command line interface can be insecure. (io.mycat.util.dataMigrator.dataIOImpl.MysqlDataIO:MysqlDataIO.java:116)

2018-10-31 22:12:22,455 [INFO ][pool-3-thread-1] [db_user:users] /usr/bin/mysql -h192.168.189.130 -P3306 -uroot -p123456 -Ddb_user2 -f --default-character-set=utf8 -e "source /root/data/program/mycat/temp/db_user-users/db_user_dataNode1(old)-db_user_dataNode3(new)/users.sql" (io.mycat.util.dataMigrator.dataIOImpl.MysqlDataIO:MysqlDataIO.java:48)

2018-10-31 22:12:22,551 [INFO ][pool-3-thread-2] [db_user:users] /usr/bin/mysql -h192.168.189.131 -P3306 -uroot -p123456 -Ddb_user2 -f --default-character-set=utf8 -e "source /root/data/program/mycat/temp/db_user-users/db_user_dataNode2(old)-db_user_dataNode4(new)/users.sql" (io.mycat.util.dataMigrator.dataIOImpl.MysqlDataIO:MysqlDataIO.java:48)

[db_user:users] db_user_dataNode1->db_user_dataNode3 completed in 640ms

[db_user:users] db_user_dataNode2->db_user_dataNode4 completed in 660ms 2018-10-31 22:12:22:777 [3]-> cleaning redundant data...

[db_user:users] clean dataNode db_user_dataNode1 completed in 73ms

[db_user:users] clean dataNode db_user_dataNode2 completed in 137ms 2018-10-31 22:12:23:185 [4]-> validating tables migrate result...

+------migrate result------+

|[db_user:users] -> success|

+--------------------------+ 2018-10-31 22:12:23:456 migrate data complete in 3247ms

[root@localhost bin]#

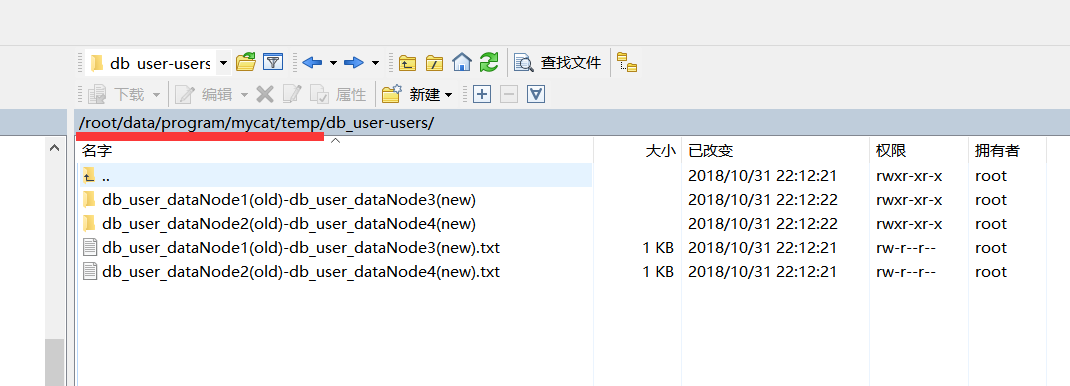

临时文件:/root/data/program/mycat/temp

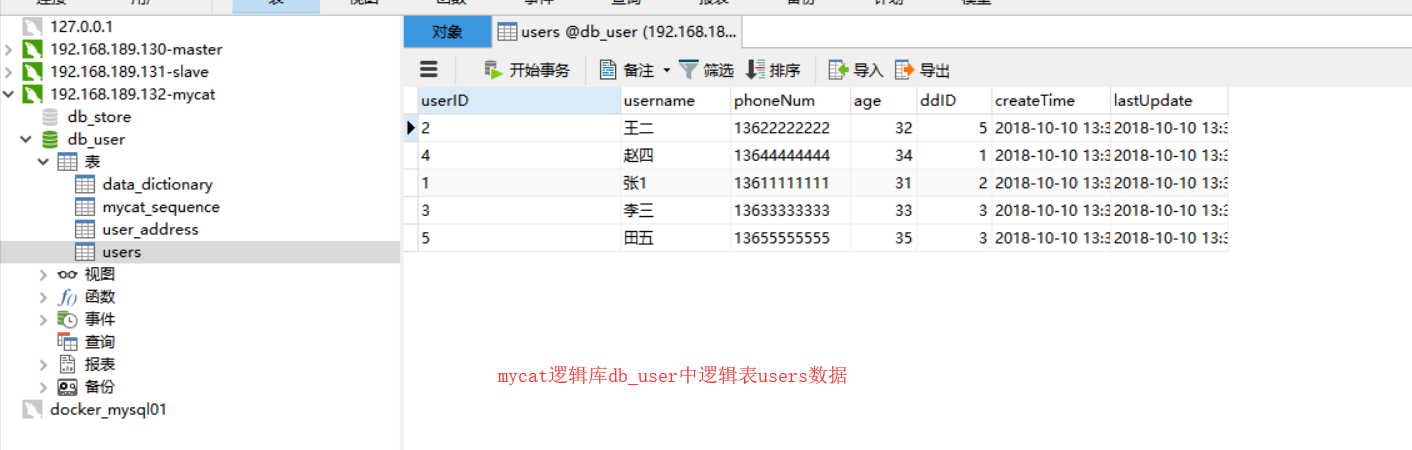

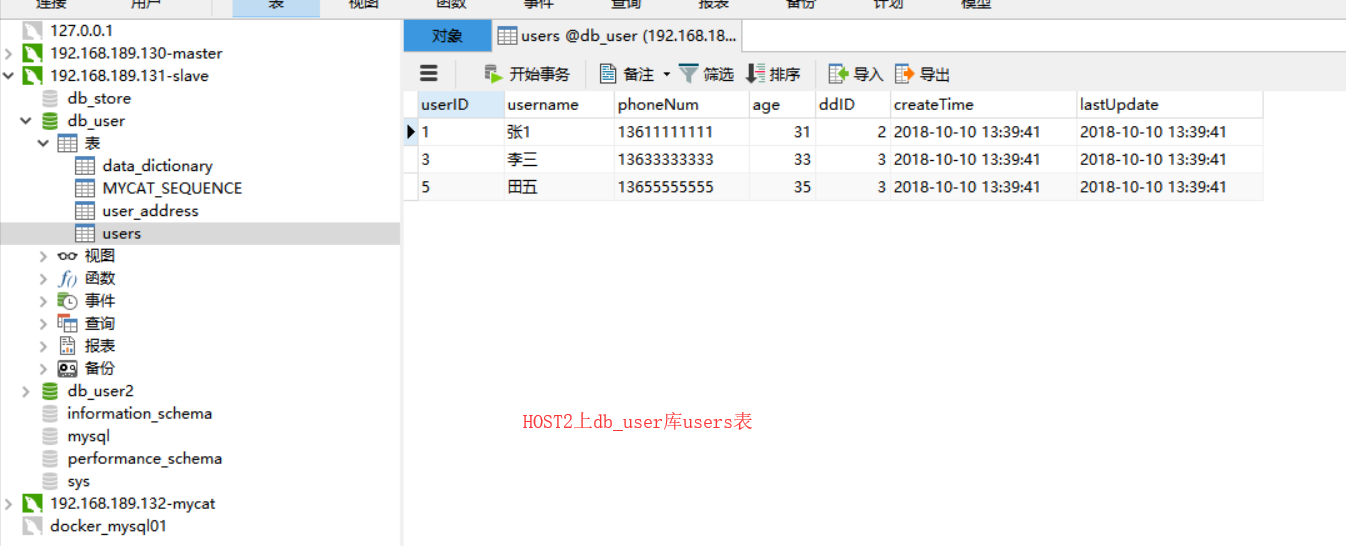

7、扩容前、扩容后数据对比

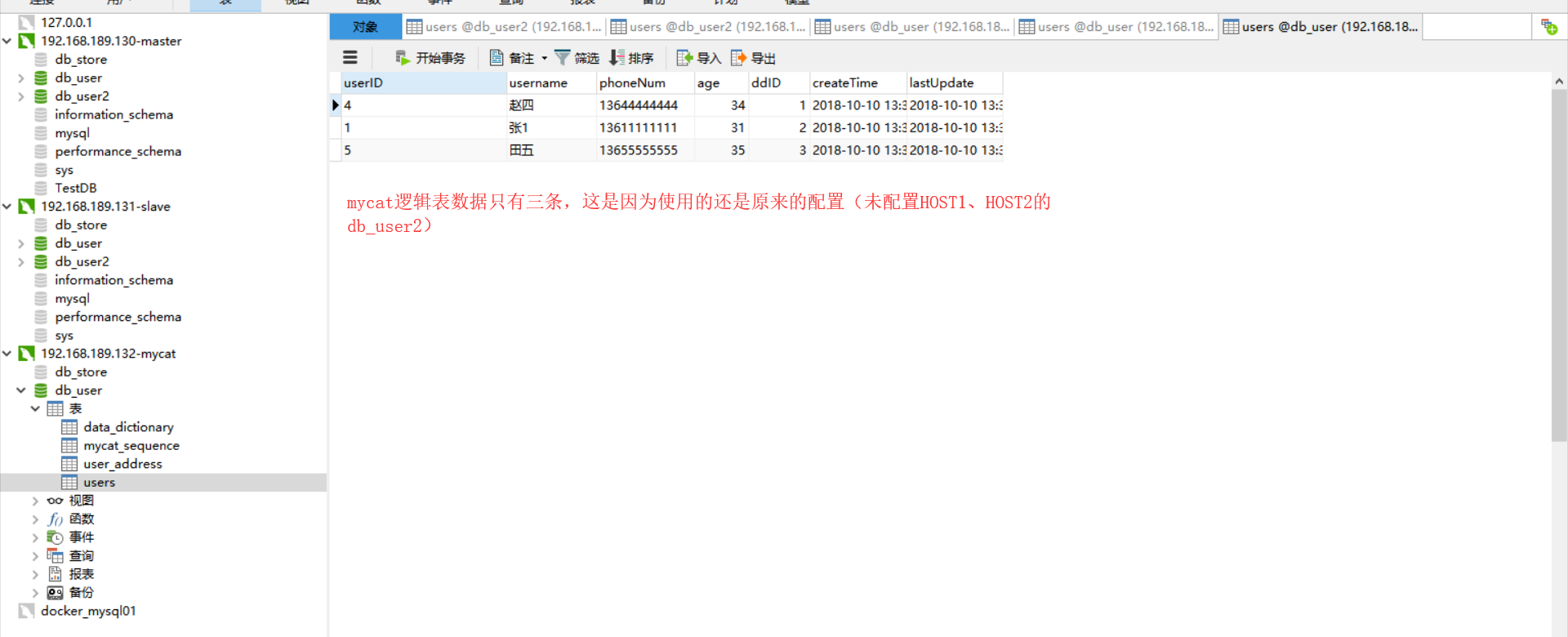

扩容前:

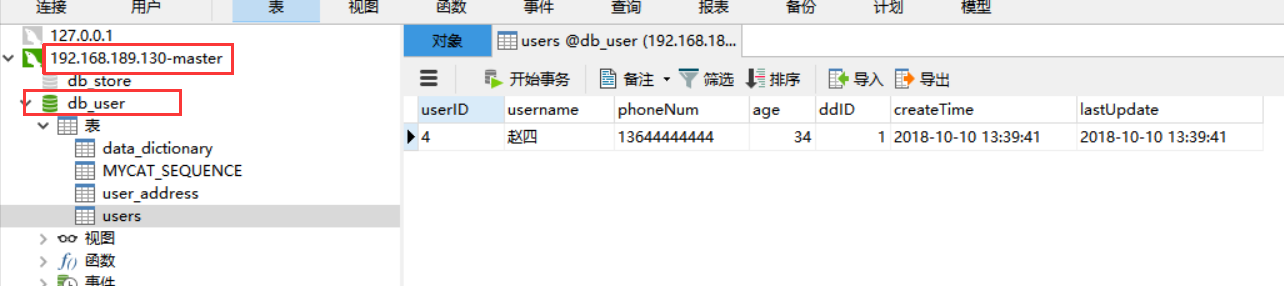

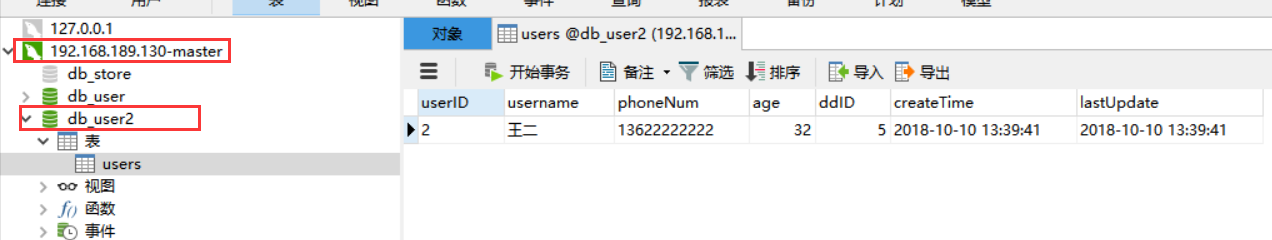

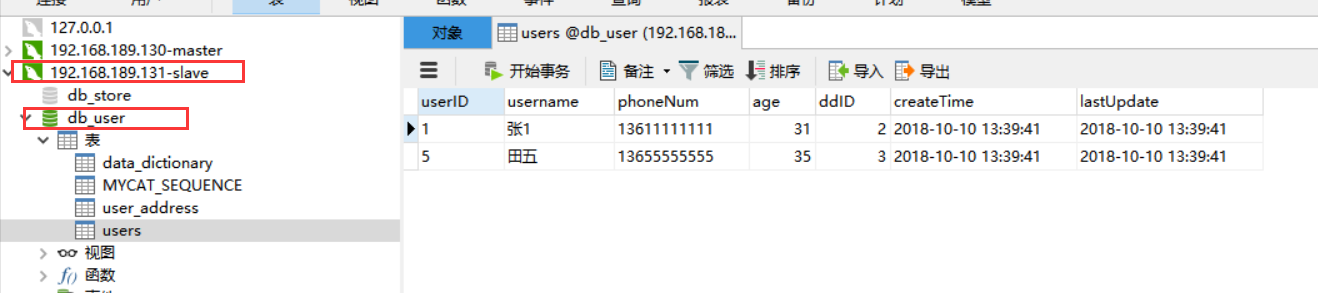

扩容后:

五条数据所处位置:

1 192.168.189.131-slave db_user

2 192.168.189.130-master db_user2

3 192.168.189.131-slave db_user2

4 192.168.189.130-master db_user

5 192.168.189.131-slave db_user

8、特别注意

1,一旦执行数据是不可逆的

2,只能支持分片表的扩缩容

3,分片规则必须一致,只能节点扩或者缩

四、参考文档:

https://blog.csdn.net/wangshuang1631/article/details/69055958/

https://www.cnblogs.com/li3807/p/8461878.html

https://blog.csdn.net/Haiyang_Duan/article/details/62099225

https://blog.csdn.net/leisure_life/article/details/78612256?locationNum=3&fps=1

MYCAT扩容的更多相关文章

- Mycat 分片规则详解--数据迁移及节点扩容

使用的是 Mycat 提供的 dataMigrate 脚本进行对数据进行迁移和节点扩容,目前支持的 Mycat 是1.6 版本,由于 Mycat 是由 Java 编写的因此在做数据迁移及节点扩容时需要 ...

- Mysql系列九:使用zookeeper管理远程Mycat配置文件、Mycat监控、Mycat数据迁移(扩容)

一.使用zookeeper管理远程Mycat配置文件 环境准备: 虚拟机192.168.152.130: zookeeper,具体参考前面文章 搭建dubbo+zookeeper+dubboadmin ...

- mycat 实践扩容

实践扩容, travelrecord表定义为10个分片,尝试将10个分片中的2个分片转移到第二台MySQL上 1--定义10个分片<table name="travelrecord_t ...

- mycat入门教程

github https://github.com/MyCATApache/Mycat-Server myCat介绍 myCat的诞生,要从其前身Amoeba和Cobar说起. Amoeba(变形虫) ...

- Mycat配置及使用详解.

首先我们来看下什么是Mycat:MyCat:开源分布式数据库中间件, 这里定义的很简单, 就是分布式数据库的中间件. 其实Mycat 是可以时mysql进行集群的中间件, 我们可以对mysql来分库分 ...

- MYCAT介绍(转)

从定义和分类来看,它是一个开源的分布式数据库系统,是一个实现了MySQL协议的服务器,前端用户可以把它看作是一个数据库代理,用MySQL客户端工具和命令行访问,而其后端可以用MySQL原生协议与多个M ...

- mycat初探

1:安装客户端 yum install mysql 2:安装服务端 yum install mysql-server 3:mycat要求不区分大小写 my.cnf(/etc/my.cnf)的[mysq ...

- [项目构建 十四]babasport Mycat配置及使用详解.

首先我们来看下什么是Mycat:MyCat:开源分布式数据库中间件, 这里定义的很简单, 就是分布式数据库的中间件. 其实Mycat 是可以时mysql进行集群的中间件, 我们可以对mysql来分库分 ...

- mycat实例(2)

全局序列号 数据切分后,原有的关系数据库中的主键约束在分布式条件下将无法使用,因此需要引入外部机制保证数据唯一性标识,这种保证全局性的数据唯一标识的机制就是全局序列号(sequence). 1. 本地 ...

随机推荐

- 后端返回值以json的格式返回,前端以json格式接收

以随便一个类为例子:这个例子是查询企业主营类别前5事项 一.以json数组的格式返回到前端中 (1)后端将结果绑定到param中,然后将结果以为json数组的格式返回到前端 /** * 查询企业主营类 ...

- An internal error has occurred. Java heap space

http://stackoverflow.com/questions/11001252/running-out-of-heap-space issue: I am having a heap spac ...

- [转帖].NET Core 2.0 是您的最好选择吗?

.NET Core 2.0 是您的最好选择吗? https://www.cnblogs.com/vipyoumay/p/7388371.html 1. NET Core 2.0 是您的最好选择吗? 1 ...

- msyql sql语句收集

在不断的学习中,发现了一些新的slq语句,总结于此. 一.复制数据表的sql 1)create table tableName as select * from tableName2 ...

- 堆排序获取TopN

package com.zjl.tool.sort; /** * 求前面的最大K个 解决方案:小根堆 (数据量比较大(特别是大到内存不可以容纳)时,偏向于采用堆) * @author 张恩备 * @d ...

- MES方向准备

与WMS系统对接,实现MES系统中原材料入库.采购退货.生产订单.原材料出库.成品入库单.完工报告.成品出库单.成品入库单.成品出库单.标准出货.出货计划等数据与单据作业数据同步. 车间立体仓库.AG ...

- PS(光影魔术手)

完成

- [转] python 模块学习 hashlib

转自: http://www.cnblogs.com/BeginMan/p/3328172.html 一.hashlib概述 涉及加密服务:14. Cryptographic Services 其中 ...

- PostgreSQL、SQL Server数据库中的数据类型的映射关系

PostgreSQL 8.1 轰动发布,我也打算将原来使用 SQL Server 的一些应用迁移到 PostgreSQL 上,首先需要迁移的是表,那么这就必须要先搞清楚这两个数据库中的数据类型的映射关 ...

- mysql 8.0,运行springboot项目配置:

1.修改pom.xml <dependency> <groupId>mysql</groupId> <artifactId>mysql-connecto ...