[MMT] ICLR 2020: Mutual Mean-Teaching (MMT), a new state of the art for unsupervised domain adaptation on person re-ID

Original post: 小样本学习与智能前沿 (WeChat official account). Reply "200708" in the account's chat to get the slides.

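To mitigate the effect of noisy pseudo labels, the paper proposes an unsupervised Mutual Mean-Teaching (MMT) method that learns better target-domain features by alternating between off-line refined hard pseudo labels and on-line refined soft pseudo labels. It also proposes a soft softmax-triplet loss that lets the triplet loss work with soft labels. On domain adaptation tasks, the method clearly outperforms all existing person re-ID methods, with improvements of up to 18.2%.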


ABSTRACT

Do What

In order to mitigate the effects of noisy pseudo labels:

- We propose to softly refine the pseudo labels in the target domain with an unsupervised framework, Mutual Mean-Teaching (MMT), which learns better features from the target domain via off-line refined hard pseudo labels and on-line refined soft pseudo labels in an alternating training manner.

- The common practice is to adopt both the classification loss and the triplet loss jointly to achieve optimal performance in person re-ID models. However, the conventional triplet loss cannot work with softly refined labels. To solve this problem, a novel soft softmax-triplet loss is proposed to support learning with soft pseudo triplet labels and achieve the optimal domain adaptation performance.

Results: The proposed MMT framework achieves considerable improvements of 14.4%, 18.2%, 13.4% and 16.4% mAP on Market-to-Duke, Duke-to-Market, Market-to-MSMT and Duke-to-MSMT unsupervised domain adaptation tasks.

1 INTRODUCTION

State-of-the-art UDA methods (Song et al., 2018; Zhang et al., 2019b; Yang et al., 2019) for person re-ID group unannotated images with clustering algorithms and train the network with clustering-generated pseudo labels.

Conclusion 1

Refining noisy pseudo labels has a crucial influence on the final performance, but it is mostly ignored by clustering-based UDA methods.

To effectively address the problem of noisy pseudo labels in clustering-based UDA methods (Song et al., 2018; Zhang et al., 2019b; Yang et al., 2019) (Figure 1), we propose an unsupervised Mutual Mean-Teaching (MMT) framework to effectively perform pseudo label refinery by optimizing the neural networks under the joint supervisions of off-line refined hard pseudo labels and on-line refined soft pseudo labels.

Specifically, our proposed MMT framework provides robust soft pseudo labels in an on-line peer-teaching manner, which is inspired by the teacher-student approaches (Tarvainen & Valpola, 2017; Zhang et al., 2018b) and trains two identical networks simultaneously. The networks gradually capture target-domain data distributions and thus refine pseudo labels for better feature learning.

To avoid training error amplification, the temporally average model of each network is proposed to produce reliable soft labels for supervising the other network in a collaborative training strategy.

By training peer-networks with such on-line soft pseudo labels on the target domain, the learned feature representations can be iteratively improved to provide more accurate soft pseudo labels, which, in turn, further improves the discriminativeness of learned feature representations.

However, the conventional triplet loss (Hermans et al., 2017) cannot work with such refined soft labels. To enable using the triplet loss with soft pseudo labels in our MMT framework, we propose a novel soft softmax-triplet loss so that the network can benefit from softly refined triplet labels.

The introduction of such soft softmax-triplet loss is also the key to the superior performance of our proposed framework. Note that the collaborative training strategy on the two networks is only adopted in the training process. Only one network is kept in the inference stage without requiring any additional computational or memory cost.

The contributions of this paper are three-fold.

- The proposed Mutual Mean-Teaching (MMT) framework is designed to provide more reliable soft labels.

- We propose the soft softmax-triplet loss to learn more discriminative person features.

- The MMT framework shows exceptionally strong performance on all UDA tasks of person re-ID.

2 RELATED WORK

Unsupervised domain adaptation (UDA) for person re-ID.

Generic domain adaptation methods for close-set recognition.

Teacher-student models.

Generic methods for handling noisy labels

3 PROPOSED APPROACH

Our key idea is to conduct pseudo label refinery in the target domain by optimizing the neural networks with off-line refined hard pseudo labels and on-line refined soft pseudo labels in a collaborative training manner.

In addition, the conventional triplet loss cannot properly work with soft labels. A novel soft softmax-triplet loss is therefore introduced to better utilize the softly refined pseudo labels.

Both the soft classification loss and the soft softmax-triplet loss work jointly to achieve optimal domain adaptation performances.

Formally, we denote the source domain data as \(D_s = \left\{(x^s_i,y^s_i )|^{N_s}_{i=1}\right\}\), where \(x^s_i\) and \(y^s_i\) denote the i-th training sample and its associated person identity label, \(N_s\) is the number of images, and \(M_s\) denotes the number of person identities (classes) in the source domain. The \(N_t\) target-domain images are denoted as \(D_t = \left\{x^t_i|^{N_t}_{i=1}\right\}\), which are not associated with any ground-truth identity label.

3.1 CLUSTERING-BASED UDA METHODS REVISIT

State-of-the-art UDA methods generally pre-train a deep neural network \(F(·|θ)\) on the source domain, where \(θ\) denotes the current network parameters, and the network is then transferred to learn from the images in the target domain.

The source-domain images’ and target-domain images’ features encoded by the network are denoted as \(\left\{F(x^s_i|θ)\right\}|^{N_s}_{ i=1}\) and \(\left\{F(x^t_i|θ)\right\}|^{N_t}_{ i=1}\) respectively.

As illustrated in Figure 2 (a), two operations are alternated to gradually fine-tune the pre-trained network on the target domain.

- The target-domain samples are grouped into pre-defined \(M_t\) classes by clustering the features \(\left\{F(x^t_i|θ)\right\}|^{N_t}_{ i=1}\) output by the current network. Let \(\hat{h^t_i}\) denote the pseudo label generated for image \(x^t_i\).

- The network parameters \(θ\) and a learnable target-domain classifier \(C^t : f^t →\left\{1,··· ,M_t\right\}\) are then optimized with respect to an identity classification (cross-entropy) loss \(L^t_{id}(θ)\) and a triplet loss (Hermans et al., 2017) \(L^t_{tri}(θ)\) in the form of

\[L^t_{id}(θ) = \frac{1}{N_t}\sum_{i=1}^{N_t} L_{ce}\left(C^t(F(x^t_i|θ)), \hat{h^t_i}\right),\]

\[L^t_{tri}(θ) = \frac{1}{N_t}\sum_{i=1}^{N_t} \max\left(0, ||F(x^t_i|θ) - F(x^t_{i,p}|θ)|| + m - ||F(x^t_i|θ) - F(x^t_{i,n}|θ)||\right),\]

where \(L_{ce}\) denotes the cross-entropy loss, ||·|| denotes the L2-norm distance, subscripts i,p and i,n indicate the hardest positive and hardest negative feature index in each mini-batch for the sample \(x^t_i\), and m = 0.5 denotes the triplet distance margin.
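The batch-hard triplet loss described above can be sketched in plain Python. This is a minimal illustration rather than the authors' implementation: the function names are made up for this note, features are plain lists of floats, and samples without a positive or a negative in the batch are simply skipped (an assumption).

```python
import math


def l2_distance(a, b):
    """Euclidean (L2) distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def batch_hard_triplet_loss(features, labels, margin=0.5):
    """Mean over the batch of max(0, d(hardest positive) + margin - d(hardest negative)).

    `labels` are the (pseudo) identity labels of the mini-batch samples.
    """
    losses = []
    for i, (f_i, y_i) in enumerate(zip(features, labels)):
        pos = [l2_distance(f_i, f_j)
               for j, (f_j, y_j) in enumerate(zip(features, labels))
               if j != i and y_j == y_i]
        neg = [l2_distance(f_i, f_j)
               for f_j, y_j in zip(features, labels)
               if y_j != y_i]
        if not pos or not neg:
            continue  # assumption: skip anchors without a valid triplet
        hardest_pos = max(pos)  # farthest sample with the same label
        hardest_neg = min(neg)  # closest sample with a different label
        losses.append(max(0.0, hardest_pos + margin - hardest_neg))
    return sum(losses) / len(losses) if losses else 0.0
```

With well-separated clusters the loss is zero; when identities interleave in feature space, every anchor contributes a positive term.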

3.2 MUTUAL MEAN-TEACHING (MMT) FRAMEWORK

3.2.1 SUPERVISED PRE-TRAINING FOR SOURCE DOMAIN

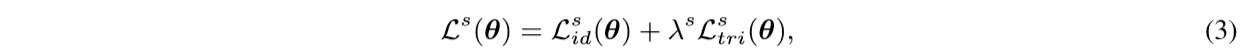

The neural network is trained with a classification loss \(L^s_{id}(θ)\) and a triplet loss \(L^s_{tri}(θ)\) to separate features belonging to different identities. The overall loss is therefore calculated as

\[L^s(θ) = L^s_{id}(θ) + \lambda^s L^s_{tri}(θ),\]

where \(\lambda^s\) is the parameter weighting the two losses.

3.2.2 PSEUDO LABEL REFINERY WITH ON-LINE REFINED SOFT PSEUDO LABELS

The clustering-based pipeline uses off-line refined hard pseudo labels as introduced in Section 3.1, where pseudo label generation and refinement are conducted alternately. However, the pseudo labels generated in this way are hard (i.e., they are always of 100% confidence) but noisy.

Our framework further incorporates on-line refined soft pseudo labels (i.e., pseudo labels with < 100% confidence) into the training process.

Our MMT framework generates soft pseudo labels by collaboratively training two identical networks with different initializations. The overall framework is illustrated in Figure 2 (b).

Our two collaborative networks also generate on-line soft pseudo labels from their network predictions for training each other.

To prevent the two networks from collaboratively biasing each other, the past temporally average model of each network, instead of the current model, is used to generate the soft pseudo labels for the other network. Both off-line hard pseudo labels and on-line soft pseudo labels are utilized jointly to train the two collaborative networks. After training, only the one past average model with the better validated performance is adopted for inference (see Figure 2 (c)).

We denote the two collaborative networks as feature transformation functions \(F(·|θ_1)\) and \(F(·|θ_2)\), and denote their corresponding pseudo label classifiers as \(C^t _1\) and \(C^t_2\), respectively.

We feed the same image batch to the two networks, but with random erasing, cropping and flipping applied separately for each network.

Each target-domain image can be denoted by \(x^t_i\) and \(x'^t_i\) for the two networks, and their pseudo label confidences can be predicted as \(C^t_1(F(x^t_i|θ_1))\) and \(C^t _2(F(x'^t_i|θ_2))\).

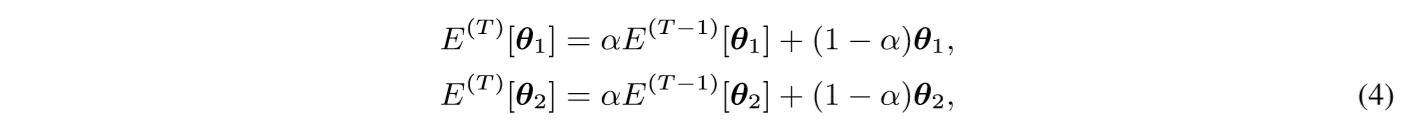

In order to avoid error amplification, we propose to use the temporally average model of each network to generate reliable soft pseudo labels for supervising the other network.

Specifically, the parameters of the temporally average models of the two networks at the current iteration T are denoted as \(E^{(T)}[θ_1]\) and \(E^{(T)}[θ_2]\) respectively, which can be calculated as

\[E^{(T)}[θ_1] = αE^{(T-1)}[θ_1] + (1-α)θ_1,\]

\[E^{(T)}[θ_2] = αE^{(T-1)}[θ_2] + (1-α)θ_2,\]

where \(E^{(T-1)}[θ_1]\), \(E^{(T-1)}[θ_2]\) indicate the temporal average parameters of the two networks in the previous iteration (T−1), the initial temporal average parameters are \(E^{(0)}[θ_1] = θ_1\), \(E^{(0)}[θ_2] = θ_2\), and α is the ensembling momentum within the range [0,1).
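The temporal averaging described above is an exponential moving average of the network weights. A minimal sketch, assuming the parameters are flattened into a list of floats; `update_mean_teacher` is a name chosen for this note and the momentum values used below are illustrative only:

```python
def update_mean_teacher(mean_params, params, alpha):
    """One temporal-average step: E[theta] <- alpha * E[theta] + (1 - alpha) * theta."""
    if not 0.0 <= alpha < 1.0:
        raise ValueError("alpha must lie in [0, 1)")
    return [alpha * m + (1.0 - alpha) * p for m, p in zip(mean_params, params)]


# Usage: the average model starts as a copy of the network (E^(0)[theta] = theta)
# and is refreshed after every optimizer step on the current parameters.
theta = [1.0, 2.0]
mean_theta = list(theta)
for step in range(3):
    theta = [p + 1.0 for p in theta]  # stand-in for a gradient update
    mean_theta = update_mean_teacher(mean_theta, theta, alpha=0.9)
```

A momentum close to 1 makes the average model change slowly, which is what smooths out the noise of individual iterations.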

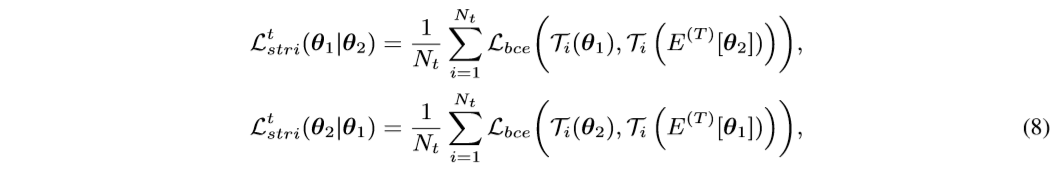

The robust soft pseudo label supervisions are then generated by the two temporal average models as \(C^t_1(F(x^t_i|E^{(T)}[θ_1]))\) and \(C^t_2(F(x'^t_i|E^{(T)}[θ_2]))\) respectively. The soft classification loss for optimizing \(θ_1\) and \(θ_2\) with the soft pseudo labels generated from the other network can therefore be formulated as:

\[L^t_{sid}(θ_1|θ_2) = -\frac{1}{N_t}\sum_{i=1}^{N_t} C^t_2(F(x'^t_i|E^{(T)}[θ_2])) \cdot \log C^t_1(F(x^t_i|θ_1)),\]

\[L^t_{sid}(θ_2|θ_1) = -\frac{1}{N_t}\sum_{i=1}^{N_t} C^t_1(F(x^t_i|E^{(T)}[θ_1])) \cdot \log C^t_2(F(x'^t_i|θ_2)).\]

The two networks’ pseudo-label predictions are better decorrelated by using the other network’s past average model to generate supervisions, and can therefore better avoid error amplification.
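The soft classification loss is a cross-entropy in which the target is the peer mean model's predicted class distribution rather than a one-hot label. The sketch below assumes both networks output raw logits over the pseudo identities; the helper names are illustrative and not taken from the authors' code.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - lse for z in logits]


def softmax(logits):
    return [math.exp(lp) for lp in log_softmax(logits)]


def soft_cross_entropy(student_logits, teacher_logits):
    """Cross-entropy of the student's prediction against the teacher's soft label.

    `teacher_logits` would come from the other network's temporal average model.
    """
    teacher_probs = softmax(teacher_logits)
    student_log_probs = log_softmax(student_logits)
    return -sum(t * lp for t, lp in zip(teacher_probs, student_log_probs))
```

The loss is small when the student agrees with the teacher's distribution and grows when the student puts its mass on a different identity.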

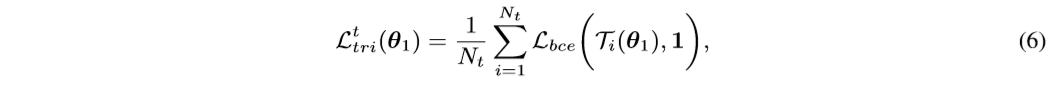

We propose to use the softmax-triplet loss, whose hard version is formulated as:

\[L^t_{tri}(θ) = \frac{1}{N_t}\sum_{i=1}^{N_t} L_{bce}\left(T_i(θ), 1\right),\]

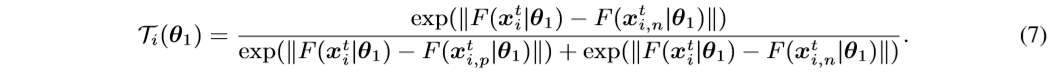

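where

\[T_i(θ) = \frac{\exp\left(||F(x^t_i|θ) - F(x^t_{i,n}|θ)||\right)}{\exp\left(||F(x^t_i|θ) - F(x^t_{i,p}|θ)||\right) + \exp\left(||F(x^t_i|θ) - F(x^t_{i,n}|θ)||\right)}.\]

Here \(L_{bce}(·,·)\) denotes the binary cross-entropy loss. “1” denotes the ground-truth that the positive sample \(x^t_{i,p}\) should be closer to the sample \(x^t_i\) than its negative sample \(x^t_{i,n}\).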

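We can then utilize one network’s past temporal average model to generate soft triplet labels for the other network with the proposed soft softmax-triplet loss:

\[L^t_{stri}(θ_1|θ_2) = \frac{1}{N_t}\sum_{i=1}^{N_t} L_{bce}\left(T_i(θ_1), T_i(E^{(T)}[θ_2])\right),\]

\[L^t_{stri}(θ_2|θ_1) = \frac{1}{N_t}\sum_{i=1}^{N_t} L_{bce}\left(T_i(θ_2), T_i(E^{(T)}[θ_1])\right),\]

where \(T_i(E^{(T)}[θ_1])\) and \(T_i(E^{(T)}[θ_2])\) are the soft triplet labels generated by the two networks’ past temporally average models.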
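A small sketch of the hard and soft softmax-triplet losses for a single anchor. It assumes the triplet score is the softmax of the anchor-negative distance against the anchor-positive distance, so that a score near 1 means the positive is much closer than the negative; the function names and the clipping constant are choices made for this note.

```python
import math


def softmax_triplet(d_pos, d_neg):
    """exp(d_neg) / (exp(d_pos) + exp(d_neg)), written as a sigmoid of d_neg - d_pos."""
    return 1.0 / (1.0 + math.exp(d_pos - d_neg))


def bce(pred, target, eps=1e-12):
    """Binary cross-entropy between a predicted probability and a (soft) target."""
    pred = min(max(pred, eps), 1.0 - eps)  # clip to keep log() finite
    return -(target * math.log(pred) + (1.0 - target) * math.log(1.0 - pred))


def hard_softmax_triplet_loss(d_pos, d_neg):
    """Hard version: the target is always 1 (positive should be closer)."""
    return bce(softmax_triplet(d_pos, d_neg), 1.0)


def soft_softmax_triplet_loss(d_pos, d_neg, teacher_d_pos, teacher_d_neg):
    """Soft version: the target is the triplet score of the peer's average model."""
    target = softmax_triplet(teacher_d_pos, teacher_d_neg)
    return bce(softmax_triplet(d_pos, d_neg), target)
```

Because the target is a probability rather than a fixed margin decision, the teacher can express how confident it is that the positive really is closer, which a conventional margin-based triplet loss cannot encode.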

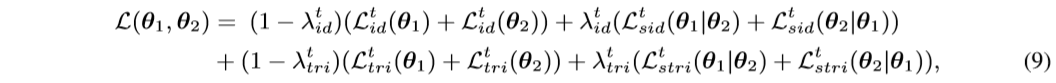

3.2.3 OVERALL LOSS AND ALGORITHM

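Our proposed MMT framework is trained with both off-line refined hard pseudo labels and on-line refined soft pseudo labels. The overall loss function \(L(θ_1,θ_2)\) simultaneously optimizes the coupled networks, which combines equation 1, equation 5, equation 6, equation 8 and is formulated as

\[L(θ_1,θ_2) = (1-λ^t_{id})\left(L^t_{id}(θ_1) + L^t_{id}(θ_2)\right) + λ^t_{id}\left(L^t_{sid}(θ_1|θ_2) + L^t_{sid}(θ_2|θ_1)\right) + (1-λ^t_{tri})\left(L^t_{tri}(θ_1) + L^t_{tri}(θ_2)\right) + λ^t_{tri}\left(L^t_{stri}(θ_1|θ_2) + L^t_{stri}(θ_2|θ_1)\right),\]

where \(λ^t_{id}\), \(λ^t_{tri}\) are the weighting parameters.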
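The weighting can be sketched as below, under the assumption that each weight interpolates between the hard term and its soft counterpart. That assumption matches the ablation settings in Section 4.4, where setting both weights to 0 leaves only the hard losses and setting them to 1 leaves only the soft losses. The function name and the pair-of-values calling convention are made up for this note.

```python
def mmt_overall_loss(hard_id, soft_id, hard_tri, soft_tri, lam_id, lam_tri):
    """Combine the four loss types of the two peer networks.

    Each loss argument is a pair: (value for network 1, value for network 2).
    lam_id and lam_tri weight the soft terms; (1 - lam) weights the hard terms.
    """
    return ((1.0 - lam_id) * sum(hard_id) + lam_id * sum(soft_id)
            + (1.0 - lam_tri) * sum(hard_tri) + lam_tri * sum(soft_tri))


# Ablation-style settings with placeholder loss values:
hard_only = mmt_overall_loss((1.0, 2.0), (10.0, 20.0), (3.0, 4.0), (30.0, 40.0), 0.0, 0.0)
soft_only = mmt_overall_loss((1.0, 2.0), (10.0, 20.0), (3.0, 4.0), (30.0, 40.0), 1.0, 1.0)
```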

The detailed optimization procedures are summarized in Algorithm 1.

The hard pseudo labels are off-line refined after training with existing hard pseudo labels for one epoch. During the training process, the two networks are trained by combining the off-line refined hard pseudo labels and on-line refined soft labels predicted by their peers with the proposed soft losses. The noise and randomness caused by hard clustering, which lead to unstable training and limited final performance, can be alleviated by the proposed MMT framework.

4 EXPERIMENTS

4.1 DATASETS

We evaluate our proposed MMT on three widely-used person re-ID datasets, i.e., Market-1501 (Zheng et al., 2015), DukeMTMC-reID (Ristani et al., 2016), and MSMT17 (Wei et al., 2018).

For evaluating the domain adaptation performance of different methods, four domain adaptation tasks are set up, i.e., Duke-to-Market, Market-to-Duke, Duke-to-MSMT and Market-to-MSMT, where only identity labels on the source domain are provided. Mean average precision (mAP) and CMC top-1, top-5, top-10 accuracies are adopted to evaluate the methods’ performances.
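For readers unfamiliar with these metrics, here is a simplified sketch of how mAP and CMC top-k can be computed from ranked gallery lists. Each query is represented by a list of booleans, ordered by ascending feature distance, marking whether each gallery image shares the query identity. Real re-ID evaluation protocols also filter out gallery images from the same camera as the query, which is omitted here; all names are illustrative.

```python
def average_precision(ranked_matches):
    """AP of one query: mean of precision@rank over the ranks of correct matches."""
    hits = 0
    precisions = []
    for rank, is_match in enumerate(ranked_matches, start=1):
        if is_match:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def cmc_hit(ranked_matches, k):
    """1.0 if a correct match appears within the top-k results, else 0.0."""
    return 1.0 if any(ranked_matches[:k]) else 0.0


def evaluate(all_ranked_matches, ks=(1, 5, 10)):
    """Return (mAP, {k: CMC top-k accuracy}) averaged over all queries."""
    n = len(all_ranked_matches)
    mean_ap = sum(average_precision(r) for r in all_ranked_matches) / n
    cmc = {k: sum(cmc_hit(r, k) for r in all_ranked_matches) / n for k in ks}
    return mean_ap, cmc
```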

4.2 IMPLEMENTATION DETAILS

4.2.1 TRAINING DATA ORGANIZATION

For both source-domain pre-training and target-domain fine-tuning, each training mini-batch contains 64 person images of 16 actual or pseudo identities (4 for each identity).
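The 16 × 4 batch layout can be sketched with a simple identity-balanced sampler. This is an illustration under stated assumptions, not the authors' data loader: `sample_pk_batch` is a made-up name, `labels` holds one actual or pseudo identity per training image, and identities with fewer than four images are sampled with replacement.

```python
import random
from collections import defaultdict


def sample_pk_batch(labels, num_ids=16, num_instances=4, rng=None):
    """Return image indices for one mini-batch: num_ids identities x num_instances images."""
    rng = rng or random.Random()
    by_id = defaultdict(list)
    for idx, label in enumerate(labels):
        by_id[label].append(idx)
    if len(by_id) < num_ids:
        raise ValueError("not enough identities for one batch")
    batch = []
    for pid in rng.sample(sorted(by_id), num_ids):
        pool = by_id[pid]
        if len(pool) >= num_instances:
            batch.extend(rng.sample(pool, num_instances))
        else:
            # assumption: repeat images when an identity has too few of them
            batch.extend(rng.choices(pool, k=num_instances))
    return batch
```

Guaranteeing several images per identity in every batch is what makes it possible to find a hardest positive and a hardest negative for each sample when computing the triplet loss.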

All images are resized to 256×128 before being fed into the networks.

4.2.2 OPTIMIZATION DETAILS

All the hyper-parameters of the proposed MMT framework are chosen based on a validation set of the Duke-to-Market task with \(M_t\) = 500 pseudo identities and IBN-ResNet-50 backbone.

We propose a two-stage training scheme, where the ADAM optimizer is adopted to optimize the networks with a weight decay of 0.0005. Random erasing (Zhong et al., 2017b) is only adopted in target-domain fine-tuning.

- Stage 1: Source-domain pre-training.

- Stage 2: End-to-end training with MMT.

4.3 COMPARISON WITH STATE-OF-THE-ARTS

The results are shown in Table 1. Our MMT framework significantly outperforms all existing approaches with both ResNet-50 and IBN-ResNet-50 backbones, which verifies the effectiveness of our method.

Such results prove the necessity and effectiveness of our proposed pseudo label refinery for hard pseudo labels with inevitable noises.

Co-teaching (Han et al., 2018) is designed for general close-set recognition problems with manually generated label noise, which could not tackle the real-world challenges in unsupervised person re-ID. More importantly, it does not explore how to mitigate the label noise for the triplet loss as our method does.

4.4 ABLATION STUDIES

In this section, we evaluate each component in our proposed framework by conducting ablation studies on Duke-to-Market and Market-to-Duke tasks with both ResNet-50 (He et al., 2016) and IBN-ResNet-50 (Pan et al., 2018) backbones. Results are shown in Table 2.

Effectiveness of the soft pseudo label refinery.

To investigate the necessity of handling noisy pseudo labels in clustering-based UDA methods, we create baseline models that utilize only off-line refined hard pseudo labels, i.e., optimizing equation 9 with \(λ^t_{id} = λ^t_{tri} = 0\) for the two-step training strategy in Section 3.1.

The baseline model performances are presented in Table 2 as “Baseline (only \(L^t_{id}\) & \(L^t_{tri}\))”.

Effectiveness of the soft softmax-triplet loss.

We also verify the effectiveness of the soft softmax-triplet loss with softly refined triplet labels in our proposed MMT framework.

Experiments that remove the soft softmax-triplet loss, i.e., \(λ^t_{tri} = 0\) in equation 9, but keep the hard softmax-triplet loss (equation 6) are conducted, and are denoted as “Baseline+MMT-500 (w/o \(L^t_{stri}\))”.

Effectiveness of Mutual Mean-Teaching.

We propose to generate on-line refined soft pseudo labels for one network with the predictions of the past average model of the other network in our MMT framework, i.e., the soft labels for network 1 are output from the average model of network 2 and vice versa.

Necessity of hard pseudo labels in proposed MMT.

To investigate the contribution of \(L^t_{id}\) in the final training objective function as equation 9, we conduct two experiments.

- (1) “Baseline+MMT-500 (only \(L^t_{sid}\) & \(L^t_{stri}\))” by removing both hard classification loss and hard triplet loss with \(λ^t_{id} = λ^t_{tri} = 1\);

- (2) “Baseline+MMT-500 (w/o \(L^t_{id}\))” by removing only hard classification loss with \(λ^t_{id} = 1\).

5 CONCLUSION

In this work, we propose an unsupervised Mutual Mean-Teaching (MMT) framework to tackle the problem of noisy pseudo labels in clustering-based unsupervised domain adaptation methods for person re-ID. The key is to conduct pseudo label refinery to better model inter-sample relations in the target domain by optimizing with the off-line refined hard pseudo labels and on-line refined soft pseudo labels in a collaborative training manner. Moreover, a novel soft softmax-triplet loss is proposed to support learning with softly refined triplet labels for optimal performances. Our method significantly outperforms all existing person re-ID methods on domain adaptation task with up to 18.2% improvements.
