HBase API operations

Create a Maven project and set the project's JDK version.

Add the required jar dependencies to the pom file:

```xml
<dependencies>
    <dependency>
        <groupId>jdk.tools</groupId>
        <artifactId>jdk.tools</artifactId>
        <version>1.8</version>
        <scope>system</scope>
        <systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>
    </dependency>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>3.8.1</version>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-it</artifactId>
        <version>1.2.5</version>
        <type>pom</type>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>2.6.1</version>
    </dependency>
</dependencies>
```
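The `jdk.tools` dependency is system-scoped: Maven reads it directly from `${JAVA_HOME}/lib/tools.jar` instead of downloading it from a repository. That file ships only with JDK 8 and earlier (it was removed in JDK 9), and only inside a JDK, not a standalone JRE, so a `JAVA_HOME` pointing at the wrong installation is a common reason Maven reports the `jdk.tools` artifact as missing. The stdlib-only sketch below checks that path; `ToolsJarCheck` and `hasToolsJar` are hypothetical helper names, not part of Maven or HBase.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class ToolsJarCheck {

    // True if <javaHome>/lib/tools.jar exists, i.e. the file that
    // <systemPath>${JAVA_HOME}/lib/tools.jar</systemPath> resolves to.
    static boolean hasToolsJar(String javaHome) {
        if (javaHome == null || javaHome.isEmpty()) {
            return false;
        }
        Path toolsJar = Paths.get(javaHome, "lib", "tools.jar");
        return Files.isRegularFile(toolsJar);
    }

    public static void main(String[] args) {
        String javaHome = System.getenv("JAVA_HOME");
        System.out.println("JAVA_HOME = " + javaHome);
        System.out.println("java.specification.version = "
                + System.getProperty("java.specification.version"));
        if (hasToolsJar(javaHome)) {
            System.out.println("tools.jar found: the jdk.tools dependency should resolve");
        } else {
            System.out.println("tools.jar not found: point JAVA_HOME at a JDK 8 installation");
        }
    }
}
```

Run it with the same environment Maven uses; if it reports that `tools.jar` is missing, fix `JAVA_HOME` before rebuilding the project.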

```java
import java.io.IOException;
import java.util.ArrayList;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HBaseAdmin;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.util.Bytes;

public class CreateTableTest {

    static Configuration conf = HBaseConfiguration.create(); // reads the HBase configuration files
    static HBaseAdmin admin = null;                          // performs administrative operations

    public static void main(String[] args) throws Exception {
        admin = new HBaseAdmin(conf);
        // createTable();
        // listTable();
        // deleteTable();
        // listTable();
        // putData();
        // scanTable();
        getData();
        // putaLots();
        admin.close();
    }

    // Create a table
    public static void createTable() throws IOException {
        // Table descriptor
        HTableDescriptor table = new HTableDescriptor(TableName.valueOf("javaemp1"));
        // Column families
        table.addFamily(new HColumnDescriptor("personal"));
        table.addFamily(new HColumnDescriptor("professional"));
        admin.createTable(table);
        System.out.println("create table finished");
    }

    // List all tables
    public static void listTable() throws IOException {
        HTableDescriptor[] tableList = admin.listTables();
        for (int i = 0; i < tableList.length; i++) {
            System.out.println(tableList[i].getNameAsString());
        }
    }

    // Delete a table, or drop / add a column family
    public static void deleteTable() throws IOException {
        // A table must be disabled before it can be deleted
        // admin.disableTable("javaemp1");
        // admin.deleteTable("javaemp1");
        // System.out.println("delete finished");

        // Delete one column family from the table
        // admin.deleteColumn("javaemp", "professional");
        // System.out.println("delete column");

        // Add a column family
        admin.addColumn("javaemp", new HColumnDescriptor("professional"));
        System.out.println("add column");
    }

    //----------------------------------------------------------------------

    // Insert data
    public static void putData() throws IOException {
        // HTable operates on a single table; arguments: configuration object, table name
        HTable table = new HTable(conf, "javaemp");
        Put p = new Put(Bytes.toBytes("1001")); // instantiate Put for the row key to write
        Put p1 = new Put(Bytes.toBytes("1002"));
        // Arguments: column family, column qualifier, value
        p.add(Bytes.toBytes("personal"), Bytes.toBytes("name"), Bytes.toBytes("lalisa"));
        p.add(Bytes.toBytes("personal"), Bytes.toBytes("city"), Bytes.toBytes("beijing"));
        table.put(p);
        p1.add(Bytes.toBytes("professional"), Bytes.toBytes("designation"), Bytes.toBytes("it"));
        p1.add(Bytes.toBytes("professional"), Bytes.toBytes("salary"), Bytes.toBytes("16010"));
        table.put(p1);
        System.out.println("put data finished");
        table.close(); // release the HTable's resources
    }

    // Batch insert
    public static void putaLots() throws IOException {
        HTable table = new HTable(conf, "javaemp");
        ArrayList<Put> list = new ArrayList<Put>(10);
        for (int i = 0; i < 10; i++) {
            Put put = new Put(Bytes.toBytes("row" + i));
            put.add(Bytes.toBytes("personal"), Bytes.toBytes("name"), Bytes.toBytes("people" + i));
            list.add(put);
        }
        table.put(list);
        System.out.println("put list finished");
        table.close(); // release the HTable's resources
    }

    // Read one row and print its data
    public static void getData() throws IOException {
        HTable table = new HTable(conf, "javaemp");
        Get get = new Get(Bytes.toBytes("1001")); // instantiate Get for row key "1001"
        Result result = table.get(get);           // fetch that row
        // Print one field of the row
        byte[] value = result.getValue(Bytes.toBytes("personal"), Bytes.toBytes("name"));
        String name = Bytes.toString(value);
        System.out.println("Name:" + name);
        // Print every cell of the row
        Cell[] cells = result.rawCells();
        for (Cell cell : cells) {
            System.out.print(Bytes.toString(CellUtil.cloneRow(cell)) + "--");
            System.out.print(Bytes.toString(CellUtil.cloneFamily(cell)) + ":");
            System.out.print(Bytes.toString(CellUtil.cloneQualifier(cell)) + "->");
            System.out.println(Bytes.toString(CellUtil.cloneValue(cell)));
        }
        table.close(); // release the HTable's resources
    }

    // Scan selected columns
    public static void scanTable() throws IOException {
        HTable table = new HTable(conf, "javaemp");
        Scan scan = new Scan(); // instantiate Scan
        scan.addColumn(Bytes.toBytes("personal"), Bytes.toBytes("name")); // one column of a family
        scan.addFamily(Bytes.toBytes("professional"));                    // a whole column family
        ResultScanner scanner = table.getScanner(scan);
        for (Result res = scanner.next(); res != null; res = scanner.next()) {
            System.out.println(res);
        }
        scanner.close();
        table.close(); // release the HTable's resources
    }

    // Count the number of row keys
    public static void count() { }

    // Shut down HBase: Admin.shutdown() stops the whole cluster, not just this client
    public static void close() throws IOException {
        admin.shutdown();
    }
}
```
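HBase keeps rows sorted by row key in unsigned, byte-by-byte lexicographic order, and a `Scan` returns them in that order. With the `"row" + i` keys used in `putaLots`, this matches numeric order only while `i < 10`; once the loop goes further, `row10` sorts between `row1` and `row2`. The stdlib-only sketch below re-implements that comparison by hand (standing in for HBase's own byte comparator, not calling it) and shows zero-padding as one common fix. `RowKeyOrder`, `compareUnsigned`, `scanOrder`, and `paddedKey` are hypothetical names chosen for illustration.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.TreeMap;

public class RowKeyOrder {

    // Unsigned, byte-by-byte lexicographic comparison: the order in which
    // HBase keeps row keys (re-implemented here so no HBase jar is needed).
    static int compareUnsigned(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int x = a[i] & 0xff;
            int y = b[i] & 0xff;
            if (x != y) {
                return x - y;
            }
        }
        return a.length - b.length; // a proper prefix sorts first
    }

    // Returns the keys in the order a full-table scan would return the rows.
    static List<String> scanOrder(List<String> keys) {
        TreeMap<byte[], String> sorted = new TreeMap<>(RowKeyOrder::compareUnsigned);
        for (String key : keys) {
            sorted.put(key.getBytes(StandardCharsets.UTF_8), key);
        }
        return new ArrayList<>(sorted.values());
    }

    // Zero-pads the numeric suffix so byte order matches numeric order.
    static String paddedKey(int i) {
        return String.format(Locale.ROOT, "row%03d", i);
    }

    public static void main(String[] args) {
        List<String> plain = new ArrayList<>();
        List<String> padded = new ArrayList<>();
        for (int i = 0; i < 12; i++) {
            plain.add("row" + i);
            padded.add(paddedKey(i));
        }
        System.out.println(scanOrder(plain));
        System.out.println(scanOrder(padded));
    }
}
```

Running `main` prints `[row0, row1, row10, row11, row2, row3, row4, row5, row6, row7, row8, row9]` for the unpadded keys and `row000` through `row011` in numeric order for the padded ones. Fixed-width keys are only one option; the point is that row-key design decides scan order.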

package com.neworigin.Work; import java.io.IOException;

import java.util.ArrayList; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.Cell;

import org.apache.hadoop.hbase.CellUtil;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.HColumnDescriptor;

import org.apache.hadoop.hbase.HTableDescriptor;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.Get;

import org.apache.hadoop.hbase.client.HBaseAdmin;

import org.apache.hadoop.hbase.client.HTable;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.client.Result;

import org.apache.hadoop.hbase.client.ResultScanner;

import org.apache.hadoop.hbase.client.Scan;

import org.apache.hadoop.hbase.util.Bytes; public class HbaseWork { static Configuration conf=HBaseConfiguration.create();

// static Connection conn= ConnectionFactory.createConnection(conf);

static HBaseAdmin admin=null;

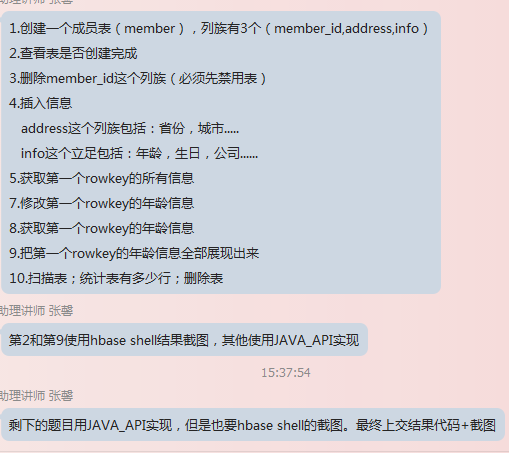

public static void createTable() throws IOException{

HTableDescriptor table=new HTableDescriptor(TableName.valueOf("member"));

table.addFamily(new HColumnDescriptor("member_id"));

table.addFamily(new HColumnDescriptor("address"));

table.addFamily(new HColumnDescriptor("info"));

admin.createTable(table);

System.out.println("create table finished");

}

public static void deletefamily() throws IOException{

// HTableDescriptor table = new HTableDescriptor(TableName.valueOf("member"));

admin.deleteColumn("member", "member_id");

System.out.println("delete");

}

public static void insertdata() throws IOException{

HTable table = new HTable(conf,"member");

ArrayList<Put> list =new ArrayList<Put>(25);

for(int i=0;i<5;i++)

{

Put put = new Put(Bytes.toBytes("row"+i));

put.add(Bytes.toBytes("address"), Bytes.toBytes("province"), Bytes.toBytes("pr"+i));

put.add(Bytes.toBytes("address"), Bytes.toBytes("city"), Bytes.toBytes("ct"+i));

put.add(Bytes.toBytes("info"), Bytes.toBytes("age"), Bytes.toBytes("2"+i));

put.add(Bytes.toBytes("info"), Bytes.toBytes("birthday"), Bytes.toBytes("data"+i));

put.add(Bytes.toBytes("info"), Bytes.toBytes("company"), Bytes.toBytes("com"+i));

list.add(put);

}

table.put(list);

}

public static void getinfo() throws IOException{

HTable table = new HTable(conf, "member");

// for(int i=0;i<5;i++)

// {

//

// }

Get get = new Get(Bytes.toBytes(("row0")));

Result result = table.get(get);

for(Cell cell: result.rawCells())

{

System.out.print(Bytes.toString(CellUtil.cloneRow(cell))+"--");

System.out.print(Bytes.toString(CellUtil.cloneFamily(cell))+":");

System.out.print(Bytes.toString(CellUtil.cloneQualifier(cell))+"->");

System.out.println(Bytes.toString(CellUtil.cloneValue(cell)));

} }

public static void alterfirstrow() throws IOException{

HTable table = new HTable(conf, "member");

Put put = new Put(Bytes.toBytes("row0"));

put.add(Bytes.toBytes("info"), Bytes.toBytes("age"), Bytes.toBytes("30"));

table.put(put);

}

public static void getage() throws IOException{

HTable table = new HTable(conf,"member");

Get get = new Get(Bytes.toBytes("row0"));

Result result = table.get(get);

byte[] bs = result.getValue(Bytes.toBytes("info"), Bytes.toBytes("age"));

String age=Bytes.toString(bs);

System.out.println("age:"+age);

}

public static void scanTable() throws IOException{

Scan scan = new Scan();

HTable table = new HTable(conf,"member");

scan.addColumn(Bytes.toBytes("address"), Bytes.toBytes("province"));

scan.addColumn(Bytes.toBytes("address"), Bytes.toBytes("city"));

scan.addColumn(Bytes.toBytes("info"), Bytes.toBytes("age"));

scan.addColumn(Bytes.toBytes("info"), Bytes.toBytes("birthday"));

scan.addColumn(Bytes.toBytes("info"), Bytes.toBytes("company"));

ResultScanner scanner = table.getScanner(scan);

for(Result res=scanner.next();res!=null;res=scanner.next())

{

System.out.println(res);

}

}

public static void countrow() throws IOException{

HTable table = new HTable(conf,"member");

Scan scan = new Scan();

ResultScanner scanner = table.getScanner(scan);

int i=0;

while(scanner.next()!=null)

{

i++;

// System.out.println(scanner.next());

}

System.out.println(i);

}

public static void delTable() throws IOException{

boolean b = admin.isTableEnabled("member");

if(b)

{

admin.disableTable("member");

}

admin.deleteTable("member");

}

public static void main(String[] args) throws IOException {

admin=new HBaseAdmin(conf);

// createTable();

// deletefamily();

// insertdata();

// alterfirstrow();

// getinfo();

// getage();

// scanTable();

// countrow();

delTable();

}

}
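In `insertdata` and `alterfirstrow` the age is written with `Bytes.toBytes(String)`, so HBase stores the UTF-8 text (`"20"`, `"30"`), which is why `getage` can read it back with `Bytes.toString`. Had the age been written as a number with `Bytes.toBytes(int)`, the cell would hold 4 big-endian bytes and would have to be read with `Bytes.toInt`; decoding it with `Bytes.toString` does not give `"30"`. The stdlib-only sketch below reproduces both encodings with `String.getBytes` and `ByteBuffer`, assuming (as HBase's `Bytes` utility does) UTF-8 for strings and big-endian byte order for `int`. `ValueEncoding` and its methods are hypothetical names, not HBase APIs.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

public class ValueEncoding {

    // Same bytes as Bytes.toBytes(String): the UTF-8 encoding of the text.
    static byte[] encodeString(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    // Same bytes as Bytes.toBytes(int): 4 bytes, big-endian (ByteBuffer's default order).
    static byte[] encodeInt(int v) {
        return ByteBuffer.allocate(4).putInt(v).array();
    }

    // Counterpart of Bytes.toString(byte[]).
    static String decodeString(byte[] b) {
        return new String(b, StandardCharsets.UTF_8);
    }

    // Counterpart of Bytes.toInt(byte[]).
    static int decodeInt(byte[] b) {
        return ByteBuffer.wrap(b).getInt();
    }

    public static void main(String[] args) {
        byte[] asText = encodeString("30");
        byte[] asInt = encodeInt(30);
        System.out.println("as text: " + asText.length + " bytes -> " + decodeString(asText));
        System.out.println("as int:  " + asInt.length + " bytes -> " + decodeInt(asInt));
        // Reading int-encoded bytes as text yields control characters, not "30"
        System.out.println("int bytes read as text equal \"30\"? " + decodeString(asInt).equals("30"));
    }
}
```

Whichever encoding is chosen, the reader and the writer of a column have to agree on it, since HBase itself stores only raw bytes.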
