Android 12 (S) Binder (Part 1)

Today I am starting to study binder, beginning with ServiceManager.

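Most blog posts on this topic cover releases before Android 11. Comparing them against the Android 11 or 12 source, much of what they describe is gone: binder.c under frameworks/native/cmds/servicemanager no longer exists, and neither do binder_loop and svcmgr_handler, while new classes such as ServiceManagerShim have appeared. The old ServiceManager called binder_open and mmap directly; now these operations go through libbinder, in the same style as every other native binder service. The change is substantial.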

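Since I have not studied binder on Android 10 or earlier, I cannot offer a precise comparison, so this post looks directly at how ServiceManager works on Android S. First, the file layout: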

a. IServiceManager is now generated from an aidl file, like any other service. The aidl file is frameworks/native/libs/binder/aidl/android/os/IServiceManager.aidl, and IServiceManager, BnServiceManager and BpServiceManager are all generated from it. The generated files land in out\soong\.intermediates\frameworks\native\libs\binder\libbinder\android_arm_armv7-a-neon_shared\gen\aidl\android\os, in the namespace android::os (used below to refer to the aidl-generated classes), and are built into libbinder.

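b. frameworks/native/libs/binder/include/binder contains another IServiceManager.h, which is entirely different from the aidl-generated interface above. This IServiceManager is the one we normally include and use, and its namespace is android::. The aidl-generated headers are included and used in frameworks/native/libs/binder/IServiceManager.cpp.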

c. frameworks/native/libs/binder/IServiceManager.cpp has a new class, ServiceManagerShim, which inherits from android::IServiceManager. It is a thin wrapper that re-wraps android::os::IServiceManager.

d. The server-side implementation of ServiceManager is in frameworks/native/cmds/servicemanager/ServiceManager.cpp.

1. How ServiceManager starts

The relevant code is in frameworks/native/cmds/servicemanager/main.cpp:

int main(int argc, char** argv) {

if (argc > 2) {

LOG(FATAL) << "usage: " << argv[0] << " [binder driver]";

}

const char* driver = argc == 2 ? argv[1] : "/dev/binder";

// Open the binder driver (open, mmap)

sp<ProcessState> ps = ProcessState::initWithDriver(driver);

ps->setThreadPoolMaxThreadCount(0);

ps->setCallRestriction(ProcessState::CallRestriction::FATAL_IF_NOT_ONEWAY);

// Instantiate ServiceManager

sp<ServiceManager> manager = sp<ServiceManager>::make(std::make_unique<Access>());

// Register ServiceManager with itself

if (!manager->addService("manager", manager, false /*allowIsolated*/, IServiceManager::DUMP_FLAG_PRIORITY_DEFAULT).isOk()) {

LOG(ERROR) << "Could not self register servicemanager";

}

// Store ServiceManager in IPCThreadState's global the_context_object

IPCThreadState::self()->setTheContextObject(manager);

// Tell the binder driver that this process is the ServiceManager

ps->becomeContextManager();

// Prepare the Looper

sp<Looper> looper = Looper::prepare(false /*allowNonCallbacks*/);

BinderCallback::setupTo(looper);

ClientCallbackCallback::setupTo(looper, manager);

// Loop forever, waiting for messages

while(true) {

looper->pollAll(-1);

}

// should not be reached

return EXIT_FAILURE;

}

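Startup breaks down into roughly five steps:

a. Open the binder driver

b. Instantiate ServiceManager and register it with itself

c. Hand ServiceManager to IPCThreadState

d. Register ServiceManager with the binder driver

e. Start the listening loop

Let's look at each step in turn:

a. Open the binder driver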

sp<ProcessState> ProcessState::initWithDriver(const char* driver)

{

return init(driver, true /*requireDefault*/);

}

sp<ProcessState> ProcessState::init(const char *driver, bool requireDefault)

{

[[clang::no_destroy]] static sp<ProcessState> gProcess;

[[clang::no_destroy]] static std::mutex gProcessMutex; if (driver == nullptr) {

std::lock_guard<std::mutex> l(gProcessMutex);

return gProcess;

} [[clang::no_destroy]] static std::once_flag gProcessOnce;

std::call_once(gProcessOnce, [&](){

if (access(driver, R_OK) == -1) {

ALOGE("Binder driver %s is unavailable. Using /dev/binder instead.", driver);

driver = "/dev/binder";

} std::lock_guard<std::mutex> l(gProcessMutex);

// Instantiate the ProcessState singleton

gProcess = sp<ProcessState>::make(driver);

}); if (requireDefault) {

// Detect if we are trying to initialize with a different driver, and

// consider that an error. ProcessState will only be initialized once above.

LOG_ALWAYS_FATAL_IF(gProcess->getDriverName() != driver,

"ProcessState was already initialized with %s,"

" can't initialize with %s.",

gProcess->getDriverName().c_str(), driver);

} return gProcess;

}

ProcessState::ProcessState(const char *driver)

: mDriverName(String8(driver))

, mDriverFD(open_driver(driver)) // open_driver opens the driver

, mVMStart(MAP_FAILED)

, mThreadCountLock(PTHREAD_MUTEX_INITIALIZER)

, mThreadCountDecrement(PTHREAD_COND_INITIALIZER)

, mExecutingThreadsCount(0)

, mWaitingForThreads(0)

, mMaxThreads(DEFAULT_MAX_BINDER_THREADS)

, mStarvationStartTimeMs(0)

, mThreadPoolStarted(false)

, mThreadPoolSeq(1)

, mCallRestriction(CallRestriction::NONE)

{

if (mDriverFD >= 0) {

// mmap the binder, providing a chunk of virtual address space to receive transactions.

// The mmap'ed virtual memory region is 1 MB minus 2 pages

mVMStart = mmap(nullptr, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);

if (mVMStart == MAP_FAILED) {

// *sigh*

ALOGE("Using %s failed: unable to mmap transaction memory.\n", mDriverName.c_str());

close(mDriverFD);

mDriverFD = -1;

mDriverName.clear();

}

}

#ifdef __ANDROID__

LOG_ALWAYS_FATAL_IF(mDriverFD < 0, "Binder driver '%s' could not be opened. Terminating.", driver);

#endif

}

static int open_driver(const char *driver)

{

// Open the binder device

int fd = open(driver, O_RDWR | O_CLOEXEC);

if (fd >= 0) {

int vers = 0;

// ioctl: query the binder protocol version

status_t result = ioctl(fd, BINDER_VERSION, &vers);

if (result == -1) {

ALOGE("Binder ioctl to obtain version failed: %s", strerror(errno));

close(fd);

fd = -1;

}

if (result != 0 || vers != BINDER_CURRENT_PROTOCOL_VERSION) {

ALOGE("Binder driver protocol(%d) does not match user space protocol(%d)! ioctl() return value: %d",

vers, BINDER_CURRENT_PROTOCOL_VERSION, result);

close(fd);

fd = -1;

}

size_t maxThreads = DEFAULT_MAX_BINDER_THREADS;

// ioctl: set the maximum number of binder threads

result = ioctl(fd, BINDER_SET_MAX_THREADS, &maxThreads);

if (result == -1) {

ALOGE("Binder ioctl to set max threads failed: %s", strerror(errno));

}

uint32_t enable = DEFAULT_ENABLE_ONEWAY_SPAM_DETECTION;

result = ioctl(fd, BINDER_ENABLE_ONEWAY_SPAM_DETECTION, &enable);

if (result == -1) {

ALOGD("Binder ioctl to enable oneway spam detection failed: %s", strerror(errno));

}

} else {

ALOGW("Opening '%s' failed: %s\n", driver, strerror(errno));

}

return fd;

}

As the code shows, ProcessState is responsible for opening the binder driver, and ioctl is then used to talk to it.
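The "initialize once, then always return the same instance" shape of ProcessState::init can be sketched in isolation. This is a minimal sketch, not the libbinder code: FakeProcessState and binderVmSize are hypothetical names, std::shared_ptr stands in for sp<>, and the size formula simply follows the "1 MB minus 2 pages" comment above.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <mutex>
#include <string>
#include <utility>

// Hypothetical stand-in for ProcessState: it only remembers the driver path.
class FakeProcessState {
public:
    explicit FakeProcessState(std::string driver) : mDriverName(std::move(driver)) {}
    const std::string& getDriverName() const { return mDriverName; }

    // Same shape as ProcessState::init(): the first caller decides which
    // driver the process-wide instance is bound to; later calls reuse it.
    static std::shared_ptr<FakeProcessState> init(const std::string& driver) {
        static std::shared_ptr<FakeProcessState> gProcess;
        static std::once_flag gProcessOnce;
        std::call_once(gProcessOnce, [&] {
            gProcess = std::make_shared<FakeProcessState>(driver);
        });
        return gProcess;
    }

private:
    std::string mDriverName;
};

// Size of the receive mapping as described in the comment: 1 MB minus 2 pages.
constexpr std::size_t binderVmSize(std::size_t pageSize) {
    return (1 * 1024 * 1024) - pageSize * 2;
}
```

With a 4096-byte page, binderVmSize yields 1040384 bytes. A second init call with a different path still returns the first instance, which is the situation the LOG_ALWAYS_FATAL_IF check in the real code guards against when requireDefault is true.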

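b. Instantiate ServiceManager and register it with itself

There is not much to say about instantiating ServiceManager, and I will look into Access another time. The focus here is what addService does: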

Status ServiceManager::addService(const std::string& name, const sp<IBinder>& binder, bool allowIsolated, int32_t dumpPriority) {

// ......

if (binder == nullptr) {

return Status::fromExceptionCode(Status::EX_ILLEGAL_ARGUMENT);

}

// Check that the service name is valid

if (!isValidServiceName(name)) {

LOG(ERROR) << "Invalid service name: " << name;

return Status::fromExceptionCode(Status::EX_ILLEGAL_ARGUMENT);

}

#ifndef VENDORSERVICEMANAGER

if (!meetsDeclarationRequirements(binder, name)) {

// already logged

return Status::fromExceptionCode(Status::EX_ILLEGAL_ARGUMENT);

}

#endif // !VENDORSERVICEMANAGER

// implicitly unlinked when the binder is removed

// If the binder is a remote proxy, register for its death notification

if (binder->remoteBinder() != nullptr &&

binder->linkToDeath(sp<ServiceManager>::fromExisting(this)) != OK) {

LOG(ERROR) << "Could not linkToDeath when adding " << name;

return Status::fromExceptionCode(Status::EX_ILLEGAL_STATE);

}

// Store the binder in the container

// Overwrite the old service if it exists

mNameToService[name] = Service {

.binder = binder,

.allowIsolated = allowIsolated,

.dumpPriority = dumpPriority,

.debugPid = ctx.debugPid,

};

auto it = mNameToRegistrationCallback.find(name);

if (it != mNameToRegistrationCallback.end()) {

for (const sp<IServiceCallback>& cb : it->second) {

mNameToService[name].guaranteeClient = true;

// permission checked in registerForNotifications

cb->onRegistration(name, binder);

}

}

return Status::ok();

}

So at this point ServiceManager is simply stored in a map, keyed by its name.
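The bookkeeping in addService (overwrite the map entry, then notify anyone waiting for that name) can be sketched as follows. This is a simplified illustration under stated assumptions: TinyRegistry and ServiceEntry are hypothetical names, an int stands in for sp<IBinder>, and the permission and declaration checks of the real code are left out.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, reduced version of the Service record; binderId stands in
// for the sp<IBinder> that the real code stores.
struct ServiceEntry {
    int binderId;
    bool allowIsolated;
    int dumpPriority;
};

class TinyRegistry {
public:
    using Callback = std::function<void(const std::string& name, int binderId)>;

    void registerForNotifications(const std::string& name, Callback cb) {
        mCallbacks[name].push_back(std::move(cb));
    }

    bool addService(const std::string& name, int binderId, bool allowIsolated,
                    int dumpPriority) {
        if (name.empty() || binderId < 0) return false;  // crude validity check
        // Overwrite the old service if it exists, as in the original.
        mServices[name] = ServiceEntry{binderId, allowIsolated, dumpPriority};
        auto it = mCallbacks.find(name);
        if (it != mCallbacks.end()) {
            for (const Callback& cb : it->second) cb(name, binderId);
        }
        return true;
    }

    // Returns -1 when no service is registered under the name.
    int getService(const std::string& name) const {
        auto it = mServices.find(name);
        return it == mServices.end() ? -1 : it->second.binderId;
    }

private:
    std::map<std::string, ServiceEntry> mServices;
    std::map<std::string, std::vector<Callback>> mCallbacks;
};
```

Registering the same name twice keeps only the latest entry, and every callback registered for that name fires on each registration.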

c. Hand ServiceManager to IPCThreadState

sp<BBinder> the_context_object;

void IPCThreadState::setTheContextObject(const sp<BBinder>& obj)

{

the_context_object = obj;

}

This method simply hands ServiceManager to IPCThreadState. Since this is the first time the class shows up, let's see what self() does:

IPCThreadState* IPCThreadState::self()

{

if (gHaveTLS.load(std::memory_order_acquire)) {

restart:

const pthread_key_t k = gTLS;

IPCThreadState* st = (IPCThreadState*)pthread_getspecific(k);

if (st) return st;

// Create an IPCThreadState for this thread and return it

return new IPCThreadState;

} // Racey, heuristic test for simultaneous shutdown.

if (gShutdown.load(std::memory_order_relaxed)) {

ALOGW("Calling IPCThreadState::self() during shutdown is dangerous, expect a crash.\n");

return nullptr;

} pthread_mutex_lock(&gTLSMutex);

if (!gHaveTLS.load(std::memory_order_relaxed)) {

int key_create_value = pthread_key_create(&gTLS, threadDestructor);

if (key_create_value != 0) {

pthread_mutex_unlock(&gTLSMutex);

ALOGW("IPCThreadState::self() unable to create TLS key, expect a crash: %s\n",

strerror(key_create_value));

return nullptr;

}

gHaveTLS.store(true, std::memory_order_release);

}

pthread_mutex_unlock(&gTLSMutex);

goto restart;

}

IPCThreadState::IPCThreadState()

: mProcess(ProcessState::self()), // Obtain the ProcessState instance

mServingStackPointer(nullptr),

mWorkSource(kUnsetWorkSource),

mPropagateWorkSource(false),

mIsLooper(false),

mIsFlushing(false),

mStrictModePolicy(0),

mLastTransactionBinderFlags(0),

mCallRestriction(mProcess->mCallRestriction) {

pthread_setspecific(gTLS, this);

clearCaller();

mIn.setDataCapacity(256);

mOut.setDataCapacity(256);

}

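IPCThreadState::self() looks complicated, but in essence it returns the IPCThreadState that belongs to the calling thread. The object lives in thread-local storage: pthread_getspecific looks it up, and if the thread has none yet, a new one is created, whose constructor stores it with pthread_setspecific. The constructor also initializes the mProcess member; ProcessState::self() returns the instance created earlier.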
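The per-thread lookup can be illustrated with plain pthread TLS. This is a minimal sketch, not the libbinder implementation: ThreadState, selfState and stateSeenByNewThread are hypothetical names, and pthread_once replaces the gHaveTLS flag plus mutex used in the original.

```cpp
#include <cassert>
#include <pthread.h>
#include <thread>

// Hypothetical per-thread state, standing in for IPCThreadState.
struct ThreadState {
    int transactions = 0;
};

static pthread_key_t gKey;
static pthread_once_t gKeyOnce = PTHREAD_ONCE_INIT;

// Runs when a thread that owns a ThreadState exits.
static void destroyState(void* p) { delete static_cast<ThreadState*>(p); }
static void createKey() { pthread_key_create(&gKey, destroyState); }

// Returns the calling thread's state, creating it on first use.
ThreadState* selfState() {
    pthread_once(&gKeyOnce, createKey);
    auto* st = static_cast<ThreadState*>(pthread_getspecific(gKey));
    if (st == nullptr) {
        st = new ThreadState;
        pthread_setspecific(gKey, st);
    }
    return st;
}

// Reports the address a freshly spawned thread observed. The pointer is only
// meant for identity comparison: the object is freed when that thread exits.
ThreadState* stateSeenByNewThread() {
    ThreadState* seen = nullptr;
    std::thread t([&seen] { seen = selfState(); });
    t.join();
    return seen;
}
```

Calling selfState() twice on one thread returns the same pointer, while another thread gets its own object. That is why mIn and mOut, being members of IPCThreadState, are per-thread buffers.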

d. Register ServiceManager with the binder driver

bool ProcessState::becomeContextManager()

{

AutoMutex _l(mLock); flat_binder_object obj {

.flags = FLAT_BINDER_FLAG_TXN_SECURITY_CTX,

}; int result = ioctl(mDriverFD, BINDER_SET_CONTEXT_MGR_EXT, &obj); // fallback to original method

if (result != 0) {

android_errorWriteLog(0x534e4554, "121035042"); int unused = 0;

result = ioctl(mDriverFD, BINDER_SET_CONTEXT_MGR, &unused);

} if (result == -1) {

ALOGE("Binder ioctl to become context manager failed: %s\n", strerror(errno));

} return result == 0;

}

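An ioctl sends BINDER_SET_CONTEXT_MGR_EXT to the driver to declare that this process is the servicemanager, falling back to BINDER_SET_CONTEXT_MGR if that fails. I have not yet looked into how the driver handles it.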

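e. Start the listening loop

A Looper listens for messages and dispatches them. How Looper works internally is left aside for now; section 4 below covers how the listening and the callbacks work.

That completes the startup of ServiceManager.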

2. How to obtain ServiceManager

Once ServiceManager is running, how do we get hold of it and call its methods?

This is where the IServiceManager in libbinder comes in. In everyday use we call defaultServiceManager directly to obtain ServiceManager:

[[clang::no_destroy]] static sp<IServiceManager> gDefaultServiceManager;

sp<IServiceManager> defaultServiceManager()

{

std::call_once(gSmOnce, []() {

sp<AidlServiceManager> sm = nullptr;

while (sm == nullptr) {

// Obtain a proxy

sm = interface_cast<AidlServiceManager>(ProcessState::self()->getContextObject(nullptr));

if (sm == nullptr) {

ALOGE("Waiting 1s on context object on %s.", ProcessState::self()->getDriverName().c_str());

sleep(1);

}

}

// Instantiate ServiceManagerShim

gDefaultServiceManager = sp<ServiceManagerShim>::make(sm);

}); return gDefaultServiceManager;

}

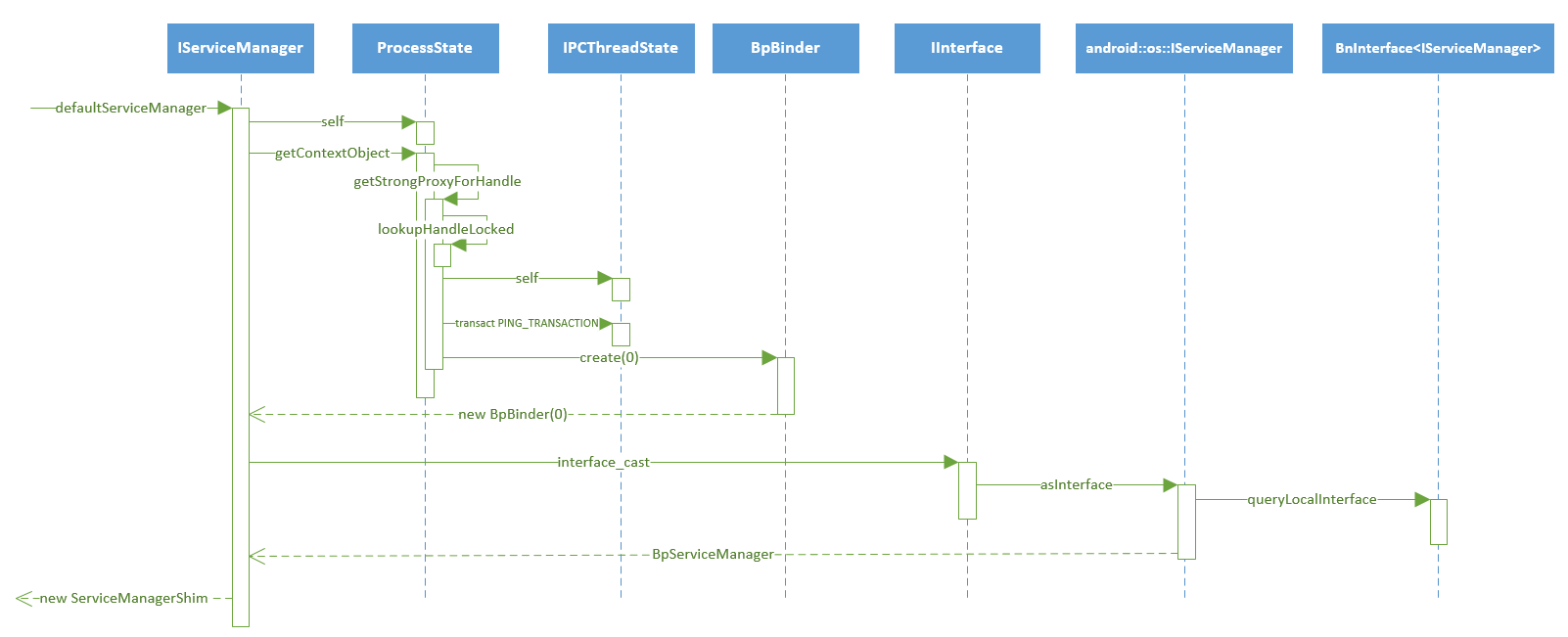

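There are three steps here:

a. Obtain a ContextObject (a BpBinder) through ProcessState

b. interface_cast converts it into a Bp object (BpServiceManager)

c. Instantiate ServiceManagerShim as an intermediate class

Let's look at each of them.

a. Obtain a ContextObject through ProcessState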

sp<ProcessState> ProcessState::self()

{

return init(kDefaultDriver, false /*requireDefault*/);

}

ProcessState::self is called first to open the binder driver. (The calling process is normally not the ServiceManager process, so the driver is opened again in that process.)

sp<IBinder> ProcessState::getContextObject(const sp<IBinder>& /*caller*/)

{

sp<IBinder> context = getStrongProxyForHandle(0); if (context) {

// The root object is special since we get it directly from the driver, it is never

// written by Parcell::writeStrongBinder.

internal::Stability::markCompilationUnit(context.get());

} else {

ALOGW("Not able to get context object on %s.", mDriverName.c_str());

} return context;

}

Then getContextObject is called to obtain the server-side ServiceManager (handle 0 always refers to ServiceManager):

sp<IBinder> ProcessState::getStrongProxyForHandle(int32_t handle)

{

sp<IBinder> result; AutoMutex _l(mLock); handle_entry* e = lookupHandleLocked(handle); if (e != nullptr) {

// ......

IBinder* b = e->binder;

if (b == nullptr || !e->refs->attemptIncWeak(this)) {

if (handle == 0) {

// ......

IPCThreadState* ipc = IPCThreadState::self();

CallRestriction originalCallRestriction = ipc->getCallRestriction();

ipc->setCallRestriction(CallRestriction::NONE); Parcel data;

status_t status = ipc->transact(

0, IBinder::PING_TRANSACTION, data, nullptr, 0); ipc->setCallRestriction(originalCallRestriction); if (status == DEAD_OBJECT)

return nullptr;

}

// Create a BpBinder; the handle is 0 here

sp<BpBinder> b = BpBinder::create(handle);

e->binder = b.get();

if (b) e->refs = b->getWeakRefs();

result = b;

} else {

result.force_set(b);

e->refs->decWeak(this);

}

} return result;

}

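b. interface_cast converts it into a Bp object

interface_cast is declared in IInterface.h. It calls the interface's asInterface method, which is declared through a macro in IInterface.h; the macro is used inside android::os::IServiceManager.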

template<typename INTERFACE>

inline sp<INTERFACE> interface_cast(const sp<IBinder>& obj)

{

return INTERFACE::asInterface(obj);

}

::android::sp<I##INTERFACE> I##INTERFACE::asInterface( \

const ::android::sp<::android::IBinder>& obj) \

{ \

::android::sp<I##INTERFACE> intr; \

if (obj != nullptr) { \

intr = ::android::sp<I##INTERFACE>::cast( \

obj->queryLocalInterface(I##INTERFACE::descriptor)); \

if (intr == nullptr) { \

/* Create a Bp (proxy) object */ \

intr = ::android::sp<Bp##INTERFACE>::make(obj); \

} \

} \

return intr; \

}

queryLocalInterface is implemented in BnInterface<android::os::IServiceManager>:

template<typename INTERFACE>

inline sp<IInterface> BnInterface<INTERFACE>::queryLocalInterface(

const String16& _descriptor)

{

if (_descriptor == INTERFACE::descriptor) return sp<IInterface>::fromExisting(this);

return nullptr;

}

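asInterface first tries to get the Bn object living in the current process; if that returns null, it creates a Bp object.

Here a BpServiceManager is instantiated from BpBinder(0) (sp<xxx>::make is defined in StrongPointer.h in libutils):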

BpServiceManager::BpServiceManager(const ::android::sp<::android::IBinder>& _aidl_impl)

: BpInterface<IServiceManager>(_aidl_impl){

}

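So BpServiceManager holds a BpBinder(0): communication with the server goes through that BpBinder, and BpServiceManager only wraps the calls. In other words, asInterface wraps a BpBinder. This suggests that what ServiceManager's getService returns is also a BpBinder for some handle, which I will verify later.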
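The "local object if available, otherwise wrap in a proxy" decision made by asInterface can be reproduced in a few lines. This is a sketch with hypothetical types (BinderBase, IDemo, BnDemo, BpDemo, RemoteHandle), using std::shared_ptr in place of sp<>; it is not the macro-generated code.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

struct IDemo;  // hypothetical interface, standing in for IServiceManager

// Stand-in for IBinder: by default there is no local interface,
// which is what a remote proxy object reports.
struct BinderBase {
    virtual ~BinderBase() = default;
    virtual std::shared_ptr<IDemo> queryLocalInterface(const std::string& /*descriptor*/) {
        return nullptr;
    }
};

struct IDemo {
    static const std::string descriptor;
    virtual ~IDemo() = default;
    virtual bool isProxy() const = 0;
    static std::shared_ptr<IDemo> asInterface(const std::shared_ptr<BinderBase>& obj);
};
const std::string IDemo::descriptor = "demo.IDemo";

// Server-side object (the "Bn" role): it answers queryLocalInterface itself.
struct BnDemo : BinderBase, IDemo, std::enable_shared_from_this<BnDemo> {
    std::shared_ptr<IDemo> queryLocalInterface(const std::string& d) override {
        if (d == IDemo::descriptor) return shared_from_this();
        return nullptr;
    }
    bool isProxy() const override { return false; }
};

// Stand-in for BpBinder: only carries a handle.
struct RemoteHandle : BinderBase {
    explicit RemoteHandle(int h) : handle(h) {}
    int handle;
};

// Client-side wrapper (the "Bp" role): it keeps the remote object it wraps.
struct BpDemo : IDemo {
    explicit BpDemo(std::shared_ptr<BinderBase> remote) : mRemote(std::move(remote)) {}
    bool isProxy() const override { return true; }
    std::shared_ptr<BinderBase> mRemote;
};

std::shared_ptr<IDemo> IDemo::asInterface(const std::shared_ptr<BinderBase>& obj) {
    std::shared_ptr<IDemo> intr;
    if (obj != nullptr) {
        intr = obj->queryLocalInterface(IDemo::descriptor);
        if (intr == nullptr) intr = std::make_shared<BpDemo>(obj);
    }
    return intr;
}
```

Passing a BnDemo returns that same object, while passing a RemoteHandle(0) returns a BpDemo wrapping it, which mirrors how BpBinder(0) ends up inside BpServiceManager.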

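c. Instantiate ServiceManagerShim as an intermediate class

This one is simple, so I will not expand on it.

The sequence diagram shows that obtaining BpServiceManager involves almost no data exchange with the binder driver; the only traffic is the PING_TRANSACTION sent to handle 0 in getStrongProxyForHandle. So how does BpServiceManager call the methods of the server-side ServiceManager?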

3. How to use ServiceManager

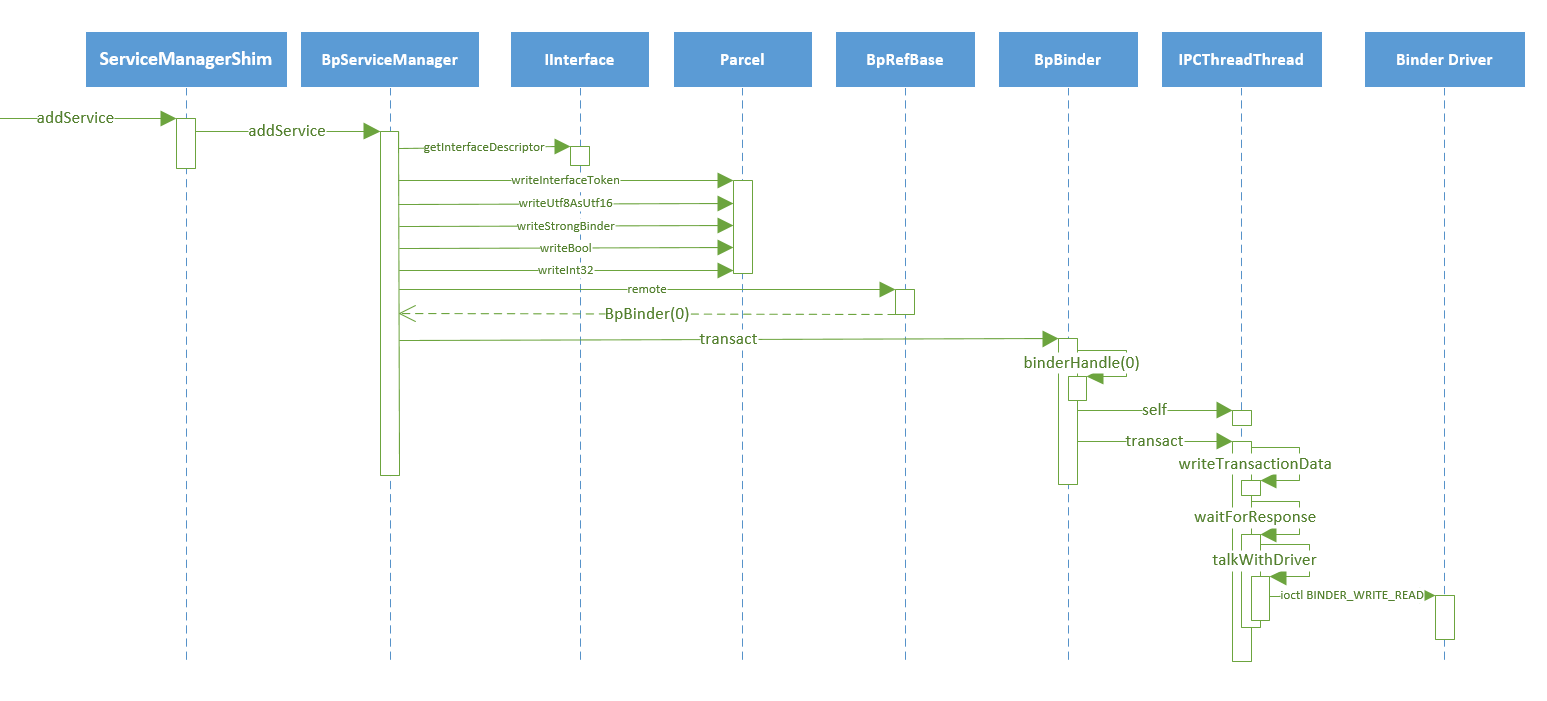

Let's follow addService to see what a binder call goes through.

status_t ServiceManagerShim::addService(const String16& name, const sp<IBinder>& service,

bool allowIsolated, int dumpsysPriority)

{

Status status = mTheRealServiceManager->addService(

String8(name).c_str(), service, allowIsolated, dumpsysPriority);

return status.exceptionCode();

}

This calls BpServiceManager::addService, whose implementation is in the aidl-generated IServiceManager.cpp:

::android::binder::Status BpServiceManager::addService(const ::std::string& name, const ::android::sp<::android::IBinder>& service, bool allowIsolated, int32_t dumpPriority) {

::android::Parcel _aidl_data;

_aidl_data.markForBinder(remoteStrong());

::android::Parcel _aidl_reply;

::android::status_t _aidl_ret_status = ::android::OK;

::android::binder::Status _aidl_status;

_aidl_ret_status = _aidl_data.writeInterfaceToken(getInterfaceDescriptor());

if (((_aidl_ret_status) != (::android::OK))) {

goto _aidl_error;

}

_aidl_ret_status = _aidl_data.writeUtf8AsUtf16(name);

if (((_aidl_ret_status) != (::android::OK))) {

goto _aidl_error;

}

_aidl_ret_status = _aidl_data.writeStrongBinder(service);

if (((_aidl_ret_status) != (::android::OK))) {

goto _aidl_error;

}

_aidl_ret_status = _aidl_data.writeBool(allowIsolated);

if (((_aidl_ret_status) != (::android::OK))) {

goto _aidl_error;

}

_aidl_ret_status = _aidl_data.writeInt32(dumpPriority);

if (((_aidl_ret_status) != (::android::OK))) {

goto _aidl_error;

}

_aidl_ret_status = remote()->transact(BnServiceManager::TRANSACTION_addService, _aidl_data, &_aidl_reply, 0);

if (UNLIKELY(_aidl_ret_status == ::android::UNKNOWN_TRANSACTION && IServiceManager::getDefaultImpl())) {

return IServiceManager::getDefaultImpl()->addService(name, service, allowIsolated, dumpPriority);

}

if (((_aidl_ret_status) != (::android::OK))) {

goto _aidl_error;

}

_aidl_ret_status = _aidl_status.readFromParcel(_aidl_reply);

if (((_aidl_ret_status) != (::android::OK))) {

goto _aidl_error;

}

if (!_aidl_status.isOk()) {

return _aidl_status;

}

_aidl_error:

_aidl_status.setFromStatusT(_aidl_ret_status);

return _aidl_status;

}

This does two main things:

a. Creates a Parcel and writes the token and the arguments into it

b. Calls remote()->transact and reads back the result
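The marshalling half of those two steps can be sketched with a toy parcel. This is an illustration only: MiniParcel and packAddService are hypothetical, an int32 stands in for the binder object, and the byte layout is made up (the real Parcel uses a different encoding for the token, strings and binder objects).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Toy parcel: values are appended on write and consumed in the same order on read.
class MiniParcel {
public:
    void writeInt32(int32_t v) {
        const auto* p = reinterpret_cast<const uint8_t*>(&v);
        mData.insert(mData.end(), p, p + sizeof(v));
    }
    void writeString(const std::string& s) {
        writeInt32(static_cast<int32_t>(s.size()));  // length prefix
        mData.insert(mData.end(), s.begin(), s.end());
    }
    int32_t readInt32() {
        assert(mPos + sizeof(int32_t) <= mData.size());
        int32_t v = 0;
        std::memcpy(&v, mData.data() + mPos, sizeof(v));
        mPos += sizeof(v);
        return v;
    }
    std::string readString() {
        const auto n = static_cast<std::size_t>(readInt32());
        assert(mPos + n <= mData.size());
        std::string s(mData.begin() + mPos, mData.begin() + mPos + n);
        mPos += n;
        return s;
    }
    // Receiver-side check that the request targets the expected interface.
    bool enforceInterface(const std::string& expected) { return readString() == expected; }

private:
    std::vector<uint8_t> mData;
    std::size_t mPos = 0;
};

// Proxy side: token first, then the arguments in declaration order.
MiniParcel packAddService(const std::string& name, int32_t binderStandIn,
                          bool allowIsolated, int32_t dumpPriority) {
    MiniParcel data;
    data.writeString("android.os.IServiceManager");
    data.writeString(name);
    data.writeInt32(binderStandIn);
    data.writeInt32(allowIsolated ? 1 : 0);
    data.writeInt32(dumpPriority);
    return data;
}
```

The receiver must read the fields back in exactly the order they were written, starting with the token check; that ordering contract is what the aidl compiler generates on both sides.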

First, what is the token that gets written? The descriptor is declared in android::os::IServiceManager.h and defined in IServiceManager.cpp through two macros from IInterface.h; its value is "android.os.IServiceManager".

#define DECLARE_META_INTERFACE(INTERFACE) \

public: \

static const ::android::String16 descriptor; \

// ......

#define DO_NOT_DIRECTLY_USE_ME_IMPLEMENT_META_INTERFACE(INTERFACE, NAME)\

const ::android::StaticString16 \

I##INTERFACE##_descriptor_static_str16(__IINTF_CONCAT(u, NAME));\

const ::android::String16 I##INTERFACE::descriptor( \

I##INTERFACE##_descriptor_static_str16); \

Next, what does remote()->transact do? First, what is remote?

The remote method is declared in BpRefBase:

inline IBinder* remote() const { return mRemote; }

What is mRemote? For that we go back to where BpServiceManager is created:

BpServiceManager::BpServiceManager(const ::android::sp<::android::IBinder>& _aidl_impl)

: BpInterface<IServiceManager>(_aidl_impl){

}

template<typename INTERFACE>

inline BpInterface<INTERFACE>::BpInterface(const sp<IBinder>& remote)

: BpRefBase(remote)

{

}

BpRefBase::BpRefBase(const sp<IBinder>& o)

: mRemote(o.get()), mRefs(nullptr), mState(0)

{

extendObjectLifetime(OBJECT_LIFETIME_WEAK); if (mRemote) {

mRemote->incStrong(this); // Removed on first IncStrong().

mRefs = mRemote->createWeak(this); // Held for our entire lifetime.

}

}

The BpBinder with handle 0 created while obtaining ServiceManager is passed all the way down to BpRefBase, so mRemote is that BpBinder, and the call lands in BpBinder::transact:

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

// Once a binder has died, it will never come back to life.

if (mAlive) { // ......

status_t status;

if (CC_UNLIKELY(isRpcBinder())) {

status = rpcSession()->transact(rpcAddress(), code, data, reply, flags);

} else {

status = IPCThreadState::self()->transact(binderHandle(), code, data, reply, flags);

} if (status == DEAD_OBJECT) mAlive = 0; return status;

} return DEAD_OBJECT;

}

int32_t BpBinder::binderHandle() const {

return std::get<BinderHandle>(mHandle).handle;

}

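BpBinder calls IPCThreadState::transact. The first argument comes from binderHandle(), which is the handle passed in when the BpBinder was created (0 here). It matters a great deal: the binder driver uses this handle to find the target server. The second argument, code, identifies the method being called; the third is the arguments packed into a Parcel; the fourth is the Parcel that receives the reply; the fifth is the transaction flags.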

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

// ......

status_t err; // ......

// 1. Assemble the data

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, nullptr); if (err != NO_ERROR) {

if (reply) reply->setError(err);

return (mLastError = err);

} if ((flags & TF_ONE_WAY) == 0) {

if (UNLIKELY(mCallRestriction != ProcessState::CallRestriction::NONE)) {

if (mCallRestriction == ProcessState::CallRestriction::ERROR_IF_NOT_ONEWAY) {

ALOGE("Process making non-oneway call (code: %u) but is restricted.", code);

CallStack::logStack("non-oneway call", CallStack::getCurrent(10).get(),

ANDROID_LOG_ERROR);

} else /* FATAL_IF_NOT_ONEWAY */ {

LOG_ALWAYS_FATAL("Process may not make non-oneway calls (code: %u).", code);

}

}

#if 0

if (code == 4) { // relayout

ALOGI(">>>>>> CALLING transaction 4");

} else {

ALOGI(">>>>>> CALLING transaction %d", code);

}

#endif

if (reply) {

// 2. Send the data and wait for the reply

err = waitForResponse(reply);

} else {

Parcel fakeReply;

err = waitForResponse(&fakeReply);

}

#if 0

if (code == 4) { // relayout

ALOGI("<<<<<< RETURNING transaction 4");

} else {

ALOGI("<<<<<< RETURNING transaction %d", code);

}

#endif

IF_LOG_TRANSACTIONS() {

TextOutput::Bundle _b(alog);

alog << "BR_REPLY thr " << (void*)pthread_self() << " / hand "

<< handle << ": ";

if (reply) alog << indent << *reply << dedent << endl;

else alog << "(none requested)" << endl;

}

} else {

err = waitForResponse(nullptr, nullptr);

} return err;

}

IPCThreadState::transact does two things:

a. Assembles the data

b. Writes the data and waits for the remote call to return its result

First, how the data is assembled:

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,

int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)

{

binder_transaction_data tr; tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */

tr.target.handle = handle;

tr.code = code;

tr.flags = binderFlags;

tr.cookie = 0;

tr.sender_pid = 0;

tr.sender_euid = 0; const status_t err = data.errorCheck();

if (err == NO_ERROR) {

tr.data_size = data.ipcDataSize();

tr.data.ptr.buffer = data.ipcData();

tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);

tr.data.ptr.offsets = data.ipcObjects();

} else if (statusBuffer) {

tr.flags |= TF_STATUS_CODE;

*statusBuffer = err;

tr.data_size = sizeof(status_t);

tr.data.ptr.buffer = reinterpret_cast<uintptr_t>(statusBuffer);

tr.offsets_size = 0;

tr.data.ptr.offsets = 0;

} else {

return (mLastError = err);

} mOut.writeInt32(cmd);

mOut.write(&tr, sizeof(tr)); return NO_ERROR;

}

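As the code shows, writeTransactionData does not send anything to the driver. It packs the arguments into a binder_transaction_data, then writes the command BC_TRANSACTION (the BC_ prefix marks a binder command, sent from user space to the driver) followed by the binder_transaction_data into the mOut Parcel.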
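The "command word followed by a descriptor struct" layout of mOut can be sketched with a byte vector. MiniTransaction is a hypothetical, reduced stand-in for binder_transaction_data, and kCmdTransaction is a made-up value rather than the real BC_TRANSACTION constant.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, reduced descriptor. Like the original, it only points at the
// payload; the payload bytes themselves are not copied into the out buffer.
struct MiniTransaction {
    uint32_t handle;
    uint32_t code;
    uint32_t flags;
    uint64_t dataSize;
    const uint8_t* dataPtr;
};

constexpr uint32_t kCmdTransaction = 1;  // made-up command value for this sketch

// Appends [cmd][MiniTransaction] to the outgoing buffer; nothing is sent yet.
void writeTransaction(std::vector<uint8_t>& out, uint32_t cmd, uint32_t handle,
                      uint32_t code, uint32_t flags,
                      const std::vector<uint8_t>& payload) {
    MiniTransaction tr{};
    tr.handle = handle;
    tr.code = code;
    tr.flags = flags;
    tr.dataSize = payload.size();
    tr.dataPtr = payload.data();

    const auto* c = reinterpret_cast<const uint8_t*>(&cmd);
    out.insert(out.end(), c, c + sizeof(cmd));
    const auto* t = reinterpret_cast<const uint8_t*>(&tr);
    out.insert(out.end(), t, t + sizeof(tr));
}
```

Because only a pointer and a size are queued, the payload must stay alive until the buffer has been handed to the driver, which is why transact keeps the Parcel around until waitForResponse has run.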

Next, writing the data and waiting for the result:

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

int32_t err; while (1) {

// 1. Write the data to the driver and wait for it to return a result

if ((err=talkWithDriver()) < NO_ERROR) break;

err = mIn.errorCheck();

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;

cmd = (uint32_t)mIn.readInt32();

// 2. Act on the returned cmd

switch (cmd) {

case BR_ONEWAY_SPAM_SUSPECT:

ALOGE("Process seems to be sending too many oneway calls.");

CallStack::logStack("oneway spamming", CallStack::getCurrent().get(),

ANDROID_LOG_ERROR);

[[fallthrough]];

case BR_TRANSACTION_COMPLETE:

if (!reply && !acquireResult) goto finish;

break; case BR_DEAD_REPLY:

err = DEAD_OBJECT;

goto finish; case BR_FAILED_REPLY:

err = FAILED_TRANSACTION;

goto finish; case BR_FROZEN_REPLY:

err = FAILED_TRANSACTION;

goto finish; case BR_ACQUIRE_RESULT:

{

ALOG_ASSERT(acquireResult != NULL, "Unexpected brACQUIRE_RESULT");

const int32_t result = mIn.readInt32();

if (!acquireResult) continue;

*acquireResult = result ? NO_ERROR : INVALID_OPERATION;

}

goto finish; case BR_REPLY:

{

binder_transaction_data tr;

err = mIn.read(&tr, sizeof(tr));

ALOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");

if (err != NO_ERROR) goto finish; if (reply) {

if ((tr.flags & TF_STATUS_CODE) == 0) {

reply->ipcSetDataReference(

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t),

freeBuffer);

} else {

err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);

freeBuffer(nullptr,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t));

}

} else {

freeBuffer(nullptr,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t));

continue;

}

}

goto finish; default:

err = executeCommand(cmd);

if (err != NO_ERROR) goto finish;

break;

}

} finish:

if (err != NO_ERROR) {

if (acquireResult) *acquireResult = err;

if (reply) reply->setError(err);

mLastError = err;

} return err;

}

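waitForResponse does two things: it writes the data to the binder driver and waits for the call to complete, then it parses the cmd in the returned data. The key part is talkWithDriver: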

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

if (mProcess->mDriverFD < 0) {

return -EBADF;

}

// 1. Fill in binder_write_read: write_buffer points at mOut, read_buffer points at mIn

binder_write_read bwr; // Is the read buffer empty?

const bool needRead = mIn.dataPosition() >= mIn.dataSize(); // We don't want to write anything if we are still reading

// from data left in the input buffer and the caller

// has requested to read the next data.

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0; bwr.write_size = outAvail;

bwr.write_buffer = (uintptr_t)mOut.data(); // This is what we'll read.

if (doReceive && needRead) {

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (uintptr_t)mIn.data();

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

// Return immediately if there is nothing to do.

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR; bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

#if defined(__ANDROID__)

// ioctl hands the data to the binder driver

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

#else

err = INVALID_OPERATION;

#endif

if (mProcess->mDriverFD < 0) {

err = -EBADF;

}

IF_LOG_COMMANDS() {

alog << "Finished read/write, write size = " << mOut.dataSize() << endl;

}

} while (err == -EINTR);

if (err >= NO_ERROR) {

if (bwr.write_consumed > 0) {

if (bwr.write_consumed < mOut.dataSize())

LOG_ALWAYS_FATAL("Driver did not consume write buffer. "

"err: %s consumed: %zu of %zu",

statusToString(err).c_str(),

(size_t)bwr.write_consumed,

mOut.dataSize());

else {

mOut.setDataSize(0);

processPostWriteDerefs();

}

}

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

return NO_ERROR;

} return err;

}

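The data is packed into a binder_write_read, which carries both directions: write_buffer points at mOut (outgoing data) and read_buffer points at mIn (incoming data). After the ioctl returns, the cmd read from mIn determines what to do next and what result to return. (The BR_ prefix marks a binder return command, sent from the driver to user space; BR_REPLY is the one that carries the reply.)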
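One round trip through a write/read descriptor can be sketched without a real driver. Everything here is hypothetical: MiniWriteRead is a reduced stand-in for binder_write_read, fakeIoctl plays the driver by consuming the whole write buffer and returning a single made-up return command, and MiniThreadState stands in for the mOut/mIn handling.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, reduced version of binder_write_read.
struct MiniWriteRead {
    std::size_t writeSize;
    std::size_t writeConsumed;
    const uint8_t* writeBuffer;
    std::size_t readSize;
    std::size_t readConsumed;
    uint8_t* readBuffer;
};

constexpr uint32_t kReturnReply = 100;  // made-up return command value

// Fake driver: consumes everything written and hands back one return command.
int fakeIoctl(MiniWriteRead& bwr) {
    bwr.writeConsumed = bwr.writeSize;
    if (bwr.readSize >= sizeof(kReturnReply)) {
        std::memcpy(bwr.readBuffer, &kReturnReply, sizeof(kReturnReply));
        bwr.readConsumed = sizeof(kReturnReply);
    }
    return 0;
}

struct MiniThreadState {
    std::vector<uint8_t> out;  // plays the role of mOut
    std::vector<uint8_t> in;   // plays the role of mIn
    std::size_t inPos = 0;

    int talkWithDriver() {
        const bool needRead = inPos >= in.size();  // is the read buffer drained?
        std::vector<uint8_t> readBuf(256);
        MiniWriteRead bwr{};
        bwr.writeSize = needRead ? out.size() : 0;
        bwr.writeBuffer = out.data();
        if (needRead) {
            bwr.readSize = readBuf.size();
            bwr.readBuffer = readBuf.data();
        }
        if (bwr.writeSize == 0 && bwr.readSize == 0) return 0;  // nothing to do
        if (fakeIoctl(bwr) != 0) return -1;
        if (bwr.writeConsumed > 0) out.clear();
        if (bwr.readConsumed > 0) {
            in.assign(readBuf.begin(), readBuf.begin() + bwr.readConsumed);
            inPos = 0;
        }
        return 0;
    }

    uint32_t readCommand() {
        assert(inPos + sizeof(uint32_t) <= in.size());
        uint32_t cmd = 0;
        std::memcpy(&cmd, in.data() + inPos, sizeof(cmd));
        inPos += sizeof(cmd);
        return cmd;
    }
};
```

After talkWithDriver the outgoing buffer is empty and the next command can be read from the incoming buffer, which is the state waitForResponse relies on before its switch statement.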

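That is the end of the call on the Bp side. The sequence diagram follows.

The simplified flow:

A method on BpServiceManager packs the data and calls BpBinder::transact; BpBinder calls IPCThreadState::transact, which talks to the binder driver through ioctl.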

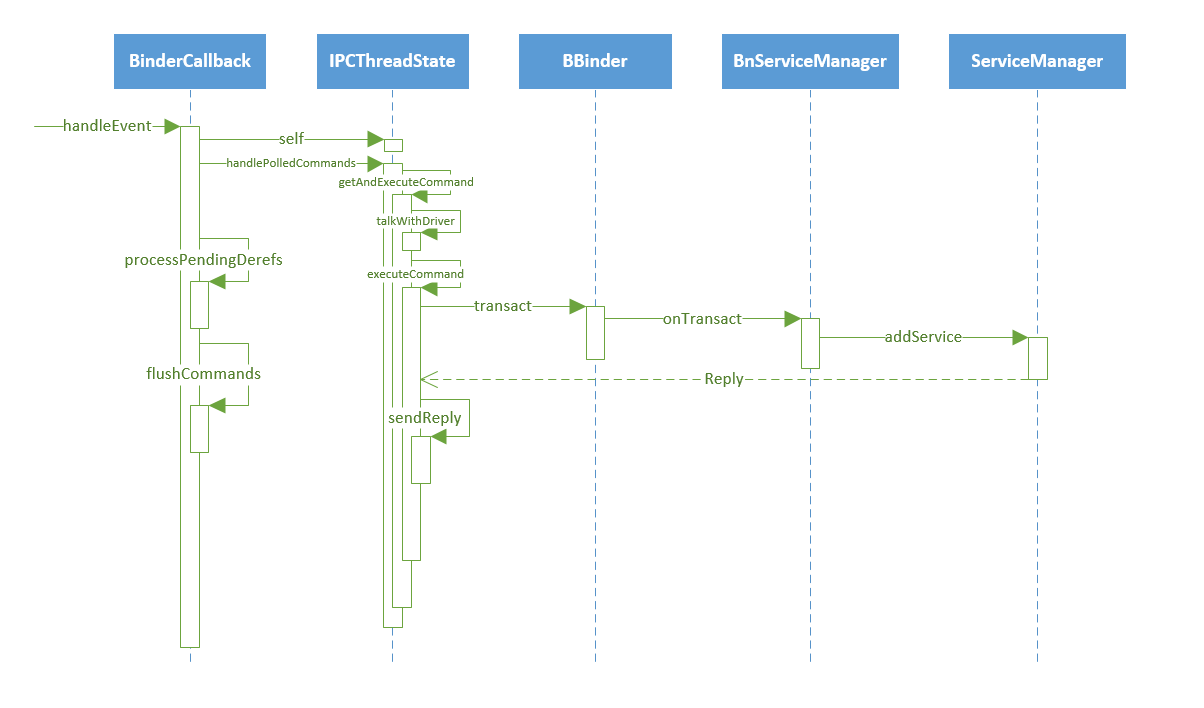

4. The client has sent a request; how does the server respond?

This is where the Looper and callback code from the ServiceManager startup comes in:

int main()

{

// ......

sp<Looper> looper = Looper::prepare(false /*allowNonCallbacks*/);

// Register the callbacks with the Looper

BinderCallback::setupTo(looper);

ClientCallbackCallback::setupTo(looper, manager);

// Listen in an infinite loop

while(true) {

looper->pollAll(-1);

}

}

class BinderCallback : public LooperCallback {

public:

static sp<BinderCallback> setupTo(const sp<Looper>& looper) {

// Instantiate BinderCallback

sp<BinderCallback> cb = sp<BinderCallback>::make(); int binder_fd = -1;

// Obtain binder_fd

IPCThreadState::self()->setupPolling(&binder_fd);

LOG_ALWAYS_FATAL_IF(binder_fd < 0, "Failed to setupPolling: %d", binder_fd);

// Add the fd to the set the Looper watches

int ret = looper->addFd(binder_fd,

Looper::POLL_CALLBACK,

Looper::EVENT_INPUT,

cb,

nullptr /*data*/);

LOG_ALWAYS_FATAL_IF(ret != 1, "Failed to add binder FD to Looper"); return cb;

} int handleEvent(int /* fd */, int /* events */, void* /* data */) override {

// Let handlePolledCommands process the incoming commands

IPCThreadState::self()->handlePolledCommands();

return 1; // Continue receiving callbacks.

}

};

status_t IPCThreadState::setupPolling(int* fd)

{

if (mProcess->mDriverFD < 0) {

return -EBADF;

}

// Tell the driver this thread is entering its loop and is ready to work

mOut.writeInt32(BC_ENTER_LOOPER);

// Flush the command to the driver

flushCommands();

*fd = mProcess->mDriverFD;

return 0;

}

void IPCThreadState::flushCommands()

{

if (mProcess->mDriverFD < 0)

return;

talkWithDriver(false);

// The flush could have caused post-write refcount decrements to have

// been executed, which in turn could result in BC_RELEASE/BC_DECREFS

// being queued in mOut. So flush again, if we need to.

if (mOut.dataSize() > 0) {

talkWithDriver(false);

}

if (mOut.dataSize() > 0) {

ALOGW("mOut.dataSize() > 0 after flushCommands()");

}

}

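The Looper (defined in libutils) watches the binder_fd opened by the ServiceManager process and calls handlePolledCommands when something arrives. At first it seemed odd that this method takes no arguments, but the Looper only signals that data is available; the data itself is read into mIn, and IPCThreadState processes it from there. (Each thread's IPCThreadState has its own mOut and mIn. Conceptually, what the client writes into its mOut arrives, via the driver, in the server's mIn.)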
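The "watch an fd, then invoke a callback that fetches the data itself" pattern can be sketched with poll(2). MiniLooper is a hypothetical class, not the libutils Looper API, and the technique is swapped in for illustration: this sketch uses poll on whatever fds are registered instead of the real Looper's mechanism.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <poll.h>
#include <unistd.h>
#include <utility>
#include <vector>

// Minimal fd-to-callback dispatcher.
class MiniLooper {
public:
    using Callback = std::function<void(int fd)>;

    void addFd(int fd, Callback cb) { mCallbacks[fd] = std::move(cb); }

    // Polls once and returns how many callbacks were invoked.
    int pollOnce(int timeoutMs) {
        std::vector<pollfd> fds;
        for (const auto& entry : mCallbacks) {
            fds.push_back(pollfd{entry.first, POLLIN, 0});
        }
        if (fds.empty()) return 0;
        const int ready = poll(fds.data(), fds.size(), timeoutMs);
        if (ready <= 0) return 0;  // timeout or error: nothing dispatched
        int handled = 0;
        for (const pollfd& p : fds) {
            if (p.revents & POLLIN) {
                // The callback only learns which fd is readable;
                // reading the data is its own job.
                mCallbacks[p.fd](p.fd);
                ++handled;
            }
        }
        return handled;
    }

private:
    std::map<int, Callback> mCallbacks;
};
```

A pipe can play the role of the binder fd: writing a byte to the write end makes the next pollOnce call invoke the callback registered for the read end, just as incoming binder work makes the Looper call BinderCallback::handleEvent.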

Next, handlePolledCommands:

status_t IPCThreadState::handlePolledCommands()

{

status_t result; do {

result = getAndExecuteCommand();

} while (mIn.dataPosition() < mIn.dataSize()); processPendingDerefs();

flushCommands();

return result;

}

The core of it is getAndExecuteCommand:

status_t IPCThreadState::getAndExecuteCommand()

{

status_t result;

int32_t cmd;

// 1. Read data from the binder driver into mIn

result = talkWithDriver();

if (result >= NO_ERROR) {

size_t IN = mIn.dataAvail();

if (IN < sizeof(int32_t)) return result;

cmd = mIn.readInt32();

IF_LOG_COMMANDS() {

alog << "Processing top-level Command: "

<< getReturnString(cmd) << endl;

} pthread_mutex_lock(&mProcess->mThreadCountLock);

mProcess->mExecutingThreadsCount++;

if (mProcess->mExecutingThreadsCount >= mProcess->mMaxThreads &&

mProcess->mStarvationStartTimeMs == 0) {

mProcess->mStarvationStartTimeMs = uptimeMillis();

}

pthread_mutex_unlock(&mProcess->mThreadCountLock);

// 2. Execute the cmd that was parsed out

result = executeCommand(cmd); pthread_mutex_lock(&mProcess->mThreadCountLock);

mProcess->mExecutingThreadsCount--;

if (mProcess->mExecutingThreadsCount < mProcess->mMaxThreads &&

mProcess->mStarvationStartTimeMs != 0) {

int64_t starvationTimeMs = uptimeMillis() - mProcess->mStarvationStartTimeMs;

if (starvationTimeMs > 100) {

ALOGE("binder thread pool (%zu threads) starved for %" PRId64 " ms",

mProcess->mMaxThreads, starvationTimeMs);

}

mProcess->mStarvationStartTimeMs = 0;

} // Cond broadcast can be expensive, so don't send it every time a binder

// call is processed. b/168806193

if (mProcess->mWaitingForThreads > 0) {

pthread_cond_broadcast(&mProcess->mThreadCountDecrement);

}

pthread_mutex_unlock(&mProcess->mThreadCountLock);

} return result;

}

It first calls talkWithDriver to read the data sent by the driver, then parses the cmd out of mIn and calls executeCommand to run it:

status_t IPCThreadState::executeCommand(int32_t cmd)

{

BBinder* obj;

RefBase::weakref_type* refs;

status_t result = NO_ERROR; switch ((uint32_t)cmd) {

// ......

case BR_TRANSACTION_SEC_CTX:

case BR_TRANSACTION:

{

// 1. Read the data from mIn into a binder_transaction_data

binder_transaction_data_secctx tr_secctx;

binder_transaction_data& tr = tr_secctx.transaction_data; if (cmd == (int) BR_TRANSACTION_SEC_CTX) {

result = mIn.read(&tr_secctx, sizeof(tr_secctx));

} else {

result = mIn.read(&tr, sizeof(tr));

tr_secctx.secctx = 0;

} ALOG_ASSERT(result == NO_ERROR,

"Not enough command data for brTRANSACTION");

if (result != NO_ERROR) break; Parcel buffer;

buffer.ipcSetDataReference(

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t), freeBuffer); const void* origServingStackPointer = mServingStackPointer;

mServingStackPointer = &origServingStackPointer; // anything on the stack

const pid_t origPid = mCallingPid;

const char* origSid = mCallingSid;

const uid_t origUid = mCallingUid;

const int32_t origStrictModePolicy = mStrictModePolicy;

const int32_t origTransactionBinderFlags = mLastTransactionBinderFlags;

const int32_t origWorkSource = mWorkSource;

const bool origPropagateWorkSet = mPropagateWorkSource;

// Calling work source will be set by Parcel#enforceInterface. Parcel#enforceInterface

// is only guaranteed to be called for AIDL-generated stubs so we reset the work source

// here to never propagate it.

clearCallingWorkSource();

clearPropagateWorkSource(); mCallingPid = tr.sender_pid;

mCallingSid = reinterpret_cast<const char*>(tr_secctx.secctx);

mCallingUid = tr.sender_euid;

mLastTransactionBinderFlags = tr.flags;

// ALOGI(">>>> TRANSACT from pid %d sid %s uid %d\n", mCallingPid,

// (mCallingSid ? mCallingSid : "<N/A>"), mCallingUid);

Parcel reply;

status_t error;

IF_LOG_TRANSACTIONS() {

TextOutput::Bundle _b(alog);

alog << "BR_TRANSACTION thr " << (void*)pthread_self()

<< " / obj " << tr.target.ptr << " / code "

<< TypeCode(tr.code) << ": " << indent << buffer

<< dedent << endl

<< "Data addr = "

<< reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer)

<< ", offsets addr="

<< reinterpret_cast<const size_t*>(tr.data.ptr.offsets) << endl;

}

if (tr.target.ptr) {

// We only have a weak reference on the target object, so we must first try to

// safely acquire a strong reference before doing anything else with it.

if (reinterpret_cast<RefBase::weakref_type*>(

tr.target.ptr)->attemptIncStrong(this)) {

error = reinterpret_cast<BBinder*>(tr.cookie)->transact(tr.code, buffer,

&reply, tr.flags);

reinterpret_cast<BBinder*>(tr.cookie)->decStrong(this);

} else {

error = UNKNOWN_TRANSACTION;

}

} else {

// 2. Call BBinder's transact method

error = the_context_object->transact(tr.code, buffer, &reply, tr.flags);

}

//ALOGI("<<<< TRANSACT from pid %d restore pid %d sid %s uid %d\n",

// mCallingPid, origPid, (origSid ? origSid : "<N/A>"), origUid);

if ((tr.flags & TF_ONE_WAY) == 0) {

LOG_ONEWAY("Sending reply to %d!", mCallingPid);

if (error < NO_ERROR) reply.setError(error);

constexpr uint32_t kForwardReplyFlags = TF_CLEAR_BUF;

// 3. Send the result back to the binder driver

sendReply(reply, (tr.flags & kForwardReplyFlags));

} else {

if (error != OK) {

alog << "oneway function results for code " << tr.code

<< " on binder at "

<< reinterpret_cast<void*>(tr.target.ptr)

<< " will be dropped but finished with status "

<< statusToString(error);

// ideally we could log this even when error == OK, but it

// causes too much logspam because some manually-written

// interfaces have clients that call methods which always

// write results, sometimes as oneway methods.

if (reply.dataSize() != 0) {

alog << " and reply parcel size " << reply.dataSize();

}

alog << endl;

}

LOG_ONEWAY("NOT sending reply to %d!", mCallingPid);

}

mServingStackPointer = origServingStackPointer;

mCallingPid = origPid;

mCallingSid = origSid;

mCallingUid = origUid;

mStrictModePolicy = origStrictModePolicy;

mLastTransactionBinderFlags = origTransactionBinderFlags;

mWorkSource = origWorkSource;

mPropagateWorkSource = origPropagateWorkSet;

IF_LOG_TRANSACTIONS() {

TextOutput::Bundle _b(alog);

alog << "BC_REPLY thr " << (void*)pthread_self() << " / obj "

<< tr.target.ptr << ": " << indent << reply << dedent << endl;

}

}

break;

// ......

default:

ALOGE("*** BAD COMMAND %d received from Binder driver\n", cmd);

result = UNKNOWN_ERROR;

break;

}

if (result != NO_ERROR) {

mLastError = result;

}

return result;

}

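This method is long, mainly because it has many cases; here we only pull out the most important one, BR_TRANSACTION (the counterpart of BC_TRANSACTION). Three places are annotated: parsing the data, calling BBinder's transact method, and calling sendReply to send the data back. We focus on the latter two.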
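The three annotated steps can be pictured with a small standalone model. This is only a sketch under stated assumptions: `ToyTransaction`, `ToyThreadState`, `ToyTarget` and `kToyOneWay` are hypothetical names invented here, strings stand in for Parcel, and nothing in it is libbinder code. It mirrors the shape of the BR_TRANSACTION branch: remember the caller, call the target, reply only when the call is not one-way, then restore the previous caller identity.

```cpp
#include <cstdint>
#include <functional>
#include <string>

// Hypothetical flag that plays the role of TF_ONE_WAY in this sketch.
constexpr uint32_t kToyOneWay = 0x01;

// Hypothetical stand-in for the transaction data read from the driver.
struct ToyTransaction {
    uint32_t code;
    uint32_t flags;
    int senderPid;
    std::string payload;
};

// Hypothetical stand-in for the target object's transact method.
using ToyTarget = std::function<std::string(uint32_t, const std::string&)>;

struct ToyThreadState {
    int callingPid = -1;  // plays the role of mCallingPid

    // Returns true if a reply was "sent" (copied into *sentReply).
    bool handle(const ToyTransaction& tr, const ToyTarget& target,
                std::string* sentReply) {
        // Step 1: take the request and record who is calling.
        const int origPid = callingPid;
        callingPid = tr.senderPid;

        // Step 2: hand the request to the target object.
        const std::string reply = target(tr.code, tr.payload);

        // Step 3: only two-way calls get a reply.
        bool sent = false;
        if ((tr.flags & kToyOneWay) == 0) {
            *sentReply = reply;
            sent = true;
        }

        // Restore the identity that was active before this transaction.
        callingPid = origPid;
        return sent;
    }
};
```

While the target runs, `callingPid` holds the sender's pid; once `handle` returns it is back to its previous value, which is the same save-and-restore idea as the `origPid`/`mCallingPid` pair in the code above.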

a. BBinder::transact

status_t BBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

data.setDataPosition(0);

if (reply != nullptr && (flags & FLAG_CLEAR_BUF)) {

reply->markSensitive();

}

status_t err = NO_ERROR;

switch (code) {

case PING_TRANSACTION:

err = pingBinder();

break;

case EXTENSION_TRANSACTION:

err = reply->writeStrongBinder(getExtension());

break;

case DEBUG_PID_TRANSACTION:

err = reply->writeInt32(getDebugPid());

break;

default:

// Dispatch to onTransact

err = onTransact(code, data, reply, flags);

break;

}

// In case this is being transacted on in the same process.

if (reply != nullptr) {

reply->setDataPosition(0);

}

return err;

}
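The dispatch in transact above follows a familiar pattern: a base-class entry point handles a few built-in codes itself and forwards everything else to an overridable hook. The sketch below illustrates that pattern only. `ToyBinder`, `ToyEchoService` and the `kToy*` constants are hypothetical names with made-up values, and strings stand in for Parcel.

```cpp
#include <cstdint>
#include <string>

// Made-up codes and status values for this sketch; not the real constants.
constexpr uint32_t kToyPing = 1;
constexpr uint32_t kToyEcho = 100;
constexpr int kToyOk = 0;
constexpr int kToyUnknownTransaction = -1;

class ToyBinder {
public:
    virtual ~ToyBinder() = default;

    // Entry point: answers built-in codes, forwards the rest to onTransact.
    int transact(uint32_t code, const std::string& data, std::string* reply) {
        switch (code) {
            case kToyPing:
                return kToyOk;
            default:
                return onTransact(code, data, reply);
        }
    }

protected:
    // Default hook: the base class knows no service-specific codes.
    virtual int onTransact(uint32_t /*code*/, const std::string& /*data*/,
                           std::string* /*reply*/) {
        return kToyUnknownTransaction;
    }
};

// A service only overrides the hook and falls back to the base for the rest.
class ToyEchoService : public ToyBinder {
protected:
    int onTransact(uint32_t code, const std::string& data,
                   std::string* reply) override {
        if (code == kToyEcho) {
            if (reply != nullptr) *reply = data;
            return kToyOk;
        }
        return ToyBinder::onTransact(code, data, reply);
    }
};
```

Calling `transact(kToyEcho, "hello", &reply)` on a `ToyEchoService` ends up in the subclass hook, while `kToyPing` never leaves the base class.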

The onTransact method is declared on BBinder (in Binder.h) and implemented in the generated file IServiceManager.cpp:

::android::status_t BnServiceManager::onTransact(uint32_t _aidl_code, const ::android::Parcel& _aidl_data, ::android::Parcel* _aidl_reply, uint32_t _aidl_flags) {

::android::status_t _aidl_ret_status = ::android::OK;

switch (_aidl_code) {

// ......

case BnServiceManager::TRANSACTION_addService:

{

::std::string in_name;

::android::sp<::android::IBinder> in_service;

bool in_allowIsolated;

int32_t in_dumpPriority;

if (!(_aidl_data.checkInterface(this))) {

_aidl_ret_status = ::android::BAD_TYPE;

break;

}

_aidl_ret_status = _aidl_data.readUtf8FromUtf16(&in_name);

if (((_aidl_ret_status) != (::android::OK))) {

break;

}

_aidl_ret_status = _aidl_data.readStrongBinder(&in_service);

if (((_aidl_ret_status) != (::android::OK))) {

break;

}

_aidl_ret_status = _aidl_data.readBool(&in_allowIsolated);

if (((_aidl_ret_status) != (::android::OK))) {

break;

}

_aidl_ret_status = _aidl_data.readInt32(&in_dumpPriority);

if (((_aidl_ret_status) != (::android::OK))) {

break;

}

::android::binder::Status _aidl_status(addService(in_name, in_service, in_allowIsolated, in_dumpPriority));

_aidl_ret_status = _aidl_status.writeToParcel(_aidl_reply);

if (((_aidl_ret_status) != (::android::OK))) {

break;

}

if (!_aidl_status.isOk()) {

break;

}

}

break;

// ......

default:

{

_aidl_ret_status = ::android::BBinder::onTransact(_aidl_code, _aidl_data, _aidl_reply, _aidl_flags);

}

break;

}

if (_aidl_ret_status == ::android::UNEXPECTED_NULL) {

_aidl_ret_status = ::android::binder::Status::fromExceptionCode(::android::binder::Status::EX_NULL_POINTER).writeToParcel(_aidl_reply);

}

return _aidl_ret_status;

}

Here we only look at the TRANSACTION_addService branch: after parsing the data, it calls the addService method, which records the incoming IBinder object in a map.
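The "record it in a map" step can be sketched as a name-to-object registry. This is a simplified model, not the code in ServiceManager.cpp: `ToyServiceManager` and `ToyBinderObject` are hypothetical, `std::shared_ptr` stands in for `sp<IBinder>`, and whatever additional checks the real addService performs are left out.

```cpp
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for an IBinder object.
struct ToyBinderObject {
    std::string label;
};

class ToyServiceManager {
public:
    // Registers a service under a name; rejects empty names and null objects.
    bool addService(const std::string& name,
                    std::shared_ptr<ToyBinderObject> service) {
        if (name.empty() || service == nullptr) return false;
        // A later registration under the same name replaces the earlier one.
        mNameToService[name] = std::move(service);
        return true;
    }

    // Looks a service up by name; returns nullptr if it is not registered.
    std::shared_ptr<ToyBinderObject> getService(const std::string& name) const {
        auto it = mNameToService.find(name);
        if (it == mNameToService.end()) return nullptr;
        return it->second;
    }

private:
    std::map<std::string, std::shared_ptr<ToyBinderObject>> mNameToService;
};
```

A later lookup by the same name returns the object that was registered, which is all a client needs in order to start talking to the service.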

b. IPCThreadState::sendReply

status_t IPCThreadState::sendReply(const Parcel& reply, uint32_t flags)

{

status_t err;

status_t statusBuffer;

err = writeTransactionData(BC_REPLY, flags, -1, 0, reply, &statusBuffer);

if (err < NO_ERROR) return err;

return waitForResponse(nullptr, nullptr);

}

sendReply is simpler: it writes the reply data back to the binder driver, with the command BC_REPLY.
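To make "write a command plus the reply data" more concrete, here is a toy framing sketch. It assumes a layout of one command word followed by a small record that points at the payload instead of copying it. `ToyReplyRecord`, `toyWriteReply`, `toyReadReply` and `kToyReplyCmd` are hypothetical, and the field layout is not that of the real binder_transaction_data.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Arbitrary tag for this sketch; it is NOT the real BC_REPLY value.
constexpr uint32_t kToyReplyCmd = 0x1001;

// Hypothetical record describing the reply.
struct ToyReplyRecord {
    uint32_t flags;
    uint32_t dataSize;
    const uint8_t* buffer;  // refers to the reply payload, no copy is made
};

// Appends "command word + record" to the outgoing buffer.
void toyWriteReply(std::vector<uint8_t>& out, uint32_t flags,
                   const std::vector<uint8_t>& payload) {
    ToyReplyRecord rec;
    std::memset(&rec, 0, sizeof(rec));
    rec.flags = flags;
    rec.dataSize = static_cast<uint32_t>(payload.size());
    rec.buffer = payload.data();

    const uint32_t cmd = kToyReplyCmd;
    const std::size_t start = out.size();
    out.resize(start + sizeof(cmd) + sizeof(rec));
    std::memcpy(out.data() + start, &cmd, sizeof(cmd));
    std::memcpy(out.data() + start + sizeof(cmd), &rec, sizeof(rec));
}

// Reads one "command word + record" pair back from the start of the buffer.
// Returns false if the buffer is too short or the command is unexpected.
bool toyReadReply(const std::vector<uint8_t>& in, ToyReplyRecord* rec) {
    uint32_t cmd = 0;
    if (in.size() < sizeof(cmd) + sizeof(*rec)) return false;
    std::memcpy(&cmd, in.data(), sizeof(cmd));
    if (cmd != kToyReplyCmd) return false;
    std::memcpy(rec, in.data() + sizeof(cmd), sizeof(*rec));
    return true;
}
```

In this model the reader plays the part of the driver: it sees the command word first and then knows how to interpret the bytes that follow.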

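That completes the server-side handling. As before, here is a sequence diagram:

[Sequence diagram: server-side handling of a transaction]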

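It is also worth noting the relationship between ProcessState and IPCThreadState: ProcessState opens the binder driver and sets up the mmap mapping, while IPCThreadState carries out the actual command exchange with the binder driver.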
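This division of labour can be pictured as one object per process and one object per thread. The sketch below uses hypothetical names (`ToyProcessState`, `ToyIpcThreadState`) and a pretend file descriptor. It shows only the ownership shape and does not open any driver.

```cpp
// One instance per process: owns the (pretend) driver handle.
class ToyProcessState {
public:
    static ToyProcessState& self() {
        static ToyProcessState instance;  // created once, shared by all threads
        return instance;
    }
    ToyProcessState(const ToyProcessState&) = delete;
    ToyProcessState& operator=(const ToyProcessState&) = delete;

    int driverFd() const { return mDriverFd; }

private:
    ToyProcessState() : mDriverFd(42) {}  // 42 is a placeholder, not a real fd
    int mDriverFd;
};

// One instance per thread: does the per-thread command bookkeeping.
class ToyIpcThreadState {
public:
    static ToyIpcThreadState& self() {
        thread_local ToyIpcThreadState instance;  // each thread gets its own
        return instance;
    }
    // Every thread-level object refers back to the same process-level object.
    ToyProcessState& process() const { return ToyProcessState::self(); }

    int commandsSent = 0;
};
```

Every thread that calls `ToyIpcThreadState::self()` gets its own counter, while `process()` always leads back to the single shared `ToyProcessState`.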

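One open question remains: how does the binder driver use a handle to find the server side and deliver the data to it? I will look into this when I get the chance.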

These study notes are for reference only and may contain quite a few errors!
