[Apache Atlas] Atlas Architecture Design and a Brief Source Code Analysis

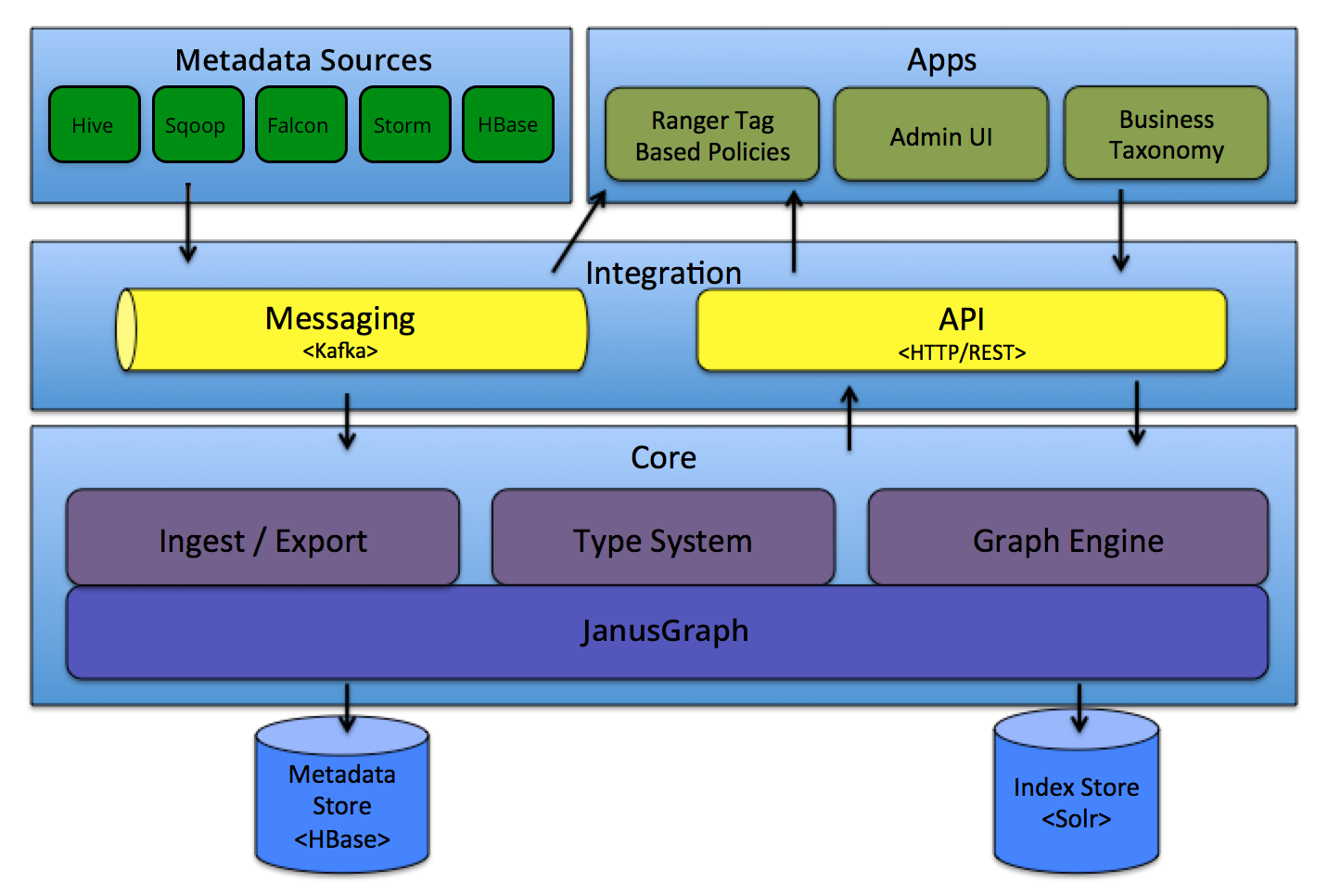

Apache Atlas architecture diagram

Atlas can ingest metadata from multiple data sources, such as Hive, HBase, and Storm.

Type System

Type

Atlas defines a set of metadata types. The related classes are organized as follows:

```
.
├── AtlasBaseTypeDef
│   ├── AtlasEnumDef
│   └── AtlasStructDef
│       ├── AtlasBusinessMetadataDef
│       ├── AtlasClassificationDef
│       ├── AtlasEntityDef
│       └── AtlasRelationshipDef
├── AtlasStructType
│   ├── AtlasBusinessMetadataType
│   ├── AtlasClassificationType
│   ├── AtlasRelationshipType
│   └── AtlasEntityType
│       └── AtlasRootEntityType
├── AtlasType
│   ├── AtlasArrayType
│   ├── AtlasBigDecimalType
│   ├── AtlasBigIntegerType
│   ├── AtlasByteType
│   ├── AtlasDateType
│   ├── AtlasDoubleType
│   ├── AtlasEnumType
│   ├── AtlasFloatType
│   ├── AtlasIntType
│   ├── AtlasLongType
│   ├── AtlasMapType
│   ├── AtlasObjectIdType
│   ├── AtlasShortType
│   ├── AtlasStringType
│   └── AtlasStructType
│       ├── AtlasBusinessMetadataType
│       ├── AtlasClassificationType
│       ├── AtlasEntityType
│       └── AtlasRelationshipType
├── AtlasTypeDefStore
│   └── AtlasTypeDefGraphStore
│       └── AtlasTypeDefGraphStoreV2
└── StructTypeDefinition
    └── HierarchicalTypeDefinition
        ├── ClassTypeDefinition
        └── TraitTypeDefinition
```

Entity

An entity is a concrete instance of a type. The entity-related classes:

```
AtlasEntity
├── AtlasEntityExtInfo
│   ├── AtlasEntitiesWithExtInfo
│   └── AtlasEntityWithExtInfo
├── AtlasEntityStore
│   └── AtlasEntityStoreV2
├── AtlasEntityStream
│   └── AtlasEntityStreamForImport
├── AtlasEntityType
│   └── AtlasRootEntityType
└── IAtlasEntityChangeNotifier
    ├── AtlasEntityChangeNotifier
    └── EntityChangeNotifierNop
```
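A minimal sketch of the type/entity relationship: a type declares attributes, and an entity of that type must supply the mandatory ones. `TypeDef`, `Entity`, and `TypeCheck` are hypothetical JDK-only classes, not the Atlas API; rejecting undeclared attributes is a rule of this sketch only.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model: NOT the Atlas API.
// A type definition lists attribute names and whether each one is optional.
final class TypeDef {
    final String name;
    final Map<String, Boolean> attributeOptional = new LinkedHashMap<>();

    TypeDef(String name) { this.name = name; }

    TypeDef attr(String attrName, boolean optional) {
        attributeOptional.put(attrName, optional);
        return this;
    }
}

// An entity is an instance of a type: a type name plus attribute values.
final class Entity {
    final String typeName;
    final Map<String, Object> attributes = new LinkedHashMap<>();

    Entity(String typeName) { this.typeName = typeName; }

    Entity set(String attrName, Object value) {
        attributes.put(attrName, value);
        return this;
    }
}

final class TypeCheck {
    // Returns the list of problems; an empty list means the entity conforms to the type.
    static List<String> validate(TypeDef type, Entity entity) {
        List<String> errors = new ArrayList<>();
        if (!type.name.equals(entity.typeName)) {
            errors.add("type mismatch: " + entity.typeName);
        }
        // Every mandatory attribute must have a value.
        for (Map.Entry<String, Boolean> def : type.attributeOptional.entrySet()) {
            if (!def.getValue() && entity.attributes.get(def.getKey()) == null) {
                errors.add("missing mandatory attribute: " + def.getKey());
            }
        }
        // In this sketch, attributes the type does not declare are rejected.
        for (String attrName : entity.attributes.keySet()) {
            if (!type.attributeOptional.containsKey(attrName)) {
                errors.add("unknown attribute: " + attrName);
            }
        }
        return errors;
    }
}
```

Usage: `new TypeDef("hive_db").attr("qualifiedName", false)` declares a type, and `TypeCheck.validate(type, entity)` lists what an entity is missing.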

Attributes

Attributes are defined as part of a type (model):

```
AtlasAttributeDef
└── AtlasRelationshipAttributeDef
```

Fields of AtlasAttributeDef:

```java
private String                   name;
private String                   typeName;
private boolean                  isOptional;
private Cardinality              cardinality;
private int                      valuesMinCount;
private int                      valuesMaxCount;
private boolean                  isUnique;
private boolean                  isIndexable;
private boolean                  includeInNotification;
private String                   defaultValue;
private String                   description;
private int                      searchWeight = DEFAULT_SEARCHWEIGHT;
private IndexType                indexType    = null;
private List<AtlasConstraintDef> constraints;
private Map<String, String>      options;
private String                   displayName;
```

For example, the `db` and `columns` attributes of `hive_table`:

db:

```json
{
  "name": "db",
  "typeName": "hive_db",
  "isOptional": false,
  "isIndexable": true,
  "isUnique": false,
  "cardinality": "SINGLE"
}
```

columns:

```json
{
  "name": "columns",
  "typeName": "array<hive_column>",
  "isOptional": true,
  "isIndexable": true,
  "isUnique": false,
  "constraints": [ { "type": "ownedRef" } ]
}
```

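- isComposite - whether the attribute is composite, i.e. the referenced entities cannot exist independently of the entity that contains them (for example, the columns of a Hive table)

- isIndexable - whether the attribute is indexed, which speeds up searches on it

- isUnique - whether the attribute's value must be unique

- multiplicity - whether the attribute is required, optional, or multi-valued

isComposite and multiplicity come from the older (v1) attribute model; in AtlasAttributeDef, composition is expressed with the `ownedRef` constraint seen in the `columns` example, and multiplicity with `isOptional` and `cardinality`.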
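A JDK-only sketch of how `isOptional`, `cardinality`, and the min/max value counts can constrain a value. `AttrDef` and `accepts` are hypothetical, cover only a few AtlasAttributeDef fields, and are not Atlas's own validation logic.

```java
import java.util.Collection;
import java.util.HashSet;
import java.util.List;

// Hypothetical, JDK-only sketch: NOT the Atlas implementation.
// Mirrors a few AtlasAttributeDef fields and checks one attribute value against them.
final class AttrDef {
    enum Cardinality { SINGLE, LIST, SET }

    final String name;
    final boolean isOptional;
    final Cardinality cardinality;
    final int valuesMinCount;
    final int valuesMaxCount;

    AttrDef(String name, boolean isOptional, Cardinality cardinality, int min, int max) {
        this.name = name;
        this.isOptional = isOptional;
        this.cardinality = cardinality;
        this.valuesMinCount = min;
        this.valuesMaxCount = max;
    }

    boolean accepts(Object value) {
        if (value == null) {
            return isOptional; // a missing value is fine only for optional attributes
        }
        if (cardinality == Cardinality.SINGLE) {
            return !(value instanceof Collection); // single-valued: collections not allowed
        }
        if (!(value instanceof List)) {
            return false; // in this sketch, LIST and SET values are passed as a List
        }
        List<?> values = (List<?>) value;
        if (cardinality == Cardinality.SET && new HashSet<>(values).size() != values.size()) {
            return false; // SET: duplicates not allowed
        }
        return values.size() >= valuesMinCount && values.size() <= valuesMaxCount;
    }
}
```

The hypothetical attribute `aliases` in the usage below is a multi-valued SET: `new AttrDef("aliases", true, AttrDef.Cardinality.SET, 0, Integer.MAX_VALUE)`.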

System specific types and their significance

Referenceable

This type represents all entities that can be searched for using a unique attribute called qualifiedName.

```
.
├── Referenceable
├── ReferenceableDeserializer
├── ReferenceableSerializer
└── V1SearchReferenceableSerializer
```
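Because qualifiedName is unique per type, an entity can be located without knowing its GUID. A hypothetical in-memory sketch of that idea; `QualifiedNameIndex` is illustrative and not part of Atlas.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import java.util.UUID;

// Hypothetical in-memory index: NOT the Atlas API.
// (typeName, qualifiedName) -> guid, so a Referenceable entity can be found by its unique name.
final class QualifiedNameIndex {
    private final Map<String, String> guidByKey = new HashMap<>();

    private static String key(String typeName, String qualifiedName) {
        return typeName + "|" + qualifiedName;
    }

    // Registers an entity and returns its GUID; registering the same qualifiedName
    // again returns the existing GUID instead of creating a duplicate.
    String register(String typeName, String qualifiedName) {
        return guidByKey.computeIfAbsent(key(typeName, qualifiedName), k -> UUID.randomUUID().toString());
    }

    // Looks up the GUID by the unique attribute.
    Optional<String> findGuid(String typeName, String qualifiedName) {
        return Optional.ofNullable(guidByKey.get(key(typeName, qualifiedName)));
    }
}
```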

Hooks

Taking Hive metadata collection as an example, let's walk through the collection process.

Full import

import-hive.sh launches the HiveMetaStoreBridge class:

```shell
"${JAVA_BIN}" ${JAVA_PROPERTIES} -cp "${CP}" org.apache.atlas.hive.bridge.HiveMetaStoreBridge $IMPORT_ARGS
```

```
importTables
└── importDatabases [addons/hive-bridge/src/main/java/org/apache/atlas/hive/bridge/HiveMetaStoreBridge.java +295]
    └── importHiveMetadata [addons/hive-bridge/src/main/java/org/apache/atlas/hive/bridge/HiveMetaStoreBridge.java +289]
```

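The stack above is listed callee-first: importHiveMetadata calls importDatabases, which calls importTables. From there the calls continue:

importTables -> importTable -> registerInstances

registerInstances creates the entities and collects the results: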

```java
AtlasEntitiesWithExtInfo ret = null;

// Create the entities through the Atlas REST API
EntityMutationResponse response = atlasClientV2.createEntities(entities);
// Keep only the entities that were actually created
List<AtlasEntityHeader> createdEntities = response.getEntitiesByOperation(EntityMutations.EntityOperation.CREATE);

if (CollectionUtils.isNotEmpty(createdEntities)) {
    ret = new AtlasEntitiesWithExtInfo();

    for (AtlasEntityHeader createdEntity : createdEntities) {
        // Re-fetch each created entity by GUID, together with its referred entities
        AtlasEntityWithExtInfo entity = atlasClientV2.getEntityByGuid(createdEntity.getGuid());

        ret.addEntity(entity.getEntity());

        if (MapUtils.isNotEmpty(entity.getReferredEntities())) {
            for (Map.Entry<String, AtlasEntity> entry : entity.getReferredEntities().entrySet()) {
                ret.addReferredEntity(entry.getKey(), entry.getValue());
            }
        }

        LOG.info("Created {} entity: name={}, guid={}", entity.getEntity().getTypeName(), entity.getEntity().getAttribute(ATTRIBUTE_QUALIFIED_NAME), entity.getEntity().getGuid());
    }
}
```
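The registerInstances flow boils down to three steps: create, re-fetch each created entity by GUID, then merge the entities and their referred entities. A JDK-only sketch of that pattern, where `MetadataClient`, `InMemoryClient`, `EntitiesWithExtInfo`, and `Registrar` are hypothetical stand-ins for `AtlasClientV2` and `AtlasEntitiesWithExtInfo`, and entities are plain maps.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical client interface: NOT AtlasClientV2.
interface MetadataClient {
    List<String> createEntities(List<Map<String, Object>> entities);   // GUIDs of created entities
    Map<String, Object> getEntityByGuid(String guid);                   // the entity itself
    Map<String, Map<String, Object>> getReferredEntities(String guid); // guid -> referred entity
}

final class EntitiesWithExtInfo {
    final List<Map<String, Object>> entities = new ArrayList<>();
    final Map<String, Map<String, Object>> referredEntities = new LinkedHashMap<>();
}

final class Registrar {
    static EntitiesWithExtInfo registerInstances(MetadataClient client, List<Map<String, Object>> toCreate) {
        List<String> createdGuids = client.createEntities(toCreate);
        if (createdGuids.isEmpty()) {
            return null; // like the original, the result stays null when nothing was created
        }
        EntitiesWithExtInfo ret = new EntitiesWithExtInfo();
        for (String guid : createdGuids) {
            ret.entities.add(client.getEntityByGuid(guid));              // re-fetch by GUID
            ret.referredEntities.putAll(client.getReferredEntities(guid)); // merge referred entities
        }
        return ret;
    }
}

// Trivial in-memory fake used only to exercise the sketch (it has no referred entities).
final class InMemoryClient implements MetadataClient {
    private final Map<String, Map<String, Object>> store = new LinkedHashMap<>();
    private int nextId = 1;

    public List<String> createEntities(List<Map<String, Object>> entities) {
        List<String> guids = new ArrayList<>();
        for (Map<String, Object> e : entities) {
            String guid = "guid-" + nextId++;
            store.put(guid, e);
            guids.add(guid);
        }
        return guids;
    }

    public Map<String, Object> getEntityByGuid(String guid) { return store.get(guid); }

    public Map<String, Map<String, Object>> getReferredEntities(String guid) { return new HashMap<>(); }
}
```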

The database and table metadata is pushed to Atlas with HTTP POST requests.

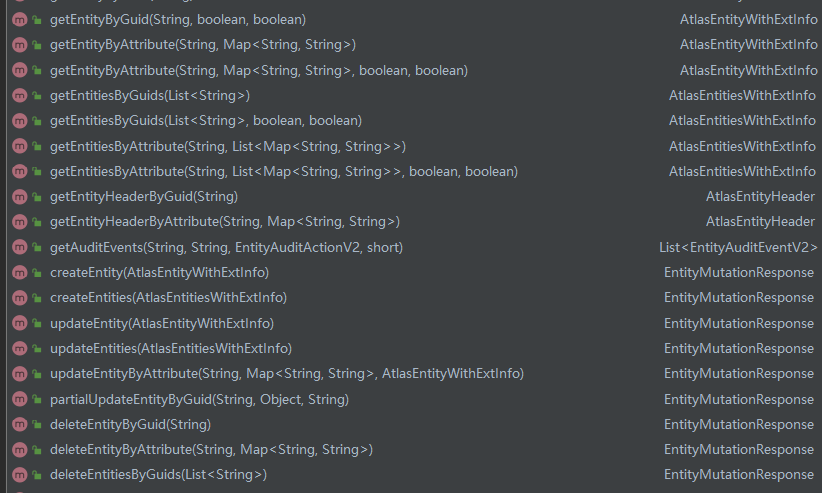

AtlasClientV2 wraps many of Atlas's HTTP endpoints.

Atlas HTTP client API:

Real-time capture

HiveHook implements ExecuteWithHookContext

ExecuteWithHookContext is a new interface that the Pre/Post Execute Hook can run with the HookContext.

HiveHook implements the run() method to handle Hive events.

Hive events:

```
BaseHiveEvent
├── AlterTableRename
├── CreateHiveProcess
├── DropDatabase
├── DropTable
├── CreateDatabase
│   └── AlterDatabase
└── CreateTable
    └── AlterTable
        └── AlterTableRenameCol
```
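How a hook turns an operation into one of these event classes can be sketched as a simple factory. This is not Hive or Atlas code: the `Op` enum, the `*Event` classes, and the message type each event returns are simplified, assumed stand-ins (the type strings are assumed values of Atlas's `HookNotificationType`).

```java
import java.util.Optional;

// Hypothetical sketch mirroring part of the BaseHiveEvent hierarchy: NOT Atlas code.
abstract class BaseEvent {
    abstract String notificationType(); // kind of hook message this event produces (assumed)
}

class CreateDatabaseEvent extends BaseEvent {
    @Override String notificationType() { return "ENTITY_CREATE_V2"; }
}

// Like AlterDatabase extends CreateDatabase in the tree above.
class AlterDatabaseEvent extends CreateDatabaseEvent {
    @Override String notificationType() { return "ENTITY_FULL_UPDATE_V2"; }
}

class DropDatabaseEvent extends BaseEvent {
    @Override String notificationType() { return "ENTITY_DELETE_V2"; }
}

final class EventDispatcher {
    // Simplified operation names; SHOWTABLES stands for an operation the hook ignores.
    enum Op { CREATEDATABASE, ALTERDATABASE, DROPDATABASE, SHOWTABLES }

    // Returns the event for an operation, or empty when no metadata changes.
    static Optional<BaseEvent> eventFor(Op op) {
        switch (op) {
            case CREATEDATABASE: return Optional.of(new CreateDatabaseEvent());
            case ALTERDATABASE:  return Optional.of(new AlterDatabaseEvent());
            case DROPDATABASE:   return Optional.of(new DropDatabaseEvent());
            default:             return Optional.empty();
        }
    }
}
```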

Taking CREATE DATABASE as an example:

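The hook wraps the context, creates the event, and sends its notification messages:

```java
// Wrap the hook context
AtlasHiveHookContext context = new AtlasHiveHookContext(this, oper, hookContext, getKnownObjects(), isSkipTempTables());

// Handle the CREATE DATABASE event and extract the database metadata
event = new CreateDatabase(context);

if (event != null) {
    // Use the UGI from the hook context, falling back to Utils.getUGI()
    final UserGroupInformation ugi = hookContext.getUgi() == null ? Utils.getUGI() : hookContext.getUgi();

    super.notifyEntities(ActiveEntityFilter.apply(event.getNotificationMessages()), ugi);
}
```

Hook message types:

```java
public enum HookNotificationType {
    TYPE_CREATE, TYPE_UPDATE, ENTITY_CREATE, ENTITY_PARTIAL_UPDATE, ENTITY_FULL_UPDATE, ENTITY_DELETE,
    ENTITY_CREATE_V2, ENTITY_PARTIAL_UPDATE_V2, ENTITY_FULL_UPDATE_V2, ENTITY_DELETE_V2
}
```

Resolving the user who performed the operation:

```java
String               ret = null;
UserGroupInformation ugi = null;

if (context.isMetastoreHook()) {
    // Metastore hook: take the UGI from the metastore security context
    try {
        ugi = SecurityUtils.getUGI();
    } catch (Exception e) {
        // do nothing
    }
} else {
    // Otherwise prefer the Hive user name, else the hook's UGI
    ret = getHiveUserName();

    if (StringUtils.isEmpty(ret)) {
        ugi = getUgi();
    }
}

if (ugi != null) {
    ret = ugi.getShortUserName();
}

if (StringUtils.isEmpty(ret)) {
    // Last resort: the current user, then the user.name system property
    try {
        ret = UserGroupInformation.getCurrentUser().getShortUserName();
    } catch (IOException e) {
        LOG.warn("Failed for UserGroupInformation.getCurrentUser() ", e);

        ret = System.getProperty("user.name");
    }
}
```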
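The user lookup above is a chain of fallbacks. A minimal JDK-only sketch of that pattern, where each hypothetical `Supplier` stands in for one source (Hive user name, UGI short name, current user) and `System.getProperty("user.name")` is the last resort, as in the original. `UserResolver` is illustrative, not Atlas code.

```java
import java.util.function.Supplier;

// Hypothetical sketch of a user-resolution fallback chain: NOT Atlas code.
// Sources are tried in order; one that throws or returns null/empty is skipped.
final class UserResolver {
    @SafeVarargs
    static String resolve(Supplier<String>... sources) {
        for (Supplier<String> source : sources) {
            try {
                String user = source.get();
                if (user != null && !user.isEmpty()) {
                    return user;
                }
            } catch (RuntimeException e) {
                // like the original, ignore the failure and try the next source
            }
        }
        return System.getProperty("user.name"); // final fallback
    }
}
```

Usage: `UserResolver.resolve(() -> hiveUser, () -> ugiShortName)` returns the first non-empty name; the two variables here are placeholders.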

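In short, the hook extracts the entity information and sends it along with the hook message type and the user who performed the operation.

notifyEntities is defined in the base class AtlasHook and is shared: the hooks for other components, such as HBase and Impala, also call it to send their messages.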

```java
public static void notifyEntities(List<HookNotification> messages, UserGroupInformation ugi, int maxRetries) {
    if (executor == null) { // send synchronously
        notifyEntitiesInternal(messages, maxRetries, ugi, notificationInterface, logFailedMessages, failedMessagesLogger);
    } else { // send asynchronously on the executor
        executor.submit(new Runnable() {
            @Override
            public void run() {
                notifyEntitiesInternal(messages, maxRetries, ugi, notificationInterface, logFailedMessages, failedMessagesLogger);
            }
        });
    }
}
```
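A JDK-only sketch of the same dispatch idea: send on the caller's thread when no executor is configured, otherwise hand the work to an executor, and retry a failing send a bounded number of times. `Notifier`, the `Predicate`-based sender, and the in-memory `failedMessages` list (standing in for the failed-message logger) are hypothetical; the real `notifyEntitiesInternal` may differ, for example in how it waits between retries.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;
import java.util.function.Predicate;

// Hypothetical sketch of sync/async notification with retries: NOT AtlasHook.
final class Notifier {
    private final ExecutorService executor; // null => send synchronously
    final List<String> failedMessages = new CopyOnWriteArrayList<>(); // stand-in for the failed-message log

    Notifier(ExecutorService executor) { this.executor = executor; }

    // send returns true on success; the whole batch is retried up to maxRetries times.
    Future<?> notifyEntities(List<String> messages, Predicate<List<String>> send, int maxRetries) {
        Runnable task = () -> notifyInternal(messages, send, maxRetries);
        if (executor == null) {
            task.run(); // synchronous: the caller waits for the send
            return CompletableFuture.completedFuture(null);
        }
        return executor.submit(task); // asynchronous: the caller continues immediately
    }

    private void notifyInternal(List<String> messages, Predicate<List<String>> send, int maxRetries) {
        for (int attempt = 1; attempt <= maxRetries; attempt++) {
            if (send.test(messages)) {
                return;
            }
        }
        failedMessages.addAll(messages); // every attempt failed
    }
}
```

Sending asynchronously keeps a slow or unavailable message broker from blocking the Hive query that triggered the hook.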

Notification framework:

```
NotificationInterface
├── AtlasFileSpool
└── AbstractNotification
    ├── KafkaNotification
    └── Spooler
```

Writing messages to Kafka:

```java
@Override
public void sendInternal(NotificationType notificationType, List<String> messages) throws NotificationException {
    KafkaProducer producer = getOrCreateProducer(notificationType);

    sendInternalToProducer(producer, notificationType, messages);
}
```

Each message is written to the topic that corresponds to its NotificationType:

```java
// Notification type -> topic
private static final Map<NotificationType, String> PRODUCER_TOPIC_MAP = new HashMap<NotificationType, String>() {{
    put(NotificationType.HOOK, ATLAS_HOOK_TOPIC);
    put(NotificationType.ENTITIES, ATLAS_ENTITIES_TOPIC);
}};

// Configuration entries: property key, default topic name
NOTIFICATION_HOOK_TOPIC_NAME("atlas.notification.hook.topic.name", "ATLAS_HOOK"),
NOTIFICATION_ENTITIES_TOPIC_NAME("atlas.notification.entities.topic.name", "ATLAS_ENTITIES"),
```

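Messages go mainly to two topics: ATLAS_ENTITIES and ATLAS_HOOK.

ATLAS_HOOK carries hook event messages; the metadata from the CREATE DATABASE event is written to this topic. ATLAS_ENTITIES carries the entity change notifications that Atlas itself publishes.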
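The topic routing can be sketched with plain JDK classes. The property keys and default topic names come from the configuration entries shown above; `TopicRouter` and the use of `java.util.Properties` in place of Atlas's configuration object are assumptions for illustration.

```java
import java.util.EnumMap;
import java.util.Map;
import java.util.Properties;

// Sketch of routing by NotificationType: NOT the KafkaNotification implementation.
final class TopicRouter {
    enum NotificationType { HOOK, ENTITIES }

    private final Map<NotificationType, String> topics = new EnumMap<>(NotificationType.class);

    TopicRouter(Properties conf) {
        // Configured topic name if present, otherwise the default from the config entries above
        topics.put(NotificationType.HOOK,
                conf.getProperty("atlas.notification.hook.topic.name", "ATLAS_HOOK"));
        topics.put(NotificationType.ENTITIES,
                conf.getProperty("atlas.notification.entities.topic.name", "ATLAS_ENTITIES"));
    }

    String topicFor(NotificationType type) {
        return topics.get(type);
    }
}
```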

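How a database is uniquely identified:

```java
public String getQualifiedName(Database db) {
    return getDatabaseName(db) + QNAME_SEP_METADATA_NAMESPACE + getMetadataNamespace();
}
```

The qualified name has the form dbName@clusterName, where the suffix is the metadata namespace returned by getMetadataNamespace() (typically the cluster name); this combination makes it unique.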
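A JDK-only sketch of building and splitting such a name. `QualifiedNames`, `forDatabase`, and `parse` are hypothetical helpers, and lower-casing the database name is an assumption of this sketch, not something shown in the code above.

```java
import java.util.Locale;

// Hypothetical helper mirroring getQualifiedName(Database): <db name> + "@" + <metadata namespace>.
final class QualifiedNames {
    static final char SEP = '@'; // separator between database name and namespace

    // Lower-casing is an assumption here (Hive database names are case-insensitive).
    static String forDatabase(String dbName, String metadataNamespace) {
        return dbName.toLowerCase(Locale.ROOT) + SEP + metadataNamespace;
    }

    // Splits "db@namespace" back into its two parts.
    static String[] parse(String qualifiedName) {
        int at = qualifiedName.lastIndexOf(SEP);
        if (at < 0) {
            throw new IllegalArgumentException("no '@' in " + qualifiedName);
        }
        return new String[] { qualifiedName.substring(0, at), qualifiedName.substring(at + 1) };
    }
}
```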

Extended application

A use case that builds on the Hive hook to refresh Impala metadata automatically:

AutoRefreshImpala: https://github.com/Observe-secretly/AutoRefreshImpala

