(Repost) RL — Policy Gradient Explained

2019-05-02 21:12:57

This blog is copied from: https://medium.com/@jonathan_hui/rl-policy-gradients-explained-9b13b688b146

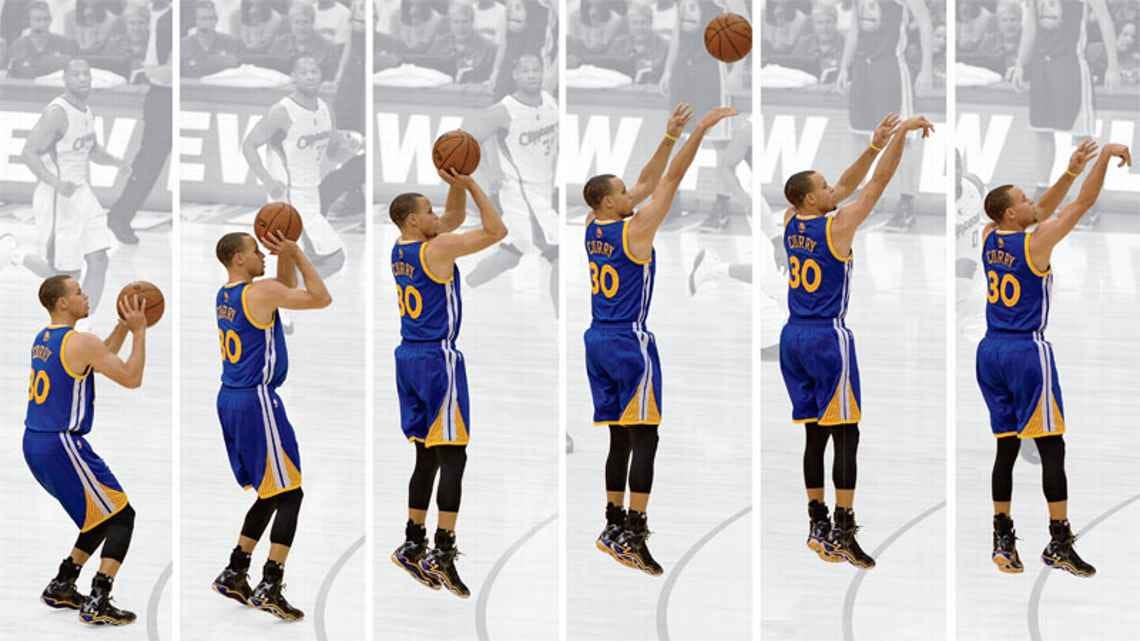

Photo by Alex Read

Policy Gradient Methods (PG) are frequently used algorithms in reinforcement learning (RL). The principle is very simple.

We observe and act.

A human takes actions based on observations. As Stephen Curry puts it:

You have to rely on the fact that you put the work in to create the muscle memory and then trust that it will kick in. The reason you practice and work on it so much is so that during the game your instincts take over to a point where it feels weird if you don’t do it the right way.

Constant practice is the key to building muscle memory for athletes. For PG, we train a policy to act based on observations. The training in PG makes actions with high rewards more likely and actions with low rewards less likely.

We keep what is working and throw away what is not.

In policy gradients, Curry is our agent.

- He observes the state of the environment (s).

- He takes action (u) based on his instinct (a policy π) on the state s.

- He moves and the opponents react. A new state is formed.

- He takes further actions based on the observed state.

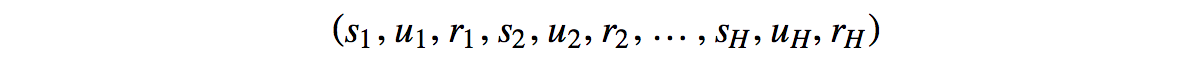

- After a trajectory τ of motions, he adjusts his instinct based on the total rewards R(τ) received.

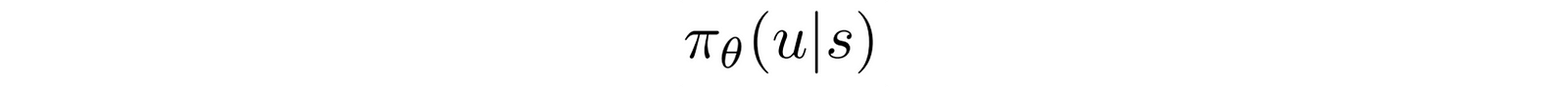

Curry visualizes the situation and instantly knows what to do. Years of training perfect the instinct to maximize the rewards. In RL, the instinct may be mathematically described as:

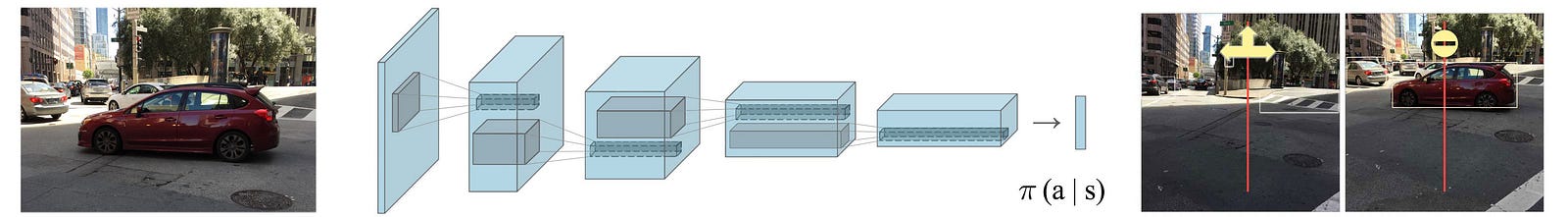

the probability of taking the action u given a state s. π is the policy in RL. For example, what is the chance of turning or stopping when you see a car in front?

Objective

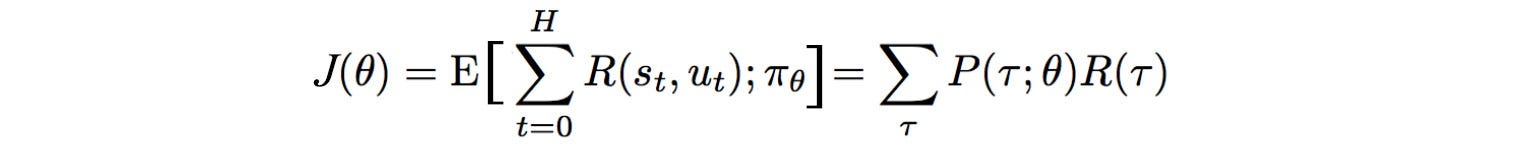

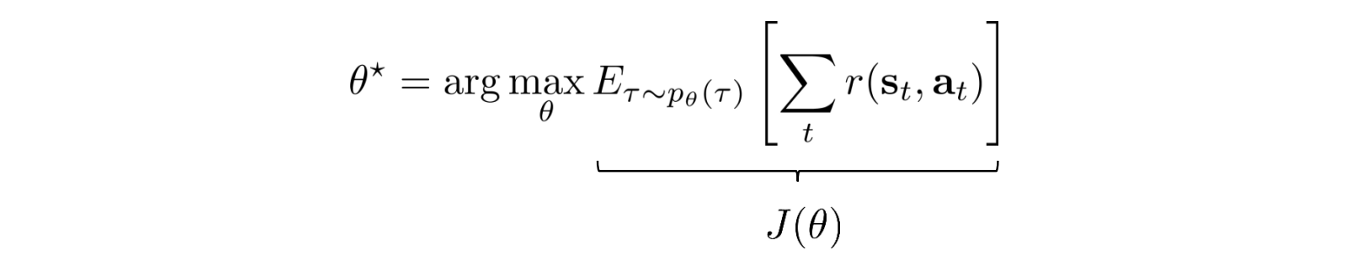

How can we formulate our objective mathematically? The expected rewards equal the sum of the probability of a trajectory × corresponding rewards:

And our objective is to find a policy θ that creates trajectories τ that maximize the expected rewards.
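In symbols, the two statements above can be sketched as follows. The notation is an assumption made here: $\pi_\theta(\tau)$ is the probability of trajectory $\tau$ under the policy with parameters $\theta$, and $R(\tau)$ is the total reward of that trajectory.

$$
J(\theta) = \mathbb{E}_{\tau \sim \pi_\theta}\big[R(\tau)\big] = \sum_{\tau} \pi_\theta(\tau)\, R(\tau),
\qquad
\theta^{*} = \arg\max_{\theta} J(\theta)
$$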

Input features & rewards

s can be handcrafted features for the state (like the joint angles/velocity of a robotic arm) but in some problem domains, RL is mature enough to handle raw images directly. π can be a deterministic policy, which outputs the exact action to be taken (move the joystick left or right). π can also be a stochastic policy, which outputs the probability of each action it may take.

We record the reward r given at each time step. In a basketball game, all are 0 except at the terminal state, where it equals 0, 1, 2 or 3.

Let’s introduce one more term H called the horizon. We can run the simulation indefinitely (H→∞) until it reaches the terminal state, or we can set a limit of H steps.

Optimization

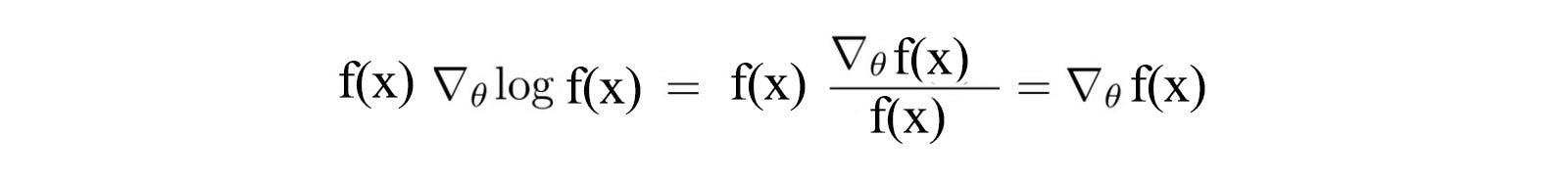

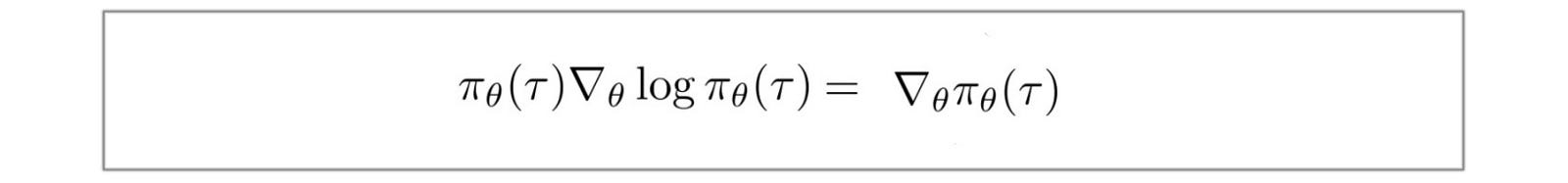

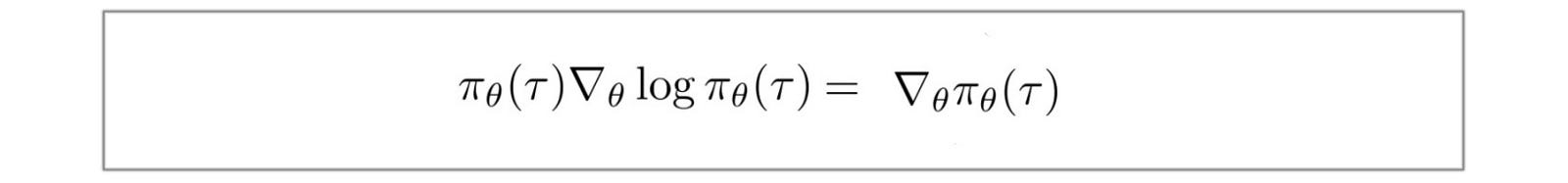

First, let’s identify a common and important trick in Deep Learning and RL. The partial derivative of a function f(x) is equal to f(x) times the partial derivative of log f(x).

Replace f(x) with π.
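Written out, the trick is just the chain rule applied to the logarithm, and it holds wherever $f(x) > 0$. The second line applies it to the trajectory probability $\pi_\theta(\tau)$; the subscript $\theta$ for the policy parameters is notation assumed here.

$$
\nabla_x \log f(x) = \frac{\nabla_x f(x)}{f(x)}
\quad\Longrightarrow\quad
\nabla_x f(x) = f(x)\, \nabla_x \log f(x)
$$

$$
\nabla_\theta \pi_\theta(\tau) = \pi_\theta(\tau)\, \nabla_\theta \log \pi_\theta(\tau)
$$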

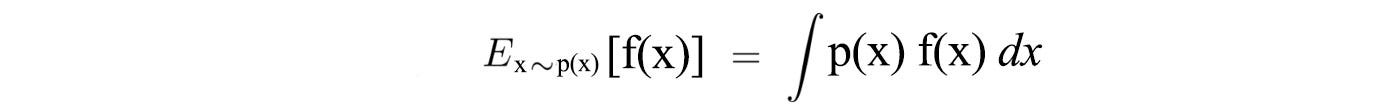

Also, for a continuous space, expectation can be expressed as:
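For a density p(x), the expectation of f is E[f(x)] = ∫ p(x) f(x) dx. The practical consequence used throughout this article is that such an integral can be approximated by averaging f over samples drawn from p. Here is a minimal sketch of that idea; the function name and the choice of a standard normal distribution are illustrative assumptions, not part of the original post.

```python
import random

def monte_carlo_expectation(f, sampler, n_samples, seed=0):
    """Approximate E[f(x)] = integral of p(x) f(x) dx by averaging f over samples from p."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        total += f(sampler(rng))
    return total / n_samples

# x ~ N(0, 1) and f(x) = x^2, so the exact expectation is the variance, 1.0.
estimate = monte_carlo_expectation(
    f=lambda x: x * x,
    sampler=lambda rng: rng.gauss(0.0, 1.0),
    n_samples=100_000,
)
print(estimate)  # close to 1.0, up to sampling noise
```

The estimate gets closer to the exact value as the number of samples grows, which is the same mechanism PG relies on when it averages over sampled trajectories.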

Now, let’s formalize our optimization problem mathematically. We want to model a policy that creates trajectories that maximize the total rewards.

However, to use gradient-based optimization on our problem, do we need to take the derivative of the reward function r, which may not be differentiable or even known in closed form?

Let’s rewrite our objective function J as:

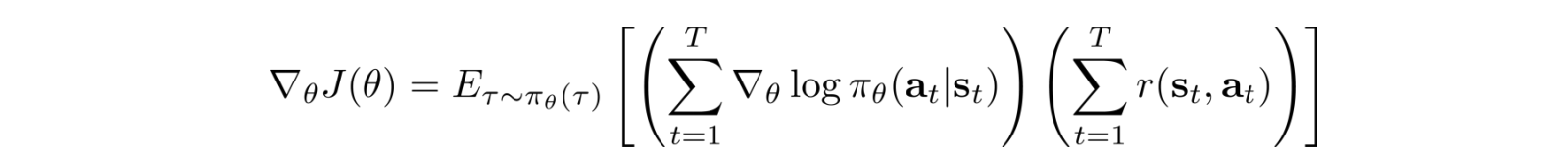

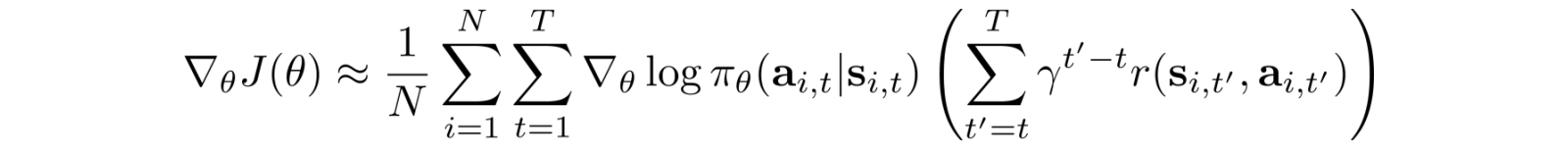

The gradient (policy gradient) becomes:
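Written out in LaTeX, the objective and its gradient can be sketched as below. This is the standard derivation using the log-derivative trick; $R(\tau)$ denotes the total reward of a trajectory (the sum of the per-step rewards r), and the symbol names are assumptions made here.

$$
J(\theta) = \mathbb{E}_{\tau \sim \pi_\theta}\big[R(\tau)\big] = \int \pi_\theta(\tau)\, R(\tau)\, d\tau
$$

$$
\nabla_\theta J(\theta)
= \int \nabla_\theta \pi_\theta(\tau)\, R(\tau)\, d\tau
= \int \pi_\theta(\tau)\, \nabla_\theta \log \pi_\theta(\tau)\, R(\tau)\, d\tau
= \mathbb{E}_{\tau \sim \pi_\theta}\big[\nabla_\theta \log \pi_\theta(\tau)\, R(\tau)\big]
$$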

Great news! The policy gradient can be represented as an expectation. It means we can use sampling to approximate it. Also, we sample the value of r but do not differentiate it. It makes sense because the rewards do not directly depend on how we parameterize the model, but the trajectories τ do. So what is the partial derivative of log π(τ)?

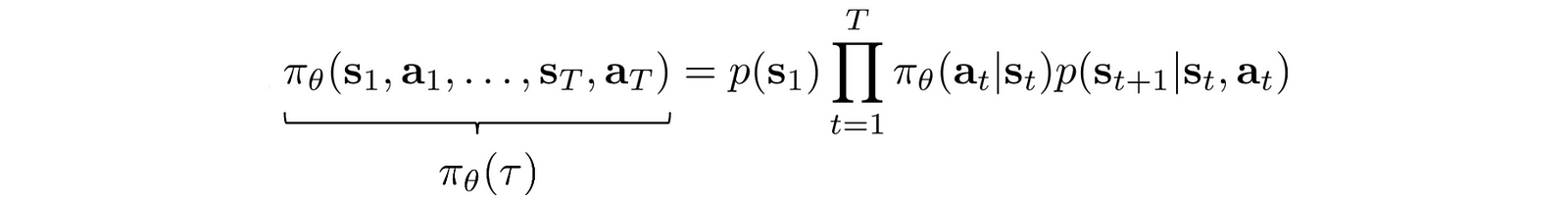

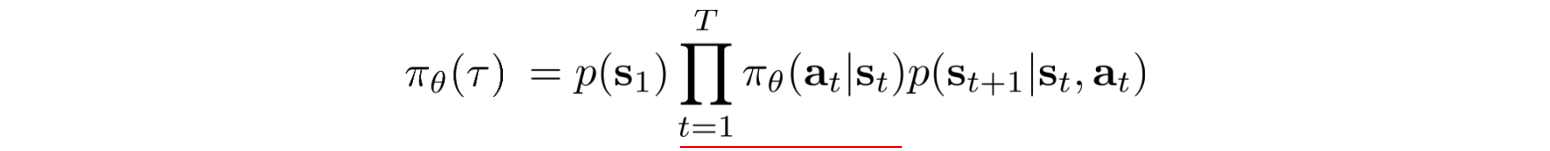

π(τ) is defined as:

Take the log:

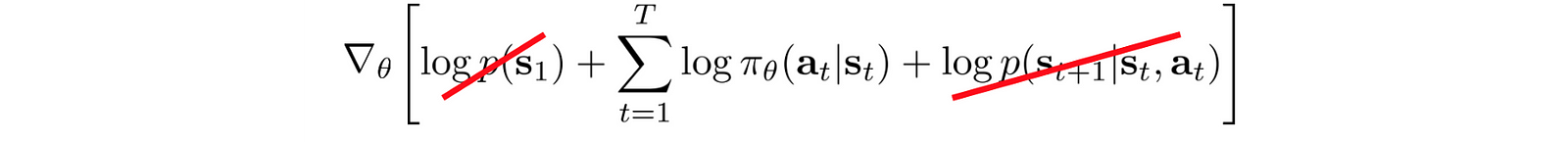

The first and the last terms do not depend on θ and can be removed.
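The three steps above can be written as follows, assuming the usual Markov decision process factorization with initial-state distribution $p(s_1)$ and transition model $p(s_{t+1} \mid s_t, u_t)$ (symbols assumed here, matching the s and u used earlier):

$$
\pi_\theta(\tau) = p(s_1) \prod_{t=1}^{H} \pi_\theta(u_t \mid s_t)\, p(s_{t+1} \mid s_t, u_t)
$$

$$
\log \pi_\theta(\tau) = \log p(s_1) + \sum_{t=1}^{H} \log \pi_\theta(u_t \mid s_t) + \sum_{t=1}^{H} \log p(s_{t+1} \mid s_t, u_t)
$$

$$
\nabla_\theta \log \pi_\theta(\tau) = \sum_{t=1}^{H} \nabla_\theta \log \pi_\theta(u_t \mid s_t)
$$

Only the middle sum involves θ, so the gradient never needs the environment dynamics.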

So the policy gradient becomes:

And we use this policy gradient to update the policy θ.
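As a sketch in LaTeX, the resulting gradient, its sample-based estimate over N trajectories, and the update step look like this. The sample count N and the learning rate α are symbols introduced here for illustration.

$$
\nabla_\theta J(\theta)
= \mathbb{E}_{\tau \sim \pi_\theta}\Big[\Big(\sum_{t=1}^{H} \nabla_\theta \log \pi_\theta(u_t \mid s_t)\Big) R(\tau)\Big]
\approx \frac{1}{N} \sum_{i=1}^{N} \Big(\sum_{t=1}^{H} \nabla_\theta \log \pi_\theta(u_{i,t} \mid s_{i,t})\Big) R(\tau_i)
$$

$$
\theta \leftarrow \theta + \alpha\, \nabla_\theta J(\theta)
$$

The plus sign reflects gradient ascent: we move θ in the direction that increases the expected reward.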

Intuition

How can we make sense of these equations? The underlined term is the gradient of the log likelihood, the same quantity that maximum likelihood estimation uses. In deep learning, it measures the likelihood of the observed data. In our context, it measures how likely the trajectory is under the current policy. By multiplying it with the rewards, we increase the likelihood of a trajectory if it results in a high positive reward, and we decrease its likelihood if it results in a high negative reward. In short, keep what is working and throw out what is not.

If going up the hill below means higher rewards, we will change the model parameters (policy) to increase the likelihood of trajectories that move higher.

There is one significant thing about the policy gradient. The probability of a trajectory is a product: the initial-state probability times, at every time step, the policy probability and the transition probability.

States in a trajectory are strongly related. In Deep Learning, a long sequence of multiplications with strongly correlated factors can easily trigger vanishing or exploding gradients. However, the policy gradient only sums up gradients, which breaks the curse of multiplying a long sequence of numbers.

The log-derivative trick turns the likelihood into a log likelihood, and the log turns the long product of policy terms into a sum.

Policy Gradient with Monte Carlo rollouts

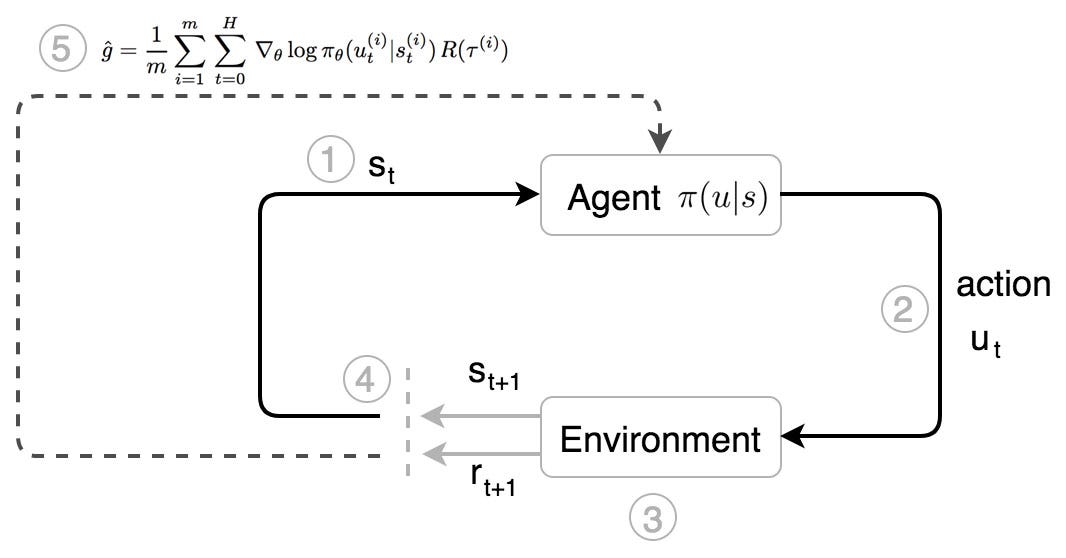

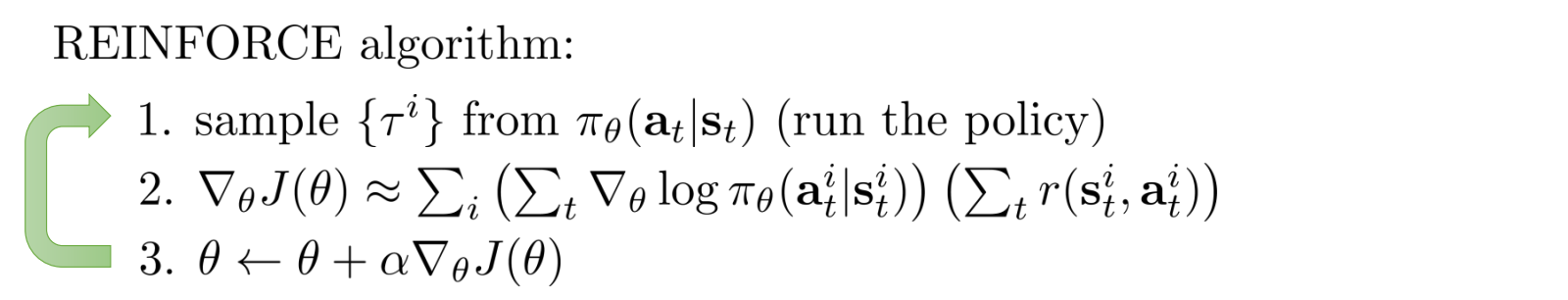

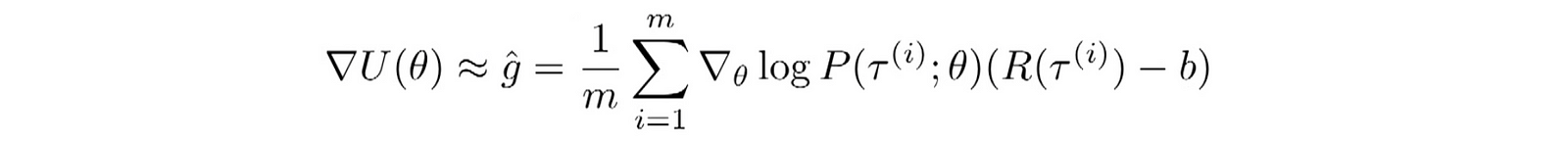

Here is the REINFORCE algorithm, which uses Monte Carlo rollouts to compute the rewards, i.e. it plays out the whole episode to compute the total rewards.
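To make the loop concrete, here is a minimal sketch of REINFORCE in plain Python. Everything about the setup is an assumption chosen for illustration: a hypothetical five-cell corridor as the environment, a tabular softmax policy, and the particular batch size and learning rate. It is a toy, not the algorithm listing from the original post.

```python
import math
import random

N_STATES = 5          # corridor positions 0..4 (hypothetical toy environment)
START, GOAL = 2, 4    # reaching GOAL pays +1, reaching position 0 pays 0
HORIZON = 20          # H: maximum number of steps per episode
ACTIONS = (-1, +1)    # action 0 moves left, action 1 moves right

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]

def rollout(theta, rng):
    """Play one full episode; return the (state, action) pairs and the total reward."""
    s, steps = START, []
    for _ in range(HORIZON):
        probs = softmax(theta[s])
        a = 0 if rng.random() < probs[0] else 1
        steps.append((s, a))
        s += ACTIONS[a]
        if s == GOAL:
            return steps, 1.0
        if s == 0:
            return steps, 0.0
    return steps, 0.0  # ran out of steps without reaching either end

def reinforce(iterations=300, batch=16, lr=0.5, seed=0):
    rng = random.Random(seed)
    theta = [[0.0, 0.0] for _ in range(N_STATES)]  # tabular logits theta[s][a]
    for _ in range(iterations):
        grad = [[0.0, 0.0] for _ in range(N_STATES)]
        for _ in range(batch):
            steps, total_reward = rollout(theta, rng)
            for s, a in steps:
                probs = softmax(theta[s])
                for b in range(2):
                    # d log pi(a|s) / d theta[s][b] for a softmax policy,
                    # weighted by the total reward of the whole episode
                    grad[s][b] += ((1.0 if b == a else 0.0) - probs[b]) * total_reward
        for s in range(N_STATES):
            for b in range(2):
                theta[s][b] += lr * grad[s][b] / batch  # gradient ascent step
    return theta

theta = reinforce()
p_right_at_start = softmax(theta[START])[1]
print(p_right_at_start)  # probability of moving toward the goal from the start cell
```

Each iteration samples whole episodes, weights the log-probability gradient of every action taken by the episode's total reward, and moves the parameters uphill. After training, the policy should prefer moving right from the start cell.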

Policy gradient with automatic differentiation

The policy gradient can be computed easily with many Deep Learning software packages. For example, this is the partial code for TensorFlow:
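The idea behind the autodiff approach is to build a surrogate loss, the negative log-likelihood of the sampled actions weighted by their returns, and let the framework differentiate it. In a framework such as TensorFlow this is typically assembled from a softmax cross-entropy op multiplied by the returns. The sketch below is framework-free and is not the TensorFlow listing: it uses central finite differences as a stand-in for autodiff, and the logits, actions and returns are made-up values, all of which are assumptions for illustration.

```python
import math

def log_softmax(logits):
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]

def surrogate_loss(logits, actions, returns):
    """Negative log-likelihood of the sampled actions, each weighted by its return.
    The returns are treated as constants, so only log pi is differentiated."""
    logp = log_softmax(logits)
    return -sum(logp[a] * r for a, r in zip(actions, returns)) / len(actions)

def analytic_policy_gradient(logits, actions, returns):
    """(1/N) * sum_i grad log pi(a_i) * R_i for a softmax policy."""
    probs = [math.exp(lp) for lp in log_softmax(logits)]
    grad = [0.0] * len(logits)
    for a, r in zip(actions, returns):
        for k in range(len(logits)):
            grad[k] += ((1.0 if k == a else 0.0) - probs[k]) * r
    return [g / len(actions) for g in grad]

def numerical_gradient(f, x, eps=1e-6):
    """Central finite differences, standing in for a framework's autodiff."""
    grad = []
    for k in range(len(x)):
        hi = x[:k] + [x[k] + eps] + x[k + 1:]
        lo = x[:k] + [x[k] - eps] + x[k + 1:]
        grad.append((f(hi) - f(lo)) / (2 * eps))
    return grad

logits = [0.2, -0.1, 0.5]          # hypothetical policy parameters
actions = [0, 2, 2, 1]             # hypothetical sampled actions
returns = [1.0, 3.0, -2.0, 0.5]    # hypothetical sampled returns

loss_grad = numerical_gradient(lambda th: surrogate_loss(th, actions, returns), logits)
pg = analytic_policy_gradient(logits, actions, returns)
print(loss_grad, pg)  # the two vectors are negatives of each other
```

Minimizing the surrogate loss with any gradient-descent optimizer is therefore the same as gradient ascent on the policy-gradient estimate.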

Yes, as often, coding looks simpler than the explanations.

Continuous control with Gaussian policies

How can we model continuous control?

Let’s assume the action values are Gaussian distributed, and the policy is defined using a Gaussian distribution whose mean is computed by a deep network. We can then compute the partial derivative of log π in closed form and backpropagate it through the policy network π to update the policy θ. The algorithm will look exactly the same as before; only the way we calculate the log of the policy is slightly different.
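The Gaussian policy described above can be sketched in LaTeX as follows. The assumptions, made here for illustration, are that $f_\theta(s_t)$ is the network output used as the mean and that the covariance $\Sigma$ is fixed and does not depend on θ.

$$
\pi_\theta(u_t \mid s_t) = \mathcal{N}\big(f_\theta(s_t),\, \Sigma\big)
$$

$$
\log \pi_\theta(u_t \mid s_t) = -\tfrac{1}{2}\, \big(f_\theta(s_t) - u_t\big)^{\top} \Sigma^{-1} \big(f_\theta(s_t) - u_t\big) + \text{const}
$$

$$
\nabla_\theta \log \pi_\theta(u_t \mid s_t) = \Big(\frac{\partial f_\theta(s_t)}{\partial \theta}\Big)^{\top} \Sigma^{-1} \big(u_t - f_\theta(s_t)\big)
$$

The factor $\Sigma^{-1}\big(u_t - f_\theta(s_t)\big)$ is the signal that gets backpropagated through the network: it pulls the mean toward sampled actions, and the reward weighting decides how strongly.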

Policy Gradients improvements

Policy Gradients suffer from high variance and slow convergence.

Monte Carlo plays out the whole trajectory and records the exact rewards of a trajectory. However, the stochastic policy may take different actions in different episodes. One small turn can completely alter the result. So Monte Carlo has no bias but high variance. Variance hurts deep learning optimization: it provides conflicting descent directions for the model to learn. One sampled reward may want to increase the log likelihood and another may want to decrease it. This hurts convergence. To reduce the variance caused by actions, we want to reduce the variance of the sampled rewards.

Increasing the batch size in PG reduces variance.

However, increasing the batch size significantly reduces sample efficiency. So we cannot increase it too far; we need additional mechanisms to reduce the variance.
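The trade-off can be seen numerically. The sketch below measures the variance of a batch-averaged gradient estimate for several batch sizes; the two-action, one-step problem and its reward values are hypothetical choices made only for this illustration.

```python
import random
import statistics

def sample_gradient(rng, p_first=0.5, rewards=(1.0, 3.0)):
    """One-sample policy-gradient estimate for the logit of action 0
    in a hypothetical two-action, one-step problem."""
    a = 0 if rng.random() < p_first else 1
    grad_log_pi = (1.0 if a == 0 else 0.0) - p_first
    return grad_log_pi * rewards[a]

def variance_of_batch_estimate(batch_size, n_batches=2000, seed=0):
    """Variance, across many batches, of the batch-averaged gradient estimate."""
    rng = random.Random(seed)
    estimates = [
        sum(sample_gradient(rng) for _ in range(batch_size)) / batch_size
        for _ in range(n_batches)
    ]
    return statistics.pvariance(estimates)

var_1 = variance_of_batch_estimate(1)
var_16 = variance_of_batch_estimate(16)
var_64 = variance_of_batch_estimate(64)
print(var_1, var_16, var_64)  # shrinks roughly in proportion to 1 / batch_size
```

The variance falls only linearly with the batch size, so each further reduction costs proportionally more samples.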

Baseline

We can always subtract a term from the optimization problem as long as the term does not depend on the actions taken, because its expected contribution to the gradient is then zero. So instead of using the total reward, we subtract V(s) from it.

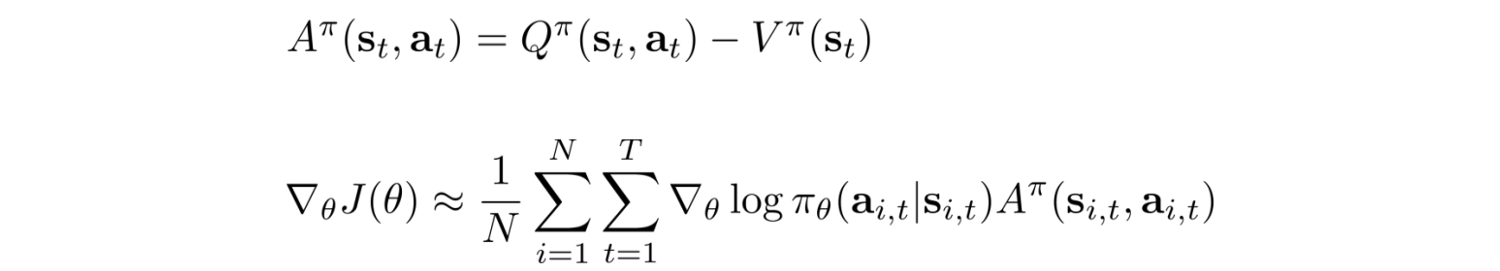

We define the advantage function A and rewrite the policy gradient in terms of A.

In deep learning, we want input features to be zero-centered. Intuitively, RL is interested in knowing whether an action performs better than average. If rewards are always positive (R>0), PG always tries to increase a trajectory’s probability even if it receives much smaller rewards than others. Consider two different situations:

- Situation 1: Trajectory A receives +10 rewards and Trajectory B receives -10 rewards.

- Situation 2: Trajectory A receives +10 rewards and Trajectory B receives +1 rewards.

In the first situation, PG will increase the probability of Trajectory A while decreasing that of B. In the second situation, it will increase both. As humans, we would likely decrease the likelihood of trajectory B in both situations.

By introducing a baseline, like V, we can recalibrate the rewards relative to the average action.
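Situation 2 can be worked through exactly. The sketch below treats trajectories A and B as the two outcomes of a one-step, two-action problem with a hypothetical 50/50 policy, and uses the average reward as the baseline; these modeling choices are assumptions for illustration.

```python
def gradient_stats(rewards, probs, baseline=0.0):
    """Exact mean and variance of the one-sample gradient estimate
    (for the logit of action 0) in a two-action, one-step problem."""
    # grad of log pi(a) w.r.t. logit 0 is 1 - p0 if a == 0, else -p0
    grad_log_pi = [1.0 - probs[0], -probs[0]]
    samples = [g * (r - baseline) for g, r in zip(grad_log_pi, rewards)]
    mean = sum(p * x for p, x in zip(probs, samples))
    var = sum(p * (x - mean) ** 2 for p, x in zip(probs, samples))
    return mean, var

rewards = [10.0, 1.0]   # Situation 2: trajectories A and B
probs = [0.5, 0.5]      # hypothetical current policy
baseline = sum(p * r for p, r in zip(probs, rewards))  # average reward, 5.5

mean_plain, var_plain = gradient_stats(rewards, probs)
mean_base, var_base = gradient_stats(rewards, probs, baseline)
advantages = [r - baseline for r in rewards]

print(advantages)             # [4.5, -4.5]
print(mean_plain, mean_base)  # 2.25 2.25
print(var_plain, var_base)    # 7.5625 0.0
```

The baseline leaves the expected gradient unchanged but removes the variance in this example, and the advantages now have opposite signs, so B is pushed down while A is pushed up.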

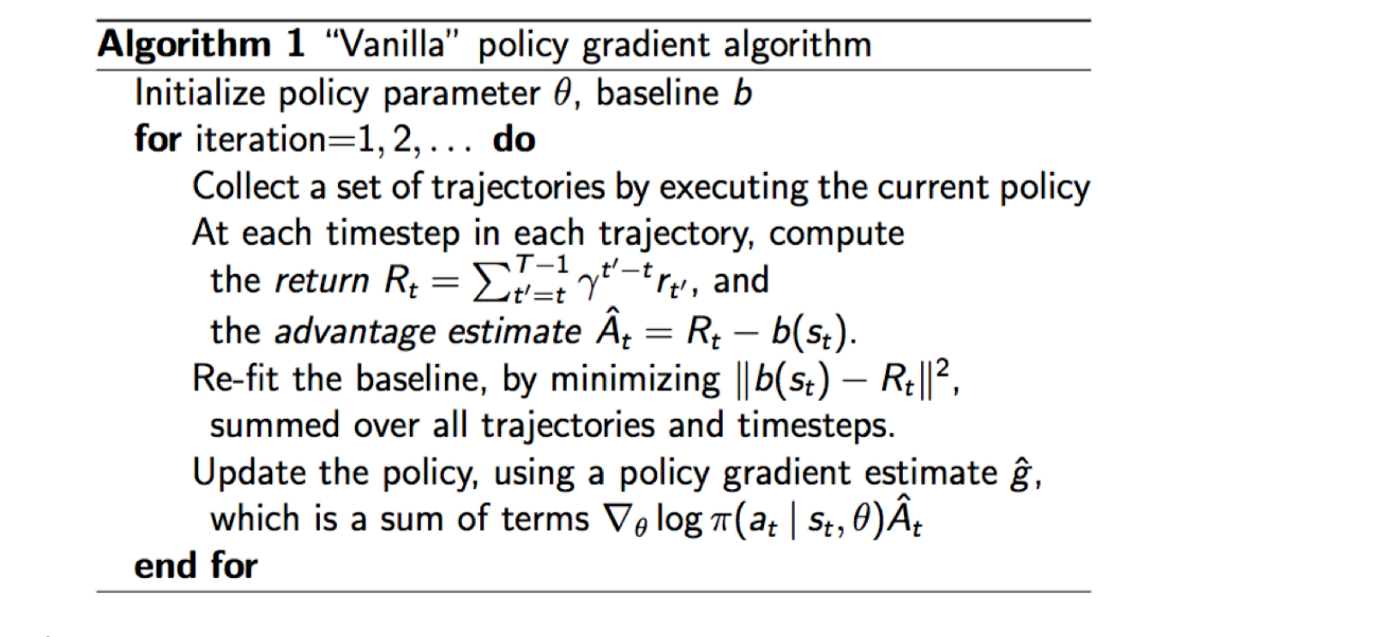

Vanilla Policy Gradient Algorithm

Here is the generic algorithm for the Policy Gradient Algorithm using a baseline b.

Causality

Future actions should not change past decisions: present actions only impact the future, so the action taken at time t cannot affect rewards received before t. Therefore, we can change our objective function to reflect this also.
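In practice this means each action is weighted by the rewards collected from its own time step onward (often called the reward-to-go) instead of the total reward of the episode. A minimal sketch, with a function name chosen here for illustration:

```python
def rewards_to_go(rewards):
    """For each step t, sum the rewards from t to the end of the episode."""
    out = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running += rewards[t]
        out[t] = running
    return out

returns = rewards_to_go([1.0, 0.0, 2.0])
print(returns)  # [3.0, 2.0, 2.0]
```

The first action is credited with everything that follows it, while the last action is credited only with the final reward.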

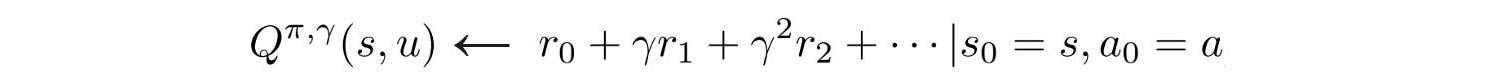

Reward discount

Reward discount reduces the impact of distant rewards, which in turn reduces variance. Here, a different formula is used to compute the total rewards.

And the corresponding objective function becomes:
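With a discount factor γ between 0 and 1, a common form of the discounted reward-to-go at step t is the sum over t' ≥ t of γ^(t'−t) · r(t'), and each ∇θ log π(u_t | s_t) term is weighted by that quantity. The sketch below computes it with a backward pass; the function name and the default γ are assumptions for illustration.

```python
def discounted_rewards_to_go(rewards, gamma=0.99):
    """For each step t, compute sum over t' >= t of gamma**(t' - t) * rewards[t']."""
    out = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        out[t] = running
    return out

discounted = discounted_rewards_to_go([1.0, 1.0, 1.0], gamma=0.5)
print(discounted)  # [1.75, 1.5, 1.0]
```

Setting γ to 1 recovers the undiscounted reward-to-go, and smaller values shrink the contribution of rewards that arrive many steps later.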

Part 2

This ends part 1 of the policy gradient methods. In the second part, we continue with Temporal Difference, hyperparameter tuning, and importance sampling. Temporal Difference will further reduce the variance, and importance sampling will lay down the theoretical foundation for more advanced policy gradient methods like TRPO and PPO.
