Deploying Zookeeper and Kafka on OpenShift

Deploying Zookeeper

GitHub repository

https://github.com/ericnie2015/zookeeper-k8s-openshift

1. In the openshift directory, first build the image

oc create -f buildconfig.yaml

oc new-app zk-builder -p IMAGE_STREAM_VERSION="3.4.13"

buildconfig.yaml mainly triggers a Docker-type build from the GitHub repository and pushes the result to the image stream.

- kind: BuildConfig
  apiVersion: v1
  metadata:
    name: zk-builder
  spec:
    runPolicy: Serial
    triggers:
      - type: GitHub
        github:
          secret: ${GITHUB_HOOK_SECRET}
      - type: ConfigChange
    source:
      git:
        uri: ${GITHUB_REPOSITORY}
        ref: ${GITHUB_REF}
    strategy:
      type: Docker
    output:
      to:
        kind: ImageStreamTag
        name: "${IMAGE_STREAM_NAME}:${IMAGE_STREAM_VERSION}"
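Because the BuildConfig carries a ConfigChange trigger, a first build should start on its own once the template is instantiated. The following is a minimal sketch for following that build and checking that a tag was produced. The `run` helper and the `DRY_RUN` switch are illustrative additions that are not part of the repository; by default the script only prints the commands.

```shell
#!/bin/sh
# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

follow_zk_build() {
  # Stream the log of the latest build created from the zk-builder BuildConfig.
  run oc logs -f bc/zk-builder
  # List image stream tags to confirm that the build output was pushed.
  run oc get istag
}

follow_zk_build
```

Set `DRY_RUN=0` to actually run the commands against the current project.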

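The Dockerfile, by contrast, builds the image from scratch. To work around the container being unable to access the /opt/zookeeper/data directory at startup, the user is set to root.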

FROM openjdk:-jre-alpine

MAINTAINER Enrique Garcia <engapa@gmail.com>

ARG ZOO_HOME=/opt/zookeeper
ARG ZOO_USER=zookeeper
ARG ZOO_GROUP=zookeeper
ARG ZOO_VERSION="3.4.13"

ENV ZOO_HOME=$ZOO_HOME \
    ZOO_VERSION=$ZOO_VERSION \
    ZOO_CONF_DIR=$ZOO_HOME/conf \
    ZOO_REPLICAS=

# Required packages
RUN set -ex; \
    apk add --update --no-cache \
      bash tar wget curl gnupg openssl ca-certificates

# Download zookeeper distribution under ZOO_HOME /zookeeper-3.4./
ADD zk_download.sh /tmp/

RUN set -ex; \
    mkdir -p /opt/zookeeper/bin; \
    mkdir -p /opt/zookeeper/conf; \
    chmod a+x /tmp/zk_download.sh;

RUN /tmp/zk_download.sh

# Clean up the download script and the packages only needed for the download
RUN set -ex; \
    rm -rf /tmp/zk_download.sh; \
    apk del wget gnupg

# Add custom scripts and configure user
ADD zk_env.sh zk_setup.sh zk_status.sh /opt/zookeeper/bin/

RUN set -ex; \
    chmod a+x $ZOO_HOME/bin/zk_*.sh; \
    addgroup $ZOO_GROUP; \
    addgroup sudo; \
    adduser -h $ZOO_HOME -g "Zookeeper user" -s /sbin/nologin -D -G $ZOO_GROUP -G sudo $ZOO_USER; \
    chown -R $ZOO_USER:$ZOO_GROUP $ZOO_HOME; \
    ln -s $ZOO_HOME/bin/zk_*.sh /usr/bin

# Run as root to avoid the permission error on /opt/zookeeper/data
USER root
#USER $ZOO_USER

WORKDIR $ZOO_HOME/bin/

# EXPOSE ${ZK_clientPort:-} ${ZOO_SERVER_PORT:-} ${ZOO_ELECTION_PORT:-}

ENTRYPOINT ["./zk_env.sh"]

#RUN echo "aaa" > /usr/alog
#CMD ["tail","-f","/usr/alog"]

CMD zk_setup.sh && ./zkServer.sh start-foreground

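If the virtual machine cannot reach the Internet because of network problems, you can first log in to the registry and then build directly on the local machine.

ericdeMacBook-Pro:zookeeper-k8s-openshift ericnie$ docker build -t 172.30.1.1:5000/myproject/zookeeper:3.4.13 .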

Sending build context to Docker daemon .78kB

Step / : FROM openjdk:-jre-alpine

Trying to pull repository registry.access.redhat.com/openjdk ...

Trying to pull repository docker.io/library/openjdk ...

sha256:e3168174d367db9928bb70e33b4750457092e61815d577e368f53efb29fea48b: Pulling from docker.io/library/openjdk

4fe2ade4980c: Pull complete

6fc58a8d4ae4: Pull complete

d3e6d7e9702a: Pull complete

Digest: sha256:e3168174d367db9928bb70e33b4750457092e61815d577e368f53efb29fea48b

Status: Downloaded newer image for docker.io/openjdk:-jre-alpine

---> 0fe3f0d1ee48

docker images

Then push it to the image registry

ericdeMacBook-Pro:zookeeper-k8s-openshift ericnie$ docker push 172.30.1.1:5000/myproject/zookeeper:3.4.13

The push refers to a repository [172.30.1.1:/myproject/zookeeper]

5fe222836c76: Pushed

55e1a1171f7a: Pushed

347a06ac9233: Pushed

03a33ce83585: Pushed

94058c4e233d: Pushed

984d85b76d76: Pushed

cd4b8e8a8238: Pushed

12c374f8270a: Pushed

0c3170905795: Pushed

df64d3292fd6: Pushed

3.4.: digest: sha256:87bf78acf297bc2144d77ce4465294fec519fd50a4c197a1663cc4304c8040c9 size:

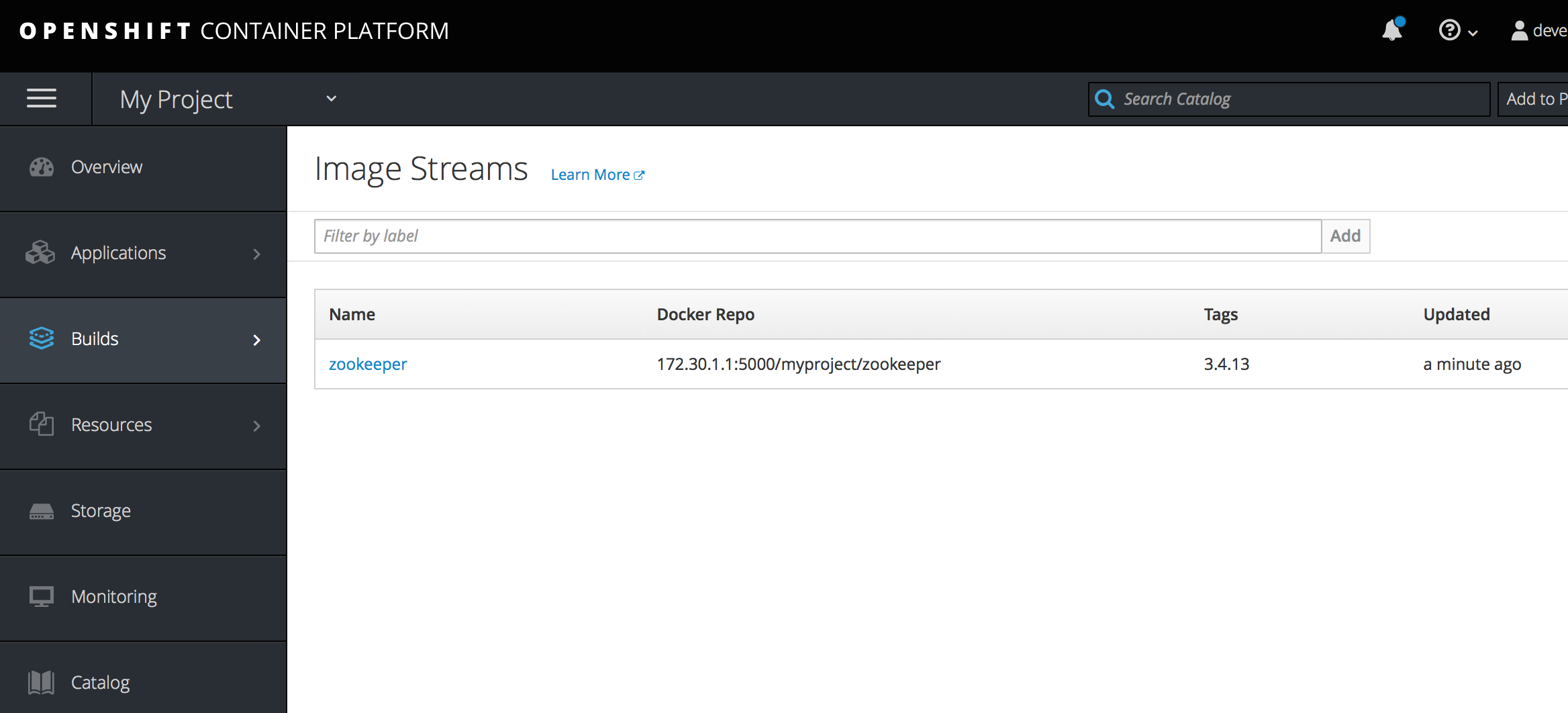

Once the push completes, the image stream is visible in the console.
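The local build path described above can be sketched as one script: log in to the internal registry with the current OpenShift session token, build, push, and then look up the resulting image stream. The registry address `172.30.1.1:5000` and the project `myproject` are taken from this post; the `run` helper and `DRY_RUN` switch are illustrative assumptions, and in dry-run mode the user and token are shown as placeholders rather than fetched.

```shell
#!/bin/sh
REGISTRY="172.30.1.1:5000"      # internal registry address used in this post
IMAGE="$REGISTRY/myproject/zookeeper:3.4.13"

# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

push_local_image() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    user='<oc whoami>'
    token='<oc whoami -t>'
  else
    user=$(oc whoami)        # current OpenShift user
    token=$(oc whoami -t)    # session token, used as the registry password
  fi
  run docker login -u "$user" -p "$token" "$REGISTRY"
  run docker build -t "$IMAGE" .
  run docker push "$IMAGE"
  # Pushing to <registry>/<project>/<name> creates an image stream called <name>.
  run oc get is zookeeper
}

push_local_image
```

Run it from the directory that contains the Dockerfile, with `DRY_RUN=0` once the printed commands look right.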

2. Deploying from a template

Create the deployment template

oc create -f zk-persistent.yaml

ericdeMacBook-Pro:openshift ericnie$ cat zk-persistent.yaml

kind: Template
apiVersion: v1
metadata:
  name: zk-persistent
  annotations:
    openshift.io/display-name: Zookeeper (Persistent)
    description: Create a replicated Zookeeper server with persistent storage
    iconClass: icon-database
    tags: database,zookeeper
labels:
  template: zk-persistent
  component: zk
parameters:
- name: NAME
  value: zk-persistent
  required: true
- name: SOURCE_IMAGE
  description: Container image
  value: 172.30.1.1:5000/myproject/zookeeper
  required: true
- name: ZOO_VERSION
  description: Version
  value: "3.4.13"
  required: true
- name: ZOO_REPLICAS
  description: Number of nodes
  value: ""
  required: true
- name: VOLUME_DATA_CAPACITY
  description: Persistent volume capacity for zookeeper dataDir directory (e.g. 512Mi, 2Gi)
  value: 1Gi
  required: true
- name: VOLUME_DATALOG_CAPACITY
  description: Persistent volume capacity for zookeeper dataLogDir directory (e.g. 512Mi, 2Gi)
  value: 1Gi
  required: true
- name: ZOO_TICK_TIME
  description: The number of milliseconds of each tick
  value: ""
  required: true
- name: ZOO_INIT_LIMIT
  description: The number of ticks that the initial synchronization phase can take
  value: ""
  required: true
- name: ZOO_SYNC_LIMIT
  description: The number of ticks that can pass between sending a request and getting an acknowledgement
  value: ""
  required: true
- name: ZOO_CLIENT_PORT
  description: The port at which the clients will connect
  value: ""
  required: true
- name: ZOO_SERVER_PORT
  description: Server port
  value: ""
  required: true
- name: ZOO_ELECTION_PORT
  description: Election port
  value: ""
  required: true
- name: ZOO_MAX_CLIENT_CNXNS
  description: The maximum number of client connections
  value: ""
  required: true
- name: ZOO_SNAP_RETAIN_COUNT
  description: The number of snapshots to retain in dataDir
  value: ""
  required: true
- name: ZOO_PURGE_INTERVAL
  description: Purge task interval in hours. Set to 0 to disable auto purge feature
  value: ""
  required: true
- name: ZOO_HEAP_SIZE
  description: JVM heap size
  value: "-Xmx960M -Xms960M"
  required: true
- name: RESOURCE_MEMORY_REQ
  description: The memory resource request.
  value: "1Gi"
  required: true
- name: RESOURCE_MEMORY_LIMIT
  description: The limits for memory resource.
  value: "1Gi"
  required: true
- name: RESOURCE_CPU_REQ
  description: The CPU resource request.
  value: ""
  required: true
- name: RESOURCE_CPU_LIMIT
  description: The limits for CPU resource.
  value: ""
  required: true

objects:
- apiVersion: v1
  kind: Service
  metadata:
    name: ${NAME}
    labels:
      zk-name: ${NAME}
    annotations:
      service.alpha.kubernetes.io/tolerate-unready-endpoints: "true"
  spec:
    ports:
    - port: ${ZOO_CLIENT_PORT}
      name: client
    - port: ${ZOO_SERVER_PORT}
      name: server
    - port: ${ZOO_ELECTION_PORT}
      name: election
    clusterIP: None
    selector:
      zk-name: ${NAME}
- apiVersion: apps/v1beta1
  kind: StatefulSet
  metadata:
    name: ${NAME}
    labels:
      zk-name: ${NAME}
  spec:
    podManagementPolicy: "Parallel"
    serviceName: ${NAME}
    replicas: ${ZOO_REPLICAS}
    template:
      metadata:
        labels:
          zk-name: ${NAME}
          template: zk-persistent
          component: zk
        annotations:
          scheduler.alpha.kubernetes.io/affinity: >
            {
              "podAntiAffinity": {
                "requiredDuringSchedulingIgnoredDuringExecution": [{
                  "labelSelector": {
                    "matchExpressions": [{
                      "key": "zk-name",
                      "operator": "In",
                      "values": ["${NAME}"]
                    }]
                  },
                  "topologyKey": "kubernetes.io/hostname"
                }]
              }
            }
      spec:
        containers:
        - name: ${NAME}
          imagePullPolicy: IfNotPresent
          image: ${SOURCE_IMAGE}:${ZOO_VERSION}
          resources:
            requests:
              memory: ${RESOURCE_MEMORY_REQ}
              cpu: ${RESOURCE_CPU_REQ}
            limits:
              memory: ${RESOURCE_MEMORY_LIMIT}
              cpu: ${RESOURCE_CPU_LIMIT}
          ports:
          - containerPort: ${ZOO_CLIENT_PORT}
            name: client
          - containerPort: ${ZOO_SERVER_PORT}
            name: server
          - containerPort: ${ZOO_ELECTION_PORT}
            name: election
          env:
          - name: SETUP_DEBUG
            value: "true"
          - name: ZOO_REPLICAS
            value: ${ZOO_REPLICAS}
          - name: ZK_HEAP_SIZE
            value: ${ZOO_HEAP_SIZE}
          - name: ZK_tickTime
            value: ${ZOO_TICK_TIME}
          - name: ZK_initLimit
            value: ${ZOO_INIT_LIMIT}
          - name: ZK_syncLimit
            value: ${ZOO_SYNC_LIMIT}
          - name: ZK_maxClientCnxns
            value: ${ZOO_MAX_CLIENT_CNXNS}
          - name: ZK_autopurge_snapRetainCount
            value: ${ZOO_SNAP_RETAIN_COUNT}
          - name: ZK_autopurge_purgeInterval
            value: ${ZOO_PURGE_INTERVAL}
          - name: ZK_clientPort
            value: ${ZOO_CLIENT_PORT}
          - name: ZOO_SERVER_PORT
            value: ${ZOO_SERVER_PORT}
          - name: ZOO_ELECTION_PORT
            value: ${ZOO_ELECTION_PORT}
          - name: JAVA_ZK_JVMFLAG
            value: "\"${ZOO_HEAP_SIZE}\""
          readinessProbe:
            exec:
              command:
              - zk_status.sh
            initialDelaySeconds:
            timeoutSeconds:
          livenessProbe:
            exec:
              command:
              - zk_status.sh
            initialDelaySeconds:
            timeoutSeconds:
          volumeMounts:
          - name: datadir
            mountPath: /opt/zookeeper/data
          - name: datalogdir
            mountPath: /opt/zookeeper/data-log
    volumeClaimTemplates:
    - metadata:
        name: datadir
        annotations:
          volume.alpha.kubernetes.io/storage-class: anything
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: ${VOLUME_DATA_CAPACITY}
    - metadata:
        name: datalogdir
        annotations:
          volume.alpha.kubernetes.io/storage-class: anything
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: ${VOLUME_DATALOG_CAPACITY}

oc new-app zk-persistent -p NAME=myzk

--> Deploying template "test/zk-persistent" to project test

     Zookeeper (Persistent)
     ---------
     Create a replicated Zookeeper server with persistent storage

     * With parameters:
        * NAME=myzk
        * SOURCE_IMAGE=bbvalabs/zookeeper
        * ZOO_VERSION=3.4.
        * ZOO_REPLICAS=
        * VOLUME_DATA_CAPACITY=1Gi
        * VOLUME_DATALOG_CAPACITY=1Gi
        * ZOO_TICK_TIME=
        * ZOO_INIT_LIMIT=
        * ZOO_SYNC_LIMIT=
        * ZOO_CLIENT_PORT=
        * ZOO_SERVER_PORT=
        * ZOO_ELECTION_PORT=
        * ZOO_MAX_CLIENT_CNXNS=
        * ZOO_SNAP_RETAIN_COUNT=
        * ZOO_PURGE_INTERVAL=
        * ZOO_HEAP_SIZE=-Xmx960M -Xms960M
        * RESOURCE_MEMORY_REQ=1Gi
        * RESOURCE_MEMORY_LIMIT=1Gi
        * RESOURCE_CPU_REQ=
        * RESOURCE_CPU_LIMIT=

--> Creating resources ...
    service "myzk" created
    statefulset "myzk" created
--> Success
    Run 'oc status' to view your app.

$ oc get all,pvc,statefulset -l zk-name=myzk

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE
svc/myzk None <none> /TCP,/TCP,/TCP 11m

NAME DESIRED CURRENT AGE
statefulsets/myzk 11m

NAME READY STATUS RESTARTS AGE
po/myzk- / Running 2m
po/myzk- / Running 1m
po/myzk- / Running 46s

NAME STATUS VOLUME CAPACITY ACCESSMODES AGE
pvc/datadir-myzk- Bound pvc-a654d055-6dfa-11e7-abe1-42010a840002 1Gi RWO 11m
pvc/datadir-myzk- Bound pvc-a6601148-6dfa-11e7-abe1-42010a840002 1Gi RWO 11m
pvc/datadir-myzk- Bound pvc-a667fa41-6dfa-11e7-abe1-42010a840002 1Gi RWO 11m
pvc/datalogdir-myzk- Bound pvc-a657ff77-6dfa-11e7-abe1-42010a840002 1Gi RWO 11m
pvc/datalogdir-myzk- Bound pvc-a664407a-6dfa-11e7-abe1-42010a840002 1Gi RWO 11m
pvc/datalogdir-myzk- Bound pvc-a66b85f7-6dfa-11e7-abe1-42010a840002 1Gi RWO 11m

NAME DESIRED CURRENT AGE
statefulsets/myzk 11m

On CDK or minishift there is only one node, so only one myzk pod will start.

ericdeMacBook-Pro:openshift ericnie$ oc get pods

NAME READY STATUS RESTARTS AGE

myzk- / Running 1m

myzk- / Pending 1m

myzk- / Pending 1m
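If the Pending pods are indeed caused by the anti-affinity rule on a single node, one option is to request a single replica through the template's `ZOO_REPLICAS` parameter, and to read the scheduler events of a Pending pod to confirm the cause. This is a sketch under that assumption; the value `1` and the `run` / `DRY_RUN` helper are illustrative and not from the original post.

```shell
#!/bin/sh
# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

deploy_single_node_zk() {
  # One replica, so the anti-affinity rule has nothing to conflict with (assumed value).
  run oc new-app zk-persistent -p NAME=myzk -p ZOO_REPLICAS=1
}

explain_pending() {
  # $1 = pod name; the Events section usually states why scheduling failed.
  run oc describe pod "$1"
}

deploy_single_node_zk
```

Calling `explain_pending <pod-name>` on one of the Pending pods from the listing above shows whether scheduling, storage, or resources is the blocker.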

Verify the deployment

ericdeMacBook-Pro:openshift ericnie$ for i in ; do oc exec myzk-$i -- hostname; done
myzk-
myzk-
myzk-

ericdeMacBook-Pro:openshift ericnie$ for i in ; do echo "myid myzk-$i";oc exec myzk-$i -- cat /opt/zookeeper/data/myid; done
myid myzk-
myid myzk-
myid myzk-

ericdeMacBook-Pro:openshift ericnie$ for i in ; do oc exec myzk-$i -- hostname -f; done
myzk-.myzk.myproject.svc.cluster.local
myzk-.myzk.myproject.svc.cluster.local
myzk-.myzk.myproject.svc.cluster.local
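The verification loops can be wrapped in a small script. This sketch assumes three replicas (the listings show three pods) and relies on StatefulSet pods being named `<name>-<ordinal>` starting at 0. The function names and the `run` / `DRY_RUN` helper are illustrative.

```shell
#!/bin/sh
# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Print the pod names of a StatefulSet: $1 = name, $2 = replica count.
zk_pod_names() {
  name=$1
  count=$2
  i=0
  names=""
  while [ "$i" -lt "$count" ]; do
    names="${names:+$names }$name-$i"
    i=$((i + 1))
  done
  echo "$names"
}

# For each pod, show its fully qualified hostname and its Zookeeper myid.
verify_zk() {
  for pod in $(zk_pod_names "$1" "$2"); do
    run oc exec "$pod" -- hostname -f
    run oc exec "$pod" -- cat /opt/zookeeper/data/myid
  done
}

verify_zk myzk 3
```

Each pod is expected to report a distinct myid and a hostname of the form `<pod>.myzk.<project>.svc.cluster.local`.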

3. Deleting the instance

oc delete all,statefulset,pvc -l zk-name=myzk

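Problems encountered along the way

- After starting, the pod kept crashing; the log showed that zk_status.sh could not be found. After a long time adjusting the Dockerfile, I found that the deployment template was using a downloaded zookeeper:3.4.13 image rather than the locally built one, so I explicitly changed the image to 172.30.1.1:5000/myproject/zookeeper.

- The pod could not start and reported that it had no permission to access the /opt/zookeeper/data directory. After removing the runAs statement from the SecurityContext, the container starts as root, which avoids the problem.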
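Running as root is the workaround used here. A commonly recommended alternative on OpenShift, which starts containers under an arbitrary UID that belongs to the root group (GID 0), is to make the relevant directories group-owned by GID 0 and give the group the same permissions as the owner. The function below is a sketch of that idea and is not what the post's Dockerfile does; the function name and its optional group argument are illustrative. It only affects directories baked into the image; a mounted volume takes its permissions from the volume itself.

```shell
#!/bin/sh
# Make a directory usable by any UID that is a member of the owning group.
# $1 = directory, $2 = group (defaults to 0, the root group used by OpenShift's arbitrary UIDs)
make_group_writable() {
  dir=$1
  group=${2:-0}
  mkdir -p "$dir"
  chgrp -R "$group" "$dir"   # hand the tree to the group
  chmod -R g=u "$dir"        # give the group the owner's permissions
}

# Inside an image build this would be called on the data directories, for example:
#   make_group_writable /opt/zookeeper/data
#   make_group_writable /opt/zookeeper/data-log
```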

Deploying Kafka

The process is similar to the one for Zookeeper.

1. Clone the code

ericdeMacBook-Pro:minishift ericnie$ git clone https://github.com/ericnie2015/kafka-k8s-openshift.git

Cloning into 'kafka-k8s-openshift'...

remote: Enumerating objects: , done.

remote: Total (delta ), reused (delta ), pack-reused

Receiving objects: % (/), 102.01 KiB | 24.00 KiB/s, done.

Resolving deltas: % (/), done.

2. Build locally and push to the image registry

ericdeMacBook-Pro:kafka-k8s-openshift ericnie$ docker build -t 172.30.1.1:5000/myproject/kafka:2.12-2.0.0 .

Sending build context to Docker daemon .53kB

Step / : FROM openjdk:-jre-alpine

---> 0fe3f0d1ee48

Step / : MAINTAINER Enrique Garcia <engapa@gmail.com>

---> Using cache

---> e51b1e313e0c

Step / : ARG KAFKA_HOME=/opt/kafka

---> Running in 0a464e9d1781

---> abadcf5d52d5

Removing intermediate container 0a464e9d1781

Step / : ARG KAFKA_USER=kafka

---> Running in b2e50be2d35b

---> e3f1455c4aca

ericdeMacBook-Pro:kafka-k8s-openshift ericnie$ docker push 172.30.1.1:5000/myproject/kafka:2.12-2.0.0

The push refers to a repository [172.30.1.1:/myproject/kafka]

84cb97552ea5: Pushed

681963d6c624: Pushed

47afbbc52b62: Pushed

81d8600a6e97: Pushed

8457712c19b8: Pushed

6286fd332b87: Pushed

c2f9d211658b: Pushed

12c374f8270a: Mounted from myproject/zookeeper

0c3170905795: Mounted from myproject/zookeeper

df64d3292fd6: Mounted from myproject/zookeeper

2.12-2.0.: digest: sha256:9ed95c9c7682b49f76d4b5454a704db5ba9561127fe86fe6ca52bd673c279ee5 size:

3. Deploying from a template

ericdeMacBook-Pro:openshift ericnie$ cat kafka-persistent.yaml

kind: Template
apiVersion: v1
metadata:
  name: kafka-persistent
  annotations:
    openshift.io/display-name: Kafka (Persistent)
    description: Create a Kafka cluster, with persistent storage.
    iconClass: icon-database
    tags: messaging,kafka
labels:
  template: kafka-persistent
  component: kafka
parameters:
- name: NAME
  description: Name.
  required: true
  value: kafka
- name: KAFKA_VERSION
  description: Kafka Version (Scala and kafka version).
  required: true
  value: "2.12-2.0.0"
- name: SOURCE_IMAGE
  description: Container image source.
  value: 172.30.1.1:5000/myproject/kafka
  required: true
- name: REPLICAS
  description: Number of replicas.
  required: true
  value: ""
- name: KAFKA_HEAP_OPTS
  description: Kafka JVM Heap options. Consider value of params RESOURCE_MEMORY_REQ and RESOURCE_MEMORY_LIMIT.
  required: true
  value: "-Xmx1960M -Xms1960M"
- name: SERVER_NUM_PARTITIONS
  description: >
    The default number of log partitions per topic.
    More partitions allow greater
    parallelism for consumption, but this will also result in more files across
    the brokers.
  required: true
  value: ""
- name: SERVER_DELETE_TOPIC_ENABLE
  description: >
    Topic deletion enabled.
    Switch to enable topic deletion or not, default value is 'true'
  value: "true"
- name: SERVER_LOG_RETENTION_HOURS
  description: >
    Log retention hours.
    The minimum age of a log file to be eligible for deletion.
  value: ""
- name: SERVER_ZOOKEEPER_CONNECT
  description: >
    Zookeeper connection string, a list as URL with nodes separated by ','.
  value: "zk-persistent-0.zk-persistent:2181,zk-persistent-1.zk-persistent:2181,zk-persistent-2.zk-persistent:2181"
  required: true
- name: SERVER_ZOOKEEPER_CONNECT_TIMEOUT
  description: >
    The max time that the client waits to establish a connection to zookeeper (ms).
  value: ""
  required: true
- name: VOLUME_KAFKA_CAPACITY
  description: Kafka logs capacity.
  required: true
  value: "10Gi"
- name: RESOURCE_MEMORY_REQ
  description: The memory resource request.
  value: "2Gi"
- name: RESOURCE_MEMORY_LIMIT
  description: The limits for memory resource.
  value: "2Gi"
- name: RESOURCE_CPU_REQ
  description: The CPU resource request.
  value: ""
- name: RESOURCE_CPU_LIMIT
  description: The limits for CPU resource.
  value: ""
- name: LP_INITIAL_DELAY
  description: >
    LivenessProbe initial delay in seconds.
  value: ""

objects:
- apiVersion: v1
  kind: Service
  metadata:
    name: ${NAME}
    labels:
      app: ${NAME}
    annotations:
      service.alpha.kubernetes.io/tolerate-unready-endpoints: "true"
  spec:
    ports:
    - port:
      name: server
    - port:
      name: zkclient
    - port:
      name: zkserver
    - port:
      name: zkleader
    clusterIP: None
    selector:
      app: ${NAME}
- apiVersion: apps/v1beta1
  kind: StatefulSet
  metadata:
    name: ${NAME}
    labels:
      app: ${NAME}
  spec:
    podManagementPolicy: "Parallel"
    serviceName: ${NAME}
    replicas: ${REPLICAS}
    template:
      metadata:
        labels:
          app: ${NAME}
          template: kafka-persistent
          component: kafka
        annotations:
          # Use this annotation if you want allocate each pod on different node
          # Note the number of nodes must be upper than REPLICAS parameter.
          scheduler.alpha.kubernetes.io/affinity: >
            {
              "podAntiAffinity": {
                "requiredDuringSchedulingIgnoredDuringExecution": [{
                  "labelSelector": {
                    "matchExpressions": [{
                      "key": "app",
                      "operator": "In",
                      "values": ["${NAME}"]
                    }]
                  },
                  "topologyKey": "kubernetes.io/hostname"
                }]
              }
            }
      spec:
        containers:
        - name: ${NAME}
          imagePullPolicy: IfNotPresent
          image: ${SOURCE_IMAGE}:${KAFKA_VERSION}
          resources:
            requests:
              memory: ${RESOURCE_MEMORY_REQ}
              cpu: ${RESOURCE_CPU_REQ}
            limits:
              memory: ${RESOURCE_MEMORY_LIMIT}
              cpu: ${RESOURCE_CPU_LIMIT}
          ports:
          - containerPort:
            name: server
          - containerPort:
            name: zkclient
          - containerPort:
            name: zkserver
          - containerPort:
            name: zkleader
          env:
          - name: KAFKA_REPLICAS
            value: ${REPLICAS}
          - name: KAFKA_ZK_LOCAL
            value: "false"
          - name: KAFKA_HEAP_OPTS
            value: ${KAFKA_HEAP_OPTS}
          - name: SERVER_num_partitions
            value: ${SERVER_NUM_PARTITIONS}
          - name: SERVER_delete_topic_enable
            value: ${SERVER_DELETE_TOPIC_ENABLE}
          - name: SERVER_log_retention_hours
            value: ${SERVER_LOG_RETENTION_HOURS}
          - name: SERVER_zookeeper_connect
            value: ${SERVER_ZOOKEEPER_CONNECT}
          - name: SERVER_log_dirs
            value: "/opt/kafka/data/logs"
          - name: SERVER_zookeeper_connection_timeout_ms
            value: ${SERVER_ZOOKEEPER_CONNECT_TIMEOUT}
          livenessProbe:
            exec:
              command:
              - kafka_server_status.sh
            initialDelaySeconds: ${LP_INITIAL_DELAY}
            timeoutSeconds:
          volumeMounts:
          - name: kafka-data
            mountPath: /opt/kafka/data
    volumeClaimTemplates:
    - metadata:
        name: kafka-data
        annotations:
          volume.alpha.kubernetes.io/storage-class: anything
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: ${VOLUME_KAFKA_CAPACITY}

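Modify the image registry and the Zookeeper address in the template. I only have one Zookeeper instance, so I changed the address list to a single entry.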
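The Zookeeper address list follows the StatefulSet DNS pattern seen in the verification output, `<pod>.<service>.<project>.svc.cluster.local`, so it can be generated rather than typed by hand. This is a sketch: the function name is illustrative, the port 2181 is taken from the template's default connect string, and the ordinals are assumed to start at 0.

```shell
#!/bin/sh
# Build a Zookeeper connect string for a StatefulSet behind a headless service.
# $1 = StatefulSet/service name, $2 = project, $3 = replica count, $4 = client port
zk_connect_string() {
  name=$1
  ns=$2
  count=$3
  port=$4
  i=0
  out=""
  while [ "$i" -lt "$count" ]; do
    out="${out:+$out,}$name-$i.$name.$ns.svc.cluster.local:$port"
    i=$((i + 1))
  done
  echo "$out"
}

# Single Zookeeper instance, as in this post's minishift setup.
zk_connect_string myzk myproject 1 2181
```

The resulting string is what goes into `SERVER_ZOOKEEPER_CONNECT`, either as the template default or as a parameter override, if your `oc` version accepts commas inside a `-p` value.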

ericdeMacBook-Pro:openshift ericnie$ oc create -f kafka-persistent.yaml

template "kafka-persistent" created

ericdeMacBook-Pro:openshift ericnie$ oc new-app kafka-persistent

--> Deploying template "myproject/kafka-persistent" to project myproject

     Kafka (Persistent)
     ---------
     Create a Kafka cluster, with persistent storage.

     * With parameters:
        * NAME=kafka
        * KAFKA_VERSION=2.12-2.0.
        * SOURCE_IMAGE=172.30.1.1:/myproject/kafka
        * REPLICAS=
        * KAFKA_HEAP_OPTS=-Xmx1960M -Xms1960M
        * SERVER_NUM_PARTITIONS=
        * SERVER_DELETE_TOPIC_ENABLE=true
        * SERVER_LOG_RETENTION_HOURS=
        * SERVER_ZOOKEEPER_CONNECT=myzk-.myzk.myproject.svc.cluster.local:,myzk-.myzk.myproject.svc.cluster.local:,myzk-.myzk.myproject.svc.cluster.local:
        * SERVER_ZOOKEEPER_CONNECT_TIMEOUT=
        * VOLUME_KAFKA_CAPACITY=10Gi
        * RESOURCE_MEMORY_REQ=.2Gi
        * RESOURCE_MEMORY_LIMIT=2Gi
        * RESOURCE_CPU_REQ=0.2
        * RESOURCE_CPU_LIMIT=

        * LP_INITIAL_DELAY=

--> Creating resources ...
    service "kafka" created
    statefulset "kafka" created
--> Success
    Application is not exposed. You can expose services to the outside world by executing one or more of the commands below:
     'oc expose svc/kafka'
    Run 'oc status' to view your app.

Because there was not enough memory, the pods could not run.

4. Deleting the instance
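One way to fit Kafka onto a small single-node cluster is to override the template's memory-related parameters at deploy time. The sketch below uses made-up sizes (512M heap, 1024Mi limit, one replica) and a rule of thumb that the heap should leave about a quarter of the container limit for non-heap memory; both the numbers and the rule are assumptions, not values from the post. The `run` / `DRY_RUN` helper is illustrative.

```shell
#!/bin/sh
# Hypothetical helper: print the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Succeeds when the heap ($1, MiB) is at most 75% of the memory limit ($2, MiB).
heap_fits() {
  [ $(( $1 * 4 )) -le $(( $2 * 3 )) ]
}

deploy_small_kafka() {
  heap=512      # assumed JVM heap in MiB
  limit=1024    # assumed container memory limit in MiB
  if heap_fits "$heap" "$limit"; then
    run oc new-app kafka-persistent -p REPLICAS=1 \
      -p "KAFKA_HEAP_OPTS=-Xmx${heap}M -Xms${heap}M" \
      -p "RESOURCE_MEMORY_REQ=${limit}Mi" \
      -p "RESOURCE_MEMORY_LIMIT=${limit}Mi"
  else
    echo "heap ${heap}M leaves too little headroom under ${limit}Mi" >&2
    return 1
  fi
}

deploy_small_kafka
```

By the same rule, the template defaults (a 1960M heap under a 2Gi limit) leave very little headroom, which is worth keeping in mind when sizing the node.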

ericdeMacBook-Pro:openshift ericnie$ oc delete all,statefulset,pvc -l app=kafka

statefulset "kafka" deleted

service "kafka" deleted

persistentvolumeclaim "kafka-data-kafka-0" deleted

persistentvolumeclaim "kafka-data-kafka-1" deleted

persistentvolumeclaim "kafka-data-kafka-2" deleted
