HBase编程 API入门系列之put(客户端而言)(1)

心得,写在前面的话,也许,中间会要多次执行,连接超时,多试试就好了。

[hadoop@HadoopSlave1 conf]$ cat regionservers

HadoopMaster

HadoopSlave1

HadoopSlave2

<configuration>

<property>

<name>hbase.zookeeper.quorum</name>

<value>HadoopMaster,HadoopSlave1,HadoopSlave2</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://HadoopMaster:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.tmp.dir</name>

<value>/home/hadoop/data/hbase-1.2.3/tmp</value>

</property>

<property>

<name>zookeeper.znode.parent</name>

<value>/hbase</value>

</property>

</configuration>

export JAVA_HOME=/home/hadoop/app/jdk1.7.0_79

export HBASE_MANAGES_ZK=false (外装的Zookeeper集群)

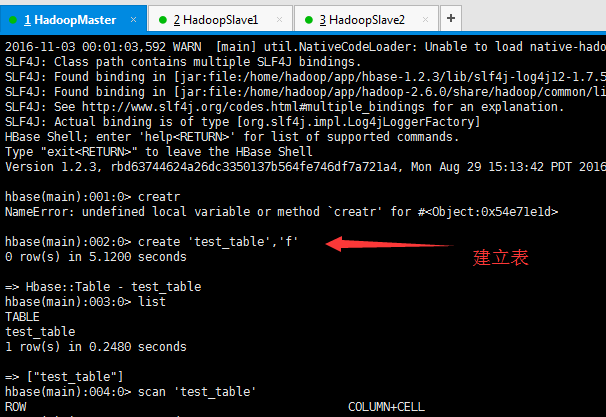

hbase(main):002:0> create 'test_table','f'

语法: create table name , column family

test_table 是HBase的数据表名,f是列簇

为了后续的编程,我这里直接先手动创建最简单的hbase数据表。在代码里可以修改。

package zhouls.bigdata.HbaseProject.Test1; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.HTable;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.util.Bytes; public class HBaseTest {

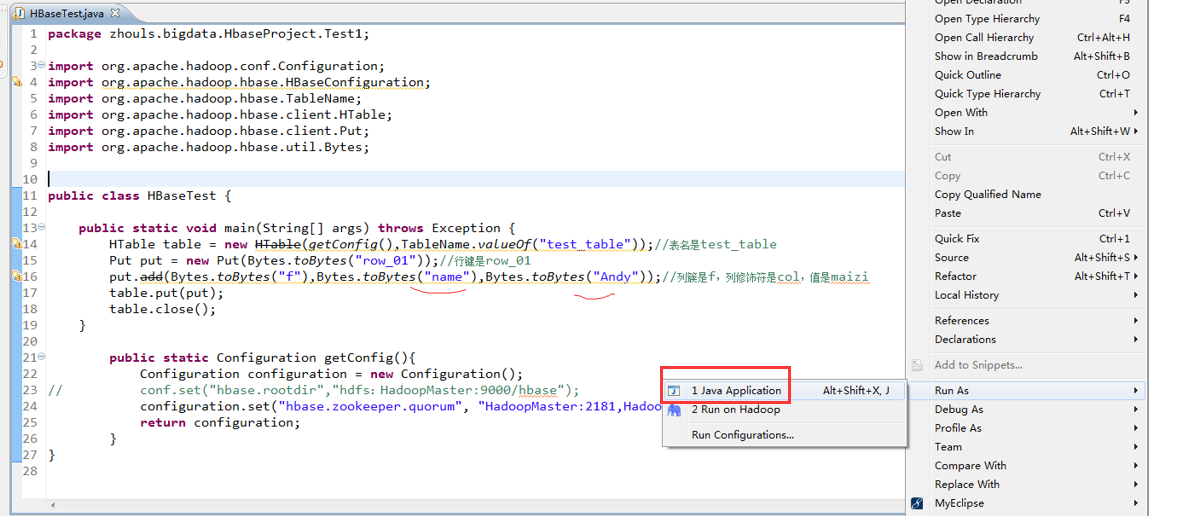

public static void main(String[] args) throws Exception {

HTable table = new HTable(getConfig(),TableName.valueOf("test_table"));//表名是test_table

Put put = new Put(Bytes.toBytes("row_01"));//行键是row_01

put.add(Bytes.toBytes("f"),Bytes.toBytes("col"),Bytes.toBytes("maizi"));//列簇是f,列修饰符是col,值是maizi

table.put(put);

table.close();

} public static Configuration getConfig(){

Configuration configuration = new Configuration();

// conf.set("hbase.rootdir","hdfs:HadoopMaster:9000/hbase");

configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");

return configuration;

}

}

2016-12-10 11:05:45,077 INFO [org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper] - Process identifier=hconnection-0x5fc2fc59 connecting to ZooKeeper ensemble=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181

2016-12-10 11:05:45,115 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT

2016-12-10 11:05:45,115 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:host.name=WIN-BQOBV63OBNM

2016-12-10 11:05:45,115 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.version=1.7.0_51

2016-12-10 11:05:45,115 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.vendor=Oracle Corporation

2016-12-10 11:05:45,116 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.home=C:\Program Files\Java\jdk1.7.0_51\jre

2016-12-10 11:05:45,116 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.class.path=D:\Code\MyEclipseJavaCode\HbaseProject\bin;D:\SoftWare\hbase-1.2.3\lib\activation-1.1.jar;D:\SoftWare\hbase-1.2.3\lib\aopalliance-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\apacheds-i18n-2.0.0-M15.jar;D:\SoftWare\hbase-1.2.3\lib\apacheds-kerberos-codec-2.0.0-M15.jar;D:\SoftWare\hbase-1.2.3\lib\api-asn1-api-1.0.0-M20.jar;D:\SoftWare\hbase-1.2.3\lib\api-util-1.0.0-M20.jar;D:\SoftWare\hbase-1.2.3\lib\asm-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\avro-1.7.4.jar;D:\SoftWare\hbase-1.2.3\lib\commons-beanutils-1.7.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-beanutils-core-1.8.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-cli-1.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-codec-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\commons-collections-3.2.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-compress-1.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-configuration-1.6.jar;D:\SoftWare\hbase-1.2.3\lib\commons-daemon-1.0.13.jar;D:\SoftWare\hbase-1.2.3\lib\commons-digester-1.8.jar;D:\SoftWare\hbase-1.2.3\lib\commons-el-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-httpclient-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-io-2.4.jar;D:\SoftWare\hbase-1.2.3\lib\commons-lang-2.6.jar;D:\SoftWare\hbase-1.2.3\lib\commons-logging-1.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-math-2.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-math3-3.1.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-net-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\disruptor-3.3.0.jar;D:\SoftWare\hbase-1.2.3\lib\findbugs-annotations-1.3.9-1.jar;D:\SoftWare\hbase-1.2.3\lib\guava-12.0.1.jar;D:\SoftWare\hbase-1.2.3\lib\guice-3.0.jar;D:\SoftWare\hbase-1.2.3\lib\guice-servlet-3.0.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-annotations-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-auth-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-client-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-hdfs-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-app-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-core-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-jobclient-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-shuffle-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-api-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-client-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-server-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-annotations-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-annotations-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-client-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-common-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-common-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-examples-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-external-blockcache-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-hadoop2-compat-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-hadoop-compat-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-it-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-it-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-prefix-tree-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-procedure-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-protocol-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-resource-bundle-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-rest-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-server-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-server-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-shell-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-thrift-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\htrace-core-3.1.0-incubating.jar;D:\SoftWare\hbase-1.2.3\lib\httpclient-4.2.5.jar;D:\SoftWare\hbase-1.2.3\lib\httpcore-4.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-core-asl-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-jaxrs-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-mapper-asl-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-xc-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jamon-runtime-2.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\jasper-compiler-5.5.23.jar;D:\SoftWare\hbase-1.2.3\lib\jasper-runtime-5.5.23.jar;D:\SoftWare\hbase-1.2.3\lib\javax.inject-1.jar;D:\SoftWare\hbase-1.2.3\lib\java-xmlbuilder-0.4.jar;D:\SoftWare\hbase-1.2.3\lib\jaxb-api-2.2.2.jar;D:\SoftWare\hbase-1.2.3\lib\jaxb-impl-2.2.3-1.jar;D:\SoftWare\hbase-1.2.3\lib\jcodings-1.0.8.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-client-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-core-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-guice-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-json-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-server-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jets3t-0.9.0.jar;D:\SoftWare\hbase-1.2.3\lib\jettison-1.3.3.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-sslengine-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-util-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\joni-2.1.2.jar;D:\SoftWare\hbase-1.2.3\lib\jruby-complete-1.6.8.jar;D:\SoftWare\hbase-1.2.3\lib\jsch-0.1.42.jar;D:\SoftWare\hbase-1.2.3\lib\jsp-2.1-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\jsp-api-2.1-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\junit-4.12.jar;D:\SoftWare\hbase-1.2.3\lib\leveldbjni-all-1.8.jar;D:\SoftWare\hbase-1.2.3\lib\libthrift-0.9.3.jar;D:\SoftWare\hbase-1.2.3\lib\log4j-1.2.17.jar;D:\SoftWare\hbase-1.2.3\lib\metrics-core-2.2.0.jar;D:\SoftWare\hbase-1.2.3\lib\netty-all-4.0.23.Final.jar;D:\SoftWare\hbase-1.2.3\lib\paranamer-2.3.jar;D:\SoftWare\hbase-1.2.3\lib\protobuf-java-2.5.0.jar;D:\SoftWare\hbase-1.2.3\lib\servlet-api-2.5.jar;D:\SoftWare\hbase-1.2.3\lib\servlet-api-2.5-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\slf4j-api-1.7.7.jar;D:\SoftWare\hbase-1.2.3\lib\slf4j-log4j12-1.7.5.jar;D:\SoftWare\hbase-1.2.3\lib\snappy-java-1.0.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\spymemcached-2.11.6.jar;D:\SoftWare\hbase-1.2.3\lib\xmlenc-0.52.jar;D:\SoftWare\hbase-1.2.3\lib\xz-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\zookeeper-3.4.6.jar

2016-12-10 11:05:45,118 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.library.path=C:\Program Files\Java\jdk1.7.0_51\bin;C:\Windows\Sun\Java\bin;C:\Windows\system32;C:\Windows;C:\ProgramData\Oracle\Java\javapath;C:\Python27\;C:\Python27\Scripts;C:\Windows\system32;C:\Windows;C:\Windows\System32\Wbem;C:\Windows\System32\WindowsPowerShell\v1.0\;D:\SoftWare\MATLAB R2013a\runtime\win64;D:\SoftWare\MATLAB R2013a\bin;C:\Program Files (x86)\IDM Computer Solutions\UltraCompare;C:\Program Files\Java\jdk1.7.0_51\bin;C:\Program Files\Java\jdk1.7.0_51\jre\bin;D:\SoftWare\apache-ant-1.9.0\bin;HADOOP_HOME\bin;D:\SoftWare\apache-maven-3.3.9\bin;D:\SoftWare\Scala\bin;D:\SoftWare\Scala\jre\bin;%MYSQL_HOME\bin;D:\SoftWare\MySQL Server\MySQL Server 5.0\bin;D:\SoftWare\apache-tomcat-7.0.69\bin;%C:\Windows\System32;%C:\Windows\SysWOW64;D:\SoftWare\SSH Secure Shell;.

2016-12-10 11:05:45,119 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.io.tmpdir=C:\Users\ADMINI~1\AppData\Local\Temp\

2016-12-10 11:05:45,120 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.compiler=<NA>

2016-12-10 11:05:45,120 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.name=Windows 7

2016-12-10 11:05:45,121 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.arch=amd64

2016-12-10 11:05:45,121 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.version=6.1

2016-12-10 11:05:45,131 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.name=Administrator

2016-12-10 11:05:45,131 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.home=C:\Users\Administrator

2016-12-10 11:05:45,132 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.dir=D:\Code\MyEclipseJavaCode\HbaseProject

2016-12-10 11:05:45,136 INFO [org.apache.zookeeper.ZooKeeper] - Initiating client connection, connectString=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181 sessionTimeout=180000 watcher=hconnection-0x5fc2fc590x0, quorum=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181, baseZNode=/hbase

2016-12-10 11:05:45,329 INFO [org.apache.zookeeper.ClientCnxn] - Opening socket connection to server HadoopMaster/192.168.80.10:2181. Will not attempt to authenticate using SASL (unknown error)

2016-12-10 11:05:45,365 INFO [org.apache.zookeeper.ClientCnxn] - Socket connection established to HadoopMaster/192.168.80.10:2181, initiating session

2016-12-10 11:05:45,421 INFO [org.apache.zookeeper.ClientCnxn] - Session establishment complete on server HadoopMaster/192.168.80.10:2181, sessionid = 0x1582587a9550008, negotiated timeout = 40000

2016-12-10 11:05:47,266 INFO [org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation] - Closing zookeeper sessionid=0x1582587a9550008

2016-12-10 11:05:47,275 INFO [org.apache.zookeeper.ZooKeeper] - Session: 0x1582587a9550008 closed

2016-12-10 11:05:47,275 INFO [org.apache.zookeeper.ClientCnxn] - EventThread shut down

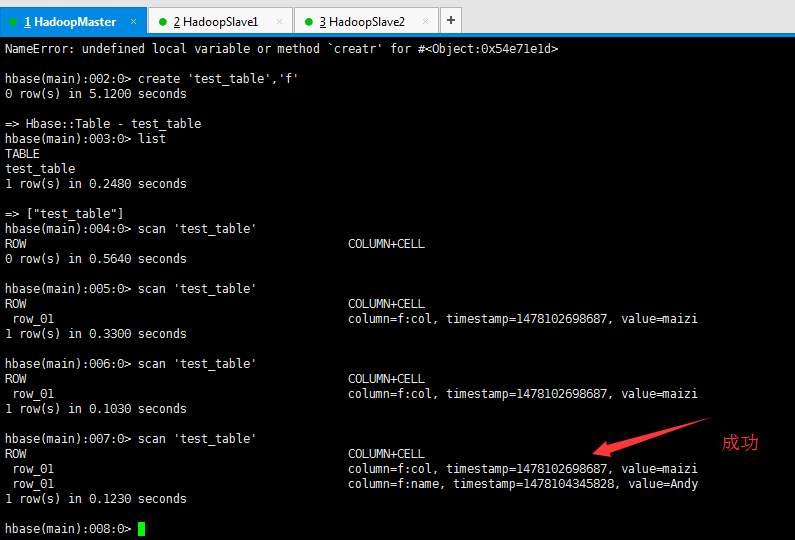

scan 'test_table'

全盘扫描该Hbase数据表test_table

package zhouls.bigdata.HbaseProject.Test1; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.HTable;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.util.Bytes; public class HBaseTest {

public static void main(String[] args) throws Exception {

HTable table = new HTable(getConfig(),TableName.valueOf("test_table"));//表名是test_table

Put put = new Put(Bytes.toBytes("row_01"));//行键是row_01

put.add(Bytes.toBytes("f"),Bytes.toBytes("name"),Bytes.toBytes("Andy"));//列簇是f,列修饰符是col,值是maizi

table.put(put);

table.close();

} public static Configuration getConfig(){

Configuration configuration = new Configuration();

// conf.set("hbase.rootdir","hdfs:HadoopMaster:9000/hbase");

configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");

return configuration;

}

}

scan 'test_table'

全盘扫描该Hbase数据表test_table

package zhouls.bigdata.HbaseProject.Test1; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.HTable;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.util.Bytes; public class HBaseTest {

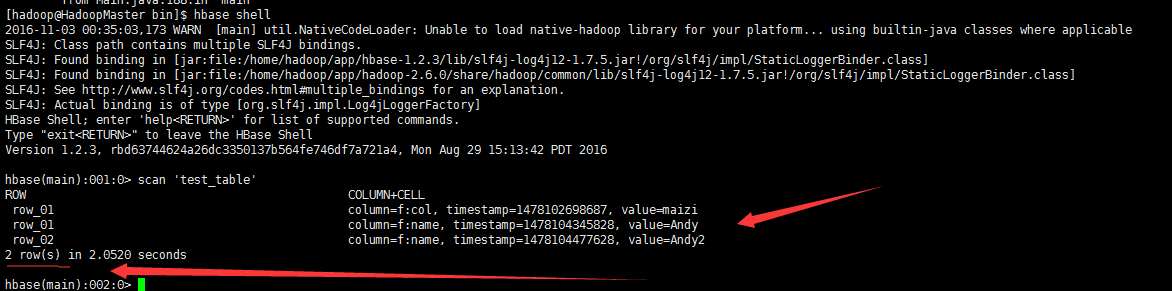

public static void main(String[] args) throws Exception {

HTable table = new HTable(getConfig(),TableName.valueOf("test_table"));//表名是test_table

Put put = new Put(Bytes.toBytes("row_02"));//行键是row_01

put.add(Bytes.toBytes("f"),Bytes.toBytes("name"),Bytes.toBytes("Andy2"));//列簇是f,列修饰符是col,值是maizi

table.put(put);

table.close();

} public static Configuration getConfig(){

Configuration configuration = new Configuration();

// conf.set("hbase.rootdir","hdfs:HadoopMaster:9000/hbase");

configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");

return configuration;

}

}

scan 'test_table'

全盘扫描该Hbase数据表test_table

package zhouls.bigdata.HbaseProject.Test1; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.HTable;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.util.Bytes; public class HBaseTest {

public static void main(String[] args) throws Exception {

HTable table = new HTable(getConfig(),TableName.valueOf("test_table"));//表名是test_table

Put put = new Put(Bytes.toBytes("row_03"));//行键是row_01

put.add(Bytes.toBytes("f"),Bytes.toBytes("name"),Bytes.toBytes("Andy3"));//列簇是f,列修饰符是col,值是maizi

table.put(put);

table.close();

} public static Configuration getConfig(){

Configuration configuration = new Configuration();

// conf.set("hbase.rootdir","hdfs:HadoopMaster:9000/hbase");

configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");

return configuration;

}

}

scan 'test_table'

全盘扫描该Hbase数据表test_table

HBase编程 API入门系列之put(客户端而言)(1)的更多相关文章

- HBase编程 API入门系列之delete(客户端而言)(3)

心得,写在前面的话,也许,中间会要多次执行,连接超时,多试试就好了. 前面的基础,如下 HBase编程 API入门系列之put(客户端而言)(1) HBase编程 API入门系列之get(客户端而言) ...

- HBase编程 API入门系列之get(客户端而言)(2)

心得,写在前面的话,也许,中间会要多次执行,连接超时,多试试就好了. 前面是基础,如下 HBase编程 API入门系列之put(客户端而言)(1) package zhouls.bigdata.Hba ...

- HBase编程 API入门系列之create(管理端而言)(8)

大家,若是看过我前期的这篇博客的话,则 HBase编程 API入门系列之put(客户端而言)(1) 就知道,在这篇博文里,我是在HBase Shell里创建HBase表的. 这里,我带领大家,学习更高 ...

- HBase编程 API入门系列之HTable pool(6)

HTable是一个比较重的对此,比如加载配置文件,连接ZK,查询meta表等等,高并发的时候影响系统的性能,因此引入了“池”的概念. 引入“HBase里的连接池”的目的是: 为了更高的,提高程序的并发 ...

- HBase编程 API入门系列之delete(管理端而言)(9)

大家,若是看过我前期的这篇博客的话,则 HBase编程 API入门之delete(客户端而言) 就知道,在这篇博文里,我是在客户端里删除HBase表的. 这里,我带领大家,学习更高级的,因为,在开发中 ...

- HBase编程 API入门系列之工具Bytes类(7)

这是从程度开发层面来说,为了方便和提高开发人员. 这个工具Bytes类,有很多很多方法,帮助我们HBase编程开发人员,提高开发. 这里,我只赘述,很常用的! package zhouls.bigda ...

- HBase编程 API入门系列之scan(客户端而言)(5)

心得,写在前面的话,也许,中间会要多次执行,连接超时,多试试就好了. package zhouls.bigdata.HbaseProject.Test1; import javax.xml.trans ...

- HBase编程 API入门系列之delete.deleteColumn和delete.deleteColumns区别(客户端而言)(4)

心得,写在前面的话,也许,中间会要多次执行,连接超时,多试试就好了. delete.deleteColumn和delete.deleteColumns区别是: deleteColumn是删除某一个列簇 ...

- HBase编程 API入门系列之modify(管理端而言)(10)

这里,我带领大家,学习更高级的,因为,在开发中,尽量不能去服务器上修改表. 所以,在管理端来修改HBase表.采用线程池的方式(也是生产开发里首推的) package zhouls.bigdata.H ...

随机推荐

- python+unittest+requests实现接口自动化

前言: Requests简介 Requests 是使用 Apache2 Licensed 许可证的 HTTP 库.用 Python 编写,真正的为人类着想. Python 标准库中的 urllib2 ...

- 案例19-页面使用ajax显示类别菜单

1 版本一 版本只能在首页显示类别,当切换到了其它页面就不会显示 1 web层IndexServlet代码 package www.test.web.servlet; import java.io.I ...

- Orcale 之基本术语一

数据字典 数据字典是 Orcale 的重要组成部分.它有一系列的拥有数据库元数据信息的数据字典表和用户可以读取的数据字典视图组成,存放着数据库的有关信息.因此数据字典可以看作一组表和试图的集合.它们存 ...

- 使用SubstanceDesign和Unity插件ShaderForge制作风格化火焰

使用 SubstanceDesign 软件可以制作shader用的特殊图片,原来真有这种软件,一直好奇这种图片怎么做的 https://www.kancloud.cn/hazukiaoi/sd_sf_ ...

- NPOI 设置导出的excel内容样式

导出excel时,有时要根据需要加上一些样式,以上几种样式是我在项目中用到的 一.给单元格加背景色只需两步:一是创建单元格背景景色对象:二是给单元格绑定样式 //创建单元格背景颜色对象 HSSFPal ...

- MySQL重置root密码提示"Unknown column ‘password"的问题?

晚上打开MAC,发现root帐户突然不能正常登陆MySQL,于是打算重置密码,看了几篇文章,竟然重置不成功,总是得到Unknown column 'password'的错误,看了user的表结构也确实 ...

- Entity Framework6 with Visual Studio 2013 update3 for Oracle 11g

2014年7月的时候,写了一篇关于EF5 with visual studio 2010 for oracle 11g的博文 原文地址 :http://www.cnblogs.com/HouZhiHo ...

- 让div铺满整个空间

需要用到几个css属性: .content{ width:100%;position: absolute;top: 50px;bottom: 0px;left: } 设置了bottom.top及abs ...

- .net EF框架 MySql实现实例

1.nuget中添加包EF和MySql.Data.Entity 2.config文件添加如下配置 1.配置entitframework节点(一般安装EF时自动添加) <entityFramewo ...

- 编写简单的maven插件

编写一个简单的输出maven的hello world的插件 1.在eclipse中新建一个maven project项目 然后取名HelloPlugin,建立后,pom文件为(注意packaging为 ...