『TensorFlow』Reading Notes: Inception_V3 (Part 2)

Inception V3 is an extremely large network, although the ResNet covered in the next section is not small either.

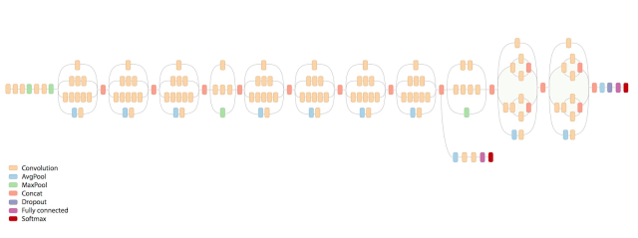

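The network is a linear chain of stages, roughly as follows:

Regular convolution layers -> Inception module group 1 -> Inception module group 2 -> Inception module group 3 -> pooling -> 1*1 convolution (acts as a linear transform) -> classifier

|_> auxiliary classifier (branches off the output of Inception module group 2, `Mixed_6e`)

The code is as follows: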
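To sanity-check the shape comments in the listing, here is a minimal sketch (not part of the original notes) of TensorFlow's output-size rules for `'VALID'` and `'SAME'` padding, applied to the regular convolution stage of the chain above. The helper names `out_size` and `trace` and the `STEM` table are made up for illustration; the layer names, kernel sizes and strides are copied from the code in this post.

```python
import math


def out_size(size, kernel, stride=1, padding="VALID"):
    """Output length along one spatial axis under TensorFlow's padding rules."""
    if padding == "SAME":
        return math.ceil(size / stride)
    # VALID: only positions where the kernel fits entirely inside the input
    return (size - kernel) // stride + 1


# (scope name, kernel, stride, padding) for the stem of the network
STEM = [
    ("Conv2d_1a_3x3", 3, 2, "VALID"),
    ("Conv2d_2a_3x3", 3, 1, "VALID"),
    ("Conv2d_2b_3x3", 3, 1, "SAME"),
    ("MaxPool_3a_3x3", 3, 2, "VALID"),
    ("Conv2d_3b_1x1", 1, 1, "VALID"),
    ("Conv2d_4a_3x3", 3, 1, "VALID"),
    ("MaxPool_5a_3x3", 3, 2, "VALID"),
]


def trace(size, layers):
    """Return the spatial size after each layer, starting from `size`."""
    sizes = []
    for _name, kernel, stride, padding in layers:
        size = out_size(size, kernel, stride, padding)
        sizes.append(size)
    return sizes


stem_sizes = trace(299, STEM)
print(stem_sizes)  # [149, 147, 147, 73, 73, 71, 35]

# The two stride-2 reduction modules (Mixed_6a, Mixed_7a) shrink the grid further
grid_after_6a = out_size(35, 3, 2, "VALID")
grid_after_7a = out_size(grid_after_6a, 3, 2, "VALID")
print(grid_after_6a, grid_after_7a)  # 17 8
```

The 35, 17 and 8 grids are exactly the sizes at which the three Inception module groups operate.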

# Author : Hellcat
# Time   : 2017/12/12
# refer  : https://github.com/tensorflow/models/
#          blob/master/research/inception/inception/slim/inception_model.py

import time
import math
import tensorflow as tf
from datetime import datetime

slim = tf.contrib.slim

# Factory for truncated-normal initializers
trunc_normal = lambda stddev:tf.truncated_normal_initializer(0.0,stddev)


def inception_v3_arg_scope(weight_decay=0.00004,
                           stddv=0.1,
                           batch_norm_var_collection='moving_vars'):
    '''
    Build the default arguments shared by the network's layer functions
    :param weight_decay: L2 regularization decay
    :param stddv: standard deviation of the weight initializer
    :param batch_norm_var_collection: collection that holds the batch-norm moving statistics
    :return: the arg_scope to build the model under
    '''
    batch_norm_params = {
        'decay':0.9997, # decay rate of the moving averages
        'epsilon':0.001,
        'variables_collections':{
            'beta':None,
            'gamma':None,
            'moving_mean':[batch_norm_var_collection], # moving mean of the batches
            'moving_variance':[batch_norm_var_collection] # moving variance of the batches
        }
    }
    # Outer scope
    with slim.arg_scope([slim.conv2d,slim.fully_connected],
                        # weight regularizer
                        weights_regularizer=slim.l2_regularizer(weight_decay)):
        # Inner scope
        with slim.arg_scope([slim.conv2d],
                            # weight initializer
                            weights_initializer=tf.truncated_normal_initializer(stddev=stddv),
                            # activation function, defaults to nn.relu
                            activation_fn=tf.nn.relu,
                            # normalizer function, defaults to None
                            normalizer_fn=slim.batch_norm,
                            # normalizer parameters, passed as a dict
                            normalizer_params=batch_norm_params) as sc:
            return sc


def inception_v3_base(inputs,scope=None):
    # Stores the key nodes
    end_points = {}
    # Arguments: scope name to reuse, default name (used when scope is None), input tensors
    with tf.variable_scope(scope,'Inception_v3',[inputs]):
        with slim.arg_scope([slim.conv2d,slim.max_pool2d,slim.avg_pool2d],
                            stride=1,padding='VALID'):
            # 299*299*3
            net = slim.conv2d(inputs,32,[3,3],stride=2,scope='Conv2d_1a_3x3') # 149*149*32
            net = slim.conv2d(net,32,[3,3],scope='Conv2d_2a_3x3') # 147*147*32
            net = slim.conv2d(net,64,[3,3],padding='SAME',scope='Conv2d_2b_3x3') # 147*147*64
            net = slim.max_pool2d(net,[3,3],stride=2,scope='MaxPool_3a_3x3') # 73*73*64
            net = slim.conv2d(net,80,[1,1],scope='Conv2d_3b_1x1') # 73*73*80
            net = slim.conv2d(net,192,[3,3],scope='Conv2d_4a_3x3') # 71*71*192
            net = slim.max_pool2d(net,[3,3],stride=2,scope='MaxPool_5a_3x3') # 35*35*192

        with slim.arg_scope([slim.conv2d,slim.max_pool2d,slim.avg_pool2d],
                            stride=1,padding='SAME'):

            '''Inception module group 1'''
            # Inception_Module_1
            with tf.variable_scope('Mixed_5b'): # 35*35*256
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net,64,[1,1],scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net,48,[1,1],scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1,64,[5,5],scope='Conv2d_0b_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net,64,[1,1],scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2,96,[3,3],scope='Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2,96,[3,3],scope='Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net,[3,3],scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3,32,[1,1],scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0,branch_1,branch_2,branch_3],axis=3)

            # Inception_Module_2
            with tf.variable_scope('Mixed_5c'): # 35*35*288
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net,64,[1,1],scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net,48,[1,1],scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1,64,[5,5],scope='Conv2d_0b_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net,64,[1,1],scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2,96,[3,3],scope='Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2,96,[3,3],scope='Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net,[3,3],scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3,64,[1,1],scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0,branch_1,branch_2,branch_3],axis=3)

            # Inception_Module_3
            with tf.variable_scope('Mixed_5d'): # 35*35*288
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net,64,[1,1],scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net,48,[1,1],scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1,64,[5,5],scope='Conv2d_0b_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net,64,[1,1],scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2,96,[3,3],scope='Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2,96,[3,3],scope='Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net,[3,3],scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3,64,[1,1],scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0,branch_1,branch_2,branch_3],axis=3)

            '''Inception module group 2'''
            # Inception_Module_1

            with tf.variable_scope('Mixed_6a'): # 17*17*768
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net,384,[3,3],stride=2,
                                           padding='VALID',scope='Conv2d_1a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net,64,[1,1],scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1,96,[3,3],scope='Conv2d_0b_3x3')
                    branch_1 = slim.conv2d(branch_1,96,[3,3],stride=2,
                                           padding='VALID',scope='Conv2d_1a_3x3')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.max_pool2d(net,[3,3],stride=2,padding='VALID',
                                               scope='Max_Pool_1a_3x3')
                net = tf.concat([branch_0,branch_1,branch_2],axis=3)

            # Inception_Module_2
            with tf.variable_scope('Mixed_6b'): # 17*17*768
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net,192,[1,1],scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net,128,[1,1],scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1,128,[1,7],scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1,192,[7,1],scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net,128,[1,1],scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2,128,[7,1],scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2,128,[1,7],scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2,128,[7,1],scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2,192,[1,7],scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net,[3,3],scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3,192,[1,1],scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0,branch_1,branch_2,branch_3],axis=3)

            # Inception_Module_3
            with tf.variable_scope('Mixed_6c'): # 17*17*768
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net,192,[1,1],scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net,160,[1,1],scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1,160,[1,7],scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1,192,[7,1],scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net,160,[1,1],scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2,160,[7,1],scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2,160,[1,7],scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2,160,[7,1],scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2,192,[1,7],scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net,[3,3],scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3,192,[1,1],scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0,branch_1,branch_2,branch_3],axis=3)

            # Inception_Module_4
            with tf.variable_scope('Mixed_6d'): # 17*17*768
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net,192,[1,1],scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net,160,[1,1],scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1,160,[1,7],scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1,192,[7,1],scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net,160,[1,1],scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2,160,[7,1],scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2,160,[1,7],scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2,160,[7,1],scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2,192,[1,7],scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net,[3,3],scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3,192,[1,1],scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0,branch_1,branch_2,branch_3],axis=3)

            # Inception_Module_5
            with tf.variable_scope('Mixed_6e'): # 17*17*768
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net,192,[1,1],scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net,192,[1,1],scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1,192,[1,7],scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1,192,[7,1],scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net,192,[1,1],scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2,192,[7,1],scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2,192,[1,7],scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2,192,[7,1],scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2,192,[1,7],scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net,[3,3],scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3,192,[1,1],scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0,branch_1,branch_2,branch_3],axis=3)
            # Keep this node: the auxiliary classifier branches off here
            end_points['Mixed_6e'] = net

            '''Inception module group 3'''
            # Inception_Module_1

            with tf.variable_scope('Mixed_7a'): # 8*8*1280
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net,192,[1,1],scope='Conv2d_0a_1x1')
                    branch_0 = slim.conv2d(branch_0,320,[3,3],stride=2,
                                           padding='VALID',scope='Conv2d_1a_3x3')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net,192,[1,1],scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1,192,[1,7],scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1,192,[7,1],scope='Conv2d_0c_7x1')
                    branch_1 = slim.conv2d(branch_1,192,[3,3],stride=2,padding='VALID',scope='Conv2d_1a_3x3')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.max_pool2d(net,[3,3],stride=2,padding='VALID',
                                               scope='MaxPool_1a_3x3')
                net = tf.concat([branch_0,branch_1,branch_2],3)

            # Inception_Module_2
            with tf.variable_scope('Mixed_7b'): # 8*8*2048
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net,320,[1,1],scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net,384,[1,1],scope='Conv2d_0a_1x1')
                    branch_1 = tf.concat([
                        slim.conv2d(branch_1,384,[1,3],scope='Conv2d_0b_1x3'),
                        slim.conv2d(branch_1,384,[3,1],scope='Conv2d_0b_3x1')],axis=3)
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net,448,[1,1],scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2,384,[3,3],scope='Conv2d_0b_3x3')
                    branch_2 = tf.concat([
                        slim.conv2d(branch_2,384,[1,3],scope='Conv2d_0c_1x3'),
                        slim.conv2d(branch_2,384,[3,1],scope='Conv2d_0d_3x1')],axis=3)
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net,[3,3],scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3,192,[1,1],scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0,branch_1,branch_2,branch_3],3)

            # Inception_Module_3
            with tf.variable_scope('Mixed_7c'): # 8*8*2048
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net,320,[1,1],scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net,384,[1,1],scope='Conv2d_0a_1x1')
                    branch_1 = tf.concat([
                        slim.conv2d(branch_1,384,[1,3],scope='Conv2d_0b_1x3'),
                        slim.conv2d(branch_1,384,[3,1],scope='Conv2d_0b_3x1')],axis=3)
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net,448,[1,1],scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2,384,[3,3],scope='Conv2d_0b_3x3')
                    branch_2 = tf.concat([
                        slim.conv2d(branch_2,384,[1,3],scope='Conv2d_0c_1x3'),
                        slim.conv2d(branch_2,384,[3,1],scope='Conv2d_0d_3x1')],axis=3)
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net,[3,3],scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3,192,[1,1],scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0,branch_1,branch_2,branch_3],3)

        return net,end_points


def inception_v3(inputs,
                 num_classes=1000,
                 is_training=True,
                 dropout_keep_prob=0.8,
                 prediction_fn=slim.softmax,
                 spatial_squeeze=True,
                 reuse=None,
                 scope='Inception_v3'):

    with tf.variable_scope(scope,'Inception_v3',[inputs,num_classes],reuse=reuse) as scope:
        with slim.arg_scope([slim.batch_norm,slim.dropout],
                            is_training=is_training):
            net,end_points = inception_v3_base(inputs,scope=scope)
            with slim.arg_scope([slim.conv2d,slim.max_pool2d,slim.avg_pool2d],
                                stride=1,padding='SAME'):
                # 17*17*768
                aux_logits = end_points['Mixed_6e']
                with tf.variable_scope('AuxLogits'):
                    aux_logits = slim.avg_pool2d(aux_logits,[5,5],stride=3,padding='VALID',scope='AvgPool_1a_5x5')
                    aux_logits = slim.conv2d(aux_logits,128,[1,1],scope='Conv2d_1b_1x1')
                    aux_logits = slim.conv2d(aux_logits,768,[5,5],
                                             weights_initializer=trunc_normal(0.01),
                                             padding='VALID',
                                             scope='Conv2d_2a_5x5')
                    aux_logits = slim.conv2d(aux_logits,num_classes,[1,1],activation_fn=None,
                                             normalizer_fn=None,weights_initializer=trunc_normal(0.001),
                                             scope='Conv2d_2b_1x1')
                    if spatial_squeeze:
                        aux_logits = tf.squeeze(aux_logits,[1,2],
                                                name='SpatialSqueeze')
                    end_points['AuxLogits'] = aux_logits
            with tf.variable_scope('Logits'):
                net = slim.avg_pool2d(net,[8,8],padding='VALID',
                                      scope='AvgPool_1a_8x8')
                net = slim.dropout(net,keep_prob=dropout_keep_prob,scope='Dropout_1b')
                end_points['PreLogits'] = net
                logits = slim.conv2d(net,num_classes,[1,1],activation_fn=None,
                                     normalizer_fn=None,scope='Conv2d_1c_1x1')
                if spatial_squeeze:
                    logits = tf.squeeze(logits,[1,2],name='SpatialSqueeze')
                end_points['Logits'] = logits
                end_points['Predictions'] = prediction_fn(logits,scope='Predictions')
    return logits, end_points


def time_tensorflow_run(session, target, info_string):
    '''
    Benchmark helper that times repeated runs of the network
    :param session: the session object
    :param target: the node to run
    :param info_string: label printed with the results
    :return: None
    '''

    num_steps_burn_in = 10 # warm-up steps, excluded from the statistics
    total_duration = 0.0 # total time
    total_duration_squared = 0.0 # sum of squared times
    # num_batches is read from module scope (set in the __main__ block below)
    for i in range(num_steps_burn_in + num_batches):
        start_time = time.time()
        _ = session.run(target)
        duration = time.time() - start_time # time for this step
        if i >= num_steps_burn_in:
            if not i % 10:
                print('%s: step %d, duration = %.3f' %
                      (datetime.now(),i-num_steps_burn_in,duration))
            total_duration += duration
            total_duration_squared += duration**2
    mn = total_duration/num_batches # mean time per batch
    vr = total_duration_squared/num_batches - mn**2
    sd = math.sqrt(max(vr,0.0)) # clamp: rounding can push vr slightly below zero
    print('%s:%s across %d steps, %.3f +/- %.3f sec / batch' %
          (datetime.now(), info_string, num_batches, mn, sd))


if __name__ == '__main__':
    batch_size=32
    height,width = 299,299
    inputs = tf.random_uniform((batch_size,height,width,3))
    with slim.arg_scope(inception_v3_arg_scope()):
        logits,end_points = inception_v3(inputs,is_training=False)
    init = tf.global_variables_initializer()
    sess = tf.Session()
    sess.run(init)
    num_batches = 100
    time_tensorflow_run(sess,logits,'Forward')
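The timing helper above gets its mean and standard deviation from two running sums (the total and the total of squares) rather than from a stored list of durations. A minimal, TensorFlow-free sketch of that bookkeeping follows; `summarize_durations` and the sample numbers are hypothetical and only illustrate the arithmetic.

```python
import math


def summarize_durations(durations, burn_in=0):
    """Mean and population std of `durations`, skipping the first `burn_in` entries.

    Uses the identity Var[x] = E[x^2] - (E[x])^2, so only two running sums
    are needed, as in time_tensorflow_run.
    """
    kept = durations[burn_in:]
    n = len(kept)
    if n == 0:
        raise ValueError("no measurements left after burn-in")
    total = 0.0
    total_squared = 0.0
    for d in kept:
        total += d
        total_squared += d * d
    mean = total / n
    # Floating-point rounding can make the difference slightly negative
    variance = max(total_squared / n - mean * mean, 0.0)
    return mean, math.sqrt(variance)


# The first (slow) measurement plays the role of a warm-up step
mean, std = summarize_durations([9.0, 1.0, 2.0, 3.0], burn_in=1)
print("%.3f +/- %.3f" % (mean, std))  # 2.000 +/- 0.816
```

Dropping the warm-up steps matters because the first few `session.run` calls typically include one-off setup cost that would otherwise inflate both numbers.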

The benchmark takes too long to run, so its output is not included here.
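Much of that cost comes from the sheer number of convolutions, which is why the 17*17 modules in the listing use pairs of `[1,7]` and `[7,1]` kernels instead of full 7*7 ones. As a rough illustration (a sketch, not a measurement), the snippet below counts the weights of Branch_1 in `Mixed_6b` (128 -> 128 -> 192 channels) against a hypothetical single 7*7 layer with the same input and output channels. The helper names are made up, and biases and batch-norm parameters are ignored.

```python
def conv_weights(k_h, k_w, c_in, c_out):
    """Number of weights in a k_h x k_w convolution (biases ignored)."""
    return k_h * k_w * c_in * c_out


def factorized_weights(k, c_in, c_mid, c_out):
    """Weights of a 1 x k convolution followed by a k x 1 convolution."""
    return conv_weights(1, k, c_in, c_mid) + conv_weights(k, 1, c_mid, c_out)


# Hypothetical unfactorized layer: one 7x7 convolution, 128 -> 192 channels
full = conv_weights(7, 7, 128, 192)
# Channel sizes taken from Mixed_6b / Branch_1: 1x7 (128 -> 128), then 7x1 (128 -> 192)
split = factorized_weights(7, 128, 128, 192)

print(full, split)  # 1204224 286720
print(round(full / split, 1))  # 4.2
```

With these channel sizes the factorized pair needs roughly a quarter of the weights while covering the same 7*7 receptive field.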
