Hadoop 学习笔记3 Develping MapReduce

小笔记:

Mavon是一种项目管理工具,通过xml配置来设置项目信息。

Mavon POM(project of model).

Steps:

1. set up and configure the development environment.

2. writing your map and reduce functions and run them in local (standalone) mode from the command line or within your IDE.

3. unit test --> test on small dataset --> test on the full dataset after unleash in a cluster

--> tuning

1. Configuration API

- Components in Hadoop are configured using Hadoop’s own configuration API.

- org.apache.hadoop.conf package

- Configurations read their properties from resources — XML files with a simple structure for defining name-value pairs.

For example, write a configuration-1.xml like:

<?xml version="1.0"?>

<configuration>

<property>

<name>color</name>

<value>yellow</value>

<description>Color</description>

</property>

<property>

<name>size</name>

<value>10</value>

<description>Size</description>

</property>

<property>

<name>weight</name>

<value>heavy</value>

<final>true</final>

<description>Weight</description>

</property>

<property>

<name>size-weight</name>

<value>${size},${weight}</value>

<description>Size and weight</description>

</property>

</configuration>

then access it by coding below:

Configuration conf = new Configuration();

conf.addResource("configuration-1.xml");

conf.addResource("configuration-2.xml"); // more than one resource are added orderly, and the latter will overwrite the former. assertThat(conf.get("color"), is("yellow"));

assertThat(conf.getInt("size", 0), is(10));

assertThat(conf.get("breadth", "wide"), is("wide"));

Note:

- type information is not stored in the XML file;

- instead, properties can be interpreted as a given type when they are read.

- Also, the get() methods allow you to specify a default value, which is used if the property is not defined in the XML file, as in the case of breadth here.

- more than one resource are added orderly, and the latter properties will overwrite the former.

- However, properties that are marked as final cannot be overridden in later definitions.

- system properties take priority:

System.setProperty("size", "14")

Options specified with -D take priority over properties from the configuration files.

This will override the number of reducers set on the cluster or set in any client-side configuration files.

% hadoop ConfigurationPrinter -D color=yellow | grep color

2. Set up dev enviroment

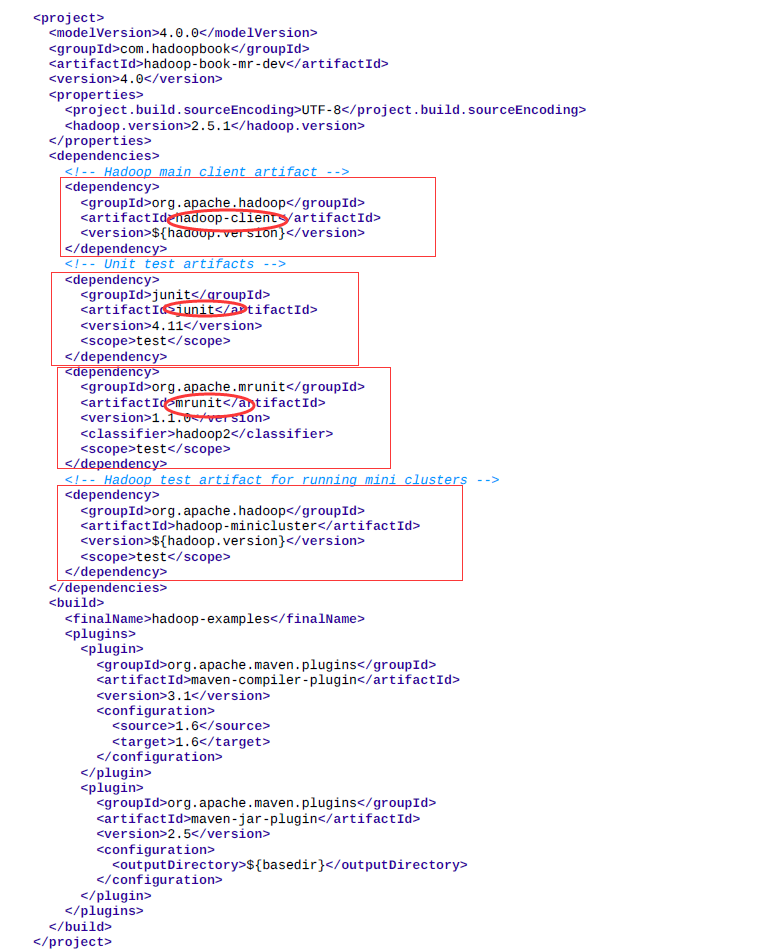

The Maven POMs (Project Object Model) are used to show the dependencies needed for building and testing MapReduce programs. Actually a xml file.

- hadoop-client dependency, which contains all the Hadoop client-side classes needed to interact with HDFS and MapReduce.

- For running unit tests, we use junit,

- for writing MapReduce tests, we use mrunit.

- The hadoop-minicluster library contains the “mini-” clusters that are useful for testing with Hadoop clusters running in a single JVM.

Many IDEs can read Maven POMs directly, so you can just point them at the directory containing the pom.xml file and start writing code.

Alternatively, you can use Maven to generate configuration files for your IDE. For example, the following creates Eclipse configuration files so you can import the project into Eclipse:

% mvn eclipse:eclipse -DdownloadSources=true -DdownloadJavadocs=true

3. Managing switching

It is common to switch between running the application locally and running it on a cluster.

- have Hadoop configuration files containing the connection settings for each cluster

- we assume the existence of a directory called conf that contains three configuration files: hadoop-local.xml, hadoop-localhost.xml, and hadoopcluster.xml

For example, the following command shows a directory listing on the HDFS serverrunning in pseudodistributed mode on localhost:

- conf

% hadoop fs -conf conf/hadoop-localhost.xml -ls Found 2 items

drwxr-xr-x - tom supergroup 0 2014-09-08 10:19 input

drwxr-xr-x - tom supergroup 0 2014-09-08 10:19 output

4. Starts MapReduce example:

Mapper: to get year and temperature from an input string

public class MaxTemperatureMapper

extends Mapper<LongWritable, Text, Text, IntWritable> { @Override

public void map(LongWritable key, Text value, Context context)

throws IOException, InterruptedException {

String line = value.toString();

String year = line.substring(15, 19);

int airTemperature = Integer.parseInt(line.substring(87, 92)); context.write(new Text(year), new IntWritable(airTemperature));

}

}

Unit test for the Mapper:

import java.io.IOException;

import org.apache.hadoop.io.*;

import org.apache.hadoop.mrunit.mapreduce.MapDriver;

import org.junit.*; public class MaxTemperatureMapperTest {

@Test

public void processesValidRecord() throws IOException, InterruptedException {

Text value = new Text("0043011990999991950051518004+68750+023550FM-12+0382" +

// Year ^^^^

"99999V0203201N00261220001CN9999999N9-00111+99999999999");

// Temperature ^^^^^ new MapDriver<LongWritable, Text, Text, IntWritable>()

.withMapper(new MaxTemperatureMapper())

.withInput(new LongWritable(0), value)

.withOutput(new Text("1950"), new IntWritable(-11))

.runTest();

}

}

Reducer: to get the maxmium

public class MaxTemperatureReducer

extends Reducer<Text, IntWritable, Text, IntWritable> { @Override

public void reduce(Text key, Iterable<IntWritable> values, Context context)

throws IOException, InterruptedException { int maxValue = Integer.MIN_VALUE; for (IntWritable value : values) {

maxValue = Math.max(maxValue, value.get());

} context.write(key, new IntWritable(maxValue));

}

}

Unit test for the Reducer:

@Test

public void returnsMaximumIntegerInValues() throws IOException, InterruptedException { new ReduceDriver<Text, IntWritable, Text, IntWritable>()

.withReducer(new MaxTemperatureReducer())

.withInput(new Text("1950"),

Arrays.asList(new IntWritable(10), new IntWritable(5)))

.withOutput(new Text("1950"), new IntWritable(10))

.runTest();

}

5 . a write job driver

Using the Tool interface , it’s easy to write a driver to run a MapReduce job.

Then run the driver locally.

% mvn compile

% export HADOOP_CLASSPATH=target/classes/

% hadoop v2.MaxTemperatureDriver -conf conf/hadoop-local.xml \

input/ncdc/micro output

或

% hadoop v2.MaxTemperatureDriver -fs file:/// -jt local input/ncdc/micro output

The local job runner uses a single JVM to run a job, so as long as all the classes that your job needs are on its classpath, then things will just work.

6. Running on a cluster

a job’s classes must be packaged into a job JAR file to send to the cluster

Hadoop 学习笔记3 Develping MapReduce的更多相关文章

- Hadoop学习笔记—4.初识MapReduce

一.神马是高大上的MapReduce MapReduce是Google的一项重要技术,它首先是一个编程模型,用以进行大数据量的计算.对于大数据量的计算,通常采用的处理手法就是并行计算.但对许多开发者来 ...

- Hadoop学习笔记(2) 关于MapReduce

1. 查找历年最高的温度. MapReduce任务过程被分为两个处理阶段:map阶段和reduce阶段.每个阶段都以键/值对作为输入和输出,并由程序员选择它们的类型.程序员还需具体定义两个函数:map ...

- Hadoop学习笔记—22.Hadoop2.x环境搭建与配置

自从2015年花了2个多月时间把Hadoop1.x的学习教程学习了一遍,对Hadoop这个神奇的小象有了一个初步的了解,还对每次学习的内容进行了总结,也形成了我的一个博文系列<Hadoop学习笔 ...

- Hadoop学习笔记(7) ——高级编程

Hadoop学习笔记(7) ——高级编程 从前面的学习中,我们了解到了MapReduce整个过程需要经过以下几个步骤: 1.输入(input):将输入数据分成一个个split,并将split进一步拆成 ...

- Hadoop学习笔记(6) ——重新认识Hadoop

Hadoop学习笔记(6) ——重新认识Hadoop 之前,我们把hadoop从下载包部署到编写了helloworld,看到了结果.现是得开始稍微更深入地了解hadoop了. Hadoop包含了两大功 ...

- Hadoop学习笔记(2)

Hadoop学习笔记(2) ——解读Hello World 上一章中,我们把hadoop下载.安装.运行起来,最后还执行了一个Hello world程序,看到了结果.现在我们就来解读一下这个Hello ...

- Hadoop学习笔记(5) ——编写HelloWorld(2)

Hadoop学习笔记(5) ——编写HelloWorld(2) 前面我们写了一个Hadoop程序,并让它跑起来了.但想想不对啊,Hadoop不是有两块功能么,DFS和MapReduce.没错,上一节我 ...

- Hadoop学习笔记(2) ——解读Hello World

Hadoop学习笔记(2) ——解读Hello World 上一章中,我们把hadoop下载.安装.运行起来,最后还执行了一个Hello world程序,看到了结果.现在我们就来解读一下这个Hello ...

- Hadoop学习笔记(1) ——菜鸟入门

Hadoop学习笔记(1) ——菜鸟入门 Hadoop是什么?先问一下百度吧: [百度百科]一个分布式系统基础架构,由Apache基金会所开发.用户可以在不了解分布式底层细节的情况下,开发分布式程序. ...

随机推荐

- AIO 简介

from:http://blog.chinaunix.net/uid-11572501-id-2868654.html Linux的I/O机制经历了一下几个阶段的演进: 1. 同步阻塞I/O: 用 ...

- python中的字符串操作

#!/usr/bin/python # -*- coding: UTF-8 -*- ''' str.capitalize() ''' str = 'this is a string example' ...

- 自定义JS常用方法

1,获取表格中的元素,支持IE,chrome,firefox //获取表单元素的某一个值 function getTableColumnValue(tableId, rowNumber, column ...

- SQL 2014新特性- Delayed durability

ACID 是数据库的基本属性.其中的D是指"持久性":只要事务已经提交,对应的数据修改就会被保存下来,即使出现断电等情况,当系统重启后之前已经提交的数据依然能够反映到数据库中. 那 ...

- 清北学堂2017NOIP冬令营入学测试P4749 F’s problem(f)

时间: 1000ms / 空间: 655360KiB / Java类名: Main 背景 冬令营入学测试 描述 这个故事是关于小F的,它有一个怎么样的故事呢. 小F是一个田径爱好者,这天它们城市里正在 ...

- Ros学习注意点

编译问题 回调函数不能有返回类型,严格按照实例程序编写 第三方库的问题,packet.xml里面必须加上自己的依赖文件 之前文档里面介绍的有点问题. 主要表现在:当你建立包的时候就写入了依赖,那就不需 ...

- beaglebone_black_学习笔记——(9)UART使用

笔者通过查阅相关资料,了解了BeagleBoneBlack开发板的UART接口特性,掌握的UART接口的基本使用方法,最后通过一个C语言的例程实现串口的自发自收.有了这个串口开发板就可和其他设备进行串 ...

- WPF 绑定枚举值

前台Xaml <ComboBox x:Name=" HorizontalAlignment="Left" Margin="5 0 0 0" Se ...

- Web端PHP代码函数覆盖率测试解决方案

1. 关于代码覆盖率 衡量代码覆盖率有很多种层次,比如行覆盖率,函数/方法覆盖率,类覆盖率,分支覆盖率等等.代码覆盖率也是衡量测试质量的一个重要标准,对于黑盒测试来说,如果你不确定自己的测试用例是否真 ...

- N-gram模型

n元语法 n-gram grammar 建立在马尔可夫模型上的一种概率语法.它通过对自然语言的符号串中n个符号同时出现概率的统计数据来推断句子的结构关系.当n=2时,称为二元语法,当 ...