MongoDB Sharding

Overview

1: On each of 3 separate servers, run instances on ports 27017, 27018 and 27019 that together form a replica set, giving 3 replica sets in total.

2: On each of the 3 servers, run a config server on port 27020.

3: Start mongos:

./bin/mongos --port 30000 --configdb 192.168.1.201:27020,192.168.1.202:27020,192.168.1.203:27020

4: Connect to the router:

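./bin/mongo --port 30000

5: Add the replica sets as shards (for a replica set, sh.addShard also accepts the setname/host:port form listed in the sh.help() output further down):

>sh.addShard('192.168.1.201:27017');

>sh.addShard('192.168.1.202:27017');

>sh.addShard('192.168.1.203:27017');

6: Enable sharding on the database to be sharded: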

>sh.enableSharding(databaseName);

7: Shard the target collection:

>sh.shardCollection('dbName.collectionName',{field:1});

field is a field of the collection; MongoDB uses its value to work out which shard each document belongs on.

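This field is called the shard key. MongoDB does not spread data perfectly evenly across the shards one document at a time. Instead, N documents form a block called a chunk, and chunks are placed preferentially on one shard.

When the number of chunks on one shard differs noticeably from another shard (the author uses >= 3 as the rule of thumb; the real migration threshold depends on the MongoDB version and the total chunk count), chunks are moved from this shard to the other one. Balance between shards is maintained in units of whole chunks.

Q: Why are there only 2 chunks after inserting 100,000 documents?

A: Because chunks are fairly large (64 MB by default). The chunksize value can be changed in the config database.

Q: Since data goes preferentially to one shard and chunks are moved only once the shards become unbalanced, chunks will naturally migrate back and forth between the shard instances as data grows. What problem does this cause?

A: More I/O between the servers.

Follow-up Q: Can I define a rule myself, so that every N documents form one chunk, M chunks are pre-allocated, and the M chunks are assigned to different shards in advance? Later data would then go straight into its pre-allocated chunk and nothing would move back and forth.

A: Yes, by manually pre-splitting! Take the shop.user collection as an example:

1: sh.shardCollection('shop.user',{userid:1}); // use userid as the shard key of the user collection

2: for(var i=1;i<=40;i++) { sh.splitAt('shop.user',{userid:i*1000}) } // pre-split at the 1K, 2K ... 40K boundaries (the chunks are still empty); these chunks are then moved evenly across the shards

3: Insert user data through mongos. The data goes into the pre-allocated chunks, so chunks no longer migrate back and forth.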
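The split loop in step 2 can be pictured with the plain JavaScript sketch below (run it with Node.js, not the mongo shell). It only reproduces the range arithmetic: 40 split points at multiples of 1000 carve the userid key space into 41 half-open chunks. `presplitRanges` is a hypothetical helper, not a MongoDB API, and -Infinity/Infinity stand in for $minKey/$maxKey.

```javascript
// Range arithmetic behind: for (i = 1..40) sh.splitAt('shop.user', {userid: i*1000})
// presplitRanges is a hypothetical helper; -Infinity/Infinity stand in for $minKey/$maxKey.
function presplitRanges(step, count) {
  const bounds = [-Infinity];
  for (let i = 1; i <= count; i++) bounds.push(i * step); // the split points
  bounds.push(Infinity);
  const ranges = [];
  for (let i = 0; i + 1 < bounds.length; i++) {
    // Each chunk covers [min, max): it includes min and excludes max.
    ranges.push({ min: bounds[i], max: bounds[i + 1] });
  }
  return ranges;
}

const ranges = presplitRanges(1000, 40);
console.log(ranges.length); // 41
console.log(ranges[0]);     // { min: -Infinity, max: 1000 }
console.log(ranges[40]);    // { min: 40000, max: Infinity }
```

The 41 ranges match the 20 + 21 chunks that sh.status() reports for shop.user later in this article.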

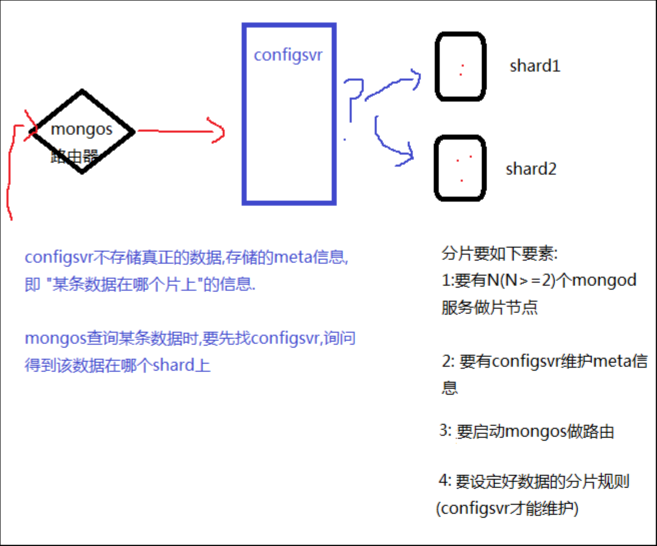

Sharding

Deploying a sharded MongoDB cluster

var rsconf = {

_id:'rs2',

members:

[

{_id:0,

host:'10.0.0.11:27017'

},

{_id:1,

host:'10.0.0.11:27018'

},

{_id:2,

host:'10.0.0.11:27020'

}

]

}

mongod --dbpath /mongodb/m17 --logpath /mongodb/mlog/m17.log --fork --port 27017 --smallfiles

mongod --dbpath /mongodb/m18 --logpath /mongodb/mlog/m18.log --fork --port 27018 --smallfiles

mongod --dbpath /mongodb/m20 --logpath /mongodb/mlog/m20.log --fork --port 27020 --configsvr

A config server is needed to store the cluster metadata; start it with the --configsvr option.

mongos --logpath /mongodb/mlog/m30.log --port 30000 --configdb 10.0.0.11:27020 --fork

mongos has to be told where the config server is, via --configdb.

[mongod@mcw01 ~]$ mongod --dbpath /mongodb/m17 --logpath /mongodb/mlog/m17.log --fork --port 27017 --smallfiles

about to fork child process, waiting until server is ready for connections.

forked process: 18608

child process started successfully, parent exiting

[mongod@mcw01 ~]$ mongod --dbpath /mongodb/m18 --logpath /mongodb/mlog/m18.log --fork --port 27018 --smallfiles

about to fork child process, waiting until server is ready for connections.

forked process: 18627

child process started successfully, parent exiting

[mongod@mcw01 ~]$ mongod --dbpath /mongodb/m20 --logpath /mongodb/mlog/m20.log --fork --port 27020 --configsvr

about to fork child process, waiting until server is ready for connections.

forked process: 18646

child process started successfully, parent exiting

[mongod@mcw01 ~]$ mongos --logpath /mongodb/mlog/m30.log --port 30000 --configdb 10.0.0.11:27020 --fork

2022-03-05T00:26:41.452+0800 W SHARDING [main] Running a sharded cluster with fewer than 3 config servers should only be done for testing purposes and is not recommended for production.

about to fork child process, waiting until server is ready for connections.

forked process: 18667

child process started successfully, parent exiting

[mongod@mcw01 ~]$ ps -ef|grep -v grep |grep mongo

root 16595 16566 0 Mar04 pts/0 00:00:00 su - mongod

mongod 16596 16595 0 Mar04 pts/0 00:00:03 -bash

root 17669 17593 0 Mar04 pts/1 00:00:00 su - mongod

mongod 17670 17669 0 Mar04 pts/1 00:00:00 -bash

root 17735 17715 0 Mar04 pts/2 00:00:00 su - mongod

mongod 17736 17735 0 Mar04 pts/2 00:00:00 -bash

mongod 18608 1 0 00:26 ? 00:00:03 mongod --dbpath /mongodb/m17 --logpath /mongodb/mlog/m17.log --fork --port 27017 --smallfiles

mongod 18627 1 0 00:26 ? 00:00:03 mongod --dbpath /mongodb/m18 --logpath /mongodb/mlog/m18.log --fork --port 27018 --smallfiles

mongod 18646 1 0 00:26 ? 00:00:04 mongod --dbpath /mongodb/m20 --logpath /mongodb/mlog/m20.log --fork --port 27020 --configsvr

mongod 18667 1 0 00:26 ? 00:00:01 mongos --logpath /mongodb/mlog/m30.log --port 30000 --configdb 10.0.0.11:27020 --fork

mongod 18698 16596 0 00:36 pts/0 00:00:00 ps -ef

[mongod@mcw01 ~]$

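At this point the config server and mongos are tied together, but the two mongod instances that will become shards are not yet connected to them.

Next, connect to mongos and add the two shards to it.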

[mongod@mcw01 ~]$ mongo --port 30000

MongoDB shell version: 3.2.8

connecting to: 127.0.0.1:30000/test

mongos> show dbs;

config 0.000GB

mongos> use config;

switched to db config

mongos> show tables; #list the collections in the config database

chunks

lockpings

locks

mongos

settings

shards

tags

version

mongos>

mongos>

bye

[mongod@mcw01 ~]$ mongo --port 30000

MongoDB shell version: 3.2.8

connecting to: 127.0.0.1:30000/test

mongos> sh.addShard('10.0.0.11:27017'); #add the two shards

{ "shardAdded" : "shard0000", "ok" : 1 }

mongos> sh.addShard('10.0.0.11:27018');

{ "shardAdded" : "shard0001", "ok" : 1 }

mongos> sh.status() #check the sharding status

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("62223dc1dd5791b451d9b441")

}

shards:

{ "_id" : "shard0000", "host" : "10.0.0.11:27017" } #two shards are listed;

{ "_id" : "shard0001", "host" : "10.0.0.11:27018" } #both have been registered in the config server

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

mongos>

mongos> use test

switched to db test

mongos> db.stu.insert({name:'poly'}); #insert four documents through mongos; they can be queried back

WriteResult({ "nInserted" : 1 })

mongos> db.stu.insert({name:'lily'});

WriteResult({ "nInserted" : 1 })

mongos> db.stu.insert({name:'hmm'});

WriteResult({ "nInserted" : 1 })

mongos> db.stu.insert({name:'lucy'});

WriteResult({ "nInserted" : 1 })

mongos> db.stu.find();

{ "_id" : ObjectId("6222427bc425e356ae71d452"), "name" : "poly" }

{ "_id" : ObjectId("62224282c425e356ae71d453"), "name" : "lily" }

{ "_id" : ObjectId("62224287c425e356ae71d454"), "name" : "hmm" }

{ "_id" : ObjectId("6222428dc425e356ae71d455"), "name" : "lucy" }

mongos>

At this point the data is visible on 27017:

[mongod@mcw01 ~]$ mongo --port 27017

.......

> show dbs;

local 0.000GB

test 0.000GB

> use test;

switched to db test

> db.stu.find();

{ "_id" : ObjectId("6222427bc425e356ae71d452"), "name" : "poly" }

{ "_id" : ObjectId("62224282c425e356ae71d453"), "name" : "lily" }

{ "_id" : ObjectId("62224287c425e356ae71d454"), "name" : "hmm" }

{ "_id" : ObjectId("6222428dc425e356ae71d455"), "name" : "lucy" }

>

but on 27018 there is no data:

[mongod@mcw01 ~]$ mongo --port 27018

.......

> show dbs;

local 0.000GB

>

No sharding rule has been defined for this data yet. Let's check the sharding status on mongos:

mongos> sh.status();

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("62223dc1dd5791b451d9b441")

}

shards:

{ "_id" : "shard0000", "host" : "10.0.0.11:27017" }

{ "_id" : "shard0001", "host" : "10.0.0.11:27018" }

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "test", "primary" : "shard0000", "partitioned" : false }

mongos> #as shown above, the test database has partitioned: false, i.e. it is not sharded, so by default all of its data stays on its primary shard, shard0000

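Enabling sharding on a database

Below, shop is a database that does not exist yet. After sharding is enabled on it, partitioned becomes true and shard0001 is chosen as its primary shard. This alone is not enough, though, because no collection in it is sharded yet.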

mongos> show dbs;

config 0.000GB

test 0.000GB

mongos> sh.enable

sh.enableBalancing( sh.enableSharding(

mongos> sh.enableSharding('shop');

{ "ok" : 1 }

mongos> sh.status();

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("62223dc1dd5791b451d9b441")

}

shards:

{ "_id" : "shard0000", "host" : "10.0.0.11:27017" }

{ "_id" : "shard0001", "host" : "10.0.0.11:27018" }

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "test", "primary" : "shard0000", "partitioned" : false }

{ "_id" : "shop", "primary" : "shard0001", "partitioned" : true }

mongos>

Specify which collection in the database to shard, and which field to shard it on:

mongos> sh.shardCollection('shop.goods',{goods_id:1});

{ "collectionsharded" : "shop.goods", "ok" : 1 }

mongos> sh.status();

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("62223dc1dd5791b451d9b441")

}

shards:

{ "_id" : "shard0000", "host" : "10.0.0.11:27017" }

{ "_id" : "shard0001", "host" : "10.0.0.11:27018" }

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "test", "primary" : "shard0000", "partitioned" : false }

{ "_id" : "shop", "primary" : "shard0001", "partitioned" : true }

shop.goods #the goods collection in shop is sharded

shard key: { "goods_id" : 1 } #this field is the shard key

unique: false

balancing: true

chunks:

shard0001 1 #the single chunk is placed on shard0001 first

{ "goods_id" : { "$minKey" : 1 } } -->> { "goods_id" : { "$maxKey" : 1 } } on : shard0001 Timestamp(1, 0)

mongos>

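Insert a batch of documents as below. Almost all of them end up on shard0001, because the default chunk size (64 MB) is still in effect, and that is large.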

for(var i=1;i<=10000;i++){ db.goods.insert({goods_id:i,goods_name:'mcw mfsfowfofsfewfwifonwainfsfffsf'}) };

[mongod@mcw01 ~]$ mongo --port 30000

MongoDB shell version: 3.2.8

connecting to: 127.0.0.1:30000/test

mongos> use shop;

switched to db shop

mongos> for(var i=1;i<=10000;i++){ db.goods.insert({goods_id:i,goods_name:'mcw mfsfowfofsfewfwifonwainfsfffsf'}) };

WriteResult({ "nInserted" : 1 })

mongos> sh.status();

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("62223dc1dd5791b451d9b441")

}

shards:

{ "_id" : "shard0000", "host" : "10.0.0.11:27017" }

{ "_id" : "shard0001", "host" : "10.0.0.11:27018" }

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

1 : Success

databases:

{ "_id" : "test", "primary" : "shard0000", "partitioned" : false }

{ "_id" : "shop", "primary" : "shard0001", "partitioned" : true }

shop.goods

shard key: { "goods_id" : 1 }

unique: false

balancing: true

chunks:

shard0000 1

shard0001 2

{ "goods_id" : { "$minKey" : 1 } } -->> { "goods_id" : 2 } on : shard0000 Timestamp(2, 0)

{ "goods_id" : 2 } -->> { "goods_id" : 12 } on : shard0001 Timestamp(2, 1)

{ "goods_id" : 12 } -->> { "goods_id" : { "$maxKey" : 1 } } on : shard0001 Timestamp(1, 3)

mongos>

mongos> db.goods.find().count();

10000

mongos>

On 27017 there is only one document:

[mongod@mcw01 ~]$ mongo --port 27017

> use shop

switched to db shop

> show tables;

goods

> db.goods.find().count();

1

> db.goods.find();

{ "_id" : ObjectId("622252d8b541d8768347746e"), "goods_id" : 1, "goods_name" : "mcw mfsfowfofsfewfwifonwainfsfffsf" }

>

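27018, the second shard, holds almost everything, so the data is unevenly distributed. This matches the status output above: every goods_id from 2 upward is on shard0001.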

[mongod@mcw01 ~]$ mongo --port 27018

> use shop;

switched to db shop

> db.goods.find().count();

9999

> db.goods.find().skip(9996);

{ "_id" : ObjectId("622252e3b541d87683479b7b"), "goods_id" : 9998, "goods_name" : "mcw mfsfowfofsfewfwifonwainfsfffsf" }

{ "_id" : ObjectId("622252e3b541d87683479b7c"), "goods_id" : 9999, "goods_name" : "mcw mfsfowfofsfewfwifonwainfsfffsf" }

{ "_id" : ObjectId("622252e3b541d87683479b7d"), "goods_id" : 10000, "goods_name" : "mcw mfsfowfofsfewfwifonwainfsfffsf" }

>

Changing the chunk size setting

mongos> use config; #on mongos, switch to the config database first

switched to db config

mongos> show tables;

changelog

chunks

collections

databases

lockpings

locks

mongos

settings

shards

tags

version

mongos> db.settings.find(); #the chunk size is stored in the settings collection; the default is 64 MB

{ "_id" : "chunksize", "value" : NumberLong(64) }

mongos> db.settings.find();

{ "_id" : "chunksize", "value" : NumberLong(64) }

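mongos> db.settings.save({_id:'chunksize'},{$set:{value: 1}}); #wrong: save() takes a whole document, not an update spec, so $set is ignored and the document is replaced without its value field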

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

mongos> db.settings.find();

{ "_id" : "chunksize" }

mongos> db.settings.save({ "_id" : "chunksize", "value" : NumberLong(64) });

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

mongos> db.settings.save({ "_id" : "chunksize", "value" : NumberLong(1) }); #change the chunk size setting to 1 MB

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

mongos> db.settings.find(); #the change took effect

{ "_id" : "chunksize", "value" : NumberLong(1) }

mongos>
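To see why 64 MB chunks hold so much, here is a rough, hypothetical estimate in plain JavaScript. The ~96-byte document size is an assumption from hand-counting the BSON encoding of one goods document ({_id, goods_id, goods_name}); in the mongo shell, Object.bsonsize(db.goods.findOne()) reports the real value. The result is only a lower bound: auto-splitting does not wait for chunks to be completely full, so the real count (27 chunks after the 150,000-row insert later in this article) comes out higher.

```javascript
// Rough lower bound on the number of chunks a collection needs.
// avgDocBytes is an assumption (about 96 bytes by hand-counting the BSON of one
// goods document); chunkSizeMB matches the config.settings "chunksize" value in MB.
function minChunks(docCount, avgDocBytes, chunkSizeMB) {
  const totalBytes = docCount * avgDocBytes;
  const chunkBytes = chunkSizeMB * 1024 * 1024;
  return {
    totalMB: totalBytes / (1024 * 1024),
    chunks: Math.ceil(totalBytes / chunkBytes),
  };
}

console.log(minChunks(150000, 96, 1).totalMB.toFixed(1)); // 13.7
console.log(minChunks(150000, 96, 64).chunks);            // 1
console.log(minChunks(150000, 96, 1).chunks);             // 14
```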

Next, insert 150,000 documents and look at how they are distributed under the sharding rule:

for(var i=1;i<=150000;i++){ db.goods.insert({goods_id:i,goods_name:'mcw mfsfowfofsfewfwifonwainfsfffsf fsff'}) };

The goods collection had been dropped before this, which also removed its sharding rule, so the rule has to be recreated.

mongos> use shop;

switched to db shop

mongos> db.goods.drop();

false

mongos> for(var i=1;i<=150000;i++){ db.goods.insert({goods_id:i,goods_name:'mcw mfsfowfofsfewfwifonwainfsfffsf fsff'}) };

WriteResult({ "nInserted" : 1 })

mongos> sh.status();

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("62223dc1dd5791b451d9b441")

}

shards:

{ "_id" : "shard0000", "host" : "10.0.0.11:27017" }

{ "_id" : "shard0001", "host" : "10.0.0.11:27018" }

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

1 : Success

databases:

{ "_id" : "test", "primary" : "shard0000", "partitioned" : false }

{ "_id" : "shop", "primary" : "shard0001", "partitioned" : true }

mongos> sh.shardCollection('shop.goods',{goods_id:1});

{

"proposedKey" : {

"goods_id" : 1

},

"curIndexes" : [

{

"v" : 1,

"key" : {

"_id" : 1

},

"name" : "_id_",

"ns" : "shop.goods"

}

],

"ok" : 0,

"errmsg" : "please create an index that starts with the shard key before sharding."

}

mongos>

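Drop the collection again, recreate the sharding rule, and then add the data. (Alternatively, as the error message suggests, create an index on {goods_id:1} first and then shard the existing collection.)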

mongos> db.goods.drop();

true

mongos> sh.shardCollection('shop.goods',{goods_id:1});

{ "collectionsharded" : "shop.goods", "ok" : 1 }

mongos> sh.status();

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("62223dc1dd5791b451d9b441")

}

shards:

{ "_id" : "shard0000", "host" : "10.0.0.11:27017" }

{ "_id" : "shard0001", "host" : "10.0.0.11:27018" }

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

1 : Success

databases:

{ "_id" : "test", "primary" : "shard0000", "partitioned" : false }

{ "_id" : "shop", "primary" : "shard0001", "partitioned" : true }

shop.goods

shard key: { "goods_id" : 1 }

unique: false

balancing: true

chunks:

shard0001 1

{ "goods_id" : { "$minKey" : 1 } } -->> { "goods_id" : { "$maxKey" : 1 } } on : shard0001 Timestamp(1, 0)

Reinserting the data leaves 7 chunks on one shard and 20 on the other, which is rather uneven. Manual pre-splitting works better.

mongos> for(var i=1;i<=150000;i++){ db.goods.insert({goods_id:i,goods_name:'mcw mfsfowfofsfewfwifonwainfsfffsf fsff'}) };

WriteResult({ "nInserted" : 1 })

mongos> sh.status();

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("62223dc1dd5791b451d9b441")

}

shards:

{ "_id" : "shard0000", "host" : "10.0.0.11:27017" }

{ "_id" : "shard0001", "host" : "10.0.0.11:27018" }

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

8 : Success

13 : Failed with error 'aborted', from shard0001 to shard0000

databases:

{ "_id" : "test", "primary" : "shard0000", "partitioned" : false }

{ "_id" : "shop", "primary" : "shard0001", "partitioned" : true }

shop.goods

shard key: { "goods_id" : 1 }

unique: false

balancing: true

chunks:

shard0000 7

shard0001 20

too many chunks to print, use verbose if you want to force print

mongos>

Following the hint, after a few attempts the detailed chunk layout is printed as follows:

mongos> sh.status({verbose:1});

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("62223dc1dd5791b451d9b441")

}

shards:

{ "_id" : "shard0000", "host" : "10.0.0.11:27017" }

{ "_id" : "shard0001", "host" : "10.0.0.11:27018" }

active mongoses:

{ "_id" : "mcw01:30000", "ping" : ISODate("2022-03-04T18:32:36.132Z"), "up" : NumberLong(7555), "waiting" : true, "mongoVersion" : "3.2.8" }

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

8 : Success

29 : Failed with error 'aborted', from shard0001 to shard0000

databases:

{ "_id" : "test", "primary" : "shard0000", "partitioned" : false }

{ "_id" : "shop", "primary" : "shard0001", "partitioned" : true }

shop.goods

shard key: { "goods_id" : 1 }

unique: false

balancing: true

chunks:

shard0000 7

shard0001 20

{ "goods_id" : { "$minKey" : 1 } } -->> { "goods_id" : 2 } on : shard0000 Timestamp(8, 1)

{ "goods_id" : 2 } -->> { "goods_id" : 12 } on : shard0001 Timestamp(7, 1)

{ "goods_id" : 12 } -->> { "goods_id" : 5473 } on : shard0001 Timestamp(2, 2)

{ "goods_id" : 5473 } -->> { "goods_id" : 12733 } on : shard0001 Timestamp(2, 3)

{ "goods_id" : 12733 } -->> { "goods_id" : 18194 } on : shard0000 Timestamp(3, 2)

{ "goods_id" : 18194 } -->> { "goods_id" : 23785 } on : shard0000 Timestamp(3, 3)

{ "goods_id" : 23785 } -->> { "goods_id" : 29246 } on : shard0001 Timestamp(4, 2)

{ "goods_id" : 29246 } -->> { "goods_id" : 34731 } on : shard0001 Timestamp(4, 3)

{ "goods_id" : 34731 } -->> { "goods_id" : 40192 } on : shard0000 Timestamp(5, 2)

{ "goods_id" : 40192 } -->> { "goods_id" : 45913 } on : shard0000 Timestamp(5, 3)

{ "goods_id" : 45913 } -->> { "goods_id" : 51374 } on : shard0001 Timestamp(6, 2)

{ "goods_id" : 51374 } -->> { "goods_id" : 57694 } on : shard0001 Timestamp(6, 3)

{ "goods_id" : 57694 } -->> { "goods_id" : 63155 } on : shard0000 Timestamp(7, 2)

{ "goods_id" : 63155 } -->> { "goods_id" : 69367 } on : shard0000 Timestamp(7, 3)

{ "goods_id" : 69367 } -->> { "goods_id" : 74828 } on : shard0001 Timestamp(8, 2)

{ "goods_id" : 74828 } -->> { "goods_id" : 81170 } on : shard0001 Timestamp(8, 3)

{ "goods_id" : 81170 } -->> { "goods_id" : 86631 } on : shard0001 Timestamp(8, 5)

{ "goods_id" : 86631 } -->> { "goods_id" : 93462 } on : shard0001 Timestamp(8, 6)

{ "goods_id" : 93462 } -->> { "goods_id" : 98923 } on : shard0001 Timestamp(8, 8)

{ "goods_id" : 98923 } -->> { "goods_id" : 106012 } on : shard0001 Timestamp(8, 9)

{ "goods_id" : 106012 } -->> { "goods_id" : 111473 } on : shard0001 Timestamp(8, 11)

{ "goods_id" : 111473 } -->> { "goods_id" : 118412 } on : shard0001 Timestamp(8, 12)

{ "goods_id" : 118412 } -->> { "goods_id" : 123873 } on : shard0001 Timestamp(8, 14)

{ "goods_id" : 123873 } -->> { "goods_id" : 130255 } on : shard0001 Timestamp(8, 15)

{ "goods_id" : 130255 } -->> { "goods_id" : 135716 } on : shard0001 Timestamp(8, 17)

{ "goods_id" : 135716 } -->> { "goods_id" : 142058 } on : shard0001 Timestamp(8, 18)

{ "goods_id" : 142058 } -->> { "goods_id" : { "$maxKey" : 1 } } on : shard0001 Timestamp(8, 19)

mongos>

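Checking again a day later, the chunks on the two shards are fairly balanced (13 and 14). This shows the balancer keeps working in the background, but balancing is not immediate; it takes time.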

[mongod@mcw01 ~]$ mongo --port 30000

MongoDB shell version: 3.2.8

connecting to: 127.0.0.1:30000/test

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("62223dc1dd5791b451d9b441")

}

shards:

{ "_id" : "shard0000", "host" : "10.0.0.11:27017" }

{ "_id" : "shard0001", "host" : "10.0.0.11:27018" }

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

14 : Success

65 : Failed with error 'aborted', from shard0001 to shard0000

databases:

{ "_id" : "test", "primary" : "shard0000", "partitioned" : false }

{ "_id" : "shop", "primary" : "shard0001", "partitioned" : true }

shop.goods

shard key: { "goods_id" : 1 }

unique: false

balancing: true

chunks:

shard0000 13

shard0001 14

too many chunks to print, use verbose if you want to force print

mongos>
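The drift from 7/20 to 13/14 chunks can be illustrated with a toy simulation in plain JavaScript. It is not MongoDB's real balancer: the start threshold of 3 is the figure quoted at the top of this article, and the rule of continuing one chunk at a time until the shards differ by less than 2 is an assumption. Under those assumptions, six migrations turn 7/20 into the 13/14 layout observed above.

```javascript
// Toy model of chunk balancing between shards (not MongoDB's real balancer).
// Assumptions: a round starts only when the chunk-count gap reaches
// startThreshold (3, the figure quoted earlier), and then moves one chunk at a
// time from the fullest to the emptiest shard until the gap is below 2.
function simulateBalancing(counts, startThreshold = 3) {
  const shards = { ...counts };
  const names = Object.keys(shards);
  const gap = () => {
    const values = names.map((n) => shards[n]);
    return Math.max(...values) - Math.min(...values);
  };
  const migrations = [];
  if (gap() < startThreshold) return { shards, migrations };
  while (gap() >= 2) {
    const from = names.reduce((a, b) => (shards[a] >= shards[b] ? a : b));
    const to = names.reduce((a, b) => (shards[a] <= shards[b] ? a : b));
    shards[from] -= 1;
    shards[to] += 1;
    migrations.push(`${from} -> ${to}`);
  }
  return { shards, migrations };
}

const result = simulateBalancing({ shard0000: 7, shard0001: 20 });
console.log(result.shards);            // { shard0000: 13, shard0001: 14 }
console.log(result.migrations.length); // 6
```

Each simulated migration is a full chunk copy between servers, which is the I/O cost that manual pre-splitting is meant to avoid.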

Manual pre-splitting

Sharding commands


for(var i=1;i<=40;i++){sh.splitAt('shop.user',{userid:i*1000})}

This splits the user collection in the shop database at every userid that is a multiple of 1000, each split creating a new chunk.

mongos> sh.help();

sh.addShard( host ) server:port OR setname/server:port

sh.enableSharding(dbname) enables sharding on the database dbname

sh.shardCollection(fullName,key,unique) shards the collection

sh.splitFind(fullName,find) splits the chunk that find is in at the median

sh.splitAt(fullName,middle) splits the chunk that middle is in at middle

sh.moveChunk(fullName,find,to) move the chunk where 'find' is to 'to' (name of shard)

sh.setBalancerState( <bool on or not> ) turns the balancer on or off true=on, false=off

sh.getBalancerState() return true if enabled

sh.isBalancerRunning() return true if the balancer has work in progress on any mongos

sh.disableBalancing(coll) disable balancing on one collection

sh.enableBalancing(coll) re-enable balancing on one collection

sh.addShardTag(shard,tag) adds the tag to the shard

sh.removeShardTag(shard,tag) removes the tag from the shard

sh.addTagRange(fullName,min,max,tag) tags the specified range of the given collection

sh.removeTagRange(fullName,min,max,tag) removes the tagged range of the given collection

sh.status() prints a general overview of the cluster

mongos>

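Pre-splitting

Shard the user collection, using userid as the shard key.

Suppose we expect 40 million new users within a year. Each of the two shards would then hold 20 million, divided into 20 chunks of 1 million documents each. Here we simulate that at a small scale: about 30-40 chunks with 1,000 documents each. Pre-splitting like this is done by splitting chunks manually.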
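The sizing arithmetic in the previous paragraph can be turned into a small planning helper. This is a hypothetical sketch in plain JavaScript: planSplits is not a MongoDB function, it assumes userids are assigned sequentially from 1, and it only builds the sh.splitAt commands as strings rather than running them.

```javascript
// Hypothetical planning helper (not a MongoDB API). Assumes a numeric shard key
// assigned sequentially from 1, so equal-width ranges hold equal numbers of docs.
// It only builds the mongo shell commands; paste them into mongos to run them.
function planSplits(ns, key, expectedDocs, shardCount, chunksPerShard) {
  const totalChunks = shardCount * chunksPerShard;
  const docsPerChunk = Math.ceil(expectedDocs / totalChunks);
  const commands = [];
  // totalChunks chunks need totalChunks - 1 interior split points.
  for (let i = 1; i < totalChunks; i++) {
    commands.push(`sh.splitAt('${ns}', {${key}: ${i * docsPerChunk}})`);
  }
  return { docsPerChunk, commands };
}

const plan = planSplits("shop.user", "userid", 40000000, 2, 20);
console.log(plan.docsPerChunk);    // 1000000
console.log(plan.commands.length); // 39
console.log(plan.commands[0]);     // sh.splitAt('shop.user', {userid: 1000000})
```

The demo below instead splits at 40 points (1000 to 40000), which leaves an extra open-ended [40000, $maxKey) chunk for ids beyond the plan.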

mongos> use shop

switched to db shop

mongos> sh.shardCollection('shop.user',{userid:1})

{ "collectionsharded" : "shop.user", "ok" : 1 }

mongos> #split the user collection in the shop database at every userid that is a multiple of 1000, creating a new chunk each time

mongos> for(var i=1;i<=40;i++){sh.splitAt('shop.user',{userid:i*1000})}

{ "ok" : 1 }

mongos>

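Checking the result after pre-splitting

Look at the user collection. Once the layout has settled (20 and 21 chunks), chunks will no longer be migrated back and forth because of data imbalance, which would otherwise drive machine I/O very high.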

mongos> sh.status();

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("62223dc1dd5791b451d9b441")

}

shards:

{ "_id" : "shard0000", "host" : "10.0.0.11:27017" }

{ "_id" : "shard0001", "host" : "10.0.0.11:27018" }

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

34 : Success

65 : Failed with error 'aborted', from shard0001 to shard0000

databases:

{ "_id" : "test", "primary" : "shard0000", "partitioned" : false }

{ "_id" : "shop", "primary" : "shard0001", "partitioned" : true }

shop.goods

shard key: { "goods_id" : 1 }

unique: false

balancing: true

chunks:

shard0000 13

shard0001 14

too many chunks to print, use verbose if you want to force print

shop.user

shard key: { "userid" : 1 }

unique: false

balancing: true

chunks:

shard0000 20

shard0001 21

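too many chunks to print, use verbose if you want to force print

mongos>

The detailed view below shows how the chunks are laid out. No data has been inserted yet, but because we know roughly how much data to expect, the userid ranges have already been assigned to shards. When data is inserted, each document should land in the chunk whose range covers its userid.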

mongos> sh.status({verbose:1});

--- Sharding Status ---

.............

shop.user

shard key: { "userid" : 1 }

unique: false

balancing: true

chunks:

shard0000 20

shard0001 21

{ "userid" : { "$minKey" : 1 } } -->> { "userid" : 1000 } on : shard0000 Timestamp(2, 0)

{ "userid" : 1000 } -->> { "userid" : 2000 } on : shard0000 Timestamp(3, 0)

{ "userid" : 2000 } -->> { "userid" : 3000 } on : shard0000 Timestamp(4, 0)

{ "userid" : 3000 } -->> { "userid" : 4000 } on : shard0000 Timestamp(5, 0)

{ "userid" : 4000 } -->> { "userid" : 5000 } on : shard0000 Timestamp(6, 0)

{ "userid" : 5000 } -->> { "userid" : 6000 } on : shard0000 Timestamp(7, 0)

{ "userid" : 6000 } -->> { "userid" : 7000 } on : shard0000 Timestamp(8, 0)

{ "userid" : 7000 } -->> { "userid" : 8000 } on : shard0000 Timestamp(9, 0)

{ "userid" : 8000 } -->> { "userid" : 9000 } on : shard0000 Timestamp(10, 0)

{ "userid" : 9000 } -->> { "userid" : 10000 } on : shard0000 Timestamp(11, 0)

{ "userid" : 10000 } -->> { "userid" : 11000 } on : shard0000 Timestamp(12, 0)

{ "userid" : 11000 } -->> { "userid" : 12000 } on : shard0000 Timestamp(13, 0)

{ "userid" : 12000 } -->> { "userid" : 13000 } on : shard0000 Timestamp(14, 0)

{ "userid" : 13000 } -->> { "userid" : 14000 } on : shard0000 Timestamp(15, 0)

{ "userid" : 14000 } -->> { "userid" : 15000 } on : shard0000 Timestamp(16, 0)

{ "userid" : 15000 } -->> { "userid" : 16000 } on : shard0000 Timestamp(17, 0)

{ "userid" : 16000 } -->> { "userid" : 17000 } on : shard0000 Timestamp(18, 0)

{ "userid" : 17000 } -->> { "userid" : 18000 } on : shard0000 Timestamp(19, 0)

{ "userid" : 18000 } -->> { "userid" : 19000 } on : shard0000 Timestamp(20, 0)

{ "userid" : 19000 } -->> { "userid" : 20000 } on : shard0000 Timestamp(21, 0)

{ "userid" : 20000 } -->> { "userid" : 21000 } on : shard0001 Timestamp(21, 1)

{ "userid" : 21000 } -->> { "userid" : 22000 } on : shard0001 Timestamp(1, 43)

{ "userid" : 22000 } -->> { "userid" : 23000 } on : shard0001 Timestamp(1, 45)

{ "userid" : 23000 } -->> { "userid" : 24000 } on : shard0001 Timestamp(1, 47)

{ "userid" : 24000 } -->> { "userid" : 25000 } on : shard0001 Timestamp(1, 49)

{ "userid" : 25000 } -->> { "userid" : 26000 } on : shard0001 Timestamp(1, 51)

{ "userid" : 26000 } -->> { "userid" : 27000 } on : shard0001 Timestamp(1, 53)

{ "userid" : 27000 } -->> { "userid" : 28000 } on : shard0001 Timestamp(1, 55)

{ "userid" : 28000 } -->> { "userid" : 29000 } on : shard0001 Timestamp(1, 57)

{ "userid" : 29000 } -->> { "userid" : 30000 } on : shard0001 Timestamp(1, 59)

{ "userid" : 30000 } -->> { "userid" : 31000 } on : shard0001 Timestamp(1, 61)

{ "userid" : 31000 } -->> { "userid" : 32000 } on : shard0001 Timestamp(1, 63)

{ "userid" : 32000 } -->> { "userid" : 33000 } on : shard0001 Timestamp(1, 65)

{ "userid" : 33000 } -->> { "userid" : 34000 } on : shard0001 Timestamp(1, 67)

{ "userid" : 34000 } -->> { "userid" : 35000 } on : shard0001 Timestamp(1, 69)

{ "userid" : 35000 } -->> { "userid" : 36000 } on : shard0001 Timestamp(1, 71)

{ "userid" : 36000 } -->> { "userid" : 37000 } on : shard0001 Timestamp(1, 73)

{ "userid" : 37000 } -->> { "userid" : 38000 } on : shard0001 Timestamp(1, 75)

{ "userid" : 38000 } -->> { "userid" : 39000 } on : shard0001 Timestamp(1, 77)

{ "userid" : 39000 } -->> { "userid" : 40000 } on : shard0001 Timestamp(1, 79)

{ "userid" : 40000 } -->> { "userid" : { "$maxKey" : 1 } } on : shard0001 Timestamp(1, 80)

mongos>

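Insert data and check the effect of manual pre-splitting, which prevents data from being copied back and forth between nodes.

When the chunks are close to full, deal with it ahead of time; otherwise adding a new shard later triggers large-scale data movement, and the resulting I/O load can bring servers down. Insert the data on mongos: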

mongos> for(var i=1;i<=40000;i++){db.user.insert({userid:i,name:'xiao ma guo he'})};

WriteResult({ "nInserted" : 1 })

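mongos>

On 27017 we can see userids 1 to 19999, i.e. 19,999 documents. According to the chunk details above, shard0000 owns the ranges up to 20000, and each range includes its lower bound but excludes its upper bound. userid 20000 therefore lives on shard0001, so shard0000 holds 19,999 documents. shard0001 holds userids 20000 to 40000: 20000-39999 is 20,000 documents, plus userid 40000 in the [40000, $maxKey) chunk, for 20,001 in total. Pre-splitting is relatively stable and avoids the performance cost of copying data back and forth between nodes when inserts leave the shards unbalanced.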

[mongod@mcw01 ~]$ mongo --port 27017

> use shop

switched to db shop

> db.user.find().count();

19999

> db.user.find().skip(19997);

{ "_id" : ObjectId("6222d91f69eed283bf054e96"), "userid" : 19998, "name" : "xiao ma guo he" }

{ "_id" : ObjectId("6222d91f69eed283bf054e97"), "userid" : 19999, "name" : "xiao ma guo he" }

>

[mongod@mcw01 ~]$ mongo --port 27018

> use shop

switched to db shop

> db.user.find().count();

20001

> db.user.find().skip(19999);

{ "_id" : ObjectId("6222d93669eed283bf059cb7"), "userid" : 39999, "name" : "xiao ma guo he" }

{ "_id" : ObjectId("6222d93669eed283bf059cb8"), "userid" : 40000, "name" : "xiao ma guo he" }

> db.user.find().limit(1);

{ "_id" : ObjectId("6222d91f69eed283bf054e98"), "userid" : 20000, "name" : "xiao ma guo he" }

>
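The 19,999 / 20,001 split follows directly from half-open ranges. The plain JavaScript sketch below (hypothetical helpers, not MongoDB internals) rebuilds the chunk layout printed by sh.status({verbose:1}), with -Infinity/Infinity standing in for $minKey/$maxKey, routes userids 1 to 40000 with a binary search, and counts the documents per shard.

```javascript
// Rebuild the shop.user chunk layout shown by sh.status({verbose:1}):
// split points at 1000, 2000, ..., 40000; -Infinity/Infinity stand in for
// $minKey/$maxKey. Each chunk owns the half-open range [min, max).
function buildChunks() {
  const bounds = [-Infinity];
  for (let i = 1; i <= 40; i++) bounds.push(i * 1000);
  bounds.push(Infinity);
  const chunks = [];
  for (let i = 0; i + 1 < bounds.length; i++) {
    // The first 20 chunks ($minKey up to 20000) sat on shard0000, the rest on shard0001.
    chunks.push({ min: bounds[i], max: bounds[i + 1], shard: i < 20 ? "shard0000" : "shard0001" });
  }
  return chunks;
}

// Binary search for the chunk whose range contains key (min <= key < max).
function findChunk(chunks, key) {
  let lo = 0;
  let hi = chunks.length - 1;
  while (lo <= hi) {
    const mid = (lo + hi) >> 1;
    if (key < chunks[mid].min) hi = mid - 1;
    else if (key >= chunks[mid].max) lo = mid + 1;
    else return chunks[mid];
  }
  throw new Error(`no chunk covers ${key}`);
}

const chunks = buildChunks();
const perShard = {};
for (let userid = 1; userid <= 40000; userid++) {
  const shard = findChunk(chunks, userid).shard;
  perShard[shard] = (perShard[shard] || 0) + 1;
}
console.log(perShard); // { shard0000: 19999, shard0001: 20001 }
```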
