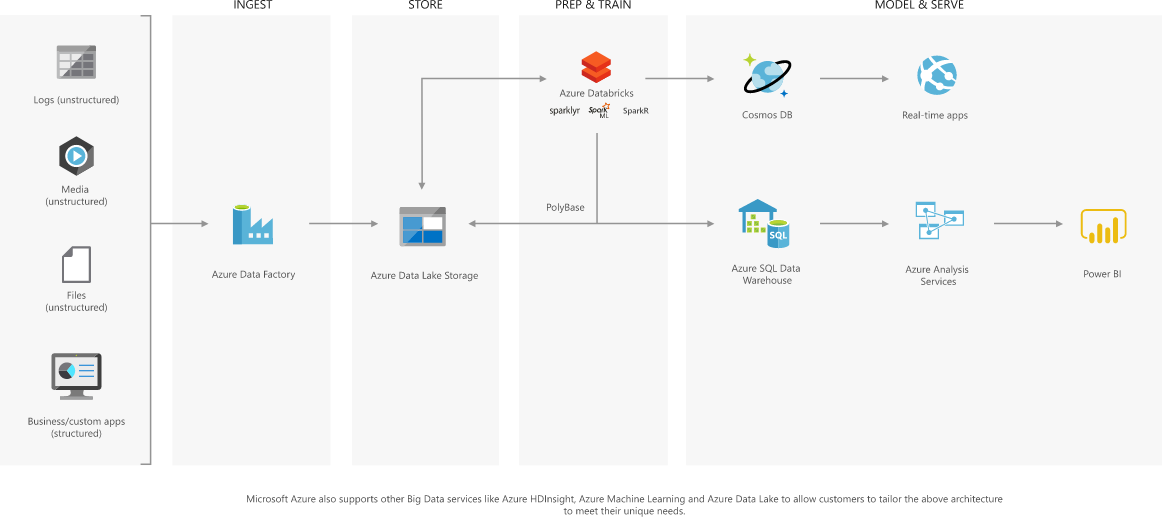

Data lake: a new style of data warehouse

Data lake - Wikipedia https://en.wikipedia.org/wiki/Data_lake

Data lake

Introduction to Azure Data Lake Storage Gen2 (preview) | Microsoft Docs https://docs.microsoft.com/zh-cn/azure/storage/data-lake-storage/introduction

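Azure Data Lake Storage Gen2 is a highly scalable, cost-effective data lake solution for big data analytics. It combines large-scale execution and economy with the capabilities of a high-performance file system, helping to shorten the time to insight. Data Lake Storage Gen2 extends Azure Blob storage and is optimized for analytics workloads. Once data is stored, it can be accessed through the existing Blob storage interface and through an HDFS-compatible file system interface, without changing programs or copying data. Microsoft describes Data Lake Storage Gen2 as the most comprehensive data lake available.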
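To make the "one copy of the data, two interfaces" point concrete, the sketch below builds the addresses under which a single stored object would be reachable: the Blob endpoint, the DFS (file system) endpoint, and the `abfss://` URI used by HDFS-compatible clients. The account name `examplelake`, the container `raw`, and the helper `adls_gen2_addresses` are hypothetical, and the code only formats strings; it does not contact Azure.

```python
from urllib.parse import quote


def adls_gen2_addresses(account: str, container: str, path: str) -> dict:
    """Return the addresses for one object in a storage account.

    All three addresses point at the same stored bytes; only the
    interface used to reach them differs.
    """
    # Percent-encode the path but keep "/" as the directory separator.
    clean = quote(path.lstrip("/"))
    return {
        # Blob storage REST endpoint
        "blob": f"https://{account}.blob.core.windows.net/{container}/{clean}",
        # Data Lake Storage (hierarchical file system) REST endpoint
        "dfs": f"https://{account}.dfs.core.windows.net/{container}/{clean}",
        # URI form used by Hadoop-compatible clients (TLS variant)
        "abfss": f"abfss://{container}@{account}.dfs.core.windows.net/{clean}",
    }


# Hypothetical account, container, and path, for illustration only.
addrs = adls_gen2_addresses("examplelake", "raw", "/sales/2019/orders.csv")
for name, url in addrs.items():
    print(f"{name:6} {url}")
```

An analytics engine would typically be given the `abfss://` form, while an existing application that already speaks the Blob API could keep using the `blob` address for the same object.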

Advanced analytics on big data

Real-time analytics

Data lake


A data lake is a system or repository of data stored in its natural format,[1] usually object blobs or files. A data lake is usually a single store of all enterprise data including raw copies of source system data and transformed data used for tasks such as reporting, visualization, analytics and machine learning. A data lake can include structured data from relational databases (rows and columns), semi-structured data (CSV, logs, XML, JSON), unstructured data (emails, documents, PDFs) and binary data (images, audio, video). [2]
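As a rough illustration of storing data "in its natural format", the following sketch assigns incoming files to a landing path based on the four kinds of data listed above. The suffix table, the `raw/<category>/<source>/` layout, and the function `landing_key` are assumptions made for this example, not part of any standard; treating a `.sql` dump as "structured" is likewise just a convenient stand-in for relational exports.

```python
from pathlib import PurePosixPath

# Hypothetical mapping from file suffix to the data kinds named in the text.
CATEGORY_BY_SUFFIX = {
    ".sql": "structured",          # assumed stand-in for a relational export
    ".csv": "semi-structured",
    ".log": "semi-structured",
    ".xml": "semi-structured",
    ".json": "semi-structured",
    ".eml": "unstructured",
    ".docx": "unstructured",
    ".pdf": "unstructured",
    ".png": "binary",
    ".jpg": "binary",
    ".mp3": "binary",
    ".mp4": "binary",
}


def landing_key(source: str, filename: str) -> str:
    """Build the object key under which a raw file is stored unchanged."""
    suffix = PurePosixPath(filename).suffix.lower()
    category = CATEGORY_BY_SUFFIX.get(suffix, "unclassified")
    # The file itself is not transformed; only its location is decided here.
    return f"raw/{category}/{source}/{filename}"


for src, name in [("crm", "contacts.json"), ("mail", "offer.pdf"),
                  ("cctv", "lobby.mp4"), ("erp", "orders.sql")]:
    print(landing_key(src, name))
```

The point of the sketch is that classification affects only where a file lands; the bytes are kept as delivered, and any transformed copies would live elsewhere in the lake.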

A data swamp is a deteriorated data lake that is either inaccessible to its intended users or is providing little value.[3][4]


Background

James Dixon, then chief technology officer at Pentaho, allegedly coined the term[5] to contrast it with a data mart, which is a smaller repository of interesting attributes derived from raw data.[6] In promoting data lakes, he argued that data marts have several inherent problems, such as information siloing. PricewaterhouseCoopers said that data lakes could "put an end to data silos."[7] In their study on data lakes they noted that enterprises were "starting to extract and place data for analytics into a single, Hadoop-based repository." Hortonworks, Google, Oracle, Microsoft, Zaloni, Teradata, Cloudera, and Amazon now all have data lake offerings.[8]

Examples

One example of technology used to host a data lake is the distributed file system used in Apache Hadoop. Many companies also use cloud storage services such as Azure Data Lake and Amazon S3.[9] There is growing academic interest in the concept of data lakes; for instance, the Personal DataLake[10] project at Cardiff University aims to create a new type of data lake that manages the big data of individual users by providing a single point for collecting, organizing, and sharing personal data.[11] Early data lakes built on Hadoop 1.0 had limited capabilities, because batch-oriented processing (MapReduce) was the only processing paradigm available. Interacting with the data lake required expertise in Java and MapReduce, or in higher-level tools such as Apache Pig and Apache Hive (which were themselves batch-oriented).
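The batch-oriented MapReduce paradigm mentioned above can be sketched in a few lines. This is a single-process imitation of the map, shuffle, and reduce phases using a word count, written in plain Python for readability; it is not Hadoop's API, and the function names are chosen for this example only.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in every input line."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Reduce: collapse each key's values into one result."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    # The whole input is processed in one pass before any result is
    # available, which is what "batch-oriented" means in practice.
    return reduce_phase(shuffle(map_phase(lines)))


print(word_count(["raw data", "Raw copies of data"]))
```

In a real cluster the map and reduce functions run in parallel on many machines and the shuffle moves data across the network, but the shape of the computation is the same, and no answer is produced until the whole job finishes.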

Criticism

In June 2015, David Needle characterized "so-called data lakes" as "one of the more controversial ways to manage big data".[12] PricewaterhouseCoopers were also careful to note in their research that not all data lake initiatives are successful. They quote Sean Martin, CTO of Cambridge Semantics,

> "We see customers creating big data graveyards, dumping everything into HDFS [Hadoop Distributed File System] and hoping to do something with it down the road. But then they just lose track of what’s there. The main challenge is not creating a data lake, but taking advantage of the opportunities it presents."[7]

They describe companies that build successful data lakes as gradually maturing their lake as they figure out which data and metadata are important to the organization. One other criticism about the data lake is that the concept is fuzzy and arbitrary. It refers to any tool or data management practice that does not fit into the traditional data warehouse architecture. The data lake has been referred to as a technology such as Hadoop. The data lake has been labeled as a raw data reservoir or a hub for ETL offload. The data lake has been defined as a central hub for self-service analytics. The concept of the data lake has been overloaded with meanings, which puts the usefulness of the term into question.[13]
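One way to picture the "losing track of what's there" problem is a small metadata catalog that flags datasets nobody owns, nobody has described, or nobody has used recently. The sketch below is an illustration only: the `CatalogEntry` fields, the `at_risk` function, and the 180-day idle threshold are all assumptions, not a description of any particular product.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class CatalogEntry:
    """Minimal metadata recorded for one dataset in the lake."""
    path: str
    owner: str = ""
    description: str = ""
    last_used: Optional[date] = None


def at_risk(entries: List[CatalogEntry], today: date,
            max_idle_days: int = 180) -> List[str]:
    """Return paths of datasets that are undocumented or idle."""
    flagged = []
    for entry in entries:
        undocumented = not entry.owner or not entry.description
        idle = (entry.last_used is None
                or (today - entry.last_used).days > max_idle_days)
        if undocumented or idle:
            flagged.append(entry.path)
    return flagged


# Hypothetical catalog contents, for illustration only.
catalog = [
    CatalogEntry("raw/crm/contacts.json", "sales-ops",
                 "Daily CRM contact export", date(2019, 5, 1)),
    CatalogEntry("raw/tmp/dump_final_v2.csv"),
    CatalogEntry("raw/web/clicks.log", "web-team",
                 "Clickstream logs", date(2018, 1, 1)),
]
print(at_risk(catalog, today=date(2019, 6, 1)))
```

A report like this does not by itself create value from the data, but it makes visible which parts of the lake are drifting toward the "graveyard" described in the quote.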

