MongoDB Replica Set: How It Works and Deployment Notes

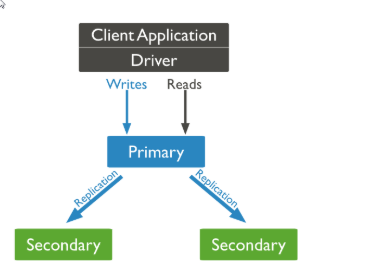

How it works

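MongoDB replication is based on the operation log (oplog), which plays a role similar to the binary log in MySQL: it records only operations that change data. Replication is the process of copying the primary's oplog to the other (secondary) members and applying it there.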

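1. Replication between replica set members is implemented through the oplog. A secondary queries this collection to find out which operations it still needs to replicate.

2. The oplog is a capped collection (local.oplog.rs) in each member's local database. By default its initial size is 5% of free disk space (for WiredTiger on 64-bit Linux, with a lower bound of 990 MB and an upper bound of 50 GB). Because it is a capped collection, once the oplog is full the oldest entries are overwritten.

3. The oplog size directly determines how much disk space the local database takes up. You can set it in the configuration file with the oplogSize parameter (in megabytes; replication.oplogSizeMB in the YAML format).

4. Secondaries copy the data by replaying the operations recorded in the oplog.

5. All writes go to the primary: applications connect to the primary to write, the primary records each operation in its oplog, and secondary members continuously fetch this log and apply the operations to their own data sets.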
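The behaviour in points 2 and 5 can be pictured with a toy model: a fixed-capacity log that drops its oldest entry when full, and a secondary that asks for everything after the last timestamp it applied. This is a sketch under simplifying assumptions (integer timestamps instead of BSON Timestamps, capacity counted in entries rather than bytes); the class and method names are hypothetical and not part of any MongoDB API. The size helper encodes the default described in point 2.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Toy model of the oplog: a fixed-capacity, insertion-ordered log that drops its
// oldest entry when full, like a capped collection. Names are hypothetical.
class CappedOplog {
    static final class Entry {
        final long ts;    // simplified timestamp: an increasing counter
        final String op;  // description of the write, e.g. "insert test.test"

        Entry(long ts, String op) {
            this.ts = ts;
            this.op = op;
        }
    }

    private final int capacity;
    private final Deque<Entry> entries = new ArrayDeque<Entry>();
    private long nextTs = 1;

    CappedOplog(int capacity) {
        if (capacity <= 0) {
            throw new IllegalArgumentException("capacity must be positive");
        }
        this.capacity = capacity;
    }

    // Primary side: record a write, evicting the oldest entry if the log is full.
    long append(String op) {
        if (entries.size() == capacity) {
            entries.removeFirst();
        }
        Entry e = new Entry(nextTs++, op);
        entries.addLast(e);
        return e.ts;
    }

    // Secondary side: return every entry newer than the last one it applied,
    // or null if the next entry it needs has already been overwritten.
    List<Entry> entriesAfter(long lastAppliedTs) {
        if (!entries.isEmpty() && lastAppliedTs + 1 < entries.peekFirst().ts) {
            return null;
        }
        List<Entry> newer = new ArrayList<Entry>();
        for (Entry e : entries) {
            if (e.ts > lastAppliedTs) {
                newer.add(e);
            }
        }
        return newer;
    }

    // Default initial size for WiredTiger on 64-bit Linux: 5% of free disk,
    // clamped to at least 990 MB and at most 50 GB.
    static long defaultOplogSizeMB(long freeDiskMB) {
        long fivePercent = freeDiskMB / 20;
        return Math.max(990L, Math.min(50L * 1024L, fivePercent));
    }
}
```

When a secondary's last applied position has already been overwritten, entriesAfter returns null. In a real replica set such a member can no longer catch up from the oplog and needs a full initial sync, which is why a larger oplog gives members more time to stay offline.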

Common commands

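rs.conf()  show the replica set configuration

rs.status()  show the replica set status

rs.initiate(config)  initialize the replica set

rs.isMaster()  check whether the current member is the primary

db.getMongo().setSlaveOk();  allow the current shell connection to read from a secondary (run it on the secondary)

rs.add("ip:port")  add a member to the replica set

rs.remove("ip:port")  remove a member from the replica set

rs.addArb("ip:port")  add an arbiter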

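Replica set status fields (rs.status() output)

"_id" : # member ID within the set

"name" : # member host name and port

"health" : # member health (0: down; 1: up)

"state" : # numeric member state, from 0 to 10

"stateStr" : # member state as a string, e.g. PRIMARY or SECONDARY

"uptime" : # how long the member has been online, in seconds

"optime" : # the last oplog operation this member has applied

"optimeDate" : # time of the last oplog operation this member has applied

"electionTime" : # for the current primary, the timestamp of its election, taken from the oplog

"electionDate" : # for the current primary, the date on which it was elected

"configVersion" : # version of the replica set configuration (not the MongoDB version)

"self" : # true for the member the shell is connected to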
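The numeric state field is easier to read with a small lookup. The sketch below is based on the member-state table in the MongoDB manual. That table has no code 4, so the sketch reports 4 as unrecognized. The class name is hypothetical.

```java
// Maps the numeric "state" of rs.status() to its stateStr name, following the
// member-state table in the MongoDB manual (which has no code 4).
class MemberState {
    static String name(int state) {
        switch (state) {
            case 0: return "STARTUP";
            case 1: return "PRIMARY";
            case 2: return "SECONDARY";
            case 3: return "RECOVERING";
            case 5: return "STARTUP2";
            case 6: return "UNKNOWN";
            case 7: return "ARBITER";
            case 8: return "DOWN";
            case 9: return "ROLLBACK";
            case 10: return "REMOVED";
            default: return "UNRECOGNIZED(" + state + ")";
        }
    }

    public static void main(String[] args) {
        for (int s = 0; s <= 10; s++) {
            System.out.println(s + " -> " + name(s));
        }
    }
}
```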

Deploying the mongod replica set

I. Environment

OS: CentOS 6.5; MongoDB version: 3.6.6

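| IP address | Hostname | Role |
| --- | --- | --- |
| 192.168.1.230 | mongodb01 | replica set primary |
| 192.168.1.18 | mongodb02 | replica set secondary |
| 192.168.1.247 | mongodb03 | replica set secondary |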

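Disable the firewall and SELinux on all three servers.

II. Installing MongoDB and configuring the replica set

1. Install MongoDB

See: https://www.cnblogs.com/wusy/p/10405991.html

2. Replica set configuration

1) If MongoDB was installed with yum, add the following to the configuration file on every server when building the replica set: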

replication:
  replSetName: repset          # replica set name; any name works, but it must be identical on every member
security:                      # enable authentication for the replica set
  authorization: enabled
  keyFile: /data/mongodb/keyfile
  clusterAuthMode: keyFile

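2) If MongoDB was installed from a source/tarball package and uses the legacy (INI-style) configuration format, use the following instead:

replSet=repset                             # replica set name
auth=true                                  # enable user authentication; in standalone mode, no key file needs to be created or specified
keyFile=/data2/mongodb-3.6.6/keyfile       # path to the key file
journal=true                               # enable journaling (on by default)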

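3. Initialize the replica set

You can run the initialization on any member. With default settings, the member on which you run rs.initiate() normally becomes the primary. Here we use mongodb01. You can also control which member becomes primary by setting member priorities.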

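1) Connect to mongod with the mongo shell

[root@mongodb01 ~]# mongo --host 192.168.1.230

2) Define the replica set configuration. The _id value ("repset") must match the replica set name in the configuration file.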

> config={
... _id:"repset", // must match the replica set name in the configuration file
... members:[
... {_id:0,host:"192.168.1.230:27017"},
... {_id:1,host:"192.168.1.18:27017"},
... {_id:2,host:"192.168.1.247:27017"}
... ]
... }

{

"_id" : "repset",

"members" : [

{

"_id" : 0,

"host" : "192.168.1.230:27017"

},

{

"_id" : 1,

"host" : "192.168.1.18:27017"

},

{

"_id" : 2,

"host" : "192.168.1.247:27017"

}

]

}
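Mistakes in this configuration document usually only show up when rs.initiate() fails or a member never joins. The sketch below is a hypothetical client-side pre-flight check, not something MongoDB provides. It checks whether the config _id matches the replSetName from the configuration file, and it looks for duplicate member _id values, duplicate hosts, and hosts not written as host:port. The config is modelled with a small Member class instead of parsing the real document.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical pre-flight check for an rs.initiate() configuration, modelled as
// a set name plus a list of members (this is not a MongoDB API).
class ReplSetConfigCheck {
    static final class Member {
        final int id;
        final String host;

        Member(int id, String host) {
            this.id = id;
            this.host = host;
        }
    }

    // Returns human-readable problems; an empty list means none were found.
    static List<String> problems(String replSetName, String configId, List<Member> members) {
        List<String> errors = new ArrayList<String>();
        if (!replSetName.equals(configId)) {
            errors.add("config _id \"" + configId + "\" does not match replSetName \"" + replSetName + "\"");
        }
        Set<Integer> ids = new HashSet<Integer>();
        Set<String> hosts = new HashSet<String>();
        for (Member m : members) {
            if (!ids.add(m.id)) {
                errors.add("duplicate member _id " + m.id);
            }
            if (!hosts.add(m.host)) {
                errors.add("duplicate host " + m.host);
            }
            // Expect "host:port", e.g. 192.168.1.230:27017
            if (!m.host.matches("[^:\\s]+:\\d{1,5}")) {
                errors.add("host is not in host:port form: " + m.host);
            }
        }
        return errors;
    }
}
```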

3) Apply the configuration to initialize the replica set

> rs.initiate(config)

{

"ok" : 1,

"operationTime" : Timestamp(, ),

"$clusterTime" : {

"clusterTime" : Timestamp(, ),

"signature" : {

"hash" : BinData(,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong()

}

}

}

4) Check the status of the members (health 1 means the member is up, 0 means it is down)

repset:PRIMARY> rs.status()

{

"set" : "repset",

"date" : ISODate("2019-03-02T04:50:47.554Z"),

"myState" : 1,

"term" : NumberLong(),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"appliedOpTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"durableOpTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

}

},

"lastStableCheckpointTimestamp" : Timestamp(, ),

"members" : [

{

"_id" : 0,

"name" : "192.168.1.230:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : ,

"optime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"optimeDate" : ISODate("2019-03-02T04:50:38Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(, ),

"electionDate" : ISODate("2019-03-02T04:50:07Z"),

"configVersion" : ,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "192.168.1.18:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : ,

"optime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"optimeDurable" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"optimeDate" : ISODate("2019-03-02T04:50:38Z"),

"optimeDurableDate" : ISODate("2019-03-02T04:50:38Z"),

"lastHeartbeat" : ISODate("2019-03-02T04:50:47.382Z"),

"lastHeartbeatRecv" : ISODate("2019-03-02T04:50:46.242Z"),

"pingMs" : NumberLong(),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.1.247:27017",

"syncSourceHost" : "192.168.1.247:27017",

"syncSourceId" : ,

"infoMessage" : "",

"configVersion" :

},

{

"_id" : 2,

"name" : "192.168.1.247:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : ,

"optime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"optimeDurable" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"optimeDate" : ISODate("2019-03-02T04:50:38Z"),

"optimeDurableDate" : ISODate("2019-03-02T04:50:38Z"),

"lastHeartbeat" : ISODate("2019-03-02T04:50:47.382Z"),

"lastHeartbeatRecv" : ISODate("2019-03-02T04:50:46.183Z"),

"pingMs" : NumberLong(),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.1.230:27017",

"syncSourceHost" : "192.168.1.230:27017",

"syncSourceId" : ,

"infoMessage" : "",

"configVersion" :

}

],

"ok" : 1,

"operationTime" : Timestamp(, ),

"$clusterTime" : {

"clusterTime" : Timestamp(, ),

"signature" : {

"hash" : BinData(,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong()

}

}

}

repset:PRIMARY>
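When reading rs.status() by hand, you mainly look for the member whose stateStr is PRIMARY and for any member with health 0. The sketch below does the same for members that are assumed to be already parsed into a simple, hypothetical Member class; parsing the shell output or driver response is out of scope. Note that health reflects reachability as seen from the member on which rs.status() was run.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch: summarise the "members" array of rs.status() after parsing.
class StatusSummary {
    static final class Member {
        final String name;
        final int health;      // 1 = reachable, 0 = down
        final String stateStr; // e.g. "PRIMARY", "SECONDARY"

        Member(String name, int health, String stateStr) {
            this.name = name;
            this.health = health;
            this.stateStr = stateStr;
        }
    }

    // Name of the healthy member reporting PRIMARY, or null if there is none
    // (for example while an election is in progress).
    static String primary(List<Member> members) {
        for (Member m : members) {
            if (m.health == 1 && "PRIMARY".equals(m.stateStr)) {
                return m.name;
            }
        }
        return null;
    }

    // Names of members whose health is 0.
    static List<String> down(List<Member> members) {
        List<String> result = new ArrayList<String>();
        for (Member m : members) {
            if (m.health == 0) {
                result.add(m.name);
            }
        }
        return result;
    }
}
```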

5) Check whether this member is the primary (rs.isMaster() can also be run on the other members)

repset:PRIMARY> rs.isMaster()

{

"hosts" : [

"192.168.1.230:27017",

"192.168.1.18:27017",

"192.168.1.247:27017"

],

"setName" : "repset",

"setVersion" : ,

"ismaster" : true,

"secondary" : false,

"primary" : "192.168.1.230:27017",

"me" : "192.168.1.230:27017",

"electionId" : ObjectId("7fffffff0000000000000001"),

"lastWrite" : {

"opTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"lastWriteDate" : ISODate("2019-03-02T04:52:28Z"),

"majorityOpTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"majorityWriteDate" : ISODate("2019-03-02T04:52:28Z")

},

"maxBsonObjectSize" : ,

"maxMessageSizeBytes" : ,

"maxWriteBatchSize" : ,

"localTime" : ISODate("2019-03-02T04:52:32.106Z"),

"logicalSessionTimeoutMinutes" : ,

"minWireVersion" : ,

"maxWireVersion" : ,

"readOnly" : false,

"ok" : ,

"operationTime" : Timestamp(, ),

"$clusterTime" : {

"clusterTime" : Timestamp(, ),

"signature" : {

"hash" : BinData(,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong()

}

}

}

6) Log in to a secondary and check whether it is the primary or a secondary

[root@mongodb02 ~]# mongo --host 192.168.1.18

repset:SECONDARY> rs.isMaster()

{

"hosts" : [

"192.168.1.230:27017",

"192.168.1.18:27017",

"192.168.1.247:27017"

],

"setName" : "repset",

"setVersion" : ,

"ismaster" : false,

"secondary" : true,

"primary" : "192.168.1.230:27017",

"me" : "192.168.1.18:27017",

"lastWrite" : {

"opTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"lastWriteDate" : ISODate("2019-03-02T04:54:18Z"),

"majorityOpTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"majorityWriteDate" : ISODate("2019-03-02T04:54:18Z")

},

"maxBsonObjectSize" : ,

"maxMessageSizeBytes" : ,

"maxWriteBatchSize" : ,

"localTime" : ISODate("2019-03-02T04:54:27.819Z"),

"logicalSessionTimeoutMinutes" : ,

"minWireVersion" : ,

"maxWireVersion" : ,

"readOnly" : false,

"ok" : ,

"operationTime" : Timestamp(, ),

"$clusterTime" : {

"clusterTime" : Timestamp(, ),

"signature" : {

"hash" : BinData(,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong()

}

}

}

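III. Log in to a secondary to verify replication (by default, secondaries do not allow reads)

1. Insert data on the primary mongodb01, then read it on a secondary

repset:PRIMARY> use test

switched to db test

repset:PRIMARY> db.test.save({id:"one",name:"wushaoyu"})

WriteResult({ "nInserted" : 1 })

2. On the secondary mongodb02, look for the data inserted on the primary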

repset:SECONDARY> use test

switched to db test

repset:SECONDARY> show dbs

--02T13::06.503+ E QUERY [js] Error: listDatabases failed:{

"operationTime" : Timestamp(, ),

"ok" : 0,

"errmsg" : "not master and slaveOk=false",

"code" : 13435,

"codeName" : "NotMasterNoSlaveOk",

"$clusterTime" : {

"clusterTime" : Timestamp(, ),

"signature" : {

"hash" : BinData(,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong()

}

}

} :

_getErrorWithCode@src/mongo/shell/utils.js::

Mongo.prototype.getDBs@src/mongo/shell/mongo.js::

shellHelper.show@src/mongo/shell/utils.js::

shellHelper@src/mongo/shell/utils.js::

@(shellhelp2)::

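Note: the command above fails with NotMasterNoSlaveOk.

By default a secondary refuses reads, even of data replicated from the primary, so reads must first be allowed on the secondary's connection:

repset:SECONDARY> db.getMongo().setSlaveOk();

repset:SECONDARY> db.test.find()

{ "_id" : ObjectId("5c7a0f3a6698c70ef075f0a8"), "id" : "one", "name" : "wushaoyu" }

repset:SECONDARY> show tables

test

Note: the secondary has replicated the data from the primary. Run the same check on mongodb03.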

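IV. Verify failover: stop the current primary and check high availability

Stop the primary first, then check the status of the secondaries.

1) Stop mongod on the primary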

[root@mongodb01 ~]# ps -ef |grep mongod

mongod : ? :: /usr/bin/mongod -f /etc/mongod.conf

root : pts/ :: grep mongod

[root@mongodb01 ~]# kill -

2) Log in to the other two members and check whether a new primary has been elected

[root@mongodb02 ~]# mongo --host 192.168.1.18

repset:SECONDARY> rs.isMaster()

{

"hosts" : [

"192.168.1.230:27017",

"192.168.1.18:27017",

"192.168.1.247:27017"

],

"setName" : "repset",

"setVersion" : ,

"ismaster" : false,

"secondary" : true,

"primary" : "192.168.1.247:27017",

"me" : "192.168.1.18:27017",

"lastWrite" : {

"opTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"lastWriteDate" : ISODate("2019-03-02T05:16:41Z"),

"majorityOpTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"majorityWriteDate" : ISODate("2019-03-02T05:16:41Z")

},

"maxBsonObjectSize" : ,

"maxMessageSizeBytes" : ,

"maxWriteBatchSize" : ,

"localTime" : ISODate("2019-03-02T05:16:48.433Z"),

"logicalSessionTimeoutMinutes" : ,

"minWireVersion" : ,

"maxWireVersion" : ,

"readOnly" : false,

"ok" : ,

"operationTime" : Timestamp(, ),

"$clusterTime" : {

"clusterTime" : Timestamp(, ),

"signature" : {

"hash" : BinData(,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong()

}

}

}

[root@mongodb03 ~]# mongo --host 192.168.1.247

repset:PRIMARY> rs.isMaster()

{

"hosts" : [

"192.168.1.230:27017",

"192.168.1.18:27017",

"192.168.1.247:27017"

],

"setName" : "repset",

"setVersion" : ,

"ismaster" : true,

"secondary" : false,

"primary" : "192.168.1.247:27017",

"me" : "192.168.1.247:27017",

"electionId" : ObjectId("7fffffff0000000000000002"),

"lastWrite" : {

"opTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"lastWriteDate" : ISODate("2019-03-02T05:19:31Z"),

"majorityOpTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"majorityWriteDate" : ISODate("2019-03-02T05:19:31Z")

},

"maxBsonObjectSize" : ,

"maxMessageSizeBytes" : ,

"maxWriteBatchSize" : ,

"localTime" : ISODate("2019-03-02T05:19:35.282Z"),

"logicalSessionTimeoutMinutes" : ,

"minWireVersion" : ,

"maxWireVersion" : ,

"readOnly" : false,

"ok" : ,

"operationTime" : Timestamp(, ),

"$clusterTime" : {

"clusterTime" : Timestamp(, ),

"signature" : {

"hash" : BinData(,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong()

}

}

}

Note: after the primary went down, mongodb03 was elected as the new primary.
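This failover relies on the majority rule. A member can only become primary if it gets votes from a strict majority of the voting members in the configuration, and members that are down still count toward that total. With three voting members, stopping mongodb01 leaves two reachable voters. That is still a majority (2 of 3). The arithmetic is sketched below with hypothetical names. Real elections also take member priority and how up to date each member's oplog is into account.

```java
// Sketch of the majority arithmetic behind replica set elections.
class ElectionMath {
    // Smallest number of votes that is a strict majority of the voting members.
    static int majority(int votingMembers) {
        return votingMembers / 2 + 1;
    }

    // True if the reachable voters can still form a majority and elect a primary.
    static boolean canElectPrimary(int votingMembers, int reachableVoters) {
        return reachableVoters >= majority(votingMembers);
    }

    public static void main(String[] args) {
        System.out.println(canElectPrimary(3, 2)); // mongodb01 stopped
        System.out.println(canElectPrimary(3, 1)); // two members down
    }
}
```

For this three-member set, canElectPrimary(3, 2) is true and canElectPrimary(3, 1) is false. If a second member also failed, the remaining member would stay a secondary and the set would accept no writes.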

3) Insert data on the new primary mongodb03 and check on mongodb02 that it has been replicated

[root@mongodb03 ~]# mongo --host 192.168.1.247

repset:PRIMARY> use wsy

switched to db wsy

repset:PRIMARY> db.wsy.save({"name":"wsy"})

WriteResult({ "nInserted" : 1 })

[root@mongodb02 ~]# mongo --host 192.168.1.18

repset:SECONDARY> db.getMongo().setSlaveOk();

repset:SECONDARY> show tables

wsy

repset:SECONDARY> db.wsy.find()

{ "_id" : ObjectId("5c7a133007ebf60ac5870bae"), "name" : "wsy" }

The data has been replicated successfully.

4) Start the old primary mongodb01 again and check its status. It rejoins as a secondary of the current primary, and the data inserted while it was down is replicated to it as well.

[root@mongodb01 ~]# /etc/init.d/mongod start

[root@mongodb01 ~]# mongo --host 192.168.1.230

repset:SECONDARY> rs.isMaster()

{

"hosts" : [

"192.168.1.230:27017",

"192.168.1.18:27017",

"192.168.1.247:27017"

],

"setName" : "repset",

"setVersion" : ,

"ismaster" : false,

"secondary" : true,

"primary" : "192.168.1.247:27017",

"me" : "192.168.1.230:27017",

"lastWrite" : {

"opTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"lastWriteDate" : ISODate("2019-03-02T05:32:51Z"),

"majorityOpTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"majorityWriteDate" : ISODate("2019-03-02T05:32:51Z")

},

"maxBsonObjectSize" : ,

"maxMessageSizeBytes" : ,

"maxWriteBatchSize" : ,

"localTime" : ISODate("2019-03-02T05:32:59.225Z"),

"logicalSessionTimeoutMinutes" : ,

"minWireVersion" : ,

"maxWireVersion" : ,

"readOnly" : false,

"ok" : ,

"operationTime" : Timestamp(, ),

"$clusterTime" : {

"clusterTime" : Timestamp(, ),

"signature" : {

"hash" : BinData(,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong()

}

}

}

Allow reads on this secondary:

repset:SECONDARY> db.getMongo().setSlaveOk();

repset:SECONDARY> db.wsy.find()

{ "_id" : ObjectId("5c7a133007ebf60ac5870bae"), "name" : "wsy" }

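Note: data written to the newly elected primary while the old primary was down is also replicated to the old primary once it rejoins.

V. Connecting to the replica set from a Java program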

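import com.mongodb.BasicDBObject;
import com.mongodb.DB;
import com.mongodb.DBCollection;
import com.mongodb.DBCursor;
import com.mongodb.DBObject;
import com.mongodb.MongoClient;
import com.mongodb.ServerAddress;
import java.util.ArrayList;
import java.util.List;

// Uses the legacy com.mongodb.MongoClient API of the MongoDB Java driver 3.x.
public class TestMongoDBReplSet {

    public static void main(String[] args) {
        // Seed list with all three members; the driver discovers the current
        // primary from it and sends writes there.
        List<ServerAddress> addresses = new ArrayList<ServerAddress>();
        addresses.add(new ServerAddress("192.168.1.230", 27017));
        addresses.add(new ServerAddress("192.168.1.18", 27017));
        addresses.add(new ServerAddress("192.168.1.247", 27017));

        MongoClient client = new MongoClient(addresses);
        try {
            DB db = client.getDB("test");
            DBCollection coll = db.getCollection("testdb");

            // Insert a document
            BasicDBObject object = new BasicDBObject();
            object.append("test2", "testval2");
            coll.insert(object);

            // Read back every document in the collection
            DBCursor dbCursor = coll.find();
            while (dbCursor.hasNext()) {
                DBObject dbObject = dbCursor.next();
                System.out.println(dbObject.toString());
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            client.close();
        }
    }
}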
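Instead of building the seed list in code, the same members can be given as a standard connection string. The replicaSet option in that string tells the driver to treat the hosts as one replica set. The helper below is a hypothetical sketch that only builds the string. With the 3.x Java driver, the result could then be passed to new MongoClient(new MongoClientURI(uri)).

```java
import java.util.Arrays;
import java.util.List;

// Builds a mongodb:// connection string listing every member as a seed and
// naming the replica set. Hypothetical helper, not part of the driver.
class ReplSetUri {
    static String build(String replicaSet, List<String> hostPorts) {
        return "mongodb://" + String.join(",", hostPorts) + "/?replicaSet=" + replicaSet;
    }

    public static void main(String[] args) {
        System.out.println(build("repset", Arrays.asList(
                "192.168.1.230:27017", "192.168.1.18:27017", "192.168.1.247:27017")));
    }
}
```

Once authentication is enabled (section VI), the string would also need credentials in a user:password@ prefix, plus authSource=admin if the user was created in the admin database.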

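Failover now appears to work well. Does that make this architecture complete? Not yet; there is still plenty of room for improvement.

VI. Enabling authentication on the replica set

1. Create the keyFile

Purpose of the keyFile: it provides internal authentication between the members of the set. Enabling keyFile authentication also enables client access control (auth), so create a user beforehand to make sure you can still log in afterwards (I had already created one).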

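openssl rand -base64 666 > /root/keyfile

Here 666 is the number of random bytes to generate. They are encoded as 888 base64 characters, and MongoDB accepts key files of 6 to 1024 base64 characters. /root/keyfile is where the file is stored.

The key file must not be readable by group or others: set its permissions to 600 (400 also works).

chmod 600 /root/keyfile

Note: it is safer to get the replica set running first and create the key file afterwards, to avoid problems such as the service failing to start. The same key file must be copied to every member of the set.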
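The same kind of key file can also be produced from Java, for example on a host without openssl. This is a hypothetical sketch that mirrors the openssl and chmod commands above. It base64-encodes 666 random bytes, which gives 888 characters and stays inside MongoDB's 6 to 1024 character limit. It then restricts the file to owner read/write. setPosixFilePermissions only works on POSIX file systems such as the CentOS hosts used here.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.attribute.PosixFilePermissions;
import java.security.SecureRandom;
import java.util.Base64;

// Rough Java counterpart of "openssl rand -base64 666 > keyfile; chmod 600 keyfile".
class KeyFileGen {
    // Random bytes encoded as base64; rejects keys outside MongoDB's 6..1024 character range.
    static String generate(int randomBytes) {
        byte[] buf = new byte[randomBytes];
        new SecureRandom().nextBytes(buf);
        String key = Base64.getEncoder().encodeToString(buf);
        if (key.length() < 6 || key.length() > 1024) {
            throw new IllegalArgumentException("key must be 6..1024 base64 chars, got " + key.length());
        }
        return key;
    }

    // Writes the key, then limits the file to owner read/write (600). POSIX only.
    static void write(Path path, int randomBytes) {
        try {
            Files.write(path, (generate(randomBytes) + "\n").getBytes(StandardCharsets.US_ASCII));
            Files.setPosixFilePermissions(path, PosixFilePermissions.fromString("rw-------"));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        write(Paths.get(args.length > 0 ? args[0] : "keyfile"), 666);
    }
}
```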

2. Update the configuration file (on every member)

security:
  authorization: enabled
  keyFile: /root/keyfile
  clusterAuthMode: keyFile

3. Restart each mongod

[root@ecs-1e54- ~]# mongod -f /etc/mongod.conf

--20T13::11.479+ I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'

about to fork child process, waiting until server is ready for connections.

forked process:

child process started successfully, parent exiting

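Note: start mongod directly with -f and the configuration file, as shown here. I installed MongoDB from the RPM package, so it can normally be started with /etc/init.d/mongod start. With authentication enabled on the replica set, however, that startup method failed with "permission denied" because of how the init script starts the service. A likely cause is that the script runs mongod as the mongod user, which cannot read a key file under /root.

4. Verify by connecting

[root@ecs-2c32 mongodb]# mongo 192.168.1.193:27017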

MongoDB shell version v4.0.0

connecting to: mongodb://192.168.1.193:27017/test

MongoDB server version: 4.0.

repset:PRIMARY> show dbs

--20T13::49.421+ E QUERY [js] Error: listDatabases failed:{

"operationTime" : Timestamp(, ),

"ok" : 0,

"errmsg" : "command listDatabases requires authentication",

"code" : 13,

"codeName" : "Unauthorized",

"$clusterTime" : {

"clusterTime" : Timestamp(, ),

"signature" : {

"hash" : BinData(,"hKSQT5ImwURihIb0w/CeT4vu44E="),

"keyId" : NumberLong("")

}

}

} :

_getErrorWithCode@src/mongo/shell/utils.js::

Mongo.prototype.getDBs@src/mongo/shell/mongo.js::

shellHelper.show@src/mongo/shell/utils.js::

shellHelper@src/mongo/shell/utils.js::

@(shellhelp2)::

As shown above, you must authenticate before you can see any data.

repset:PRIMARY> use admin

switched to db admin

repset:PRIMARY> db.auth('admin','admin')

repset:PRIMARY> show dbs

admin   0.000GB

config  0.000GB

local   0.000GB

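VII. Arbiters

In a replica set only the primary accepts writes; secondaries can be dedicated to serving reads.

Each additional data-bearing secondary adds to the replication load. If you need an extra vote for elections rather than another copy of the data, you can add an arbiter instead.

An arbiter stores no data; it only votes in elections during failover, so it adds no replication load.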
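Whether an arbiter improves availability depends on the vote count. The sketch below, with hypothetical names, computes how many voting members can fail while a majority can still elect a primary. The three data-bearing members of this set plus one arbiter give four voters, so the majority becomes 3. The set still tolerates only one failure, the same as without the arbiter. An arbiter helps most when it turns an even number of voters into an odd one, for example one primary, one secondary and one arbiter.

```java
// Sketch: how many voting members can be lost while a primary can still be elected.
// Arbiters vote but hold no data.
class FaultTolerance {
    static int tolerated(int dataBearingVoters, int arbiters) {
        int voters = dataBearingVoters + arbiters;
        int majority = voters / 2 + 1;
        return voters - majority;
    }

    public static void main(String[] args) {
        System.out.println("3 data-bearing, no arbiter: " + tolerated(3, 0));
        System.out.println("3 data-bearing + 1 arbiter: " + tolerated(3, 1));
        System.out.println("2 data-bearing + 1 arbiter: " + tolerated(2, 1));
    }
}
```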

1) Add 192.168.1.7 as an arbiter

repset:PRIMARY> rs.addArb("192.168.1.7:27017")

{

"ok" : 1,

"operationTime" : Timestamp(, ),

"$clusterTime" : {

"clusterTime" : Timestamp(, ),

"signature" : {

"hash" : BinData(,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong()

}

}

}

2) Check the member information

repset:PRIMARY> rs.isMaster()

{

"hosts" : [

"192.168.1.18:27017",

"192.168.1.247:27017",

"192.168.1.230:27017"

],

"arbiters" : [

"192.168.1.7:27017"

],

"setName" : "repset",

"setVersion" : ,

"ismaster" : true,

"secondary" : false,

"primary" : "192.168.1.18:27017",

"me" : "192.168.1.18:27017",

"electionId" : ObjectId("7fffffff0000000000000003"),

"lastWrite" : {

"opTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"lastWriteDate" : ISODate("2019-03-02T09:38:33Z"),

"majorityOpTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"majorityWriteDate" : ISODate("2019-03-02T09:38:33Z")

},

"maxBsonObjectSize" : ,

"maxMessageSizeBytes" : ,

"maxWriteBatchSize" : ,

"localTime" : ISODate("2019-03-02T09:38:45.054Z"),

"logicalSessionTimeoutMinutes" : ,

"minWireVersion" : ,

"maxWireVersion" : ,

"readOnly" : false,

"ok" : ,

"operationTime" : Timestamp(, ),

"$clusterTime" : {

"clusterTime" : Timestamp(, ),

"signature" : {

"hash" : BinData(,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong()

}

}

}

3) Log in to the arbiter and check its status

repset:ARBITER> db.getMongo().setSlaveOk();

repset:ARBITER> rs.isMaster()

{

"hosts" : [

"192.168.1.18:27017",

"192.168.1.247:27017",

"192.168.1.230:27017"

],

"arbiters" : [

"192.168.1.7:27017"

],

"setName" : "repset",

"setVersion" : ,

"ismaster" : false,

"secondary" : false,

"primary" : "192.168.1.18:27017",

"arbiterOnly" : true,

"me" : "192.168.1.7:27017",

"lastWrite" : {

"opTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"lastWriteDate" : ISODate("2019-03-02T09:43:10Z"),

"majorityOpTime" : {

"ts" : Timestamp(, ),

"t" : NumberLong()

},

"majorityWriteDate" : ISODate("2019-03-02T09:43:10Z")

},

"maxBsonObjectSize" : ,

"maxMessageSizeBytes" : ,

"maxWriteBatchSize" : ,

"localTime" : ISODate("2019-03-02T09:43:14.154Z"),

"logicalSessionTimeoutMinutes" : ,

"minWireVersion" : ,

"maxWireVersion" : ,

"readOnly" : false,

"ok" :

}
