linux(centos8): installing single-node Kubernetes with kubeadm (kubernetes 1.18.3)

I. Preparing to install Kubernetes:

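Note: 刘宏缔的架构森林 (Liu Hongdi's Architecture Forest) is a blog focused on software architecture. Address: https://www.cnblogs.com/architectforest

The corresponding source code is available here: https://github.com/liuhongdi/

Note: author: 刘宏缔 (Liu Hongdi), email: 371125307@qq.com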

II. Install kubelet, kubectl and kubeadm on the kubemaster server

[root@kubemaster ~]# vi /etc/yum.repos.d/kubernetes.repo

[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
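If you would rather not edit the file interactively with vi, the same repository definition can be written from a script. This is a minimal sketch that assumes a POSIX shell. `write_k8s_repo` is a hypothetical helper name, and the target path is a parameter so you can test it on a scratch file before writing to /etc/yum.repos.d/ as root:

```sh
#!/bin/sh
# Hypothetical helper: write the Aliyun Kubernetes yum repo definition
# shown above. Defaults to the real location (requires root); pass a
# different path to try it on a scratch file first.
write_k8s_repo() {
    target="${1:-/etc/yum.repos.d/kubernetes.repo}"
    # The quoted 'EOF' prevents any expansion inside the here-document.
    cat > "$target" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}

# Usage on the server (as root):
#   write_k8s_repo
#   dnf makecache
```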

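kubelet: a daemon managed by systemd that starts pods and their containers. In a kubeadm cluster it is the only Kubernetes component that runs directly on the host as a system service. The control-plane components (apiserver, scheduler, controller-manager, etcd) run as containers that kubelet starts.

kubeadm: bootstraps the cluster (kubeadm init, kubeadm join). Once the cluster is up, it is not needed for day-to-day operation.

kubectl: the Kubernetes command-line tool and the main tool for managing the cluster, for example pods and services.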

[root@kubemaster ~]# dnf install kubectl kubelet kubeadm

[root@kubemaster ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.3", GitCommit:"2e7996e3e2712684bc73f0dec0200d64eec7fe40",

GitTreeState:"clean", BuildDate:"2020-05-20T12:52:00Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}

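The connection to the server localhost:8080 was refused - did you specify the right host or port?

(This error is expected at this point. The cluster has not been initialized yet and no kubeconfig exists, so kubectl falls back to localhost:8080. Only the client version printed above matters here.)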

[root@kubemaster ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.3", GitCommit:"2e7996e3e2712684bc73f0dec0200d64eec7fe40",

GitTreeState:"clean", BuildDate:"2020-05-20T12:49:29Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}

[root@kubemaster ~]# kubelet --version

Kubernetes v1.18.3

[root@kubemaster ~]# systemctl enable kubelet.service

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

[root@kubemaster ~]# systemctl is-enabled kubelet.service

enabled
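Running `dnf install kubectl kubelet kubeadm` without a version installs whatever is newest in the repository. Check that the three packages agree before running `kubeadm init`. To reproduce this guide exactly, you can pin the version instead, for example `dnf install kubelet-1.18.3 kubeadm-1.18.3 kubectl-1.18.3`, assuming the repository still carries it. The helpers below are a sketch with hypothetical names. They use `kubectl version --client` so that kubectl does not try to reach the not-yet-existing API server:

```sh
#!/bin/sh
# Hypothetical helper: extract the first vMAJOR.MINOR.PATCH token from a
# version string, e.g. "Kubernetes v1.18.3" (kubelet --version) or the
# GitVersion:"v1.18.3" field printed by kubeadm version / kubectl version.
extract_k8s_version() {
    printf '%s\n' "$1" | grep -o 'v[0-9][0-9]*\.[0-9][0-9]*\.[0-9][0-9]*' | head -n 1
}

# Hypothetical helper: succeed only if all three arguments are non-empty
# and identical.
same_version() {
    [ -n "$1" ] && [ "$1" = "$2" ] && [ "$2" = "$3" ]
}

# Hypothetical helper: compare the versions reported by the three
# installed binaries. Only meaningful on a host where all three exist.
check_k8s_versions() {
    kubelet_v=$(extract_k8s_version "$(kubelet --version)")
    kubeadm_v=$(extract_k8s_version "$(kubeadm version)")
    kubectl_v=$(extract_k8s_version "$(kubectl version --client)")
    if same_version "$kubelet_v" "$kubeadm_v" "$kubectl_v"; then
        echo "all three at $kubelet_v"
    else
        echo "version mismatch: kubelet=$kubelet_v kubeadm=$kubeadm_v kubectl=$kubectl_v" >&2
        return 1
    fi
}
```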

III. Initialize Kubernetes with kubeadm

[root@kubemaster ~]# kubeadm init --kubernetes-version=1.18.3 --apiserver-advertise-address=192.168.219.130 \
    --image-repository registry.aliyuncs.com/google_containers
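The same settings can also be kept in a kubeadm configuration file, which is easier to review and re-run than a long command line. This sketch makes a few assumptions. It uses the `kubeadm.k8s.io/v1beta2` config API, which is the version kubeadm 1.18 reads. `write_kubeadm_config` and the file name `kubeadm-config.yaml` are hypothetical. The IP address and image repository are the ones from the command above:

```sh
#!/bin/sh
# Hypothetical helper: write a kubeadm config roughly equivalent to
#   --kubernetes-version=1.18.3
#   --apiserver-advertise-address=192.168.219.130
#   --image-repository registry.aliyuncs.com/google_containers
write_kubeadm_config() {
    target="${1:-kubeadm-config.yaml}"
    cat > "$target" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.219.130
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.3
imageRepository: registry.aliyuncs.com/google_containers
EOF
}

# Usage (as root on kubemaster):
#   write_kubeadm_config kubeadm-config.yaml
#   kubeadm config images pull --config kubeadm-config.yaml   # optional: pre-pull images
#   kubeadm init --config kubeadm-config.yaml
```

Pre-pulling is optional. As the ps output below shows, kubeadm init otherwise pulls the images (etcd and others) itself, which makes the init step slow.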

[root@kubemaster ~]# ps auxfww

...

root 1530 0.0 0.2 152904 10540 ? Ss 13:41 0:00 \_ sshd: root [priv]

root 1666 0.0 0.1 152904 5392 ? S 13:41 0:00 | \_ sshd: root@pts/0

root 1673 0.0 0.1 25588 3980 pts/0 Ss 13:41 0:00 | \_ -bash

root 8076 0.0 0.8 142068 32836 pts/0 Sl+ 14:22 0:00 | \_ kubeadm init --kubernetes-version=1.18.3 --apiserver-advertise-address=192.168.219.130 --image-repository registry.aliyuncs.com/google_containers

root 8450 0.3 1.6 711476 63136 pts/0 Sl+ 14:24 0:00 | \_ docker pull registry.aliyuncs.com/google_containers/etcd:3.4.3-0

…

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Configure the network:

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.219.130:6443 --token up139x.98qlng4m7qk61p0z \
    --discovery-token-ca-cert-hash sha256:c718e29ccb1883715489a3fdf53dd810a7764ad038c50fd62a2246344a4d9a73
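The token in the join command is short-lived, because kubeadm bootstrap tokens expire after 24 hours by default. It can be useful to pull the token and CA hash out of a saved copy of the init output. This sketch assumes the output was saved to a file, for example with `kubeadm init ... | tee kubeadm-init.log`; the helper names are hypothetical. On a live control plane, `kubeadm token create --print-join-command` prints a fresh join command instead:

```sh
#!/bin/sh
# Hypothetical helper: read kubeadm init output on stdin and print the
# first bootstrap token found (6 chars, a dot, 16 chars; lowercase
# letters and digits).
join_token() {
    grep -o '[a-z0-9]\{6\}\.[a-z0-9]\{16\}' | head -n 1
}

# Hypothetical helper: read kubeadm init output on stdin and print the CA
# public-key hash passed to --discovery-token-ca-cert-hash.
join_ca_hash() {
    grep -o 'sha256:[0-9a-f]\{64\}' | head -n 1
}

# Usage:
#   join_token   < kubeadm-init.log
#   join_ca_hash < kubeadm-init.log
```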

[root@kubemaster ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.18.3 3439b7546f29 3 weeks ago 117MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.18.3 7e28efa976bd 3 weeks ago 173MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.18.3 da26705ccb4b 3 weeks ago 162MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.18.3 76216c34ed0c 3 weeks ago 95.3MB

registry.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 4 months ago 683kB

registry.aliyuncs.com/google_containers/coredns 1.6.7 67da37a9a360 4 months ago 43.8MB

registry.aliyuncs.com/google_containers/etcd 3.4.3-0 303ce5db0e90 7 months ago 288MB

[root@kubemaster ~]# mkdir -p $HOME/.kube

[root@kubemaster ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@kubemaster ~]# chown $(id -u):$(id -g) $HOME/.kube/config

[root@kubemaster ~]# ll .kube/config

-rw------- 1 root root 5451 6月 16 18:25 .kube/config
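The three commands above can be wrapped in a small function, for example when setting up kubectl for another account. This is a sketch; `setup_kubeconfig` is a hypothetical name. It drops the `-i` flag of `cp` so it never stops to ask, which means an existing config is overwritten silently. For a quick root-only session, `export KUBECONFIG=/etc/kubernetes/admin.conf` is a common alternative:

```sh
#!/bin/sh
# Hypothetical helper: copy the admin kubeconfig into a user's ~/.kube,
# owned by the current user and readable only by them.
#   $1  source kubeconfig (default /etc/kubernetes/admin.conf)
#   $2  target directory  (default $HOME/.kube)
setup_kubeconfig() {
    src="${1:-/etc/kubernetes/admin.conf}"
    dest_dir="${2:-$HOME/.kube}"
    mkdir -p "$dest_dir" || return 1
    cp "$src" "$dest_dir/config" || return 1          # no -i: overwrites silently
    chown "$(id -u):$(id -g)" "$dest_dir/config" || return 1
    chmod 600 "$dest_dir/config"
}
```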

IV. Install the network plugin

[root@kubemaster ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

kubemaster NotReady master 5m39s v1.18.3

[root@kubemaster ~]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7ff77c879f-ttnr9 0/1 Pending 0 6m41s

kube-system coredns-7ff77c879f-x5vps 0/1 Pending 0 6m41s

kube-system etcd-kubemaster 1/1 Running 0 6m40s

kube-system kube-apiserver-kubemaster 1/1 Running 0 6m40s

kube-system kube-controller-manager-kubemaster 1/1 Running 0 6m40s

kube-system kube-proxy-gs7q7 1/1 Running 0 6m40s

kube-system kube-scheduler-kubemaster 1/1 Running 0 6m40s
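The Pending coredns pods are expected. CoreDNS cannot start until a pod network add-on is installed, which is also why the node reports NotReady. To spot such pods in a longer listing, you can filter the output of `kubectl get pod --all-namespaces`. This is a sketch; `not_ready_pods` is a hypothetical name, and it assumes the default column layout shown above (NAMESPACE NAME READY STATUS ...):

```sh
#!/bin/sh
# Hypothetical helper: read `kubectl get pod --all-namespaces` output on
# stdin and print "namespace/name status" for every pod that is not
# Running or whose READY column is not n/n. Pods of finished Jobs
# (status Completed) are reported as well.
not_ready_pods() {
    awk 'NR > 1 {
        split($3, ready, "/")
        if ($4 != "Running" || ready[1] != ready[2])
            print $1 "/" $2 " " $4
    }'
}

# Usage:
#   kubectl get pod --all-namespaces | not_ready_pods
```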

[root@kubemaster ~]# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

[root@kubemaster ~]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-76d4774d89-nnp4h 1/1 Running 0 20m

kube-system calico-node-xmmj4 1/1 Running 0 20m

kube-system coredns-7ff77c879f-ttnr9 1/1 Running 0 36m

kube-system coredns-7ff77c879f-x5vps 1/1 Running 0 36m

kube-system etcd-kubemaster 1/1 Running 1 36m

kube-system kube-apiserver-kubemaster 1/1 Running 1 36m

kube-system kube-controller-manager-kubemaster 1/1 Running 1 36m

kube-system kube-proxy-gs7q7 1/1 Running 1 36m

kube-system kube-scheduler-kubemaster 1/1 Running 1 36m

[root@kubemaster ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

kubemaster Ready master 41m v1.18.3

The node status has changed to Ready.
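Instead of re-running `kubectl get node` until the status changes, you can wait for it. `kubectl wait --for=condition=Ready node/kubemaster --timeout=300s` does this directly. The helper below is a sketch for parsing the plain `kubectl get node` output shown above, for example in a script that also needs to report the current state. `node_status` is a hypothetical name:

```sh
#!/bin/sh
# Hypothetical helper: read `kubectl get node` output on stdin and print
# the STATUS column for the node named in $1. Exits non-zero if the node
# is not listed.
node_status() {
    awk -v node="$1" '
        NR > 1 && $1 == node { print $2; found = 1 }
        END { exit found ? 0 : 1 }'
}

# Usage:
#   kubectl get node | node_status kubemaster          # Ready / NotReady
#   kubectl wait --for=condition=Ready node/kubemaster --timeout=300s
```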

[root@kubemaster ~]# docker images | grep calico

calico/node v3.14.1 04a9b816c753 2 weeks ago 263MB

calico/pod2daemon-flexvol v3.14.1 7f93af2e7e11 2 weeks ago 112MB

calico/cni v3.14.1 35a7136bc71a 2 weeks ago 225MB

calico/kube-controllers v3.14.1 ac08a3af350b 2 weeks ago 52.8MB

[root@kubemaster ~]# more /etc/docker/daemon.json

{
  "registry-mirrors": ["https://o3trwnyj.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}

[root@kubemaster ~]# systemctl restart docker
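The `exec-opts` entry switches Docker's cgroup driver to systemd. The kubeadm documentation recommends that the container runtime and the kubelet use the same cgroup driver, with systemd on systemd-based hosts such as CentOS 8. kubeadm's preflight checks print a warning while Docker is still on cgroupfs, so this is usually configured before `kubeadm init`. Below is a sketch of writing the file from a script. `write_docker_daemon_json` is a hypothetical helper. The registry mirror is optional because the address above is the author's personal Aliyun accelerator, and you would use your own:

```sh
#!/bin/sh
# Hypothetical helper: write daemon.json with the systemd cgroup driver
# and, optionally, a registry mirror.
#   $1  target file (default /etc/docker/daemon.json)
#   $2  optional registry mirror URL
write_docker_daemon_json() {
    target="${1:-/etc/docker/daemon.json}"
    mirror="$2"
    if [ -n "$mirror" ]; then
        printf '{\n  "registry-mirrors": ["%s"],\n  "exec-opts": ["native.cgroupdriver=systemd"]\n}\n' \
            "$mirror" > "$target"
    else
        printf '{\n  "exec-opts": ["native.cgroupdriver=systemd"]\n}\n' > "$target"
    fi
}

# Usage (as root), then restart Docker and verify the driver:
#   write_docker_daemon_json /etc/docker/daemon.json https://<your-id>.mirror.aliyuncs.com
#   systemctl restart docker
#   docker info --format '{{.CgroupDriver}}'    # expect: systemd
```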

V. Enable single-node mode: let the master node also act as a worker node that can run pods

1. Remove the existing taint

[root@kubemaster ~]# kubectl taint nodes kubemaster node-role.kubernetes.io/master-

node/kubemaster untainted

[root@kubemaster ~]# kubectl describe node kubemaster

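To restore the taint later, so that ordinary pods are no longer scheduled on the master, run:

[root@kubemaster ~]# kubectl taint nodes kubemaster node-role.kubernetes.io/master=:NoSchedule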

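The possible taint effects are:

NoSchedule: new pods that do not tolerate the taint are never scheduled onto the node

PreferNoSchedule: the scheduler tries to avoid placing pods that do not tolerate the taint on the node, but may still do so

NoExecute: in addition to blocking scheduling, pods already running on the node that do not tolerate the taint are evicted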

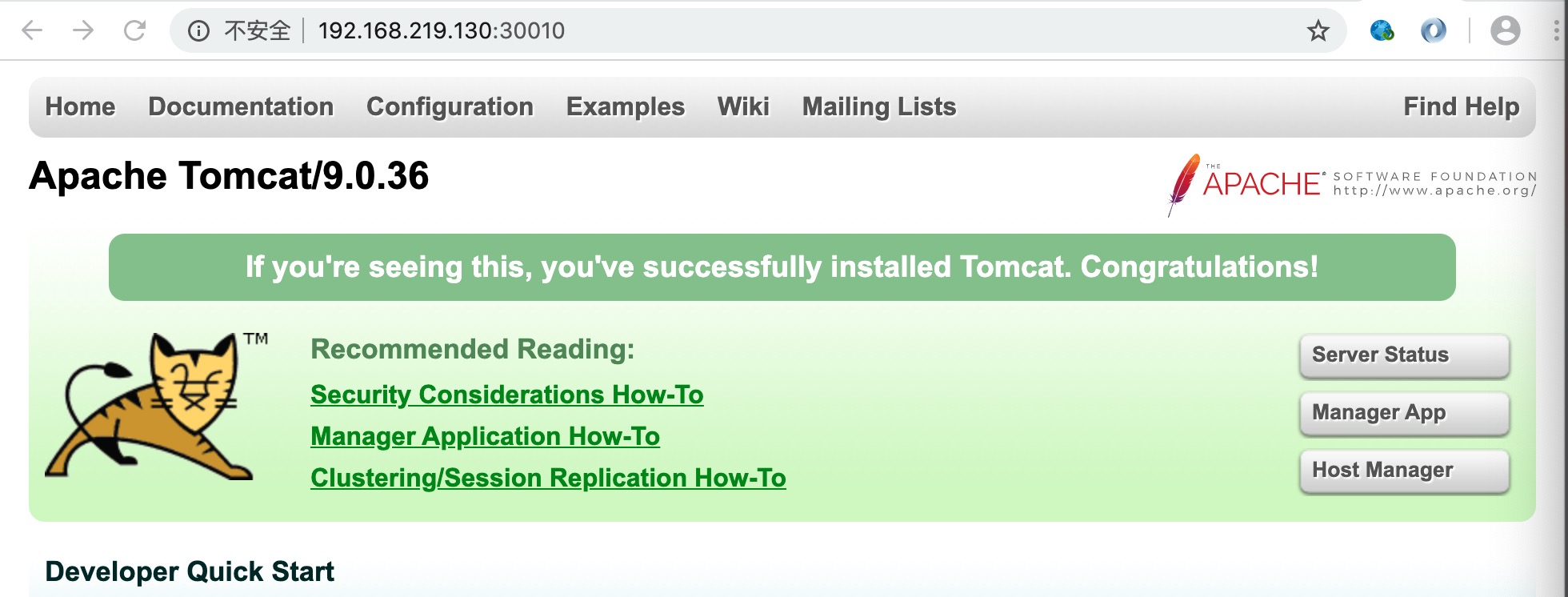
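Removing the taint opens the master to every pod. An alternative that keeps the taint is to give only selected pods a toleration for it. Below is a sketch that prints such a toleration block, indented to sit under `spec.template.spec` of a ReplicationController manifest like the one in the next section. `print_master_toleration` is a hypothetical helper. The key and effect are the ones used by the taint commands above:

```sh
#!/bin/sh
# Hypothetical helper: print a toleration for the kubeadm master taint
# (node-role.kubernetes.io/master:NoSchedule), indented by 6 spaces so
# it lines up with "containers:" under spec.template.spec.
# operator: Exists matches the taint regardless of its (empty) value.
print_master_toleration() {
    cat <<'EOF'
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
EOF
}
```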

VI. Test: run a tomcat container on the master

[root@kubemaster k8s]# vi tomcat-rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: tomcat-demo
spec:
  replicas: 1
  selector:
    app: tomcat-demo
  template:
    metadata:
      labels:
        app: tomcat-demo
    spec:
      containers:
      - name: tomcat-demo
        image: tomcat
        ports:
        - containerPort: 8080
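ReplicationController still works in 1.18, but it is the legacy controller, and the Kubernetes documentation recommends a Deployment (which manages ReplicaSets) for new workloads. Below is a sketch of an equivalent Deployment, written from a script. `write_tomcat_deployment` and the file name `tomcat-deploy.yaml` are hypothetical. Use it instead of the RC, not alongside it, so that only one controller manages the tomcat-demo pods:

```sh
#!/bin/sh
# Hypothetical helper: write an apps/v1 Deployment equivalent to the
# tomcat-demo ReplicationController above. apps/v1 requires an explicit
# selector.matchLabels that matches the pod template labels.
write_tomcat_deployment() {
    target="${1:-tomcat-deploy.yaml}"
    cat > "$target" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tomcat-demo
  template:
    metadata:
      labels:
        app: tomcat-demo
    spec:
      containers:
      - name: tomcat-demo
        image: tomcat
        ports:
        - containerPort: 8080
EOF
}

# Usage:
#   write_tomcat_deployment tomcat-deploy.yaml
#   kubectl apply -f tomcat-deploy.yaml
```

The pods keep the label app=tomcat-demo, so the NodePort Service defined later in this guide selects them the same way.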

[root@kubemaster k8s]# kubectl apply -f tomcat-rc.yaml

replicationcontroller/tomcat-demo created

[root@kubemaster k8s]# kubectl get pods

NAME READY STATUS RESTARTS AGE

tomcat-demo-7pnzw 0/1 ContainerCreating 0 23s

[root@kubemaster k8s]# kubectl get pods

NAME READY STATUS RESTARTS AGE

tomcat-demo-7pnzw 1/1 Running 0 6m43s

[root@kubemaster k8s]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

tomcat-demo-7pnzw 1/1 Running 0 10m 172.16.141.7 kubemaster <none> <none>
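Rather than copying the pod IP by hand from the wide listing, you can extract it. `kubectl get pods -l app=tomcat-demo -o jsonpath='{.items[0].status.podIP}'` asks the API server for it directly. The helper below is a sketch that parses the `-o wide` text instead. Its name is hypothetical, and it assumes the 1.18 column layout shown above, where IP is the sixth column. The pod IP changes whenever the RC replaces the pod:

```sh
#!/bin/sh
# Hypothetical helper: read `kubectl get pods -o wide` output on stdin and
# print the IP of the pod named $1 (6th column in the layout above).
pod_ip() {
    awk -v pod="$1" 'NR > 1 && $1 == pod { print $6; exit }'
}

# Usage:
#   ip=$(kubectl get pods -o wide | pod_ip tomcat-demo-7pnzw)
#   curl "http://$ip:8080/"
```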

[root@kubemaster k8s]# curl http://172.16.141.7:8080

<!doctype html><html lang="en"><head><title>HTTP Status 404 – Not Found</title>

<style type="text/css">body {font-family:Tahoma,Arial,sans-serif;} h1, h2, h3, b {color:white;background-color:#525D76;}

h1 {font-size:22px;} h2 {font-size:16px;} h3 {font-size:14px;} p {font-size:12px;} a {color:black;}

.line {height:1px;background-color:#525D76;border:none;}</style></head>

<body><h1>HTTP Status 404 – Not Found</h1><hr class="line" /><p><b>Type</b> Status Report</p>

<p><b>Description</b> The origin server did not find a current representation for the target resource

or is not willing to disclose that one exists.</p>

<hr class="line" /><h3>Apache Tomcat/9.0.36</h3>

</body></html>

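The 404 is returned because the webapps directory is empty, so there is nothing to display. In the official tomcat image, the default applications ship in webapps.dist instead.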

[root@kubemaster k8s]# docker exec -it k8s_tomcat-demo_tomcat-demo-7pnzw_default_b59ef37a-6ffe-4ef1-b6dd-1b2186039294_0 /bin/bash

root@tomcat-demo-7pnzw:/usr/local/tomcat# cp -axv webapps.dist/* webapps/
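You can also apply the same fix through the API server with kubectl exec, which does not require knowing the Docker container name. This sketch assumes the Tomcat home directory /usr/local/tomcat seen in the prompt above. `copy_tomcat_default_apps` is hypothetical, and the KUBECTL variable exists only so the command can be swapped out, for example for a dry run. The copy lives in the container's writable layer, so it is lost when the RC replaces the pod. A permanent fix belongs in the image or in a volume:

```sh
#!/bin/sh
# Hypothetical helper: copy Tomcat's default web apps from webapps.dist
# into webapps inside the given pod. "webapps.dist/." also copies hidden
# files and does not rely on a shell glob.
#   $1        pod name, e.g. tomcat-demo-7pnzw
#   $KUBECTL  command to use (defaults to kubectl)
copy_tomcat_default_apps() {
    ${KUBECTL:-kubectl} exec "$1" -- \
        sh -c 'cd /usr/local/tomcat && cp -a webapps.dist/. webapps/'
}

# Usage:
#   copy_tomcat_default_apps tomcat-demo-7pnzw
#   kubectl exec -it tomcat-demo-7pnzw -- /bin/bash   # interactive shell, like docker exec
```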

[root@kubemaster ~]# curl http://172.16.141.7:8080/

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<title>Apache Tomcat/9.0.36</title>

<link href="favicon.ico" rel="icon" type="image/x-icon" />

<link href="favicon.ico" rel="shortcut icon" type="image/x-icon" />

<link href="tomcat.css" rel="stylesheet" type="text/css" />

</head> <body>

<div id="wrapper">

<div id="navigation" class="curved container">

<span id="nav-home"><a href="https://tomcat.apache.org/">Home</a></span>

<span id="nav-hosts"><a href="/docs/">Documentation</a></span>

<span id="nav-config"><a href="/docs/config/">Configuration</a></span>

<span id="nav-examples"><a href="/examples/">Examples</a></span>

<span id="nav-wiki"><a href="https://wiki.apache.org/tomcat/FrontPage">Wiki</a></span>

<span id="nav-lists"><a href="https://tomcat.apache.org/lists.html">Mailing Lists</a></span>

<span id="nav-help"><a href="https://tomcat.apache.org/findhelp.html">Find Help</a></span>

<br class="separator" />

</div>

…

The default Tomcat page is now displayed.

[root@kubemaster k8s]# vi tomcat-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: tomcat-demo
spec:
  type: NodePort
  ports:
  - port: 8080
    nodePort: 30010
  selector:
    app: tomcat-demo

[root@kubemaster k8s]# kubectl apply -f tomcat-svc.yaml

service/tomcat-demo created

[root@kubemaster k8s]# kubectl get service -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 18h <none>

tomcat-demo NodePort 10.111.234.185 <none> 8080:30010/TCP 35s app=tomcat-demo
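Because the Service is of type NodePort, Tomcat is also reachable from outside the cluster on port 30010 of any node's IP, for example http://192.168.219.130:30010/, assuming the host firewall (if enabled) allows the port. By default, the API server only accepts nodePort values in the range 30000-32767 (its `--service-node-port-range` flag). Below is a sketch of a pre-check before writing a manifest. `is_valid_nodeport` is hypothetical and hard-codes that default range:

```sh
#!/bin/sh
# Hypothetical helper: succeed if $1 is an integer inside the default
# NodePort range 30000-32767 (kube-apiserver --service-node-port-range).
is_valid_nodeport() {
    case "$1" in
        ''|*[!0-9]*) return 1 ;;   # empty or not all digits
    esac
    [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Usage:
#   is_valid_nodeport 30010 && curl http://192.168.219.130:30010/
```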

VII. Check the Linux version

[root@kubemaster ~]# cat /etc/redhat-release

CentOS Linux release 8.2.2004 (Core)

[root@kubemaster ~]# uname -r

4.18.0-193.el8.x86_64
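When following this guide on another machine, a script can first check that it runs on the same major release. This is a sketch; `rhel_major_version` is hypothetical and simply takes the first number after "release" in /etc/redhat-release:

```sh
#!/bin/sh
# Hypothetical helper: print the major release number from a
# redhat-release line such as "CentOS Linux release 8.2.2004 (Core)".
rhel_major_version() {
    sed -n 's/.*release \([0-9][0-9]*\).*/\1/p'
}

# Usage:
#   [ "$(rhel_major_version < /etc/redhat-release)" = 8 ] || echo "not an EL8 host" >&2
```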
