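Common RAID Levels on Linux and How to Create Software RAID

Depending on the level, RAID can substantially improve disk performance, reliability, or both, so it is a technology well worth learning! This article introduces several common RAID levels and shows how to create each one as a software RAID on Linux.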

mdadm

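- Create a software RAID

mdadm -C -v /dev/ARRAY -lLEVEL -nCOUNT MEMBER_DISKS [-xCOUNT SPARE_DISKS]

-C: create a new array (--create)

-v: print more detail (--verbose)

-l: set the RAID level (--level=)

-n: number of active devices in the array (--raid-devices=)

-x: number of spare devices in the initial array (--spare-devices=); a spare (hot spare) automatically takes over when an active disk fails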

- View detailed information

mdadm -D /dev/ARRAY

-D: print the details of one or more md devices (--detail)

- Check the RAID status

cat /proc/mdstat

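- Simulate a failure

mdadm -f /dev/ARRAY DISK

-f: mark the disk as faulty (--fail)

- Remove a failed disk

mdadm -r /dev/ARRAY DISK

-r: remove the disk from the array (--remove)

- Add a new disk as a hot spare

mdadm -a /dev/ARRAY DISK

-a: add the disk to the array (--add); on a healthy array it becomes a spare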
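As a rough illustration of the create syntax, the sketch below assembles an mdadm -C command line and prints it instead of running it. The function name mdadm_create_cmd and the "--" separator between active members and spares are assumptions made for this example; they are not part of mdadm.

```shell
# Hypothetical dry-run helper: prints an mdadm create command, never runs it.
# Usage: mdadm_create_cmd ARRAY LEVEL MEMBER... [-- SPARE...]
mdadm_create_cmd() {
    array=$1; level=$2; shift 2
    members=""; spares=""; n=0; x=0; in_spares=0
    for dev in "$@"; do
        if [ "$dev" = "--" ]; then
            in_spares=1          # everything after "--" is a hot spare
            continue
        fi
        if [ "$in_spares" -eq 0 ]; then
            members="$members $dev"; n=$((n + 1))
        else
            spares="$spares $dev"; x=$((x + 1))
        fi
    done
    cmd="mdadm -C -v $array -l$level -n$n$members"
    if [ "$x" -gt 0 ]; then
        cmd="$cmd -x$x$spares"   # only add -x when spares were given
    fi
    printf '%s\n' "$cmd"
}
```

For example, mdadm_create_cmd /dev/md1 1 /dev/sdb1 /dev/sdc1 -- /dev/sdd1 prints a command equivalent to the one used in the RAID1 lab.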

RAID0

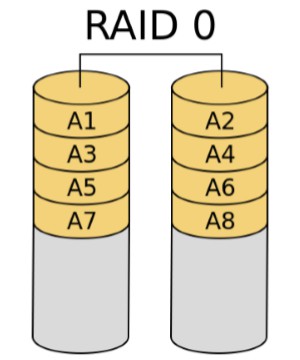

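RAID0, commonly called striping, combines two or more disks into one logical disk whose capacity is the sum of all members (for members of equal size). Because data is spread across several disks, reads and writes can proceed in parallel, which raises throughput. There is no redundancy and no fault tolerance, however: if any one physical disk fails, the data on the whole array is lost. RAID0 therefore suits workloads with large data volumes and low safety requirements, such as storing audio and video files.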
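To make striping concrete, here is a minimal sketch of how a logical chunk number could map to a member disk and a chunk offset on that disk. It assumes equal-sized members and plain round-robin placement; the function name raid0_locate is hypothetical, and the real md driver handles more cases (for example members of different sizes).

```shell
# Simplified round-robin model of RAID0 chunk placement (an assumption,
# not the md driver's actual code).
# Usage: raid0_locate CHUNK_INDEX NUMBER_OF_DISKS
# Prints: "<disk index> <chunk offset on that disk>"
raid0_locate() {
    chunk=$1; ndisks=$2
    printf '%d %d\n' $(( chunk % ndisks )) $(( chunk / ndisks ))
}
```

With two disks, consecutive chunks alternate between disk 0 and disk 1, which is why a large sequential transfer can keep both disks busy at once.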

Lab: create a RAID0 array, format it, and mount it.

1. Add two 20 GB disks, partition them, and set the partition type ID to fd.

[root@localhost ~]# fdisk -l | grep raid

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdc1 2048 41943039 20970496 fd Linux raid autodetect

2. Create the RAID0 array.

[root@localhost ~]# mdadm -C -v /dev/md0 -l0 -n2 /dev/sd{b,c}1

mdadm: chunk size defaults to 512K

mdadm: Fail create md0 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.

3. Check the array status in /proc/mdstat.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid0]

md0 : active raid0 sdc1[1] sdb1[0]

41906176 blocks super 1.2 512k chunks

unused devices: <none>

4. View the RAID0 details.

[root@localhost ~]# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Sun Aug 25 15:28:13 2019

Raid Level : raid0

Array Size : 41906176 (39.96 GiB 42.91 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 15:28:13 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Consistency Policy : none

Name : localhost:0 (local to host localhost)

UUID : 7ff54c57:b99a59da:6b56c6d5:a4576ccf

Events : 0

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1
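The Array Size line gives one size three ways: the raw figure is in KiB, followed by the same value in GiB (binary) and GB (decimal). The sketch below repeats that conversion with awk; the function name kib_to_human is an assumption for this example.

```shell
# Convert a size in KiB to the "GiB / GB" pair that mdadm -D prints.
# Usage: kib_to_human SIZE_IN_KIB
kib_to_human() {
    awk -v kib="$1" 'BEGIN {
        gib = kib / (1024 * 1024)          # binary gigabytes
        gb  = kib * 1024 / 1000000000      # decimal gigabytes
        printf "%.2f GiB %.2f GB\n", gib, gb
    }'
}
```

Running kib_to_human 41906176 reproduces the 39.96 GiB and 42.91 GB shown in the output.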

5. Format the array.

[root@localhost ~]# mkfs.xfs /dev/md0

meta-data=/dev/md0 isize=512 agcount=16, agsize=654720 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=10475520, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=5120, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0
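The sunit and swidth values in the data section appear to follow from the array geometry, assuming mkfs.xfs picked up the stripe layout from the md device: sunit is the 512K chunk expressed in 4096-byte filesystem blocks, and swidth is sunit times the number of data-bearing disks. A small sketch of that arithmetic follows; the function name xfs_stripe_geometry is hypothetical.

```shell
# Derive XFS stripe unit/width (in filesystem blocks) from md geometry.
# Usage: xfs_stripe_geometry CHUNK_KIB DATA_DISKS BLOCK_BYTES
xfs_stripe_geometry() {
    chunk_kib=$1; data_disks=$2; block_bytes=$3
    sunit=$(( chunk_kib * 1024 / block_bytes ))   # one chunk, in blocks
    swidth=$(( sunit * data_disks ))              # one full stripe, in blocks
    printf 'sunit=%d swidth=%d blks\n' "$sunit" "$swidth"
}
```

For this two-disk RAID0 with a 512K chunk, xfs_stripe_geometry 512 2 4096 gives the same sunit=128 and swidth=256 as the mkfs.xfs output.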

6. Mount and use it.

[root@localhost ~]# mkdir /mnt/md0

[root@localhost ~]# mount /dev/md0 /mnt/md0/

[root@localhost ~]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 17G 1013M 16G 6% /

devtmpfs devtmpfs 901M 0 901M 0% /dev

tmpfs tmpfs 912M 0 912M 0% /dev/shm

tmpfs tmpfs 912M 8.7M 904M 1% /run

tmpfs tmpfs 912M 0 912M 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 143M 872M 15% /boot

tmpfs tmpfs 183M 0 183M 0% /run/user/0

/dev/md0 xfs 40G 33M 40G 1% /mnt/md0

RAID1

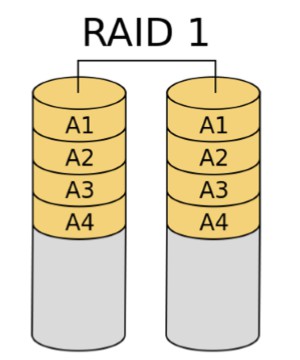

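RAID1, commonly called mirroring, needs at least two disks and stores identical data on each of them to provide redundancy. Reads can be somewhat faster because either disk can serve them; writes are in theory as fast as a single disk but in practice slightly slower, because every write must go to all members. Of the levels covered here it offers the strongest fault tolerance: the array keeps working as long as one disk is healthy. The price is the lowest capacity utilization, only 50% with two disks, and therefore the highest cost per usable gigabyte. RAID1 suits data with very high safety requirements, such as database data files.

Lab: create a RAID1 array, format it, mount it, simulate a failure, and add a hot spare again.

1. Add three 20 GB disks, partition them, and set the partition type ID to fd.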

[root@localhost ~]# fdisk -l | grep raid

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdc1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdd1 2048 41943039 20970496 fd Linux raid autodetect

2. Create the RAID1 array with one hot spare.

[root@localhost ~]# mdadm -C -v /dev/md1 -l1 -n2 /dev/sd{b,c}1 -x1 /dev/sdd1

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 20953088K

Continue creating array? y

mdadm: Fail create md1 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

3. Check the array status in /proc/mdstat.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md1 : active raid1 sdd1[2](S) sdc1[1] sdb1[0]

20953088 blocks super 1.2 [2/2] [UU]

[========>............] resync = 44.6% (9345792/20953088) finish=0.9min speed=203996K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md1 : active raid1 sdd1[2](S) sdc1[1] sdb1[0]

20953088 blocks super 1.2 [2/2] [UU]

unused devices: <none>
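In /proc/mdstat, the bracketed string at the end of the blocks line shows one character per active slot: U for a working member and _ for a missing or failed one. A minimal sketch that lists degraded arrays from mdstat-formatted text on standard input is shown below; the function name mdstat_degraded is an assumption for this example.

```shell
# Print the name of every array whose status string (e.g. [_U]) contains "_".
# Reads /proc/mdstat-style text from stdin.
mdstat_degraded() {
    awk '
        /^md[0-9]+ :/ { name = $1 }        # remember the current array name
        /\[[U_]+\]/ {                      # line carrying the [UU] status
            if (index($NF, "_") > 0) print name
        }
    '
}
```

On a live system it could be used as mdstat_degraded < /proc/mdstat; no output means every listed array has all of its members.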

4. View the RAID1 details.

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Aug 25 15:38:44 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sun Aug 25 15:39:24 2019

State : clean, resyncing

Active Devices : 2

Working Devices : 3

Failed Devices : 0

Spare Devices : 1

Consistency Policy : resync

Resync Status : 40% complete

Name : localhost:1 (local to host localhost)

UUID : b921e8b3:a18e2fc9:11706ba4:ed633dfd

Events : 6

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

2 8 49 - spare /dev/sdd1

5. Format the array.

[root@localhost ~]# mkfs.xfs /dev/md1

meta-data=/dev/md1 isize=512 agcount=4, agsize=1309568 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=5238272, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

6. Mount and use it.

[root@localhost ~]# mkdir /mnt/md1

[root@localhost ~]# mount /dev/md1 /mnt/md1/

[root@localhost ~]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 17G 1014M 16G 6% /

devtmpfs devtmpfs 901M 0 901M 0% /dev

tmpfs tmpfs 912M 0 912M 0% /dev/shm

tmpfs tmpfs 912M 8.7M 904M 1% /run

tmpfs tmpfs 912M 0 912M 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 143M 872M 15% /boot

tmpfs tmpfs 183M 0 183M 0% /run/user/0

/dev/md1 xfs 20G 33M 20G 1% /mnt/md1

7. Create test files.

[root@localhost ~]# touch /mnt/md1/test{1..9}.txt

[root@localhost ~]# ls /mnt/md1/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

8. Simulate a failure.

[root@localhost ~]# mdadm -f /dev/md1 /dev/sdb1

mdadm: set /dev/sdb1 faulty in /dev/md1

9. Check that the test files are still there.

[root@localhost ~]# ls /mnt/md1/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

10. Check the status. The hot spare sdd1 has taken over and is rebuilding.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md1 : active raid1 sdd1[2] sdc1[1] sdb1[0](F)

20953088 blocks super 1.2 [2/1] [_U]

[=====>...............] recovery = 26.7% (5600384/20953088) finish=1.2min speed=200013K/sec

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Aug 25 15:38:44 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sun Aug 25 15:47:57 2019

State : active, degraded, recovering

Active Devices : 1

Working Devices : 2

Failed Devices : 1

Spare Devices : 1

Consistency Policy : resync

Rebuild Status : 17% complete

Name : localhost:1 (local to host localhost)

UUID : b921e8b3:a18e2fc9:11706ba4:ed633dfd

Events : 22

Number Major Minor RaidDevice State

2 8 49 0 spare rebuilding /dev/sdd1

1 8 33 1 active sync /dev/sdc1

0 8 17 - faulty /dev/sdb1

11. Check the status again once the rebuild has finished.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md1 : active raid1 sdd1[2] sdc1[1] sdb1[0](F)

20953088 blocks super 1.2 [2/2] [UU]

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Aug 25 15:38:44 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sun Aug 25 15:49:28 2019

State : active

Active Devices : 2

Working Devices : 2

Failed Devices : 1

Spare Devices : 0

Consistency Policy : resync

Name : localhost:1 (local to host localhost)

UUID : b921e8b3:a18e2fc9:11706ba4:ed633dfd

Events : 37

Number Major Minor RaidDevice State

2 8 49 0 active sync /dev/sdd1

1 8 33 1 active sync /dev/sdc1

0 8 17 - faulty /dev/sdb1

12. Remove the failed disk.

[root@localhost ~]# mdadm -r /dev/md1 /dev/sdb1

mdadm: hot removed /dev/sdb1 from /dev/md1

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Aug 25 15:38:44 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 15:52:57 2019

State : active

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : localhost:1 (local to host localhost)

UUID : b921e8b3:a18e2fc9:11706ba4:ed633dfd

Events : 38

Number Major Minor RaidDevice State

2 8 49 0 active sync /dev/sdd1

1 8 33 1 active sync /dev/sdc1

13. Add the disk back as a hot spare.

[root@localhost ~]# mdadm -a /dev/md1 /dev/sdb1

mdadm: added /dev/sdb1

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Aug 25 15:38:44 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sun Aug 25 15:53:32 2019

State : active

Active Devices : 2

Working Devices : 3

Failed Devices : 0

Spare Devices : 1

Consistency Policy : resync

Name : localhost:1 (local to host localhost)

UUID : b921e8b3:a18e2fc9:11706ba4:ed633dfd

Events : 39

Number Major Minor RaidDevice State

2 8 49 0 active sync /dev/sdd1

1 8 33 1 active sync /dev/sdc1

3 8 17 - spare /dev/sdb1
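Steps 8, 12 and 13 form a repeatable sequence: mark the disk faulty, remove it, then add a disk as the new spare. The sketch below only prints those three commands for review; the function name mdadm_swap_cmds is hypothetical, and nothing is executed.

```shell
# Hypothetical dry-run helper: print the fail / remove / add sequence.
# Usage: mdadm_swap_cmds ARRAY FAILED_DISK NEW_SPARE
mdadm_swap_cmds() {
    array=$1; failed=$2; spare=$3
    printf 'mdadm -f %s %s\n' "$array" "$failed"   # mark as faulty
    printf 'mdadm -r %s %s\n' "$array" "$failed"   # hot-remove from the array
    printf 'mdadm -a %s %s\n' "$array" "$spare"    # add the new spare
}
```

In this lab the failed disk and the new spare are the same partition, so the call would be mdadm_swap_cmds /dev/md1 /dev/sdb1 /dev/sdb1.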

RAID5

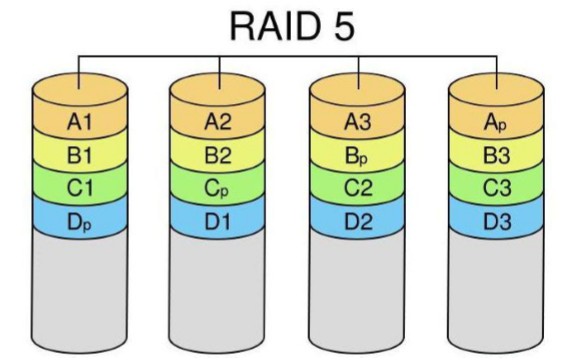

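RAID5 needs at least three disks. It stripes data across all members and stores parity alongside the data, with the parity blocks distributed over the disks. Data and parity are tied together by an algorithm, so when one block of a stripe is lost, the RAID layer (the md driver, in the case of software RAID) can recompute it from the remaining blocks (the other two, in a three-disk array). RAID5 therefore tolerates the failure of at most one disk. Compared with the other levels it strikes a balance between fault tolerance and cost, which has made it popular; it is one of the most commonly used levels for general-purpose disk arrays.

Lab: create a RAID5 array, format it, mount it, simulate a failure, and add a hot spare again.

1. Add four 20 GB disks, partition them, and set the partition type ID to fd.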
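The recovery idea can be sketched with XOR, the parity function commonly used for RAID5: the parity is the XOR of the data blocks, and XOR-ing the parity with the surviving block gives back the lost one. The example below works on single integers rather than disk blocks, and the function name xor_parity is an assumption for illustration.

```shell
# XOR all arguments together and print the result.
# With data blocks as input this yields the parity; with the parity plus the
# surviving data blocks as input it yields the missing block.
xor_parity() {
    p=0
    for b in "$@"; do
        p=$(( p ^ b ))
    done
    printf '%d\n' "$p"
}

d0=165; d1=60                       # two sample "data blocks"
parity=$(xor_parity "$d0" "$d1")    # what would be stored on the third disk
rebuilt=$(xor_parity "$parity" "$d1")   # pretend d0 was lost, recompute it
```

After these lines rebuilt holds the same value as d0, which is the whole trick behind rebuilding onto a spare.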

[root@localhost ~]# fdisk -l | grep raid

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdc1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdd1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sde1 2048 41943039 20970496 fd Linux raid autodetect

2. Create the RAID5 array with one hot spare.

[root@localhost ~]# mdadm -C -v /dev/md5 -l5 -n3 /dev/sd[b-d]1 -x1 /dev/sde1

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: size set to 20953088K

mdadm: Fail create md5 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md5 started.

3. Check the array status in /proc/mdstat.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3](S) sdc1[1] sdb1[0]

41906176 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [UU_]

[====>................] recovery = 24.1% (5057340/20953088) finish=1.3min speed=202293K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3](S) sdc1[1] sdb1[0]

41906176 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>

4. View the RAID5 details.

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 25 16:13:44 2019

Raid Level : raid5

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:15:29 2019

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost:5 (local to host localhost)

UUID : a055094e:9adaff79:2edae9b9:0dcc3f1b

Events : 18

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

3 8 65 - spare /dev/sde1

5. Format the array.

[root@localhost ~]# mkfs.xfs /dev/md5

meta-data=/dev/md5 isize=512 agcount=16, agsize=654720 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=10475520, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=5120, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

6. Mount and use it.

[root@localhost ~]# mkdir /mnt/md5

[root@localhost ~]# mount /dev/md5 /mnt/md5/

[root@localhost ~]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 17G 1014M 16G 6% /

devtmpfs devtmpfs 901M 0 901M 0% /dev

tmpfs tmpfs 912M 0 912M 0% /dev/shm

tmpfs tmpfs 912M 8.7M 904M 1% /run

tmpfs tmpfs 912M 0 912M 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 143M 872M 15% /boot

tmpfs tmpfs 183M 0 183M 0% /run/user/0

/dev/md5 xfs 40G 33M 40G 1% /mnt/md5

7. Create test files.

[root@localhost ~]# touch /mnt/md5/test{1..9}.txt

[root@localhost ~]# ls /mnt/md5/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

8. Simulate a failure.

[root@localhost ~]# mdadm -f /dev/md5 /dev/sdb1

mdadm: set /dev/sdb1 faulty in /dev/md5

9. Check that the test files are still there.

[root@localhost ~]# ls /mnt/md5/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

10. Check the status. The hot spare sde1 has taken over and is rebuilding.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3] sdc1[1] sdb1[0](F)

41906176 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]

[====>................] recovery = 21.0% (4411136/20953088) finish=1.3min speed=210054K/sec

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 25 16:13:44 2019

Raid Level : raid5

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:21:31 2019

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 3

Failed Devices : 1

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 12% complete

Name : localhost:5 (local to host localhost)

UUID : a055094e:9adaff79:2edae9b9:0dcc3f1b

Events : 23

Number Major Minor RaidDevice State

3 8 65 0 spare rebuilding /dev/sde1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

0 8 17 - faulty /dev/sdb1

11. Check the status again once the rebuild has finished.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3] sdc1[1] sdb1[0](F)

41906176 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 25 16:13:44 2019

Raid Level : raid5

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:23:09 2019

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 1

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost:5 (local to host localhost)

UUID : a055094e:9adaff79:2edae9b9:0dcc3f1b

Events : 39

Number Major Minor RaidDevice State

3 8 65 0 active sync /dev/sde1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

0 8 17 - faulty /dev/sdb1

12. Remove the failed disk.

[root@localhost ~]# mdadm -r /dev/md5 /dev/sdb1

mdadm: hot removed /dev/sdb1 from /dev/md5

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 25 16:13:44 2019

Raid Level : raid5

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:25:01 2019

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost:5 (local to host localhost)

UUID : a055094e:9adaff79:2edae9b9:0dcc3f1b

Events : 40

Number Major Minor RaidDevice State

3 8 65 0 active sync /dev/sde1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

13. Add the disk back as a hot spare.

[root@localhost ~]# mdadm -a /dev/md5 /dev/sdb1

mdadm: added /dev/sdb1

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sun Aug 25 16:13:44 2019

Raid Level : raid5

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:25:22 2019

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost:5 (local to host localhost)

UUID : a055094e:9adaff79:2edae9b9:0dcc3f1b

Events : 41

Number Major Minor RaidDevice State

3 8 65 0 active sync /dev/sde1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

5 8 17 - spare /dev/sdb1

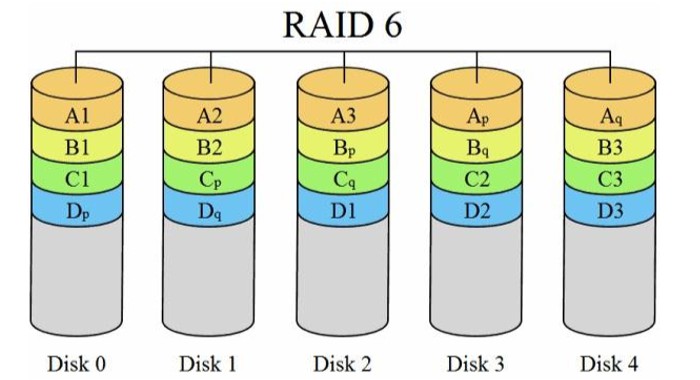

RAID6

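RAID6 is an improvement on RAID5: it adds a second parity block to each stripe, so the number of disks that may fail rises from one to two. It needs at least four disks. Because two disks in the same array rarely fail at the same time, RAID6 buys higher data safety than RAID5 at the cost of one more disk.

Lab: create a RAID6 array, format it, mount it, simulate failures, and add hot spares again.

1. Add six 20 GB disks, partition them, and set the partition type ID to fd.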
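The capacity cost of each level can be checked with simple arithmetic. The sketch below assumes equal-sized members and uses the per-device size of 20953088 KiB that mdadm reports in the labs; the function name raid_usable_kib is hypothetical.

```shell
# Usable capacity in KiB for N equal-sized active members.
# Usage: raid_usable_kib LEVEL N PER_DEVICE_KIB
raid_usable_kib() {
    level=$1; n=$2; per_dev=$3
    case "$level" in
        0) echo $(( n * per_dev )) ;;          # striping: every disk holds data
        1) echo "$per_dev" ;;                  # mirroring: one disk's worth
        5) echo $(( (n - 1) * per_dev )) ;;    # one disk's worth of parity
        6) echo $(( (n - 2) * per_dev )) ;;    # two disks' worth of parity
        *) echo "unsupported level: $level" >&2; return 1 ;;
    esac
}
```

A four-disk RAID6 and a three-disk RAID5 both come out at 41906176 KiB, which matches the Array Size that mdadm -D prints for md5 and md6.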

[root@localhost ~]# fdisk -l | grep raid

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdc1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdd1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sde1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdf1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdg1 2048 41943039 20970496 fd Linux raid autodetect

2. Create the RAID6 array with two hot spares.

[root@localhost ~]# mdadm -C -v /dev/md6 -l6 -n4 /dev/sd[b-e]1 -x2 /dev/sd[f-g]1

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: size set to 20953088K

mdadm: Fail create md6 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md6 started.

3. Check the array status in /proc/mdstat.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md6 : active raid6 sdg1[5](S) sdf1[4](S) sde1[3] sdd1[2] sdc1[1] sdb1[0]

41906176 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/4] [UUUU]

[===>.................] resync = 18.9% (3962940/20953088) finish=1.3min speed=208575K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md6 : active raid6 sdg1[5](S) sdf1[4](S) sde1[3] sdd1[2] sdc1[1] sdb1[0]

41906176 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/4] [UUUU]

unused devices: <none>

4. View the RAID6 details.

[root@localhost ~]# mdadm -D /dev/md6

/dev/md6:

Version : 1.2

Creation Time : Sun Aug 25 16:34:36 2019

Raid Level : raid6

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 6

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:34:43 2019

State : clean, resyncing

Active Devices : 4

Working Devices : 6

Failed Devices : 0

Spare Devices : 2

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Resync Status : 10% complete

Name : localhost:6 (local to host localhost)

UUID : 7c3d15a2:4066f2c6:742f3e4c:82aae1bb

Events : 1

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

2 8 49 2 active sync /dev/sdd1

3 8 65 3 active sync /dev/sde1

4 8 81 - spare /dev/sdf1

5 8 97 - spare /dev/sdg1

5. Format the array.

[root@localhost ~]# mkfs.xfs /dev/md6

meta-data=/dev/md6 isize=512 agcount=16, agsize=654720 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=10475520, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=5120, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

6. Mount and use it.

[root@localhost ~]# mkdir /mnt/md6

[root@localhost ~]# mount /dev/md6 /mnt/md6/

[root@localhost ~]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 17G 1014M 16G 6% /

devtmpfs devtmpfs 901M 0 901M 0% /dev

tmpfs tmpfs 912M 0 912M 0% /dev/shm

tmpfs tmpfs 912M 8.7M 903M 1% /run

tmpfs tmpfs 912M 0 912M 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 143M 872M 15% /boot

tmpfs tmpfs 183M 0 183M 0% /run/user/0

/dev/md6 xfs 40G 33M 40G 1% /mnt/md6

7. Create test files.

[root@localhost ~]# touch /mnt/md6/test{1..9}.txt

[root@localhost ~]# ls /mnt/md6/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

8. Simulate the failure of two disks.

[root@localhost ~]# mdadm -f /dev/md6 /dev/sdb1

mdadm: set /dev/sdb1 faulty in /dev/md6

[root@localhost ~]# mdadm -f /dev/md6 /dev/sdc1

mdadm: set /dev/sdc1 faulty in /dev/md6

9. Check that the test files are still there.

[root@localhost ~]# ls /mnt/md6/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

10. Check the status. Both hot spares have taken over and are rebuilding.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md6 : active raid6 sdg1[5] sdf1[4] sde1[3] sdd1[2] sdc1[1](F) sdb1[0](F)

41906176 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/2] [__UU]

[====>................] recovery = 23.8% (4993596/20953088) finish=1.2min speed=208066K/sec

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md6

/dev/md6:

Version : 1.2

Creation Time : Sun Aug 25 16:34:36 2019

Raid Level : raid6

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 6

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:41:09 2019

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 4

Failed Devices : 2

Spare Devices : 2

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 13% complete

Name : localhost:6 (local to host localhost)

UUID : 7c3d15a2:4066f2c6:742f3e4c:82aae1bb

Events : 27

Number Major Minor RaidDevice State

5 8 97 0 spare rebuilding /dev/sdg1

4 8 81 1 spare rebuilding /dev/sdf1

2 8 49 2 active sync /dev/sdd1

3 8 65 3 active sync /dev/sde1

0 8 17 - faulty /dev/sdb1

1 8 33 - faulty /dev/sdc1

11. Check the status again once the rebuild has finished.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md6 : active raid6 sdg1[5] sdf1[4] sde1[3] sdd1[2] sdc1[1](F) sdb1[0](F)

41906176 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/4] [UUUU]

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md6

/dev/md6:

Version : 1.2

Creation Time : Sun Aug 25 16:34:36 2019

Raid Level : raid6

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 6

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:42:42 2019

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 2

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost:6 (local to host localhost)

UUID : 7c3d15a2:4066f2c6:742f3e4c:82aae1bb

Events : 46

Number Major Minor RaidDevice State

5 8 97 0 active sync /dev/sdg1

4 8 81 1 active sync /dev/sdf1

2 8 49 2 active sync /dev/sdd1

3 8 65 3 active sync /dev/sde1

0 8 17 - faulty /dev/sdb1

1 8 33 - faulty /dev/sdc1

12. Remove the failed disks.

[root@localhost ~]# mdadm -r /dev/md6 /dev/sd{b,c}1

mdadm: hot removed /dev/sdb1 from /dev/md6

mdadm: hot removed /dev/sdc1 from /dev/md6

[root@localhost ~]# mdadm -D /dev/md6

/dev/md6:

Version : 1.2

Creation Time : Sun Aug 25 16:34:36 2019

Raid Level : raid6

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:43:43 2019

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost:6 (local to host localhost)

UUID : 7c3d15a2:4066f2c6:742f3e4c:82aae1bb

Events : 47

Number Major Minor RaidDevice State

5 8 97 0 active sync /dev/sdg1

4 8 81 1 active sync /dev/sdf1

2 8 49 2 active sync /dev/sdd1

3 8 65 3 active sync /dev/sde1

13. Add the disks back as hot spares.

[root@localhost ~]# mdadm -a /dev/md6 /dev/sd{b,c}1

mdadm: added /dev/sdb1

mdadm: added /dev/sdc1

[root@localhost ~]# mdadm -D /dev/md6

/dev/md6:

Version : 1.2

Creation Time : Sun Aug 25 16:34:36 2019

Raid Level : raid6

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 6

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:44:01 2019

State : clean

Active Devices : 4

Working Devices : 6

Failed Devices : 0

Spare Devices : 2

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost:6 (local to host localhost)

UUID : 7c3d15a2:4066f2c6:742f3e4c:82aae1bb

Events : 49

Number Major Minor RaidDevice State

5 8 97 0 active sync /dev/sdg1

4 8 81 1 active sync /dev/sdf1

2 8 49 2 active sync /dev/sdd1

3 8 65 3 active sync /dev/sde1

6 8 17 - spare /dev/sdb1

7 8 33 - spare /dev/sdc1

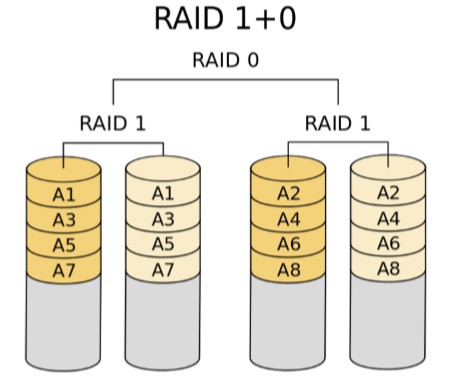

RAID10

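RAID10 mirrors the data first and then stripes it across the mirrors: the RAID1 layer provides redundancy, and the RAID0 layer on top handles striped reads and writes. It needs at least four disks, paired into RAID1 arrays that are then combined into a RAID0. Capacity utilization is as low as with RAID1, only 50%. In exchange, striping across two mirrors can in theory roughly double throughput, and the array survives any single disk failure; it also survives several simultaneous failures as long as the failed disks are not in the same RAID1 pair. RAID10 generally performs better than RAID5, but it scales poorly and is relatively expensive.

Lab: create a RAID10 array, format it, mount it, and simulate failures.

1. Add four 20 GB disks, partition them, and set the partition type ID to fd.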
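The survival rule for RAID10 can be written down as a small check: the array is lost only when both disks of the same mirror pair have failed. The sketch below uses the pairing from this lab (sdb1 with sdc1, sdd1 with sde1); the function name raid10_survives and its argument format are assumptions for illustration.

```shell
# Return 0 if the array survives, 1 if some mirror pair lost both disks.
# Usage: raid10_survives "FAILED_DISK ..." PAIR...   (each PAIR is "a,b")
raid10_survives() {
    failed=" $1 "; shift               # pad with spaces for whole-word matches
    for pair in "$@"; do
        a=${pair%,*}; b=${pair#*,}
        case "$failed" in *" $a "*) a_failed=1 ;; *) a_failed=0 ;; esac
        case "$failed" in *" $b "*) b_failed=1 ;; *) b_failed=0 ;; esac
        if [ "$a_failed" -eq 1 ] && [ "$b_failed" -eq 1 ]; then
            return 1                   # this mirror has no healthy copy left
        fi
    done
    return 0
}
```

Losing sdb1 and sdd1 (one disk from each mirror) passes the check, while losing sdb1 and sdc1 (both halves of one mirror) does not.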

[root@localhost ~]# fdisk -l | grep raid

/dev/sdb1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdc1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sdd1 2048 41943039 20970496 fd Linux raid autodetect

/dev/sde1 2048 41943039 20970496 fd Linux raid autodetect

2. Create two RAID1 arrays, without hot spares.

[root@localhost ~]# mdadm -C -v /dev/md101 -l1 -n2 /dev/sd{b,c}1

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 20953088K

Continue creating array? y

mdadm: Fail create md101 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md101 started.

[root@localhost ~]# mdadm -C -v /dev/md102 -l1 -n2 /dev/sd{d,e}1

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 20953088K

Continue creating array? y

mdadm: Fail create md102 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md102 started.

3. Check the array status in /proc/mdstat.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md102 : active raid1 sde1[1] sdd1[0]

20953088 blocks super 1.2 [2/2] [UU]

[=========>...........] resync = 48.4% (10148224/20953088) finish=0.8min speed=200056K/sec

md101 : active raid1 sdc1[1] sdb1[0]

20953088 blocks super 1.2 [2/2] [UU]

[=============>.......] resync = 69.6% (14604672/20953088) finish=0.5min speed=200052K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md102 : active raid1 sde1[1] sdd1[0]

20953088 blocks super 1.2 [2/2] [UU]

md101 : active raid1 sdc1[1] sdb1[0]

20953088 blocks super 1.2 [2/2] [UU]

unused devices: <none>

4. View the details of both RAID1 arrays.

[root@localhost ~]# mdadm -D /dev/md101

/dev/md101:

Version : 1.2

Creation Time : Sun Aug 25 16:53:00 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:53:58 2019

State : clean, resyncing

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Resync Status : 62% complete

Name : localhost:101 (local to host localhost)

UUID : 80bb4fc5:1a628936:275ba828:17f23330

Events : 9

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

[root@localhost ~]# mdadm -D /dev/md102

/dev/md102:

Version : 1.2

Creation Time : Sun Aug 25 16:53:23 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:54:02 2019

State : clean, resyncing

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Resync Status : 42% complete

Name : localhost:102 (local to host localhost)

UUID : 38abac72:74fa8a53:3a21b5e4:01ae64cd

Events : 6

Number Major Minor RaidDevice State

0 8 49 0 active sync /dev/sdd1

1 8 65 1 active sync /dev/sde1

5. Create the RAID10 array as a RAID0 across the two RAID1 arrays.

[root@localhost ~]# mdadm -C -v /dev/md10 -l0 -n2 /dev/md10{1,2}

mdadm: chunk size defaults to 512K

mdadm: Fail create md10 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md10 started.

6. Check the array status in /proc/mdstat.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1] [raid0]

md10 : active raid0 md102[1] md101[0]

41871360 blocks super 1.2 512k chunks

md102 : active raid1 sde1[1] sdd1[0]

20953088 blocks super 1.2 [2/2] [UU]

md101 : active raid1 sdc1[1] sdb1[0]

20953088 blocks super 1.2 [2/2] [UU]

unused devices: <none>

7. View the RAID10 details.

[root@localhost ~]# mdadm -D /dev/md10

/dev/md10:

Version : 1.2

Creation Time : Sun Aug 25 16:56:08 2019

Raid Level : raid0

Array Size : 41871360 (39.93 GiB 42.88 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:56:08 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Consistency Policy : none

Name : localhost:10 (local to host localhost)

UUID : 23c6abac:b131a049:db25cac8:686fb045

Events : 0

Number Major Minor RaidDevice State

0 9 101 0 active sync /dev/md101

1 9 102 1 active sync /dev/md102

8. Format the array.

[root@localhost ~]# mkfs.xfs /dev/md10

meta-data=/dev/md10 isize=512 agcount=16, agsize=654208 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=10467328, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=5112, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

9. Mount and use it.

[root@localhost ~]# mkdir /mnt/md10

[root@localhost ~]# mount /dev/md10 /mnt/md10/

[root@localhost ~]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 17G 1014M 16G 6% /

devtmpfs devtmpfs 901M 0 901M 0% /dev

tmpfs tmpfs 912M 0 912M 0% /dev/shm

tmpfs tmpfs 912M 8.7M 903M 1% /run

tmpfs tmpfs 912M 0 912M 0% /sys/fs/cgroup

/dev/sda1 xfs 1014M 143M 872M 15% /boot

tmpfs tmpfs 183M 0 183M 0% /run/user/0

/dev/md10 xfs 40G 33M 40G 1% /mnt/md10

10. Create test files.

[root@localhost ~]# touch /mnt/md10/test{1..9}.txt

[root@localhost ~]# ls /mnt/md10/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

11. Simulate a failure of one disk in each RAID1 array.

[root@localhost ~]# mdadm -f /dev/md101 /dev/sdb1

mdadm: set /dev/sdb1 faulty in /dev/md101

[root@localhost ~]# mdadm -f /dev/md102 /dev/sdd1

mdadm: set /dev/sdd1 faulty in /dev/md102

12. Check the test files. They are still readable because each mirror still has one healthy disk.

[root@localhost ~]# ls /mnt/md10/

test1.txt test2.txt test3.txt test4.txt test5.txt test6.txt test7.txt test8.txt test9.txt

13. Check the status.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1] [raid0]

md10 : active raid0 md102[1] md101[0]

41871360 blocks super 1.2 512k chunks

md102 : active raid1 sde1[1] sdd1[0](F)

20953088 blocks super 1.2 [2/1] [_U]

md101 : active raid1 sdc1[1] sdb1[0](F)

20953088 blocks super 1.2 [2/1] [_U]

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md101

/dev/md101:

Version : 1.2

Creation Time : Sun Aug 25 16:53:00 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 17:01:11 2019

State : clean, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 1

Spare Devices : 0

Consistency Policy : resync

Name : localhost:101 (local to host localhost)

UUID : 80bb4fc5:1a628936:275ba828:17f23330

Events : 23

Number Major Minor RaidDevice State

- 0 0 0 removed

1 8 33 1 active sync /dev/sdc1

0 8 17 - faulty /dev/sdb1

[root@localhost ~]# mdadm -D /dev/md102

/dev/md102:

Version : 1.2

Creation Time : Sun Aug 25 16:53:23 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 17:00:43 2019

State : clean, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 1

Spare Devices : 0

Consistency Policy : resync

Name : localhost:102 (local to host localhost)

UUID : 38abac72:74fa8a53:3a21b5e4:01ae64cd

Events : 19

Number Major Minor RaidDevice State

- 0 0 0 removed

1 8 65 1 active sync /dev/sde1

0 8 49 - faulty /dev/sdd1

[root@localhost ~]# mdadm -D /dev/md10

/dev/md10:

Version : 1.2

Creation Time : Sun Aug 25 16:56:08 2019

Raid Level : raid0

Array Size : 41871360 (39.93 GiB 42.88 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 16:56:08 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Consistency Policy : none

Name : localhost:10 (local to host localhost)

UUID : 23c6abac:b131a049:db25cac8:686fb045

Events : 0

Number Major Minor RaidDevice State

0 9 101 0 active sync /dev/md101

1 9 102 1 active sync /dev/md102
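In the /proc/mdstat output of step 13, a degraded mirror shows up as `[2/1]` (two slots configured, one active), while md10 itself still looks healthy. A minimal sketch for spotting degraded arrays without reading every line is shown below; `list_degraded` is a hypothetical helper that reads mdstat-formatted text on standard input, and the sample input is copied from the output in step 13.

```shell
#!/bin/sh
# Print the name of every md array whose [configured/active] counter shows
# fewer active members than configured slots.
# list_degraded is a made-up helper name; it only parses text and never
# touches the arrays themselves.
list_degraded() {
    awk '
        /^md[0-9]+ :/ { name = $1 }               # remember the current array
        match($0, /\[[0-9]+\/[0-9]+\]/) {         # e.g. [2/1]
            split(substr($0, RSTART + 1, RLENGTH - 2), c, "/")
            if (c[2] + 0 < c[1] + 0) print name   # active < configured
        }
    '
}

list_degraded <<'EOF'
Personalities : [raid1] [raid0]
md10 : active raid0 md102[1] md101[0]
      41871360 blocks super 1.2 512k chunks
md102 : active raid1 sde1[1] sdd1[0](F)
      20953088 blocks super 1.2 [2/1] [_U]
md101 : active raid1 sdc1[1] sdb1[0](F)
      20953088 blocks super 1.2 [2/1] [_U]
unused devices: <none>
EOF
```

On a live system the same function can be fed with `list_degraded < /proc/mdstat`. RAID0 arrays have no such counter, so md10 is never listed even though both of its members are degraded.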

14. Remove the failed disks.

[root@localhost ~]# mdadm -r /dev/md101 /dev/sdb1

mdadm: hot removed /dev/sdb1 from /dev/md101

[root@localhost ~]# mdadm -r /dev/md102 /dev/sdd1

mdadm: hot removed /dev/sdd1 from /dev/md102

[root@localhost ~]# mdadm -D /dev/md101

/dev/md101:

Version : 1.2

Creation Time : Sun Aug 25 16:53:00 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 1

Persistence : Superblock is persistent

Update Time : Sun Aug 25 17:04:59 2019

State : clean, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : localhost:101 (local to host localhost)

UUID : 80bb4fc5:1a628936:275ba828:17f23330

Events : 26

Number Major Minor RaidDevice State

- 0 0 0 removed

1 8 33 1 active sync /dev/sdc1

[root@localhost ~]# mdadm -D /dev/md102

/dev/md102:

Version : 1.2

Creation Time : Sun Aug 25 16:53:23 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 1

Persistence : Superblock is persistent

Update Time : Sun Aug 25 17:05:07 2019

State : clean, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : localhost:102 (local to host localhost)

UUID : 38abac72:74fa8a53:3a21b5e4:01ae64cd

Events : 20

Number Major Minor RaidDevice State

- 0 0 0 removed

1 8 65 1 active sync /dev/sde1

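15. Add the disks back. Because each mirror is currently degraded, the added disk does not sit idle as a hot spare; it fills the empty slot and starts rebuilding immediately.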

[root@localhost ~]# mdadm -a /dev/md101 /dev/sdb1

mdadm: added /dev/sdb1

[root@localhost ~]# mdadm -a /dev/md102 /dev/sdd1

mdadm: added /dev/sdd1

16. Check the status again, first during the rebuild and then after it completes.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1] [raid0]

md10 : active raid0 md102[1] md101[0]

41871360 blocks super 1.2 512k chunks

md102 : active raid1 sdd1[2] sde1[1]

20953088 blocks super 1.2 [2/1] [_U]

[====>................] recovery = 23.8% (5000704/20953088) finish=1.2min speed=208362K/sec

md101 : active raid1 sdb1[2] sdc1[1]

20953088 blocks super 1.2 [2/1] [_U]

[======>..............] recovery = 32.0% (6712448/20953088) finish=1.1min speed=203407K/sec

unused devices: <none>

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1] [raid0]

md10 : active raid0 md102[1] md101[0]

41871360 blocks super 1.2 512k chunks

md102 : active raid1 sdd1[2] sde1[1]

20953088 blocks super 1.2 [2/2] [UU]

md101 : active raid1 sdb1[2] sdc1[1]

20953088 blocks super 1.2 [2/2] [UU]

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md101

/dev/md101:

Version : 1.2

Creation Time : Sun Aug 25 16:53:00 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 17:07:28 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : localhost:101 (local to host localhost)

UUID : 80bb4fc5:1a628936:275ba828:17f23330

Events : 45

Number Major Minor RaidDevice State

2 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

[root@localhost ~]# mdadm -D /dev/md102

/dev/md102:

Version : 1.2

Creation Time : Sun Aug 25 16:53:23 2019

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Sun Aug 25 17:07:36 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : localhost:102 (local to host localhost)

UUID : 38abac72:74fa8a53:3a21b5e4:01ae64cd

Events : 39

Number Major Minor RaidDevice State

2 8 49 0 active sync /dev/sdd1

1 8 65 1 active sync /dev/sde1
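The first /proc/mdstat output in step 16 carries a `recovery = …%` line for each mirror that is rebuilding. As a rough sketch, the helper below pulls out just the array name and the percentage; `recovery_progress` is a hypothetical name, it reads mdstat-formatted text on standard input, and the sample input is copied from step 16.

```shell
#!/bin/sh
# Print "<array> <percent>" for every array that is currently recovering.
# recovery_progress is a made-up helper name; it only parses text.
recovery_progress() {
    awk '
        /^md[0-9]+ :/ { name = $1 }        # remember the current array
        /recovery *=/ {
            for (i = 1; i <= NF; i++)      # the percentage is the field ending in %
                if ($i ~ /%$/) print name, $i
        }
    '
}

recovery_progress <<'EOF'
md102 : active raid1 sdd1[2] sde1[1]
      20953088 blocks super 1.2 [2/1] [_U]
      [====>................]  recovery = 23.8% (5000704/20953088) finish=1.2min speed=208362K/sec
md101 : active raid1 sdb1[2] sdc1[1]
      20953088 blocks super 1.2 [2/1] [_U]
      [======>..............]  recovery = 32.0% (6712448/20953088) finish=1.1min speed=203407K/sec
EOF
```

On a live system it can be fed with `recovery_progress < /proc/mdstat`; once the rebuild has finished it prints nothing. If the goal is only to block until the rebuild completes, mdadm's own `--wait` option is likely the simpler choice.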

Comparison of common RAID levels

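| Level | Number of disks | Capacity / utilization | Read performance | Write performance | Redundancy |
|---|---|---|---|---|---|
| RAID0 | N | Sum of all N disks | Up to N× | Up to N× | None; a single disk failure loses all data |
| RAID1 | N (even) | 50% | ↑ | ↓ | Every write goes to both disks; tolerates one failure |
| RAID5 | N≥3 | (N-1)/N | ↑↑ | ↓ | Single parity; tolerates one failure |
| RAID6 | N≥4 | (N-2)/N | ↑↑ | ↓↓ | Dual parity; tolerates two failures |
| RAID10 | N (even, N≥4) | 50% | Up to (N/2)× | Up to (N/2)× | Tolerates one failed disk in each mirror pair |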
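The capacity column of the table can be turned into a small calculator. This is a minimal sketch under stated assumptions: `raid_usable` is a hypothetical helper, all disks are the same size, RAID1 is treated as a plain two-disk mirror, and metadata overhead is ignored (which is why the real md10 above reports 39.93 GiB rather than exactly 40).

```shell
#!/bin/sh
# Usable capacity for N equal-sized disks, following the table's formulas.
# Usage: raid_usable LEVEL DISK_COUNT DISK_SIZE   (size in any unit, e.g. GiB)
# raid_usable is a made-up helper name, not an mdadm feature.
raid_usable() {
    level=$1; n=$2; size=$3
    case $level in
        0)  echo $(( n * size )) ;;              # all disks striped
        1)  # assumption: classic two-disk mirror only
            [ "$n" -eq 2 ] || { echo "raid1: expected 2 disks" >&2; return 1; }
            echo "$size" ;;
        5)  echo $(( (n - 1) * size )) ;;        # one disk's worth of parity
        6)  echo $(( (n - 2) * size )) ;;        # two disks' worth of parity
        10) echo $(( n / 2 * size )) ;;          # striped mirror pairs
        *)  echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

raid_usable 10 4 20   # four 20 GiB disks, as in the RAID10 experiment: prints 40
```

With the same four 20 GiB disks, RAID5 would give 60 and RAID6 would give 40, which makes the trade-off between capacity and fault tolerance easy to compare.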

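Closing remarks

The operations covered in this post are simple. Most of its length is status output, so focus on the key commands: every RAID level follows the same repeated pattern.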
