prometheus使用2

参考不错的

Prometheus监控实战之node_exporter详解:

https://blog.csdn.net/ygq13572549874/article/details/129115350

一般操作

查看之前安装的

[root@mcw03 ~]# cd /usr/local/prometheus/

[root@mcw03 prometheus]# ls

console_libraries consoles LICENSE NOTICE prometheus prometheus.yml promtool

[root@mcw03 prometheus]# less prometheus.yml

[root@mcw03 prometheus]#

查看配置

[root@mcw03 prometheus]# cat prometheus.yml

# my global config

global:

scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.

evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

# scrape_timeout is set to the global default (10s). # Alertmanager configuration

alerting:

alertmanagers:

- static_configs:

- targets:

# - alertmanager:9093 # Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

rule_files:

# - "first_rules.yml"

# - "second_rules.yml" # A scrape configuration containing exactly one endpoint to scrape:

# Here it's Prometheus itself.

scrape_configs:

# The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.

- job_name: 'prometheus' # metrics_path defaults to '/metrics'

# scheme defaults to 'http'. static_configs:

- targets: ['localhost:9090']

- job_name: 'agent1'

static_configs:

- targets: ['10.0.0.14:9100']

- job_name: 'promserver'

static_configs:

- targets: ['10.0.0.13:9100']

- job_name: 'server_mariadb'

static_configs:

- targets: ['10.0.0.13:9104']

[root@mcw03 prometheus]#

启动:

[root@mcw03 prometheus]#

[root@mcw03 prometheus]# /usr/local/prometheus/prometheus --config.file="/usr/local/prometheus/prometheus.yml" &

[1] 82834

[root@mcw03 prometheus]# level=info ts=2024-01-29T15:38:28.958560959Z caller=main.go:244 msg="Starting Prometheus" version="(version=2.5.0, branch=HEAD, revision=67dc912ac8b24f94a1fc478f352d25179c94ab9b)"

level=info ts=2024-01-29T15:38:28.958619046Z caller=main.go:245 build_context="(go=go1.11.1, user=root@578ab108d0b9, date=20181106-11:40:44)"

level=info ts=2024-01-29T15:38:28.95863261Z caller=main.go:246 host_details="(Linux 3.10.0-693.el7.x86_64 #1 SMP Tue Aug 22 21:09:27 UTC 2017 x86_64 mcw03 (none))"

level=info ts=2024-01-29T15:38:28.958644576Z caller=main.go:247 fd_limits="(soft=65535, hard=65535)"

level=info ts=2024-01-29T15:38:28.958654061Z caller=main.go:248 vm_limits="(soft=unlimited, hard=unlimited)"

level=info ts=2024-01-29T15:38:28.959638098Z caller=main.go:562 msg="Starting TSDB ..."

level=info ts=2024-01-29T15:38:28.96581693Z caller=main.go:572 msg="TSDB started"

level=info ts=2024-01-29T15:38:28.966063978Z caller=main.go:632 msg="Loading configuration file" filename=/usr/local/prometheus/prometheus.yml

level=info ts=2024-01-29T15:38:28.968164139Z caller=main.go:658 msg="Completed loading of configuration file" filename=/usr/local/prometheus/prometheus.yml

level=info ts=2024-01-29T15:38:28.968197199Z caller=main.go:531 msg="Server is ready to receive web requests."

level=info ts=2024-01-29T15:38:28.969282856Z caller=web.go:399 component=web msg="Start listening for connections" address=0.0.0.0:9090

访问地址:http://10.0.0.13:9090/,启动的时候可以看到,默认跳到http://10.0.0.13:9090/graph了

验证配置文件

[root@mcw03 prometheus]# ls

console_libraries consoles data LICENSE NOTICE prometheus prometheus.yml promtool

[root@mcw03 prometheus]# ./promtool check config prometheus.yml

Checking prometheus.yml

SUCCESS: 0 rule files found [root@mcw03 prometheus]#

将配置前面多加个o,检查配置失败

[root@mcw03 prometheus]# tail -3 prometheus.yml

- job_name: 'server_mariadb'

static_configs:

o- targets: ['10.0.0.13:9104']

[root@mcw03 prometheus]# ./promtool check config prometheus.yml

Checking prometheus.yml

FAILED: parsing YAML file prometheus.yml: yaml: unmarshal errors:

line 38: field o- targets not found in type config.plain [root@mcw03 prometheus]#

修改配置重载

修改配置把14的注释掉,检查配置,重载配置,报错

[root@mcw03 prometheus]# vim prometheus.yml

[root@mcw03 prometheus]# cat prometheus.yml

# my global config

global:

scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.

evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

# scrape_timeout is set to the global default (10s). # Alertmanager configuration

alerting:

alertmanagers:

- static_configs:

- targets:

# - alertmanager:9093 # Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

rule_files:

# - "first_rules.yml"

# - "second_rules.yml" # A scrape configuration containing exactly one endpoint to scrape:

# Here it's Prometheus itself.

scrape_configs:

# The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.

- job_name: 'prometheus' # metrics_path defaults to '/metrics'

# scheme defaults to 'http'. static_configs:

- targets: ['localhost:9090']

#- job_name: 'agent1'

# static_configs:

# - targets: ['10.0.0.14:9100']

- job_name: 'promserver'

static_configs:

- targets: ['10.0.0.13:9100']

- job_name: 'server_mariadb'

static_configs:

- targets: ['10.0.0.13:9104']

[root@mcw03 prometheus]# ./promtool check config prometheus.yml

Checking prometheus.yml

SUCCESS: 0 rule files found [root@mcw03 prometheus]# curl -X POST http://localhost:9090/-/reload

Lifecycle APIs are not enabled[root@mcw03 prometheus]#

热加载

prometheus启动后修改配置文件就需要再重启生效

可以通过以下方式 热加载

curl -X POST http://localhost:9090/-/reload

请求接口后返回 Lifecycle API is not enabled. 那么就是启动的时候没有开启热更新配置,需要在启动的命令行增加参数: --web.enable-lifecycle

./prometheus --web.enable-lifecycle --config.file=prometheus.yml

如果已经把promtheus配置到了Linux系统服务系统里面,需要到systemd的system文件夹下修改promtheus对应的.service文件。

大概步骤如下:

然后执行命令

systemctl daemon-reload

systemctl restart prometheus

1

2

后面每次修改了prometheus配置文件后,可以调用接口进行配置的热加载:

curl -X POST http://ip:9090/-/reload

1

参考文章:

prometheus热加载配置文件

https://blog.csdn.net/qq_21133131/article/details/117568214

Prometheus监控学习笔记之Prometheus如何热加载更新配置

https://www.cnblogs.com/momoyan/p/12039895.html

原文链接:https://blog.csdn.net/qq_39595769/article/details/119240941

@@@

先杀掉,

[root@mcw03 prometheus]# ps -ef|grep prome

root 82834 2094 0 Jan29 pts/0 00:00:01 /usr/local/prometheus/prometheus --config.file=/usr/local/prometheus/prometheus.yml

root 84432 2094 0 00:17 pts/0 00:00:00 grep --color=auto prome

[root@mcw03 prometheus]# kill 82834

[root@mcw03 prometheus]# level=warn ts=2024-01-29T16:17:55.448944181Z caller=main.go:406 msg="Received SIGTERM, exiting gracefully..."

level=info ts=2024-01-29T16:17:55.448992753Z caller=main.go:431 msg="Stopping scrape discovery manager..."

level=info ts=2024-01-29T16:17:55.448999882Z caller=main.go:445 msg="Stopping notify discovery manager..."

level=info ts=2024-01-29T16:17:55.449004831Z caller=main.go:467 msg="Stopping scrape manager..."

level=info ts=2024-01-29T16:17:55.449023164Z caller=main.go:427 msg="Scrape discovery manager stopped"

level=info ts=2024-01-29T16:17:55.449031517Z caller=main.go:441 msg="Notify discovery manager stopped"

level=info ts=2024-01-29T16:17:55.449051788Z caller=manager.go:657 component="rule manager" msg="Stopping rule manager..."

level=info ts=2024-01-29T16:17:55.449060796Z caller=manager.go:663 component="rule manager" msg="Rule manager stopped"

level=info ts=2024-01-29T16:17:55.449622055Z caller=main.go:461 msg="Scrape manager stopped"

level=info ts=2024-01-29T16:17:55.449728933Z caller=notifier.go:512 component=notifier msg="Stopping notification manager..."

level=info ts=2024-01-29T16:17:55.44974018Z caller=main.go:616 msg="Notifier manager stopped"

level=info ts=2024-01-29T16:17:55.449872966Z caller=main.go:628 msg="See you next time!"

加上上面的参数启动,这样支持热加载了

[root@mcw03 prometheus]# /usr/local/prometheus/prometheus --web.enable-lifecycle --config.file="/usr/local/prometheus/prometheus.yml" &

[1] 84520

[root@mcw03 prometheus]# level=info ts=2024-01-29T16:19:57.779420663Z caller=main.go:244 msg="Starting Prometheus" version="(version=2.5.0, branch=HEAD, revision=67dc912ac8b24f94a1fc478f352d25179c94ab9b)"

level=info ts=2024-01-29T16:19:57.779482093Z caller=main.go:245 build_context="(go=go1.11.1, user=root@578ab108d0b9, date=20181106-11:40:44)"

level=info ts=2024-01-29T16:19:57.779505718Z caller=main.go:246 host_details="(Linux 3.10.0-693.el7.x86_64 #1 SMP Tue Aug 22 21:09:27 UTC 2017 x86_64 mcw03 (none))"

level=info ts=2024-01-29T16:19:57.779518271Z caller=main.go:247 fd_limits="(soft=65535, hard=65535)"

level=info ts=2024-01-29T16:19:57.77952732Z caller=main.go:248 vm_limits="(soft=unlimited, hard=unlimited)"

level=info ts=2024-01-29T16:19:57.780838853Z caller=main.go:562 msg="Starting TSDB ..."

level=info ts=2024-01-29T16:19:57.813389846Z caller=web.go:399 component=web msg="Start listening for connections" address=0.0.0.0:9090

level=info ts=2024-01-29T16:19:57.828718461Z caller=main.go:572 msg="TSDB started"

level=info ts=2024-01-29T16:19:57.828777376Z caller=main.go:632 msg="Loading configuration file" filename=/usr/local/prometheus/prometheus.yml

level=info ts=2024-01-29T16:19:57.829456749Z caller=main.go:658 msg="Completed loading of configuration file" filename=/usr/local/prometheus/prometheus.yml

level=info ts=2024-01-29T16:19:57.829470351Z caller=main.go:531 msg="Server is ready to receive web requests."

此时没有14的,

将14的配置注释去掉

[root@mcw03 prometheus]# vim prometheus.yml

[root@mcw03 prometheus]# cat prometheus.yml

# my global config

global:

scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.

evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

# scrape_timeout is set to the global default (10s). # Alertmanager configuration

alerting:

alertmanagers:

- static_configs:

- targets:

# - alertmanager:9093 # Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

rule_files:

# - "first_rules.yml"

# - "second_rules.yml" # A scrape configuration containing exactly one endpoint to scrape:

# Here it's Prometheus itself.

scrape_configs:

# The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.

- job_name: 'prometheus' # metrics_path defaults to '/metrics'

# scheme defaults to 'http'. static_configs:

- targets: ['localhost:9090']

- job_name: 'agent1'

static_configs:

- targets: ['10.0.0.14:9100']

- job_name: 'promserver'

static_configs:

- targets: ['10.0.0.13:9100']

- job_name: 'server_mariadb'

static_configs:

- targets: ['10.0.0.13:9104']

[root@mcw03 prometheus]#

执行重载

[root@mcw03 prometheus]# curl -X POST http://localhost:9090/-/reload

level=info ts=2024-01-29T16:22:22.264583475Z caller=main.go:632 msg="Loading configuration file" filename=/usr/local/prometheus/prometheus.yml

level=info ts=2024-01-29T16:22:22.264875915Z caller=main.go:658 msg="Completed loading of configuration file" filename=/usr/local/prometheus/prometheus.yml

[root@mcw03 prometheus]#

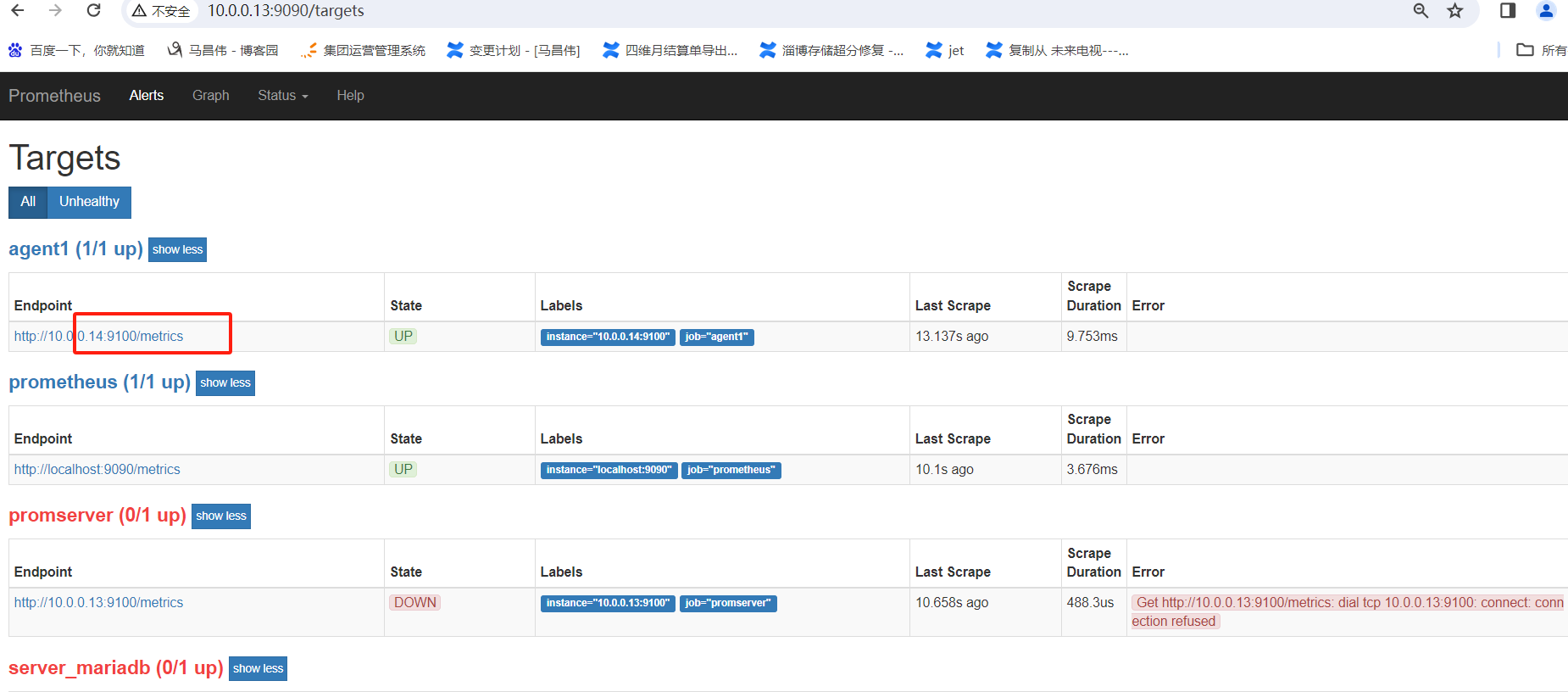

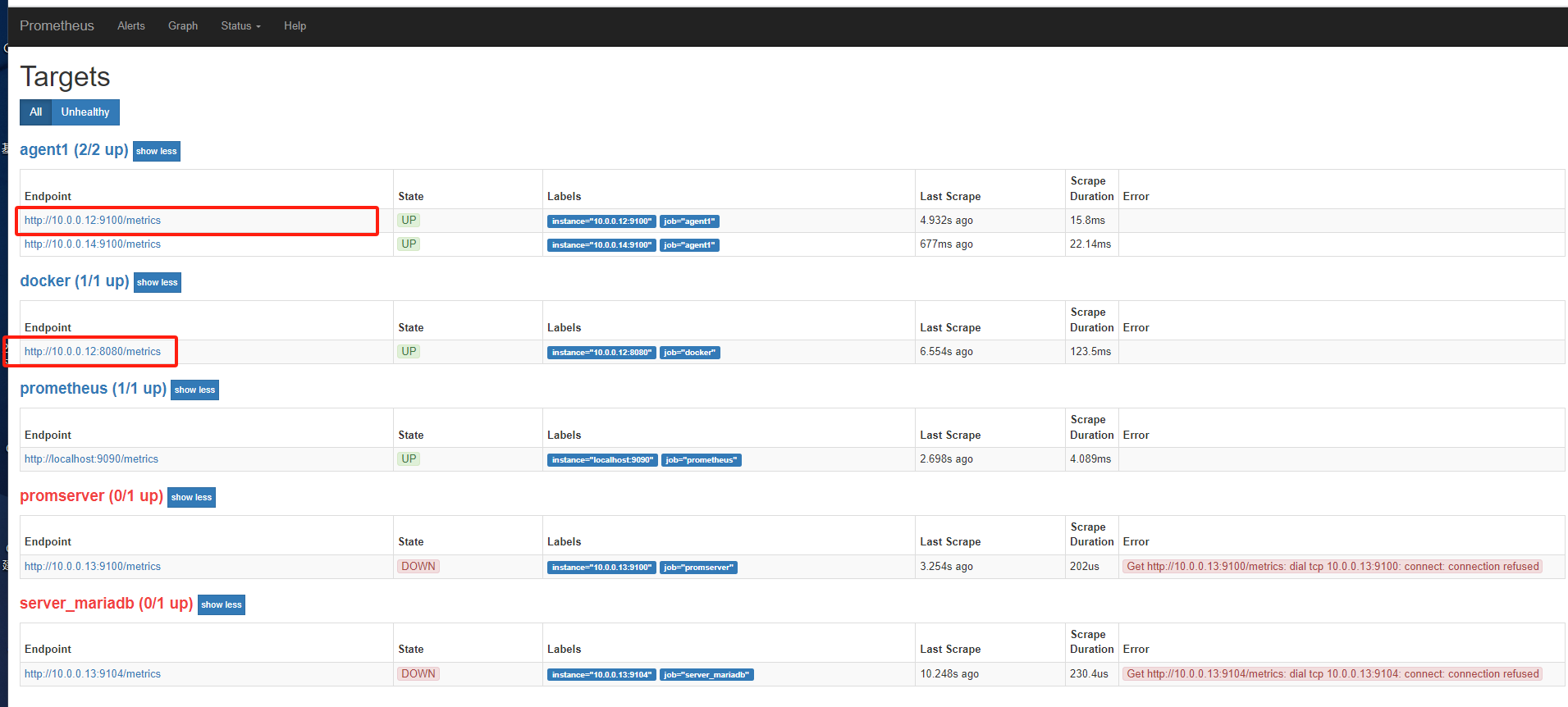

刷新页面可以看到14已经有了

设置systemctl管理

# cat /usr/lib/systemd/system/prometheus.service[Unit]Description=Prometheus Node ExporterAfter=network.target[Service]ExecStart=/usr/local/prometheus/prometheus --config.file=/etc/prometheus.yml --web.read-timeout=5m --web.max-connections=10 --storage.tsdb.retention=15d --storage.tsdb.path=/prometheus/data --query.max-concurrency=20 --query.timeout=2mUser=root[Install]WantedBy=multi-user.target |

启动参数解释

|

1

2

3

4

5

6

7

8

9

10

11

12

13

|

–config.file=/etc/prometheus.yml 指定配置文件 –web.read-timeout=5m 请求链接的最大等待时间,防止太多的空闲链接占用资源 –web.max-connections=512 针对prometheus,获取数据源的时候,建立的网络链接数,做一个最大数字的限制,防止链接数过多造成资源过大的消耗 –storage.tsdb.retention=15d 重要参数,prometheus 开始采集监控数据后,会存在内存和硬盘中;对于保存期限的设置。时间过长,硬盘和内存都吃不消;时间太短,要查历史数据就没了。企业15天最为合适。 –storage.tsdb.path="/prometheus/data" 存储数据路径,不要随便定义 –query.max-concurrency=20 用户查询最大并发数 –query.timeout=2m 慢查询强制终止 |

注意:配置文件不能加双引号,否则启动报错找不到文件或目录

本次启动用户是root生产中最好新建一个用户用于启动,需要设置配置文件及数据文件权限

数据目录在生产中最好单独配置数据硬盘,使用LVM硬盘格式配置

启动

|

1

2

3

4

|

#启动systemctl start prometheus#设置开机自启动systemctl enable prometheus |

查看是否启动

|

1

2

|

lsof -i:9090ps -ef|grep prometheus |

@@@

创建文件,并创建对应的目录

[root@mcw03 prometheus]# cat /usr/lib/systemd/system/prometheus.service

cat: /usr/lib/systemd/system/prometheus.service: No such file or directory

[root@mcw03 prometheus]# systemctl status prometheus

Unit prometheus.service could not be found.

[root@mcw03 prometheus]# vim /usr/lib/systemd/system/prometheus.service

[root@mcw03 prometheus]# pwd

/usr/local/prometheus

[root@mcw03 prometheus]# vim /usr/lib/systemd/system/prometheus.service

[root@mcw03 prometheus]#

[root@mcw03 prometheus]#

[root@mcw03 prometheus]# ls

console_libraries consoles data LICENSE NOTICE prometheus prometheus.yml promtool

[root@mcw03 prometheus]#

[root@mcw03 prometheus]# ls /data/

gv0 gv1 gv2 gv3

[root@mcw03 prometheus]# ls /

bin boot data dev etc home hs_err_pid18517.log lib lib64 media mnt opt proc root run sbin srv sys tmp user usr var

[root@mcw03 prometheus]# mkdir /prometheus/data

mkdir: cannot create directory ‘/prometheus/data’: No such file or directory

[root@mcw03 prometheus]# mkdir /prometheus/data -p

[root@mcw03 prometheus]# ls

console_libraries consoles data LICENSE NOTICE prometheus prometheus.yml promtool

[root@mcw03 prometheus]# cp prometheus.yml /etc/

[root@mcw03 prometheus]# cat /usr/lib/systemd/system/prometheus.service

[Unit]

Description=Prometheus Node Exporter

After=network.target [Service]

ExecStart=/usr/local/prometheus/prometheus --config.file=/etc/prometheus.yml --web.read-timeout=5m --web.max-connections=10 --storage.tsdb.retention=15d --storage.tsdb.path=/prometheus/data --query.max-concurrency=20 --query.timeout=2m

User=root

[Install]

WantedBy=multi-user.target

[root@mcw03 prometheus]# ls data/

lock wal

[root@mcw03 prometheus]# ls data/wal/

00000000

[root@mcw03 prometheus]# ls console

ls: cannot access console: No such file or directory

[root@mcw03 prometheus]# ls consoles/

index.html.example node-cpu.html node-disk.html node.html node-overview.html prometheus.html prometheus-overview.html

[root@mcw03 prometheus]# ls console_libraries/

menu.lib prom.lib

[root@mcw03 prometheus]#

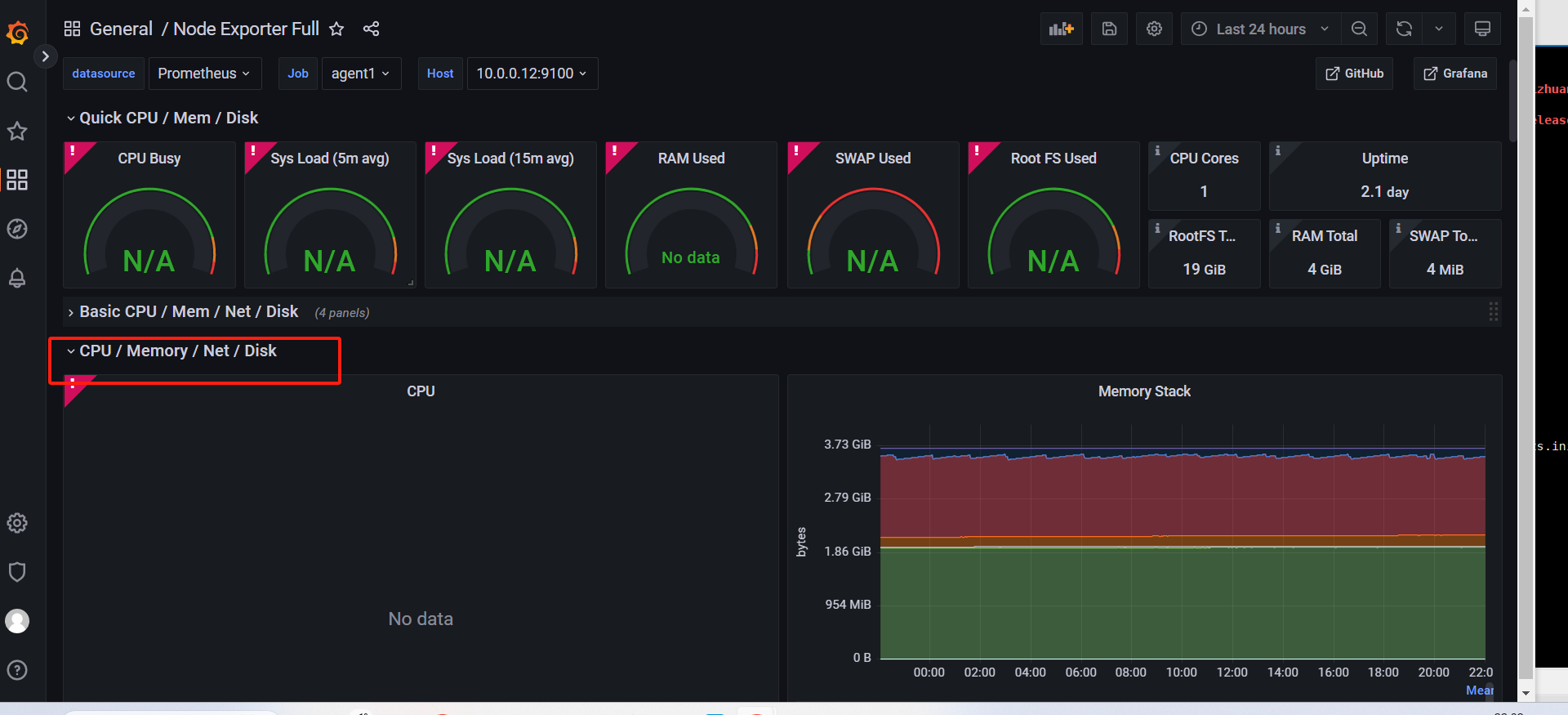

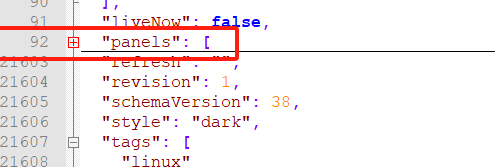

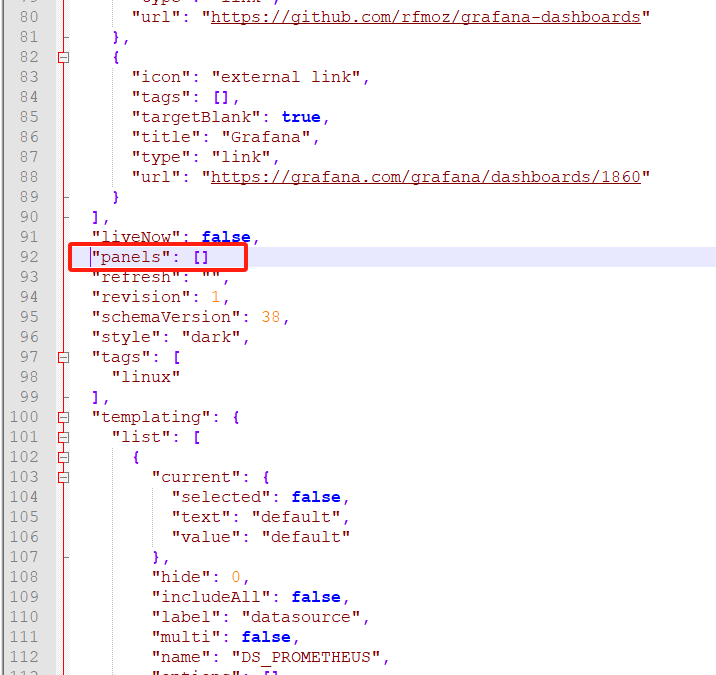

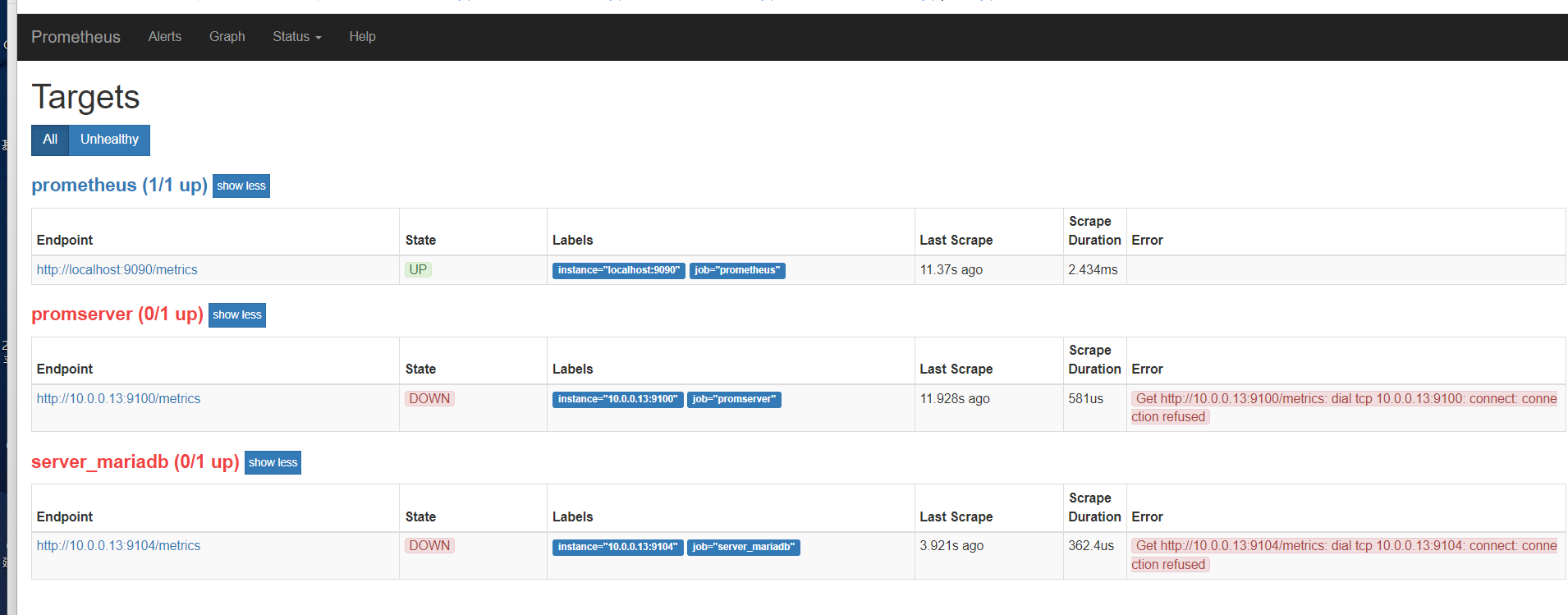

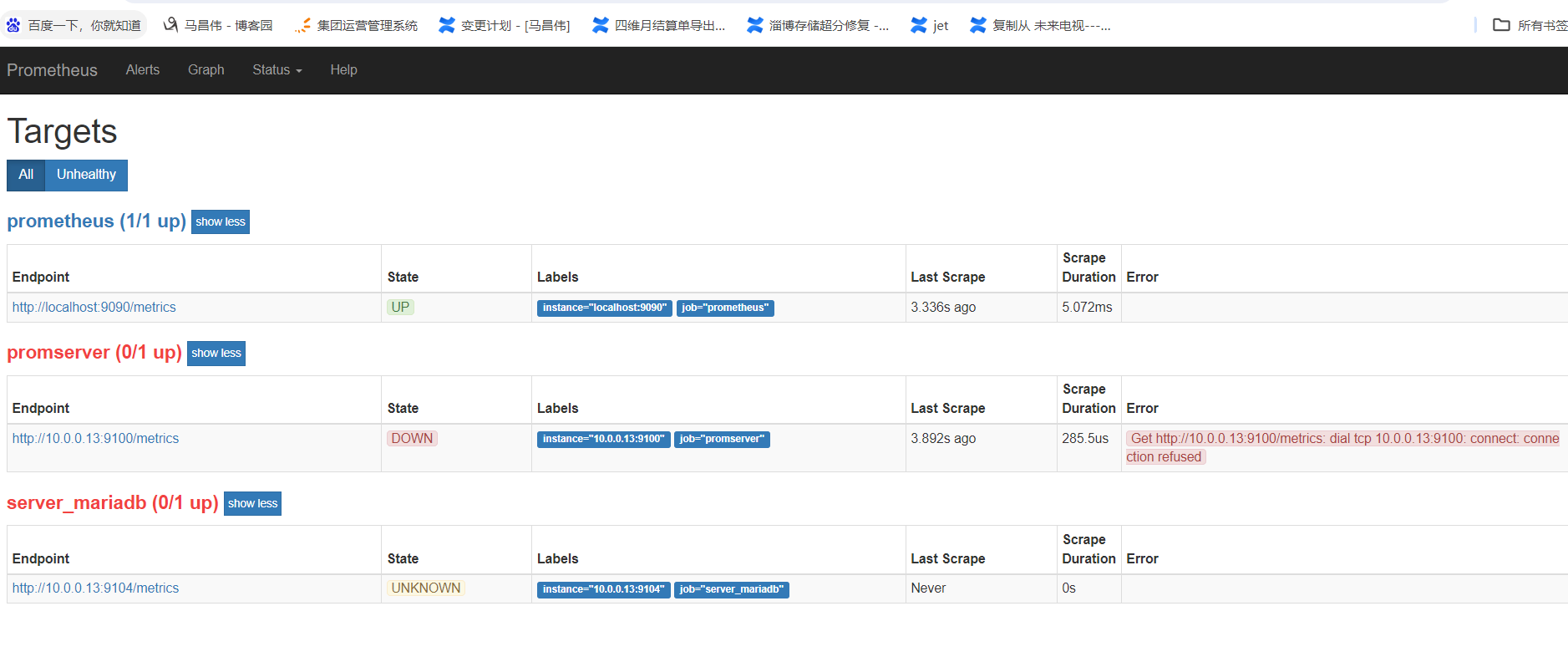

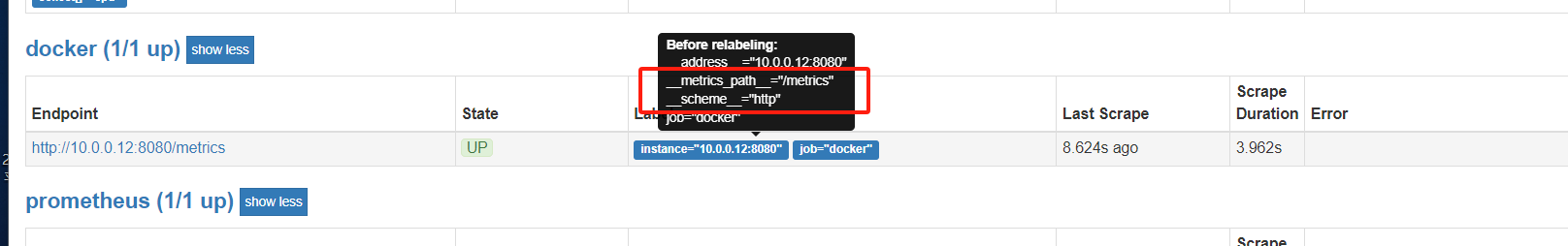

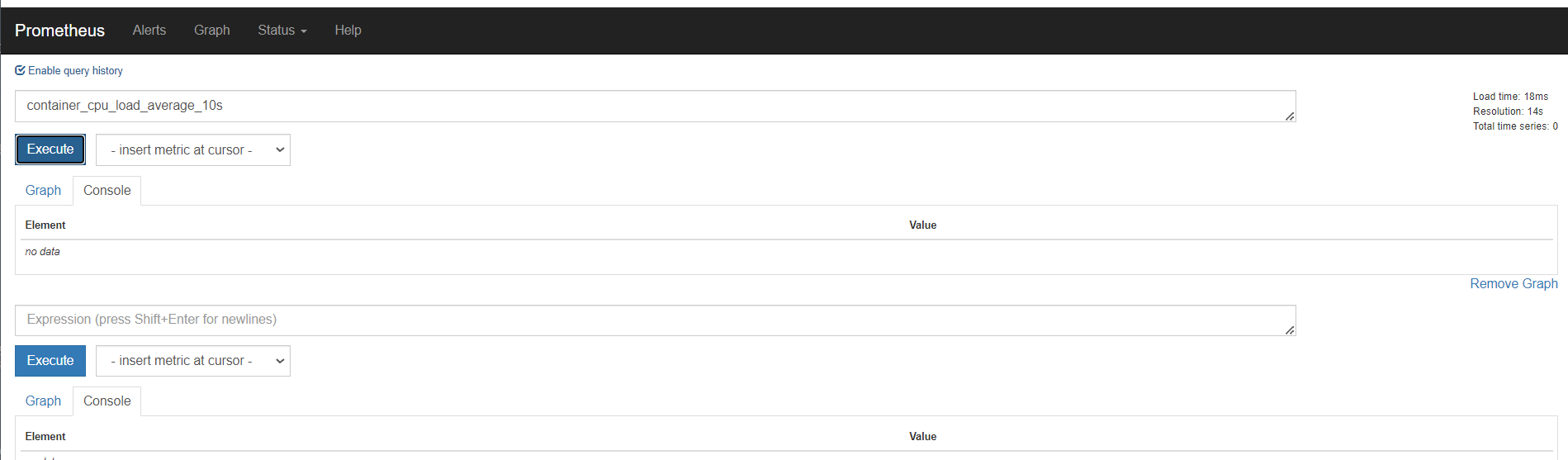

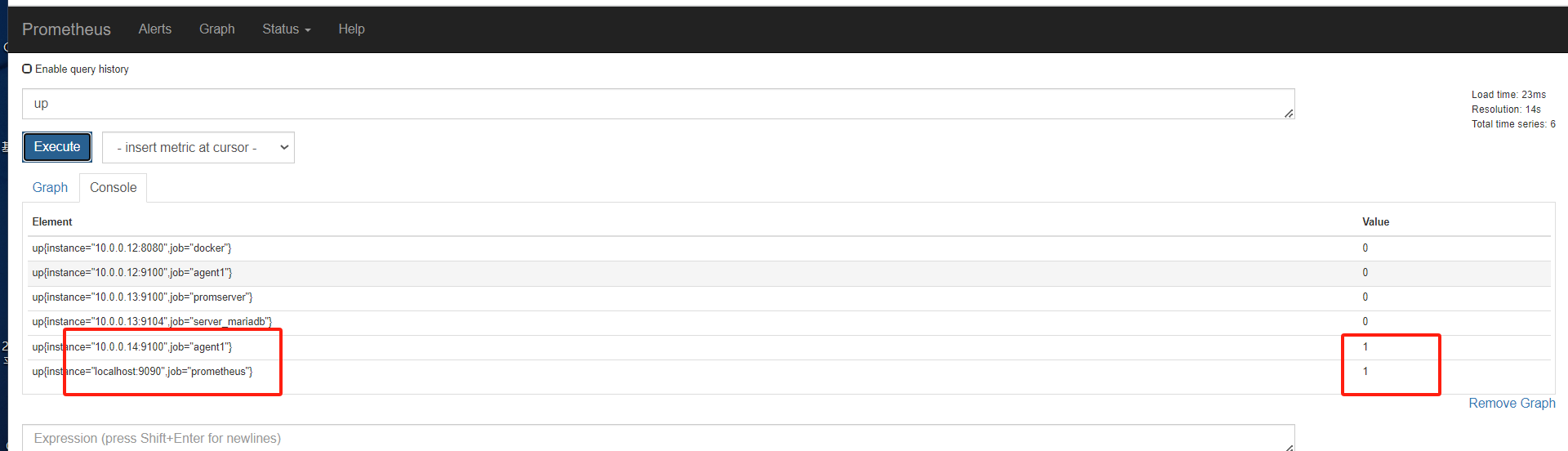

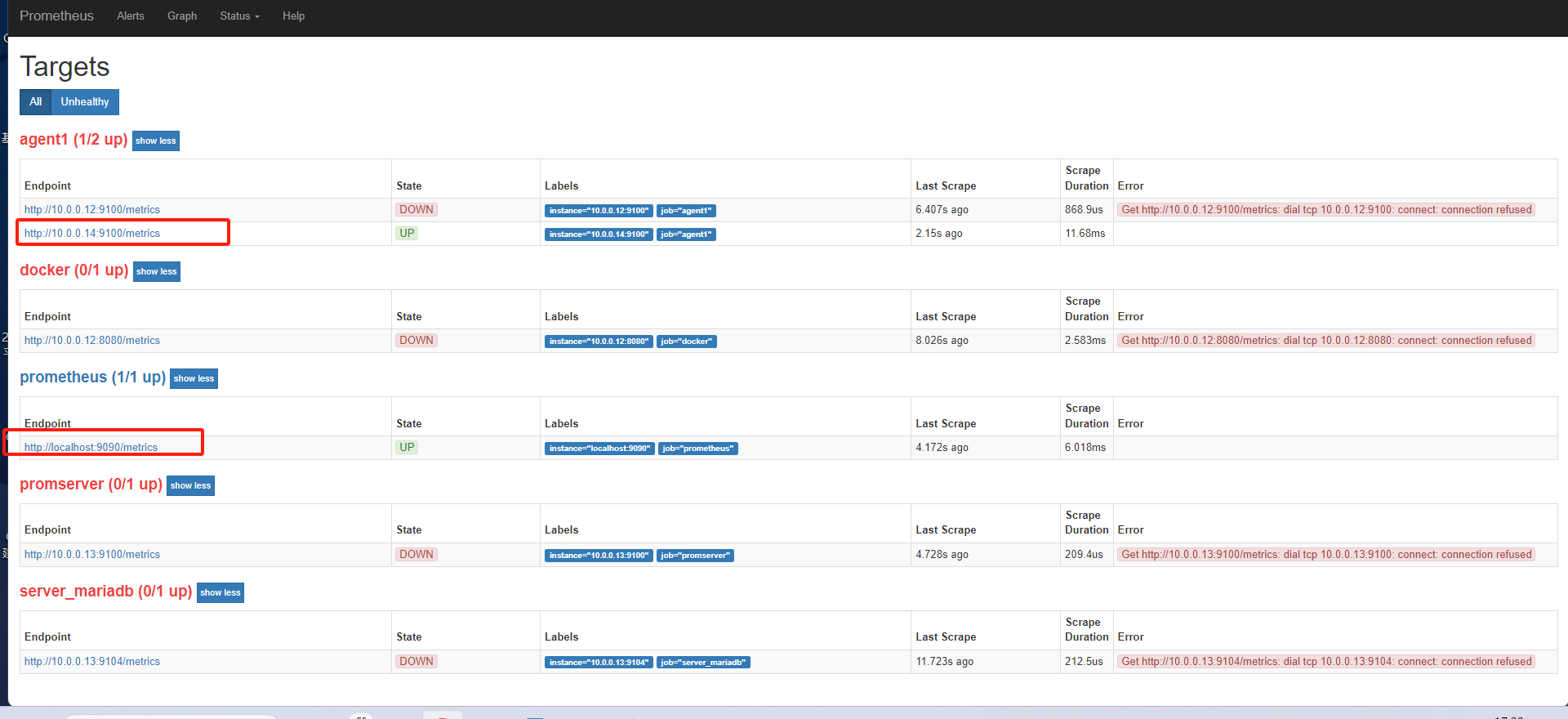

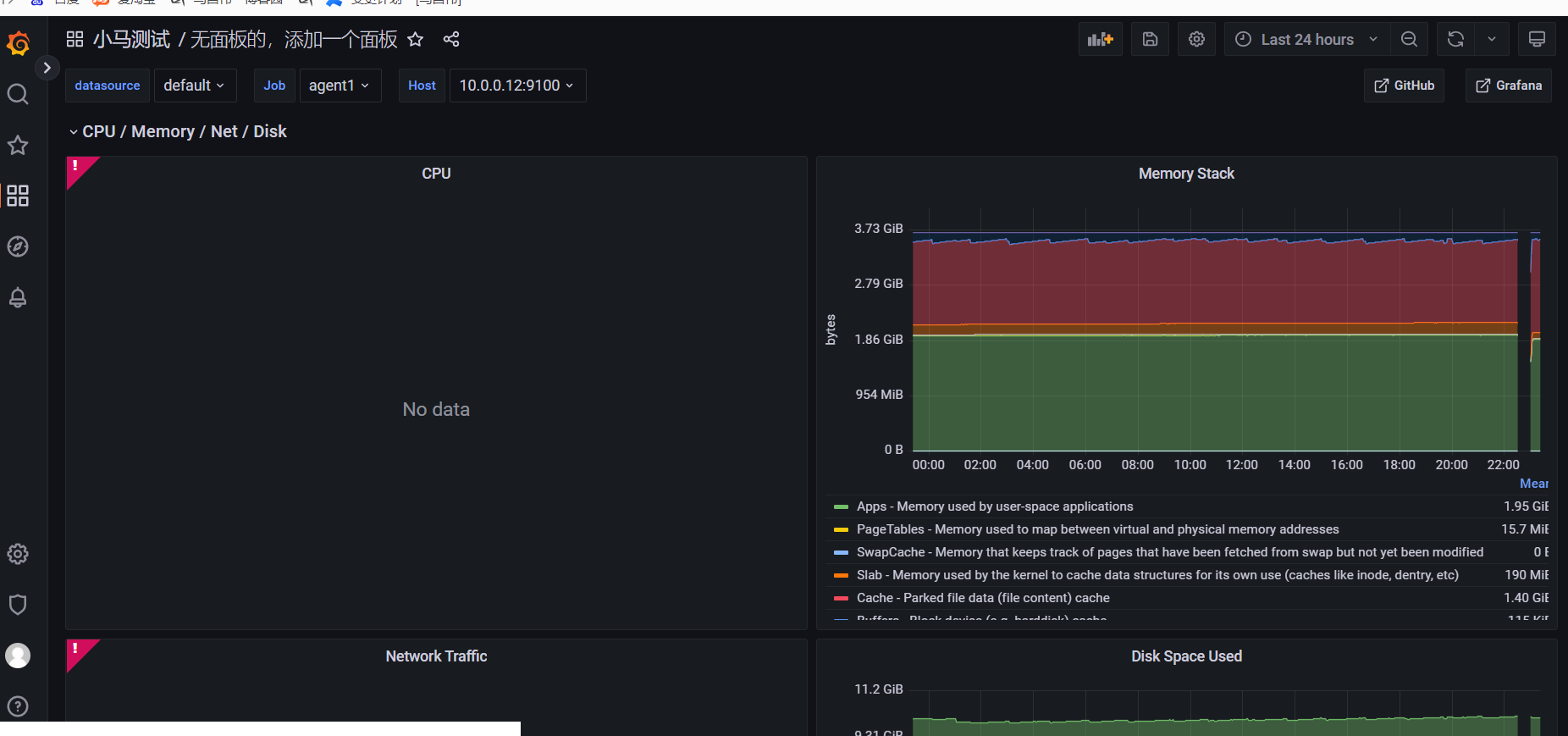

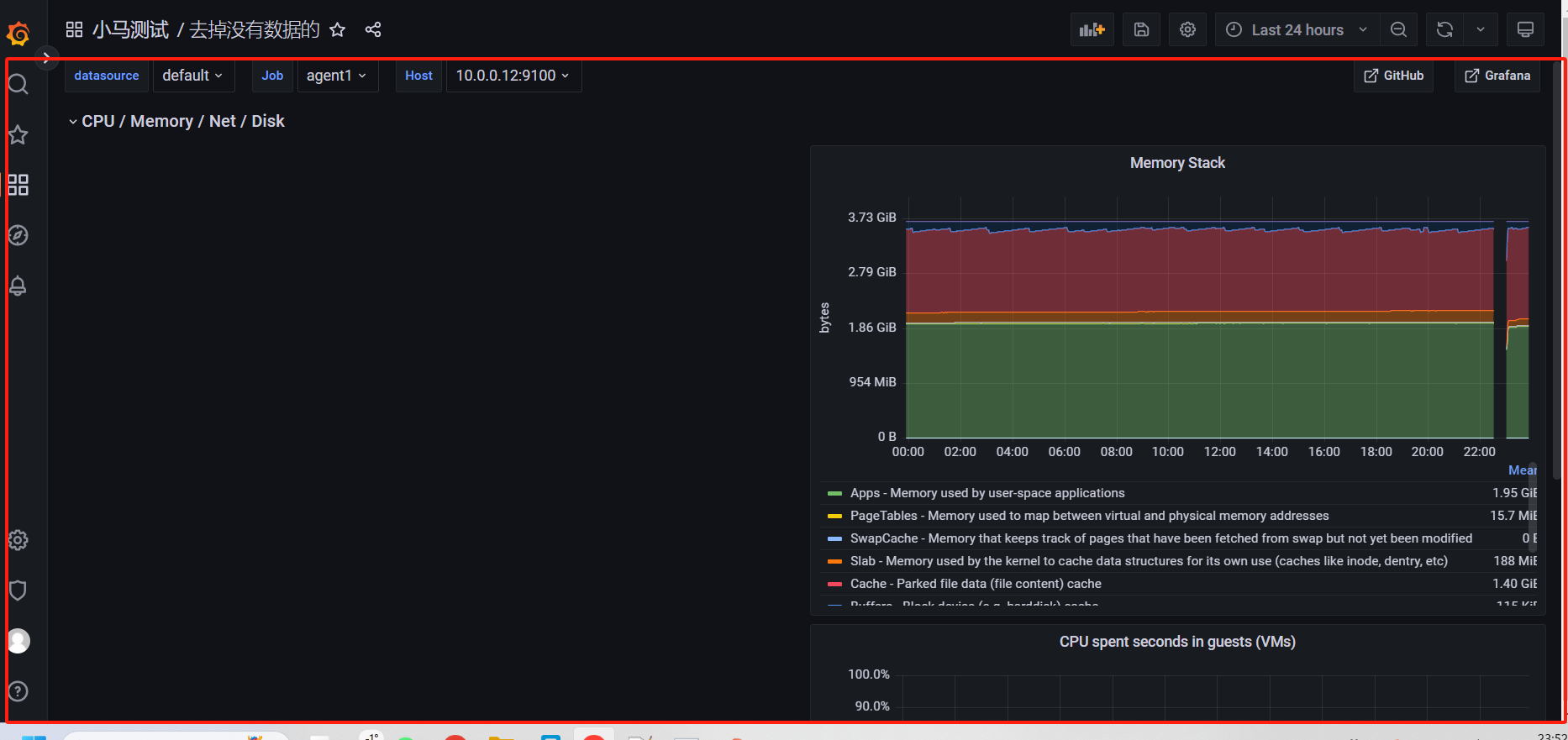

此时页面是这样的

停止并用systemctl启动

[root@mcw03 prometheus]# ps -ef|grep prom

root 84520 2094 0 00:19 pts/0 00:00:00 /usr/local/prometheus/prometheus --web.enable-lifecycle --config.file=/usr/local/prometheus/prometheus.yml

root 85064 2094 0 00:32 pts/0 00:00:00 grep --color=auto prom

[root@mcw03 prometheus]# kill 84520

[root@mcw03 prometheus]# level=warn ts=2024-01-29T16:32:10.65552338Z caller=main.go:406 msg="Received SIGTERM, exiting gracefully..."

level=info ts=2024-01-29T16:32:10.655566913Z caller=main.go:431 msg="Stopping scrape discovery manager..."

level=info ts=2024-01-29T16:32:10.655574899Z caller=main.go:445 msg="Stopping notify discovery manager..."

level=info ts=2024-01-29T16:32:10.65557968Z caller=main.go:467 msg="Stopping scrape manager..."

level=info ts=2024-01-29T16:32:10.655598695Z caller=main.go:427 msg="Scrape discovery manager stopped"

level=info ts=2024-01-29T16:32:10.655606567Z caller=main.go:441 msg="Notify discovery manager stopped"

level=info ts=2024-01-29T16:32:10.655627041Z caller=manager.go:657 component="rule manager" msg="Stopping rule manager..."

level=info ts=2024-01-29T16:32:10.655635473Z caller=manager.go:663 component="rule manager" msg="Rule manager stopped"

level=info ts=2024-01-29T16:32:10.65608701Z caller=main.go:461 msg="Scrape manager stopped"

level=info ts=2024-01-29T16:32:10.656138338Z caller=notifier.go:512 component=notifier msg="Stopping notification manager..."

level=info ts=2024-01-29T16:32:10.65615002Z caller=main.go:616 msg="Notifier manager stopped"

level=info ts=2024-01-29T16:32:10.656259633Z caller=main.go:628 msg="See you next time!" [1]+ Done /usr/local/prometheus/prometheus --web.enable-lifecycle --config.file="/usr/local/prometheus/prometheus.yml"

[root@mcw03 prometheus]# systemctl status prometheus

● prometheus.service - Prometheus Node Exporter

Loaded: loaded (/usr/lib/systemd/system/prometheus.service; disabled; vendor preset: disabled)

Active: inactive (dead)

[root@mcw03 prometheus]# systemctl start prometheus

[root@mcw03 prometheus]# systemctl status prometheus

● prometheus.service - Prometheus Node Exporter

Loaded: loaded (/usr/lib/systemd/system/prometheus.service; disabled; vendor preset: disabled)

Active: active (running) since Tue 2024-01-30 00:32:29 CST; 7s ago

Main PID: 85086 (prometheus)

CGroup: /system.slice/prometheus.service

└─85086 /usr/local/prometheus/prometheus --config.file=/etc/prometheus.yml --web.read-timeout=5m --web.max-connections=10 --storage.tsdb.retention=15d --storage.tsdb.path=... Jan 30 00:32:29 mcw03 prometheus[85086]: level=info ts=2024-01-29T16:32:29.116661038Z caller=main.go:245 build_context="(go=go1.11.1, user=root@578ab108d0b9, date=20181106-11:40:44)"

Jan 30 00:32:29 mcw03 prometheus[85086]: level=info ts=2024-01-29T16:32:29.116676722Z caller=main.go:246 host_details="(Linux 3.10.0-693.el7.x86_64 #1 SMP Tue Aug 22 21:0...w03 (none))"

Jan 30 00:32:29 mcw03 prometheus[85086]: level=info ts=2024-01-29T16:32:29.116690993Z caller=main.go:247 fd_limits="(soft=1024, hard=4096)"

Jan 30 00:32:29 mcw03 prometheus[85086]: level=info ts=2024-01-29T16:32:29.116701722Z caller=main.go:248 vm_limits="(soft=unlimited, hard=unlimited)"

Jan 30 00:32:29 mcw03 prometheus[85086]: level=info ts=2024-01-29T16:32:29.118003926Z caller=main.go:562 msg="Starting TSDB ..."

Jan 30 00:32:29 mcw03 prometheus[85086]: level=info ts=2024-01-29T16:32:29.122879549Z caller=main.go:572 msg="TSDB started"

Jan 30 00:32:29 mcw03 prometheus[85086]: level=info ts=2024-01-29T16:32:29.122934471Z caller=main.go:632 msg="Loading configuration file" filename=/etc/prometheus.yml

Jan 30 00:32:29 mcw03 prometheus[85086]: level=info ts=2024-01-29T16:32:29.123963083Z caller=main.go:658 msg="Completed loading of configuration file" filename=/etc/prometheus.yml

Jan 30 00:32:29 mcw03 prometheus[85086]: level=info ts=2024-01-29T16:32:29.123980522Z caller=main.go:531 msg="Server is ready to receive web requests."

Jan 30 00:32:29 mcw03 prometheus[85086]: level=info ts=2024-01-29T16:32:29.124447919Z caller=web.go:399 component=web msg="Start listening for connections" address=0.0.0.0:9090

Hint: Some lines were ellipsized, use -l to show in full.

[root@mcw03 prometheus]# ps -ef|grep prome

root 85086 1 0 00:32 ? 00:00:00 /usr/local/prometheus/prometheus --config.file=/etc/prometheus.yml --web.read-timeout=5m --web.max-connections=10 --storage.tsdb.retention=15d --storage.tsdb.path=/prometheus/data --query.max-concurrency=20 --query.timeout=2m

root 85105 2094 0 00:32 pts/0 00:00:00 grep --color=auto prome

[root@mcw03 prometheus]#

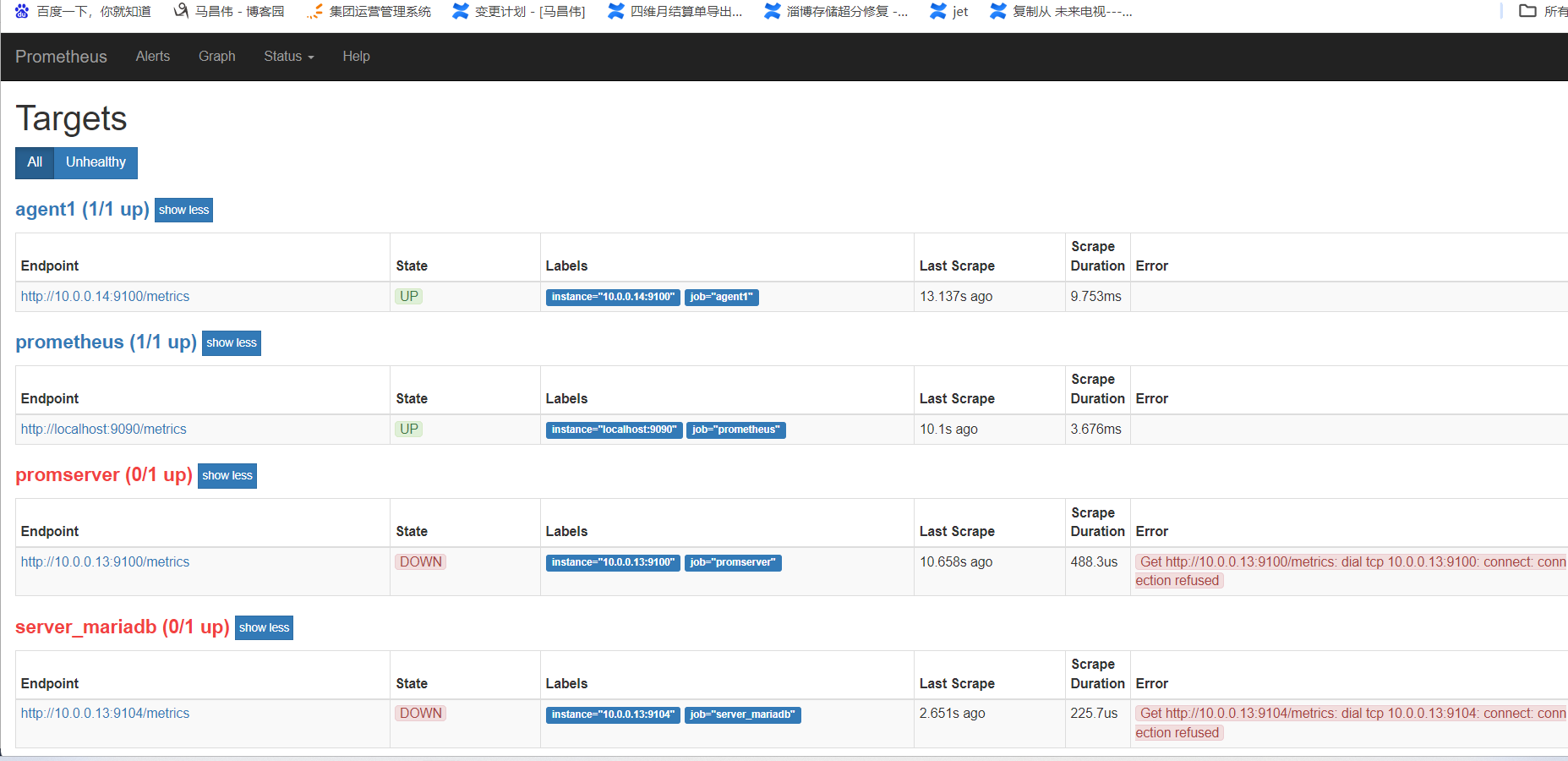

刷新页面,没有啥变化

将14的配置注释掉,发现还是不能用重载了

[root@mcw03 prometheus]# vim /etc/prometheus.yml

[root@mcw03 prometheus]# curl -X POST http://localhost:9090/-/reload

Lifecycle APIs are not enabled[root@mcw03 prometheus]#

加上这个参数 --web.enable-lifecycle,然后重新启动

[root@mcw03 prometheus]# vim /usr/lib/systemd/system/prometheus.service

[root@mcw03 prometheus]# cat /usr/lib/systemd/system/prometheus.service

[Unit]

Description=Prometheus Node Exporter

After=network.target [Service]

ExecStart=/usr/local/prometheus/prometheus --config.file=/etc/prometheus.yml --web.enable-lifecycle --web.read-timeout=5m --web.max-connections=10 --storage.tsdb.retention=15d --storage.tsdb.path=/prometheus/data --query.max-concurrency=20 --query.timeout=2m

User=root

[Install]

WantedBy=multi-user.target

[root@mcw03 prometheus]# systemctl start prometheus

Warning: prometheus.service changed on disk. Run 'systemctl daemon-reload' to reload units.

[root@mcw03 prometheus]# systemctl daemon-reload

[root@mcw03 prometheus]# systemctl start prometheus

[root@mcw03 prometheus]#

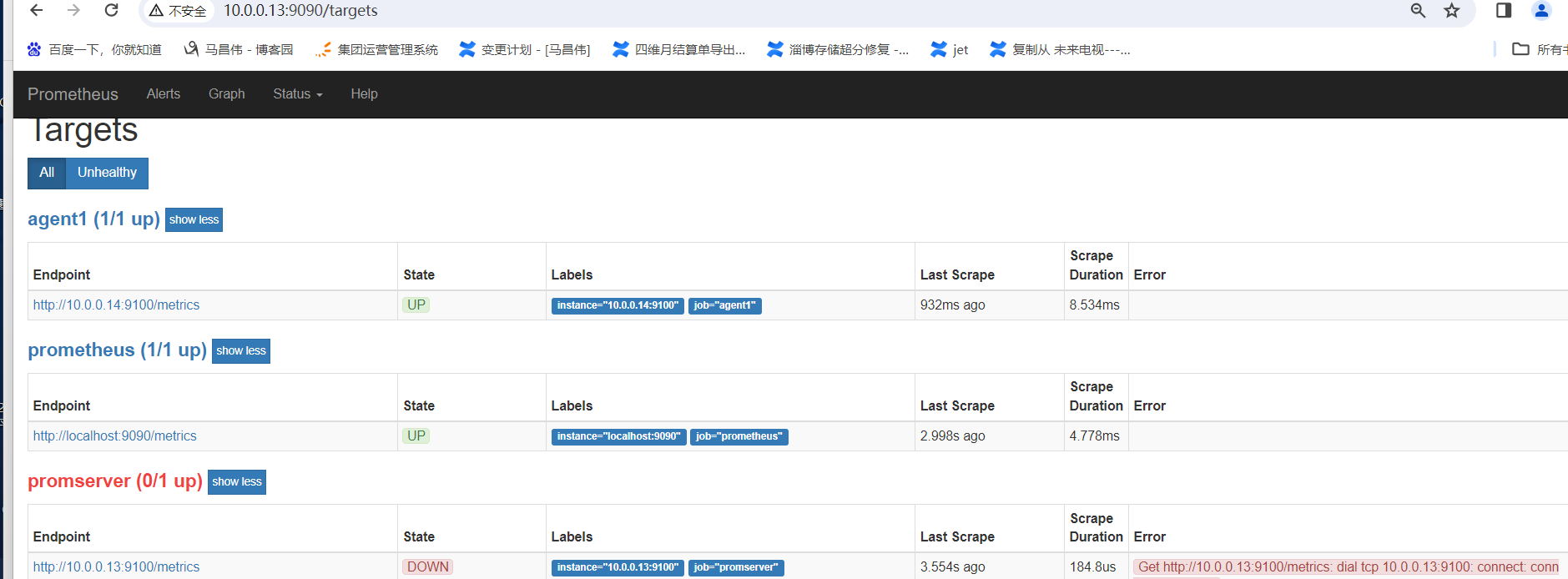

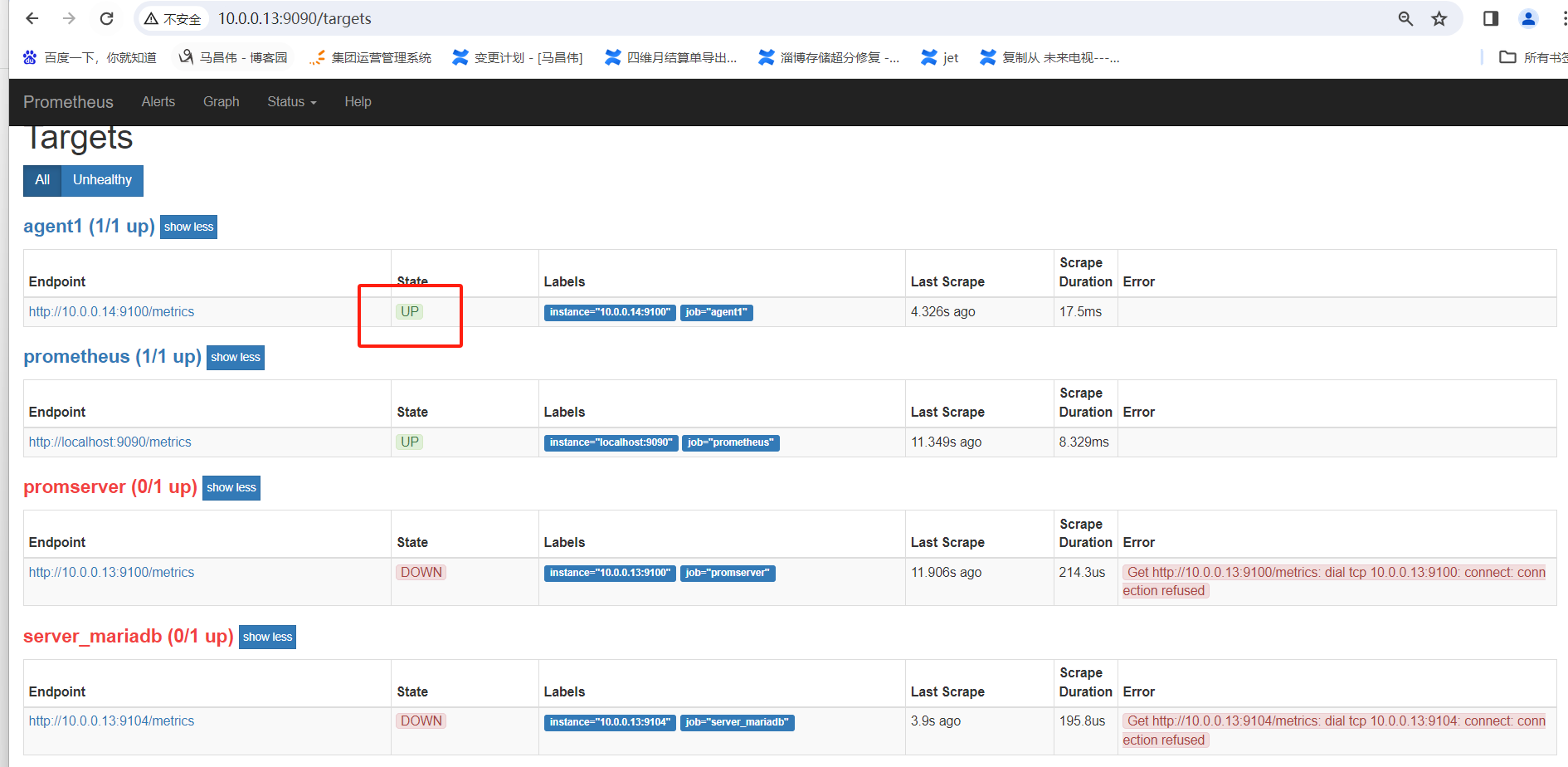

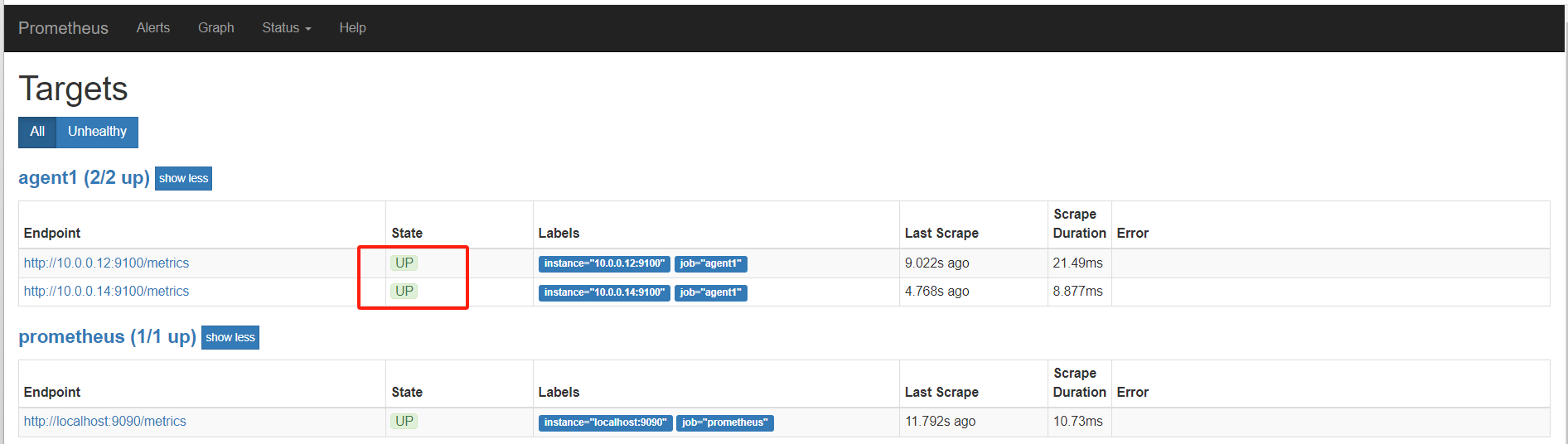

此时14down

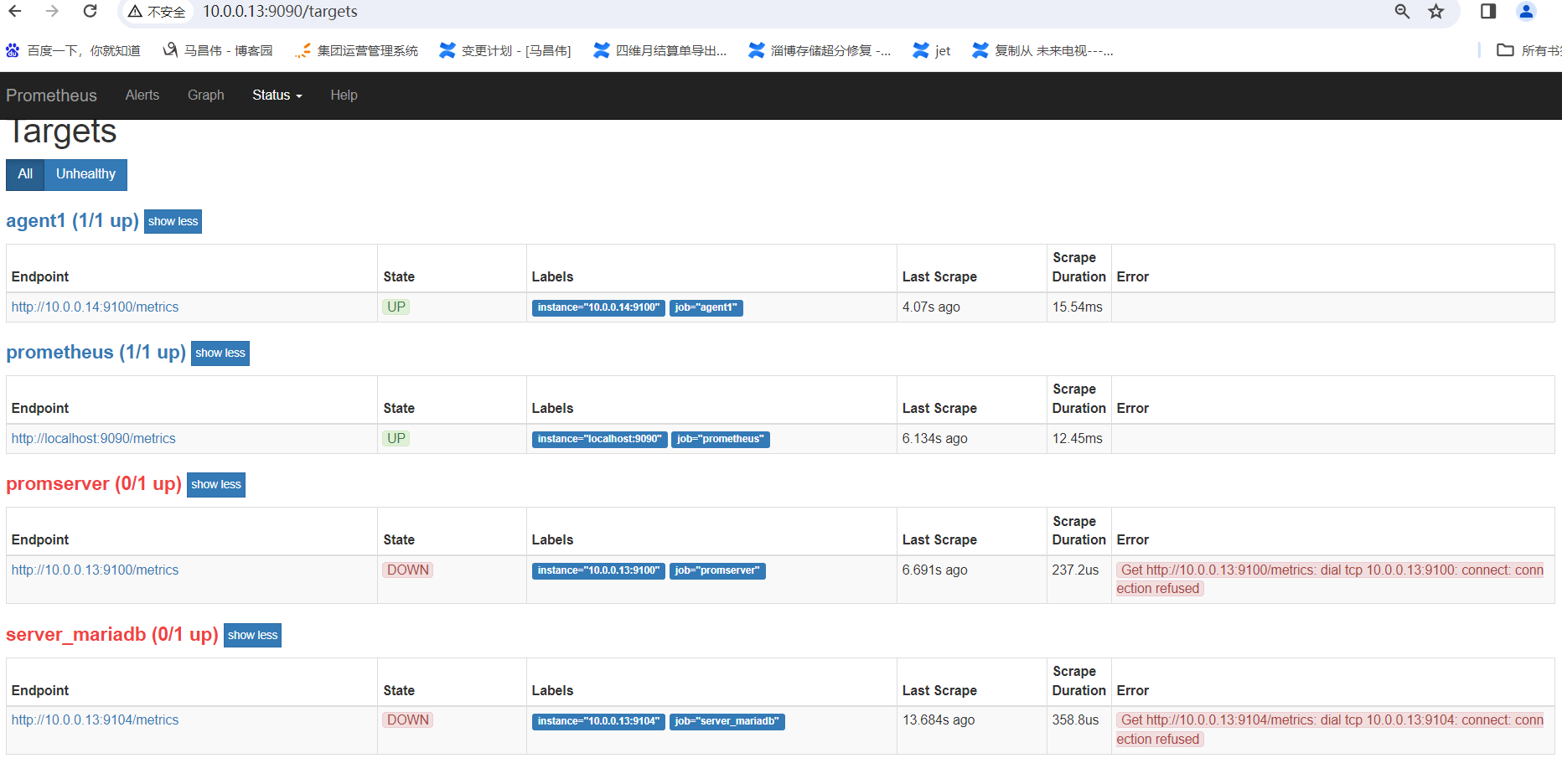

取消注释掉的14机器,然后修改配置重载,正常重载

[root@mcw03 prometheus]# vim /etc/prometheus.yml

[root@mcw03 prometheus]# grep agent1 -A 4 /etc/prometheus.yml

- job_name: 'agent1'

static_configs:

- targets: ['10.0.0.14:9100']

- job_name: 'promserver'

static_configs:

[root@mcw03 prometheus]# curl -X POST http://localhost:9090/-/reload

[root@mcw03 prometheus]#

刷新一下,14up了

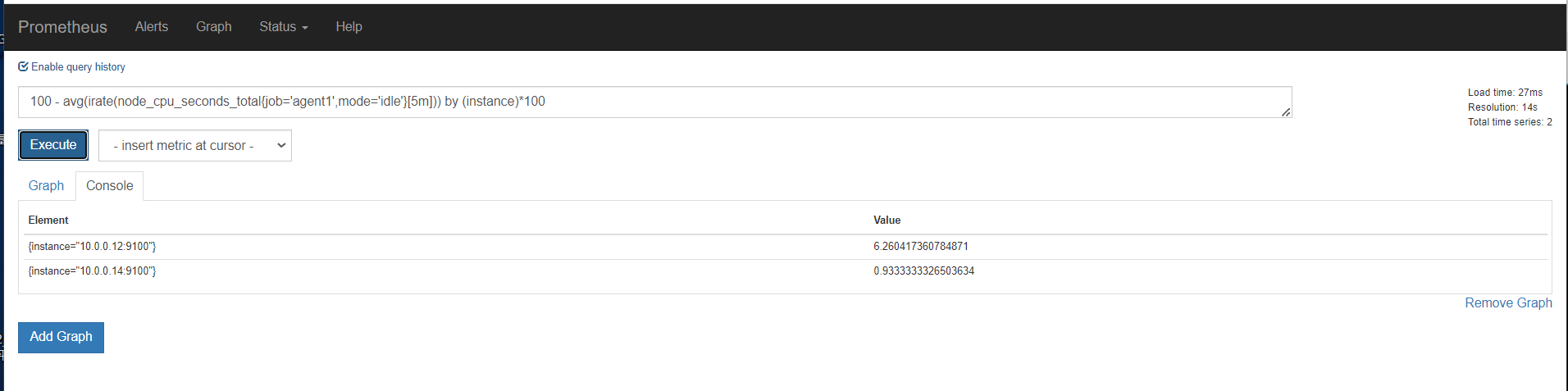

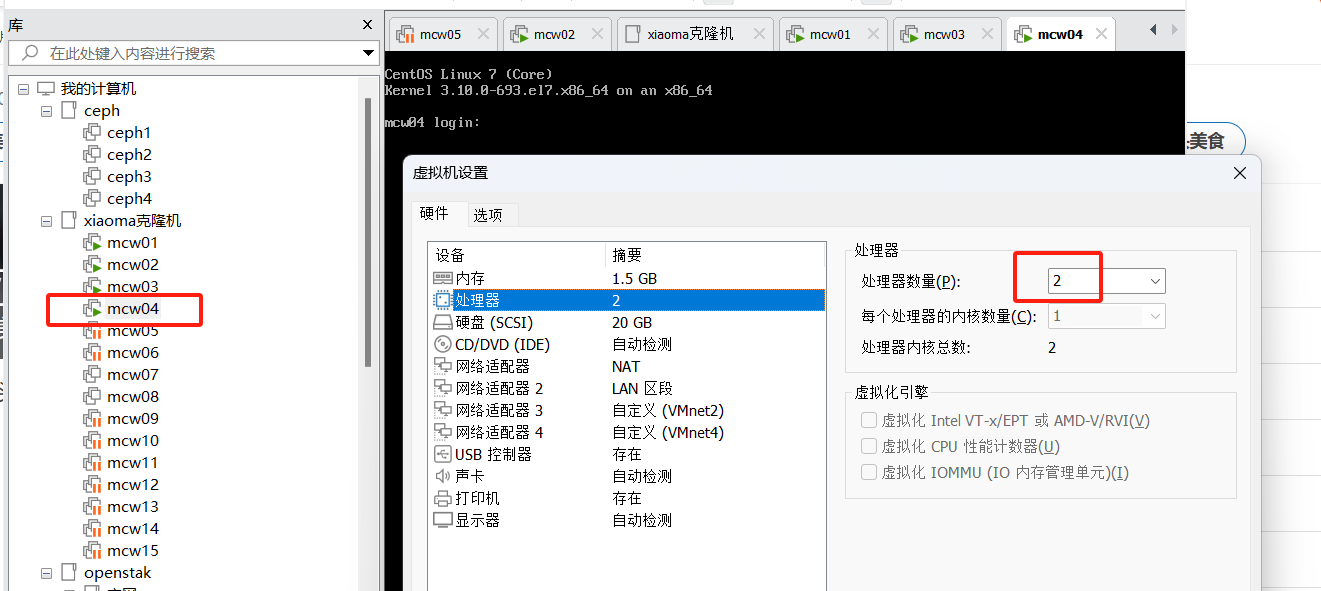

添加第二个node,放在同一组下面

先将客户端程序从mcw02复制到mcw02

[root@mcw04 ~]# scp -rp /usr/local/node_exporter/ 10.0.0.12:/usr/local

The authenticity of host '10.0.0.12 (10.0.0.12)' can't be established.

ECDSA key fingerprint is SHA256:mc9PiiU0mo/DDfwqVPG5s2VIrSDe1B+9iZM7rSeC/Zg.

ECDSA key fingerprint is MD5:86:5b:8b:ee:46:2b:47:a5:fb:cf:f9:68:e3:ee:b0:2a.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '10.0.0.12' (ECDSA) to the list of known hosts.

root@10.0.0.12's password:

LICENSE 100% 11KB 966.1KB/s 00:00

node_exporter 100% 16MB 43.6MB/s 00:00

NOTICE 100% 463 248.9KB/s 00:00

[root@mcw04 ~]#

mcw02上启动起来

[root@mcw02 ~]# nohup /usr/local/node_exporter/node_exporter &

[1] 25347

[root@mcw02 ~]# nohup: ignoring input and appending output to ‘nohup.out’ [root@mcw02 ~]# ps -ef|grep node_export

root 25347 1746 0 10:50 pts/0 00:00:00 /usr/local/node_exporter/node_exporter

root 25354 1746 0 10:50 pts/0 00:00:00 grep --color=auto node_export

[root@mcw02 ~]# ss -lntup|grep 25347

tcp LISTEN 0 16384 :::9100 :::* users:(("node_exporter",pid=25347,fd=3))

[root@mcw02 ~]#

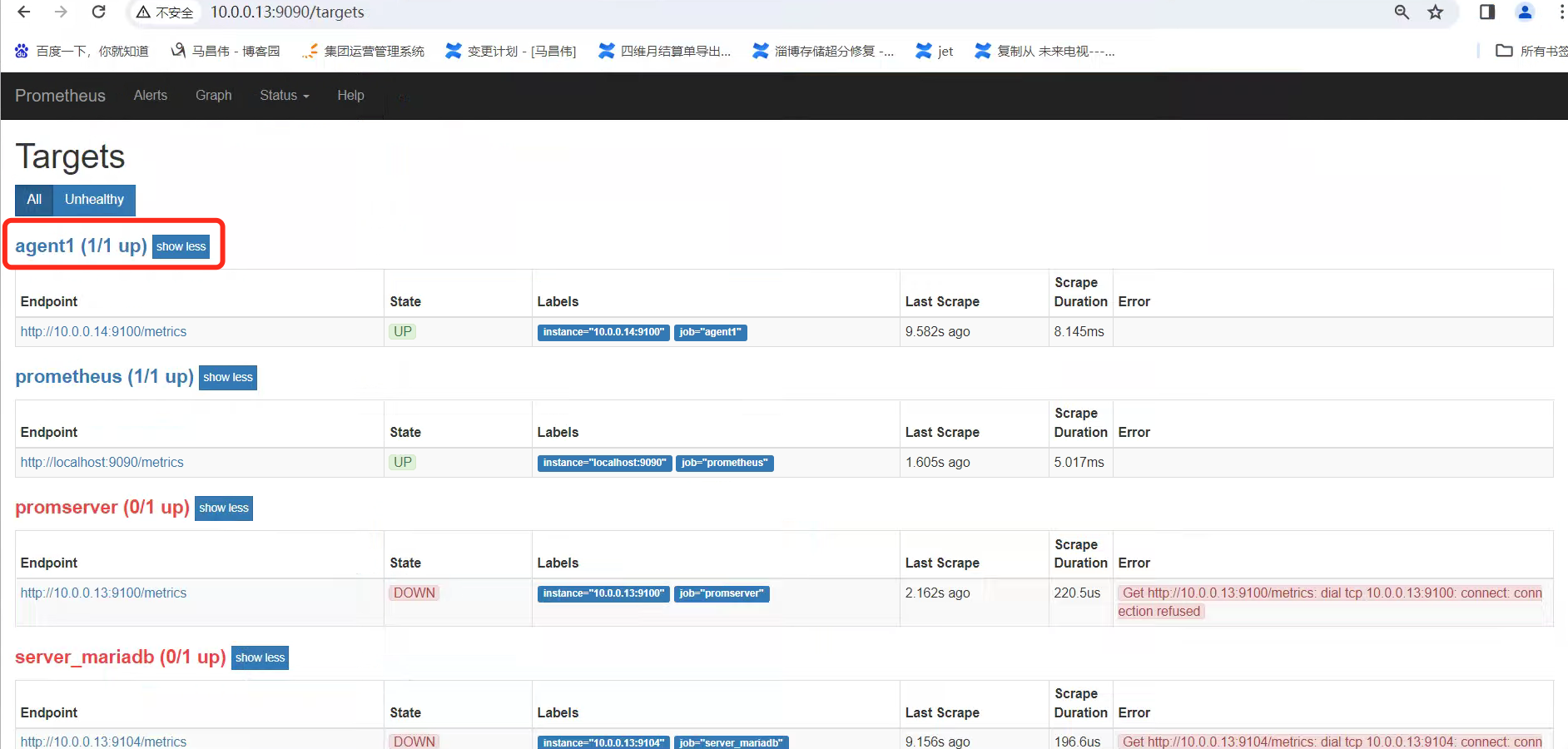

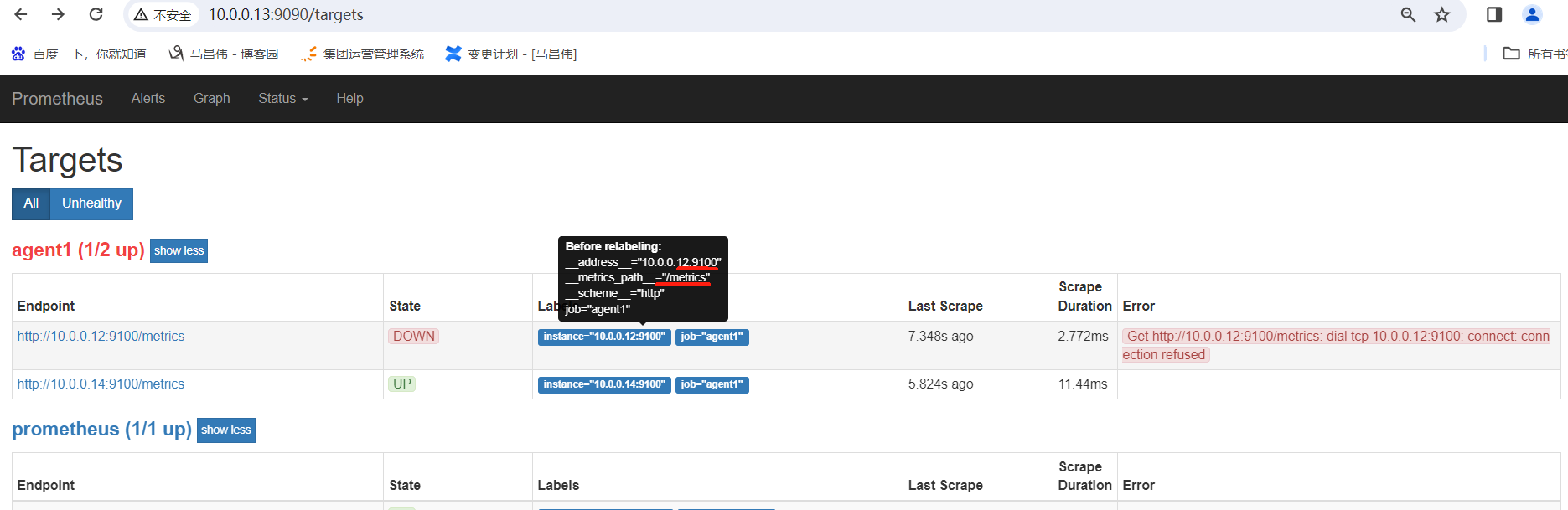

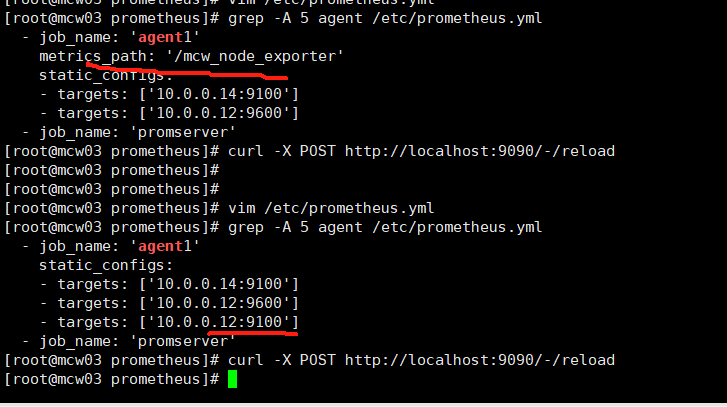

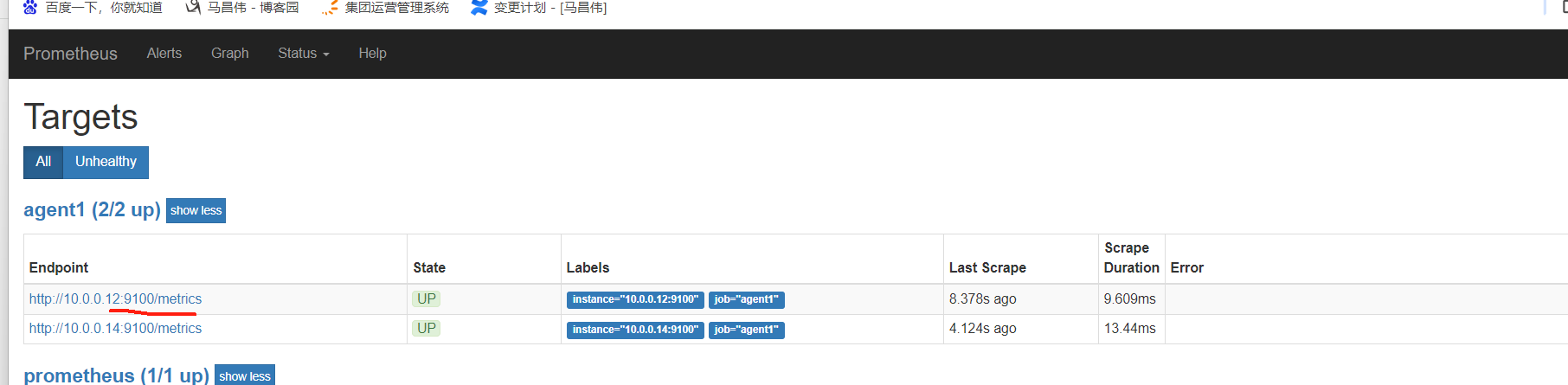

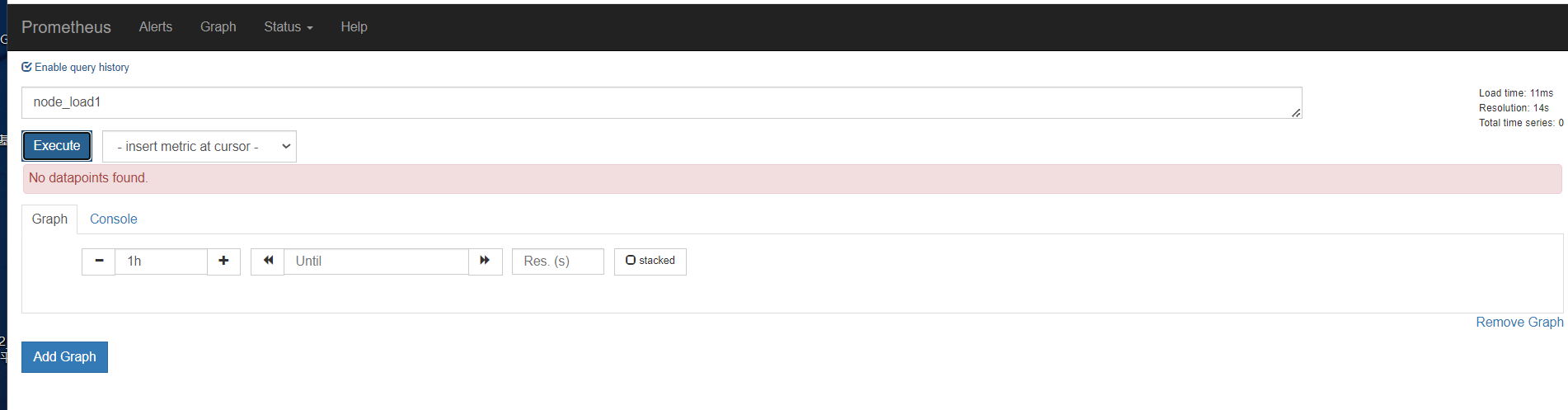

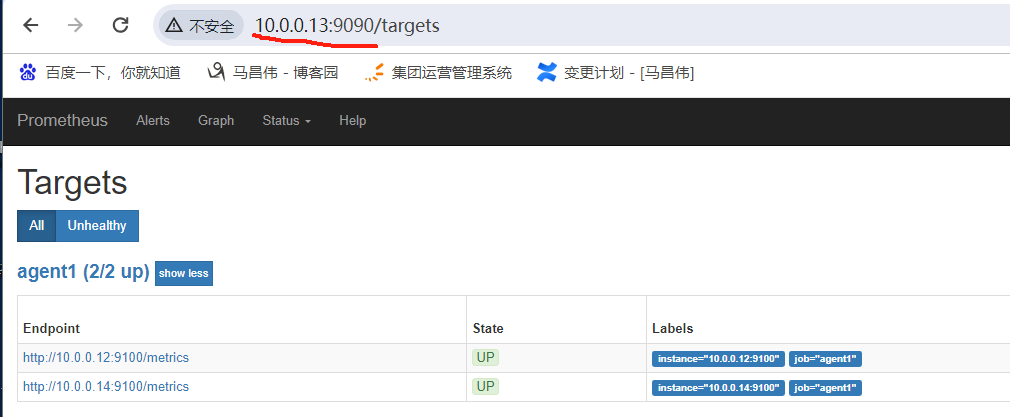

添加这个节点的监控之前

添加到agent1监控组下,然后重载配置

[root@mcw03 prometheus]# vim /etc/prometheus.yml

[root@mcw03 prometheus]# cat /etc/prometheus.yml

# my global config

global:

scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.

evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

# scrape_timeout is set to the global default (10s). # Alertmanager configuration

alerting:

alertmanagers:

- static_configs:

- targets:

# - alertmanager:9093 # Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

rule_files:

# - "first_rules.yml"

# - "second_rules.yml" # A scrape configuration containing exactly one endpoint to scrape:

# Here it's Prometheus itself.

scrape_configs:

# The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.

- job_name: 'prometheus' # metrics_path defaults to '/metrics'

# scheme defaults to 'http'. static_configs:

- targets: ['localhost:9090']

- job_name: 'agent1'

static_configs:

- targets: ['10.0.0.14:9100']

- targets: ['10.0.0.12:9100']

- job_name: 'promserver'

static_configs:

- targets: ['10.0.0.13:9100']

- job_name: 'server_mariadb'

static_configs:

- targets: ['10.0.0.13:9104']

[root@mcw03 prometheus]# curl -X POST http://localhost:9090/-/reload

[root@mcw03 prometheus]#

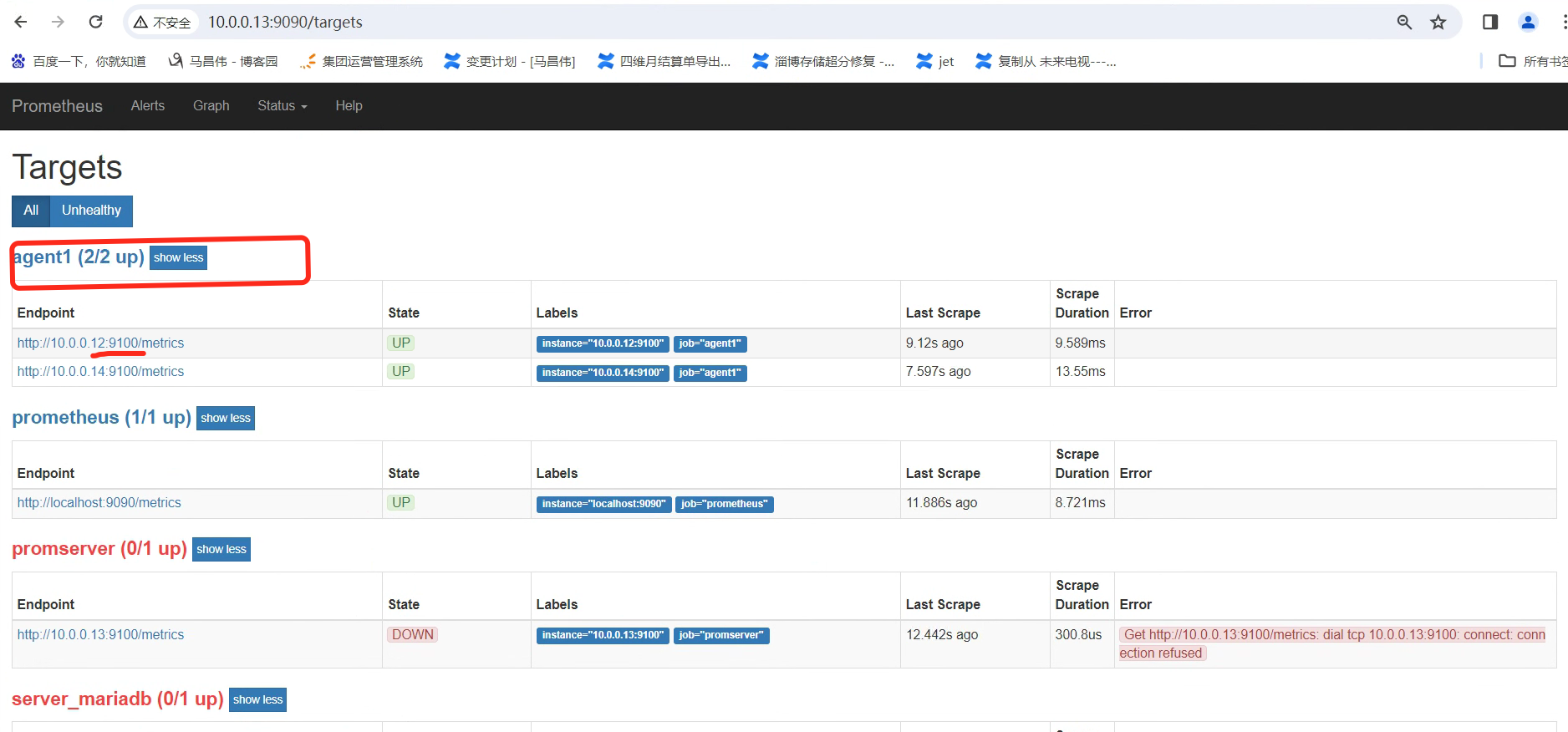

刷新一下,可以看到监控组下有两个了,是job name来分组的

其它

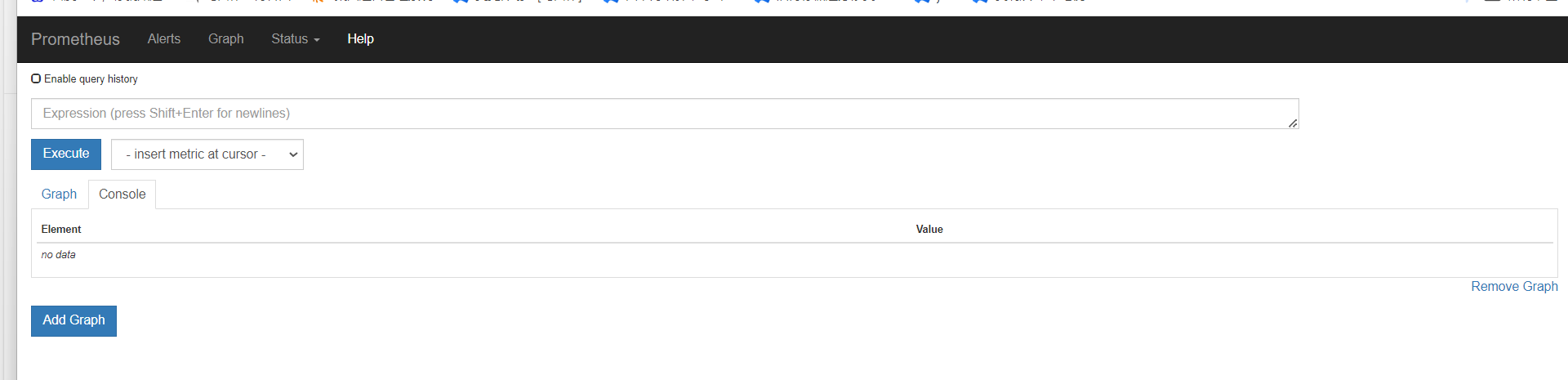

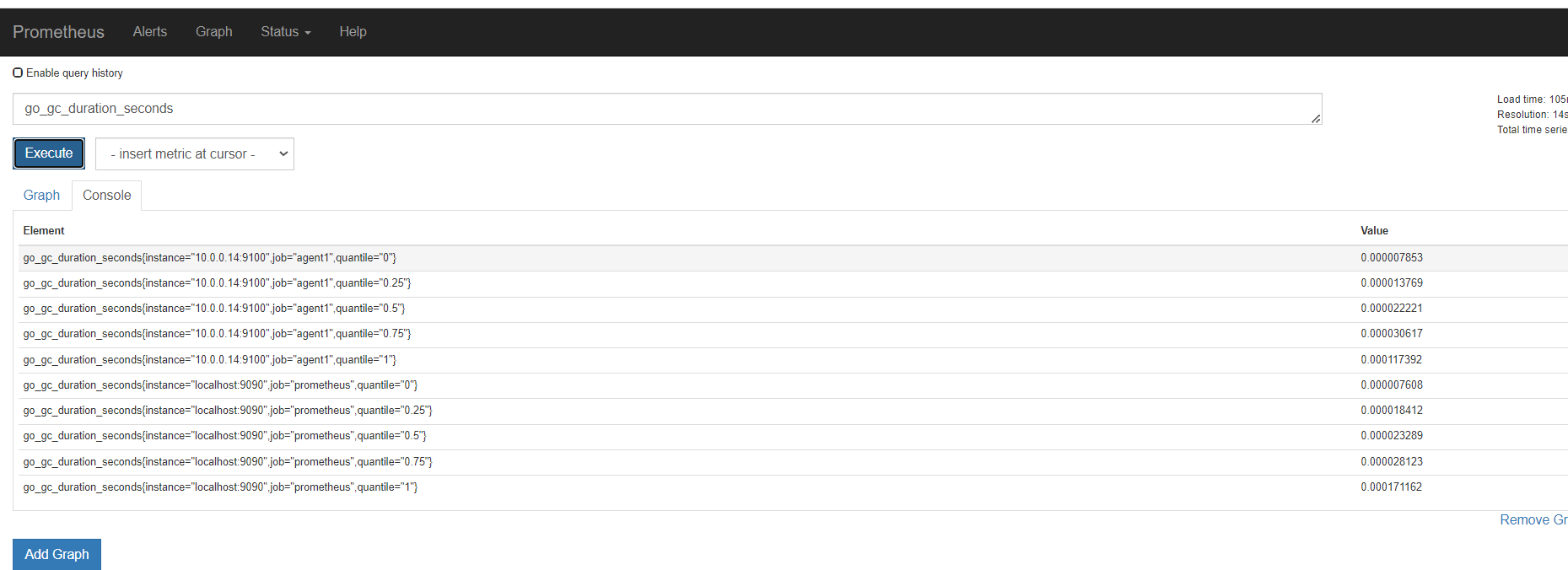

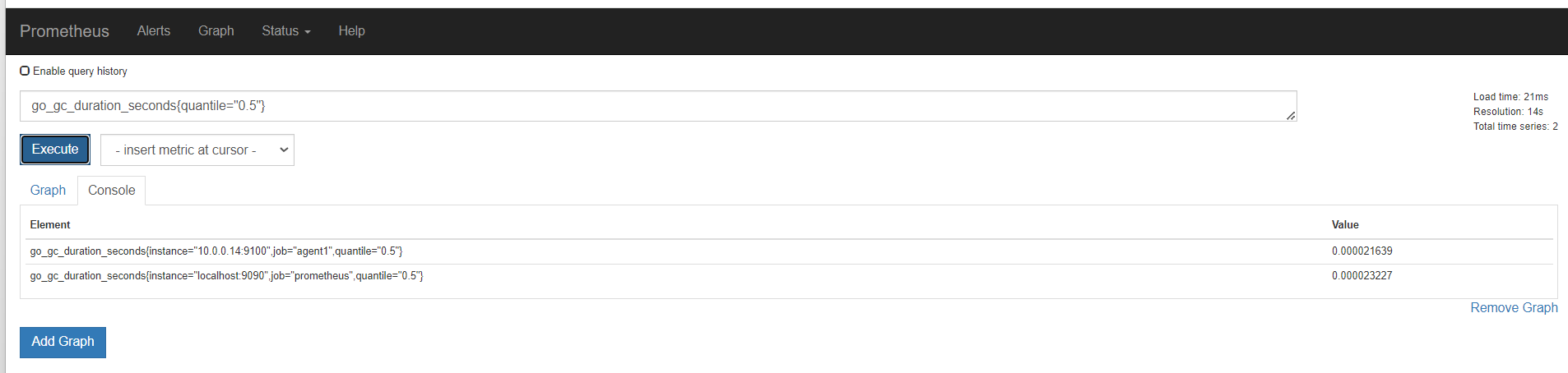

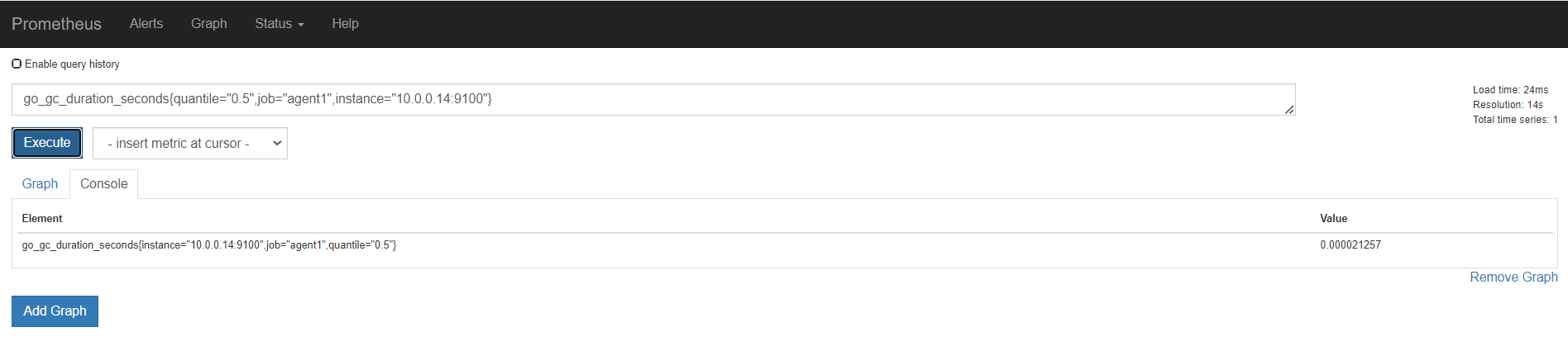

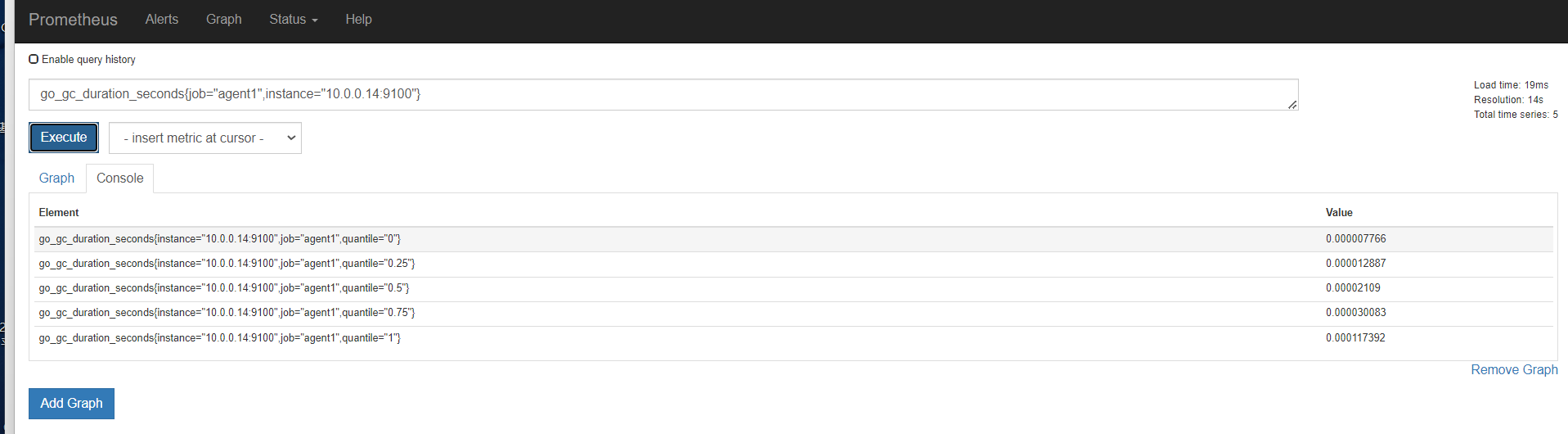

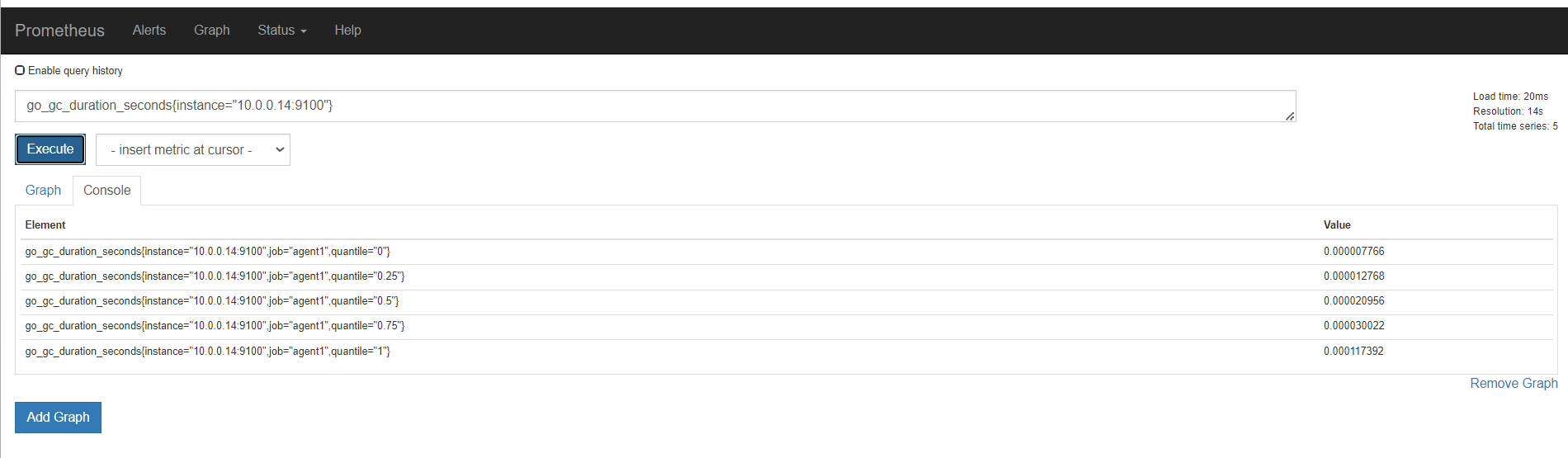

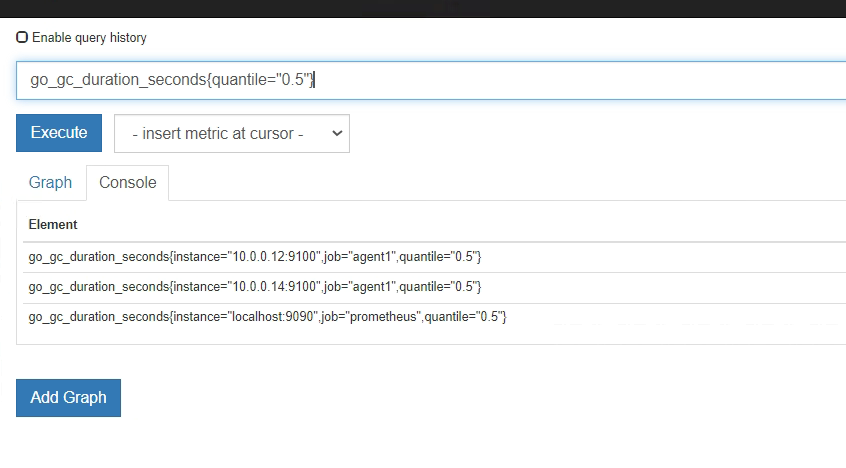

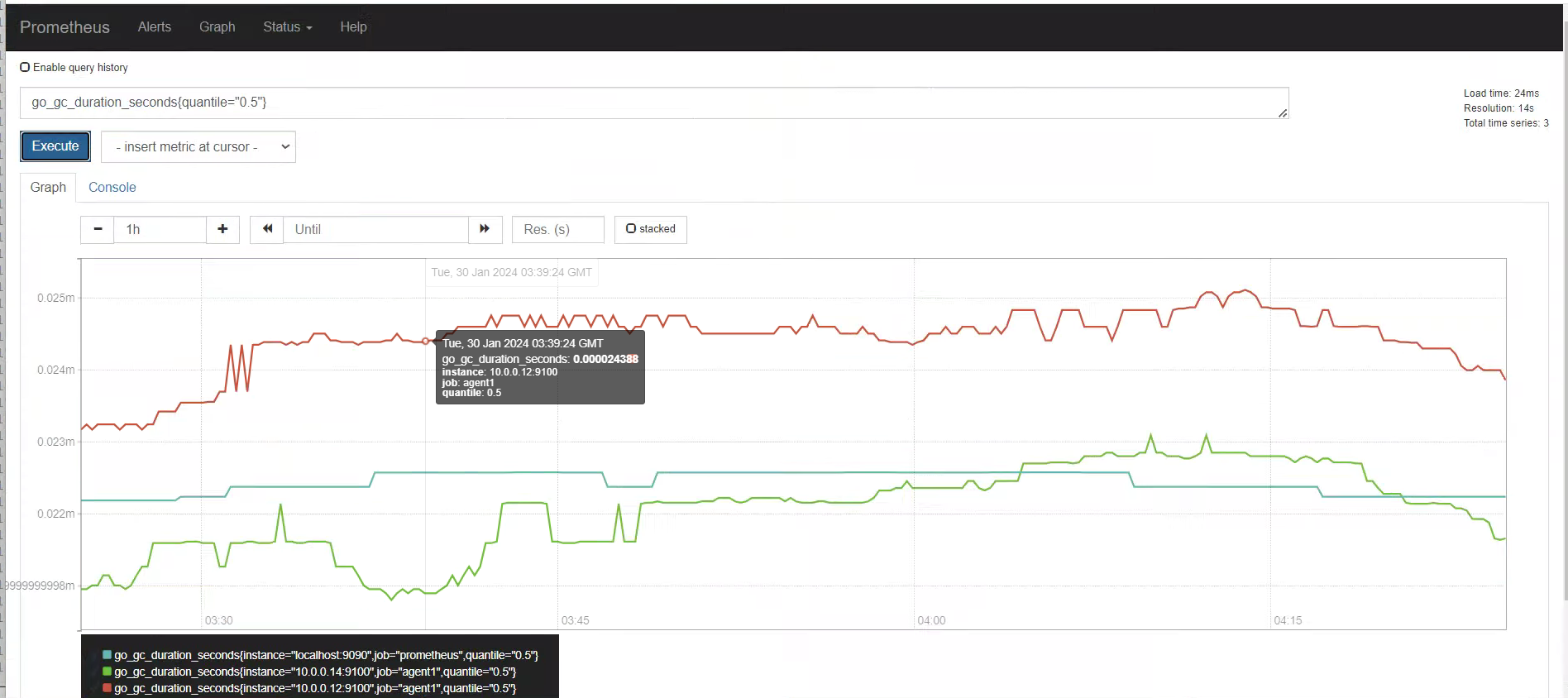

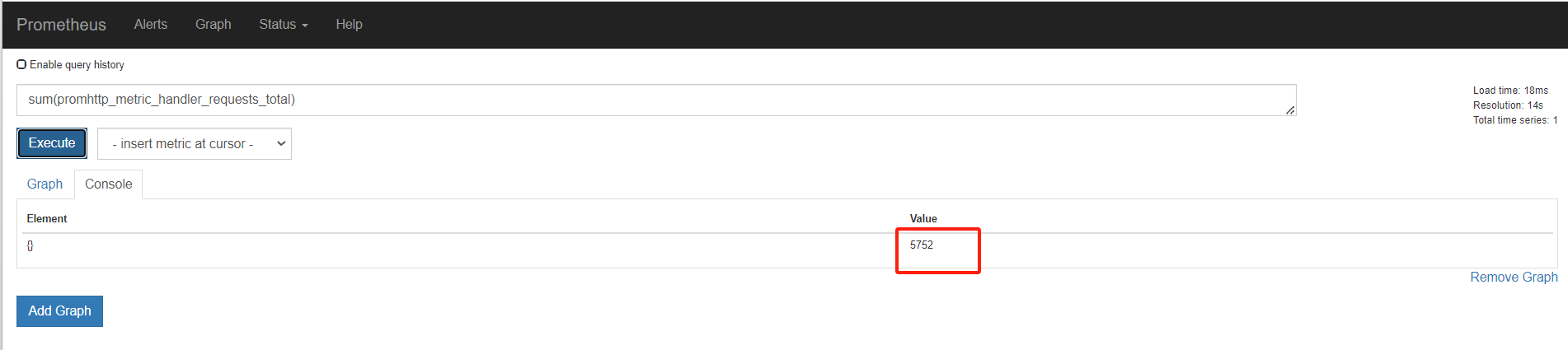

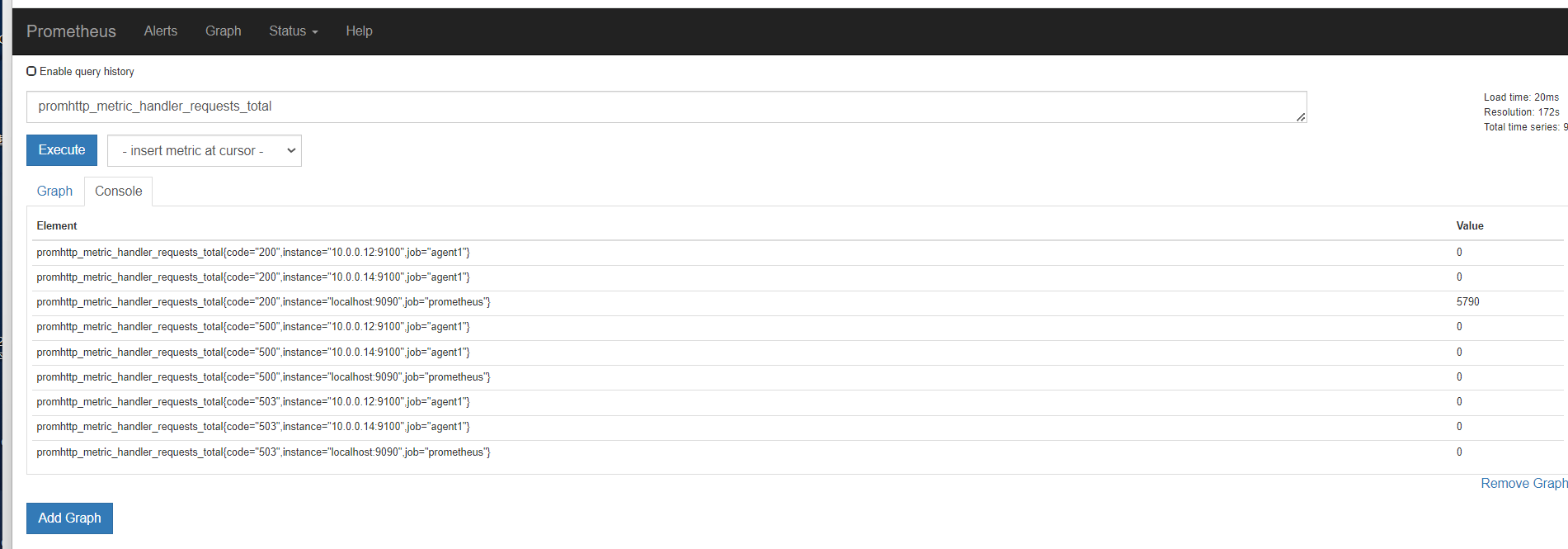

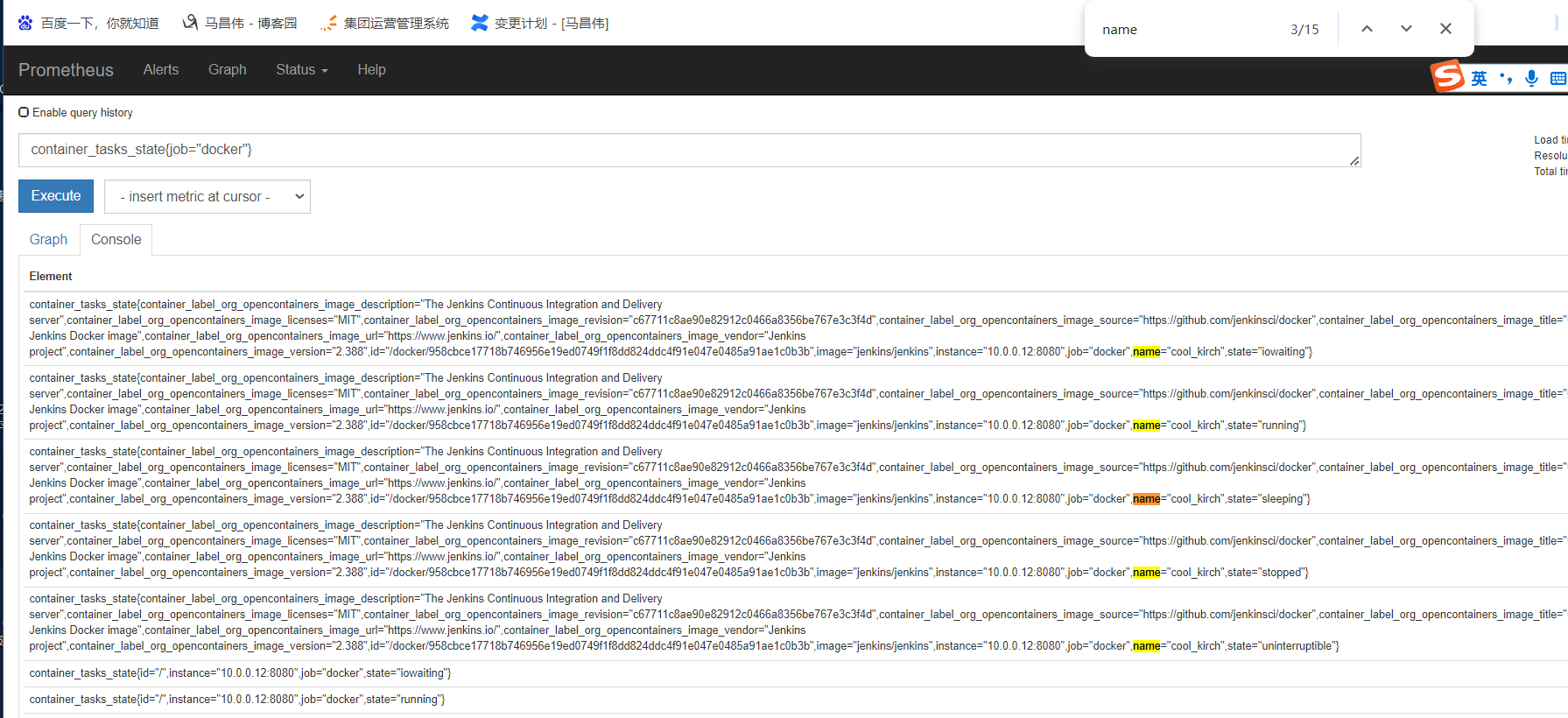

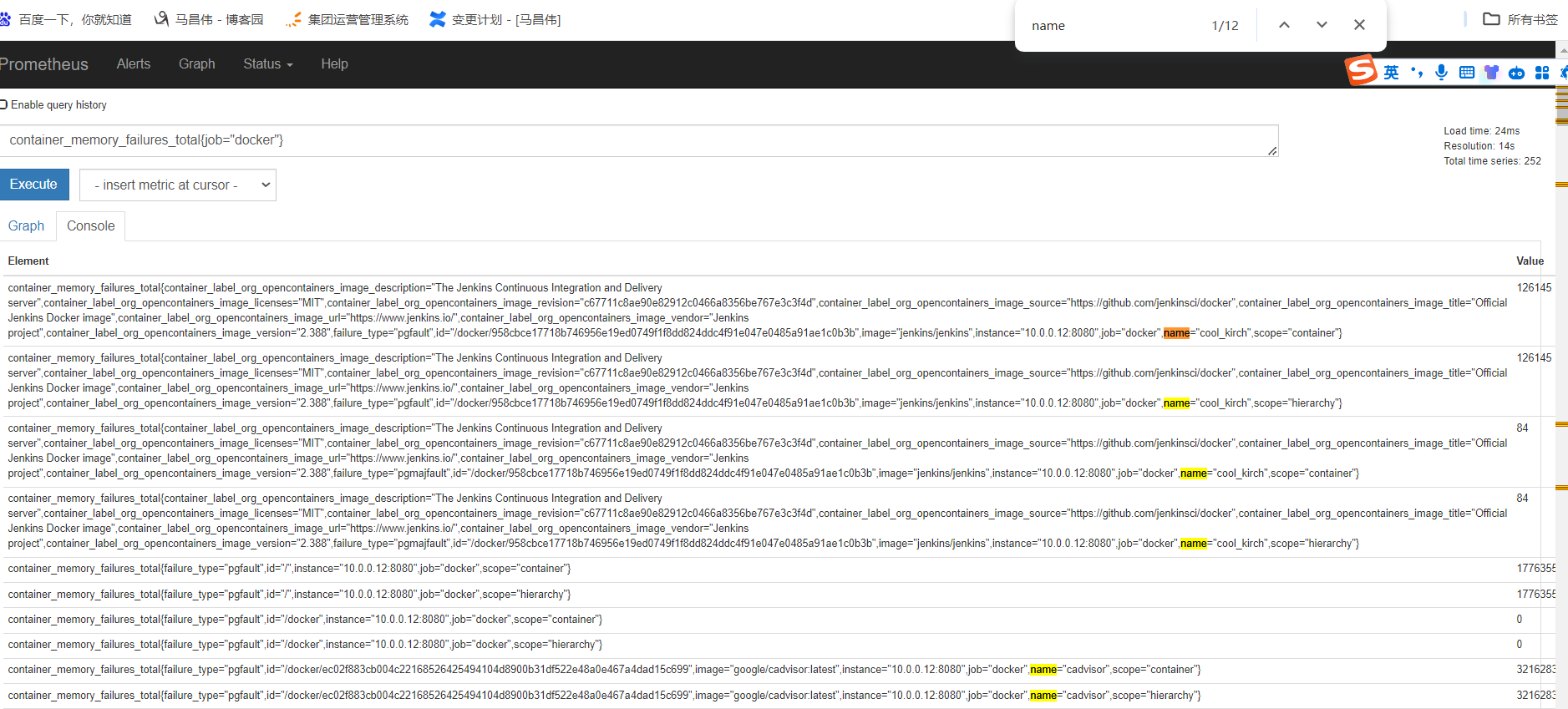

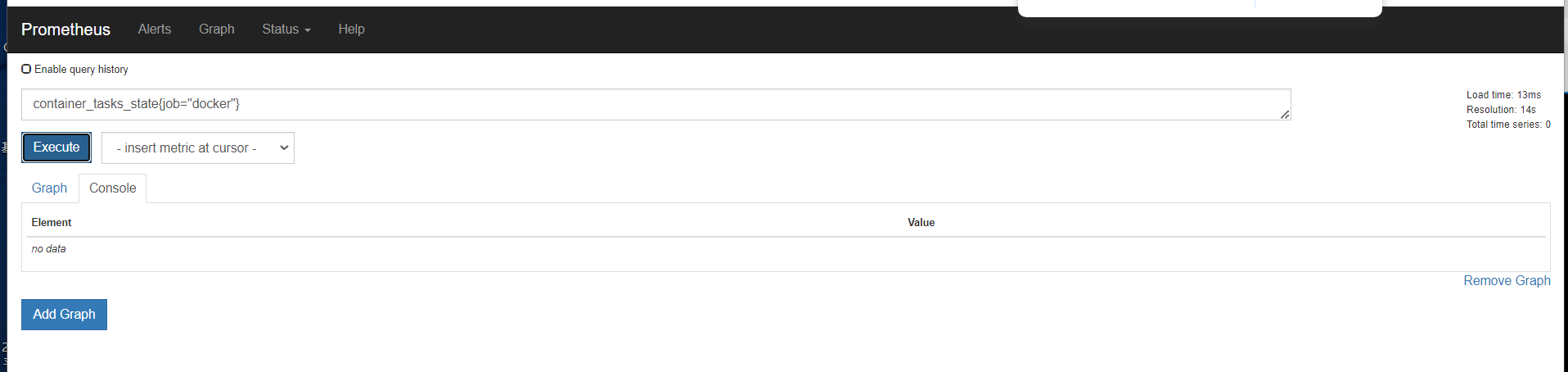

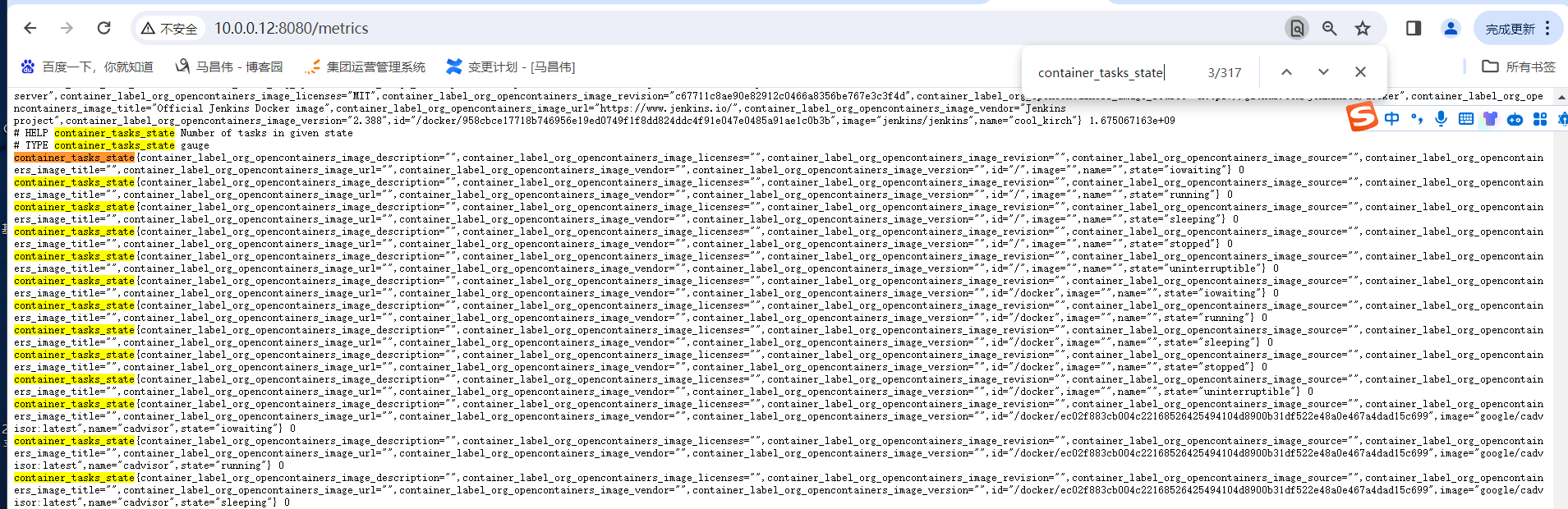

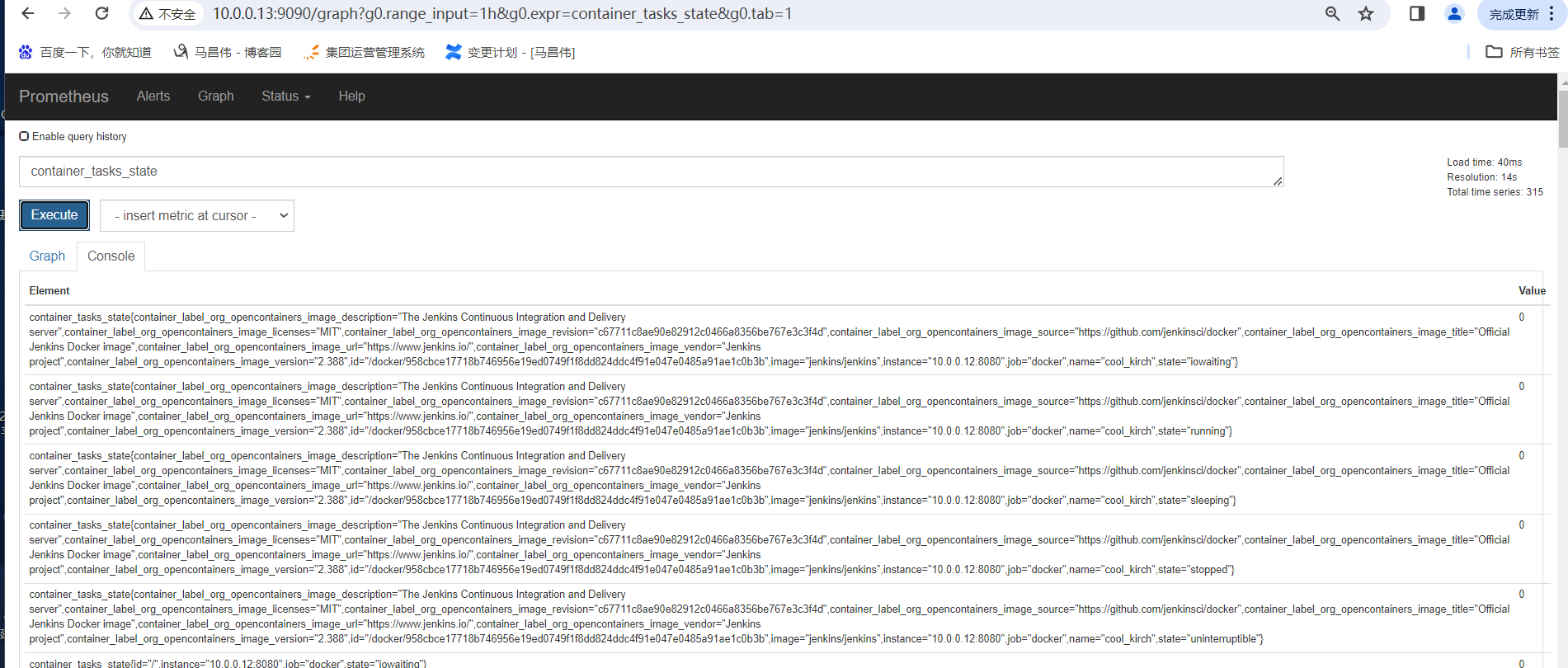

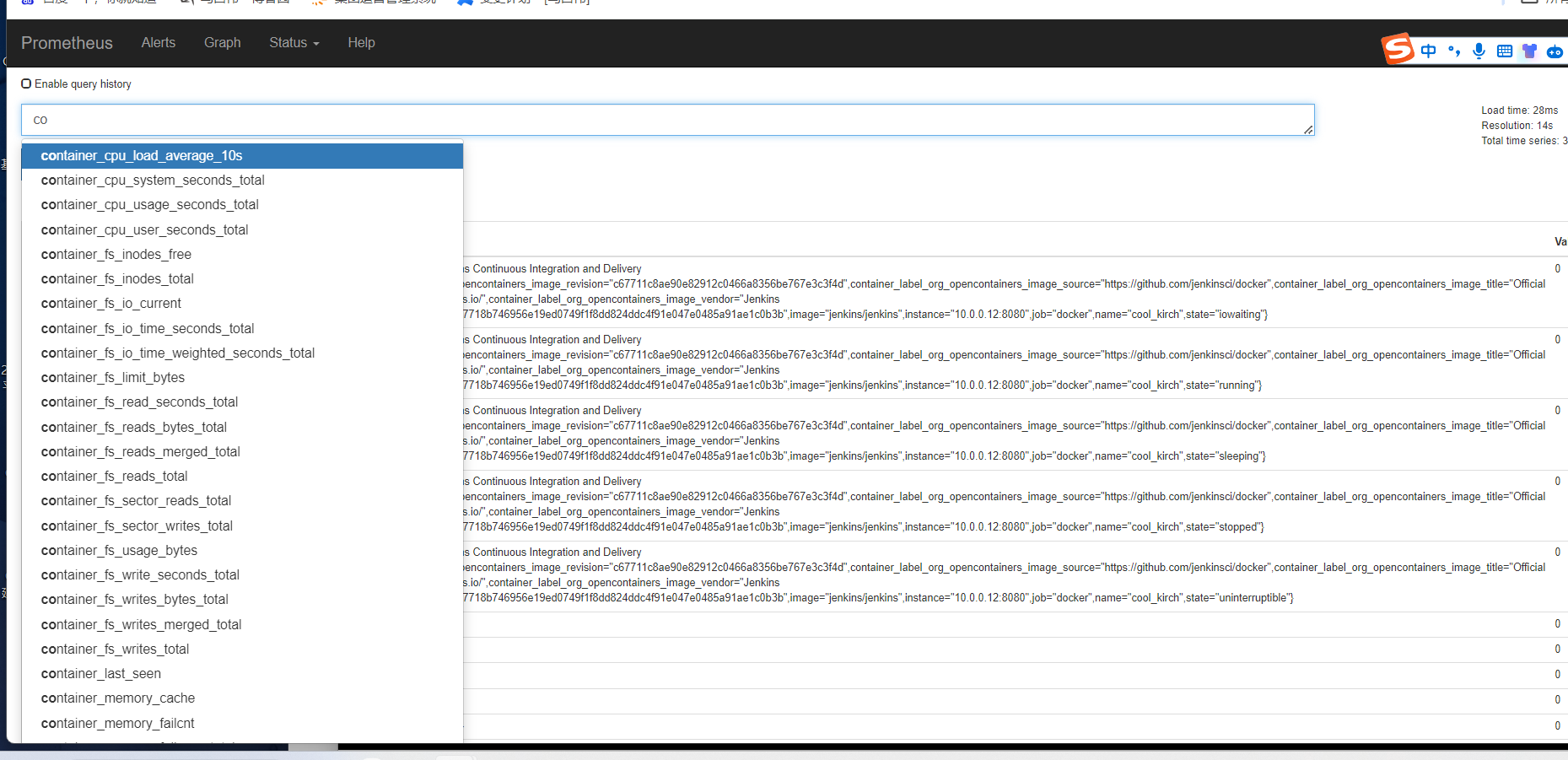

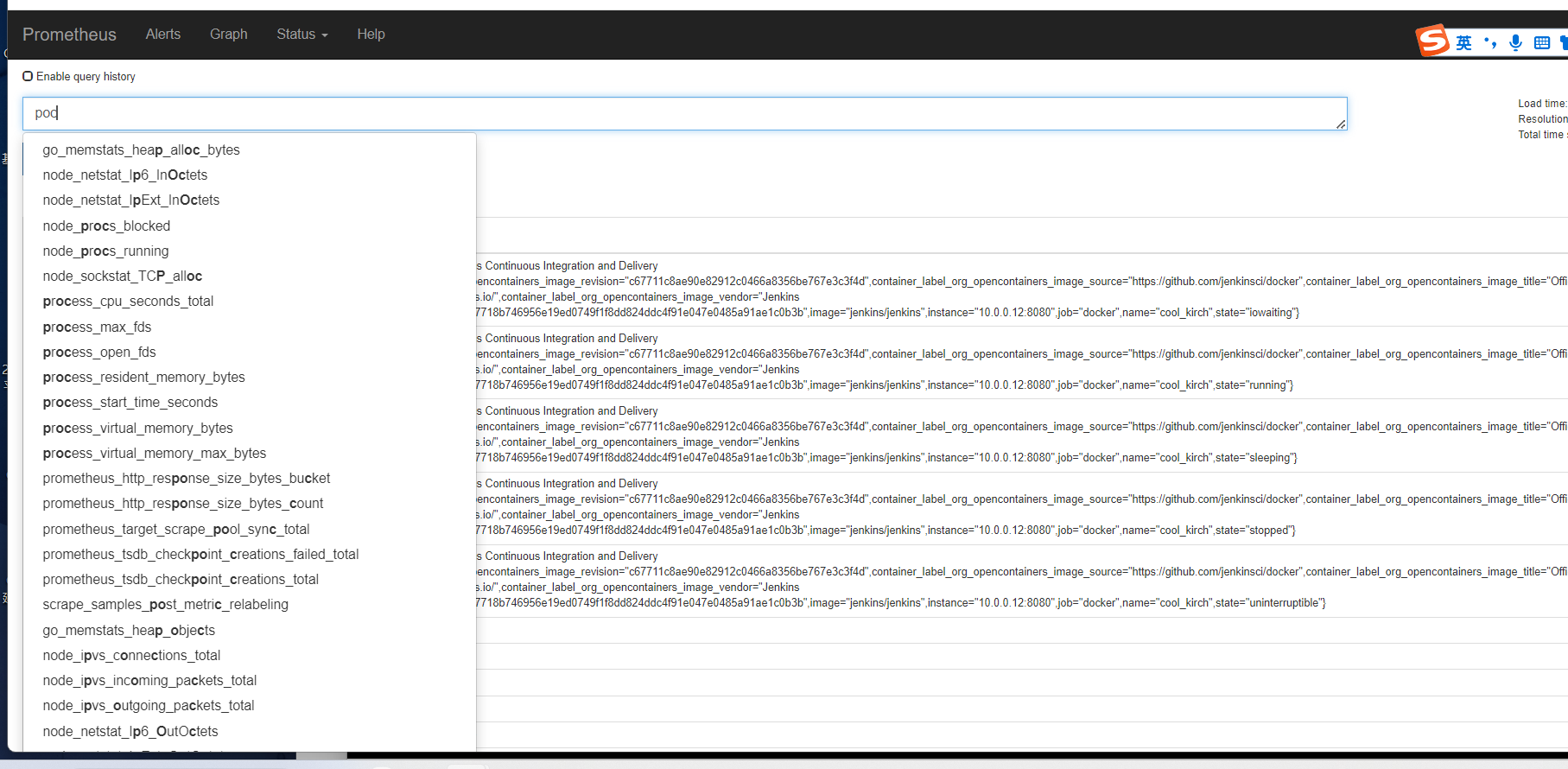

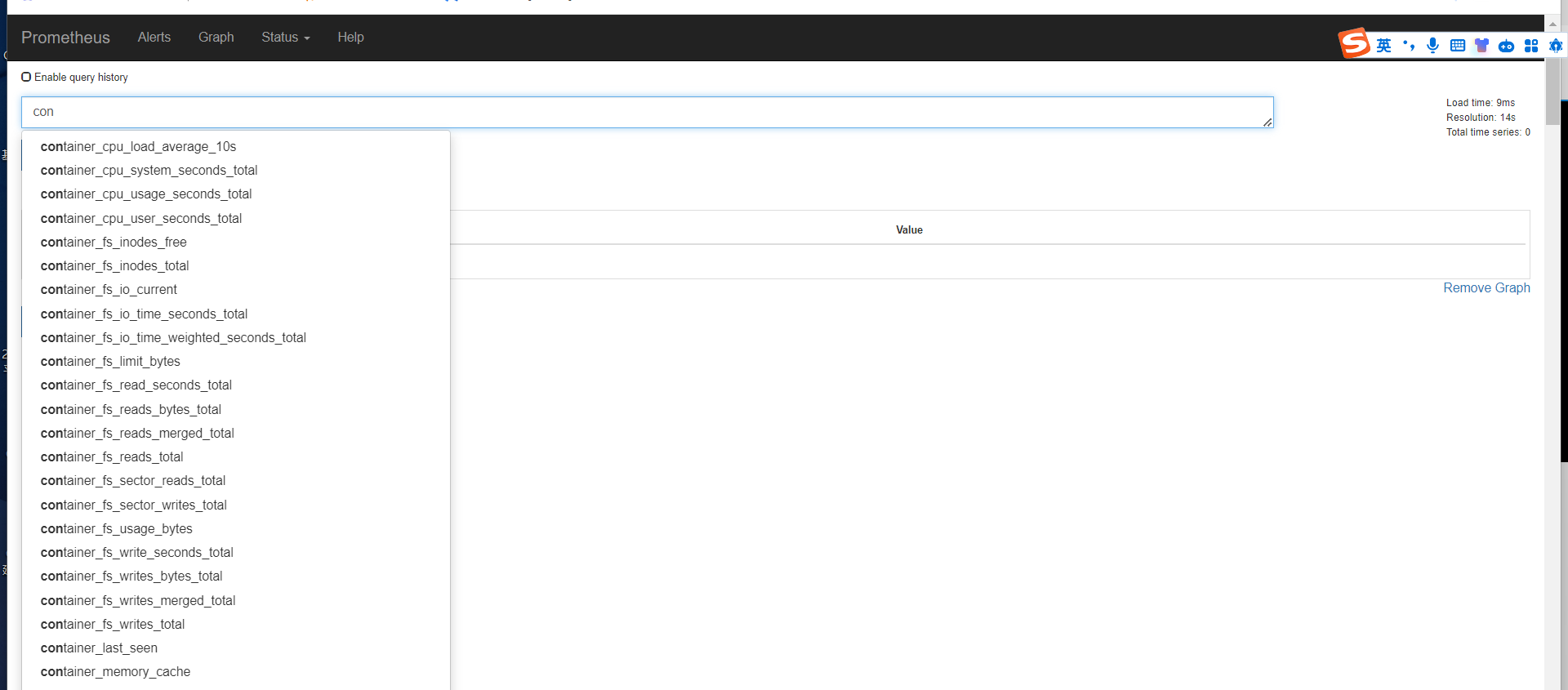

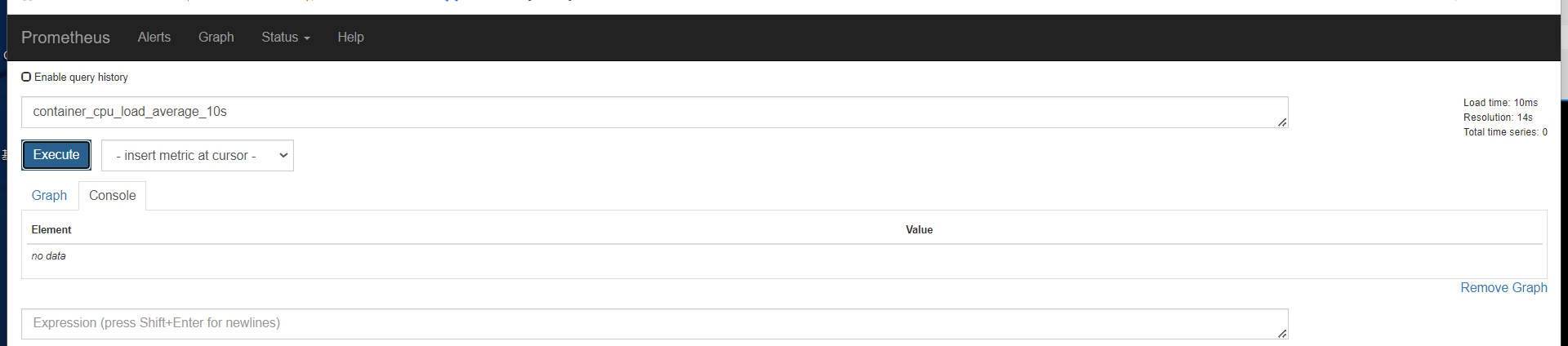

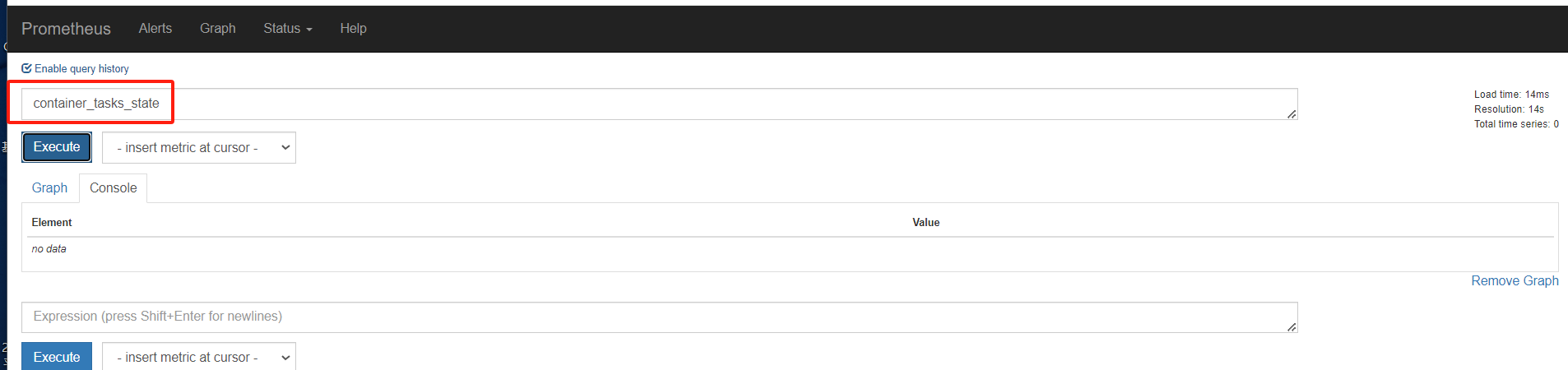

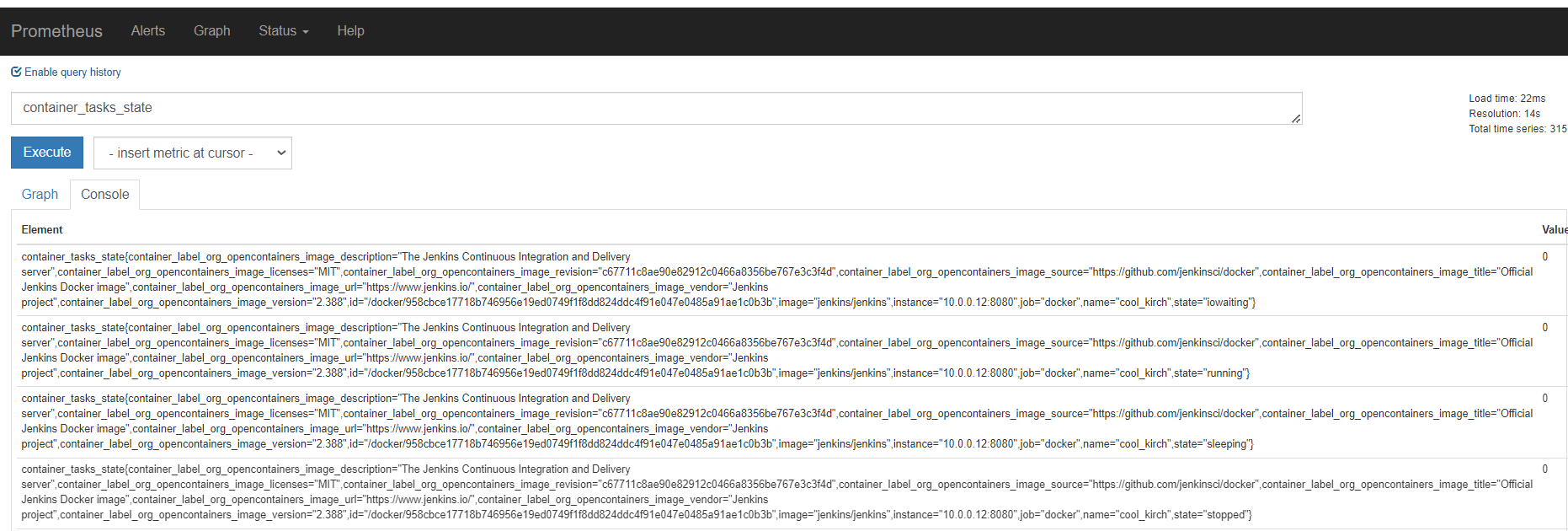

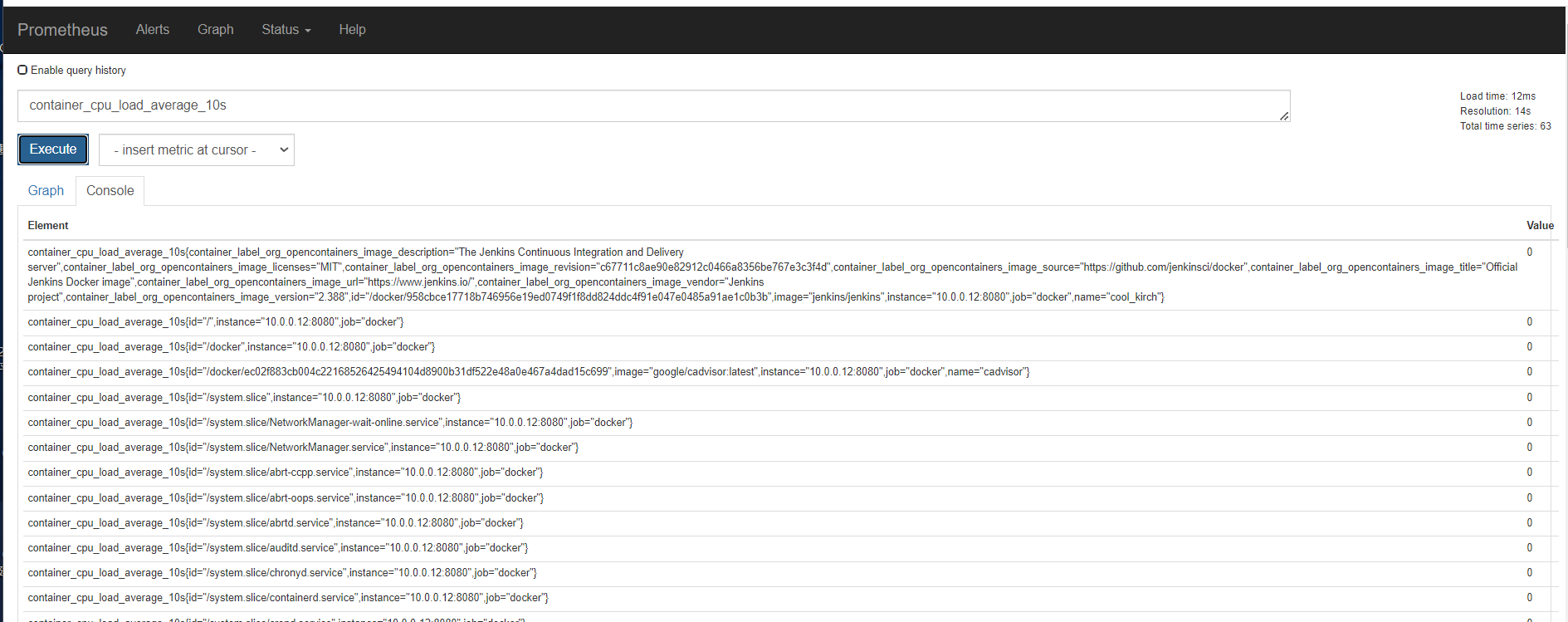

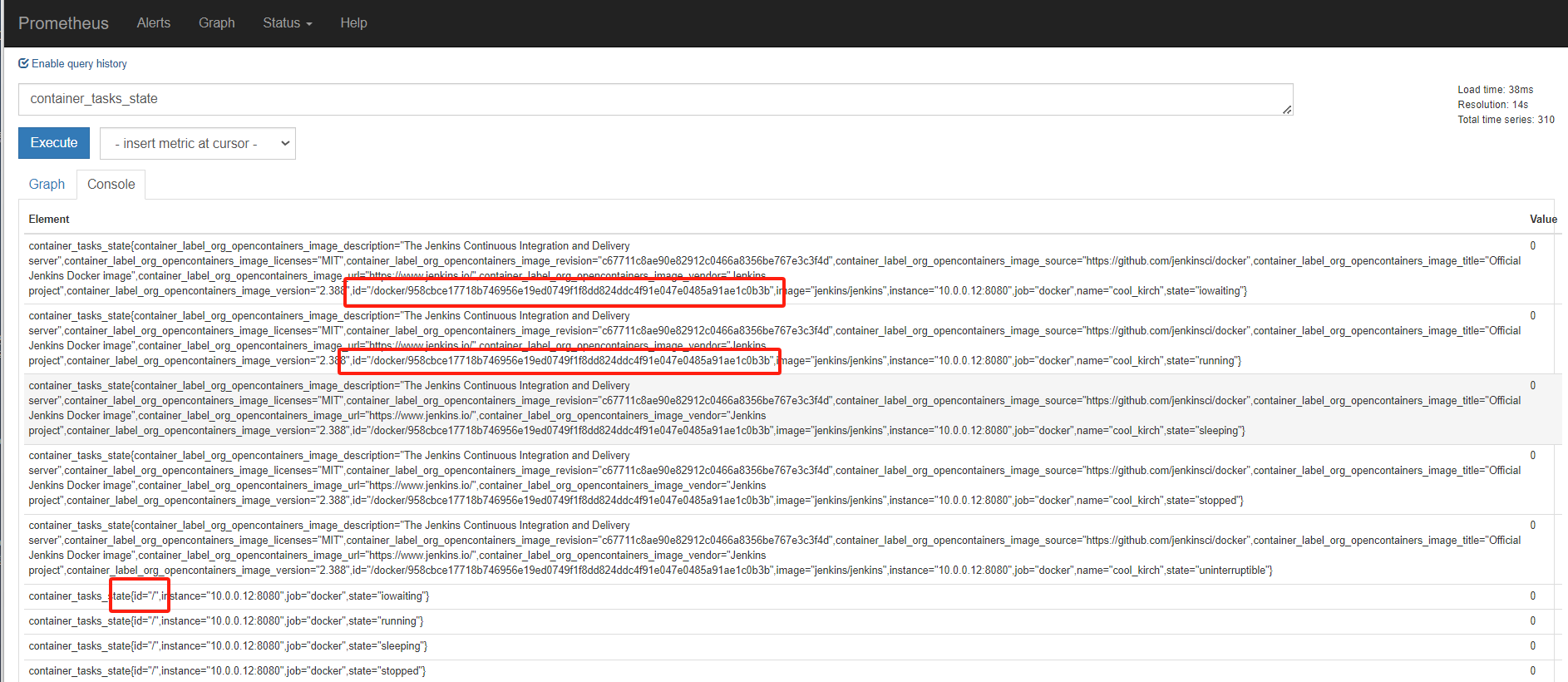

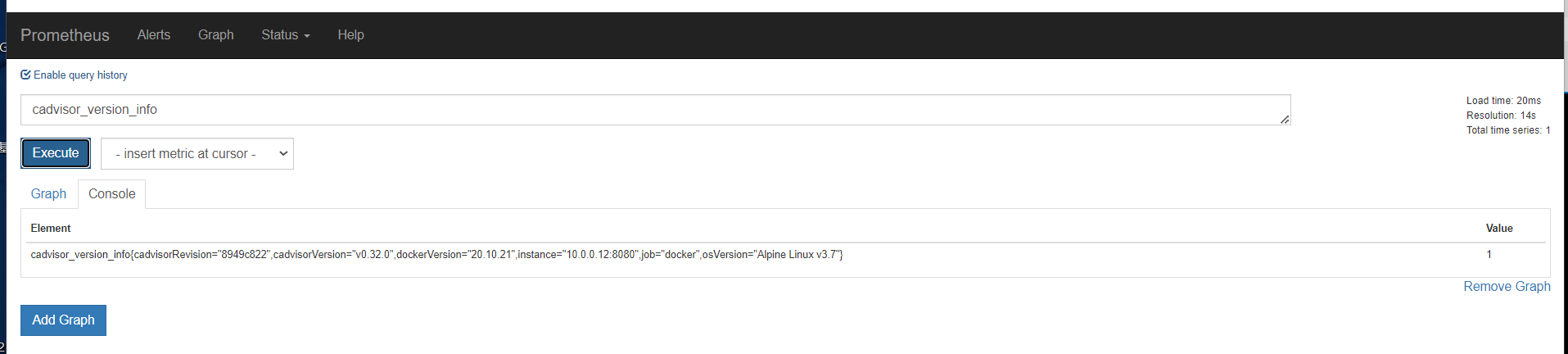

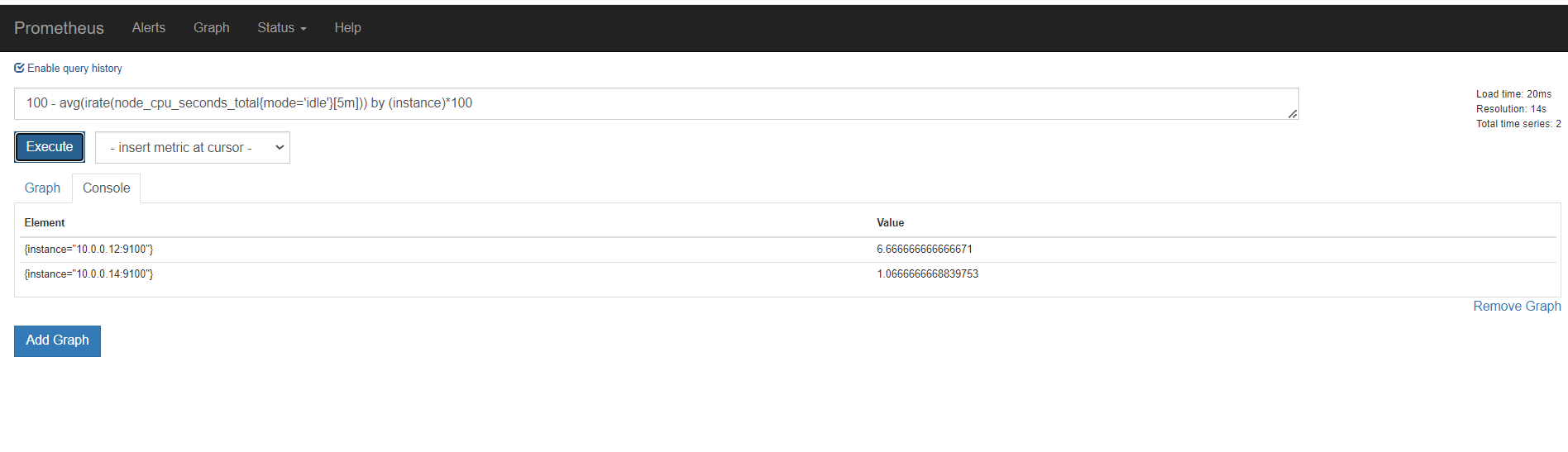

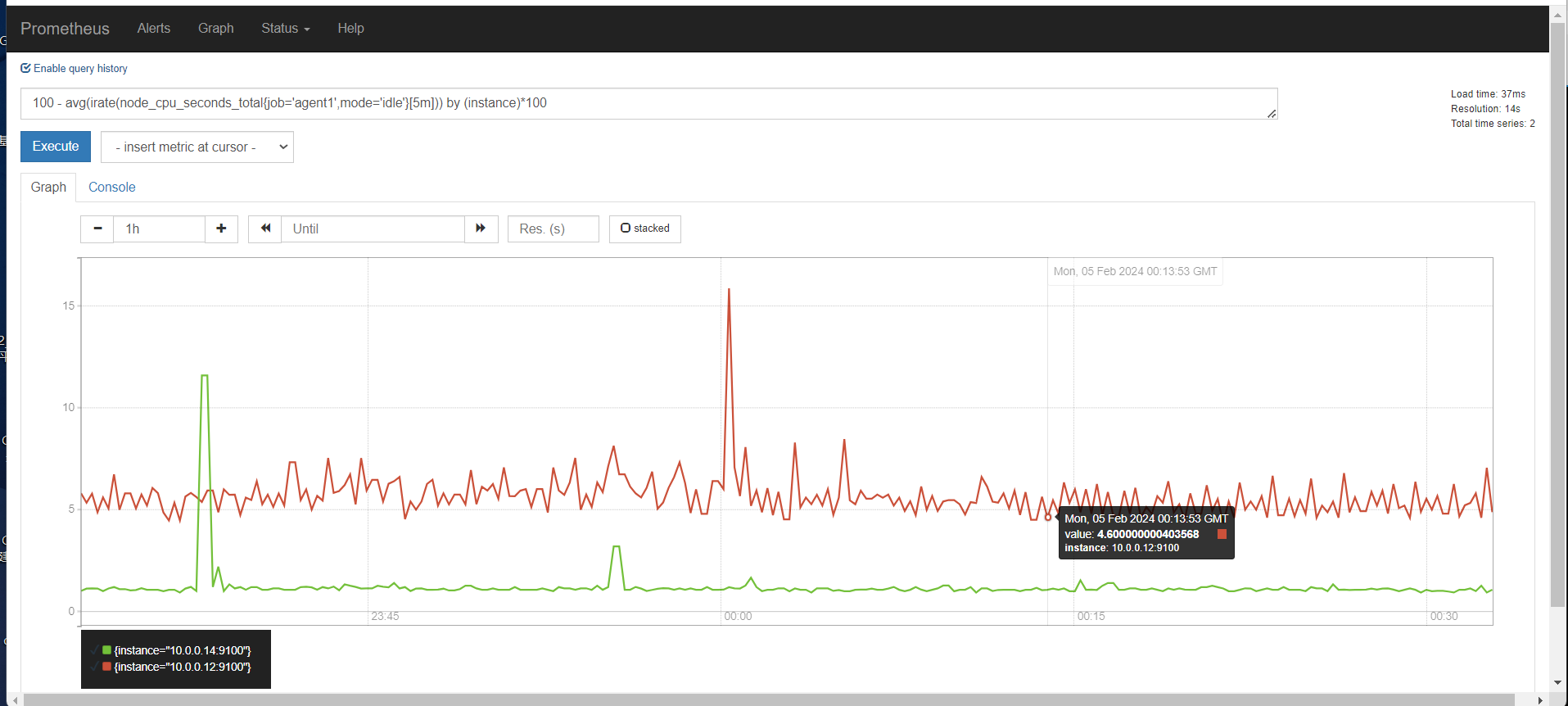

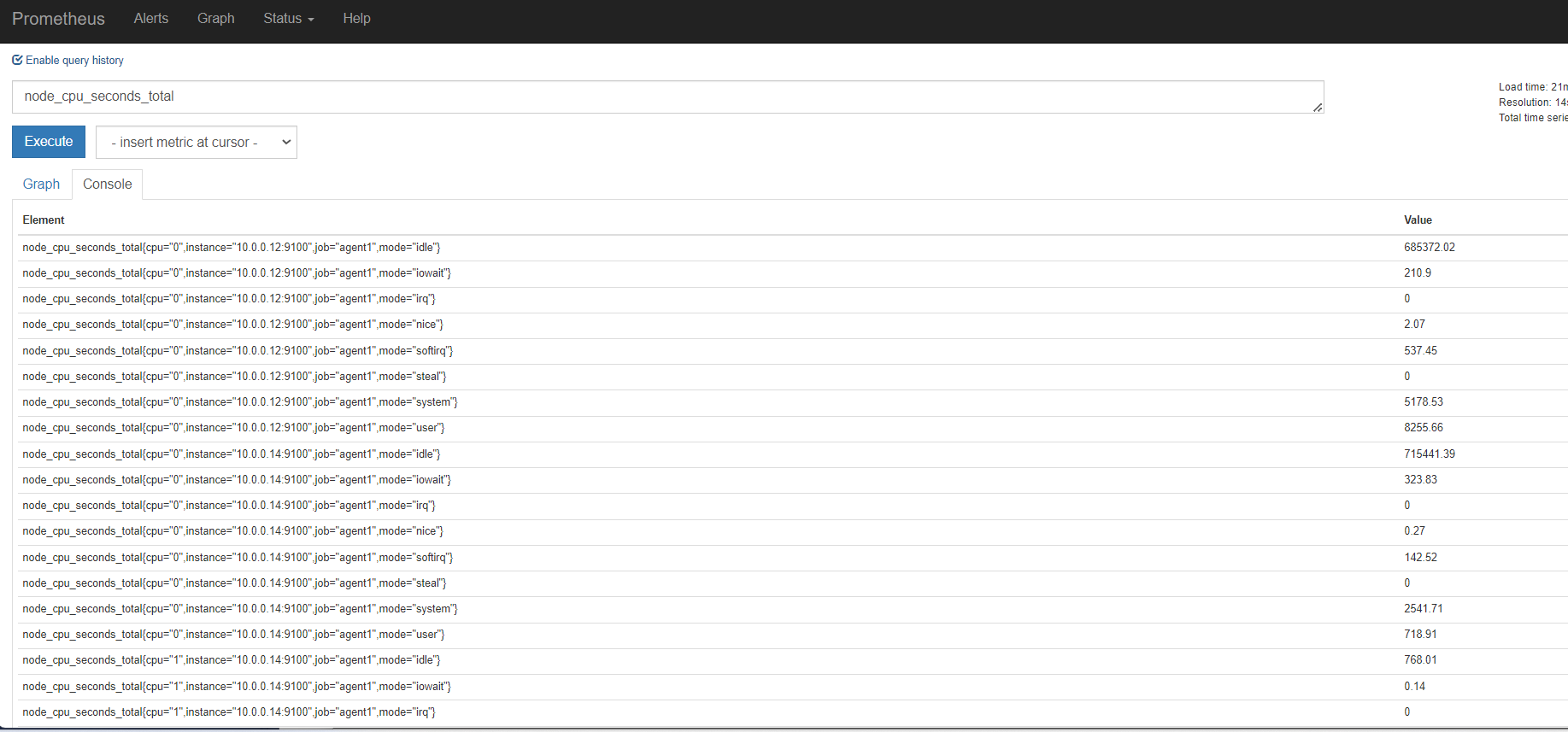

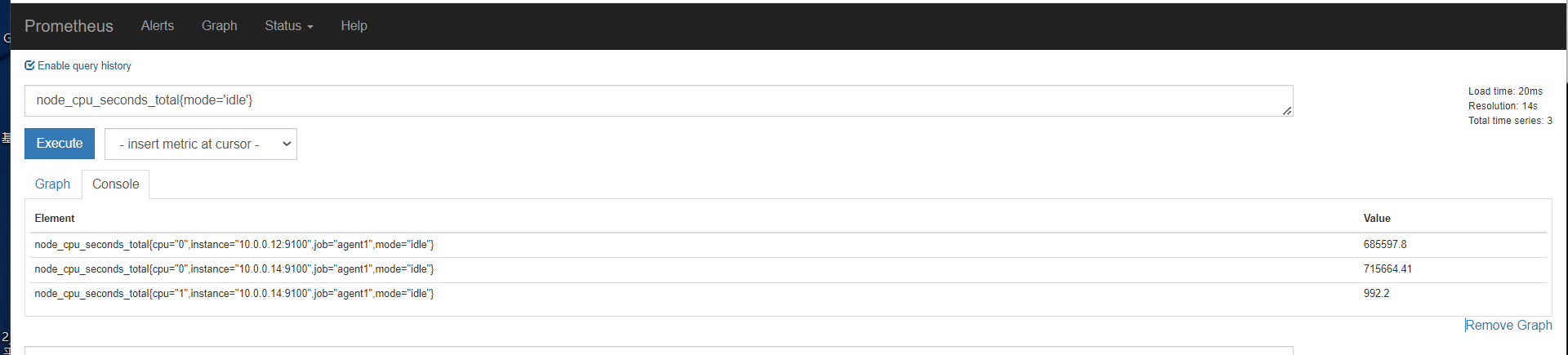

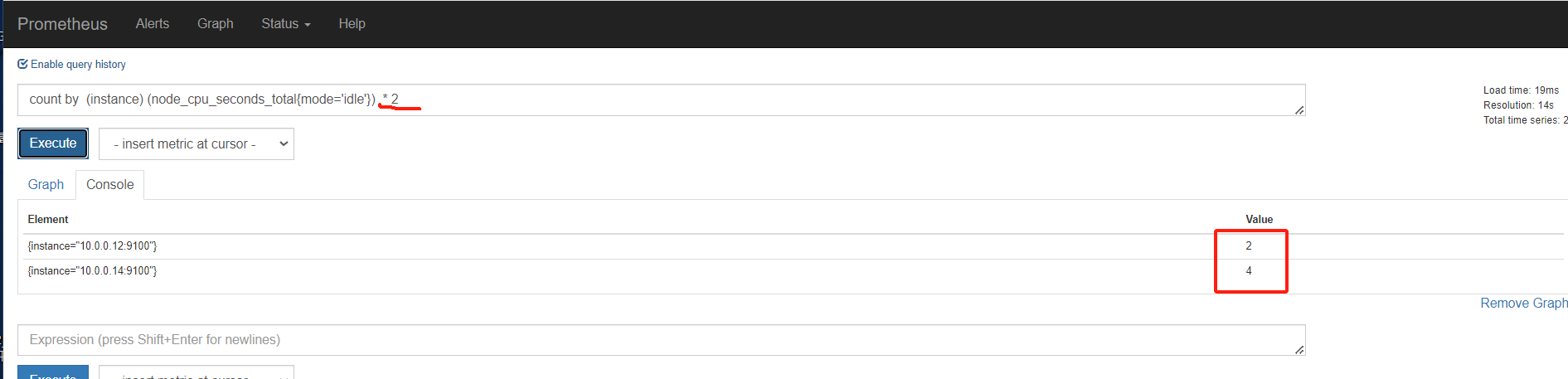

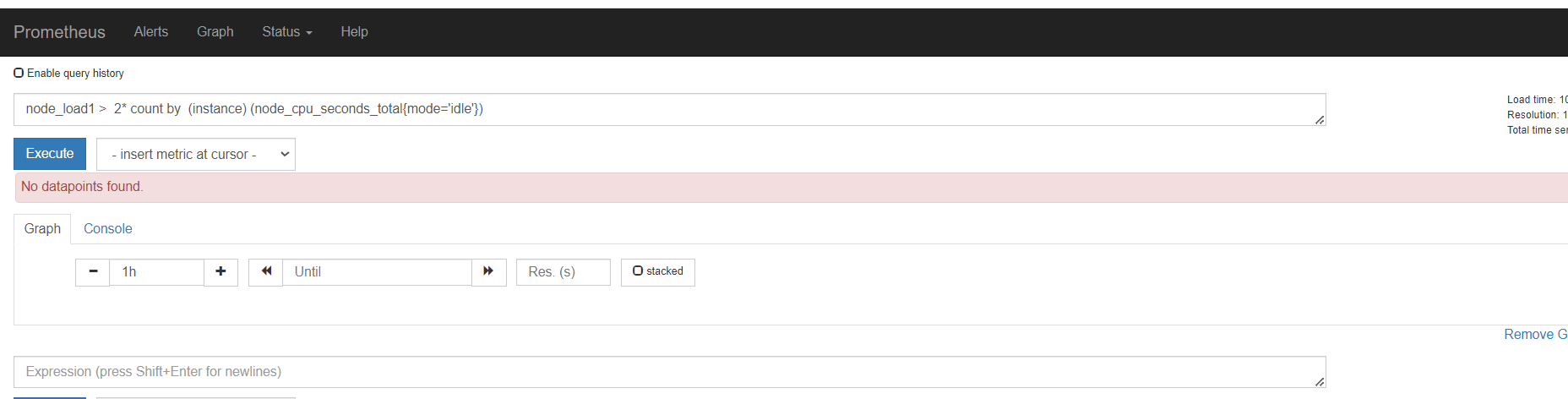

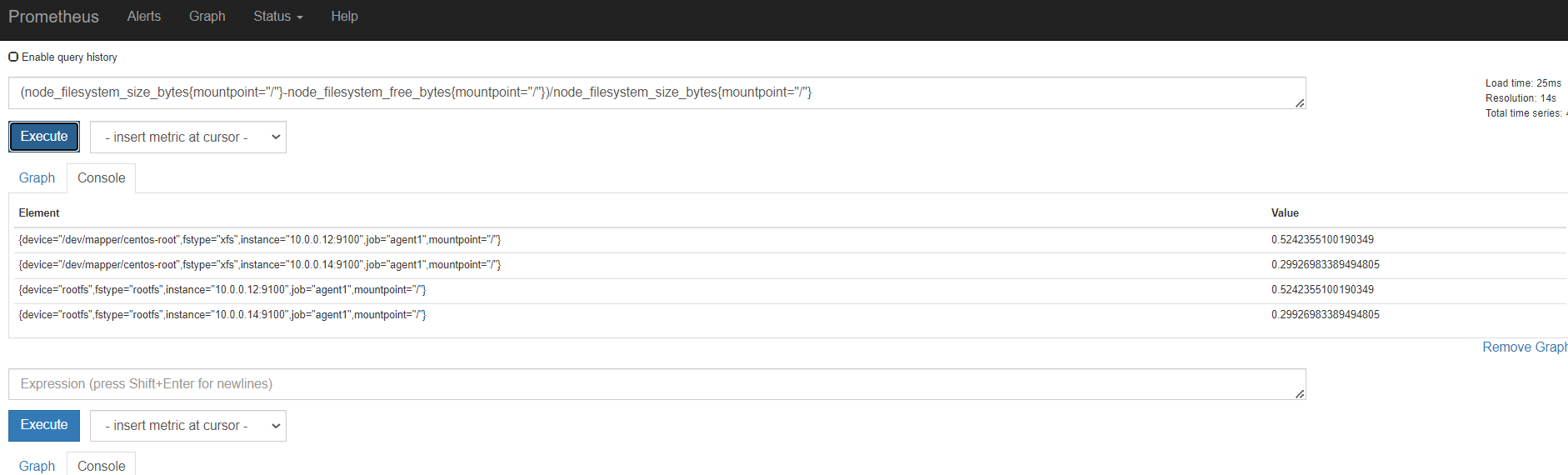

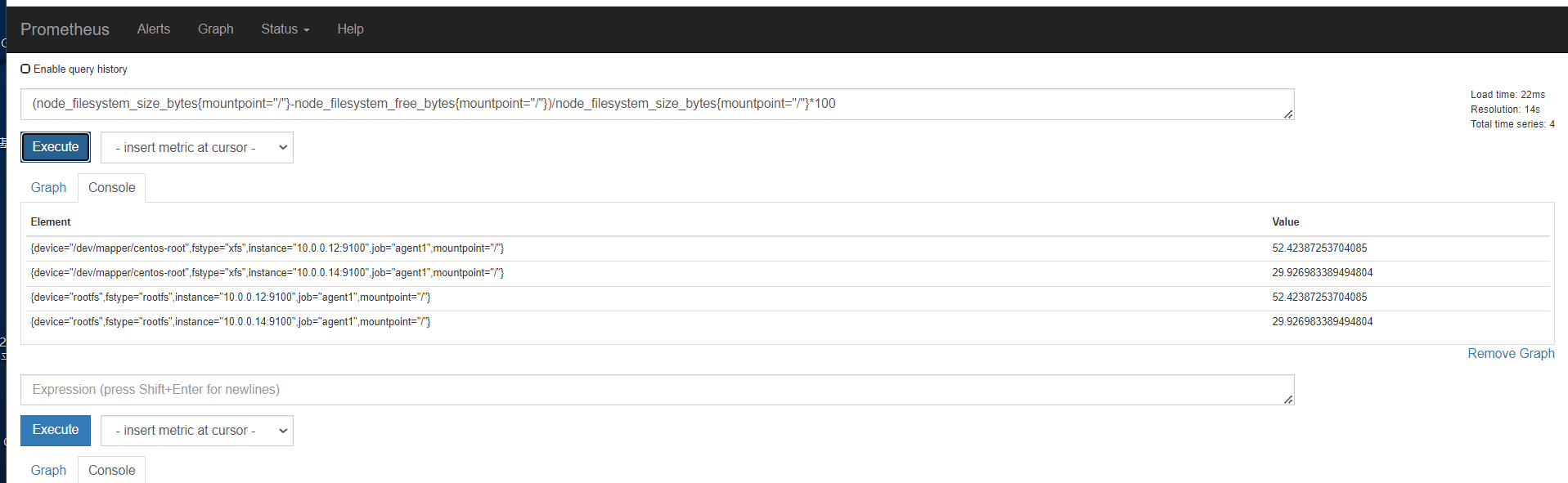

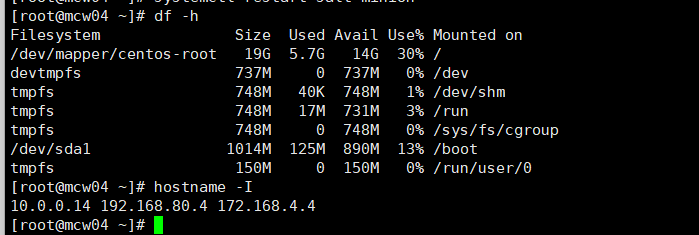

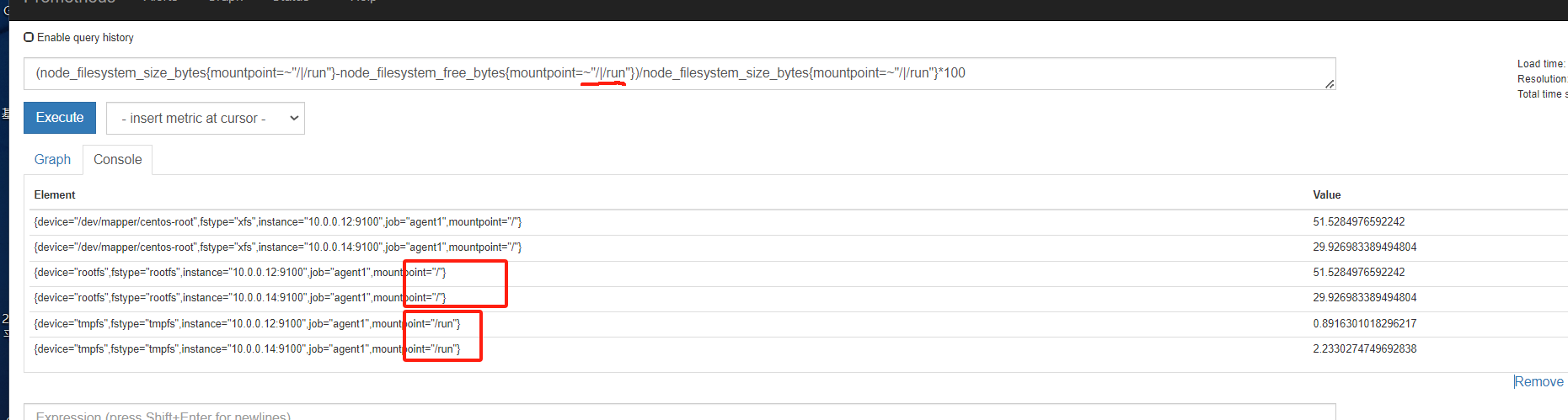

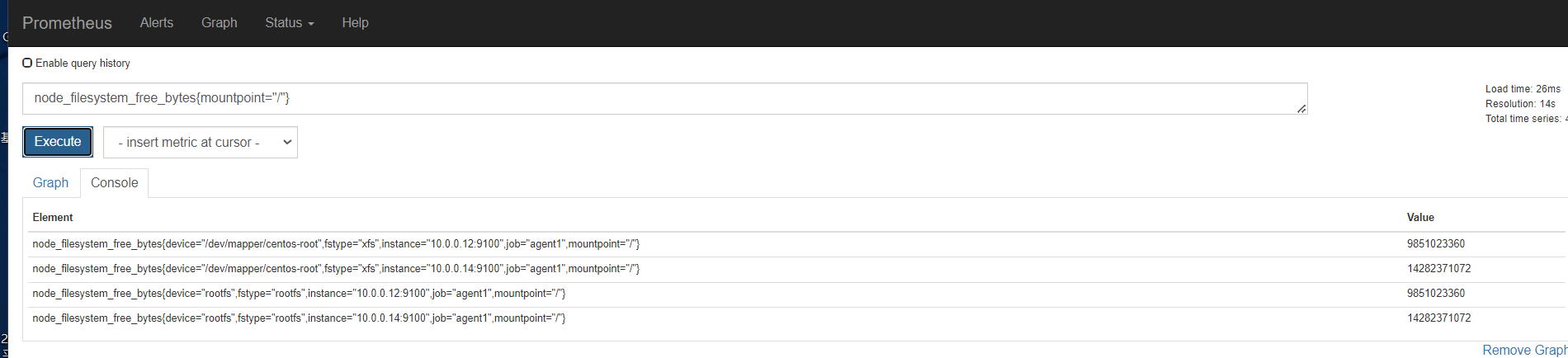

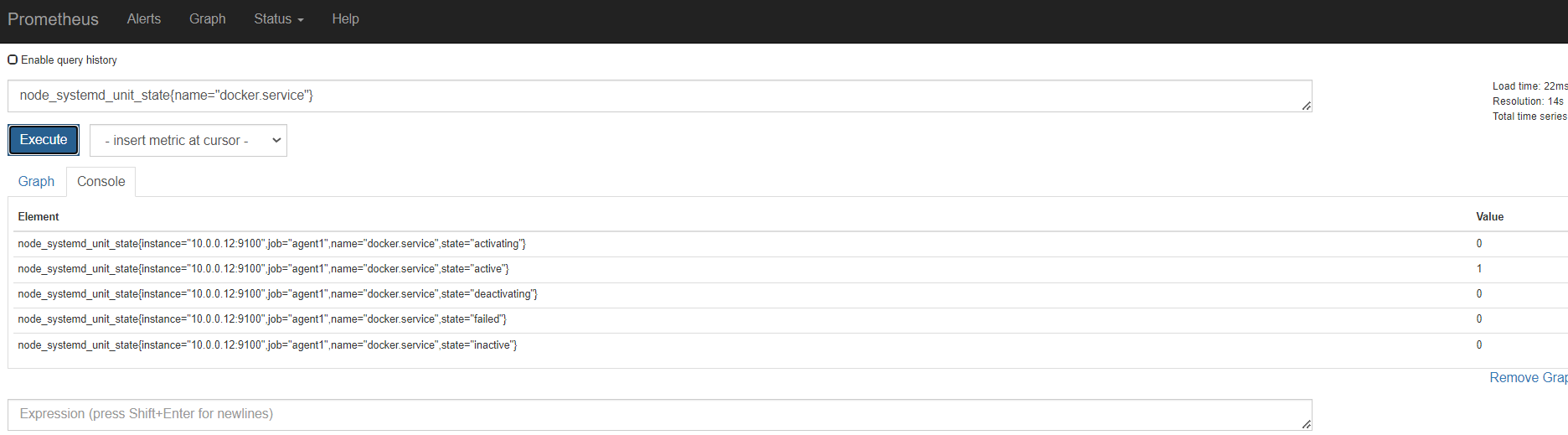

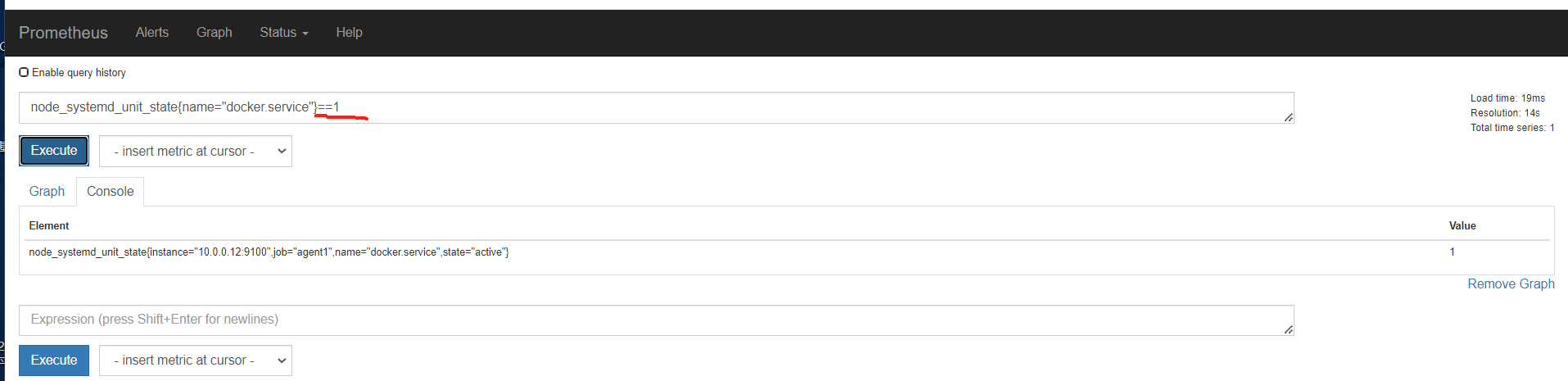

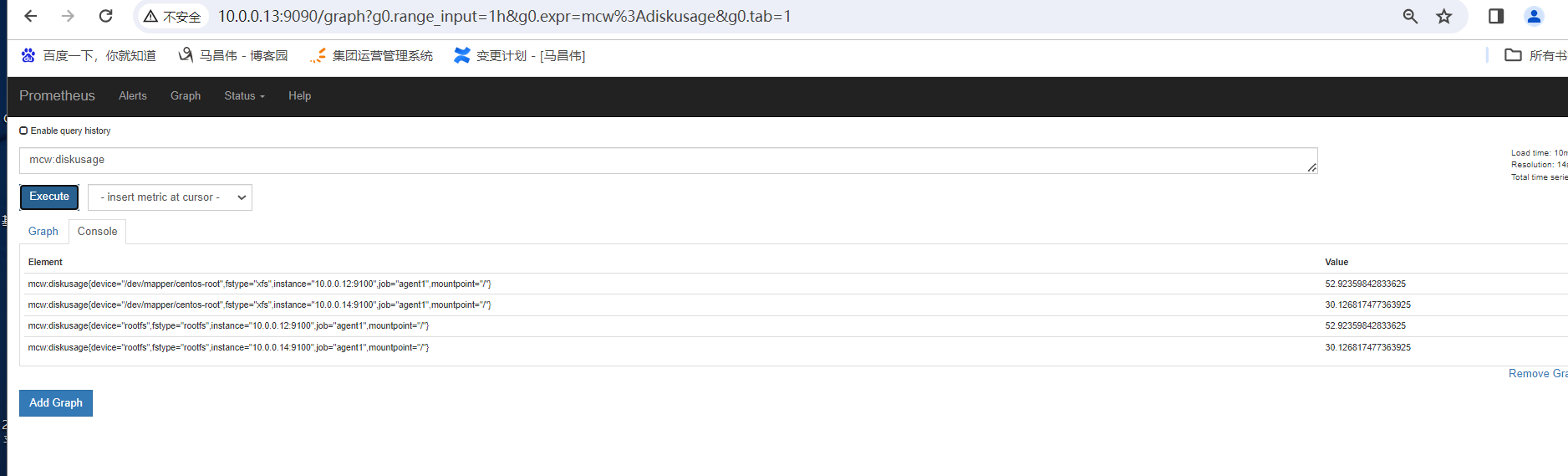

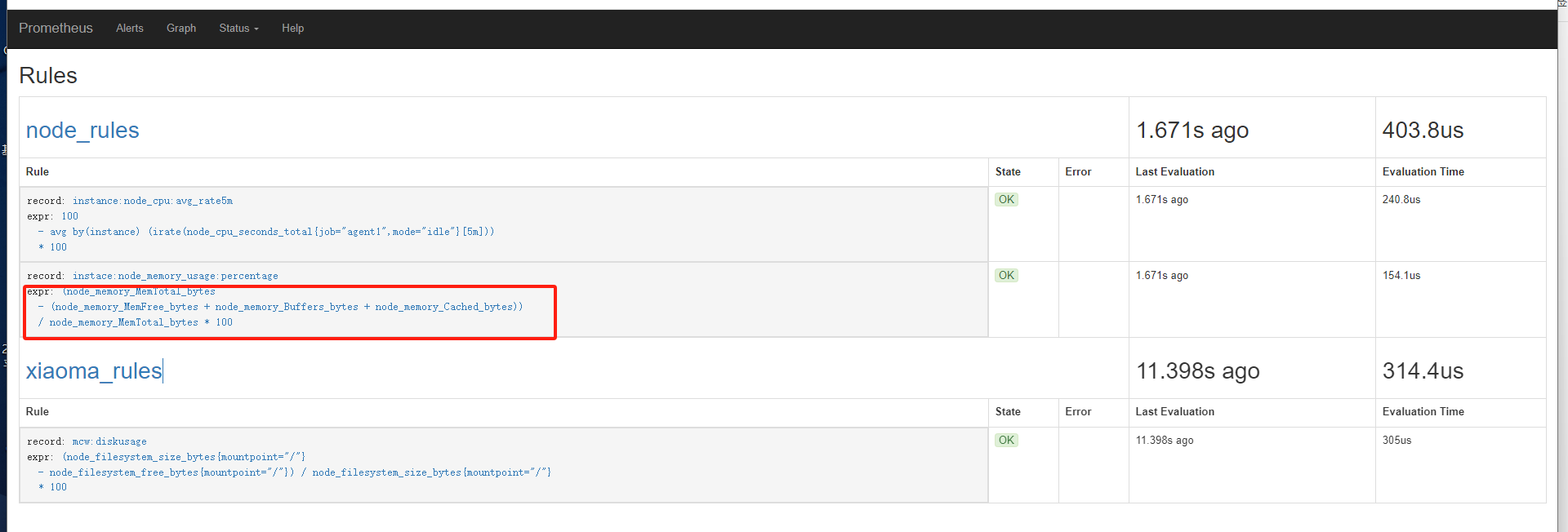

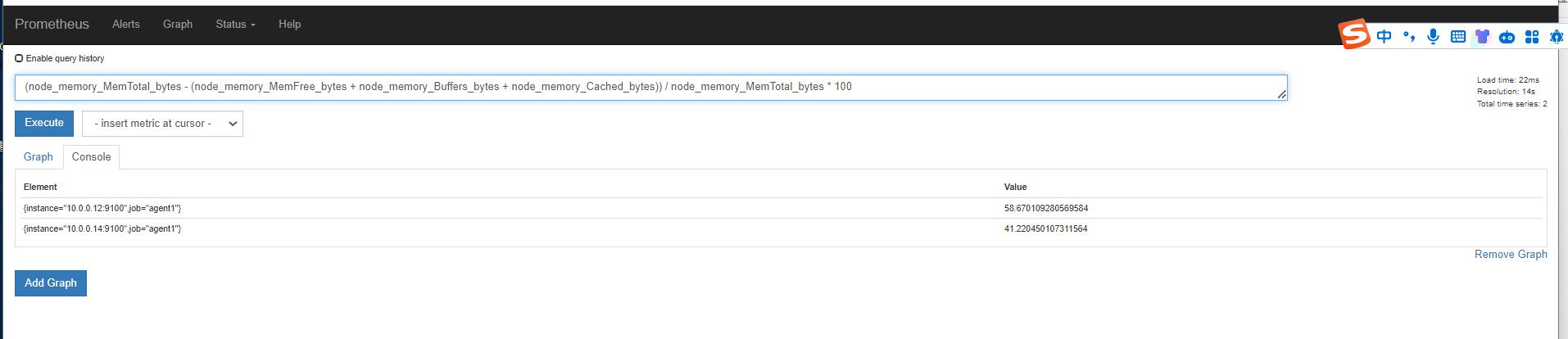

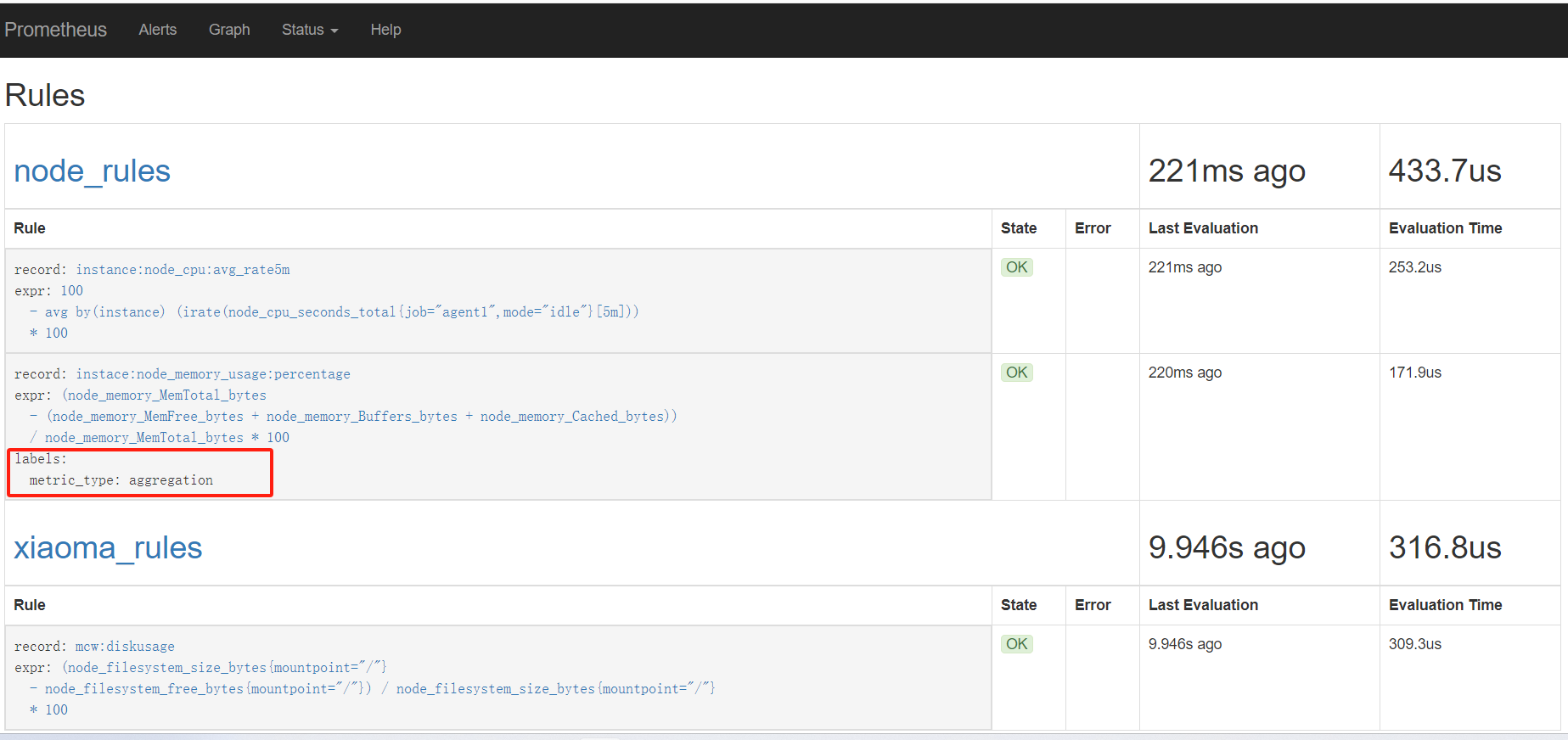

第一个指标 ,表达式浏览器

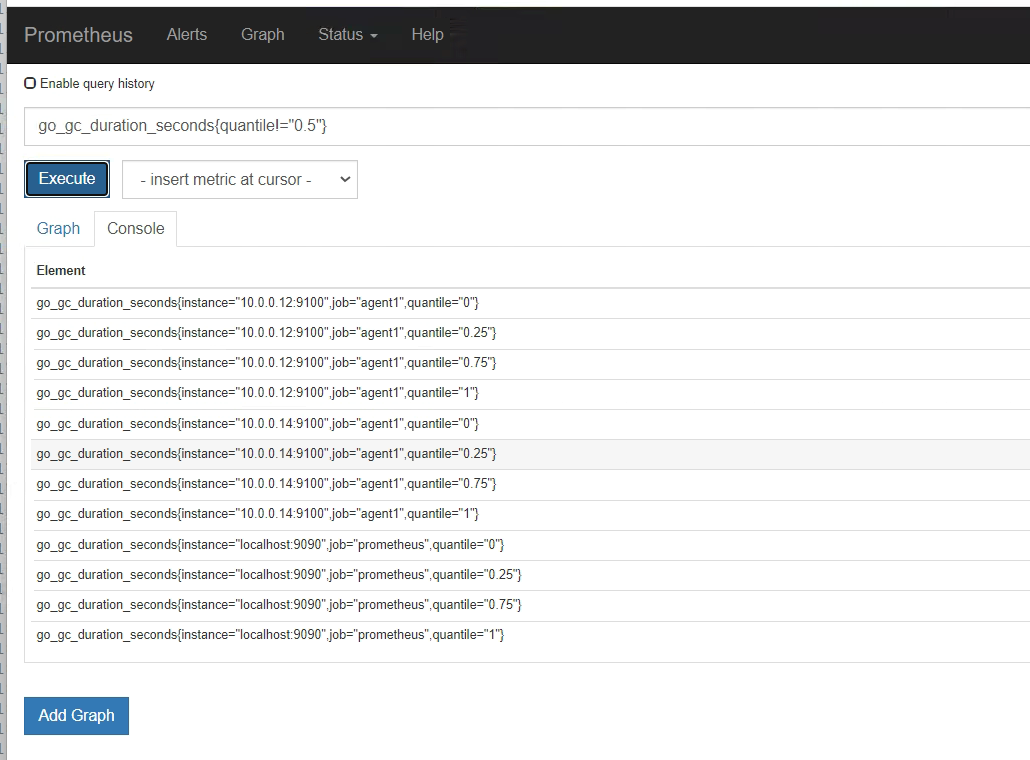

不等于,取反

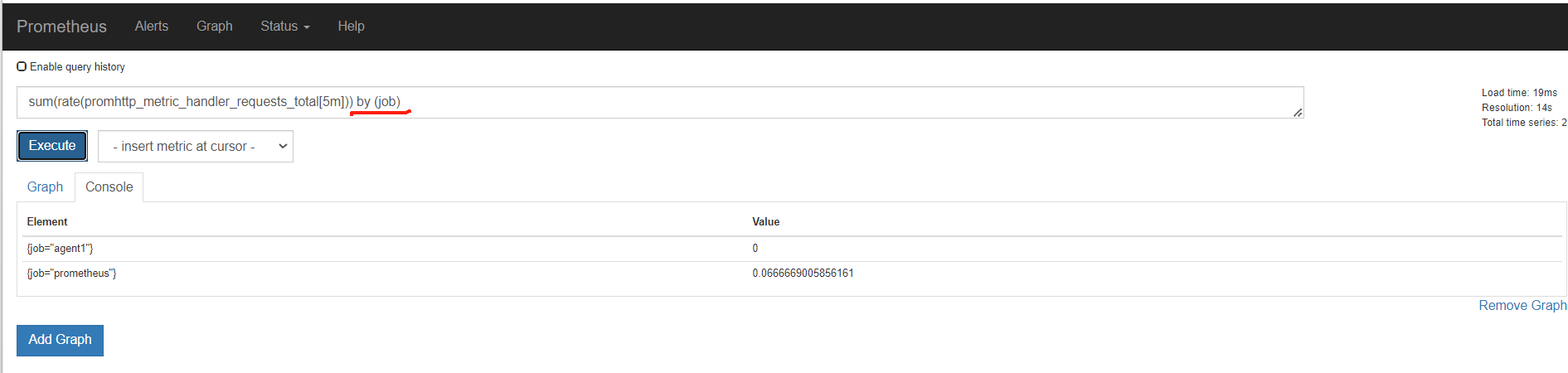

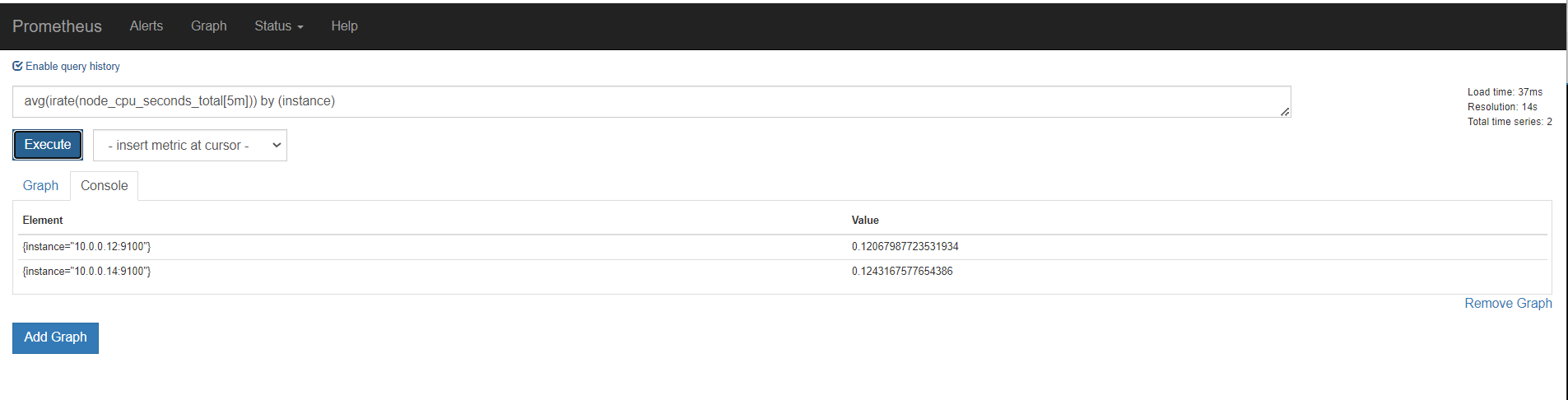

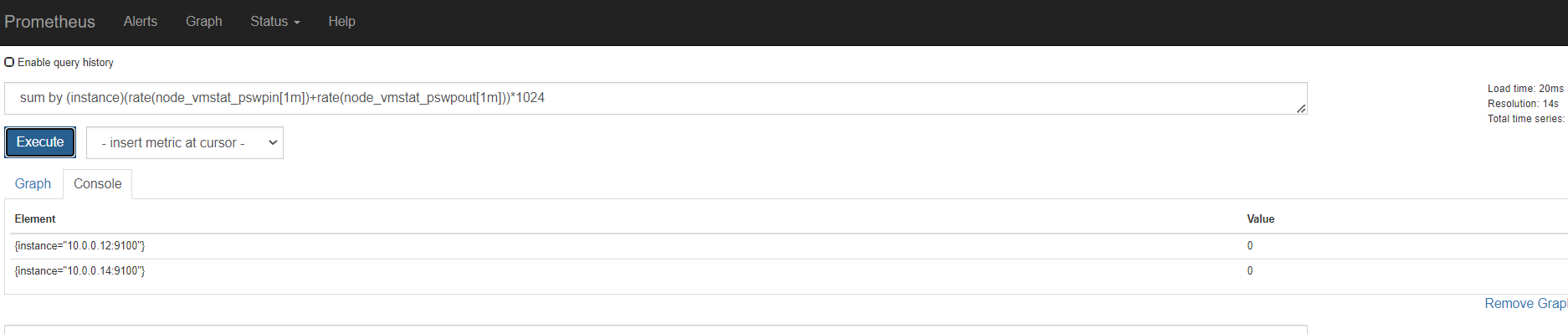

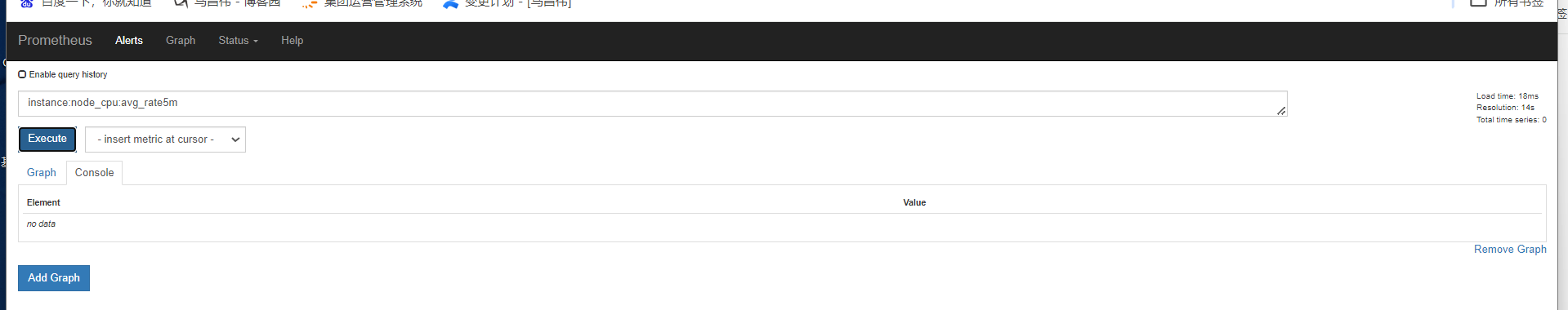

聚合时间序列 sum rate

sum

服务端撞去数据产生的http请求总数

sum,对这些结果累加,值是随时变化的,因为请求次数很快就多出来一个

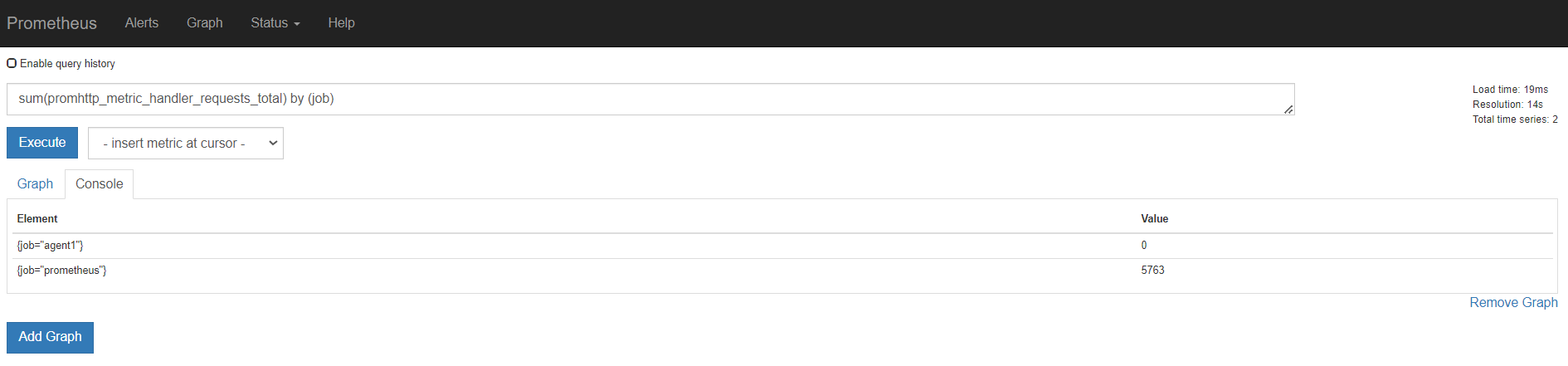

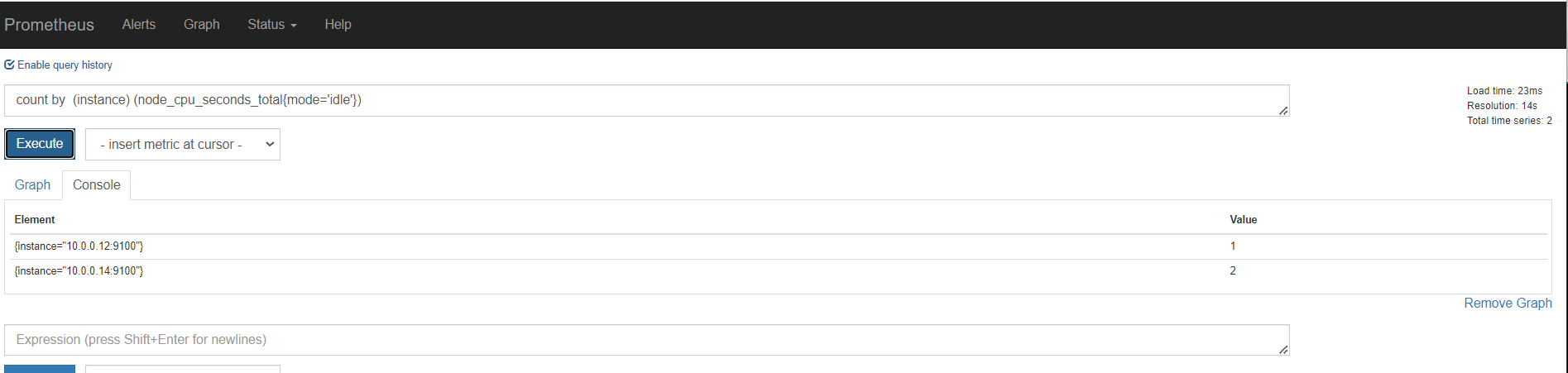

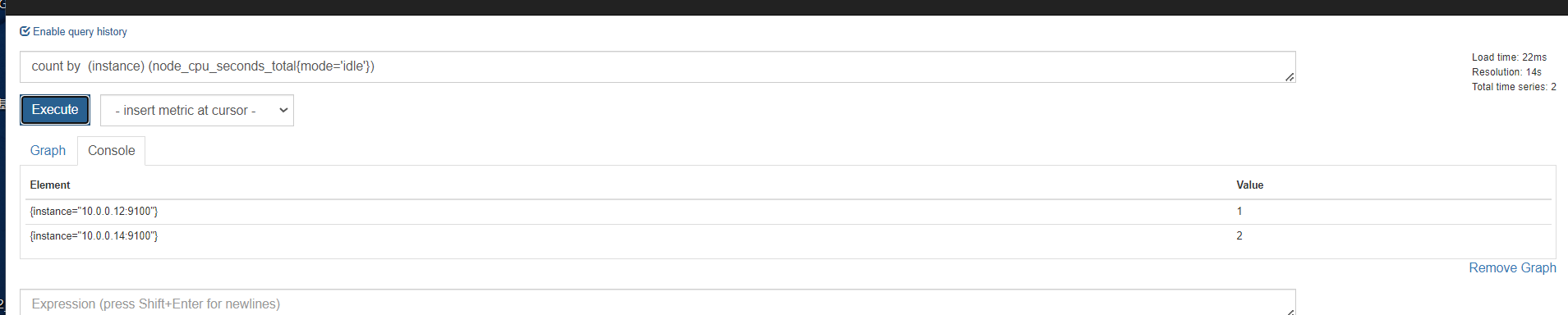

sum()是统计所有,是这个指标求和。 by ()就是根据那个进行分组求和 。也就是分组聚合 sum(promhttp_metric_handler_requests_total) by (job)

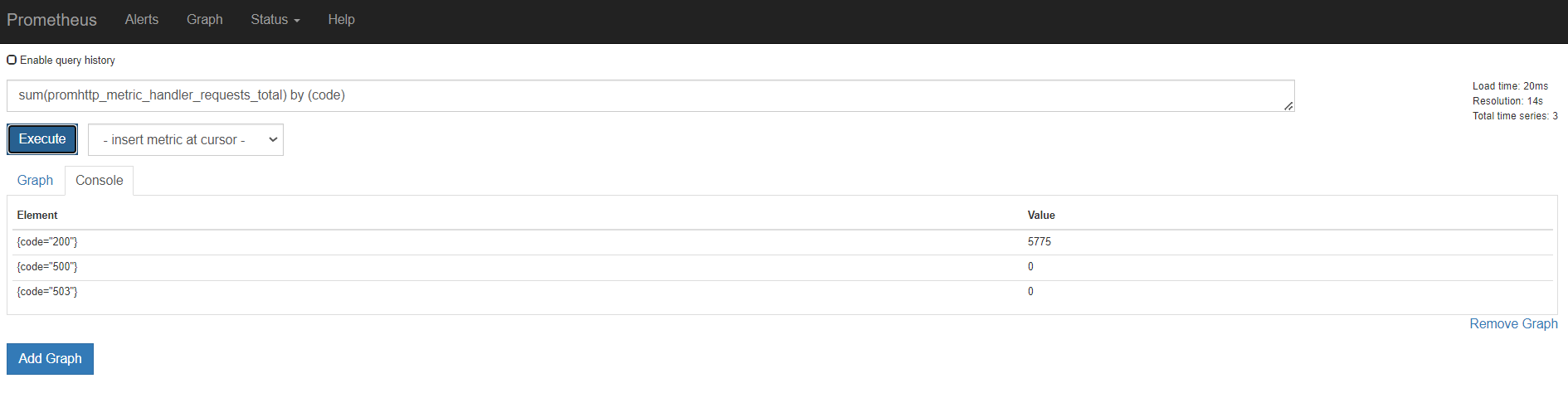

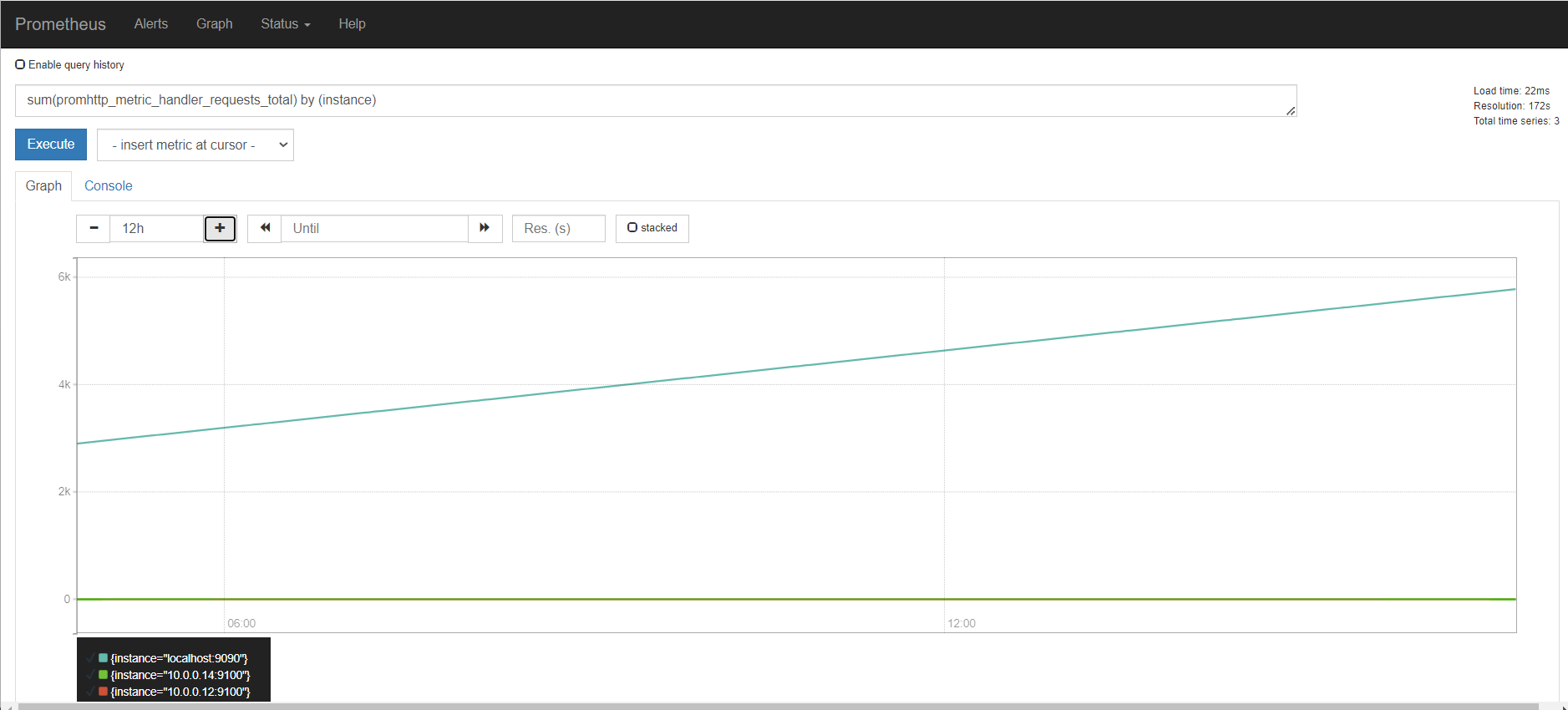

我们看上面那个指标,我们可以知道,还可以根据code和instance分组聚合。分组统计http请求个数

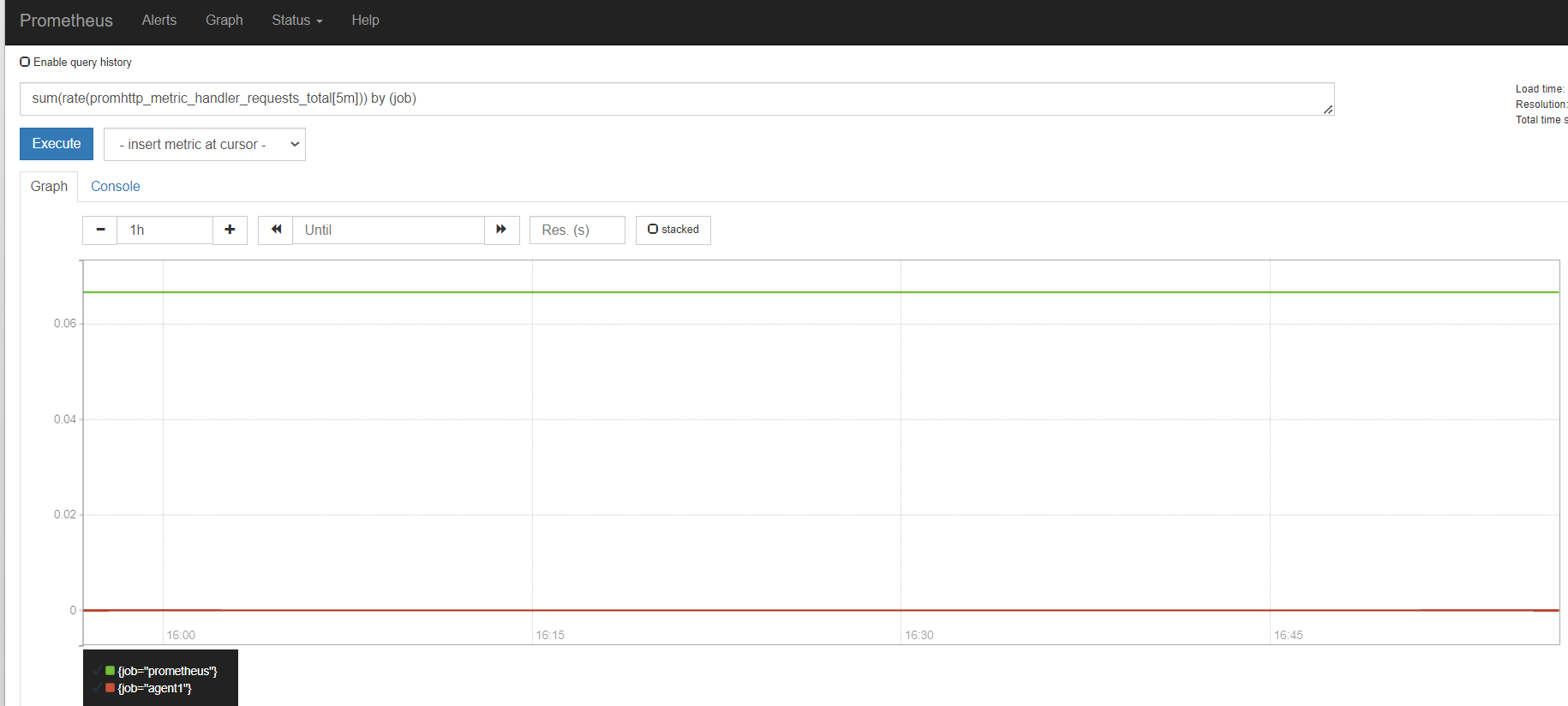

分组统计个数之后,还能看到图形

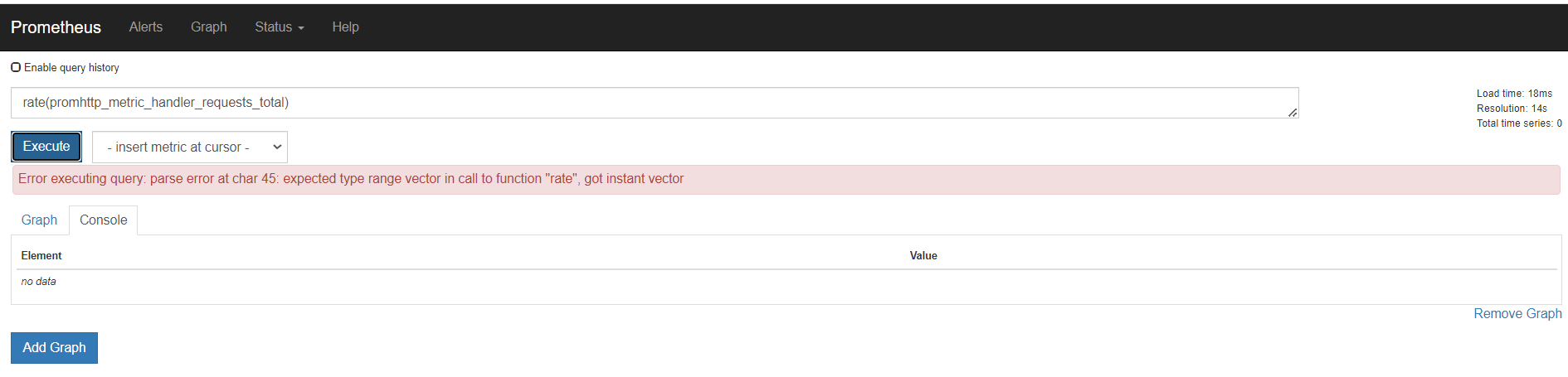

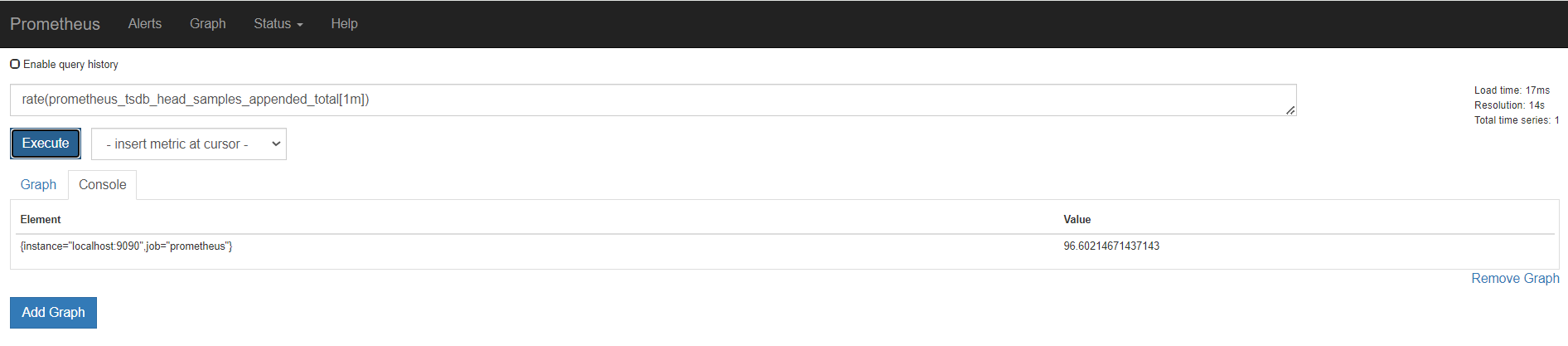

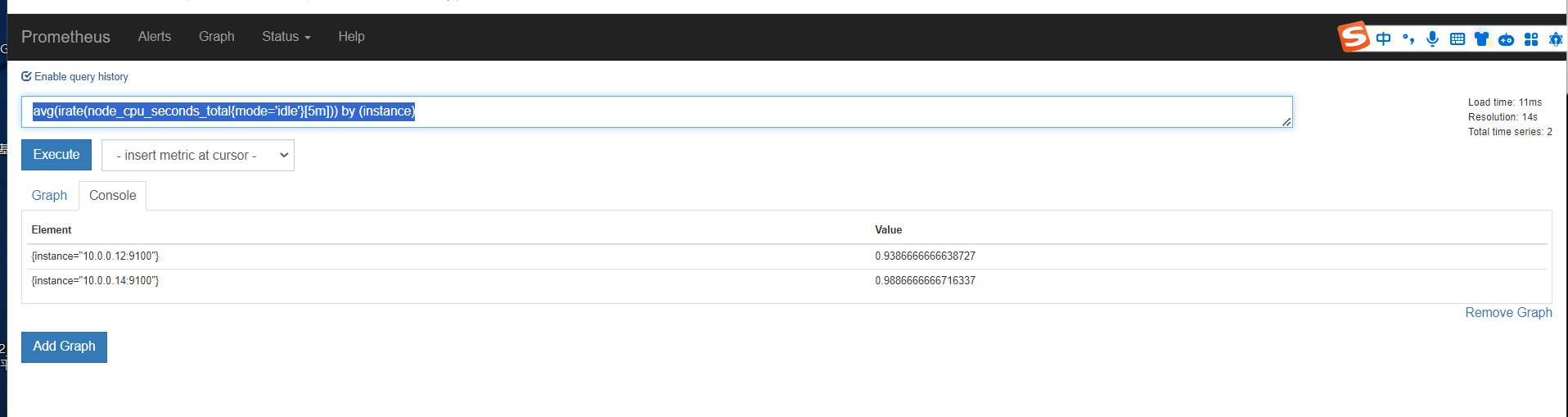

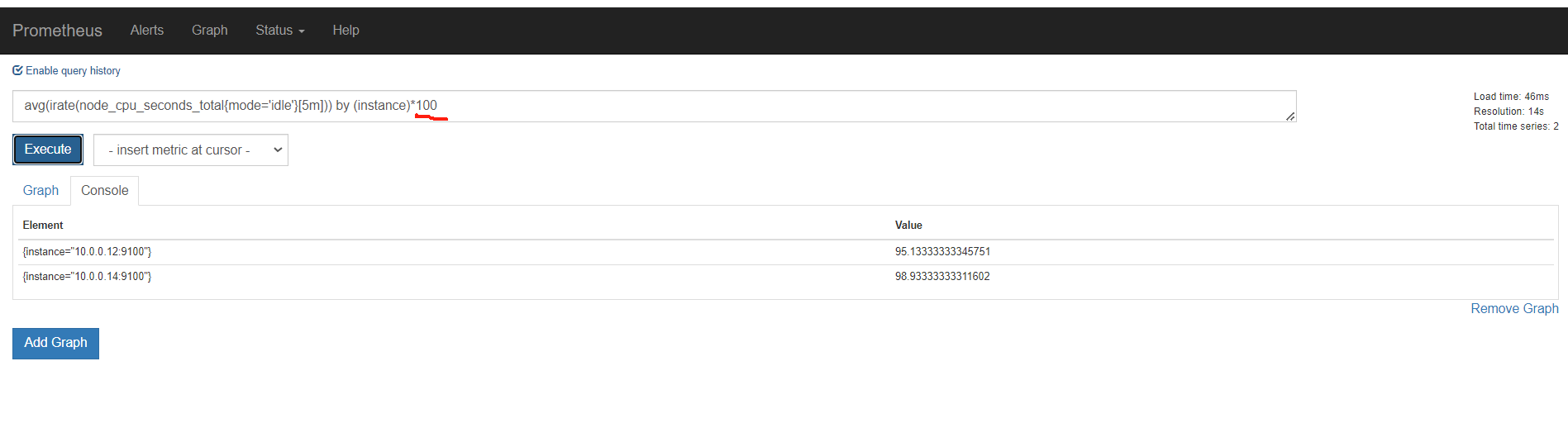

rate

s m h d 天 w 周

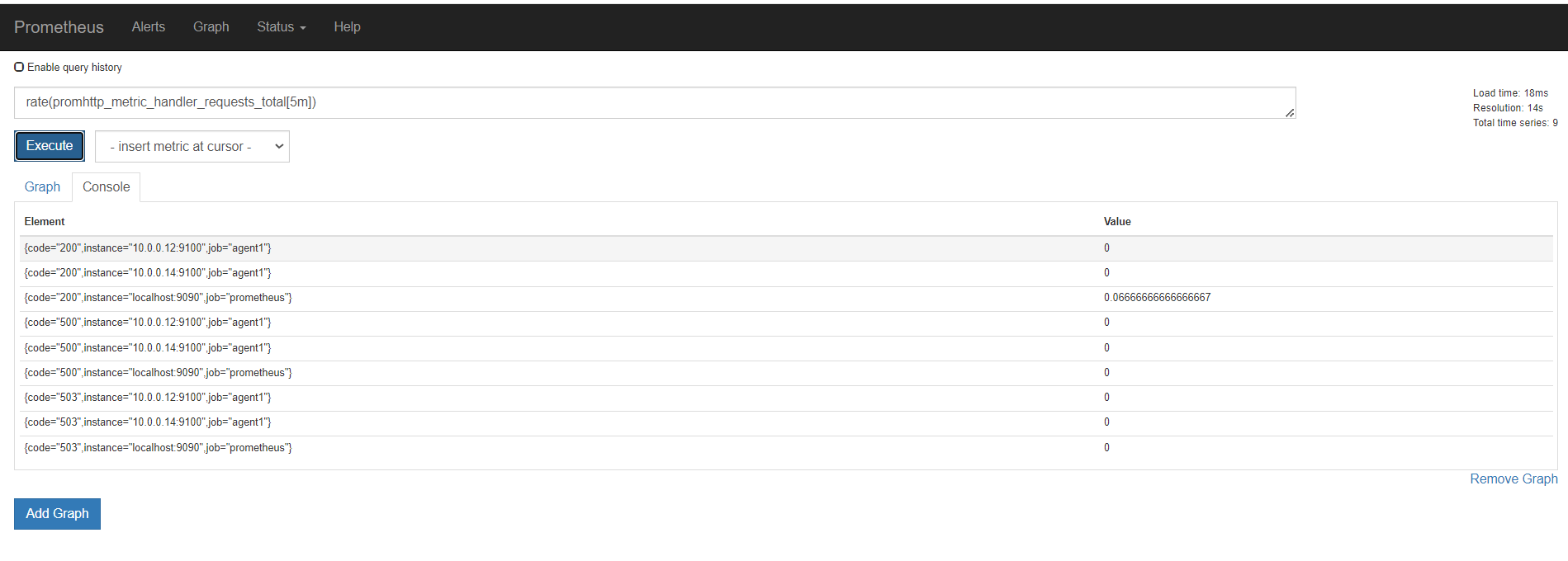

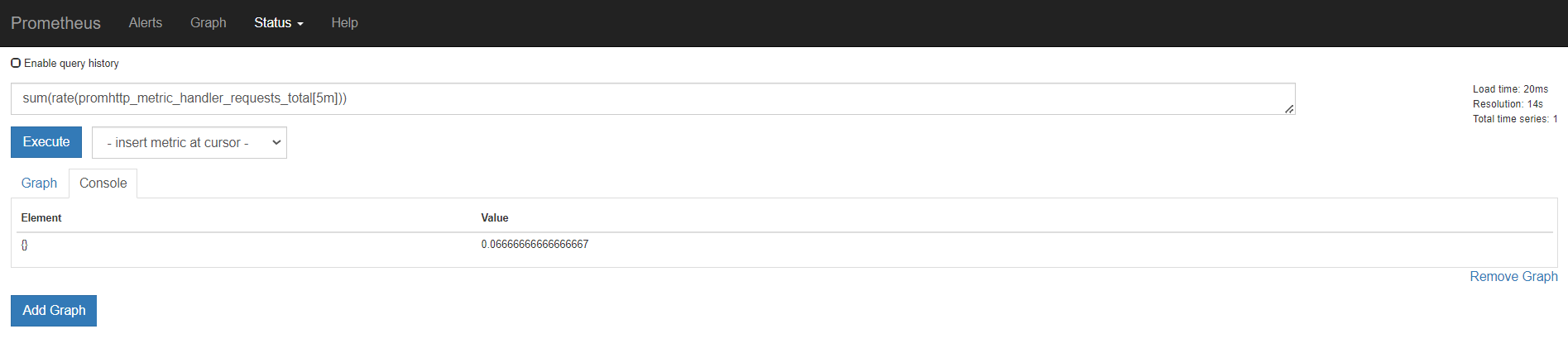

sum(rate(promhttp_metric_handler_requests_total[5m])) by (job)

原始这样的

需要指定时间

这里指定5分钟rate用来计算一定范围内时间序列的每秒平均增长率。只能遇见计数器一起使用

上面是所有元素各自的结果,加上sum(),就是上面所有的统计求和,这里结果是一样的

再用by分组聚合

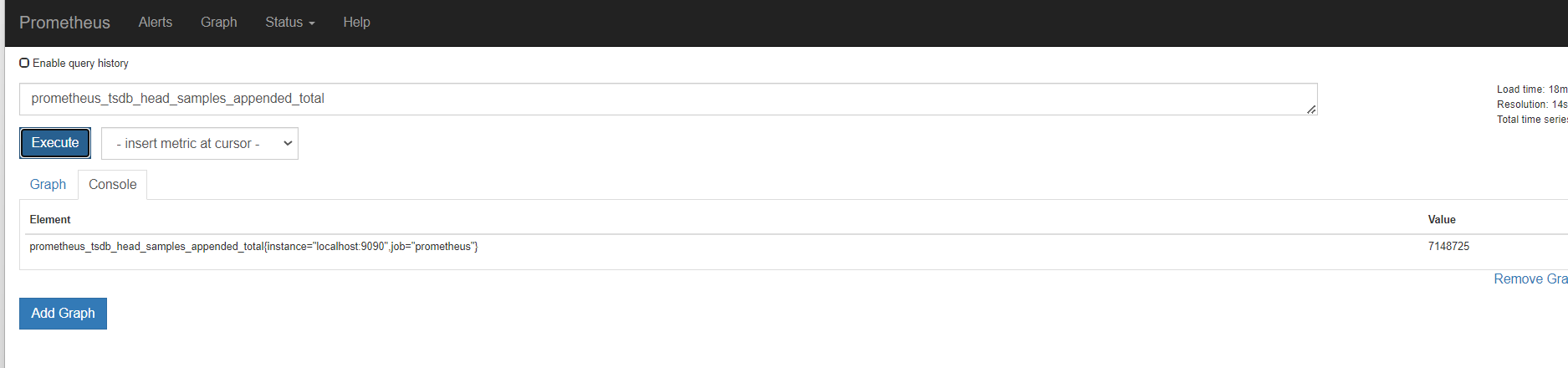

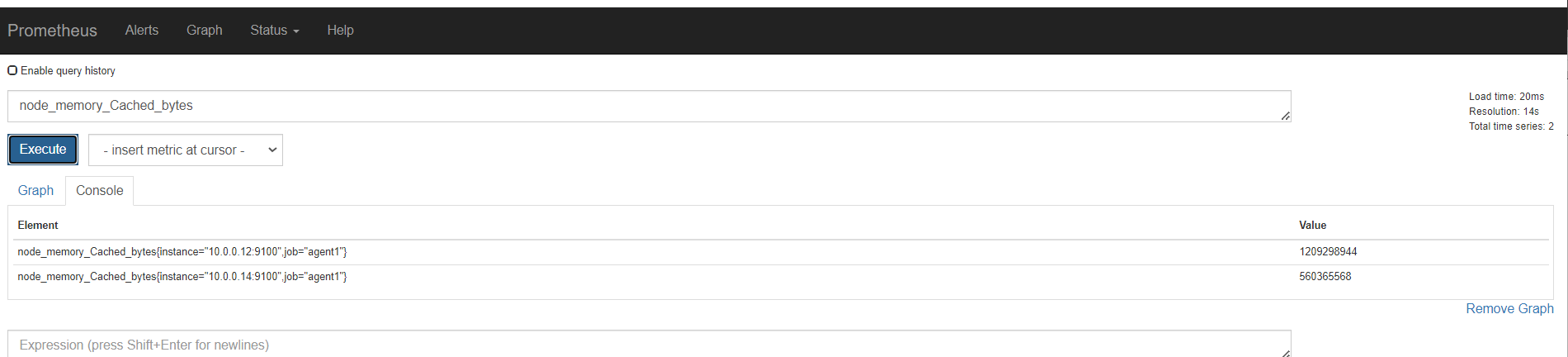

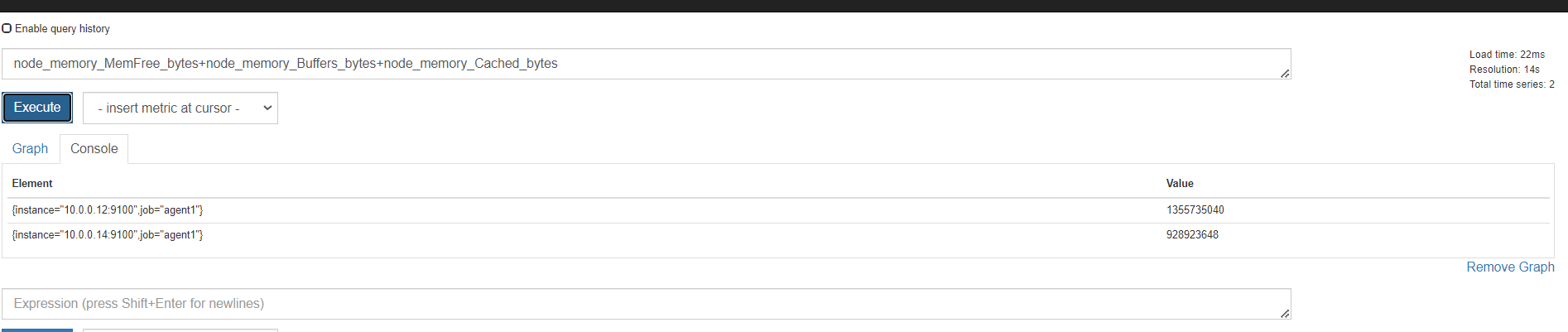

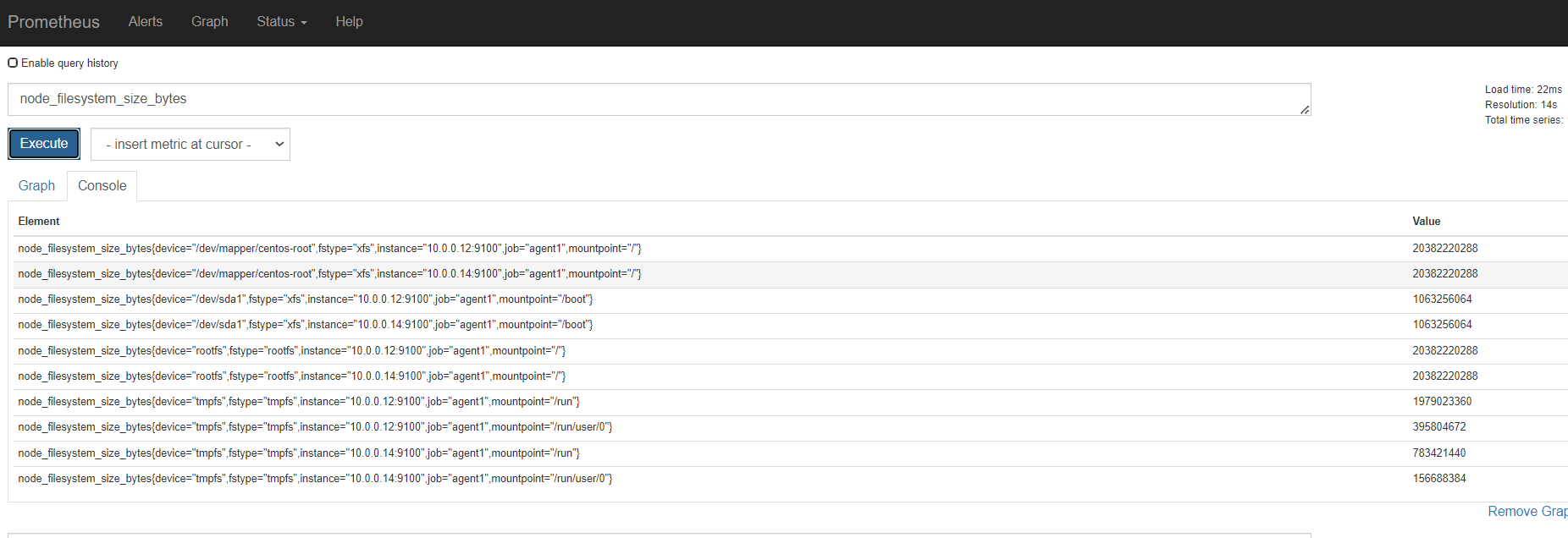

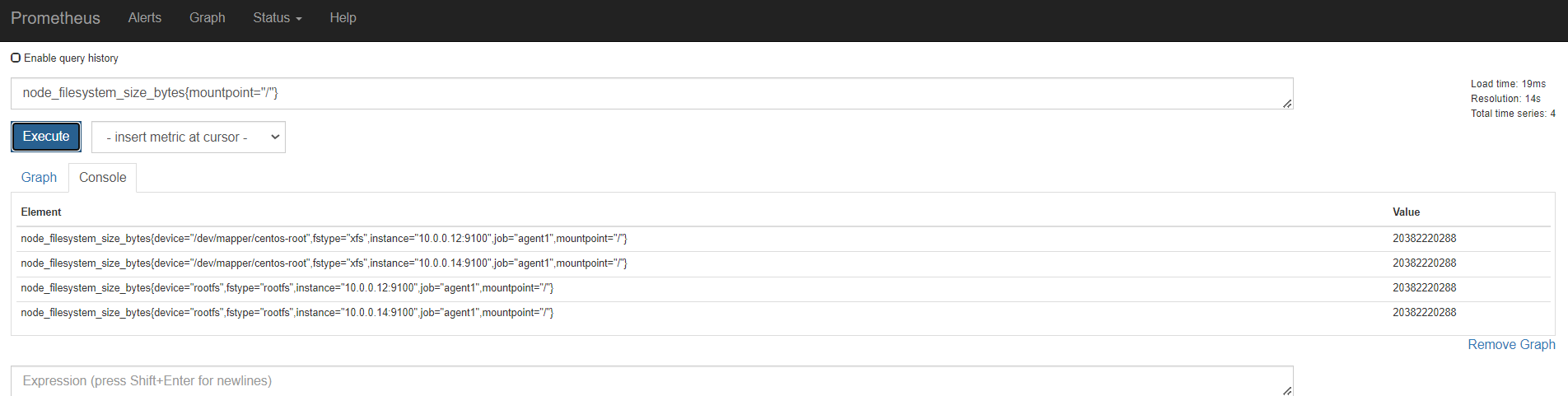

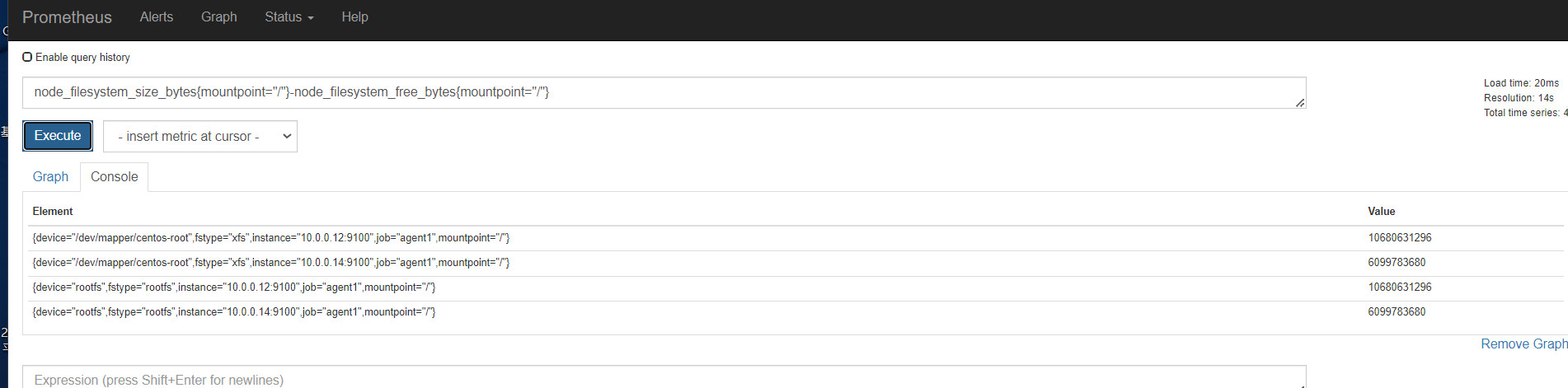

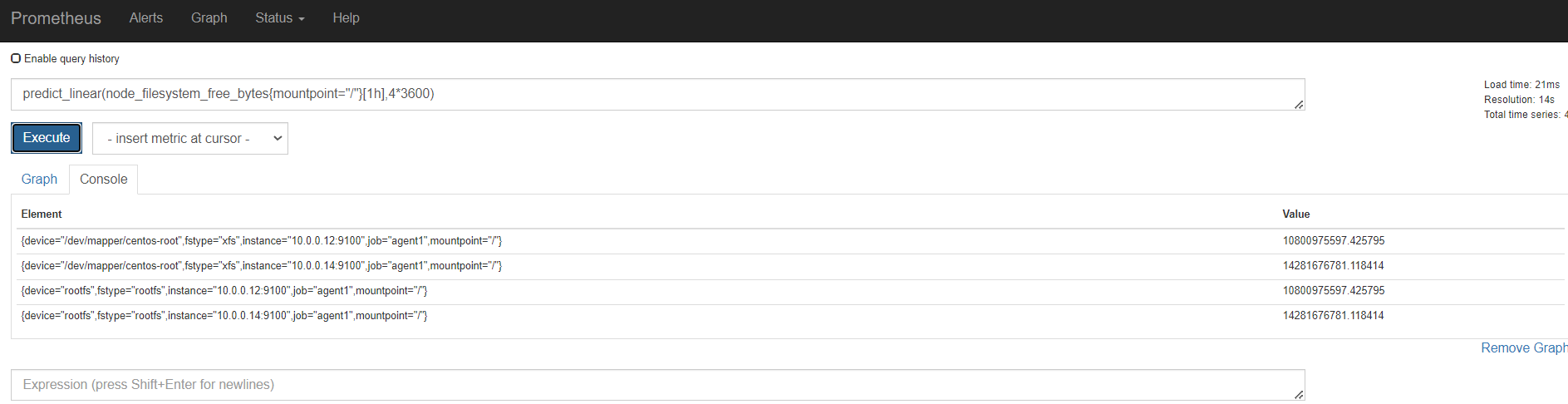

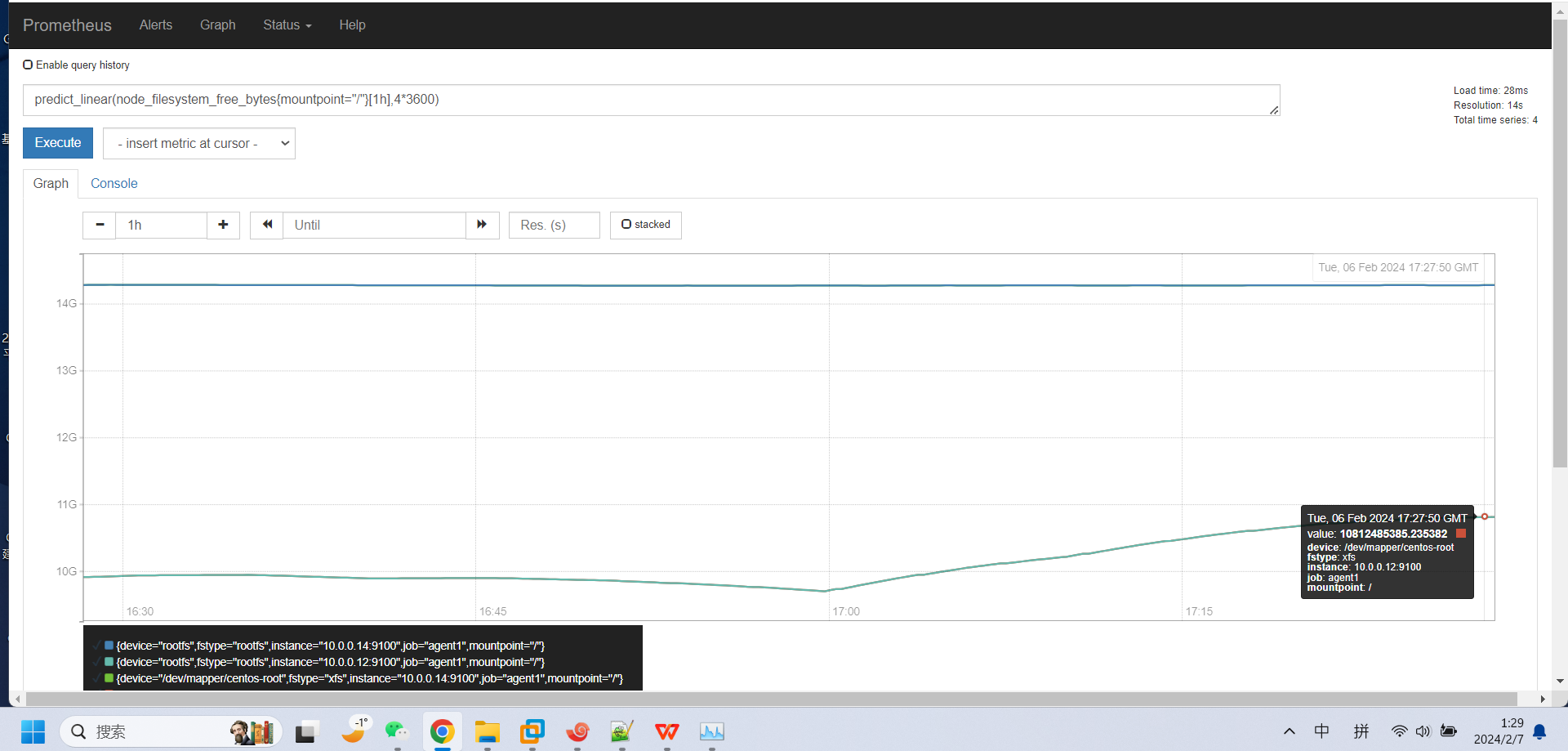

容量规划

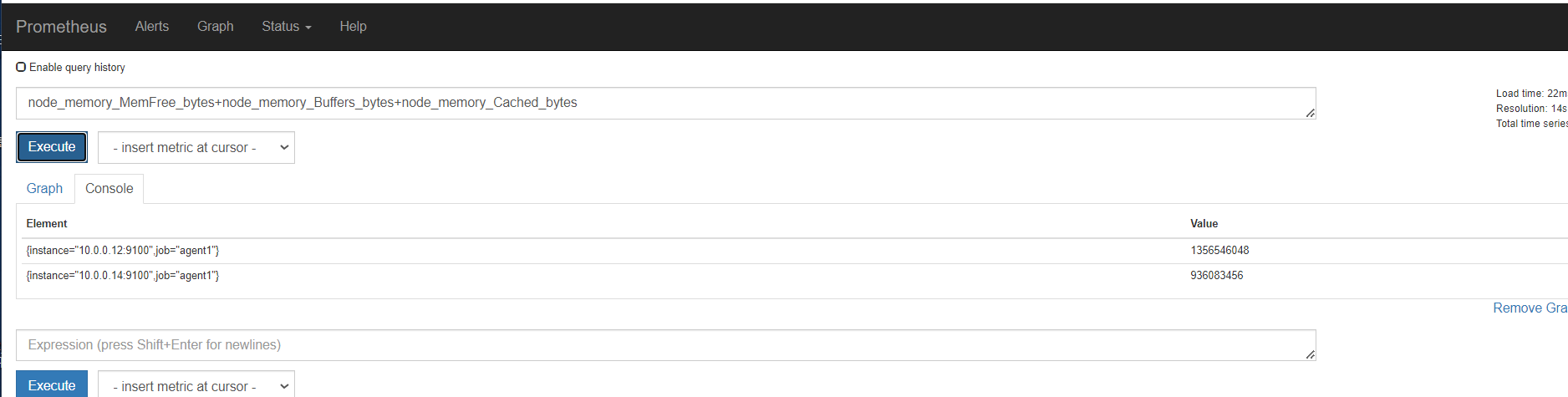

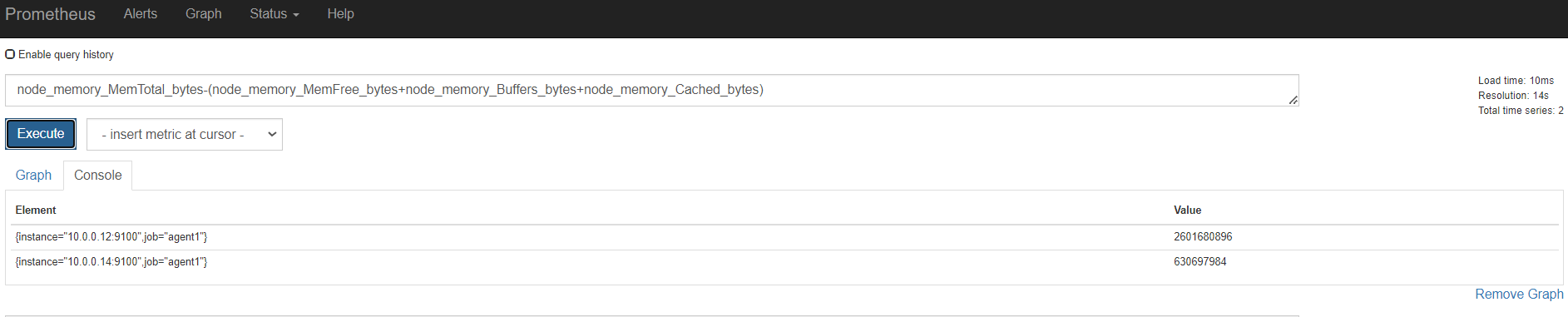

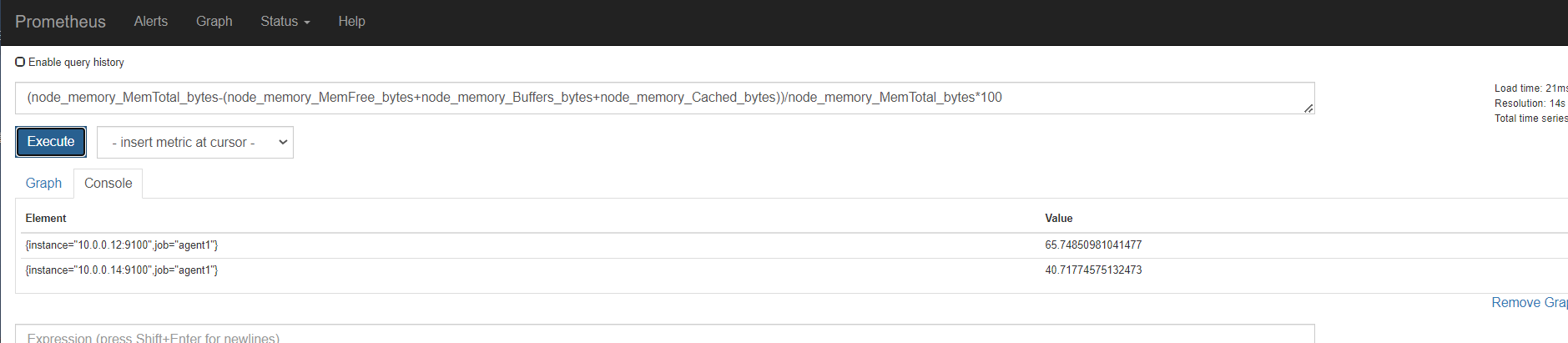

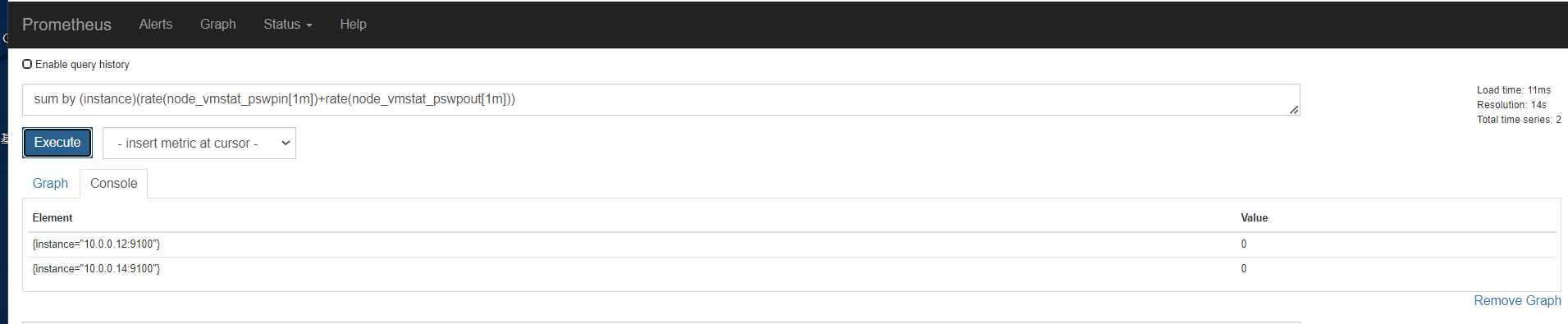

内存

磁盘

监控节点

安装node exporter

wget https://github.com/prometheus/node_exporter/releases/download/v0.16.0/node_exporter-0.16.0.linux-amd64.tar.gz

tar xf node_exporter-*

sudo cp node_exporter-*/node_exporter /usr/local/bin/

查看版本

[root@mcw02 ~]# /usr/local/node_exporter/node_exporter --version

node_exporter, version 0.16.0 (branch: HEAD, revision: d42bd70f4363dced6b77d8fc311ea57b63387e4f)

build user: root@a67a9bc13a69

build date: 20180515-15:52:42

go version: go1.9.6

[root@mcw02 ~]#

配置node exporter

[root@mcw02 ~]# /usr/local/node_exporter/node_exporter --help

usage: node_exporter [<flags>] Flags:

-h, --help Show context-sensitive help (also try --help-long and --help-man).

--collector.diskstats.ignored-devices="^(ram|loop|fd|(h|s|v|xv)d[a-z]|nvme\\d+n\\d+p)\\d+$"

Regexp of devices to ignore for diskstats.

--collector.filesystem.ignored-mount-points="^/(dev|proc|sys|var/lib/docker)($|/)"

Regexp of mount points to ignore for filesystem collector.

--collector.filesystem.ignored-fs-types="^(autofs|binfmt_misc|cgroup|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|mqueue|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|sysfs|tracefs)$"

Regexp of filesystem types to ignore for filesystem collector.

--collector.netdev.ignored-devices="^$"

Regexp of net devices to ignore for netdev collector.

--collector.netstat.fields="^(.*_(InErrors|InErrs)|Ip_Forwarding|Ip(6|Ext)_(InOctets|OutOctets)|Icmp6?_(InMsgs|OutMsgs)|TcpExt_(Listen.*|Syncookies.*)|Tcp_(ActiveOpens|PassiveOpens|RetransSegs|CurrEstab)|Udp6?_(InDatagrams|OutDatagrams|NoPorts))$"

Regexp of fields to return for netstat collector.

--collector.ntp.server="127.0.0.1"

NTP server to use for ntp collector

--collector.ntp.protocol-version=4

NTP protocol version

--collector.ntp.server-is-local

Certify that collector.ntp.server address is the same local host as this collector.

--collector.ntp.ip-ttl=1 IP TTL to use while sending NTP query

--collector.ntp.max-distance=3.46608s

Max accumulated distance to the root

--collector.ntp.local-offset-tolerance=1ms

Offset between local clock and local ntpd time to tolerate

--path.procfs="/proc" procfs mountpoint.

--path.sysfs="/sys" sysfs mountpoint.

--collector.qdisc.fixtures=""

test fixtures to use for qdisc collector end-to-end testing

--collector.runit.servicedir="/etc/service"

Path to runit service directory.

--collector.supervisord.url="http://localhost:9001/RPC2"

XML RPC endpoint.

--collector.systemd.unit-whitelist=".+"

Regexp of systemd units to whitelist. Units must both match whitelist and not match blacklist to be included.

--collector.systemd.unit-blacklist=".+\\.scope"

Regexp of systemd units to blacklist. Units must both match whitelist and not match blacklist to be included.

--collector.systemd.private

Establish a private, direct connection to systemd without dbus.

--collector.textfile.directory=""

Directory to read text files with metrics from.

--collector.vmstat.fields="^(oom_kill|pgpg|pswp|pg.*fault).*"

Regexp of fields to return for vmstat collector.

--collector.wifi.fixtures=""

test fixtures to use for wifi collector metrics

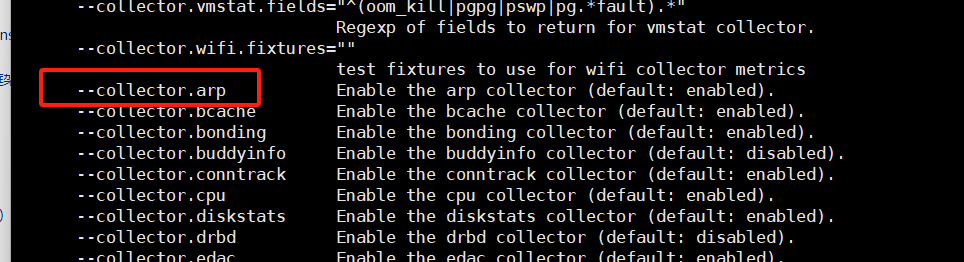

--collector.arp Enable the arp collector (default: enabled).

--collector.bcache Enable the bcache collector (default: enabled).

--collector.bonding Enable the bonding collector (default: enabled).

--collector.buddyinfo Enable the buddyinfo collector (default: disabled).

--collector.conntrack Enable the conntrack collector (default: enabled).

--collector.cpu Enable the cpu collector (default: enabled).

--collector.diskstats Enable the diskstats collector (default: enabled).

--collector.drbd Enable the drbd collector (default: disabled).

--collector.edac Enable the edac collector (default: enabled).

--collector.entropy Enable the entropy collector (default: enabled).

--collector.filefd Enable the filefd collector (default: enabled).

--collector.filesystem Enable the filesystem collector (default: enabled).

--collector.hwmon Enable the hwmon collector (default: enabled).

--collector.infiniband Enable the infiniband collector (default: enabled).

--collector.interrupts Enable the interrupts collector (default: disabled).

--collector.ipvs Enable the ipvs collector (default: enabled).

--collector.ksmd Enable the ksmd collector (default: disabled).

--collector.loadavg Enable the loadavg collector (default: enabled).

--collector.logind Enable the logind collector (default: disabled).

--collector.mdadm Enable the mdadm collector (default: enabled).

--collector.meminfo Enable the meminfo collector (default: enabled).

--collector.meminfo_numa Enable the meminfo_numa collector (default: disabled).

--collector.mountstats Enable the mountstats collector (default: disabled).

--collector.netdev Enable the netdev collector (default: enabled).

--collector.netstat Enable the netstat collector (default: enabled).

--collector.nfs Enable the nfs collector (default: enabled).

--collector.nfsd Enable the nfsd collector (default: enabled).

--collector.ntp Enable the ntp collector (default: disabled).

--collector.qdisc Enable the qdisc collector (default: disabled).

--collector.runit Enable the runit collector (default: disabled).

--collector.sockstat Enable the sockstat collector (default: enabled).

--collector.stat Enable the stat collector (default: enabled).

--collector.supervisord Enable the supervisord collector (default: disabled).

--collector.systemd Enable the systemd collector (default: disabled).

--collector.tcpstat Enable the tcpstat collector (default: disabled).

--collector.textfile Enable the textfile collector (default: enabled).

--collector.time Enable the time collector (default: enabled).

--collector.uname Enable the uname collector (default: enabled).

--collector.vmstat Enable the vmstat collector (default: enabled).

--collector.wifi Enable the wifi collector (default: enabled).

--collector.xfs Enable the xfs collector (default: enabled).

--collector.zfs Enable the zfs collector (default: enabled).

--collector.timex Enable the timex collector (default: enabled).

--web.listen-address=":9100"

Address on which to expose metrics and web interface.

--web.telemetry-path="/metrics"

Path under which to expose metrics.

--log.level="info" Only log messages with the given severity or above. Valid levels: [debug, info, warn, error, fatal]

--log.format="logger:stderr"

Set the log target and format. Example: "logger:syslog?appname=bob&local=7" or "logger:stdout?json=true"

--version Show application version. [root@mcw02 ~]#

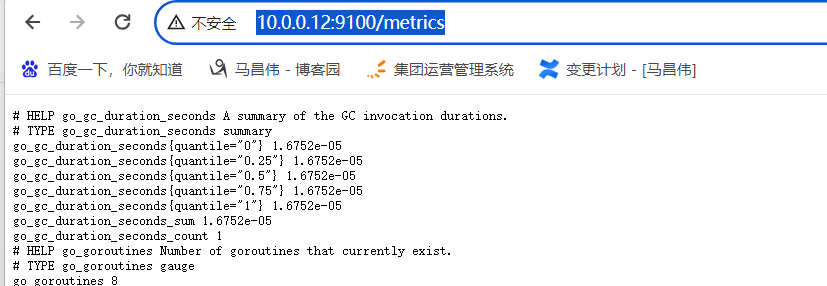

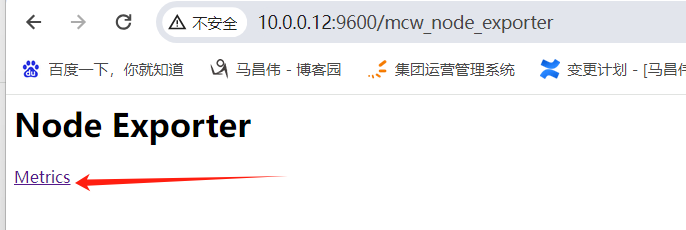

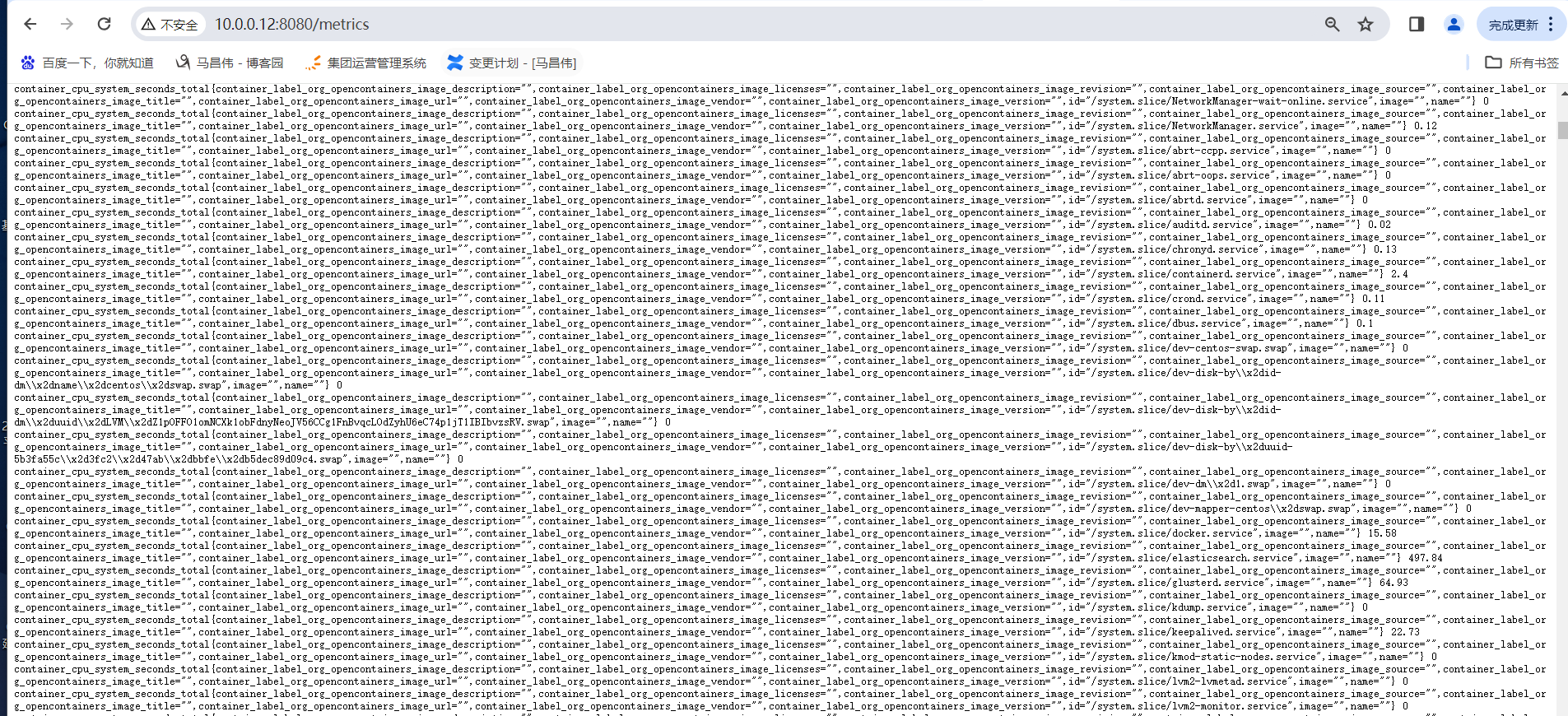

下面这样启动的,默认访问地址 http://10.0.0.12:9100/metrics

[root@mcw02 ~]# nohup /usr/local/node_exporter/node_exporter &

[1] 43955

[root@mcw02 ~]#

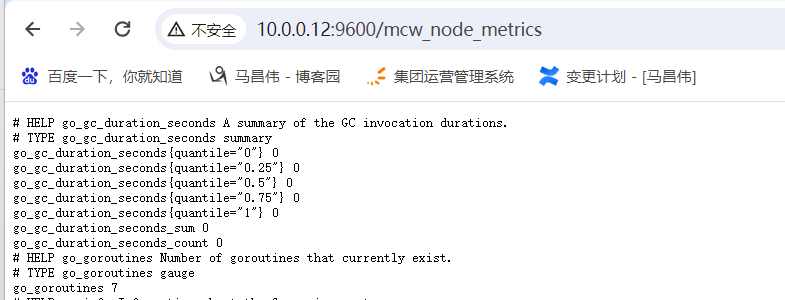

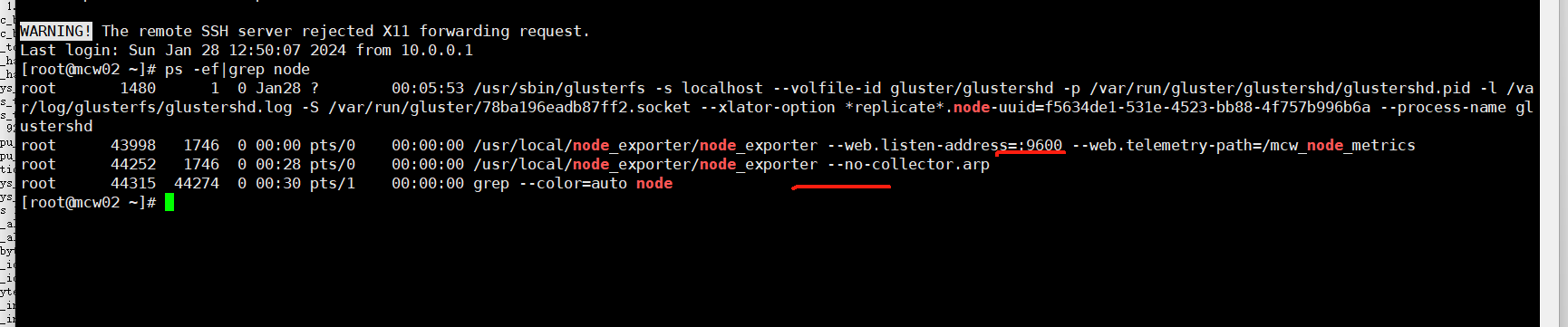

如下添加参数访问,指定端口和访问路径

[root@mcw02 ~]# nohup /usr/local/node_exporter/node_exporter --web.listen-address=":9600" --web.telemetry-path="/mcw_node_metrics" &

[1] 43998

[root@mcw02 ~]#

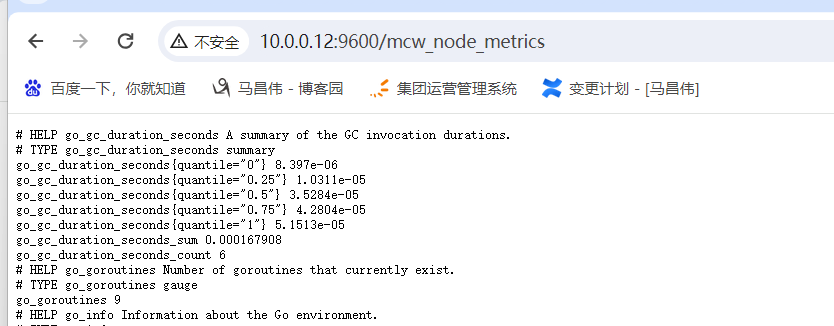

可以看到,正常访问到数据 http://10.0.0.12:9600/mcw_node_metrics

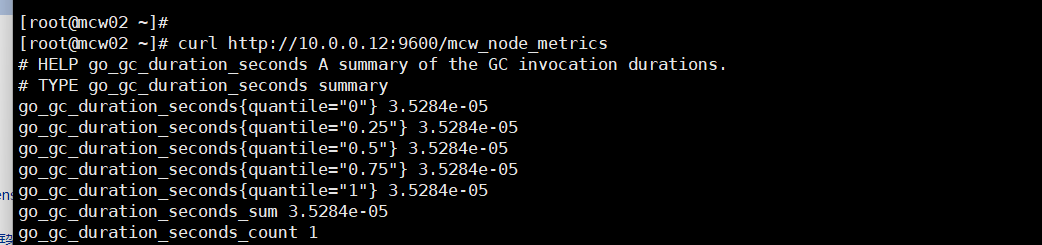

curl请求

报错了,是因为我们已经改了端口了

服务端修改配置端口,重载配置

[root@mcw03 prometheus]# vim /etc/prometheus.yml

[root@mcw03 prometheus]# grep -A 5 agent /etc/prometheus.yml

- job_name: 'agent1'

static_configs:

- targets: ['10.0.0.14:9100']

- targets: ['10.0.0.12:9600']

- job_name: 'promserver'

static_configs:

[root@mcw03 prometheus]# curl -X POST http://localhost:9090/-/reload

[root@mcw03 prometheus]#

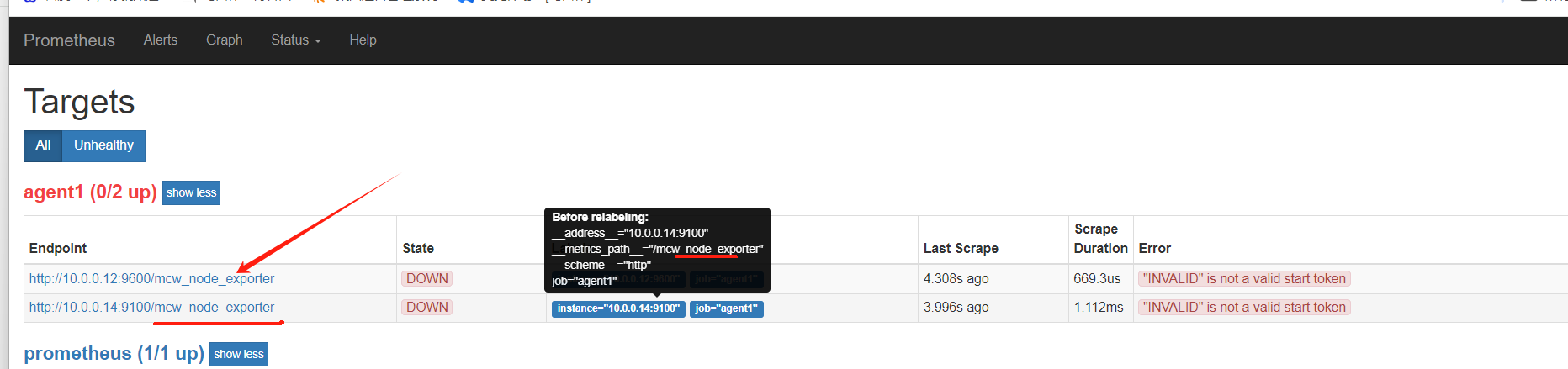

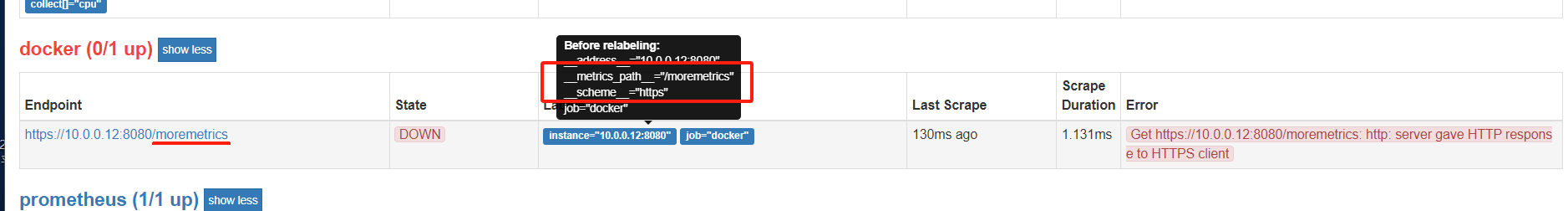

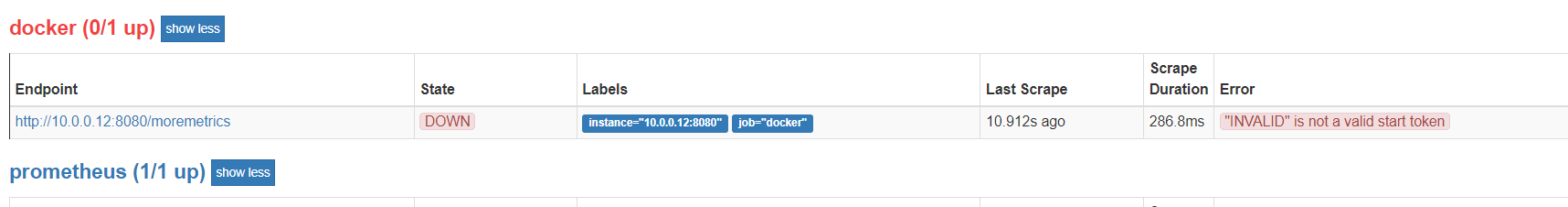

"INVALID" is not a valid start token

端口改了,但是访问路径没有改,还是报错

一修改,改的一组的,访问路径。点击访问12节点的

可以访问到数据

数据粗来了

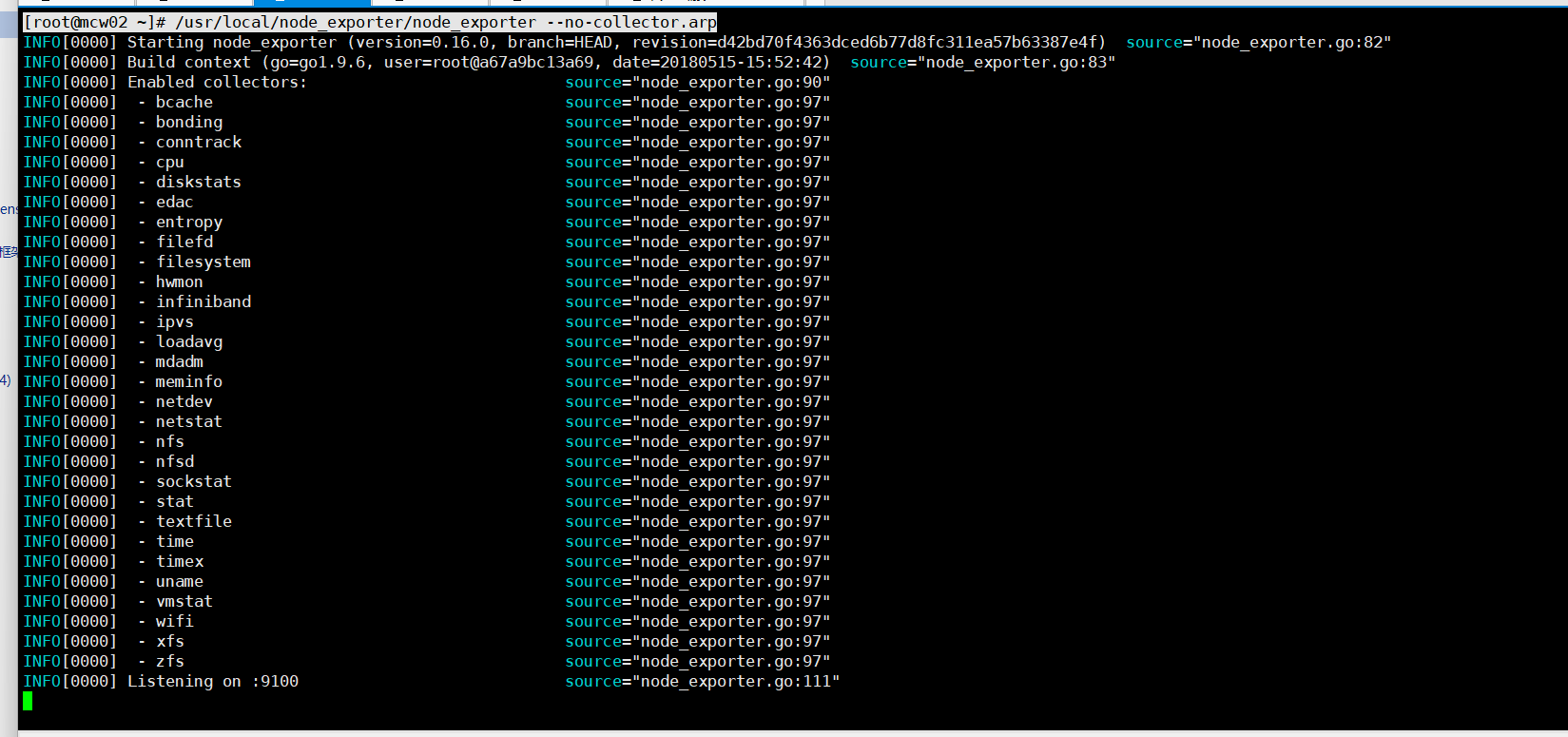

默认是开启的收集器,想要关闭,前面加no-

关闭arp执行

[root@mcw02 ~]# /usr/local/node_exporter/node_exporter --no-collector.arp

这因为端口 不同,直接一个机器上起了两个客户端,并且可以 访问

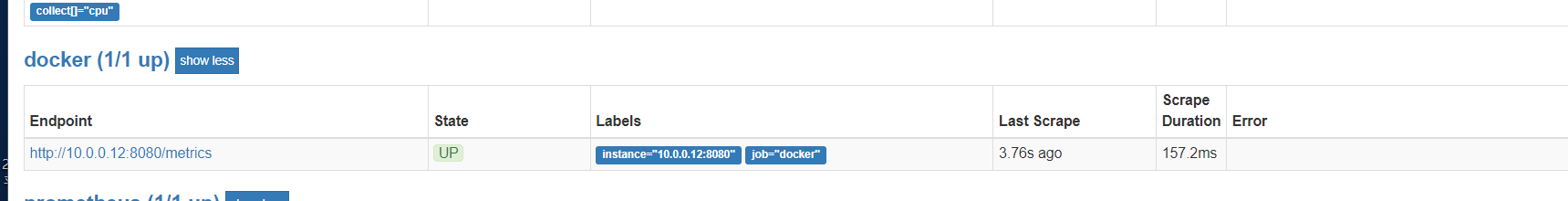

把path去掉之后,使用默认的路径,都up了,并且12节点上起了两个客户端

9600端口,如果不改路径,那么也可up

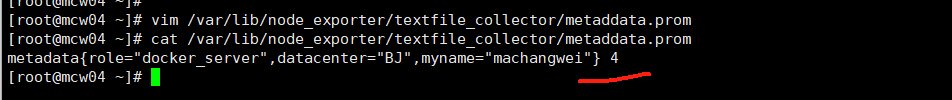

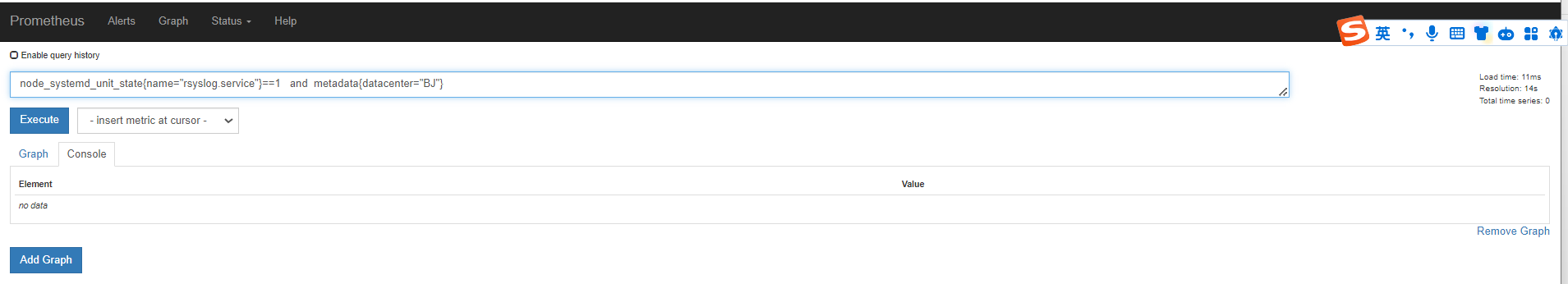

配置textfile收集器

创建目录保存指标定义文件

[root@mcw02 ~]# mkdir -p /var/lib/node_exporter/textfile_collector

[root@mcw02 ~]#

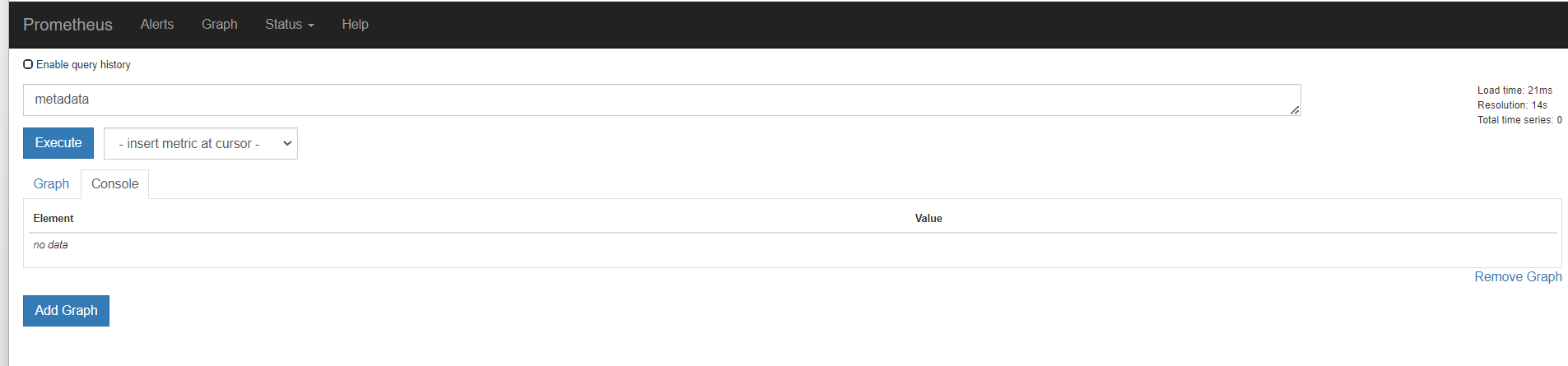

没有这个指标的

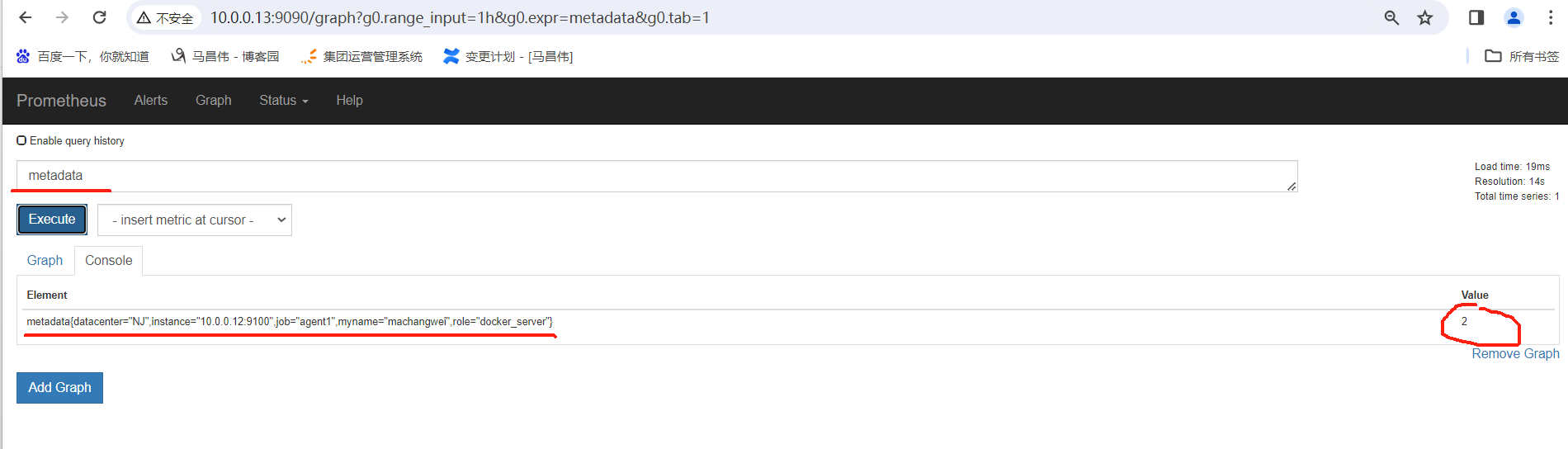

创建目录之后,添加元数据指标。以.prom结尾,花括号里放标签,前面是指标,后面是指标值。指标名称是metadata

[root@mcw02 ~]# mkdir -p /var/lib/node_exporter/textfile_collector

[root@mcw02 ~]# echo 'metadata{role="docker_server",datacenter="NJ",myname="machangwei"}' 2|sudo tee /var/lib/node_exporter/textfile_collector/metaddata.prom

metadata{role="docker_server",datacenter="NJ",myname="machangwei"} 2

[root@mcw02 ~]# cat /var/lib/node_exporter/textfile_collector/metaddata.prom

metadata{role="docker_server",datacenter="NJ",myname="machangwei"} 2

[root@mcw02 ~]#

启动客户端需要指定收集器目录,默认收集器已经开启了

[root@mcw02 ~]# nohup /usr/local/node_exporter/node_exporter --collector.textfile.directory="/var/lib/node_exporter/textfile_collector/" &

[1] 44569

[root@mcw02 ~]#

客户端已经好了

然后再次搜索,可以看到这个指标,只要有程序实时覆盖该文件中的值,那么就是实时监控数据

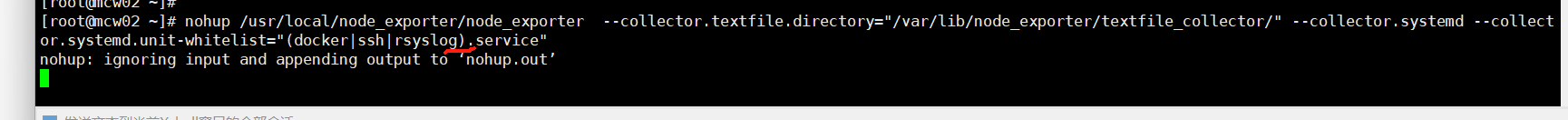

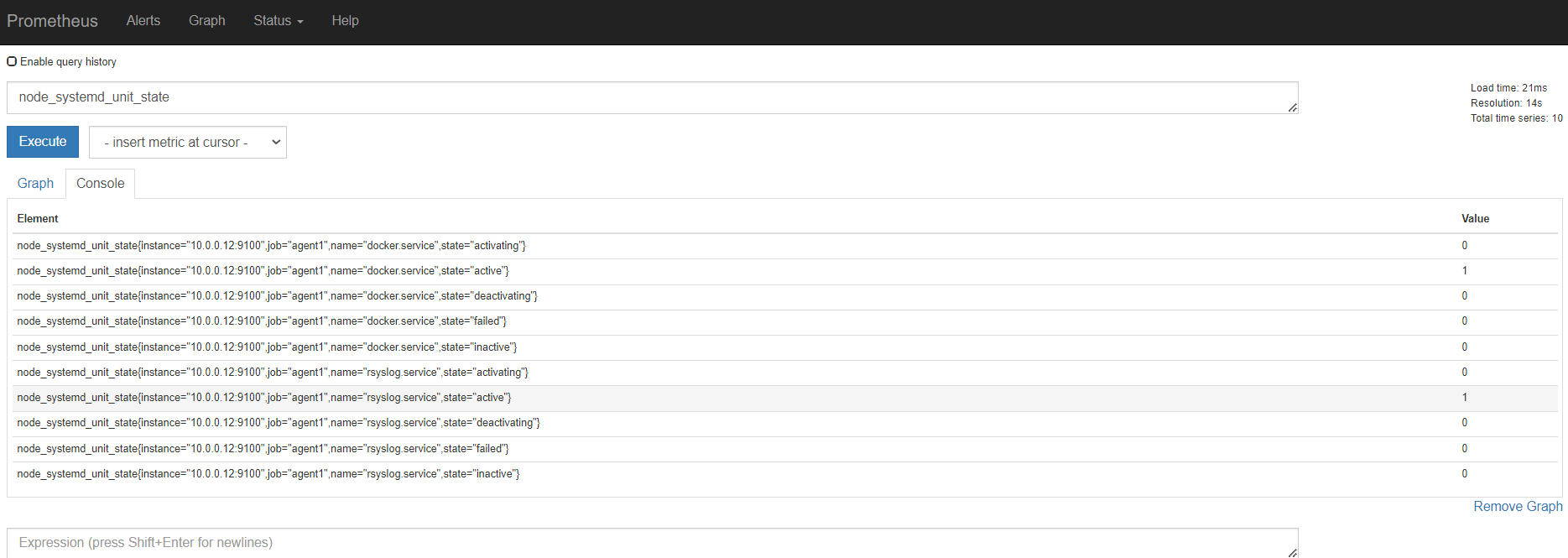

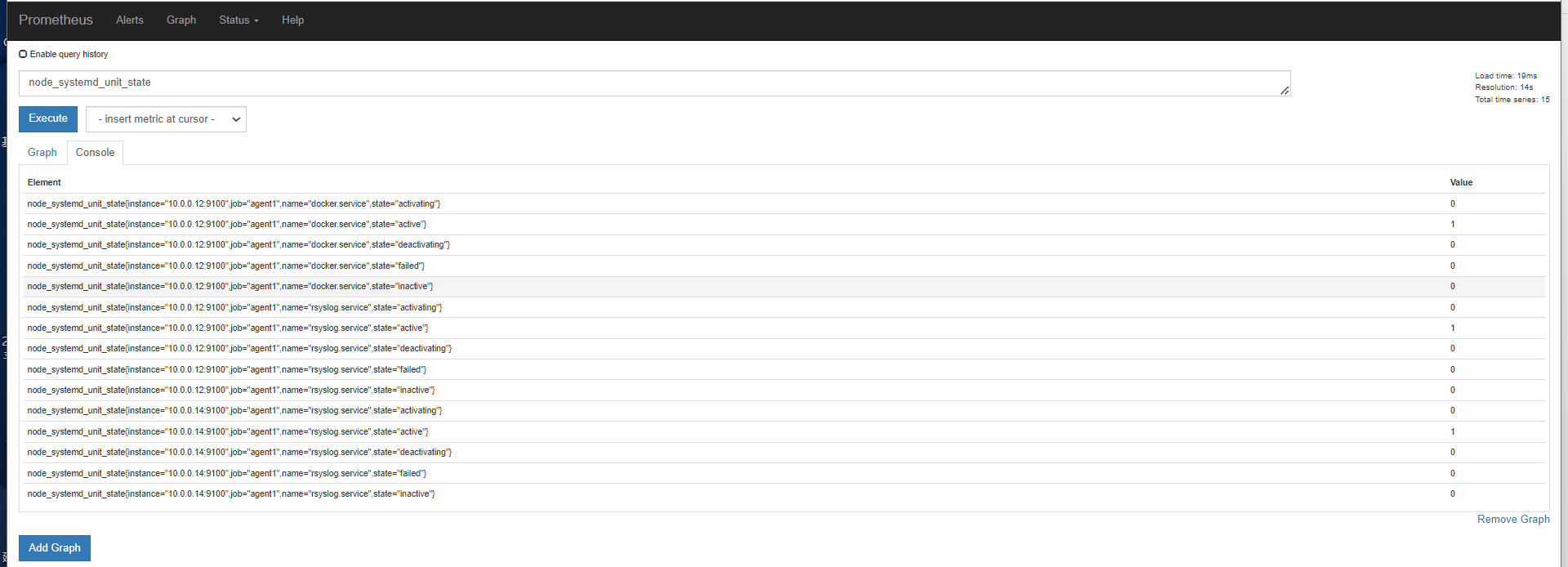

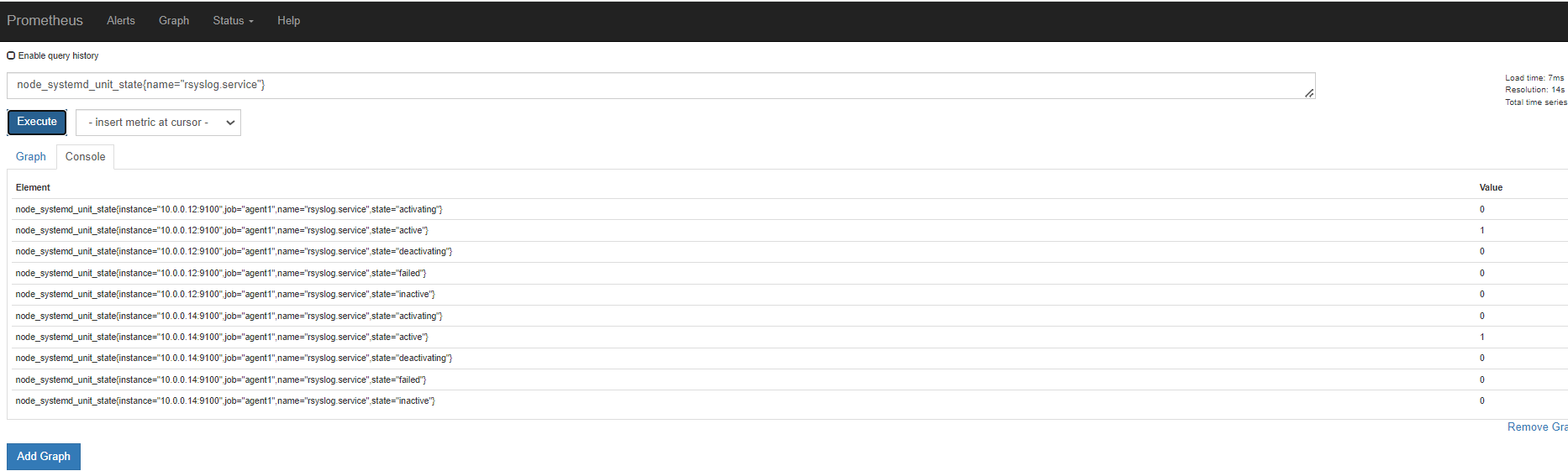

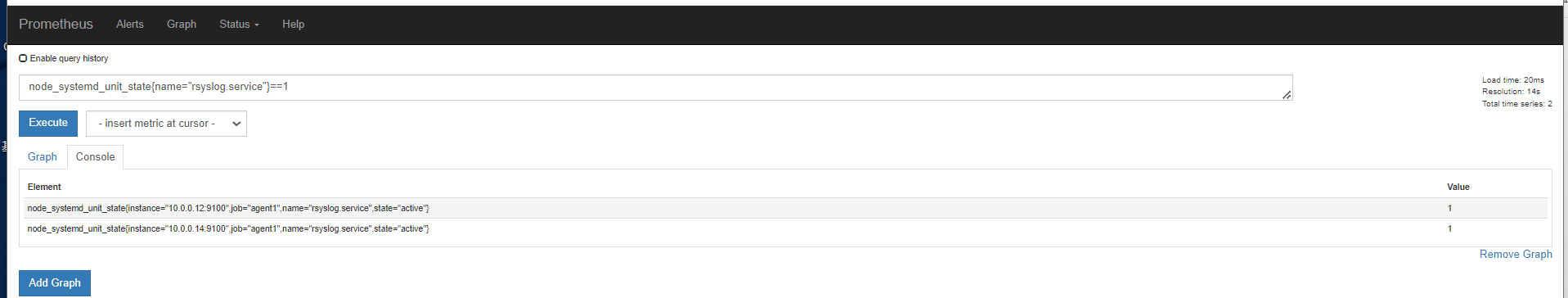

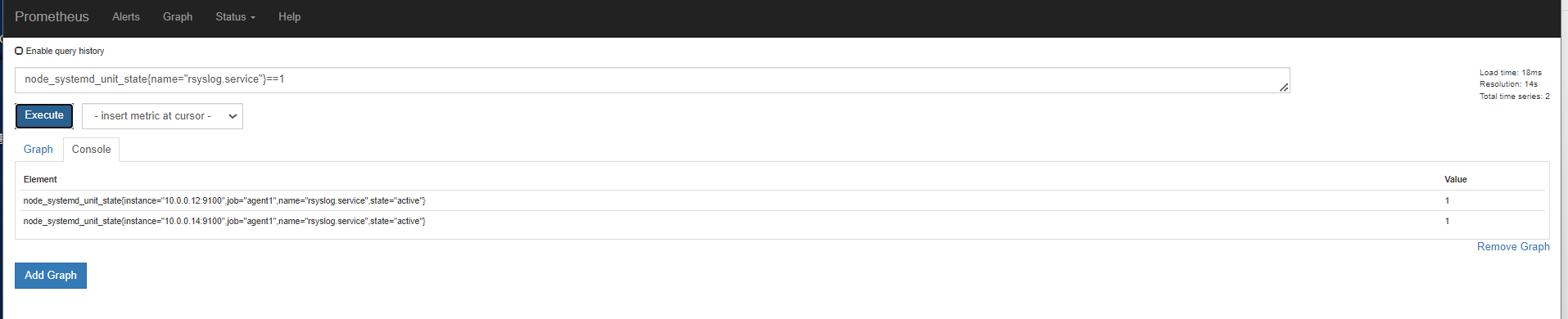

启用systemd收集器

启用后,只想收集的服务加入到白名单:

nohup /usr/local/node_exporter/node_exporter --collector.textfile.directory="/var/lib/node_exporter/textfile_collector/" --collector.systemd --collector.systemd.unit-whitelist="(docker|ssh|rsyslog).service" &

[root@mcw02 ~]# nohup /usr/local/node_exporter/node_exporter --collector.textfile.directory="/var/lib/node_exporter/textfile_collector/" --collector.systemd --collector.system.unit-whitelist="(docker|ssh|rsyslog}.service" &

[1] 48427

[root@mcw02 ~]#

结果报错了

[root@mcw02 ~]# tail nohup.out

time="2024-02-01T01:00:24+08:00" level=info msg=" - timex" source="node_exporter.go:97"

time="2024-02-01T01:00:24+08:00" level=info msg=" - uname" source="node_exporter.go:97"

time="2024-02-01T01:00:24+08:00" level=info msg=" - vmstat" source="node_exporter.go:97"

time="2024-02-01T01:00:24+08:00" level=info msg=" - wifi" source="node_exporter.go:97"

time="2024-02-01T01:00:24+08:00" level=info msg=" - xfs" source="node_exporter.go:97"

time="2024-02-01T01:00:24+08:00" level=info msg=" - zfs" source="node_exporter.go:97"

time="2024-02-01T01:00:24+08:00" level=info msg="Listening on :9100" source="node_exporter.go:111"

node_exporter: error: unknown long flag '--collector.system.unit-whitelist', try --help

node_exporter: error: unknown long flag '--collector.system.unit-whitelist', try --help

node_exporter: error: unknown long flag '--collector.system.unit-whitelist', try --help

[root@mcw02 ~]#

再次尝试启动

[root@mcw02 ~]# nohup /usr/local/node_exporter/node_exporter --collector.textfile.directory="/var/lib/node_exporter/textfile_collector/" --collector.systemd --collector.systemd.unit-whitelist="(docker|ssh|rsyslog}.service"

nohup: ignoring input and appending output to ‘nohup.out’

[root@mcw02 ~]#

正则有问题吧

time="2024-02-01T08:54:14+08:00" level=info msg="Starting node_exporter (version=0.16.0, branch=HEAD, revision=d42bd70f4363dced6b77d8fc311ea57b63387e4f)" source="node_exporter.go:82"

time="2024-02-01T08:54:14+08:00" level=info msg="Build context (go=go1.9.6, user=root@a67a9bc13a69, date=20180515-15:52:42)" source="node_exporter.go:83"

panic: regexp: Compile(`^(?:(docker|ssh|rsyslog}.service)$`): error parsing regexp: missing closing ): `^(?:(docker|ssh|rsyslog}.service)$` goroutine 1 [running]:

regexp.MustCompile(0xc42001eed0, 0x22, 0xc42014fb18)

/usr/local/go/src/regexp/regexp.go:240 +0x171

github.com/prometheus/node_exporter/collector.NewSystemdCollector(0xa1ac40, 0xc42012df80, 0xac973e, 0x7)

/go/src/github.com/prometheus/node_exporter/collector/systemd_linux.go:69 +0x44c

github.com/prometheus/node_exporter/collector.NewNodeCollector(0x0, 0x0, 0x0, 0xc420147dd0, 0xc4201784b0, 0xc42016b0a0)

/go/src/github.com/prometheus/node_exporter/collector/collector.go:94 +0x435

main.main()

/go/src/github.com/prometheus/node_exporter/node_exporter.go:86 +0x622

(END)

这下可以了,花括号问题

正常启动

[root@mcw02 ~]# nohup /usr/local/node_exporter/node_exporter --collector.textfile.directory="/var/lib/node_exporter/textfile_collector/" --collector.systemd --collector.systemd.unit-whitelist="(docker|ssh|rsyslog).service" &

[1] 48675

[root@mcw02 ~]#

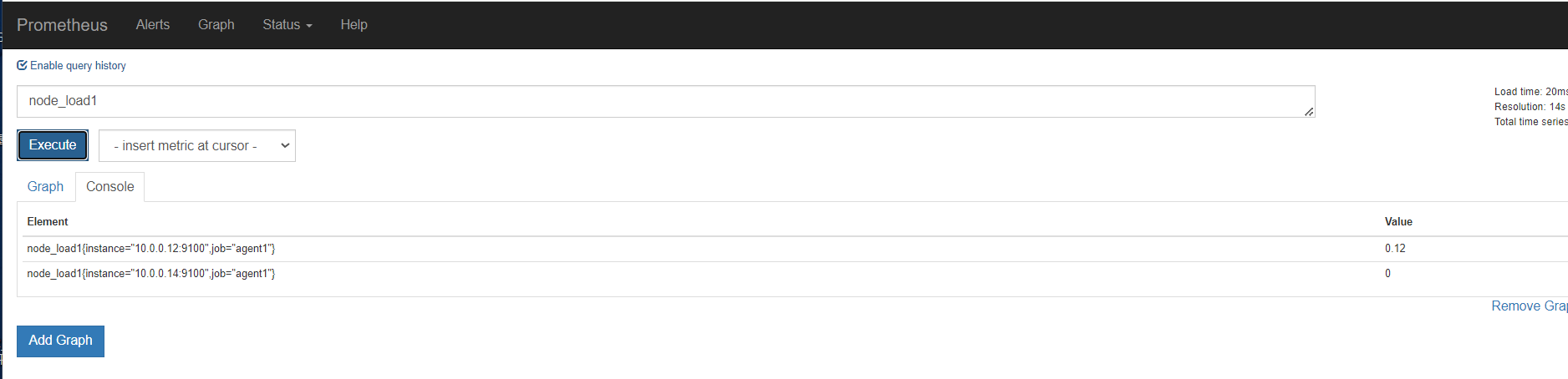

将target合并到一个target里面,作为列表元素

[root@mcw03 prometheus]# grep -A 5 agent /etc/prometheus.yml

- job_name: 'agent1'

static_configs:

- targets: ['10.0.0.14:9100']

- targets: ['10.0.0.12:9100']

- job_name: 'promserver'

static_configs:

[root@mcw03 prometheus]#

[root@mcw03 prometheus]# vim /etc/prometheus.yml

[root@mcw03 prometheus]# grep -A 5 agent /etc/prometheus.yml

- job_name: 'agent1'

static_configs:

- targets: ['10.0.0.14:9100','10.0.0.12:9100']

- job_name: 'promserver'

static_configs:

- targets: ['10.0.0.13:9100']

[root@mcw03 prometheus]# curl -X POST http://localhost:9090/-/reload

[root@mcw03 prometheus]#

正常up

过滤收集器

查看两者启动方式不同

[root@mcw02 ~]# ps -ef|grep -v grep |grep node_exporter

root 48675 1746 0 08:58 pts/0 00:00:00 /usr/local/node_exporter/node_exporter --collector.textfile.directory=/var/lib/node_exporter/textfile_collector/ --collector.systemd --collector.systemd.unit-whitelist=(docker|ssh|rsyslog).service

[root@mcw02 ~]#

[root@mcw04 ~]# ps -ef|grep -v grep |grep node_exporter

root 16003 2129 0 Jan30 pts/0 00:03:13 /usr/local/node_exporter/node_exporter

[root@mcw04 ~]#

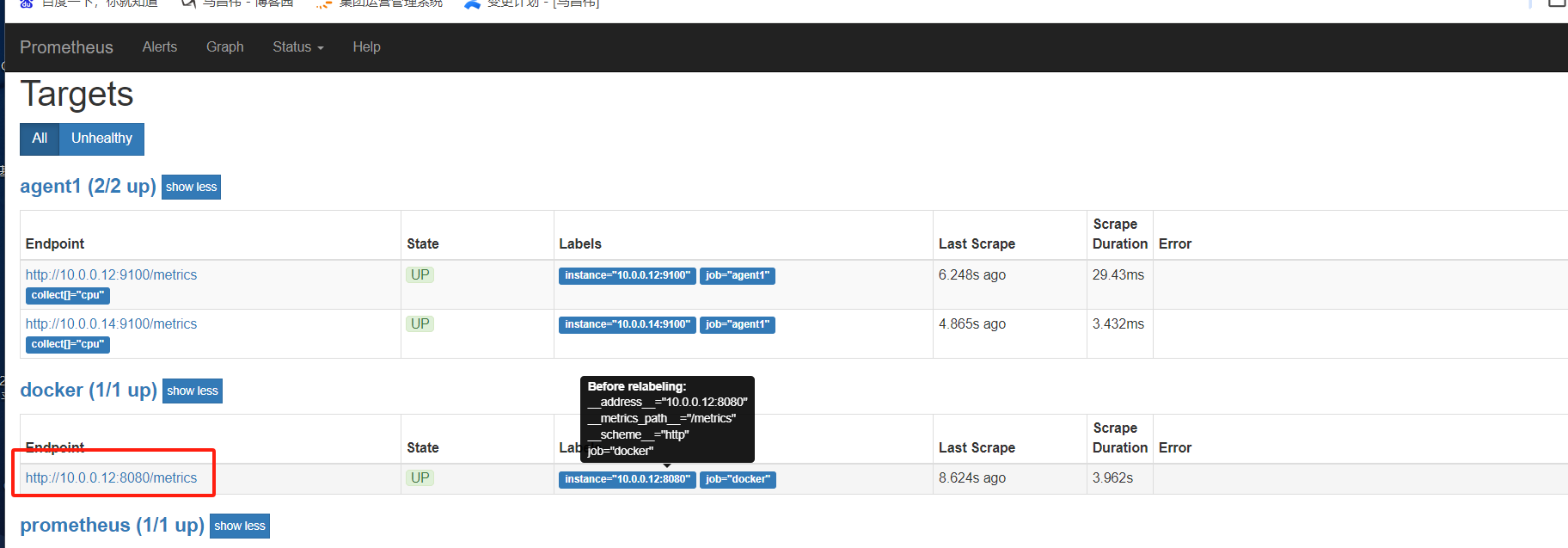

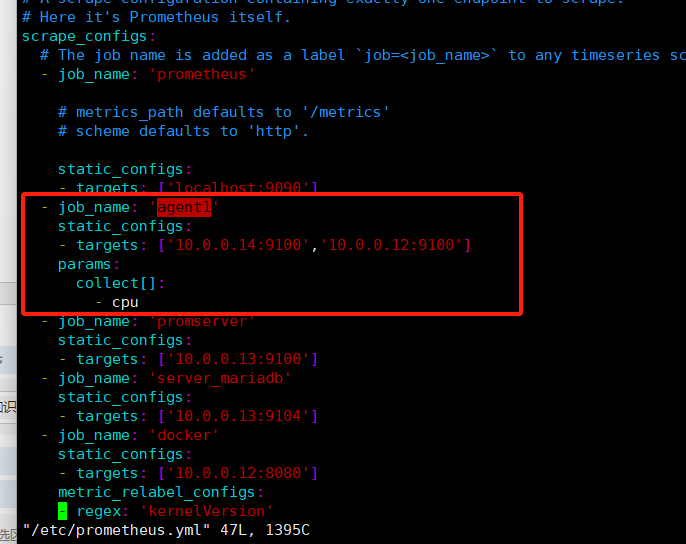

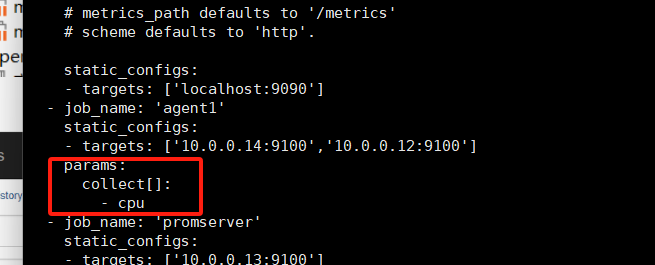

添加params。只收集下面的那些

[root@mcw03 prometheus]# grep -A 5 agent /etc/prometheus.yml

- job_name: 'agent1'

static_configs:

- targets: ['10.0.0.14:9100','10.0.0.12:9100']

- job_name: 'promserver'

static_configs:

- targets: ['10.0.0.13:9100']

[root@mcw03 prometheus]# vim /etc/prometheus.yml

[root@mcw03 prometheus]# grep -A 14 agent /etc/prometheus.yml

- job_name: 'agent1'

static_configs:

- targets: ['10.0.0.14:9100','10.0.0.12:9100']

params:

collect[]:

- cpu

- meminfo

- diskstats

- netdev

- filefd

- filesystem

- xfs

- systemd

- job_name: 'promserver'

static_configs:

[root@mcw03 prometheus]# curl -X POST http://localhost:9090/-/reload

[root@mcw03 prometheus]#

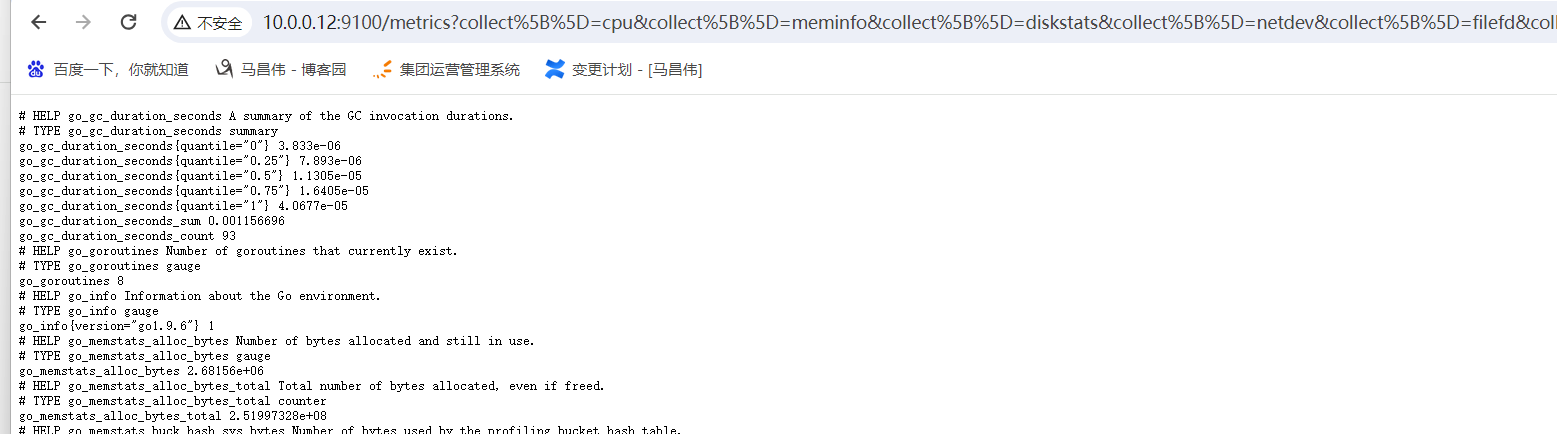

启用了systemd的,使用params之后才正常up,么有启动的14节点的报错。并且endpoint下面有标签,收集那些指标

| server returned HTTP status 400 Bad Request |

查看12节点,还是有很多指标

# HELP go_gc_duration_seconds A summary of the GC invocation durations.

# TYPE go_gc_duration_seconds summary

go_gc_duration_seconds{quantile="0"} 3.833e-06

go_gc_duration_seconds{quantile="0.25"} 7.893e-06

go_gc_duration_seconds{quantile="0.5"} 1.1305e-05

go_gc_duration_seconds{quantile="0.75"} 1.6405e-05

go_gc_duration_seconds{quantile="1"} 4.0677e-05

go_gc_duration_seconds_sum 0.001156696

go_gc_duration_seconds_count 93

# HELP go_goroutines Number of goroutines that currently exist.

# TYPE go_goroutines gauge

go_goroutines 8

# HELP go_info Information about the Go environment.

# TYPE go_info gauge

go_info{version="go1.9.6"} 1

# HELP go_memstats_alloc_bytes Number of bytes allocated and still in use.

# TYPE go_memstats_alloc_bytes gauge

go_memstats_alloc_bytes 2.68156e+06

# HELP go_memstats_alloc_bytes_total Total number of bytes allocated, even if freed.

# TYPE go_memstats_alloc_bytes_total counter

go_memstats_alloc_bytes_total 2.51997328e+08

# HELP go_memstats_buck_hash_sys_bytes Number of bytes used by the profiling bucket hash table.

# TYPE go_memstats_buck_hash_sys_bytes gauge

go_memstats_buck_hash_sys_bytes 1.477664e+06

# HELP go_memstats_frees_total Total number of frees.

# TYPE go_memstats_frees_total counter

go_memstats_frees_total 1.882502e+06

# HELP go_memstats_gc_cpu_fraction The fraction of this program's available CPU time used by the GC since the program started.

# TYPE go_memstats_gc_cpu_fraction gauge

go_memstats_gc_cpu_fraction 0.00016678596611586499

# HELP go_memstats_gc_sys_bytes Number of bytes used for garbage collection system metadata.

# TYPE go_memstats_gc_sys_bytes gauge

go_memstats_gc_sys_bytes 462848

# HELP go_memstats_heap_alloc_bytes Number of heap bytes allocated and still in use.

# TYPE go_memstats_heap_alloc_bytes gauge

go_memstats_heap_alloc_bytes 2.68156e+06

# HELP go_memstats_heap_idle_bytes Number of heap bytes waiting to be used.

# TYPE go_memstats_heap_idle_bytes gauge

go_memstats_heap_idle_bytes 3.866624e+06

# HELP go_memstats_heap_inuse_bytes Number of heap bytes that are in use.

# TYPE go_memstats_heap_inuse_bytes gauge

go_memstats_heap_inuse_bytes 3.735552e+06

# HELP go_memstats_heap_objects Number of allocated objects.

# TYPE go_memstats_heap_objects gauge

go_memstats_heap_objects 18119

# HELP go_memstats_heap_released_bytes Number of heap bytes released to OS.

# TYPE go_memstats_heap_released_bytes gauge

go_memstats_heap_released_bytes 0

# HELP go_memstats_heap_sys_bytes Number of heap bytes obtained from system.

# TYPE go_memstats_heap_sys_bytes gauge

go_memstats_heap_sys_bytes 7.602176e+06

# HELP go_memstats_last_gc_time_seconds Number of seconds since 1970 of last garbage collection.

# TYPE go_memstats_last_gc_time_seconds gauge

go_memstats_last_gc_time_seconds 1.7067497371836941e+09

# HELP go_memstats_lookups_total Total number of pointer lookups.

# TYPE go_memstats_lookups_total counter

go_memstats_lookups_total 2795

# HELP go_memstats_mallocs_total Total number of mallocs.

# TYPE go_memstats_mallocs_total counter

go_memstats_mallocs_total 1.900621e+06

# HELP go_memstats_mcache_inuse_bytes Number of bytes in use by mcache structures.

# TYPE go_memstats_mcache_inuse_bytes gauge

go_memstats_mcache_inuse_bytes 1736

# HELP go_memstats_mcache_sys_bytes Number of bytes used for mcache structures obtained from system.

# TYPE go_memstats_mcache_sys_bytes gauge

go_memstats_mcache_sys_bytes 16384

# HELP go_memstats_mspan_inuse_bytes Number of bytes in use by mspan structures.

# TYPE go_memstats_mspan_inuse_bytes gauge

go_memstats_mspan_inuse_bytes 44384

# HELP go_memstats_mspan_sys_bytes Number of bytes used for mspan structures obtained from system.

# TYPE go_memstats_mspan_sys_bytes gauge

go_memstats_mspan_sys_bytes 65536

# HELP go_memstats_next_gc_bytes Number of heap bytes when next garbage collection will take place.

# TYPE go_memstats_next_gc_bytes gauge

go_memstats_next_gc_bytes 4.194304e+06

# HELP go_memstats_other_sys_bytes Number of bytes used for other system allocations.

# TYPE go_memstats_other_sys_bytes gauge

go_memstats_other_sys_bytes 466136

# HELP go_memstats_stack_inuse_bytes Number of bytes in use by the stack allocator.

# TYPE go_memstats_stack_inuse_bytes gauge

go_memstats_stack_inuse_bytes 327680

# HELP go_memstats_stack_sys_bytes Number of bytes obtained from system for stack allocator.

# TYPE go_memstats_stack_sys_bytes gauge

go_memstats_stack_sys_bytes 327680

# HELP go_memstats_sys_bytes Number of bytes obtained from system.

# TYPE go_memstats_sys_bytes gauge

go_memstats_sys_bytes 1.0418424e+07

# HELP go_threads Number of OS threads created.

# TYPE go_threads gauge

go_threads 4

# HELP node_cpu_guest_seconds_total Seconds the cpus spent in guests (VMs) for each mode.

# TYPE node_cpu_guest_seconds_total counter

node_cpu_guest_seconds_total{cpu="0",mode="nice"} 0

node_cpu_guest_seconds_total{cpu="0",mode="user"} 0

# HELP node_cpu_seconds_total Seconds the cpus spent in each mode.

# TYPE node_cpu_seconds_total counter

node_cpu_seconds_total{cpu="0",mode="idle"} 318303.94

node_cpu_seconds_total{cpu="0",mode="iowait"} 160.76

node_cpu_seconds_total{cpu="0",mode="irq"} 0

node_cpu_seconds_total{cpu="0",mode="nice"} 0.09

node_cpu_seconds_total{cpu="0",mode="softirq"} 118.83

node_cpu_seconds_total{cpu="0",mode="steal"} 0

node_cpu_seconds_total{cpu="0",mode="system"} 2119.13

node_cpu_seconds_total{cpu="0",mode="user"} 2840.86

# HELP node_disk_io_now The number of I/Os currently in progress.

# TYPE node_disk_io_now gauge

node_disk_io_now{device="dm-0"} 0

node_disk_io_now{device="dm-1"} 0

node_disk_io_now{device="sda"} 0

node_disk_io_now{device="sr0"} 0

# HELP node_disk_io_time_seconds_total Total seconds spent doing I/Os.

# TYPE node_disk_io_time_seconds_total counter

node_disk_io_time_seconds_total{device="dm-0"} 764.7230000000001

node_disk_io_time_seconds_total{device="dm-1"} 0.325

node_disk_io_time_seconds_total{device="sda"} 764.6610000000001

node_disk_io_time_seconds_total{device="sr0"} 0

# HELP node_disk_io_time_weighted_seconds_total The weighted # of seconds spent doing I/Os. See https://www.kernel.org/doc/Documentation/iostats.txt.

# TYPE node_disk_io_time_weighted_seconds_total counter

node_disk_io_time_weighted_seconds_total{device="dm-0"} 1936.368

node_disk_io_time_weighted_seconds_total{device="dm-1"} 0.325

node_disk_io_time_weighted_seconds_total{device="sda"} 1856.987

node_disk_io_time_weighted_seconds_total{device="sr0"} 0

# HELP node_disk_read_bytes_total The total number of bytes read successfully.

# TYPE node_disk_read_bytes_total counter

node_disk_read_bytes_total{device="dm-0"} 1.756449792e+09

node_disk_read_bytes_total{device="dm-1"} 380928

node_disk_read_bytes_total{device="sda"} 1.790110208e+09

node_disk_read_bytes_total{device="sr0"} 0

# HELP node_disk_read_time_seconds_total The total number of milliseconds spent by all reads.

# TYPE node_disk_read_time_seconds_total counter

node_disk_read_time_seconds_total{device="dm-0"} 1024.198

node_disk_read_time_seconds_total{device="dm-1"} 0.325

node_disk_read_time_seconds_total{device="sda"} 1062.2060000000001

node_disk_read_time_seconds_total{device="sr0"} 0

# HELP node_disk_reads_completed_total The total number of reads completed successfully.

# TYPE node_disk_reads_completed_total counter

node_disk_reads_completed_total{device="dm-0"} 16044

node_disk_reads_completed_total{device="dm-1"} 62

node_disk_reads_completed_total{device="sda"} 18306

node_disk_reads_completed_total{device="sr0"} 0

# HELP node_disk_reads_merged_total The total number of reads merged. See https://www.kernel.org/doc/Documentation/iostats.txt.

# TYPE node_disk_reads_merged_total counter

node_disk_reads_merged_total{device="dm-0"} 0

node_disk_reads_merged_total{device="dm-1"} 0

node_disk_reads_merged_total{device="sda"} 39

node_disk_reads_merged_total{device="sr0"} 0

# HELP node_disk_write_time_seconds_total This is the total number of seconds spent by all writes.

# TYPE node_disk_write_time_seconds_total counter

node_disk_write_time_seconds_total{device="dm-0"} 912.142

node_disk_write_time_seconds_total{device="dm-1"} 0

node_disk_write_time_seconds_total{device="sda"} 809.452

node_disk_write_time_seconds_total{device="sr0"} 0

# HELP node_disk_writes_completed_total The total number of writes completed successfully.

# TYPE node_disk_writes_completed_total counter

node_disk_writes_completed_total{device="dm-0"} 965171

node_disk_writes_completed_total{device="dm-1"} 0

node_disk_writes_completed_total{device="sda"} 873870

node_disk_writes_completed_total{device="sr0"} 0

# HELP node_disk_writes_merged_total The number of writes merged. See https://www.kernel.org/doc/Documentation/iostats.txt.

# TYPE node_disk_writes_merged_total counter

node_disk_writes_merged_total{device="dm-0"} 0

node_disk_writes_merged_total{device="dm-1"} 0

node_disk_writes_merged_total{device="sda"} 91307

node_disk_writes_merged_total{device="sr0"} 0

# HELP node_disk_written_bytes_total The total number of bytes written successfully.

# TYPE node_disk_written_bytes_total counter

node_disk_written_bytes_total{device="dm-0"} 3.3735647744e+10

node_disk_written_bytes_total{device="dm-1"} 0

node_disk_written_bytes_total{device="sda"} 3.3737777664e+10

node_disk_written_bytes_total{device="sr0"} 0

# HELP node_exporter_build_info A metric with a constant '1' value labeled by version, revision, branch, and goversion from which node_exporter was built.

# TYPE node_exporter_build_info gauge

node_exporter_build_info{branch="HEAD",goversion="go1.9.6",revision="d42bd70f4363dced6b77d8fc311ea57b63387e4f",version="0.16.0"} 1

# HELP node_filefd_allocated File descriptor statistics: allocated.

# TYPE node_filefd_allocated gauge

node_filefd_allocated 3552

# HELP node_filefd_maximum File descriptor statistics: maximum.

# TYPE node_filefd_maximum gauge

node_filefd_maximum 2e+06

# HELP node_filesystem_avail_bytes Filesystem space available to non-root users in bytes.

# TYPE node_filesystem_avail_bytes gauge

node_filesystem_avail_bytes{device="/dev/mapper/centos-root",fstype="xfs",mountpoint="/"} 9.521995776e+09

node_filesystem_avail_bytes{device="/dev/sda1",fstype="xfs",mountpoint="/boot"} 9.13555456e+08

node_filesystem_avail_bytes{device="rootfs",fstype="rootfs",mountpoint="/"} 9.521995776e+09

node_filesystem_avail_bytes{device="tmpfs",fstype="tmpfs",mountpoint="/run"} 1.969913856e+09

node_filesystem_avail_bytes{device="tmpfs",fstype="tmpfs",mountpoint="/run/user/0"} 3.95804672e+08

# HELP node_filesystem_device_error Whether an error occurred while getting statistics for the given device.

# TYPE node_filesystem_device_error gauge

node_filesystem_device_error{device="/dev/mapper/centos-root",fstype="xfs",mountpoint="/"} 0

node_filesystem_device_error{device="/dev/sda1",fstype="xfs",mountpoint="/boot"} 0

node_filesystem_device_error{device="rootfs",fstype="rootfs",mountpoint="/"} 0

node_filesystem_device_error{device="tmpfs",fstype="tmpfs",mountpoint="/run"} 0

node_filesystem_device_error{device="tmpfs",fstype="tmpfs",mountpoint="/run/user/0"} 0

# HELP node_filesystem_files Filesystem total file nodes.

# TYPE node_filesystem_files gauge

node_filesystem_files{device="/dev/mapper/centos-root",fstype="xfs",mountpoint="/"} 9.957376e+06

node_filesystem_files{device="/dev/sda1",fstype="xfs",mountpoint="/boot"} 524288

node_filesystem_files{device="rootfs",fstype="rootfs",mountpoint="/"} 9.957376e+06

node_filesystem_files{device="tmpfs",fstype="tmpfs",mountpoint="/run"} 483160

node_filesystem_files{device="tmpfs",fstype="tmpfs",mountpoint="/run/user/0"} 483160

# HELP node_filesystem_files_free Filesystem total free file nodes.

# TYPE node_filesystem_files_free gauge

node_filesystem_files_free{device="/dev/mapper/centos-root",fstype="xfs",mountpoint="/"} 9.660103e+06

node_filesystem_files_free{device="/dev/sda1",fstype="xfs",mountpoint="/boot"} 523960

node_filesystem_files_free{device="rootfs",fstype="rootfs",mountpoint="/"} 9.660103e+06

node_filesystem_files_free{device="tmpfs",fstype="tmpfs",mountpoint="/run"} 482605

node_filesystem_files_free{device="tmpfs",fstype="tmpfs",mountpoint="/run/user/0"} 483159

# HELP node_filesystem_free_bytes Filesystem free space in bytes.

# TYPE node_filesystem_free_bytes gauge

node_filesystem_free_bytes{device="/dev/mapper/centos-root",fstype="xfs",mountpoint="/"} 9.521995776e+09

node_filesystem_free_bytes{device="/dev/sda1",fstype="xfs",mountpoint="/boot"} 9.13555456e+08

node_filesystem_free_bytes{device="rootfs",fstype="rootfs",mountpoint="/"} 9.521995776e+09

node_filesystem_free_bytes{device="tmpfs",fstype="tmpfs",mountpoint="/run"} 1.969913856e+09

node_filesystem_free_bytes{device="tmpfs",fstype="tmpfs",mountpoint="/run/user/0"} 3.95804672e+08

# HELP node_filesystem_readonly Filesystem read-only status.

# TYPE node_filesystem_readonly gauge

node_filesystem_readonly{device="/dev/mapper/centos-root",fstype="xfs",mountpoint="/"} 0

node_filesystem_readonly{device="/dev/sda1",fstype="xfs",mountpoint="/boot"} 0

node_filesystem_readonly{device="rootfs",fstype="rootfs",mountpoint="/"} 0

node_filesystem_readonly{device="tmpfs",fstype="tmpfs",mountpoint="/run"} 0

node_filesystem_readonly{device="tmpfs",fstype="tmpfs",mountpoint="/run/user/0"} 0

# HELP node_filesystem_size_bytes Filesystem size in bytes.

# TYPE node_filesystem_size_bytes gauge

node_filesystem_size_bytes{device="/dev/mapper/centos-root",fstype="xfs",mountpoint="/"} 2.0382220288e+10

node_filesystem_size_bytes{device="/dev/sda1",fstype="xfs",mountpoint="/boot"} 1.063256064e+09

node_filesystem_size_bytes{device="rootfs",fstype="rootfs",mountpoint="/"} 2.0382220288e+10

node_filesystem_size_bytes{device="tmpfs",fstype="tmpfs",mountpoint="/run"} 1.97902336e+09

node_filesystem_size_bytes{device="tmpfs",fstype="tmpfs",mountpoint="/run/user/0"} 3.95804672e+08

# HELP node_memory_Active_anon_bytes Memory information field Active_anon_bytes.

# TYPE node_memory_Active_anon_bytes gauge

node_memory_Active_anon_bytes 1.359429632e+09

# HELP node_memory_Active_bytes Memory information field Active_bytes.

# TYPE node_memory_Active_bytes gauge

node_memory_Active_bytes 2.195337216e+09

# HELP node_memory_Active_file_bytes Memory information field Active_file_bytes.

# TYPE node_memory_Active_file_bytes gauge

node_memory_Active_file_bytes 8.35907584e+08

# HELP node_memory_AnonHugePages_bytes Memory information field AnonHugePages_bytes.

# TYPE node_memory_AnonHugePages_bytes gauge

node_memory_AnonHugePages_bytes 1.430257664e+09

# HELP node_memory_AnonPages_bytes Memory information field AnonPages_bytes.

# TYPE node_memory_AnonPages_bytes gauge

node_memory_AnonPages_bytes 1.69039872e+09

# HELP node_memory_Bounce_bytes Memory information field Bounce_bytes.

# TYPE node_memory_Bounce_bytes gauge

node_memory_Bounce_bytes 0

# HELP node_memory_Buffers_bytes Memory information field Buffers_bytes.

# TYPE node_memory_Buffers_bytes gauge

node_memory_Buffers_bytes 73728

# HELP node_memory_Cached_bytes Memory information field Cached_bytes.

# TYPE node_memory_Cached_bytes gauge

node_memory_Cached_bytes 1.669480448e+09

# HELP node_memory_CommitLimit_bytes Memory information field CommitLimit_bytes.

# TYPE node_memory_CommitLimit_bytes gauge

node_memory_CommitLimit_bytes 1.983213568e+09

# HELP node_memory_Committed_AS_bytes Memory information field Committed_AS_bytes.

# TYPE node_memory_Committed_AS_bytes gauge

node_memory_Committed_AS_bytes 2.837794816e+09

# HELP node_memory_DirectMap1G_bytes Memory information field DirectMap1G_bytes.

# TYPE node_memory_DirectMap1G_bytes gauge

node_memory_DirectMap1G_bytes 2.147483648e+09

# HELP node_memory_DirectMap2M_bytes Memory information field DirectMap2M_bytes.

# TYPE node_memory_DirectMap2M_bytes gauge

node_memory_DirectMap2M_bytes 4.211081216e+09

# HELP node_memory_DirectMap4k_bytes Memory information field DirectMap4k_bytes.

# TYPE node_memory_DirectMap4k_bytes gauge

node_memory_DirectMap4k_bytes 8.3689472e+07

# HELP node_memory_Dirty_bytes Memory information field Dirty_bytes.

# TYPE node_memory_Dirty_bytes gauge

node_memory_Dirty_bytes 4.374528e+06

# HELP node_memory_HardwareCorrupted_bytes Memory information field HardwareCorrupted_bytes.

# TYPE node_memory_HardwareCorrupted_bytes gauge

node_memory_HardwareCorrupted_bytes 0

# HELP node_memory_HugePages_Free Memory information field HugePages_Free.

# TYPE node_memory_HugePages_Free gauge

node_memory_HugePages_Free 0

# HELP node_memory_HugePages_Rsvd Memory information field HugePages_Rsvd.

# TYPE node_memory_HugePages_Rsvd gauge

node_memory_HugePages_Rsvd 0

# HELP node_memory_HugePages_Surp Memory information field HugePages_Surp.

# TYPE node_memory_HugePages_Surp gauge

node_memory_HugePages_Surp 0

# HELP node_memory_HugePages_Total Memory information field HugePages_Total.

# TYPE node_memory_HugePages_Total gauge

node_memory_HugePages_Total 0

# HELP node_memory_Hugepagesize_bytes Memory information field Hugepagesize_bytes.

# TYPE node_memory_Hugepagesize_bytes gauge

node_memory_Hugepagesize_bytes 2.097152e+06

# HELP node_memory_Inactive_anon_bytes Memory information field Inactive_anon_bytes.

# TYPE node_memory_Inactive_anon_bytes gauge

node_memory_Inactive_anon_bytes 3.40914176e+08

# HELP node_memory_Inactive_bytes Memory information field Inactive_bytes.

# TYPE node_memory_Inactive_bytes gauge

node_memory_Inactive_bytes 1.164660736e+09

# HELP node_memory_Inactive_file_bytes Memory information field Inactive_file_bytes.

# TYPE node_memory_Inactive_file_bytes gauge

node_memory_Inactive_file_bytes 8.2374656e+08

# HELP node_memory_KernelStack_bytes Memory information field KernelStack_bytes.

# TYPE node_memory_KernelStack_bytes gauge

node_memory_KernelStack_bytes 6.995968e+06

# HELP node_memory_Mapped_bytes Memory information field Mapped_bytes.

# TYPE node_memory_Mapped_bytes gauge

node_memory_Mapped_bytes 9.2192768e+07

# HELP node_memory_MemAvailable_bytes Memory information field MemAvailable_bytes.

# TYPE node_memory_MemAvailable_bytes gauge

node_memory_MemAvailable_bytes 1.817350144e+09

# HELP node_memory_MemFree_bytes Memory information field MemFree_bytes.

# TYPE node_memory_MemFree_bytes gauge

node_memory_MemFree_bytes 1.1429888e+08

# HELP node_memory_MemTotal_bytes Memory information field MemTotal_bytes.

# TYPE node_memory_MemTotal_bytes gauge

node_memory_MemTotal_bytes 3.95804672e+09

# HELP node_memory_Mlocked_bytes Memory information field Mlocked_bytes.

# TYPE node_memory_Mlocked_bytes gauge

node_memory_Mlocked_bytes 0

# HELP node_memory_NFS_Unstable_bytes Memory information field NFS_Unstable_bytes.

# TYPE node_memory_NFS_Unstable_bytes gauge

node_memory_NFS_Unstable_bytes 0

# HELP node_memory_PageTables_bytes Memory information field PageTables_bytes.

# TYPE node_memory_PageTables_bytes gauge

node_memory_PageTables_bytes 1.6457728e+07

# HELP node_memory_SReclaimable_bytes Memory information field SReclaimable_bytes.

# TYPE node_memory_SReclaimable_bytes gauge

node_memory_SReclaimable_bytes 3.39439616e+08

# HELP node_memory_SUnreclaim_bytes Memory information field SUnreclaim_bytes.

# TYPE node_memory_SUnreclaim_bytes gauge

node_memory_SUnreclaim_bytes 3.7535744e+07

# HELP node_memory_Shmem_bytes Memory information field Shmem_bytes.

# TYPE node_memory_Shmem_bytes gauge

node_memory_Shmem_bytes 9.945088e+06

# HELP node_memory_Slab_bytes Memory information field Slab_bytes.

# TYPE node_memory_Slab_bytes gauge

node_memory_Slab_bytes 3.7697536e+08

# HELP node_memory_SwapCached_bytes Memory information field SwapCached_bytes.

# TYPE node_memory_SwapCached_bytes gauge

node_memory_SwapCached_bytes 0

# HELP node_memory_SwapFree_bytes Memory information field SwapFree_bytes.

# TYPE node_memory_SwapFree_bytes gauge

node_memory_SwapFree_bytes 4.190208e+06

# HELP node_memory_SwapTotal_bytes Memory information field SwapTotal_bytes.

# TYPE node_memory_SwapTotal_bytes gauge

node_memory_SwapTotal_bytes 4.190208e+06

# HELP node_memory_Unevictable_bytes Memory information field Unevictable_bytes.

# TYPE node_memory_Unevictable_bytes gauge

node_memory_Unevictable_bytes 0

# HELP node_memory_VmallocChunk_bytes Memory information field VmallocChunk_bytes.

# TYPE node_memory_VmallocChunk_bytes gauge

node_memory_VmallocChunk_bytes 3.5183933779968e+13

# HELP node_memory_VmallocTotal_bytes Memory information field VmallocTotal_bytes.

# TYPE node_memory_VmallocTotal_bytes gauge

node_memory_VmallocTotal_bytes 3.5184372087808e+13

# HELP node_memory_VmallocUsed_bytes Memory information field VmallocUsed_bytes.

# TYPE node_memory_VmallocUsed_bytes gauge

node_memory_VmallocUsed_bytes 1.88870656e+08

# HELP node_memory_WritebackTmp_bytes Memory information field WritebackTmp_bytes.

# TYPE node_memory_WritebackTmp_bytes gauge

node_memory_WritebackTmp_bytes 0

# HELP node_memory_Writeback_bytes Memory information field Writeback_bytes.

# TYPE node_memory_Writeback_bytes gauge

node_memory_Writeback_bytes 0

# HELP node_network_receive_bytes_total Network device statistic receive_bytes.

# TYPE node_network_receive_bytes_total counter

node_network_receive_bytes_total{device="ens33"} 3.773615896e+09

node_network_receive_bytes_total{device="ens34"} 0

node_network_receive_bytes_total{device="lo"} 2.9276118e+07

# HELP node_network_receive_compressed_total Network device statistic receive_compressed.

# TYPE node_network_receive_compressed_total counter

node_network_receive_compressed_total{device="ens33"} 0

node_network_receive_compressed_total{device="ens34"} 0

node_network_receive_compressed_total{device="lo"} 0

# HELP node_network_receive_drop_total Network device statistic receive_drop.

# TYPE node_network_receive_drop_total counter

node_network_receive_drop_total{device="ens33"} 0

node_network_receive_drop_total{device="ens34"} 0

node_network_receive_drop_total{device="lo"} 0

# HELP node_network_receive_errs_total Network device statistic receive_errs.

# TYPE node_network_receive_errs_total counter

node_network_receive_errs_total{device="ens33"} 0

node_network_receive_errs_total{device="ens34"} 0

node_network_receive_errs_total{device="lo"} 0

# HELP node_network_receive_fifo_total Network device statistic receive_fifo.

# TYPE node_network_receive_fifo_total counter

node_network_receive_fifo_total{device="ens33"} 0

node_network_receive_fifo_total{device="ens34"} 0

node_network_receive_fifo_total{device="lo"} 0

# HELP node_network_receive_frame_total Network device statistic receive_frame.

# TYPE node_network_receive_frame_total counter

node_network_receive_frame_total{device="ens33"} 0

node_network_receive_frame_total{device="ens34"} 0

node_network_receive_frame_total{device="lo"} 0

# HELP node_network_receive_multicast_total Network device statistic receive_multicast.

# TYPE node_network_receive_multicast_total counter

node_network_receive_multicast_total{device="ens33"} 0

node_network_receive_multicast_total{device="ens34"} 0

node_network_receive_multicast_total{device="lo"} 0

# HELP node_network_receive_packets_total Network device statistic receive_packets.

# TYPE node_network_receive_packets_total counter

node_network_receive_packets_total{device="ens33"} 7.692141e+06

node_network_receive_packets_total{device="ens34"} 0

node_network_receive_packets_total{device="lo"} 490855

# HELP node_network_transmit_bytes_total Network device statistic transmit_bytes.

# TYPE node_network_transmit_bytes_total counter

node_network_transmit_bytes_total{device="ens33"} 1.079574901e+09

node_network_transmit_bytes_total{device="ens34"} 0

node_network_transmit_bytes_total{device="lo"} 2.9276118e+07

# HELP node_network_transmit_carrier_total Network device statistic transmit_carrier.

# TYPE node_network_transmit_carrier_total counter

node_network_transmit_carrier_total{device="ens33"} 0

node_network_transmit_carrier_total{device="ens34"} 0

node_network_transmit_carrier_total{device="lo"} 0

# HELP node_network_transmit_colls_total Network device statistic transmit_colls.

# TYPE node_network_transmit_colls_total counter

node_network_transmit_colls_total{device="ens33"} 0

node_network_transmit_colls_total{device="ens34"} 0

node_network_transmit_colls_total{device="lo"} 0

# HELP node_network_transmit_compressed_total Network device statistic transmit_compressed.

# TYPE node_network_transmit_compressed_total counter

node_network_transmit_compressed_total{device="ens33"} 0

node_network_transmit_compressed_total{device="ens34"} 0

node_network_transmit_compressed_total{device="lo"} 0

# HELP node_network_transmit_drop_total Network device statistic transmit_drop.

# TYPE node_network_transmit_drop_total counter

node_network_transmit_drop_total{device="ens33"} 0

node_network_transmit_drop_total{device="ens34"} 0

node_network_transmit_drop_total{device="lo"} 0

# HELP node_network_transmit_errs_total Network device statistic transmit_errs.

# TYPE node_network_transmit_errs_total counter

node_network_transmit_errs_total{device="ens33"} 0

node_network_transmit_errs_total{device="ens34"} 0

node_network_transmit_errs_total{device="lo"} 0

# HELP node_network_transmit_fifo_total Network device statistic transmit_fifo.

# TYPE node_network_transmit_fifo_total counter

node_network_transmit_fifo_total{device="ens33"} 0

node_network_transmit_fifo_total{device="ens34"} 0

node_network_transmit_fifo_total{device="lo"} 0

# HELP node_network_transmit_packets_total Network device statistic transmit_packets.

# TYPE node_network_transmit_packets_total counter

node_network_transmit_packets_total{device="ens33"} 5.880282e+06

node_network_transmit_packets_total{device="ens34"} 0

node_network_transmit_packets_total{device="lo"} 490855

# HELP node_scrape_collector_duration_seconds node_exporter: Duration of a collector scrape.

# TYPE node_scrape_collector_duration_seconds gauge

node_scrape_collector_duration_seconds{collector="cpu"} 0.00022418

node_scrape_collector_duration_seconds{collector="diskstats"} 0.000150278

node_scrape_collector_duration_seconds{collector="filefd"} 2.0333e-05

node_scrape_collector_duration_seconds{collector="filesystem"} 0.000141861

node_scrape_collector_duration_seconds{collector="meminfo"} 9.9877e-05

node_scrape_collector_duration_seconds{collector="netdev"} 9.8296e-05

node_scrape_collector_duration_seconds{collector="systemd"} 0.008461932

node_scrape_collector_duration_seconds{collector="xfs"} 0.000365428

# HELP node_scrape_collector_success node_exporter: Whether a collector succeeded.

# TYPE node_scrape_collector_success gauge

node_scrape_collector_success{collector="cpu"} 1

node_scrape_collector_success{collector="diskstats"} 1

node_scrape_collector_success{collector="filefd"} 1

node_scrape_collector_success{collector="filesystem"} 1

node_scrape_collector_success{collector="meminfo"} 1

node_scrape_collector_success{collector="netdev"} 1

node_scrape_collector_success{collector="systemd"} 1

node_scrape_collector_success{collector="xfs"} 1

# HELP node_systemd_system_running Whether the system is operational (see 'systemctl is-system-running')

# TYPE node_systemd_system_running gauge

node_systemd_system_running 1

# HELP node_systemd_unit_state Systemd unit

# TYPE node_systemd_unit_state gauge

node_systemd_unit_state{name="rsyslog.service",state="activating"} 0

node_systemd_unit_state{name="rsyslog.service",state="active"} 1

node_systemd_unit_state{name="rsyslog.service",state="deactivating"} 0

node_systemd_unit_state{name="rsyslog.service",state="failed"} 0

node_systemd_unit_state{name="rsyslog.service",state="inactive"} 0

# HELP node_systemd_units Summary of systemd unit states

# TYPE node_systemd_units gauge

node_systemd_units{state="activating"} 0

node_systemd_units{state="active"} 154

node_systemd_units{state="deactivating"} 0

node_systemd_units{state="failed"} 0

node_systemd_units{state="inactive"} 74

# HELP node_xfs_allocation_btree_compares_total Number of allocation B-tree compares for a filesystem.

# TYPE node_xfs_allocation_btree_compares_total counter

node_xfs_allocation_btree_compares_total{device="dm-0"} 0

node_xfs_allocation_btree_compares_total{device="sda1"} 0

# HELP node_xfs_allocation_btree_lookups_total Number of allocation B-tree lookups for a filesystem.

# TYPE node_xfs_allocation_btree_lookups_total counter

node_xfs_allocation_btree_lookups_total{device="dm-0"} 0

node_xfs_allocation_btree_lookups_total{device="sda1"} 0

# HELP node_xfs_allocation_btree_records_deleted_total Number of allocation B-tree records deleted for a filesystem.

# TYPE node_xfs_allocation_btree_records_deleted_total counter

node_xfs_allocation_btree_records_deleted_total{device="dm-0"} 0

node_xfs_allocation_btree_records_deleted_total{device="sda1"} 0

# HELP node_xfs_allocation_btree_records_inserted_total Number of allocation B-tree records inserted for a filesystem.

# TYPE node_xfs_allocation_btree_records_inserted_total counter

node_xfs_allocation_btree_records_inserted_total{device="dm-0"} 0

node_xfs_allocation_btree_records_inserted_total{device="sda1"} 0

# HELP node_xfs_block_map_btree_compares_total Number of block map B-tree compares for a filesystem.

# TYPE node_xfs_block_map_btree_compares_total counter

node_xfs_block_map_btree_compares_total{device="dm-0"} 0

node_xfs_block_map_btree_compares_total{device="sda1"} 0

# HELP node_xfs_block_map_btree_lookups_total Number of block map B-tree lookups for a filesystem.

# TYPE node_xfs_block_map_btree_lookups_total counter

node_xfs_block_map_btree_lookups_total{device="dm-0"} 0

node_xfs_block_map_btree_lookups_total{device="sda1"} 0

# HELP node_xfs_block_map_btree_records_deleted_total Number of block map B-tree records deleted for a filesystem.

# TYPE node_xfs_block_map_btree_records_deleted_total counter

node_xfs_block_map_btree_records_deleted_total{device="dm-0"} 0

node_xfs_block_map_btree_records_deleted_total{device="sda1"} 0

# HELP node_xfs_block_map_btree_records_inserted_total Number of block map B-tree records inserted for a filesystem.

# TYPE node_xfs_block_map_btree_records_inserted_total counter

node_xfs_block_map_btree_records_inserted_total{device="dm-0"} 0

node_xfs_block_map_btree_records_inserted_total{device="sda1"} 0

# HELP node_xfs_block_mapping_extent_list_compares_total Number of extent list compares for a filesystem.

# TYPE node_xfs_block_mapping_extent_list_compares_total counter

node_xfs_block_mapping_extent_list_compares_total{device="dm-0"} 0

node_xfs_block_mapping_extent_list_compares_total{device="sda1"} 0

# HELP node_xfs_block_mapping_extent_list_deletions_total Number of extent list deletions for a filesystem.

# TYPE node_xfs_block_mapping_extent_list_deletions_total counter

node_xfs_block_mapping_extent_list_deletions_total{device="dm-0"} 1.988396e+06

node_xfs_block_mapping_extent_list_deletions_total{device="sda1"} 0

# HELP node_xfs_block_mapping_extent_list_insertions_total Number of extent list insertions for a filesystem.

# TYPE node_xfs_block_mapping_extent_list_insertions_total counter

node_xfs_block_mapping_extent_list_insertions_total{device="dm-0"} 191678

node_xfs_block_mapping_extent_list_insertions_total{device="sda1"} 0

# HELP node_xfs_block_mapping_extent_list_lookups_total Number of extent list lookups for a filesystem.

# TYPE node_xfs_block_mapping_extent_list_lookups_total counter

node_xfs_block_mapping_extent_list_lookups_total{device="dm-0"} 4.843281e+07

node_xfs_block_mapping_extent_list_lookups_total{device="sda1"} 164

# HELP node_xfs_block_mapping_reads_total Number of block map for read operations for a filesystem.

# TYPE node_xfs_block_mapping_reads_total counter

node_xfs_block_mapping_reads_total{device="dm-0"} 3.2221106e+07

node_xfs_block_mapping_reads_total{device="sda1"} 164

# HELP node_xfs_block_mapping_unmaps_total Number of block unmaps (deletes) for a filesystem.

# TYPE node_xfs_block_mapping_unmaps_total counter

node_xfs_block_mapping_unmaps_total{device="dm-0"} 3.77095e+06

node_xfs_block_mapping_unmaps_total{device="sda1"} 0

# HELP node_xfs_block_mapping_writes_total Number of block map for write operations for a filesystem.

# TYPE node_xfs_block_mapping_writes_total counter

node_xfs_block_mapping_writes_total{device="dm-0"} 1.2439528e+07

node_xfs_block_mapping_writes_total{device="sda1"} 0

# HELP node_xfs_extent_allocation_blocks_allocated_total Number of blocks allocated for a filesystem.

# TYPE node_xfs_extent_allocation_blocks_allocated_total counter

node_xfs_extent_allocation_blocks_allocated_total{device="dm-0"} 8.194099e+06

node_xfs_extent_allocation_blocks_allocated_total{device="sda1"} 0

# HELP node_xfs_extent_allocation_blocks_freed_total Number of blocks freed for a filesystem.

# TYPE node_xfs_extent_allocation_blocks_freed_total counter

node_xfs_extent_allocation_blocks_freed_total{device="dm-0"} 8.042035e+06

node_xfs_extent_allocation_blocks_freed_total{device="sda1"} 0

# HELP node_xfs_extent_allocation_extents_allocated_total Number of extents allocated for a filesystem.

# TYPE node_xfs_extent_allocation_extents_allocated_total counter

node_xfs_extent_allocation_extents_allocated_total{device="dm-0"} 191698

node_xfs_extent_allocation_extents_allocated_total{device="sda1"} 0

# HELP node_xfs_extent_allocation_extents_freed_total Number of extents freed for a filesystem.

# TYPE node_xfs_extent_allocation_extents_freed_total counter

node_xfs_extent_allocation_extents_freed_total{device="dm-0"} 191572

node_xfs_extent_allocation_extents_freed_total{device="sda1"} 0

# HELP process_cpu_seconds_total Total user and system CPU time spent in seconds.

# TYPE process_cpu_seconds_total counter

process_cpu_seconds_total 0.75

# HELP process_max_fds Maximum number of open file descriptors.

# TYPE process_max_fds gauge

process_max_fds 65535

# HELP process_open_fds Number of open file descriptors.

# TYPE process_open_fds gauge

process_open_fds 9

# HELP process_resident_memory_bytes Resident memory size in bytes.

# TYPE process_resident_memory_bytes gauge

process_resident_memory_bytes 1.3766656e+07

# HELP process_start_time_seconds Start time of the process since unix epoch in seconds.

# TYPE process_start_time_seconds gauge

process_start_time_seconds 1.70674908932e+09

# HELP process_virtual_memory_bytes Virtual memory size in bytes.

# TYPE process_virtual_memory_bytes gauge

process_virtual_memory_bytes 3.8486016e+07

# HELP promhttp_metric_handler_requests_in_flight Current number of scrapes being served.

# TYPE promhttp_metric_handler_requests_in_flight gauge

promhttp_metric_handler_requests_in_flight 1

# HELP promhttp_metric_handler_requests_total Total number of scrapes by HTTP status code.

# TYPE promhttp_metric_handler_requests_total counter

promhttp_metric_handler_requests_total{code="200"} 0

promhttp_metric_handler_requests_total{code="500"} 0

promhttp_metric_handler_requests_total{code="503"} 0

12指标

开启只收集cpu的,

[root@mcw03 prometheus]# vim /etc/prometheus.yml

[root@mcw03 prometheus]# grep -A 14 agent /etc/prometheus.yml

- job_name: 'agent1'

static_configs:

- targets: ['10.0.0.14:9100','10.0.0.12:9100']

params:

collect[]:

- cpu

- meminfo

- diskstats

- netdev

- filefd

- filesystem

- xfs

- systemd

- job_name: 'promserver'

static_configs:

[root@mcw03 prometheus]# vim /etc/prometheus.yml

[root@mcw03 prometheus]# grep -A 7 agent /etc/prometheus.yml

- job_name: 'agent1'

static_configs:

- targets: ['10.0.0.14:9100','10.0.0.12:9100']

params:

collect[]:

- cpu

- job_name: 'promserver'

static_configs:

[root@mcw03 prometheus]# curl -X POST http://localhost:9090/-/reload

[root@mcw03 prometheus]#

指标变少了很多,不过好像不只有cpu的,不过大部分都是

# HELP go_gc_duration_seconds A summary of the GC invocation durations.

# TYPE go_gc_duration_seconds summary

go_gc_duration_seconds{quantile="0"} 3.723e-06

go_gc_duration_seconds{quantile="0.25"} 7.176e-06

go_gc_duration_seconds{quantile="0.5"} 1.0807e-05

go_gc_duration_seconds{quantile="0.75"} 1.61e-05

go_gc_duration_seconds{quantile="1"} 4.0677e-05

go_gc_duration_seconds_sum 0.001449453

go_gc_duration_seconds_count 120

# HELP go_goroutines Number of goroutines that currently exist.

# TYPE go_goroutines gauge

go_goroutines 8

# HELP go_info Information about the Go environment.