Zeppelin0.5.6使用spark解释器

Zeppelin为0.5.6

Zeppelin默认自带本地spark,可以不依赖任何集群,下载bin包,解压安装就可以使用。

使用其他的spark集群在yarn模式下。

配置:

vi zeppelin-env.sh

添加:

export SPARK_HOME=/usr/crh/current/spark-client

export SPARK_SUBMIT_OPTIONS="--driver-memory 512M --executor-memory 1G"

export HADOOP_CONF_DIR=/etc/hadoop/conf

Zeppelin Interpreter配置

注意:设置完重启解释器。

Properties的master属性如下:

新建Notebook

Tips:几个月前zeppelin还是0.5.6,现在最新0.6.2,zeppelin 0.5.6写notebook时前面必须加%spark,而0.6.2若什么也不加就默认是scala语言。

zeppelin 0.5.6不加就报如下错:

Connect to 'databank:4300' failed

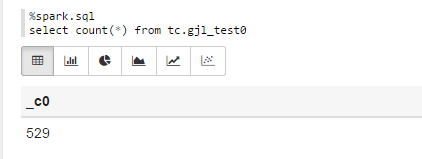

%spark.sql

select count(*) from tc.gjl_test0

报错:

com.fasterxml.jackson.databind.JsonMappingException: Could not find creator property with name 'id' (in class org.apache.spark.rdd.RDDOperationScope)

at [Source: {"id":"2","name":"ConvertToSafe"}; line: 1, column: 1]

at com.fasterxml.jackson.databind.JsonMappingException.from(JsonMappingException.java:148)

at com.fasterxml.jackson.databind.DeserializationContext.mappingException(DeserializationContext.java:843)

at com.fasterxml.jackson.databind.deser.BeanDeserializerFactory.addBeanProps(BeanDeserializerFactory.java:533)

at com.fasterxml.jackson.databind.deser.BeanDeserializerFactory.buildBeanDeserializer(BeanDeserializerFactory.java:220)

at com.fasterxml.jackson.databind.deser.BeanDeserializerFactory.createBeanDeserializer(BeanDeserializerFactory.java:143)

at com.fasterxml.jackson.databind.deser.DeserializerCache._createDeserializer2(DeserializerCache.java:409)

at com.fasterxml.jackson.databind.deser.DeserializerCache._createDeserializer(DeserializerCache.java:358)

at com.fasterxml.jackson.databind.deser.DeserializerCache._createAndCache2(DeserializerCache.java:265)

at com.fasterxml.jackson.databind.deser.DeserializerCache._createAndCacheValueDeserializer(DeserializerCache.java:245)

at com.fasterxml.jackson.databind.deser.DeserializerCache.findValueDeserializer(DeserializerCache.java:143)

at com.fasterxml.jackson.databind.DeserializationContext.findRootValueDeserializer(DeserializationContext.java:439)

at com.fasterxml.jackson.databind.ObjectMapper._findRootDeserializer(ObjectMapper.java:3666)

at com.fasterxml.jackson.databind.ObjectMapper._readMapAndClose(ObjectMapper.java:3558)

at com.fasterxml.jackson.databind.ObjectMapper.readValue(ObjectMapper.java:2578)

at org.apache.spark.rdd.RDDOperationScope$.fromJson(RDDOperationScope.scala:85)

at org.apache.spark.rdd.RDDOperationScope$$anonfun$5.apply(RDDOperationScope.scala:136)

at org.apache.spark.rdd.RDDOperationScope$$anonfun$5.apply(RDDOperationScope.scala:136)

at scala.Option.map(Option.scala:145)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:136)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:130)

at org.apache.spark.sql.execution.ConvertToSafe.doExecute(rowFormatConverters.scala:56)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$5.apply(SparkPlan.scala:132)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$5.apply(SparkPlan.scala:130)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:150)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:130)

at org.apache.spark.sql.execution.SparkPlan.executeTake(SparkPlan.scala:187)

at org.apache.spark.sql.execution.Limit.executeCollect(basicOperators.scala:165)

at org.apache.spark.sql.execution.SparkPlan.executeCollectPublic(SparkPlan.scala:174)

at org.apache.spark.sql.DataFrame$$anonfun$org$apache$spark$sql$DataFrame$$execute$1$1.apply(DataFrame.scala:1499)

at org.apache.spark.sql.DataFrame$$anonfun$org$apache$spark$sql$DataFrame$$execute$1$1.apply(DataFrame.scala:1499)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:56)

at org.apache.spark.sql.DataFrame.withNewExecutionId(DataFrame.scala:2086)

at org.apache.spark.sql.DataFrame.org$apache$spark$sql$DataFrame$$execute$1(DataFrame.scala:1498)

at org.apache.spark.sql.DataFrame.org$apache$spark$sql$DataFrame$$collect(DataFrame.scala:1505)

at org.apache.spark.sql.DataFrame$$anonfun$head$1.apply(DataFrame.scala:1375)

at org.apache.spark.sql.DataFrame$$anonfun$head$1.apply(DataFrame.scala:1374)

at org.apache.spark.sql.DataFrame.withCallback(DataFrame.scala:2099)

at org.apache.spark.sql.DataFrame.head(DataFrame.scala:1374)

at org.apache.spark.sql.DataFrame.take(DataFrame.scala:1456)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.zeppelin.spark.ZeppelinContext.showDF(ZeppelinContext.java:297)

at org.apache.zeppelin.spark.SparkSqlInterpreter.interpret(SparkSqlInterpreter.java:144)

at org.apache.zeppelin.interpreter.ClassloaderInterpreter.interpret(ClassloaderInterpreter.java:57)

at org.apache.zeppelin.interpreter.LazyOpenInterpreter.interpret(LazyOpenInterpreter.java:93)

at org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer$InterpretJob.jobRun(RemoteInterpreterServer.java:300)

at org.apache.zeppelin.scheduler.Job.run(Job.java:169)

at org.apache.zeppelin.scheduler.FIFOScheduler$1.run(FIFOScheduler.java:134)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471)

at java.util.concurrent.FutureTask.run(FutureTask.java:262)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:178)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:292)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

at java.lang.Thread.run(Thread.java:745)

原因:

进入/opt/zeppelin-0.5.6-incubating-bin-all目录下:

# ls lib |grep jackson

jackson-annotations-2.5.0.jar

jackson-core-2.5.3.jar

jackson-databind-2.5.3.jar

将里面的版本换成如下版本:

# ls lib |grep jackson

jackson-annotations-2.4.4.jar

jackson-core-2.4.4.jar

jackson-databind-2.4.4.jar

测试成功!

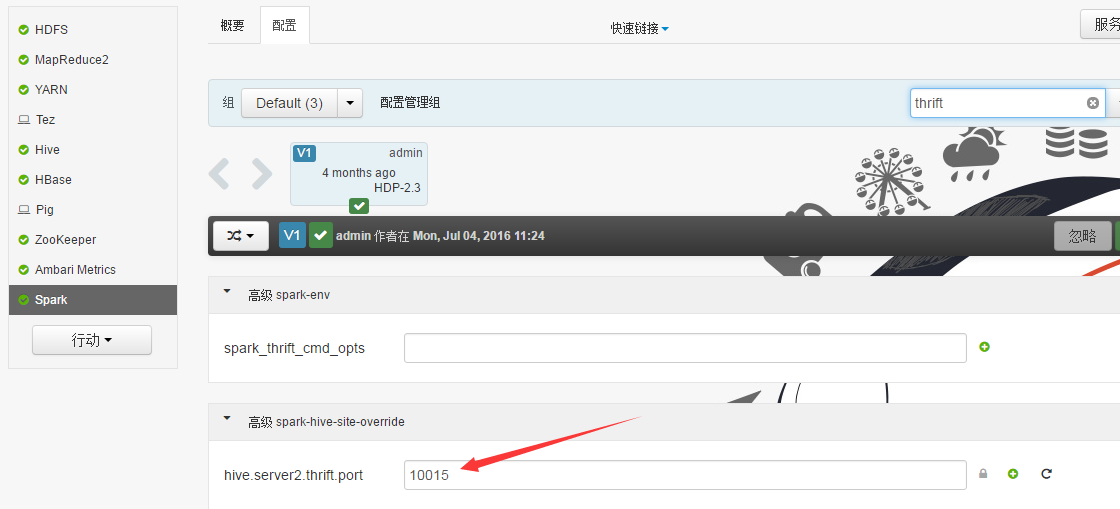

Sparksql也可直接通过hive jdbc连接,只需换端口,如下图:

Zeppelin0.5.6使用spark解释器的更多相关文章

- Zeppelin使用spark解释器

Zeppelin为0.5.6 Zeppelin默认自带本地spark,可以不依赖任何集群,下载bin包,解压安装就可以使用. 使用其他的spark集群在yarn模式下. 配置: vi zeppelin ...

- Zeppelin0.6.2使用hive解释器

Zeppelin0.6.2的jdbc Interpreter 配置 1.拷贝hive的配置文件hive-site.xml到zeppelin-0.6.2-bin-all/conf下. 2.进入conf下 ...

- Zeppelin0.5.6使用hive解释器

此zeppelin为官方0.5.6版,可能还在孵化阶段,可能出现一些bug吧. 配置 cp zeppelin-env.sh.template zeppelin-env.sh vi zeppelin-e ...

- Zeppelin0.7.2结合hive解释器进行报表展示

前提:服务器已经安装好了hadoop_client端即hadoop的环境hbase,hive等相关组件 1.环境和变量配置①拷贝hive的配置文件hive-site.xml到zeppelin-0.7. ...

- Zeppelin使用Spark的yarn-client模式

Zeppelin版本0.6.2 1. Export SPARK_HOME In conf/zeppelin-env.sh, export SPARK_HOME environment variable ...

- Apache Spark 2.2.0 中文文档 - Spark RDD(Resilient Distributed Datasets)论文 | ApacheCN

Spark RDD(Resilient Distributed Datasets)论文 概要 1: 介绍 2: Resilient Distributed Datasets(RDDs) 2.1 RDD ...

- Apache Spark RDD(Resilient Distributed Datasets)论文

Spark RDD(Resilient Distributed Datasets)论文 概要 1: 介绍 2: Resilient Distributed Datasets(RDDs) 2.1 RDD ...

- hadoop-2.7.3.tar.gz + spark-2.0.2-bin-hadoop2.7.tgz + zeppelin-0.6.2-incubating-bin-all.tgz(master、slave1和slave2)(博主推荐)(图文详解)

不多说,直接上干货! 我这里,采取的是ubuntu 16.04系统,当然大家也可以在CentOS6.5里,这些都是小事 CentOS 6.5的安装详解 hadoop-2.6.0.tar.gz + sp ...

- Zeppelin 0.6.2使用Spark的yarn-client模式

Zeppelin版本0.6.2 1. Export SPARK_HOME In conf/zeppelin-env.sh, export SPARK_HOME environment variable ...

随机推荐

- mysql 配置 explicit_defaults_for_timestamp

在之前的配置中,除了目录之外,唯独添加了这一项配置,为什么? 因为mysql中timestamp类型和其他的类型不一样: 在之前先了解一下current timestamp和on update cur ...

- 压缩大文件时如何限制CPU使用率?----几种CPU资源限制方法的测试说明

一.说明 我们的MySQL实例在备份后需要将数据打包压缩,部分低配机器在压缩时容易出现CPU打满导致报警的情况,需要在压缩文件时进行CPU资源的限制. 因此针对此问题进行了相关测试,就有了此文章. 二 ...

- Windows 10 版本信息

原文 https://technet.microsoft.com/zh-cn/windows/release-info Windows 10 版本信息 Microsoft 已更新其服务模型. 半年频道 ...

- 在 Windows 10 专业版、企业版或教育版上设置展台

原文: 在 Windows 10 专业版.企业版或教育版上设置展台 Set up a kiosk on Windows 10 Pro, Enterprise, or Education 适用于 Win ...

- uwp开发:数据绑定——值转换器 的简单使用

原文:uwp开发:数据绑定--值转换器 的简单使用 今天,我在做最近正在开发的“简影”uwp应用时遇到一个问题,其中有个栏目,叫做“画报”,是分组显示一组一组的 图片,每组图片在界面上只显示9个,点击 ...

- 基于mipsel编译Qt4.6.2版本(有具体参数和编译时遇到的问题)

1.使用的configure配置为:./configure -embedded mips -little-endian -xplatform qws/linux-mips-g++ -prefix /o ...

- c#编写的基于Socket的异步通信系统封装DLL--SanNiuSignal.DLL

SanNiuSignal是一个基于异步socket的完全免费DLL:它里面封装了Client,Server以及UDP:有了这个DLL:用户不用去关心心跳:粘包 :组包:发送文件等繁琐的事情:大家只要简 ...

- awk数组统计

处理以下文件内容,将域名取出并根据域名进行计数排序处理:(百度和sohu面试题) http://www.etiantian.org/index.html http://www.etiantian.or ...

- sqlserver/mysql按天,按小时,按分钟统计连续时间段数据

文 | 子龙 有技术,有干货,有故事的斜杠青年 一,写在前面的话 最近公司需要按天,按小时查看数据,可以直观的看到时间段的数据峰值.接到需求,就开始疯狂百度搜索,但是搜索到的资料有很多都不清楚,需要自 ...

- 关于Git GUI的使用方式

1.选择Clone Existing Repository 2.选择clone地址和存放位置,然后clone 3失败 4如果失败,让对方去这里(github的界面)邀请下,如果是自己就不用 5然后等待 ...