Binary deployment of k8s 1.18.3

1. Prerequisite information

1.1 Version information

kube_version: v1.18.3

etcd_version: v3.4.9

flannel: v0.12.0

coredns: v1.6.7

cni-plugins: v0.8.6

pod CIDR: 10.244.0.0/16

service CIDR: 10.96.0.0/12

kubernetes internal address: 10.96.0.1

coredns address: 10.96.0.10

apiserver domain: lb.5179.top
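The kubernetes and coredns addresses above are simply offsets 1 and 10 from the start of the service CIDR. A minimal sketch of that arithmetic follows; the function name `nth_ip` is hypothetical and it takes the network address without the prefix length.

```shell
#!/bin/sh
# Sketch: print the address that lies N steps after an IPv4 network address.
# nth_ip is a hypothetical helper, not part of any k8s tooling.
nth_ip() {
    net=$1
    n=$2
    # Peel the four octets off the dotted quad.
    a=${net%%.*}; rest=${net#*.}
    b=${rest%%.*}; rest=${rest#*.}
    c=${rest%%.*}; d=${rest#*.}
    # Convert to a 32-bit integer, add the offset, convert back.
    num=$(( (a << 24) + (b << 16) + (c << 8) + d + n ))
    echo "$(( (num >> 24) & 255 )).$(( (num >> 16) & 255 )).$(( (num >> 8) & 255 )).$(( num & 255 ))"
}

nth_ip 10.96.0.0 1     # address of the kubernetes service
nth_ip 10.96.0.0 10    # address chosen for coredns
```

If you pick a different service CIDR, the same two offsets give the values to pass to the certificate script and to kubelet.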

1.2 Machine layout

| Hostname | IP | Roles and components | k8s components |
|---|---|---|---|
| centos7-nginx | 10.10.10.127 | nginx layer-4 proxy | nginx |
| centos7-a | 10.10.10.128 | master,node,etcd,flannel | kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy |
| centos7-b | 10.10.10.129 | master,node,etcd,flannel | kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy |
| centos7-c | 10.10.10.130 | master,node,etcd,flannel | kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy |
| centos7-d | 10.10.10.131 | node,flannel | kubelet kube-proxy |
| centos7-e | 10.10.10.132 | node,flannel | kubelet kube-proxy |

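2. Pre-deployment environment preparation

Use centos7-nginx as the control host and set up passwordless SSH from it to the other machines.

2.1 Install ansible for batch operations

Installation steps omitted.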

[root@centos7-nginx ~]# cat /etc/ansible/hosts

[masters]

10.10.10.128

10.10.10.129

10.10.10.130

[nodes]

10.10.10.131

10.10.10.132

[k8s]

10.10.10.[128:132]
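The `[128:132]` pattern in the `k8s` group is an inclusive range, so it covers all five cluster machines. A small sketch of the expansion, using a hypothetical helper `expand_range`:

```shell
#!/bin/sh
# Sketch: expand an inclusive numeric range the way the inventory pattern does.
# expand_range is a hypothetical helper for illustration only.
expand_range() {
    prefix=$1
    i=$2
    end=$3
    while [ "$i" -le "$end" ]; do
        echo "${prefix}${i}"
        i=$((i + 1))
    done
}

expand_range 10.10.10. 128 132
```

On the control host, `ansible k8s --list-hosts` should report the same five addresses.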

Push the control host's hosts file to every node

cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.10.10.127 centos7-nginx lb.5179.top

10.10.10.128 centos7-a

10.10.10.129 centos7-b

10.10.10.130 centos7-c

10.10.10.131 centos7-d

10.10.10.132 centos7-e

ansible k8s -m shell -a "mv /etc/hosts /etc/hosts.bak"

ansible k8s -m copy -a "src=/etc/hosts dest=/etc/hosts"

2.2 Disable the firewall and SELinux

# Disable the firewall

ansible k8s -m shell -a "systemctl stop firewalld && systemctl disable firewalld"

# Disable SELinux

ansible k8s -m shell -a "setenforce 0 && sed -i 's/^SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux && sed -i 's/^SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config"

2.3 Disable swap

ansible k8s -m shell -a "swapoff -a && sed -i 's/.*swap.*/#&/' /etc/fstab"
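The `sed` expression above prepends `#` to every line that mentions swap, so running it a second time adds another `#`. The sketch below shows an idempotent variant on sample text; the helper name `comment_swap_once` and the sample fstab lines are assumptions for illustration.

```shell
#!/bin/sh
# Sketch: comment out swap entries only if they are not commented already.
comment_swap_once() {
    # Match lines that contain "swap" and do not start with '#'.
    sed '/^[^#].*swap/s/^/#/'
}

# Sample fstab content (illustrative, not taken from a real host).
sample='/dev/mapper/centos-root / xfs defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0'

once=$(printf '%s\n' "$sample" | comment_swap_once)
twice=$(printf '%s\n' "$once" | comment_swap_once)

printf '%s\n' "$once"
[ "$once" = "$twice" ] && echo "second run changed nothing"
```

To apply it for real, the same expression can be passed to `sed -i` against `/etc/fstab`.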

2.4 Install docker and a registry mirror

vim ./install_docker.sh

#!/bin/bash

#

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum -y install docker-ce-19.03.11

systemctl enable docker

systemctl start docker

docker version

# Configure the registry mirror

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://ajpb7tdn.mirror.aliyuncs.com"],

"log-opts": {"max-size":"100m", "max-file":"5"}

}

EOF

systemctl daemon-reload

systemctl restart docker

Then run the script on all machines with ansible

ansible k8s -m script -a "./install_docker.sh"

2.5 Tune kernel parameters

vim 99-k8s.conf

#sysctls for k8s node config

net.ipv4.ip_forward=1

net.ipv4.tcp_slow_start_after_idle=0

net.core.rmem_max=16777216

fs.inotify.max_user_watches=524288

kernel.softlockup_all_cpu_backtrace=1

kernel.softlockup_panic=1

fs.file-max=2097152

fs.inotify.max_user_instances=8192

fs.inotify.max_queued_events=16384

vm.max_map_count=262144

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.may_detach_mounts=1

net.core.netdev_max_backlog=16384

net.ipv4.tcp_wmem=4096 12582912 16777216

net.core.wmem_max=16777216

net.core.somaxconn=32768

net.ipv4.tcp_max_syn_backlog=8096

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.tcp_rmem=4096 12582912 16777216

Copy it to the remote hosts

ansible k8s -m copy -a "src=./99-k8s.conf dest=/etc/sysctl.d/"

ansible k8s -m shell -a "cd /etc/sysctl.d/ && sysctl --system"
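Sysctl files that grow by copy and paste can end up assigning the same key twice, and the later value silently wins. The sketch below lists repeated keys before the file is pushed; `find_duplicate_keys` is a hypothetical helper and the sample file is illustrative.

```shell
#!/bin/sh
# Sketch: report sysctl keys that are assigned more than once in a file.
find_duplicate_keys() {
    # Skip comment lines, strip whitespace from the key, print it on its second occurrence.
    awk -F= '!/^[ \t]*#/ && NF >= 2 {
        gsub(/[ \t]/, "", $1)
        if (seen[$1]++ == 1) print $1
    }' "$1"
}

# Illustrative sample, not the full 99-k8s.conf.
conf=$(mktemp)
cat > "$conf" <<'EOF'
#sysctls for k8s node config
net.ipv4.ip_forward=1
vm.swappiness=0
net.ipv4.ip_forward=1
EOF

find_duplicate_keys "$conf"
```

Running `find_duplicate_keys ./99-k8s.conf` on the control host gives the same check for the real file.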

2.6 Create the required directories

For masters

vim mkdir_k8s_master.sh

#!/bin/bash

mkdir /opt/etcd/{bin,data,cfg,ssl} -p

mkdir /opt/kubernetes/{bin,cfg,ssl,logs} -p

mkdir /opt/kubernetes/logs/{kubelet,kube-proxy,kube-scheduler,kube-apiserver,kube-controller-manager} -p

echo 'export PATH=$PATH:/opt/kubernetes/bin' >> /etc/profile

echo 'export PATH=$PATH:/opt/etcd/bin' >> /etc/profile

source /etc/profile

For nodes

vim mkdir_k8s_node.sh

#!/bin/bash

mkdir /opt/kubernetes/{bin,cfg,ssl,logs} -p

mkdir /opt/kubernetes/logs/{kubelet,kube-proxy} -p

echo 'export PATH=$PATH:/opt/kubernetes/bin' >> /etc/profile

source /etc/profile

Run both scripts with ansible

ansible masters -m script -a "./mkdir_k8s_master.sh"

ansible nodes -m script -a "./mkdir_k8s_node.sh"
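Both scripts append to `/etc/profile` unconditionally, so each rerun adds another copy of the `export PATH` line. A minimal sketch of an append that is safe to repeat, demonstrated on a temporary file; `append_once` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: append a line to a file only if that exact line is not present yet.
append_once() {
    line=$1
    file=$2
    # -q quiet, -x whole-line match, -F fixed string (no regex interpretation).
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

profile=$(mktemp)   # stands in for /etc/profile in this demo
append_once 'export PATH=$PATH:/opt/kubernetes/bin' "$profile"
append_once 'export PATH=$PATH:/opt/kubernetes/bin' "$profile"

cat "$profile"
```

The file ends up with a single `export` line no matter how many times the call is repeated.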

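2.7 Prepare the LB

To make the three masters highly available, you can use a cloud vendor's SLB, or two nginx instances with keepalived.

This is a lab environment, so a single nginx acting as a layer-4 proxy is used here.

# Install nginx

[root@centos7-nginx ~]# yum install -y nginx

# Create the sub-configuration file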

[root@centos7-nginx ~]# cd /etc/nginx/conf.d/

[root@centos7-nginx conf.d]# vim lb.tcp

stream {

upstream master {

hash $remote_addr consistent;

server 10.10.10.128:6443 max_fails=3 fail_timeout=30;

server 10.10.10.129:6443 max_fails=3 fail_timeout=30;

server 10.10.10.130:6443 max_fails=3 fail_timeout=30;

}

server {

listen 6443;

proxy_pass master;

}

}

# Include this file in the main configuration file (at the top level, outside the http block)

[root@centos7-nginx ~]# cd /etc/nginx/

[root@centos7-nginx nginx]# vim nginx.conf

...

include /etc/nginx/conf.d/*.tcp;

...

# Enable nginx at boot and start it

[root@centos7-nginx nginx]# systemctl enable nginx

Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.

[root@centos7-nginx nginx]# systemctl start nginx
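If the set of masters changes, the `stream` block can be regenerated instead of edited by hand. The following sketch prints the same configuration as `lb.tcp` for a given port and list of master IPs; the function name `render_stream_conf` is hypothetical.

```shell
#!/bin/sh
# Sketch: render the layer-4 proxy configuration for a list of apiserver addresses.
render_stream_conf() {
    port=$1
    shift
    echo "stream {"
    echo "    upstream master {"
    echo "        hash \$remote_addr consistent;"
    for ip in "$@"; do
        echo "        server ${ip}:${port} max_fails=3 fail_timeout=30;"
    done
    echo "    }"
    echo "    server {"
    echo "        listen ${port};"
    echo "        proxy_pass master;"
    echo "    }"
    echo "}"
}

render_stream_conf 6443 10.10.10.128 10.10.10.129 10.10.10.130
```

Redirect the output to `/etc/nginx/conf.d/lb.tcp` and check it with `nginx -t` before reloading.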

3. Deployment

3.1 Generate certificates

Run the script

[root@centos7-nginx ~]# mkdir ssl && cd ssl

[root@centos7-nginx ssl]# vim ./k8s-certificate.sh

[root@centos7-nginx ssl]# ./k8s-certificate.sh 10.10.10.127,10.10.10.128,10.10.10.129,10.10.10.130,lb.5179.top,10.96.0.1

IP notes:

10.10.10.127|lb.5179.top: nginx

10.10.10.128|129|130: masters

10.96.0.1: kubernetes (the first IP of the service CIDR)
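The script turns its single comma-separated argument into a run of quoted, comma-terminated strings that is pasted into the `hosts` array of `server-csr.json`. A minimal sketch of that transformation, with the hypothetical helper name `build_hosts_fragment`:

```shell
#!/bin/sh
# Sketch: convert "a,b,c" into "a","b","c", for embedding in a JSON array.
build_hosts_fragment() {
    fragment=""
    old_ifs=$IFS
    IFS=,                      # split the argument on commas
    for host in $1; do
        fragment="${fragment}\"${host}\","
    done
    IFS=$old_ifs               # restore normal word splitting
    printf '%s\n' "$fragment"
}

build_hosts_fragment 10.10.10.127,lb.5179.top,10.96.0.1
```

The trailing comma is intentional: in the script the fragment is followed by `"127.0.0.1"` and the kubernetes service names, so the resulting array stays valid JSON.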

The script is as follows

#!/bin/bash

# Binary deployment: generate the k8s certificate files

if [ $# -ne 1 ];then

echo "Usage: $(basename $0) MASTERS[10.10.10.127,10.10.10.128,10.10.10.129,10.10.10.130,lb.5179.top,10.96.0.1]"

exit 1

fi

MASTERS=$1

KUBERNETES_HOSTNAMES=kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local

for i in `echo $MASTERS | tr ',' ' '`;do

if [ -z $IPS ];then

IPS=\"$i\",

else

IPS=$IPS\"$i\",

fi

done

command_exists() {

command -v "$@" > /dev/null 2>&1

}

if command_exists cfssl; then

echo "cfssl is already installed"

else

# Download the cfssl tools

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

# Make them executable

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

# Move them into /usr/local/bin

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

fi

# Sign for 10 years by default

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

${IPS}

"127.0.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

# Alternatively

#cat > server-csr.json <<EOF

#{

# "CN": "kubernetes",

# "key": {

# "algo": "rsa",

# "size": 2048

# },

# "names": [

# {

# "C": "CN",

# "L": "BeiJing",

# "ST": "BeiJing",

# "O": "k8s",

# "OU": "System"

# }

# ]

#}

#EOF

#

#cfssl gencert \

# -ca=ca.pem \

# -ca-key=ca-key.pem \

# -config=ca-config.json \

# -hostname=${MASTERS},127.0.0.1,${KUBERNETES_HOSTNAMES} \

# -profile=kubernetes \

# server-csr.json | cfssljson -bare server

#-----------------------

cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare admin

#-----------------------

cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

kube-proxy-csr.json | cfssljson -bare kube-proxy

# Note: the CN must be exactly "system:metrics-server", because the later authorization step refers to this name; otherwise requests are rejected as anonymous access

cat > metrics-server-csr.json <<EOF

{

"CN": "system:metrics-server",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "system"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

metrics-server-csr.json | cfssljson -bare metrics-server

for item in $(ls *.pem |grep -v key) ;do echo ======================$item===================;openssl x509 -in $item -text -noout| grep Not;done

#[root@aliyun k8s]# for item in $(ls *.pem |grep -v key) ;do echo ======================$item===================;openssl x509 -in $item -text -noout| grep Not;done

#======================admin.pem====================

# Not Before: Jun 18 14:32:00 2020 GMT

# Not After : Jun 16 14:32:00 2030 GMT

#======================ca.pem=======================

# Not Before: Jun 18 14:32:00 2020 GMT

# Not After : Jun 17 14:32:00 2025 GMT

#======================kube-proxy.pem===============

# Not Before: Jun 18 14:32:00 2020 GMT

# Not After : Jun 16 14:32:00 2030 GMT

#======================metrics-server.pem===========

# Not Before: Jun 18 14:32:00 2020 GMT

# Not After : Jun 16 14:32:00 2030 GMT

#======================server.pem===================

# Not Before: Jun 18 14:32:00 2020 GMT

# Not After : Jun 16 14:32:00 2030 GMT

Note: a CA certificate generated by cfssl is valid for 5 years by default

// CAPolicy contains the CA issuing policy as default policy.

var CAPolicy = func() *config.Signing {

return &config.Signing{

Default: &config.SigningProfile{

Usage: []string{"cert sign", "crl sign"},

ExpiryString: "43800h",

Expiry: 5 * helpers.OneYear,

CAConstraint: config.CAConstraint{IsCA: true},

},

}

}

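You can change the CA expiry by modifying the source and recompiling, or by adding the following to ca-csr.json (438000h is 50 years; JSON does not allow inline comments, so keep this note out of the file):

"ca": {

"expiry": "438000h"

}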
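The expiry values in this section are hour counts. A short sketch of the conversion, treating a year as 365 days; `hours_to_years` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: convert a cfssl expiry given in hours into whole 365-day years.
hours_to_years() {
    echo $(( $1 / 8760 ))    # 8760 = 24 * 365
}

for h in 43800 87600 438000; do
    echo "${h}h = $(hours_to_years "$h") years"
done
```

Because leap days are not counted, a certificate signed for 87600h expires a couple of days short of ten calendar years, which matches the `Not After` dates in the sample output above (Jun 18 2020 to Jun 16 2030).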

3.2 Copy the certificates

3.2.1 Copy the certificates used by the etcd cluster

[root@centos7-nginx ~]# cd ssl

[root@centos7-nginx ssl]#

[root@centos7-nginx ssl]# ansible masters -m copy -a "src=./ca.pem dest=/opt/etcd/ssl"

[root@centos7-nginx ssl]# ansible masters -m copy -a "src=./server.pem dest=/opt/etcd/ssl"

[root@centos7-nginx ssl]# ansible masters -m copy -a "src=./server-key.pem dest=/opt/etcd/ssl"

3.2.2 Copy the certificates used by the k8s cluster

[root@centos7-nginx ~]# cd ssl

[root@centos7-nginx ssl]#

[root@centos7-nginx ssl]# scp *.pem root@10.10.10.128:/opt/kubernetes/ssl/

[root@centos7-nginx ssl]# scp *.pem root@10.10.10.129:/opt/kubernetes/ssl/

[root@centos7-nginx ssl]# scp *.pem root@10.10.10.130:/opt/kubernetes/ssl/

[root@centos7-nginx ssl]# scp *.pem root@10.10.10.131:/opt/kubernetes/ssl/

[root@centos7-nginx ssl]# scp *.pem root@10.10.10.132:/opt/kubernetes/ssl/

3.3 Install the ETCD cluster

Download the etcd binary package and push the executables to /opt/etcd/bin/ on each master node

[root@centos7-nginx ~]# mkdir ./etcd && cd ./etcd

[root@centos7-nginx etcd]# wget https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz

[root@centos7-nginx etcd]# tar zxvf etcd-v3.4.9-linux-amd64.tar.gz

[root@centos7-nginx etcd]# cd etcd-v3.4.9-linux-amd64

[root@centos7-nginx etcd-v3.4.9-linux-amd64]# ll

total 40540
drwxr-xr-x. 14 630384594 600260513     4096 May 22 03:54 Documentation
-rwxr-xr-x.  1 630384594 600260513 23827424 May 22 03:54 etcd
-rwxr-xr-x.  1 630384594 600260513 17612384 May 22 03:54 etcdctl
-rw-r--r--.  1 630384594 600260513    43094 May 22 03:54 README-etcdctl.md
-rw-r--r--.  1 630384594 600260513     8431 May 22 03:54 README.md
-rw-r--r--.  1 630384594 600260513     7855 May 22 03:54 READMEv2-etcdctl.md

[root@centos7-nginx etcd-v3.4.9-linux-amd64]# ansible masters -m copy -a "src=./etcd dest=/opt/etcd/bin mode=755"

[root@centos7-nginx etcd-v3.4.9-linux-amd64]# ansible masters -m copy -a "src=./etcdctl dest=/opt/etcd/bin mode=755"

Write the etcd configuration script (saved as etcd.sh)

#!/bin/bash

# Usage

#./etcd.sh etcd01 10.10.10.128 etcd01=https://10.10.10.128:2380,etcd02=https://10.10.10.129:2380,etcd03=https://10.10.10.130:2380

#./etcd.sh etcd02 10.10.10.129 etcd01=https://10.10.10.128:2380,etcd02=https://10.10.10.129:2380,etcd03=https://10.10.10.130:2380

#./etcd.sh etcd03 10.10.10.130 etcd01=https://10.10.10.128:2380,etcd02=https://10.10.10.129:2380,etcd03=https://10.10.10.130:2380

ETCD_NAME=${1:-"etcd01"}

ETCD_IP=${2:-"127.0.0.1"}

ETCD_CLUSTER=${3:-"etcd01=https://127.0.0.1:2380"}

# Supported ETCD series: [3.3, 3.4]

# Use 3.3.14 or later: https://kubernetes.io/zh/docs/tasks/administer-cluster/configure-upgrade-etcd/#%E5%B7%B2%E7%9F%A5%E9%97%AE%E9%A2%98-%E5%85%B7%E6%9C%89%E5%AE%89%E5%85%A8%E7%AB%AF%E7%82%B9%E7%9A%84-etcd-%E5%AE%A2%E6%88%B7%E7%AB%AF%E5%9D%87%E8%A1%A1%E5%99%A8

ETCD_VERSION=3.4.9

if [ ${ETCD_VERSION%.*} == "3.4" ] ;then

cat <<EOF >/opt/etcd/cfg/etcd.yml

#etcd ${ETCD_VERSION}

name: ${ETCD_NAME}

data-dir: /opt/etcd/data

listen-peer-urls: https://${ETCD_IP}:2380

listen-client-urls: https://${ETCD_IP}:2379,https://127.0.0.1:2379

advertise-client-urls: https://${ETCD_IP}:2379

initial-advertise-peer-urls: https://${ETCD_IP}:2380

initial-cluster: ${ETCD_CLUSTER}

initial-cluster-token: etcd-cluster

initial-cluster-state: new

enable-v2: true

client-transport-security:
  cert-file: /opt/etcd/ssl/server.pem
  key-file: /opt/etcd/ssl/server-key.pem
  client-cert-auth: false
  trusted-ca-file: /opt/etcd/ssl/ca.pem
  auto-tls: false
peer-transport-security:
  cert-file: /opt/etcd/ssl/server.pem
  key-file: /opt/etcd/ssl/server-key.pem
  client-cert-auth: false
  trusted-ca-file: /opt/etcd/ssl/ca.pem
  auto-tls: false

debug: false

logger: zap

log-outputs: [stderr]

EOF

else

cat <<EOF >/opt/etcd/cfg/etcd.yml

#etcd ${ETCD_VERSION}

name: ${ETCD_NAME}

data-dir: /opt/etcd/data

listen-peer-urls: https://${ETCD_IP}:2380

listen-client-urls: https://${ETCD_IP}:2379,https://127.0.0.1:2379

advertise-client-urls: https://${ETCD_IP}:2379

initial-advertise-peer-urls: https://${ETCD_IP}:2380

initial-cluster: ${ETCD_CLUSTER}

initial-cluster-token: etcd-cluster

initial-cluster-state: new

client-transport-security:
  cert-file: /opt/etcd/ssl/server.pem
  key-file: /opt/etcd/ssl/server-key.pem
  client-cert-auth: false
  trusted-ca-file: /opt/etcd/ssl/ca.pem
  auto-tls: false
peer-transport-security:
  cert-file: /opt/etcd/ssl/server.pem
  key-file: /opt/etcd/ssl/server-key.pem
  client-cert-auth: false
  trusted-ca-file: /opt/etcd/ssl/ca.pem
  auto-tls: false

debug: false

log-package-levels: etcdmain=CRITICAL,etcdserver=DEBUG

log-outputs: default

EOF

fi

cat <<EOF >/usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

Documentation=https://github.com/etcd-io/etcd

Conflicts=etcd.service

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

LimitNOFILE=65536

Restart=on-failure

RestartSec=5s

TimeoutStartSec=0

ExecStart=/opt/etcd/bin/etcd --config-file=/opt/etcd/cfg/etcd.yml

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable etcd

systemctl restart etcd
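The branch on the etcd series in the script relies on the `${ETCD_VERSION%.*}` parameter expansion, which removes the shortest trailing `.something` from the value. A tiny sketch, with the hypothetical helper name `minor_series`:

```shell
#!/bin/sh
# Sketch: strip the patch number from a version string such as 3.4.9.
minor_series() {
    # "%.*" removes the shortest suffix matching ".<anything>".
    echo "${1%.*}"
}

minor_series 3.4.9
minor_series 3.3.14
```

Any `3.4.x` value therefore selects the first configuration template, and everything else falls through to the second one.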

Push the script to the master machines

ansible masters -m copy -a "src=./etcd.sh dest=/opt/etcd/bin mode=755"

Log in to each of the three machines and run the script

[root@centos7-a bin]# ./etcd.sh etcd01 10.10.10.128 etcd01=https://10.10.10.128:2380,etcd02=https://10.10.10.129:2380,etcd03=https://10.10.10.130:2380

[root@centos7-b bin]# ./etcd.sh etcd02 10.10.10.129 etcd01=https://10.10.10.128:2380,etcd02=https://10.10.10.129:2380,etcd03=https://10.10.10.130:2380

[root@centos7-c bin]# ./etcd.sh etcd03 10.10.10.130 etcd01=https://10.10.10.128:2380,etcd02=https://10.10.10.129:2380,etcd03=https://10.10.10.130:2380
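The third argument is identical on every machine and is easy to mistype. The sketch below assembles it from the list of master IPs, assuming the `etcd01`, `etcd02`, ... naming and the peer port 2380 used above; `build_initial_cluster` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: build the initial-cluster string from an ordered list of member IPs.
build_initial_cluster() {
    cluster=""
    index=1
    for ip in "$@"; do
        member=$(printf 'etcd%02d=https://%s:2380' "$index" "$ip")
        # Prepend a comma only when the string already has a member.
        cluster="${cluster:+${cluster},}${member}"
        index=$((index + 1))
    done
    printf '%s\n' "$cluster"
}

build_initial_cluster 10.10.10.128 10.10.10.129 10.10.10.130
```

The output can be stored in a variable and passed as the third argument to `etcd.sh` on each master.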

Verify that the cluster is healthy

### 3.4.9

[root@centos7-a ~]# ETCDCTL_API=3 etcdctl --write-out="table" --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints=https://10.10.10.128:2379,https://10.10.10.129:2379,https://10.10.10.130:2379 endpoint health

+---------------------------+--------+-------------+-------+

| ENDPOINT | HEALTH | TOOK | ERROR |

+---------------------------+--------+-------------+-------+

| https://10.10.10.128:2379 | true | 31.126223ms | |

| https://10.10.10.129:2379 | true | 28.698669ms | |

| https://10.10.10.130:2379 | true | 32.508681ms | |

+---------------------------+--------+-------------+-------+

List the cluster members

[root@centos7-a ~]# ETCDCTL_API=3 etcdctl --write-out="table" --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints=https://10.10.10.128:2379,https://10.10.10.129:2379,https://10.10.10.130:2379 member list

+------------------+---------+--------+---------------------------+---------------------------+------------+

| ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS | IS LEARNER |

+------------------+---------+--------+---------------------------+---------------------------+------------+

| 2cec243d35ad0881 | started | etcd02 | https://10.10.10.129:2380 | https://10.10.10.129:2379 | false |

| c6e694d272df93e8 | started | etcd03 | https://10.10.10.130:2380 | https://10.10.10.130:2379 | false |

| e9b57a5a8276394a | started | etcd01 | https://10.10.10.128:2380 | https://10.10.10.128:2379 | false |

+------------------+---------+--------+---------------------------+---------------------------+------------+

Create aliases for etcdctl; run this on each of the three machines

vim .bashrc

alias etcdctl2="ETCDCTL_API=2 etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints=https://10.10.10.128:2379,https://10.10.10.129:2379,https://10.10.10.130:2379"

alias etcdctl3="ETCDCTL_API=3 etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints=https://10.10.10.128:2379,https://10.10.10.129:2379,https://10.10.10.130:2379"

source .bashrc

3.3 Install the k8s components

3.3.1 Download the binary package

[root@centos7-nginx ~]# mkdir k8s-1.18.3 && cd k8s-1.18.3/

[root@centos7-nginx k8s-1.18.3]# wget https://dl.k8s.io/v1.18.3/kubernetes-server-linux-amd64.tar.gz

[root@centos7-nginx k8s-1.18.3]# tar xf kubernetes-server-linux-amd64.tar.gz

[root@centos7-nginx k8s-1.18.3]# cd kubernetes

[root@centos7-nginx kubernetes]# ll

total 33092
drwxr-xr-x. 2 root root        6 May 20 21:32 addons
-rw-r--r--. 1 root root 32587733 May 20 21:32 kubernetes-src.tar.gz
-rw-r--r--. 1 root root  1297746 May 20 21:32 LICENSES
drwxr-xr-x. 3 root root       17 May 20 21:27 server

[root@centos7-nginx kubernetes]# cd server/bin/

[root@centos7-nginx bin]# ll

total 1087376
-rwxr-xr-x. 1 root root  48128000 May 20 21:32 apiextensions-apiserver
-rwxr-xr-x. 1 root root  39813120 May 20 21:32 kubeadm
-rwxr-xr-x. 1 root root 120668160 May 20 21:32 kube-apiserver
-rw-r--r--. 1 root root         8 May 20 21:27 kube-apiserver.docker_tag
-rw-------. 1 root root 174558720 May 20 21:27 kube-apiserver.tar
-rwxr-xr-x. 1 root root 110059520 May 20 21:32 kube-controller-manager
-rw-r--r--. 1 root root         8 May 20 21:27 kube-controller-manager.docker_tag
-rw-------. 1 root root 163950080 May 20 21:27 kube-controller-manager.tar
-rwxr-xr-x. 1 root root  44032000 May 20 21:32 kubectl
-rwxr-xr-x. 1 root root 113283800 May 20 21:32 kubelet
-rwxr-xr-x. 1 root root  38379520 May 20 21:32 kube-proxy
-rw-r--r--. 1 root root         8 May 20 21:28 kube-proxy.docker_tag
-rw-------. 1 root root 119099392 May 20 21:28 kube-proxy.tar
-rwxr-xr-x. 1 root root  42950656 May 20 21:32 kube-scheduler
-rw-r--r--. 1 root root         8 May 20 21:27 kube-scheduler.docker_tag
-rw-------. 1 root root  96841216 May 20 21:27 kube-scheduler.tar
-rwxr-xr-x. 1 root root   1687552 May 20 21:32 mounter

Copy the relevant files to the target machines

# masters

[root@centos7-nginx bin]# scp kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kube-proxy root@10.10.10.128:/opt/kubernetes/bin/

[root@centos7-nginx bin]# scp kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kube-proxy root@10.10.10.129:/opt/kubernetes/bin/

[root@centos7-nginx bin]# scp kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kube-proxy root@10.10.10.130:/opt/kubernetes/bin/

# nodes

[root@centos7-nginx bin]# scp kubelet kube-proxy root@10.10.10.131:/opt/kubernetes/bin/

[root@centos7-nginx bin]# scp kubelet kube-proxy root@10.10.10.132:/opt/kubernetes/bin/

# Local machine

[root@centos7-nginx bin]# cp kubectl /usr/local/bin/

3.3.2 Create the kubeconfig files for the nodes

- Create the TLS bootstrapping token

- Create the kubelet kubeconfig

- Create the kube-proxy kubeconfig

- Create the admin kubeconfig

[root@centos7-nginx ~]# cd ~/ssl/

[root@centos7-nginx ssl]# vim kubeconfig.sh # edit the KUBE_APISERVER address on line 10

[root@centos7-nginx ssl]# bash ./kubeconfig.sh

Cluster "kubernetes" set.

User "kubelet-bootstrap" set.

Context "default" created.

Switched to context "default".

Cluster "kubernetes" set.

User "kube-proxy" set.

Context "default" created.

Switched to context "default".

Cluster "kubernetes" set.

User "admin" set.

Context "default" created.

Switched to context "default".

The script is as follows:

# Create the TLS bootstrapping token

export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

cat > token.csv <<EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

#----------------------

# Create the kubelet bootstrapping kubeconfig

export KUBE_APISERVER="https://lb.5179.top:6443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=./ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

# Set the client credentials

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=bootstrap.kubeconfig

# Set the context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# Select the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# Create the kube-proxy kubeconfig file

kubectl config set-cluster kubernetes \

--certificate-authority=./ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=./kube-proxy.pem \

--client-key=./kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

#----------------------

# Create the admin kubeconfig file

kubectl config set-cluster kubernetes \

--certificate-authority=./ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=admin.kubeconfig

kubectl config set-credentials admin \

--client-certificate=./admin.pem \

--client-key=./admin-key.pem \

--embed-certs=true \

--kubeconfig=admin.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=admin \

--kubeconfig=admin.kubeconfig

kubectl config use-context default --kubeconfig=admin.kubeconfig
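The bootstrap token at the top of the script is 16 random bytes printed as 32 lowercase hex characters. The sketch below generates a token the same way and checks its shape before it would be written to `token.csv`; the helper names are hypothetical.

```shell
#!/bin/sh
# Sketch: generate a bootstrap token and validate its format.
new_bootstrap_token() {
    # 16 random bytes, hex-encoded, with od's spacing and newline removed.
    head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

is_valid_token() {
    # Reject anything that contains a character outside 0-9a-f ...
    case $1 in
        *[!0-9a-f]*) return 1 ;;
    esac
    # ... and require exactly 32 characters.
    [ "${#1}" -eq 32 ]
}

token=$(new_bootstrap_token)
if is_valid_token "$token"; then
    echo "${token},kubelet-bootstrap,10001,\"system:kubelet-bootstrap\""
fi
```

The printed line has the same four columns as the `token.csv` entry in the script: token, user name, UID and group.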

Copy the files to their destinations

ansible k8s -m copy -a "src=./bootstrap.kubeconfig dest=/opt/kubernetes/cfg"

ansible k8s -m copy -a "src=./kube-proxy.kubeconfig dest=/opt/kubernetes/cfg"

ansible k8s -m copy -a "src=./token.csv dest=/opt/kubernetes/cfg"

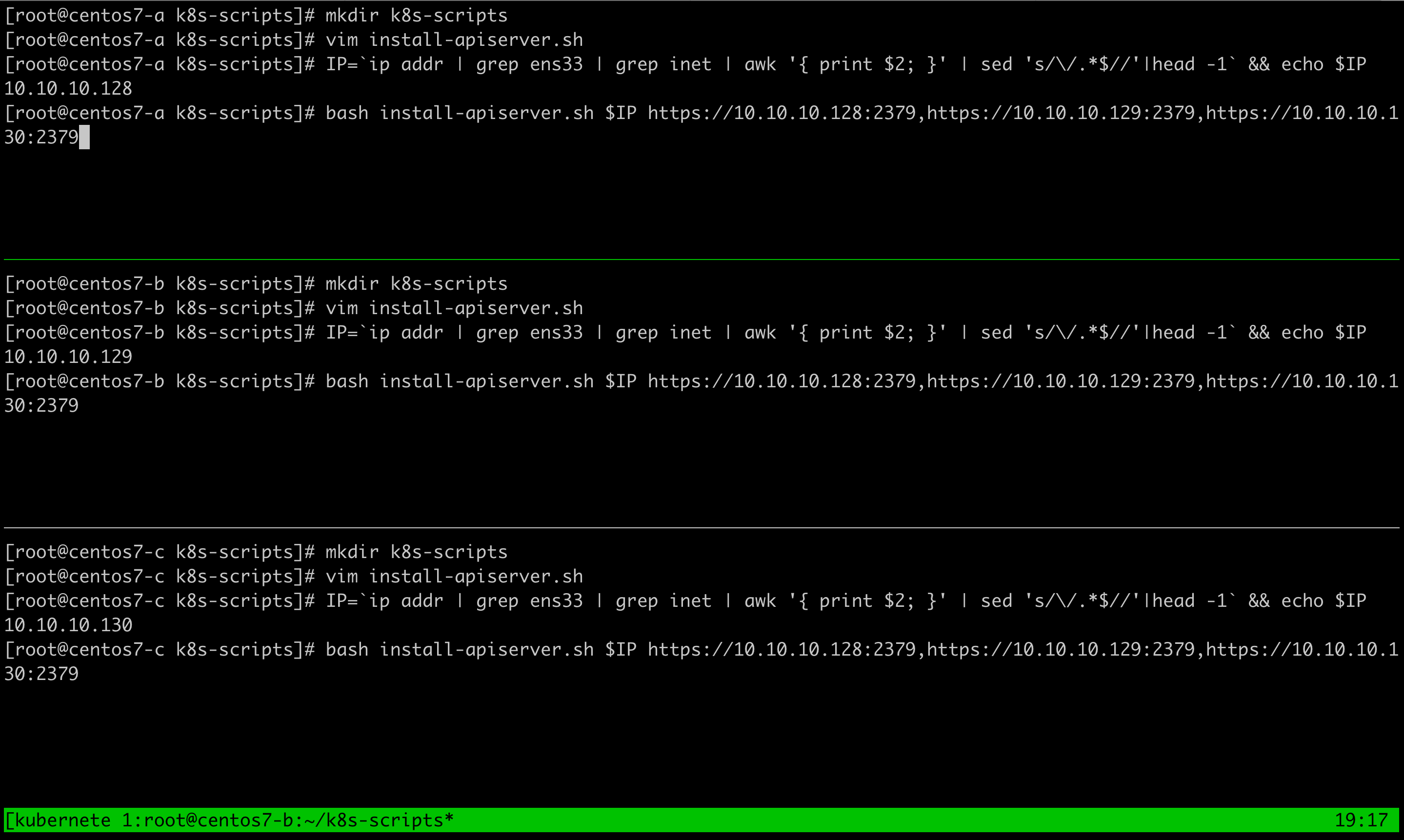

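3.4 Install kube-apiserver

Install on the master nodes.

You can use tmux to open three terminal windows and type into them in parallel,

or run the steps on the three machines one by one.

[root@centos7-a ~]# mkdir k8s-scripts && cd k8s-scripts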

[root@centos7-a k8s-scripts]# vim install-apiserver.sh

[root@centos7-a k8s-scripts]# IP=`ip addr | grep ens33 | grep inet | awk '{ print $2; }' | sed 's/\/.*$//'|head -1` && echo $IP

10.10.10.128

[root@centos7-a k8s-scripts]# bash install-apiserver.sh $IP https://10.10.10.128:2379,https://10.10.10.129:2379,https://10.10.10.130:2379
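The `grep | awk | sed` pipeline used to fill `$IP` depends on the multi-line layout of `ip addr`. A sketch of a more targeted parse follows, assuming the one-line-per-address format printed by `ip -o -4 addr show`; `first_ipv4` is a hypothetical helper and the sample line is illustrative.

```shell
#!/bin/sh
# Sketch: print the first IPv4 address of an interface from `ip -o -4 addr show` output.
first_ipv4() {
    # $1: interface name; stdin: lines like "2: ens33    inet 10.10.10.128/24 ..."
    awk -v dev="$1" '$2 == dev && $3 == "inet" {
        sub(/\/.*/, "", $4)   # drop the prefix length
        print $4
        exit
    }'
}

# Illustrative sample line for the ens33 interface used in this guide.
sample='2: ens33    inet 10.10.10.128/24 brd 10.10.10.255 scope global noprefixroute ens33\       valid_lft forever preferred_lft forever'
printf '%s\n' "$sample" | first_ipv4 ens33
```

On a real host this would be used as `IP=$(ip -o -4 addr show | first_ipv4 ens33)`.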

The script is as follows:

#!/bin/bash

# MASTER_ADDRESS: the address of this machine

MASTER_ADDRESS=${1:-"10.10.10.128"}

ETCD_SERVERS=${2:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs/kube-apiserver \\

--etcd-servers=${ETCD_SERVERS} \\

--bind-address=0.0.0.0 \\

--secure-port=6443 \\

--advertise-address=${MASTER_ADDRESS} \\

--allow-privileged=true \\

--service-cluster-ip-range=10.96.0.0/12 \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota,NodeRestriction \\

--authorization-mode=RBAC,Node \\

--kubelet-https=true \\

--enable-bootstrap-token-auth=true \\

--token-auth-file=/opt/kubernetes/cfg/token.csv \\

--service-node-port-range=30000-50000 \\

--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\

--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\

--tls-cert-file=/opt/kubernetes/ssl/server.pem \\

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\

--client-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--etcd-cafile=/opt/kubernetes/ssl/ca.pem \\

--etcd-certfile=/opt/kubernetes/ssl/server.pem \\

--etcd-keyfile=/opt/kubernetes/ssl/server-key.pem \\

--requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem \\

--requestheader-extra-headers-prefix=X-Remote-Extra- \\

--requestheader-group-headers=X-Remote-Group \\

--requestheader-username-headers=X-Remote-User \\

--proxy-client-cert-file=/opt/kubernetes/ssl/metrics-server.pem \\

--proxy-client-key-file=/opt/kubernetes/ssl/metrics-server-key.pem \\

--runtime-config=api/all=true \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-truncate-enabled=true \\

--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver

ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl restart kube-apiserver
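The install scripts write their option files through unquoted here-documents, which is why they contain `\\` and `\$`: the shell expands `${VAR}`, turns `\\` into a single backslash and turns `\$` into a literal dollar sign. A minimal sketch that prints to stdout instead of a file; `render_env_file` and the option names are made up for the demo.

```shell
#!/bin/sh
# Sketch: show how an unquoted here-document treats ${VAR}, \\ and \$.
render_env_file() {
    OPT_VALUE=$1
    cat <<EOF
DEMO_OPTS="--first=${OPT_VALUE} \\
--second=\$NOT_EXPANDED"
EOF
}

render_env_file 10.10.10.128
```

The first output line ends in a single backslash, which acts as a line continuation when systemd reads the file via `EnvironmentFile`, and `$NOT_EXPANDED` reaches the file untouched, just like `\$KUBE_APISERVER_OPTS` in the unit file above.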

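3.5 Install kube-scheduler

Install on the master nodes.

You can use tmux to open three terminal windows and type into them in parallel, or run the steps on the three machines one by one.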

[root@centos7-a ~]# cd k8s-scripts

[root@centos7-a k8s-scripts]# vim install-scheduler.sh

[root@centos7-a k8s-scripts]# bash install-scheduler.sh 127.0.0.1

The script is as follows

#!/bin/bash

MASTER_ADDRESS=${1:-"127.0.0.1"}

cat <<EOF >/opt/kubernetes/cfg/kube-scheduler

KUBE_SCHEDULER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs/kube-scheduler \\

--master=${MASTER_ADDRESS}:8080 \\

--address=0.0.0.0 \\

--leader-elect"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler

ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-scheduler

systemctl restart kube-scheduler

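3.6 Install kube-controller-manager

Install on the master nodes.

You can use tmux to open three terminal windows and type into them in parallel, or run the steps on the three machines one by one.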

[root@centos7-a ~]# cd k8s-scripts

[root@centos7-a k8s-scripts]# vim install-controller-manager.sh

[root@centos7-a k8s-scripts]# bash install-controller-manager.sh 127.0.0.1

The script is as follows

#!/bin/bash

MASTER_ADDRESS=${1:-"127.0.0.1"}

cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs/kube-controller-manager \\

--master=${MASTER_ADDRESS}:8080 \\

--leader-elect=true \\

--bind-address=0.0.0.0 \\

--service-cluster-ip-range=10.96.0.0/12 \\

--cluster-name=kubernetes \\

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--experimental-cluster-signing-duration=87600h0m0s \\

--feature-gates=RotateKubeletServerCertificate=true \\

--feature-gates=RotateKubeletClientCertificate=true \\

--allocate-node-cidrs=true \\

--cluster-cidr=10.244.0.0/16 \\

--root-ca-file=/opt/kubernetes/ssl/ca.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager

ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl restart kube-controller-manager

3.7 Check component status

Run kubectl get cs on any one of the three masters

[root@centos7-a k8s-scripts]# kubectl get cs

NAME STATUS MESSAGE ERROR

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

controller-manager Healthy ok

scheduler Healthy ok

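3.8 Configure automatic CSR submission, approval and renewal for kubelet certificates

3.8.1 Nodes create CSR requests automatically

When a node's kubelet starts, it creates a CSR automatically. Bind the kubelet-bootstrap user to the system:node-bootstrapper cluster role, which grants the permission it needs to request certificates.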

# Bind kubelet-bootstrap user to system cluster roles.

kubectl create clusterrolebinding kubelet-bootstrap \

--clusterrole=system:node-bootstrapper \

--user=kubelet-bootstrap

3.8.2 Certificate approval and automatic renewal

1) Manual approval script (run it after kubelet has been started on the nodes)

vim k8s-csr-approve.sh

#!/bin/bash

CSRS=$(kubectl get csr | awk '{if(NR>1) print $1}')

for csr in $CSRS; do

kubectl certificate approve $csr;

done
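The loop above approves every CSR in the list, including those already issued. A sketch of a filter that keeps only pending requests, assuming the column layout printed by `kubectl get csr` in 1.18; `pending_csrs` is a hypothetical helper and the sample rows use made-up names.

```shell
#!/bin/sh
# Sketch: pick the names of CSRs whose CONDITION column is "Pending".
pending_csrs() {
    # stdin: output of `kubectl get csr`; skip the header, test the last column.
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Illustrative sample output (names are made up).
sample='NAME        AGE     SIGNERNAME                                    REQUESTOR           CONDITION
csr-aaaaa   4m10s   kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Approved,Issued
csr-bbbbb   0s      kubernetes.io/kube-apiserver-client-kubelet   kubelet-bootstrap   Pending'

printf '%s\n' "$sample" | pending_csrs
```

In the approval script this would replace the `awk` in the `CSRS=` line, for example `CSRS=$(kubectl get csr | pending_csrs)`.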

2) Automatic approval and renewal

Create a ClusterRole that auto-approves the relevant CSR requests

[root@centos7-a ~]# mkdir yaml

[root@centos7-a ~]# cd yaml/

[root@centos7-a yaml]# vim tls-instructs-csr.yaml

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeserver
rules:
- apiGroups: ["certificates.k8s.io"]
  resources: ["certificatesigningrequests/selfnodeserver"]
  verbs: ["create"]

[root@centos7-a yaml]# kubectl apply -f tls-instructs-csr.yaml

Auto-approve the CSR that the kubelet-bootstrap user submits for its first certificate during TLS bootstrapping

kubectl create clusterrolebinding node-client-auto-approve-csr --clusterrole=system:certificates.k8s.io:certificatesigningrequests:nodeclient --user=kubelet-bootstrap

Auto-approve CSRs from the system:nodes group that renew the kubelet's own client certificate for talking to the apiserver

kubectl create clusterrolebinding node-client-auto-renew-crt --clusterrole=system:certificates.k8s.io:certificatesigningrequests:selfnodeclient --group=system:nodes

Auto-approve CSRs from the system:nodes group that renew the serving certificate of the kubelet API on port 10250

kubectl create clusterrolebinding node-server-auto-renew-crt --clusterrole=system:certificates.k8s.io:certificatesigningrequests:selfnodeserver --group=system:nodes

After automatic signing, the status looks like this:

[root@centos7-a kubelet]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

csr-44lt8 4m10s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

csr-45njg 0s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

csr-nsbc9 4m9s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

csr-vk64f 4m9s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

csr-wftvq 59s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

3.9 Install kube-proxy

Copy the binary to all nodes

[root@centos7-nginx ~]# cd k8s-1.18.3/kubernetes/server/bin/

[root@centos7-nginx bin]# ansible k8s -m copy -a "src=./kube-proxy dest=/opt/kubernetes/bin mode=755"

You can use tmux to open five terminal windows and type into them in parallel, or run the steps on the five machines one by one.

[root@centos7-a ~]# cd k8s-scripts

[root@centos7-a k8s-scripts]# vim install-proxy.sh

[root@centos7-a k8s-scripts]# bash install-proxy.sh ${HOSTNAME}

The script is as follows

#!/bin/bash

HOSTNAME=${1:-"`hostname`"}

cat <<EOF >/opt/kubernetes/cfg/kube-proxy.conf

KUBE_PROXY_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs/kube-proxy \\

--config=/opt/kubernetes/cfg/kube-proxy-config.yml"

EOF

cat <<EOF >/opt/kubernetes/cfg/kube-proxy-config.yml

kind: KubeProxyConfiguration

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0 # listen address

metricsBindAddress: 0.0.0.0:10249 # metrics address; monitoring scrapes kube-proxy here

clientConnection:

kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig # kubeconfig used to reach the apiserver

hostnameOverride: ${HOSTNAME} # node name registered in k8s; must be unique

clusterCIDR: 10.244.0.0/16

mode: iptables # use iptables mode

# To use ipvs mode instead:

#mode: ipvs # ipvs mode

#ipvs:

# scheduler: "rr"

#iptables:

# masqueradeAll: true

EOF

cat <<EOF >/usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy.conf

ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-proxy

systemctl restart kube-proxy
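After the service starts, it can be useful to compare the mode written into the config file with the mode kube-proxy actually reports. The following is a minimal sketch: `configured_proxy_mode` is a hypothetical helper, and the port 10249 in the usage comment is taken from the metricsBindAddress set in the script above.

```shell
#!/bin/bash
# Hypothetical helper: print the value of the top-level "mode:" key
# from a kube-proxy configuration file. Commented-out lines are ignored.
configured_proxy_mode() {
  awk '/^mode:/ { print $2; exit }' "$1"
}

# Usage on a node:
#   configured_proxy_mode /opt/kubernetes/cfg/kube-proxy-config.yml
#   curl -s http://127.0.0.1:10249/proxyMode   # mode reported by the running kube-proxy
```

If the two values differ, kube-proxy has most likely fallen back to another mode, and its log under /opt/kubernetes/logs/kube-proxy is the place to look.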

3.10 Install kubelet

Copy the binary to all nodes

[root@centos7-nginx ~]# cd k8s-1.18.3/kubernetes/server/bin/

[root@centos7-nginx bin]# ansible k8s -m copy -a "src=./kubelet dest=/opt/kubernetes/bin mode=755"

You can open five terminal windows with tmux and type into them in parallel, or run the commands on the five machines separately

[root@centos7-a ~]# cd k8s-scripts

[root@centos7-a k8s-scripts]# vim install-kubelet.sh

[root@centos7-a k8s-scripts]# bash install-kubelet.sh 10.96.0.10 ${HOSTNAME} cluster.local

The script is as follows

#!/bin/bash

DNS_SERVER_IP=${1:-"10.96.0.10"}

HOSTNAME=${2:-"`hostname`"}

CLUETERDOMAIN=${3:-"cluster.local"}

cat <<EOF >/opt/kubernetes/cfg/kubelet.conf

KUBELET_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs/kubelet \\

--hostname-override=${HOSTNAME} \\

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\

--config=/opt/kubernetes/cfg/kubelet-config.yml \\

--cert-dir=/opt/kubernetes/ssl \\

--network-plugin=cni \\

--cni-conf-dir=/etc/cni/net.d \\

--cni-bin-dir=/opt/cni/bin \\

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0 \\

--system-reserved=memory=300Mi \\

--kube-reserved=memory=400Mi"

EOF

cat <<EOF >/opt/kubernetes/cfg/kubelet-config.yml

kind: KubeletConfiguration # object kind

apiVersion: kubelet.config.k8s.io/v1beta1 # API version

address: 0.0.0.0 # listen address

port: 10250 # kubelet port

readOnlyPort: 10255 # read-only port exposed by kubelet

cgroupDriver: cgroupfs # cgroup driver; must match the one shown by docker info

clusterDNS:

- ${DNS_SERVER_IP}

clusterDomain: ${CLUETERDOMAIN} # cluster domain

failSwapOn: false # do not fail to start if swap is enabled

# Authentication

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 2m0s

enabled: true

x509:

clientCAFile: /opt/kubernetes/ssl/ca.pem

# Authorization

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 5m0s

cacheUnauthorizedTTL: 30s

# Node resource reservation (hard eviction thresholds)

evictionHard:

imagefs.available: 15%

memory.available: 300Mi

nodefs.available: 10%

nodefs.inodesFree: 5%

evictionPressureTransitionPeriod: 5m0s

# Image garbage-collection policy

imageGCHighThresholdPercent: 85

imageGCLowThresholdPercent: 80

imageMinimumGCAge: 2m0s

# Certificate rotation

rotateCertificates: true # rotate the kubelet client certificate

featureGates:

RotateKubeletServerCertificate: true

RotateKubeletClientCertificate: true

maxOpenFiles: 1000000

maxPods: 110

EOF

cat <<EOF >/usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kubelet.conf

ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kubelet

systemctl restart kubelet
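The cgroupDriver comment in the script says the value must match what docker reports; a mismatch usually stops kubelet from starting. The sketch below shows one way to check this before restarting the service. `kubelet_cgroup_driver` and `drivers_match` are hypothetical helper names.

```shell
#!/bin/bash
# Hypothetical helper: print the cgroupDriver value from a kubelet config file.
kubelet_cgroup_driver() {
  awk '/^cgroupDriver:/ { print $2; exit }' "$1"
}

# Hypothetical helper: succeed only if the kubelet config ($1) uses the
# same cgroup driver as the one passed in $2 (as reported by docker).
drivers_match() {
  [ "$(kubelet_cgroup_driver "$1")" = "$2" ]
}

# Usage on a node (assumes docker is installed and running):
#   drivers_match /opt/kubernetes/cfg/kubelet-config.yml \
#     "$(docker info --format '{{.CgroupDriver}}')" || echo "cgroup driver mismatch"
```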

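3.11 Check the number of nodes

After a short wait the nodes appear (they stay NotReady until the network plugin is installed in 3.12)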

[root@centos7-a ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

centos7-a NotReady <none> 7m v1.18.3

centos7-b NotReady <none> 6m v1.18.3

centos7-c NotReady <none> 6m v1.18.3

centos7-d NotReady <none> 6m v1.18.3

centos7-e NotReady <none> 5m v1.18.3

3.12 Install the network plugin

3.12.1 Install flannel

[root@centos7-nginx ~]# mkdir flannel

[root@centos7-nginx ~]# cd flannel

[root@centos7-nginx flannel]# wget https://github.com/coreos/flannel/releases/download/v0.12.0/flannel-v0.12.0-linux-amd64.tar.gz

[root@centos7-nginx flannel]# tar xf flannel-v0.12.0-linux-amd64.tar.gz

[root@centos7-nginx flannel]# ll

total 43792

-rwxr-xr-x. 1 lyj lyj 35253112 Mar 13 08:01 flanneld

-rw-r--r--. 1 root root 9565406 Jun 16 19:41 flannel-v0.12.0-linux-amd64.tar.gz

-rwxr-xr-x. 1 lyj lyj 2139 May 29 2019 mk-docker-opts.sh

-rw-r--r--. 1 lyj lyj 4300 May 29 2019 README.md

[root@centos7-nginx flannel]# vim remove-docker0.sh

#!/bin/bash

# Copyright 2014 The Kubernetes Authors All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# Delete default docker bridge, so that docker can start with flannel network.

# exit on any error

set -e

rc=0

ip link show docker0 >/dev/null 2>&1 || rc="$?"

if [[ "$rc" -eq "0" ]]; then

ip link set dev docker0 down

ip link delete docker0

fi

Copy the files to the corresponding location on all hosts

[root@centos7-nginx flannel]# ansible k8s -m copy -a "src=./flanneld dest=/opt/kubernetes/bin mode=755"

[root@centos7-nginx flannel]# ansible k8s -m copy -a "src=./mk-docker-opts.sh dest=/opt/kubernetes/bin mode=755"

[root@centos7-nginx flannel]# ansible k8s -m copy -a "src=./remove-docker0.sh dest=/opt/kubernetes/bin mode=755"

Prepare the startup script

[root@centos7-nginx scripts]# vim install-flannel.sh

[root@centos7-nginx scripts]# bash install-flannel.sh

[root@centos7-nginx scripts]# ansible k8s -m script -a "./install-flannel.sh https://10.10.10.128:2379,https://10.10.10.129:2379,https://10.10.10.130:2379"

The script is as follows:

#!/bin/bash

ETCD_ENDPOINTS=${1:-'https://127.0.0.1:2379'}

cat >/opt/kubernetes/cfg/flanneld <<EOF

FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \

-etcd-cafile=/opt/kubernetes/ssl/ca.pem \

-etcd-certfile=/opt/kubernetes/ssl/server.pem \

-etcd-keyfile=/opt/kubernetes/ssl/server-key.pem"

EOF

cat >/usr/lib/systemd/system/flanneld.service <<EOF

[Unit]

Description=Flanneld Overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

#ExecStartPre=/opt/kubernetes/bin/remove-docker0.sh

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS

#ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld

Write the pod network configuration into etcd

Log in to any master node

[root@centos7-a ~]# cd k8s-scripts/

[root@centos7-a k8s-scripts]# vim install-flannel-to-etcd.sh

[root@centos7-a k8s-scripts]# bash install-flannel-to-etcd.sh https://10.10.10.128:2379,https://10.10.10.129:2379,https://10.10.10.130:2379 10.244.0.0/16 vxlan

The script is as follows

#!/bin/bash

# bash install-flannel-to-etcd.sh https://10.10.10.128:2379,https://10.10.10.129:2379,https://10.10.10.130:2379 10.244.0.0/16 vxlan

ETCD_ENDPOINTS=${1:-'https://127.0.0.1:2379'}

NETWORK=${2:-'10.244.0.0/16'}

NETWORK_MODE=${3:-'vxlan'}

ETCDCTL_API=2 etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints=${ETCD_ENDPOINTS} set /coreos.com/network/config '{"Network": '\"$NETWORK\"', "Backend": {"Type": '\"${NETWORK_MODE}\"'}}'

#ETCDCTL_API=3 etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints=${ETCD_ENDPOINTS} put /coreos.com/network/config -- '{"Network": "10.244.0.0/16", "Backend": {"Type": "vxlan"}}'

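Because flannel talks to etcd through the v2 API, etcdctl is used with the v2 API here. In etcd v3.4 the v2 API is disabled by default, so etcd must have been started with --enable-v2=true for this to work.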
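The nested quoting in the etcdctl command above is easy to get wrong. As a sketch only, the JSON value can be built with printf first and then passed as a single argument; `flannel_network_json` is a hypothetical helper name, and the defaults mirror the ones used in the script.

```shell
#!/bin/bash
# Hypothetical helper: print the flannel network config as JSON.
# $1: pod network CIDR (default 10.244.0.0/16)
# $2: backend type (default vxlan)
flannel_network_json() {
  printf '{"Network": "%s", "Backend": {"Type": "%s"}}\n' \
    "${1:-10.244.0.0/16}" "${2:-vxlan}"
}

# Usage, with the same etcdctl v2 flags as the script above:
#   ETCDCTL_API=2 etcdctl --ca-file=/opt/etcd/ssl/ca.pem \
#     --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem \
#     --endpoints="${ETCD_ENDPOINTS}" \
#     set /coreos.com/network/config "$(flannel_network_json 10.244.0.0/16 vxlan)"
```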

3.12.2 Install cni-plugin

Download the CNI plugins

[root@centos7-nginx ~]# mkdir cni

[root@centos7-nginx ~]# cd cni

[root@centos7-nginx cni]# wget https://github.com/containernetworking/plugins/releases/download/v0.8.6/cni-plugins-linux-amd64-v0.8.6.tgz

[root@centos7-nginx cni]# tar xf cni-plugins-linux-amd64-v0.8.6.tgz

[root@centos7-nginx cni]# ll

total 106512

-rwxr-xr-x. 1 root root 4159518 May 14 03:50 bandwidth

-rwxr-xr-x. 1 root root 4671647 May 14 03:50 bridge

-rw-r--r--. 1 root root 36878412 Jun 17 20:07 cni-plugins-linux-amd64-v0.8.6.tgz

-rwxr-xr-x. 1 root root 12124326 May 14 03:50 dhcp

-rwxr-xr-x. 1 root root 5945760 May 14 03:50 firewall

-rwxr-xr-x. 1 root root 3069556 May 14 03:50 flannel

-rwxr-xr-x. 1 root root 4174394 May 14 03:50 host-device

-rwxr-xr-x. 1 root root 3614480 May 14 03:50 host-local

-rwxr-xr-x. 1 root root 4314598 May 14 03:50 ipvlan

-rwxr-xr-x. 1 root root 3209463 May 14 03:50 loopback

-rwxr-xr-x. 1 root root 4389622 May 14 03:50 macvlan

-rwxr-xr-x. 1 root root 3939867 May 14 03:50 portmap

-rwxr-xr-x. 1 root root 4590277 May 14 03:50 ptp

-rwxr-xr-x. 1 root root 3392826 May 14 03:50 sbr

-rwxr-xr-x. 1 root root 2885430 May 14 03:50 static

-rwxr-xr-x. 1 root root 3356587 May 14 03:50 tuning

-rwxr-xr-x. 1 root root 4314446 May 14 03:50 vlan

[root@centos7-nginx cni]# cd ..

[root@centos7-nginx ~]# ansible k8s -m copy -a "src=./cni/ dest=/opt/cni/bin mode=755"

Create the CNI configuration file

[root@centos7-nginx scripts]# vim install-cni.sh

[root@centos7-nginx scripts]# ansible k8s -m script -a "./install-cni.sh"

The script is as follows:

#!/bin/bash

mkdir /etc/cni/net.d/ -pv

cat <<EOF > /etc/cni/net.d/10-flannel.conflist

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

EOF
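The flannel CNI plugin configured above depends on the subnet lease that flanneld writes to /run/flannel/subnet.env (its default location). If that file is missing, pods cannot get an address even though the conflist is in place. The sketch below reads the per-node subnet from the file; `flannel_subnet` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: print the FLANNEL_SUBNET value from a subnet.env file.
# $1: path to the file (default /run/flannel/subnet.env)
flannel_subnet() {
  sed -n 's/^FLANNEL_SUBNET=//p' "${1:-/run/flannel/subnet.env}"
}

# Usage on every node, for example through ansible:
#   flannel_subnet          # prints this node's subnet inside 10.244.0.0/16
```

An empty result suggests flanneld has not obtained a lease yet, which is worth checking before looking at kubelet or the CNI configuration.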

3.13 Check node status

[root@centos7-c cfg]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.10.10.128 Ready <none> 1h v1.18.3

10.10.10.129 Ready <none> 1h v1.18.3

10.10.10.130 Ready <none> 1h v1.18.3

10.10.10.131 Ready <none> 1h v1.18.3

10.10.10.132 Ready <none> 1h v1.18.3

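3.14 Install coredns

Note: check the version compatibility between k8s and coredns

https://github.com/coredns/deployment/blob/master/kubernetes/CoreDNS-k8s_version.md

Install the DNS add-on

kubectl apply -f coredns.yaml

The file content is as follows

cat coredns.yaml # remember to adjust clusterIP and the image version (1.6.7)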

apiVersion: v1

kind: ServiceAccount

metadata:

name: coredns

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:coredns

rules:

- apiGroups:

- ""

resources:

- endpoints

- services

- pods

- namespaces

verbs:

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:coredns

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:coredns

subjects:

- kind: ServiceAccount

name: coredns

namespace: kube-system

---

apiVersion: v1

kind: ConfigMap

metadata:

name: coredns

namespace: kube-system

data:

Corefile: |

.:53 {

errors

health {

lameduck 5s

}

ready

kubernetes cluster.local in-addr.arpa ip6.arpa {

fallthrough in-addr.arpa ip6.arpa

}

prometheus :9153

forward . /etc/resolv.conf

cache 30

loop

reload

loadbalance

}

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: coredns

namespace: kube-system

labels:

k8s-app: kube-dns

kubernetes.io/name: "CoreDNS"

spec:

# replicas: not specified here:

# 1. Default is 1.

# 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.

replicas: 2

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

selector:

matchLabels:

k8s-app: kube-dns

template:

metadata:

labels:

k8s-app: kube-dns

spec:

priorityClassName: system-cluster-critical

serviceAccountName: coredns

tolerations:

- key: "CriticalAddonsOnly"

operator: "Exists"

nodeSelector:

kubernetes.io/os: linux

affinity:

podAntiAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

podAffinityTerm:

labelSelector:

matchExpressions:

- key: k8s-app

operator: In

values: ["kube-dns"]

topologyKey: kubernetes.io/hostname

containers:

- name: coredns

image: coredns/coredns:1.6.7

imagePullPolicy: IfNotPresent

resources:

limits:

memory: 170Mi

requests:

cpu: 100m

memory: 70Mi

args: [ "-conf", "/etc/coredns/Corefile" ]

volumeMounts:

- name: config-volume

mountPath: /etc/coredns

readOnly: true

ports:

- containerPort: 53

name: dns

protocol: UDP

- containerPort: 53

name: dns-tcp

protocol: TCP

- containerPort: 9153

name: metrics

protocol: TCP

securityContext:

allowPrivilegeEscalation: false

capabilities:

add:

- NET_BIND_SERVICE

drop:

- all

readOnlyRootFilesystem: true

livenessProbe:

httpGet:

path: /health

port: 8080

scheme: HTTP

initialDelaySeconds: 60

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 5

readinessProbe:

httpGet:

path: /ready

port: 8181

scheme: HTTP

dnsPolicy: Default

volumes:

- name: config-volume

configMap:

name: coredns

items:

- key: Corefile

path: Corefile

---

apiVersion: v1

kind: Service

metadata:

name: kube-dns

namespace: kube-system

annotations:

prometheus.io/port: "9153"

prometheus.io/scrape: "true"

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

kubernetes.io/name: "CoreDNS"

spec:

selector:

k8s-app: kube-dns

clusterIP: 10.96.0.10

ports:

- name: dns

port: 53

protocol: UDP

- name: dns-tcp

port: 53

protocol: TCP

- name: metrics

port: 9153

protocol: TCP

Verify that it works

# First create a busybox pod to act as the client

[root@centos7-nginx ~]# kubectl create -f https://k8s.io/examples/admin/dns/busybox.yaml

# Resolve kubernetes


[root@centos7-nginx ~]# kubectl exec -it busybox -- nslookup kubernetes

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

[root@centos7-nginx ~]#
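The two addresses in the output above follow from the service CIDR 10.96.0.0/12: the apiserver takes the first address of the range for the kubernetes Service (10.96.0.1), and this guide places the DNS Service at offset 10 (10.96.0.10), which is a common convention rather than a requirement. The sketch below shows the arithmetic; `ip_offset` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: add an integer offset to a dotted IPv4 address.
# $1: base address, e.g. 10.96.0.0   $2: offset, e.g. 10
ip_offset() {
  echo "$1 $2" | awk '{
    split($1, o, ".")
    n = ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4] + $2
    printf "%d.%d.%d.%d\n", int(n / 16777216) % 256, int(n / 65536) % 256, int(n / 256) % 256, n % 256
  }'
}

# Usage:
#   ip_offset 10.96.0.0 1    # address of the kubernetes Service
#   ip_offset 10.96.0.0 10   # address used for kube-dns in this guide
```

If the service CIDR is changed, the clusterIP in coredns.yaml and the first argument of install-kubelet.sh need to change with it.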

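3.15 Install metrics-server

Project page: https://github.com/kubernetes-sigs/metrics-server

Following the project instructions, running the command below is enough; adjust it for your own cluster where needed, for example the image address and resource limits.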

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml

Download the file locally

[root@centos7-nginx scripts]# wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml

Changes: replace the image address, and add resource limits and the extra command flags

apiVersion: apps/v1

kind: Deployment

metadata:

name: metrics-server

spec:

template:

spec:

containers:

- name: metrics-server

image: registry.cn-beijing.aliyuncs.com/liyongjian5179/metrics-server-amd64:v0.3.6

imagePullPolicy: IfNotPresent

resources:

limits:

cpu: 400m

memory: 512Mi

requests:

cpu: 50m

memory: 50Mi

command:

- /metrics-server

- --kubelet-insecure-tls

- --kubelet-preferred-address-types=InternalIP

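Depending on your cluster setup, you may also need to change the flags passed to the Metrics Server container. The most useful flags:

- --kubelet-preferred-address-types: the priority of node address types used when determining an address for connecting to a particular node (default [Hostname,InternalDNS,InternalIP,ExternalDNS,ExternalIP])

- --kubelet-insecure-tls: do not verify the CA of the serving certificates presented by kubelets. For testing purposes only.

- --requestheader-client-ca-file: specify a root certificate bundle for verifying client certificates on incoming requests.

Apply the file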

[root@centos7-nginx scripts]# kubectl apply -f components.yaml

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

serviceaccount/metrics-server created

deployment.apps/metrics-server created

service/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

After a short wait you can see the results

[root@centos7-nginx scripts]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

centos7-a 159m 15% 1069Mi 62%

centos7-b 158m 15% 1101Mi 64%

centos7-c 168m 16% 1153Mi 67%

centos7-d 48m 4% 657Mi 38%

centos7-e 45m 4% 440Mi 50%

[root@centos7-nginx scripts]# kubectl top pods -A

NAMESPACE NAME CPU(cores) MEMORY(bytes)

kube-system coredns-6fdfb45d56-79jhl 5m 12Mi

kube-system coredns-6fdfb45d56-pvnzt 3m 13Mi

kube-system metrics-server-5f8fdf59b9-8chz8 1m 11Mi

kube-system tiller-deploy-6b75d7dccd-r6sz2 2m 6Mi

The complete file is as follows

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: system:aggregated-metrics-reader

labels:

rbac.authorization.k8s.io/aggregate-to-view: "true"

rbac.authorization.k8s.io/aggregate-to-edit: "true"

rbac.authorization.k8s.io/aggregate-to-admin: "true"

rules:

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: metrics-server:system:auth-delegator

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:auth-delegator

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: metrics-server-auth-reader

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: extension-apiserver-authentication-reader

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

---

apiVersion: apiregistration.k8s.io/v1beta1

kind: APIService

metadata:

name: v1beta1.metrics.k8s.io

spec:

service:

name: metrics-server

namespace: kube-system

group: metrics.k8s.io

version: v1beta1

insecureSkipTLSVerify: true

groupPriorityMinimum: 100

versionPriority: 100

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: metrics-server

namespace: kube-system

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: metrics-server

namespace: kube-system

labels:

k8s-app: metrics-server

spec:

selector:

matchLabels:

k8s-app: metrics-server

template:

metadata:

name: metrics-server

labels:

k8s-app: metrics-server

spec:

serviceAccountName: metrics-server

volumes:

# mount in tmp so we can safely use from-scratch images and/or read-only containers

- name: tmp-dir

emptyDir: {}

containers:

- name: metrics-server

image: registry.cn-beijing.aliyuncs.com/liyongjian5179/metrics-server-amd64:v0.3.6

imagePullPolicy: IfNotPresent

resources:

limits:

cpu: 400m

memory: 512Mi

requests:

cpu: 50m

memory: 50Mi

command:

- /metrics-server

- --kubelet-insecure-tls

- --kubelet-preferred-address-types=InternalIP

args:

- --cert-dir=/tmp

- --secure-port=4443

ports:

- name: main-port

containerPort: 4443

protocol: TCP

securityContext:

readOnlyRootFilesystem: true

runAsNonRoot: true

runAsUser: 1000

volumeMounts:

- name: tmp-dir

mountPath: /tmp

nodeSelector:

kubernetes.io/os: linux

kubernetes.io/arch: "amd64"

---

apiVersion: v1

kind: Service

metadata:

name: metrics-server

namespace: kube-system

labels:

kubernetes.io/name: "Metrics-server"

kubernetes.io/cluster-service: "true"

spec:

selector:

k8s-app: metrics-server

ports:

- port: 443

protocol: TCP

targetPort: main-port

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: system:metrics-server

rules:

- apiGroups:

- ""

resources:

- pods

- nodes

- nodes/stats

- namespaces

- configmaps

verbs:

- get

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: system:metrics-server

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:metrics-server

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

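3.16 Install ingress

3.16.1 LB options

Use the bare-metal approach: https://kubernetes.github.io/ingress-nginx/deploy/#bare-metal

Either NodePort or LoadBalancer can be used; NodePort is the default

In a cloud environment you can use the provider's ready-made LB:

For example, an Alibaba Cloud internal load balancer can be requested through an annotation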

[...]

metadata:

annotations:

service.beta.kubernetes.io/alibaba-cloud-loadbalancer-address-type: "intranet"

[...]

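Options on bare-metal servers:

1) Pure software solution: MetalLB (https://metallb.universe.tf/)

The project was released at the end of 2017 and is currently in beta.

MetalLB supports two announcement modes:

- Layer 2 mode: ARP/NDP

- BGP mode

Layer 2 mode

In Layer 2 mode, one node in the cluster takes responsibility for each service. When a client sends an ARP request for the service IP, that node answers it, and from then on all traffic for the service goes to that node (which appears to have multiple addresses).

Layer 2 mode is not real load balancing, because all traffic first passes through a single node and is then spread across the endpoints by kube-proxy. If that node fails, MetalLB moves the IP to another node and sends gratuitous ARP to tell clients about the move. Modern operating systems generally handle gratuitous ARP correctly, so failover rarely causes serious problems.

Layer 2 mode is the more universal option and needs no extra equipment. However, because it relies on ARP/NDP, the address pool must be in the same subnet as the clients, which makes address allocation a little more cumbersome.

BGP mode

In BGP mode, every node in the cluster establishes a BGP session with the upstream router and tells it how to forward traffic for the services.

BGP mode is a true LoadBalancer.

2) Through NodePort

NodePort has some limitations

- Source IP address

By default, Services of type NodePort perform source address translation. This means the source IP of an HTTP request, as seen by NGINX, is always the IP address of the Kubernetes node that received the request.

The recommended way to preserve the source IP in a NodePort setup is to set the externalTrafficPolicy field in the spec of the ingress-nginx Service to Local, as in the following example: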

kind: Service

apiVersion: v1

metadata:

name: ingress-nginx

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

annotations:

# by default the type is elb (classic load balancer).

service.beta.kubernetes.io/aws-load-balancer-type: nlb

spec:

# this setting is to make sure the source IP address is preserved.

externalTrafficPolicy: Local

type: LoadBalancer

selector:

app.kubernetes.io/name: ingress-nginx

ports:

- name: http

port: 80

targetPort: http

- name: https

port: 443

targetPort: https

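Note: this setting effectively drops packets sent to Kubernetes nodes that are not running any instance of the NGINX Ingress controller. Consider pinning the NGINX Pods to specific nodes, which can be done with a nodeSelector, to control where the NGINX Ingress controller is or is not scheduled. For example, with three machines but only two nginx replicas, deployed on node-2 and node-3, a request that arrives at node-1 is dropped because no nginx replica runs on that machine.

Label the corresponding nodes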

[root@centos7-nginx ~]# kubectl label nodes centos7-d lb-type=nginx

node/centos7-d labeled

[root@centos7-nginx ~]# kubectl label nodes centos7-e lb-type=nginx

node/centos7-e labeled

3.16.2 Installation

This walkthrough uses the default method:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/baremetal/deploy.yaml

If you need to make changes, download the file locally first

[root@centos7-nginx yaml]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/baremetal/deploy.yaml

[root@centos7-nginx yaml]# vim deploy.yaml

[root@centos7-nginx yaml]# kubectl apply -f deploy.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

configmap/ingress-nginx-controller created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

service/ingress-nginx-controller-admission created

service/ingress-nginx-controller created

deployment.apps/ingress-nginx-controller created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

serviceaccount/ingress-nginx-admission created

Alternatively, deploy it first and modify it afterwards

[root@centos7-nginx ~]# kubectl edit deploy ingress-nginx-controller -n ingress-nginx

...

spec:

progressDeadlineSeconds: 600

replicas: 2 #----> change to 2 for high availability

...

template:

...

spec:

nodeSelector: #----> add a node selector

lb-type: nginx #----> match the label

Or use

[root@centos7-nginx yaml]# kubectl -n ingress-nginx patch deployment ingress-nginx-controller -p '{"spec": {"template": {"spec": {"nodeSelector": {"lb-type": "nginx"}}}}}'

deployment.apps/ingress-nginx-controller patched

[root@centos7-nginx yaml]# kubectl -n ingress-nginx scale --replicas=2 deployment/ingress-nginx-controller

deployment.apps/ingress-nginx-controller scaled

Check the svc status; the node ports have been allocated

[root@centos7-nginx ~]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.101.121.120 <none> 80:36459/TCP,443:33171/TCP 43m

ingress-nginx-controller-admission ClusterIP 10.111.108.89 <none> 443/TCP 43m

The ports are open on every machine. To prevent requests from being dropped, point the proxy backends only at the nodes labeled lb-type=nginx

[root@centos7-a ~]# netstat -ntpl |grep proxy

tcp 0 0 0.0.0.0:36459 0.0.0.0:* LISTEN 69169/kube-proxy

tcp 0 0 0.0.0.0:33171 0.0.0.0:* LISTEN 69169/kube-proxy

...

[root@centos7-d ~]# netstat -ntpl |grep proxy

tcp 0 0 0.0.0.0:36459 0.0.0.0:* LISTEN 84181/kube-proxy

tcp 0 0 0.0.0.0:33171 0.0.0.0:* LISTEN 84181/kube-proxy

[root@centos7-e ~]# netstat -ntpl |grep proxy

tcp 0 0 0.0.0.0:36459 0.0.0.0:* LISTEN 74881/kube-proxy

tcp 0 0 0.0.0.0:33171 0.0.0.0:* LISTEN 74881/kube-proxy
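The allocated NodePort is needed later when configuring the front-end LB, and it changes whenever the Service is recreated. As a sketch, it can be extracted from the PORT(S) column shown above instead of being copied by hand; `nodeport_for` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: extract the NodePort mapped to a service port.
# $1: service port, e.g. 80
# $2: PORT(S) column of `kubectl get svc`, e.g. "80:36459/TCP,443:33171/TCP"
nodeport_for() {
  echo "$2" | tr ',' '\n' | sed -n "s|^$1:\([0-9]*\)/.*|\1|p"
}

# Usage:
#   nodeport_for 80 "80:36459/TCP,443:33171/TCP"
# The same value can be read directly from the API with kubectl's jsonpath output:
#   kubectl -n ingress-nginx get svc ingress-nginx-controller \
#     -o jsonpath='{.spec.ports[?(@.port==80)].nodePort}'
```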

3.16.3 Verification

# Create an application

[root@centos7-nginx ~]# kubectl create deployment nginx-dns --image=nginx

deployment.apps/nginx-dns created

# Create the svc

[root@centos7-nginx ~]# kubectl expose deployment nginx-dns --port=80

service/nginx-dns exposed

[root@centos7-nginx ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

busybox 1/1 Running 29 29h

nginx-dns-5c6b6b99df-qvnjh 1/1 Running 0 13s

[root@centos7-nginx ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d5h

nginx-dns ClusterIP 10.108.88.75 <none> 80/TCP 10s

# Create the ingress file and apply it

[root@centos7-nginx yaml]# vim ingress.yaml

[root@centos7-nginx yaml]# cat ingress.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: ingress-nginx-dns

namespace: default

annotations:

kubernetes.io/ingress.class: "nginx"

spec:

rules:

- host: ng.5179.top

http:

paths:

- path: /

backend:

serviceName: nginx-dns

servicePort: 80

[root@centos7-nginx yaml]# kubectl apply -f ingress.yaml

ingress.extensions/ingress-nginx-dns created

[root@centos7-nginx yaml]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-nginx-dns <none> ng.5179.top 80 9s

First start tailing the logs

[root@centos7-nginx yaml]# kubectl get pods

NAME READY STATUS RESTARTS AGE

busybox 1/1 Running 30 30h

nginx-dns-5c6b6b99df-qvnjh 1/1 Running 0 28m

[root@centos7-nginx yaml]# kubectl logs -f nginx-dns-5c6b6b99df-qvnjh

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

10.244.3.123 - - [20/Jun/2020:12:58:20 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "10.244.4.0"

The nginx log format in the backend Pod is

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

Open another terminal and send a request

[root@centos7-a ~]# curl -H 'Host:ng.5179.top' http://10.10.10.132:36459 -I

HTTP/1.1 200 OK

Server: nginx/1.19.0

Date: Sat, 20 Jun 2020 12:58:27 GMT

Content-Type: text/html

Content-Length: 612

Connection: keep-alive

Vary: Accept-Encoding

Last-Modified: Tue, 26 May 2020 15:00:20 GMT

ETag: "5ecd2f04-264"

Accept-Ranges: bytes

The log shows 10.244.3.123 - - [20/Jun/2020:12:58:20 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "10.244.4.0"

Next, configure the front-end LB

[root@centos7-nginx conf.d]# vim ng.conf

[root@centos7-nginx conf.d]# cat ng.conf

upstream nginx-dns{

ip_hash;

server 10.10.10.131:36459 ;

server 10.10.10.132:36459;

}

server {

listen 80;

server_name ng.5179.top;

#access_log logs/host.access.log main;

location / {

root html;

proxy_pass http://nginx-dns;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $remote_addr;

index index.html index.htm;

}

}
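Since only the nodes labeled lb-type=nginx should appear in the upstream, the block can be generated from the node list rather than edited by hand. The following is a minimal sketch: `render_upstream` is a hypothetical helper name, and the port and IPs in the usage line are the values from this walkthrough.

```shell
#!/bin/bash
# Hypothetical helper: print an nginx upstream block with ip_hash.
# $1: upstream name   $2: NodePort   remaining arguments: node IPs
# The body runs in a subshell so its variables do not leak into the caller.
render_upstream() (
  name=$1
  port=$2
  shift 2
  printf 'upstream %s {\n    ip_hash;\n' "$name"
  for ip in "$@"; do
    printf '    server %s:%s;\n' "$ip" "$port"
  done
  printf '}\n'
)

# Usage:
#   render_upstream nginx-dns 36459 10.10.10.131 10.10.10.132
```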

# Add a local hosts entry

[root@centos7-nginx conf.d]# vim /etc/hosts

[root@centos7-nginx conf.d]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.10.10.127 centos7-nginx lb.5179.top ng.5179.top

10.10.10.128 centos7-a

10.10.10.129 centos7-b

10.10.10.130 centos7-c

10.10.10.131 centos7-d

10.10.10.132 centos7-e

# Reload nginx

[root@centos7-nginx conf.d]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@centos7-nginx conf.d]# nginx -s reload

Access the domain

[root@centos7-nginx conf.d]# curl http://ng.5179.top -I

HTTP/1.1 200 OK

Server: nginx/1.16.1

Date: Sat, 20 Jun 2020 13:07:38 GMT

Content-Type: text/html

Content-Length: 612

Connection: keep-alive

Vary: Accept-Encoding

Last-Modified: Tue, 26 May 2020 15:00:20 GMT

ETag: "5ecd2f04-264"

Accept-Ranges: bytes

The backend also receives the request and logs it

10.244.4.17 - - [20/Jun/2020:13:22:11 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.29.0" "10.244.4.1"

$remote_addr ---> 10.244.4.17: the Pod IP of one of the ingress-nginx controllers

$http_x_forwarded_for ---> 10.244.4.1: the IP of the cni0 interface on the node

[root@centos7-nginx conf.d]# kubectl get pods -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-admission-create-tqp5w 0/1 Completed 0 112m 10.244.3.119 centos7-d <none> <none>

ingress-nginx-admission-patch-78jmf 0/1 Completed 0 112m 10.244.3.120 centos7-d <none> <none>

ingress-nginx-controller-5946fd499c-6cx4x 1/1 Running 0 11m 10.244.3.125 centos7-d <none> <none>

ingress-nginx-controller-5946fd499c-khjdn 1/1 Running 0 11m 10.244.4.17 centos7-e <none> <none>

Modify the svc of ingress-nginx-controller

[root@centos7-nginx conf.d]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.101.121.120 <none> 80:36459/TCP,443:33171/TCP 97m

ingress-nginx-controller-admission ClusterIP 10.111.108.89 <none> 443/TCP 97m

[root@centos7-nginx conf.d]# kubectl edit svc ingress-nginx-controller -n ingress-nginx

...

spec:

clusterIP: 10.101.121.120

externalTrafficPolicy: Cluster #---> change to Local

...

service/ingress-nginx-controller edited

Access it again

[root@centos7-nginx conf.d]# curl http://ng.5179.top -I

HTTP/1.1 200 OK

Server: nginx/1.16.1

Date: Sat, 20 Jun 2020 13:28:05 GMT

Content-Type: text/html

Content-Length: 612

Connection: keep-alive

Vary: Accept-Encoding

Last-Modified: Tue, 26 May 2020 15:00:20 GMT

ETag: "5ecd2f04-264"

Accept-Ranges: bytes

# Check the local NIC IP

[root@centos7-nginx conf.d]# ip addr show ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:38:d4:e3 brd ff:ff:ff:ff:ff:ff

inet 10.10.10.127/24 brd 10.10.10.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe38:d4e3/64 scope link

valid_lft forever preferred_lft forever

The nginx log ($http_x_forwarded_for) now records the client's real IP

10.244.4.17 - - [20/Jun/2020:13:28:05 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.29.0" "10.10.10.127"
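With the log_format shown earlier, $http_x_forwarded_for is the last double-quoted field of each line, so the before and after values can be compared without reading the log by eye. The sketch below assumes that format; `xff_of` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: print the last double-quoted field of each access-log
# line read from stdin, which is $http_x_forwarded_for in the format above.
xff_of() {
  awk -F'"' '{ print $(NF - 1) }'
}

# Usage (the pod name is the one from this walkthrough):
#   kubectl logs nginx-dns-5c6b6b99df-qvnjh | grep HTTP/1.1 | xff_of
```

With externalTrafficPolicy set to Cluster this field holds a node-internal address such as 10.244.4.1; after switching to Local it holds the address of the host that made the request.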

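3.16.4 Running multiple ingress controllers

Note: you may want to run multiple ingress controllers, for example one serving public traffic and one serving "internal" traffic. To do so, the --ingress-class option in each controller's definition must be set to a value that is unique within the cluster.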

spec:

template:

spec:

containers:

- name: nginx-ingress-internal-controller

args:

- /nginx-ingress-controller

- '--election-id=ingress-controller-leader-internal'

- '--ingress-class=nginx-internal'

- '--configmap=ingress/nginx-ingress-internal-controller'

Separate ConfigMap, Service and Deployment manifests are needed; everything else can be shared with the default ingress installation

---
# Source: ingress-nginx/templates/controller-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.4.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.33.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-internal-controller # renamed
  namespace: ingress-nginx
data:

---
# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.4.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.33.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-internal-controller # renamed
  namespace: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller

---
# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.4.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.33.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-internal-controller # renamed
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      dnsPolicy: ClusterFirst
      containers:
        - name: controller
          #image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.33.0
          image: registry.cn-beijing.aliyuncs.com/liyongjian5179/nginx-ingress-controller:0.33.0
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader-internal
            - --ingress-class=nginx-internal
            - --configmap=ingress-nginx/ingress-nginx-internal-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          livenessProbe:
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            timeoutSeconds: 1
            successThreshold: 1
            failureThreshold: 3
          readinessProbe:
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            timeoutSeconds: 1
            successThreshold: 1
            failureThreshold: 3
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission

Then apply these manifests. In addition, one line must be added to the Role in the original manifest:

# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  ...
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  ...
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - ingress-controller-leader-nginx
      - ingress-controller-leader-internal-nginx-internal # add this line; without it the error shown below appears
    verbs:
      - get
      - update

As noted above, if this line is not added, the internal ingress controller logs the following error:

E0621 08:25:07.531202 6 leaderelection.go:356] Failed to update lock: configmaps "ingress-controller-leader-internal-nginx-internal" is forbidden: User "system:serviceaccount:ingress-nginx:ingress-nginx" cannot update resource "configmaps" in API group "" in the namespace "ingress-nginx"
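The ConfigMap name in that error follows the rule stated in the Role comment: the leader-election lock is named "<election-id>-<ingress-class>". As a small sketch of that naming rule (the function name `lock_name` is hypothetical, introduced only for illustration), the two names listed under resourceNames can be derived from the controller arguments like this:

```shell
# Hypothetical helper: $1 = --election-id value, $2 = --ingress-class value.
lock_name() {
  printf '%s-%s\n' "$1" "$2"
}

# Default controller (election-id and ingress-class as named in the Role comment).
default_lock=$(lock_name ingress-controller-leader nginx)

# Internal controller, using the args from the Deployment above.
internal_lock=$(lock_name ingress-controller-leader-internal nginx-internal)

echo "$default_lock"
echo "$internal_lock"
```

Any additional controller started with a different --election-id or --ingress-class needs its own entry under resourceNames, built the same way.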

Then modify the Ingress manifest:

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: nginx

annotations: