Spark Machine Learning (12): Neural Network Algorithms

1. Neural Network Basics

1.1 Neurons

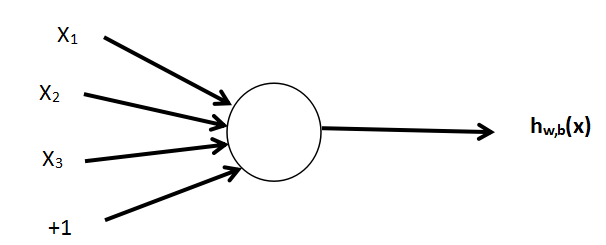

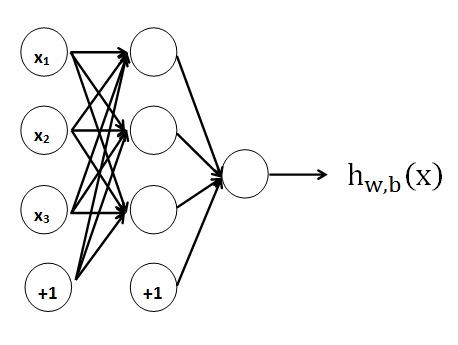

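A neural network (Neural Net) is a network of many interconnected processing units. The neuron is its smallest unit, and a neural network is built from a number of neurons. The structure of a single neuron is shown below: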

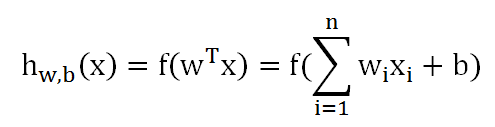

The inputs of the neuron above are $x_1$, $x_2$, $x_3$ and the constant 1 (the bias input), and its output is $h_{w,b}(x)$.

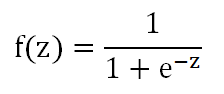

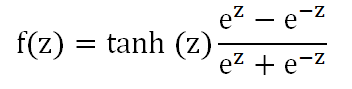

Here f is the activation function. Commonly used activation functions are the sigmoid function and the tanh (hyperbolic tangent) function.

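Sigmoid function:

$$f(z) = \frac{1}{1 + e^{-z}}$$

Tanh (hyperbolic tangent) function:

$$f(z) = \tanh(z) = \frac{e^{z} - e^{-z}}{e^{z} + e^{-z}}$$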
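To make the neuron concrete, here is a minimal plain-Scala sketch (not from the original post; the object `Neuron` and its method names are illustrative) of a single neuron with the two activation functions. It assumes the usual form $h_{w,b}(x) = f(\sum_i w_i x_i + b)$, where the bias b is the weight on the constant input 1. The derivative helpers take the activation value a = f(z), because backpropagation needs f'(z) and both derivatives are cheap to express through a.

```scala
object Neuron {
  // Logistic sigmoid: f(z) = 1 / (1 + e^(-z)), output in (0, 1)
  def sigmoid(z: Double): Double = 1.0 / (1.0 + math.exp(-z))

  // Hyperbolic tangent: f(z) = (e^z - e^(-z)) / (e^z + e^(-z)), output in (-1, 1)
  def tanh(z: Double): Double = math.tanh(z)

  // Derivatives written in terms of the activation value a = f(z)
  def sigmoidPrime(a: Double): Double = a * (1.0 - a)
  def tanhPrime(a: Double): Double = 1.0 - a * a

  // Output of one neuron: f(w_1 x_1 + ... + w_n x_n + b),
  // where b is the weight on the constant input 1
  def output(w: Array[Double], b: Double, x: Array[Double], f: Double => Double): Double = {
    require(w.length == x.length, "expected one weight per input")
    val z = w.zip(x).map { case (wi, xi) => wi * xi }.sum + b
    f(z)
  }
}
```

For example, `Neuron.output(Array(0.5, -0.5, 1.0), 0.0, Array(1.0, 1.0, 0.0), Neuron.sigmoid)` has a weighted input of 0 and therefore returns 0.5.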

1.2 Neural Networks

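A neural network consists of several layers, and the outputs of the neurons in one layer are the inputs to the neurons in the next layer. The first layer is called the input layer, the last layer the output layer, and the layers in between are called hidden layers.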

1.3 Forward Propagation of Signals and Backpropagation of Errors

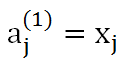

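Suppose the network has n layers, denoted L1 (layer 1), L2 (layer 2), ..., Ln (layer n), and that layer p (p = 1, 2, ..., n) has $m_p$ nodes. Let $a_j^{(k)}$ be the output value of node j in layer k. Then for L1 (the input layer):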

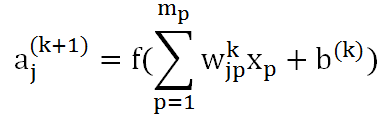

The output of neuron j in layer (k+1) is

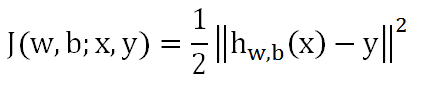
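Forward propagation applies the neuron computation layer by layer, starting from $a^{(1)} = x$. The sketch below assumes each weight matrix is stored row-wise, with row i holding the weights into node i of the next layer, plus a separate bias vector; these layout choices and the names `Forward`, `layer`, and `forward` are illustrative, not part of the library used later in the post.

```scala
object Forward {
  // One layer: a_i^(k+1) = f(sum_j w(i)(j) * a_j^(k) + b(i))
  // w has one row per node of the next layer and one column per node of this layer
  def layer(w: Array[Array[Double]], b: Array[Double], a: Array[Double],
            f: Double => Double): Array[Double] =
    w.indices.map { i =>
      val z = w(i).indices.map(j => w(i)(j) * a(j)).sum + b(i)
      f(z)
    }.toArray

  // Whole network: starts from a^(1) = x and applies each layer in turn.
  // Returns the activations of every layer, input layer first.
  def forward(weights: Seq[Array[Array[Double]]], biases: Seq[Array[Double]],
              x: Array[Double], f: Double => Double): Seq[Array[Double]] =
    weights.zip(biases).scanLeft(x) { case (a, (w, b)) => layer(w, b, a, f) }
}
```

Keeping every layer's activations (rather than only the final output) is deliberate: backpropagation needs them to compute the residuals and gradients.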

Let the error on a single training sample be

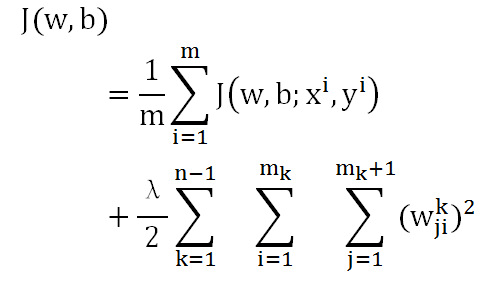

The overall error function is shown below. Its second term is an L2 regularization term, added to prevent overfitting.

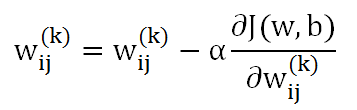
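The cost function can be sketched the same way. The exact constants are in the figure above; this sketch assumes the common textbook form $J(w,b) = \frac{1}{m}\sum_{i=1}^{m} \frac{1}{2}\lVert h_{w,b}(x^{(i)}) - y^{(i)} \rVert^2 + \frac{\lambda}{2}\sum_k \sum_{i,j} \big(w_{ji}^{(k)}\big)^2$, with the biases left out of the penalty. `Cost`, `sampleError`, and `total` are hypothetical names.

```scala
object Cost {
  // Error of one sample: 1/2 * ||h(x) - y||^2
  def sampleError(h: Array[Double], y: Array[Double]): Double =
    0.5 * h.zip(y).map { case (hi, yi) => (hi - yi) * (hi - yi) }.sum

  // Overall cost: mean sample error plus (lambda / 2) * sum of all squared weights.
  // Biases are not penalized.
  def total(outputs: Seq[Array[Double]], targets: Seq[Array[Double]],
            weights: Seq[Array[Array[Double]]], lambda: Double): Double = {
    val m = outputs.length
    val dataTerm = outputs.zip(targets).map { case (h, y) => sampleError(h, y) }.sum / m
    val l2 = weights.map(w => w.map(row => row.map(v => v * v).sum).sum).sum
    dataTerm + lambda / 2.0 * l2
  }
}
```

Setting `lambda` to 0 recovers the unregularized error; the `setWeightPenaltyL2(0.0)` call in the Section 2 code appears to play the same role there.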

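The goal is to find the (w, b) that minimizes J(w, b). To do this we use gradient descent, updating w and b in each iteration according to the following formulas: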

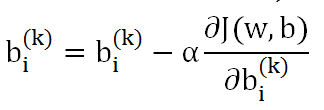
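A single update step can be sketched under the same assumptions: learning rate α, weight-decay coefficient λ, and gradients of the data term already averaged over the samples. Differentiating the L2 term adds λw to each weight's gradient, so the penalty pulls weights toward zero while leaving the biases untouched. The object and method names are illustrative.

```scala
object GradientStep {
  // One gradient-descent step:
  //   w := w - alpha * (gradW + lambda * w)   (data term + L2 term)
  //   b := b - alpha * gradB                  (biases are not regularized)
  def update(w: Array[Array[Double]], b: Array[Double],
             gradW: Array[Array[Double]], gradB: Array[Double],
             alpha: Double, lambda: Double): (Array[Array[Double]], Array[Double]) = {
    val newW = w.indices.map { i =>
      w(i).indices.map(j => w(i)(j) - alpha * (gradW(i)(j) + lambda * w(i)(j))).toArray
    }.toArray
    val newB = b.indices.map(i => b(i) - alpha * gradB(i)).toArray
    (newW, newB)
  }
}
```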

For output neuron j of layer n (the output layer), the residual is

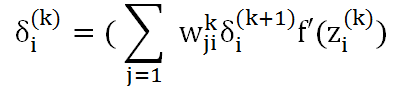

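For node i in a hidden layer k (k = n-1, n-2, ..., 2), the residual is

$$\delta_i^{(k)} = \left( \sum_{j=1}^{m_{k+1}} w_{ji}^{(k)} \, \delta_j^{(k+1)} \right) f'\!\left(z_i^{(k)}\right)$$

where $z_i^{(k)}$ is the weighted input of that node and $w_{ji}^{(k)}$ is the weight connecting node i in layer k to node j in layer k+1.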
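The residual computation can be sketched and checked numerically. This sketch assumes sigmoid units, so $f'(z) = a(1-a)$, and the single-sample error $\frac{1}{2}\lVert h - y\rVert^2$. It computes the output-layer residual first and then walks backwards through the hidden layers. Layers are 0-indexed in the code (index 0 is the input layer L1), and the names are illustrative.

```scala
object Backprop {
  private def sigmoid(z: Double): Double = 1.0 / (1.0 + math.exp(-z))

  // Forward pass that keeps every layer's activations (index 0 = input layer)
  def activations(ws: Seq[Array[Array[Double]]], bs: Seq[Array[Double]],
                  x: Array[Double]): Seq[Array[Double]] =
    ws.zip(bs).scanLeft(x) { case (a, (w, b)) =>
      w.indices.map(i => sigmoid(w(i).indices.map(j => w(i)(j) * a(j)).sum + b(i))).toArray
    }

  // Residuals of one sample for sigmoid units (f'(z) = a * (1 - a)):
  //   output layer: delta_j = -(y_j - a_j) * f'(z_j)
  //   hidden layer: delta_i = (sum_j w_ji * delta_j of the next layer) * f'(z_i)
  // Returns residuals for layer indices 1 .. n-1 (the input layer has none).
  def residuals(ws: Seq[Array[Array[Double]]], acts: Seq[Array[Double]],
                y: Array[Double]): List[Array[Double]] = {
    val out = acts.last
    val deltaOut = out.indices.map(j => -(y(j) - out(j)) * out(j) * (1 - out(j))).toArray
    // Walk backwards over the hidden layers, prepending each result
    (acts.length - 2 until 0 by -1).foldLeft(List(deltaOut)) { (acc, k) =>
      val next = acc.head // residuals of layer k + 1
      val w = ws(k)       // weights from layer k to layer k + 1
      val a = acts(k)
      val d = a.indices.map { i =>
        next.indices.map(j => w(j)(i) * next(j)).sum * a(i) * (1 - a(i))
      }.toArray
      d :: acc
    }
  }
}
```

For this error the derivative of J with respect to a bias equals the residual of the node that bias feeds, so a finite-difference estimate should match the computed residual. That is a quick way to catch indexing mistakes.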

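The procedure for solving for (w, b) is as follows:

1) For all k, set the gradient accumulators $\Delta w^{(k)} := 0$ and $\Delta b^{(k)} := 0$ (the weights themselves start from small random values; if every weight started at 0, all hidden units would compute the same function and stay identical during training);

2) Forward propagation: for each sample, compute $h_{w,b}(x)$ layer by layer from its input values and the current $w^{(k)}$ and $b^{(k)}$;

3) Backpropagation: compute the residual of every neuron in every layer, working backwards from the output layer;

4) Update the values of w and b.

Repeat steps (2) to (4) until the specified number of iterations has been completed.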
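Putting steps 1) to 4) together gives batch gradient descent. The sketch below is a plain-Scala, single-machine illustration, not how the NeuralNet class in Section 2 is implemented. It zeroes the gradient accumulators at the start of every iteration but initializes the weights with small random values, since weights that all start at 0 would receive identical updates. For brevity it uses sigmoid units and omits the L2 term (λ = 0); the names `TrainSketch`, `forward`, `cost`, and `train` are hypothetical.

```scala
import scala.util.Random

object TrainSketch {
  type Mat = Array[Array[Double]]
  private def sigmoid(z: Double): Double = 1.0 / (1.0 + math.exp(-z))

  // Forward pass: activations a^(1) (the input) through a^(n)
  def forward(ws: Seq[Mat], bs: Seq[Array[Double]], x: Array[Double]): Seq[Array[Double]] =
    ws.zip(bs).scanLeft(x) { case (a, (w, b)) =>
      w.indices.map(i => sigmoid(w(i).indices.map(j => w(i)(j) * a(j)).sum + b(i))).toArray
    }

  // Mean of 1/2 * ||h(x) - y||^2 over the data set
  def cost(ws: Seq[Mat], bs: Seq[Array[Double]], data: Seq[(Array[Double], Array[Double])]): Double =
    data.map { case (x, y) =>
      val h = forward(ws, bs, x).last
      0.5 * h.indices.map(j => (h(j) - y(j)) * (h(j) - y(j))).sum
    }.sum / data.length

  def train(sizes: Seq[Int], data: Seq[(Array[Double], Array[Double])],
            alpha: Double, iterations: Int, seed: Long): (Seq[Mat], Seq[Array[Double]]) = {
    val rnd = new Random(seed)
    // Small random weights break the symmetry between hidden units
    var ws: Seq[Mat] = sizes.sliding(2).map { case Seq(nIn, nOut) =>
      Array.fill(nOut, nIn)(rnd.nextGaussian() * 0.5) }.toList
    var bs: Seq[Array[Double]] = sizes.tail.map(n => Array.fill(n)(0.0))
    for (_ <- 1 to iterations) {
      // 1) reset the gradient accumulators
      val gW = ws.map(w => Array.fill(w.length, w(0).length)(0.0))
      val gB = bs.map(b => Array.fill(b.length)(0.0))
      for ((x, y) <- data) {
        // 2) forward propagation
        val acts = forward(ws, bs, x)
        // 3) backpropagate residuals, starting at the output layer
        var delta = acts.last.indices.map { j =>
          val a = acts.last(j); -(y(j) - a) * a * (1 - a) }.toArray
        for (k <- ws.indices.reverse) {
          val a = acts(k)
          for (i <- delta.indices) {
            gB(k)(i) += delta(i)
            for (j <- a.indices) gW(k)(i)(j) += delta(i) * a(j)
          }
          if (k > 0) delta = a.indices.map { j =>
            delta.indices.map(i => ws(k)(i)(j) * delta(i)).sum * a(j) * (1 - a(j)) }.toArray
        }
      }
      // 4) update w and b with the averaged gradients
      val m = data.length.toDouble
      ws = ws.indices.map { k =>
        ws(k).indices.map { i =>
          ws(k)(i).indices.map(j => ws(k)(i)(j) - alpha * gW(k)(i)(j) / m).toArray
        }.toArray
      }
      bs = bs.indices.map(k => bs(k).indices.map(i => bs(k)(i) - alpha * gB(k)(i) / m).toArray)
    }
    (ws, bs)
  }
}
```

Calling `train` with `iterations = 0` returns the random starting point for a given seed, which makes it easy to compare the cost before and after training, for example on the four rows of the logical OR truth table with a 2-3-1 network.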

2. MLlib Neural Network Implementation

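The neural network class used here is NeuralNet. (Despite the section title, NeuralNet is not part of the official Spark MLlib API; it is a separate implementation built on Spark RDDs and Breeze matrices, so its source must be on the classpath.) Its main parameters are:

Size: Array[Int], the number of nodes in each layer of the network;

Layer: the number of layers;

Activation_function: the activation function of the hidden layers, either sigm or tanh_opt (the value used in the code below);

Output_function: the output function, one of sigm, softmax, or linear.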
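The Size array also fixes the shape of every weight matrix. Judging from the weights printed at the end of the program output (10 rows by 6 columns for the first layer, 10 by 11 for the middle layers, 1 by 11 for the last), each matrix has one row per node in the next layer and one column per node in the previous layer, plus one extra column that is presumably the bias. The helper below reproduces those shapes; it is an illustration inferred from that output, not part of the NeuralNet API.

```scala
object LayerShapes {
  // For layer sizes m_1 .. m_n, the weights between layer k and layer k+1
  // form an m_{k+1} x (m_k + 1) matrix (the extra column is assumed to hold the bias).
  def weightShapes(size: Array[Int]): List[(Int, Int)] =
    size.toSeq.sliding(2).map { case Seq(nIn, nOut) => (nOut, nIn + 1) }.toList
}
```

For `Array(5, 10, 10, 10, 10, 10, 1)` this gives six matrices, which is why the program prints weights for layers 1 to 6 of a 7-layer network.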

Code:

```scala
import org.apache.log4j.{ Level, Logger }
import org.apache.spark.{ SparkConf, SparkContext }
import breeze.linalg.{ DenseMatrix => BDM, max => Bmax, min => Bmin }
import scala.collection.mutable.ArrayBuffer

// NeuralNet and RandSampleData are not imported here: they are assumed to be
// defined in the same package (neither is part of the Spark MLlib API).

/**
 * Created by Administrator on 2017/7/27.
 */
object NNTest {

  def main(args: Array[String]): Unit = {
    // Set up the runtime environment
    val conf = new SparkConf().setAppName("Neural Net")
      .setMaster("spark://master:7077")
      .setJars(Seq("E:\\Intellij\\Projects\\MachineLearning\\MachineLearning.jar"))
    val sc = new SparkContext(conf)
    Logger.getRootLogger.setLevel(Level.WARN)

    // Randomly generate sample data from the Sphere function
    val sampleRow = 1000
    val sampleColumn = 5
    val randSamp_01 = RandSampleData.RandM(sampleRow, sampleColumn, -10, 10, "sphere")

    // Min-max normalization: scale every column to [0, 1]
    val norMax = Bmax(randSamp_01(::, breeze.linalg.*))
    val norMin = Bmin(randSamp_01(::, breeze.linalg.*))
    val nor1 = randSamp_01 - (BDM.ones[Double](randSamp_01.rows, 1)) * norMin
    val nor2 = nor1 :/ ((BDM.ones[Double](nor1.rows, 1)) * (norMax - norMin))

    // Convert every row into a 1 x n matrix and distribute the rows
    val randSamp_02 = ArrayBuffer[BDM[Double]]()
    for (i <- 0 until sampleRow) {
      val row = nor2(i, ::).inner.toArray
      randSamp_02 += new BDM(1, row.length, row)
    }
    val randSamp_03 = sc.parallelize(randSamp_02, 10)
    sc.setCheckpointDir("hdfs://master:9000/ml/data/checkpoint")
    randSamp_03.checkpoint()
    // Column 0 is the label (the Sphere value); columns 1 to the end are the features
    val trainRDD = randSamp_03.map(f => (new BDM(1, 1, Array(f(0, 0))), f(::, 1 to -1)))

    // Train and build the model.
    // opts is assumed to be (batch size, number of epochs, validation fraction),
    // which matches the 50 epochs and val mse = 0 in the output below.
    val opts = Array(100.0, 50.0, 0.0)
    trainRDD.cache()
    val numExamples = trainRDD.count()
    println(s"Number of Examples: $numExamples")
    val NNModel = new NeuralNet()
      .setSize(Array(5, 10, 10, 10, 10, 10, 1))
      .setLayer(7)
      .setActivation_function("tanh_opt")
      .setLearningRate(2.0)
      .setScaling_learningRate(1.0)
      .setWeightPenaltyL2(0.0)
      .setNonSparsityPenalty(0.0)
      .setSparsityTarget(0.05)
      .setInputZeroMaskedFraction(0.0)
      .setDropoutFraction(0.0)
      .setOutput_function("sigm")
      .NNtrain(trainRDD, opts)

    // Test the model (on the training data)
    val NNPrediction = NNModel.predict(trainRDD)
    val NNPredictionError = NNModel.Loss(NNPrediction)
    println(s"NNerror = $NNPredictionError")
    val showPrediction = NNPrediction.map(f => (f.label.data(0), f.predict_label.data(0))).take(100)
    println("Prediction Result")
    println("Value" + "\t" + "Prediction" + "\t" + "Error")
    for ((value, prediction) <- showPrediction)
      println(s"$value\t$prediction\t${prediction - value}")

    // Print the weight matrices between consecutive layers (7 layers, so 6 matrices)
    for (i <- 0 to 5) {
      val tmpWeight = NNModel.weights(i)
      println(s"Weight of Layer ${i + 1}")
      for (j <- 0 until tmpWeight.rows) {
        for (k <- 0 until tmpWeight.cols) print(s"${tmpWeight(j, k)}\t")
        println()
      }
    }
  }
}
```

The code above builds a 7-layer neural network with Array(5, 10, 10, 10, 10, 10, 1) nodes per layer and tests it on the Sphere function.
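The Sphere function is $f(x) = \sum_i x_i^2$. The helper `RandSampleData.RandM` is not shown in the post, so `sample` below is a hypothetical stand-in that assumes the layout the training code expects: column 0 holds f(x) and the remaining columns hold inputs drawn uniformly from [min, max). `minMaxNormalize` mirrors the Bmax/Bmin normalization step, scaling each column to [0, 1].

```scala
import scala.util.Random

object SphereData {
  // Sphere function: f(x) = sum_i x_i^2
  def sphere(x: Array[Double]): Double = x.map(v => v * v).sum

  // Hypothetical stand-in for RandSampleData.RandM(rows, cols, min, max, "sphere"):
  // each row is (f(x), x_1, ..., x_cols) with x_i drawn uniformly from [min, max)
  def sample(rows: Int, cols: Int, min: Double, max: Double, seed: Long): Array[Array[Double]] = {
    val rnd = new Random(seed)
    Array.fill(rows) {
      val x = Array.fill(cols)(min + (max - min) * rnd.nextDouble())
      sphere(x) +: x
    }
  }

  // Column-wise min-max normalization to [0, 1]
  def minMaxNormalize(data: Array[Array[Double]]): Array[Array[Double]] = {
    val cols = data.head.indices
    val mins = cols.map(j => data.map(_(j)).min)
    val maxs = cols.map(j => data.map(_(j)).max)
    data.map(row => cols.map { j =>
      val range = maxs(j) - mins(j)
      if (range == 0.0) 0.0 else (row(j) - mins(j)) / range
    }.toArray)
  }
}
```

Unlike the Breeze version in the post, this sketch maps a constant column to 0 instead of dividing by zero.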

Output:

```
Number of Examples: 1000
epoch: numepochs = 1 , Took = 17 seconds; Full-batch train mse = 0.066738, val mse = 0.000000.
epoch: numepochs = 2 , Took = 12 seconds; Full-batch train mse = 0.069649, val mse = 0.000000.
epoch: numepochs = 3 , Took = 10 seconds; Full-batch train mse = 0.055260, val mse = 0.000000.
epoch: numepochs = 4 , Took = 10 seconds; Full-batch train mse = 0.016346, val mse = 0.000000.
epoch: numepochs = 5 , Took = 9 seconds; Full-batch train mse = 0.013802, val mse = 0.000000.
epoch: numepochs = 6 , Took = 13 seconds; Full-batch train mse = 0.045142, val mse = 0.000000.
epoch: numepochs = 7 , Took = 7 seconds; Full-batch train mse = 0.031211, val mse = 0.000000.
epoch: numepochs = 8 , Took = 7 seconds; Full-batch train mse = 0.016334, val mse = 0.000000.
epoch: numepochs = 9 , Took = 9 seconds; Full-batch train mse = 0.013348, val mse = 0.000000.
epoch: numepochs = 10 , Took = 7 seconds; Full-batch train mse = 0.017879, val mse = 0.000000.
epoch: numepochs = 11 , Took = 7 seconds; Full-batch train mse = 0.012627, val mse = 0.000000.
epoch: numepochs = 12 , Took = 7 seconds; Full-batch train mse = 0.018080, val mse = 0.000000.
epoch: numepochs = 13 , Took = 7 seconds; Full-batch train mse = 0.016755, val mse = 0.000000.
epoch: numepochs = 14 , Took = 7 seconds; Full-batch train mse = 0.012250, val mse = 0.000000.
epoch: numepochs = 15 , Took = 7 seconds; Full-batch train mse = 0.044833, val mse = 0.000000.
epoch: numepochs = 16 , Took = 7 seconds; Full-batch train mse = 0.024345, val mse = 0.000000.
epoch: numepochs = 17 , Took = 7 seconds; Full-batch train mse = 0.039005, val mse = 0.000000.
epoch: numepochs = 18 , Took = 7 seconds; Full-batch train mse = 0.012298, val mse = 0.000000.
epoch: numepochs = 19 , Took = 7 seconds; Full-batch train mse = 0.012371, val mse = 0.000000.
epoch: numepochs = 20 , Took = 6 seconds; Full-batch train mse = 0.014077, val mse = 0.000000.
epoch: numepochs = 21 , Took = 7 seconds; Full-batch train mse = 0.040328, val mse = 0.000000.
epoch: numepochs = 22 , Took = 6 seconds; Full-batch train mse = 0.036575, val mse = 0.000000.
epoch: numepochs = 23 , Took = 6 seconds; Full-batch train mse = 0.033986, val mse = 0.000000.
epoch: numepochs = 24 , Took = 6 seconds; Full-batch train mse = 0.026421, val mse = 0.000000.
epoch: numepochs = 25 , Took = 6 seconds; Full-batch train mse = 0.036776, val mse = 0.000000.
epoch: numepochs = 26 , Took = 6 seconds; Full-batch train mse = 0.011838, val mse = 0.000000.
epoch: numepochs = 27 , Took = 6 seconds; Full-batch train mse = 0.010749, val mse = 0.000000.
epoch: numepochs = 28 , Took = 6 seconds; Full-batch train mse = 0.012717, val mse = 0.000000.
epoch: numepochs = 29 , Took = 6 seconds; Full-batch train mse = 0.011883, val mse = 0.000000.
epoch: numepochs = 30 , Took = 7 seconds; Full-batch train mse = 0.010562, val mse = 0.000000.
epoch: numepochs = 31 , Took = 6 seconds; Full-batch train mse = 0.010591, val mse = 0.000000.
epoch: numepochs = 32 , Took = 6 seconds; Full-batch train mse = 0.010389, val mse = 0.000000.
epoch: numepochs = 33 , Took = 6 seconds; Full-batch train mse = 0.015908, val mse = 0.000000.
epoch: numepochs = 34 , Took = 6 seconds; Full-batch train mse = 0.012413, val mse = 0.000000.
epoch: numepochs = 35 , Took = 6 seconds; Full-batch train mse = 0.010442, val mse = 0.000000.
epoch: numepochs = 36 , Took = 6 seconds; Full-batch train mse = 0.056686, val mse = 0.000000.
epoch: numepochs = 37 , Took = 6 seconds; Full-batch train mse = 0.054850, val mse = 0.000000.
epoch: numepochs = 38 , Took = 6 seconds; Full-batch train mse = 0.019422, val mse = 0.000000.
epoch: numepochs = 39 , Took = 6 seconds; Full-batch train mse = 0.016443, val mse = 0.000000.
epoch: numepochs = 40 , Took = 6 seconds; Full-batch train mse = 0.010289, val mse = 0.000000.
epoch: numepochs = 41 , Took = 7 seconds; Full-batch train mse = 0.022615, val mse = 0.000000.
epoch: numepochs = 42 , Took = 6 seconds; Full-batch train mse = 0.010723, val mse = 0.000000.
epoch: numepochs = 43 , Took = 6 seconds; Full-batch train mse = 0.010289, val mse = 0.000000.
epoch: numepochs = 44 , Took = 6 seconds; Full-batch train mse = 0.033933, val mse = 0.000000.
epoch: numepochs = 45 , Took = 7 seconds; Full-batch train mse = 0.030156, val mse = 0.000000.
epoch: numepochs = 46 , Took = 7 seconds; Full-batch train mse = 0.022068, val mse = 0.000000.
epoch: numepochs = 47 , Took = 7 seconds; Full-batch train mse = 0.029382, val mse = 0.000000.
epoch: numepochs = 48 , Took = 6 seconds; Full-batch train mse = 0.021275, val mse = 0.000000.
epoch: numepochs = 49 , Took = 6 seconds; Full-batch train mse = 0.039427, val mse = 0.000000.
epoch: numepochs = 50 , Took = 7 seconds; Full-batch train mse = 0.016674, val mse = 0.000000.
NNerror = 0.016674267332022572
```

```
Prediction Result
Value Prediction Error
0.6048934040010798 0.19097551722554007 -0.41391788677553976
0.5917463309959767 0.35726681238891195 -0.23447951860706479
0.5798180746808277 0.19232727566724744 -0.38749079901358024
0.39808885303777447 0.1926440400752866 -0.20544481296248787
0.4140924247674261 0.19529777426853168 -0.2187946504988944
0.08847408598189055 0.19110126347316514 0.10262717749127459
0.3583460134199821 0.21170344602417424 -0.1466425673958079
0.29635258460747904 0.3549086780038481 0.05855609339636908
0.21947238532147648 0.19156569159762857 -0.02790669372384791
0.5357166982629155 0.36018248221537214 -0.17553421604754332
0.5547810234563126 0.1912501730851674 -0.36353085037114524
0.40529948654006304 0.21826323152039923 -0.1870362550196638
0.4765320387665492 0.34409113646061484 -0.13244090230593436
0.05759629179315594 0.1914373047341408 0.13384101294098488
0.25415182638221206 0.29169483353745973 0.037543007155247665
0.2731217394258585 0.19452719525740314 -0.07859454416845535
0.021103715077802527 0.19131792203441428 0.17021420695661174
0.24098254783013137 0.334302879677641 0.09332033184750962
0.6300811731076671 0.3595001582783692 -0.2705810148292979
0.41827613603130404 0.195477735057971 -0.22279840097333303
0.2526404805902617 0.1945578268820965 -0.05808265370816518
0.16619916368077442 0.191265206532793 0.025066042852018577
0.007724491831775392 0.1909446242319318 0.1832201324001564
0.08926696720959378 0.19197139383958237 0.10270442662998859
0.4822857005955674 0.19244393418394434 -0.28984176641162307
0.12166559083216193 0.19242076231047756 0.07075517147831563
0.2883494676971952 0.30939742289582284 0.02104795519862762
0.38817298742061984 0.1909921814285587 -0.19718080599206114
0.34588396966368695 0.1957690915303307 -0.15011487813335625
0.19958641570784796 0.19348928314854685 -0.0060971325593011105
0.31340425691874024 0.19828489007869293 -0.11511936684004731
0.31775749422734 0.19211592601952254 -0.12564156820781747
0.48789392695999645 0.19120722177454247 -0.296686705185454
0.4359840834351843 0.3604340050247724 -0.07555007841041189
0.17359981155470314 0.1914334455263964 0.01783363397169327
0.3629355770221922 0.2004476969345776 -0.1624878800876146
0.4627621372503198 0.2111988404691097 -0.2515632967812101
0.49652077030838826 0.19101585452942166 -0.3055049157789666
0.12618599928245963 0.19939585850613975 0.07320985922368012
0.45276204270081455 0.1924159942977412 -0.26034604840307335
0.2837721853443281 0.2016124468403725 -0.08215973850395558
0.34590164213713354 0.3601210376231753 0.014219395486041786
0.1961497656762427 0.19408639222665872 -0.0020633734495839884
0.22135763175909048 0.27616370537642354 0.054806073617333056
0.43356473411523927 0.19150317510575426 -0.242061559009485
0.09566706862199378 0.19087327269062435 0.09520620406863056
0.29830626566849494 0.19959705355592236 -0.09870921211257258
0.3070532379895792 0.34322116560057725 0.036167927610998074
0.07052673330364767 0.19118739087384276 0.12066065757019509
0.5501181200918814 0.2024015375945202 -0.34771658249736126
0.31894277127298554 0.1917670097886867 -0.12717576148429885
0.08585450906008718 0.20848620726607436 0.12263169820598718
0.20245657700014166 0.19218060734066275 -0.010275969659478912
0.1712767340967007 0.1913375355437103 0.020060801447009613
0.3779192242827297 0.2035011707996587 -0.17441805348307102
0.241909871430447 0.19089783315658176 -0.051012038273865246
0.40578032667620945 0.3561807946562045 -0.049599532020004944
0.20834390560196567 0.19103138812628986 -0.017312517475675804
0.49675932490421343 0.1915234454414188 -0.3052358794627946
0.2342257039800733 0.1920213029433058 -0.04220440103676751
0.18045883957051312 0.20420037376704497 0.023741534196531855
0.2309607430153665 0.1912620988835584 -0.0396986441318081
0.40644947116571745 0.19173032204451546 -0.214719149121202
0.11691561072493983 0.19280159115148832 0.07588598042654848
0.05696889589626215 0.19083593927270395 0.13386704337644179
0.47164532559761124 0.2506614607550888 -0.22098386484252242
0.6208470748110626 0.35822718159638256 -0.26261989321468004
0.46559040490785325 0.2083058633813562 -0.25728454152649705
0.5052214973114583 0.19867901868911944 -0.30654247862233885
0.4127229537166962 0.35982142534462497 -0.05290152837207124
0.16960925650784137 0.19135483819811452 0.021745581690273158
0.19722393334464125 0.19080547758699506 -0.006418455757646185
0.4335762052660574 0.20920751654239156 -0.22436868872366583
0.1496556423910719 0.19090570957335065 0.04125006718227875
0.3015215343928844 0.1922591754560472 -0.10926235893683722
0.0 0.19143943303505245 0.19143943303505245
0.36555981464056164 0.19189800228180368 -0.17366181235875797
0.3963164889187304 0.19451555510717428 -0.20180093381155612
0.313325868748335 0.19168776655589245 -0.12163810219244256
0.5034713123520999 0.3339326133308013 -0.16953869902129853
0.4224576693623929 0.3539965263782299 -0.06846114298416295
0.08523050351506854 0.19132247714662606 0.10609197363155752
0.26080914691197654 0.19095418139777426 -0.06985496551420228
0.1324640982588358 0.19304336020222349 0.0605792619433877
0.13055674031551295 0.19224589387242375 0.06168915355691079
0.23625412018106318 0.1917614371123628 -0.044492683068700384
0.5019570376831385 0.3081554524341633 -0.19380158524897517
0.030390738837917763 0.19083521852879112 0.16044447969087336
0.34274552561551896 0.19120112478612986 -0.1515444008293891
0.4514974655646171 0.1916124319598441 -0.259885033604773
0.531777023034474 0.3515396924867077 -0.18023733054776636
0.367772718094668 0.3317275143536775 -0.03604520374099052
0.41600472261866916 0.22278029398575255 -0.1932244286329166
0.36506543552315474 0.325628070833062 -0.039437364690092735
0.314008782918081 0.32408907795815034 0.010080295040069354
0.2925887989109779 0.1921158349811155 -0.10047296392986238
0.4658619691058588 0.2146831464338164 -0.2511788226720424
0.2280242270958607 0.19158705334902099 -0.03643717374683972
0.5003581077100195 0.19149431703681175 -0.3088637906732078
0.4448442553362914 0.19086228548828346 -0.2539819698480079
```

Weight of Layer 1

1.3741710823232989 1.0997962988037757 -2.3077515870713716 2.0946962013291297 2.24588083756021 0.7186952475525394

-1.1885813301306254 0.25046447165487246 -1.253986920617667 1.535570764339994 0.1440090623878452 1.2110656874633237

-0.23784821158321864 -0.5133767761738681 0.5355594752965599 -0.9862762256909807 2.234245108441277 -0.5216923380767392

2.0496153507146033 -0.9000455162282417 1.3406201642695788 2.1185256789014897 1.038387978643167 -0.011886136436036997

2.4017180810229086 0.5342060426581219 2.188686239727936 -0.604587031465719 0.061697537675081446 -0.48429030459304306

-1.234468451038262 0.7790398934631602 -0.22067594788975725 -2.0414139797176176 -0.9324514648411226 0.798505045375407

0.843464180836847 1.8612698144445792 2.290144904438349 1.2291878648431667 2.3639566790099784 -2.1175568466779437

2.1488480623696975 2.253851655104785 -1.879142801282798 -0.23011616258273254 2.4342506675413413 -2.184430097374211

1.3446335417651347 0.39411399422872706 -1.4588967794444714 2.6567285233270366 -0.8576819932211762 -1.9914472547514181

1.4277752508742856 0.6379599194760166 -0.3783031968398195 1.4158689111045317 1.5318358789872808 -1.201612551759085

Weight of Layer 2

-1.264966034912716 2.045363428231917 0.39087016834115496 1.0930481252787911 -1.571245354275874 -0.9655062462170442 2.1709800176902982 -1.025175316866544 -1.5230797088149843 1.769571487127593 0.2347823358786302

-0.7297761283100554 -0.7576138927387723 -0.16523415352888424 -1.8516805610014189 1.3715533800487367 1.95737270209438 0.08246852496952446 1.0190398538209786 0.38679398113645574 0.529334650423367 0.43356573369591683

-1.494114816211369 0.47441528091212 -0.4329092188524354 1.8318597886945986 1.7458297556728688 -2.0957897682487707 -0.12195567981037991 0.0378548041005975 -0.6616871154791367 -1.2988919749370011 -2.0146727073983346

-2.0369675326219587 -0.6502946004953208 -0.5031500283188425 1.2033687522496184 -0.35900710238718897 -1.213965816985491 -0.6247306519040674 -1.172636102729738 0.1492977187034359 0.7805087252939967 0.42756349073372346

1.9444610499195103 -1.684949676049153 -2.214370792742285 1.4529568002779754 -0.817314868362314 -2.0437576009396534 1.414190131329268 0.8002930022447152 0.5103985267247684 1.072725074367445 0.7728306875824079

1.3280098832755556 -0.5723175992914568 1.8965322712004982 1.9131475857947766 0.7116308703102919 -1.1337078046228692 1.4624037563264591 -1.2661436485440505 0.2074359557718086 1.7605365810734208 0.5503309132836761

-0.12889849968368497 -1.5813673816630978 0.3179522409763945 -1.4093501587164268 0.37747278027064335 2.0973248562810287 1.3796729017078182 -1.32247724141541 0.05176617793309023 0.2797968400006565 0.2649190482622152

0.3553074482253876 -1.236624823614366 -1.7608721088406603 1.0024974314351445 -2.174840973945128 -0.6578975450189226 2.301788638015837 1.1479110741419556 -0.3844810208733819 -1.757161551068665 0.005888873948249745

1.0889152043326655 -0.4907523692459491 -1.5314215158890179 -2.068119203282905 -0.6966652421295678 1.7172836960101752 -1.030902225947676 1.7894526684520087 1.5213695041770878 -2.0310793395869613 -0.23638299504893515

-0.9900330517352804 -1.0052522373970076 -0.25640336055482327 0.5747216493939299 -0.6727702236294272 -0.0968976241335074 1.828632192751469 -1.2027447051840479 1.462449341909922 1.866886724932346 0.337038328583328

Weight of Layer 3

1.836452591890687 -1.1016514469136454 1.9551193591759655 -1.5732459452330396 -1.1800834414335224 -1.1923893040285825 0.06312897816177482 1.457981772832194 1.7288840730921886 1.0295473067747805 -0.6835578234742354

-1.281488825782123 1.9855453373804615 -0.21337154884305315 -0.8204219180246067 -0.8260103421573441 -1.3974890573670238 -0.18789338539658415 1.5852967650612315 -0.9475186470859063 -1.0100358806860719 1.0069697324461917

0.7336707009234793 -0.8190101361063539 -1.9821987263863545 -2.0789438096783956 -1.1812929830305807 -0.12007384037833814 -1.8876632918570322 -0.8968914717818263 1.3471019678011835 -0.152196929664138 -0.9273968120578239

-0.5427506117781439 -1.6379161635634556 1.6603536697042776 -0.2826332939784261 -1.7089295991871511 -0.3086776080327076 -0.838637438570992 0.45911079203796323 0.26754055606009786 -1.8482009521236713 -0.23130366979329475

-0.37585662596248814 0.4900765346436331 0.6860109049091877 -0.9572551404136589 0.30380993421860114 -1.73380834254428 -0.9187544507012596 -2.143513804630084 1.9614638862521159 0.513675117374747 1.7364284348955028

1.4300160181482728 -0.028644837063659466 0.9434705098905873 -1.256117787020064 -2.286119449246773 0.8392807137479158 1.0463524997789728 0.22524746294136241 -2.065346398428731 -1.3984782782329925 0.8064849904915904

-0.020036943079112954 1.3571828713761132 1.8168106691174883 1.1732287314521193 -1.1216050426635968 -0.7992471983374778 1.4737000544885857 -1.2629467521609772 -2.166720398969067 -0.13444189253030867 -0.06990910534870051

-1.1475378284991893 0.7939976065553747 -0.6034315465733754 1.2609824176884306 -1.0556544940783124 -1.2600392532679041 0.20515032057156965 -0.9368115470081553 1.8486189613353239 -1.748953620555969 0.5962310435917523

-1.6677307685118707 1.6475809798433472 0.8635630357973535 -1.794106867032129 0.23576724690928239 1.9345586718254242 1.8665220736111652 1.715231095424259 1.4448153663136947 1.4585220061056003 -2.097657471713302

0.8605510379359859 0.48221398085417627 1.5176826373865975 1.4652552486449435 1.9094578768378816 1.1144707218150902 0.4891850304975143 -1.6217012198757983 -0.26648664353939455 -0.702859436768092 1.0351022938433367

Weight of Layer 4

-0.911096181634767 1.393613461284606 -0.9330396913369214 1.2364172665419595 0.6539246160388238 -0.3497594712315348 -0.5272926339150141 1.0073562856426537 -0.7754136627565675 0.3978221698601255 0.6516969484538829

-2.1313935565863322 0.6870222803829747 -0.14063545929924332 -1.4601798330750633 -0.5046337368523596 0.45490880803140493 -0.3469665791558201 -1.1242340473155688 -1.2723993607026778 0.7754297084802044 -0.1971882092105515

1.268461391063688 1.433232422744769 -1.9734642121928314 -2.1500226866603094 1.69187327087415 -0.5342565995220732 -1.8236939987229444 -0.9248308295424809 -1.0585137171567485 -0.758573013381397 -1.4786474341672335

-1.3004208980147893 -1.4206386974340788 -1.83226719079787 1.853287649173723 -1.5346566916523345 0.18937676571029147 -2.14876104739136 0.19300765463829608 2.2600431704632133 -0.7439149441396848 -0.7944037931661944

-1.5998484896496197 -1.6524100951400051 1.5947517659570918 -1.8662327423253926 1.2338013894973758 -0.4884517327249603 -1.933617967710128 -0.16207327398832927 -0.257515806279318 0.8147979296307275 1.3995976816521387

2.094656544970861 -0.9941271469702547 1.6445550567163796 -1.8006734384410419 -1.639945317843356 -0.04008156577686328 -1.5983003403214098 -0.5203002571128433 1.7875581773282712 -1.094555900585581 1.2068765635018603

2.1254252250165493 -0.32295734337741594 -1.3909813447797978 -1.7675398403329456 1.6196489153696365 -0.11330208718956442 1.2249494342821745 -0.9623282852038381 0.5949871990921731 -0.4589253834864904 -1.4480879152384845

0.41124565810833846 1.8266387733496516 -1.3444906678350586 -1.8466017258629779 -1.2710822759712612 -2.3672244544192775 0.07985138355524748 1.1928505759997003 0.1224150977985233 1.581079240096574 -1.800780284328034

-1.7595274297258634 1.2703227203108252 -0.01664878904783711 -1.9795940886734285 -0.020392614773247077 -1.4314141516158432 -1.365137154825855 1.7302923882870662 1.9823859978980145 0.35232814451148275 0.7343215278791788

1.394419951767305 1.2499547753082167 -1.549641575886935 1.848772251833023 0.7361855983730334 0.6310928126046181 -0.8813463668969193 0.004308048034432659 0.43321638933450207 0.8087966273251945 0.07110982269880414

Weight of Layer 5

0.03585680860332078 0.6094562888567525 1.7968463081634347 -2.362627715032082 2.2247821225164652 -1.0784288939947677 -1.0554498524378448 0.5861411371363056 -1.3689382763820177 -1.6272403702710627 -1.6503549168843508

1.6301987233118784 -0.8906914047579433 0.24243168770601023 0.4009515829010596 -1.0758568810272509 -0.9051235220484805 -2.2014662058001826 -0.6016562255004753 -1.54618907737507 0.3068858681819345 -0.1910939351954038

0.3949377293208745 -0.7652271919004981 0.68090033725971 1.2348269143656605 2.197797374061151 0.7456474083783481 2.177221715481275 0.577448303135589 0.6713302440403498 -1.422241156275792 0.3923309252775803

2.016732930847877 0.9072966194060744 -1.173786606850799 0.762874680679405 0.5785929523331224 -0.2517270024395546 2.0525009570621475 1.3299906196120428 -0.3016789963343337 1.6433222480576049 1.4658027315448252

-0.7093708542273217 -0.363654080609567 0.14670807608588535 1.2229445344521144 2.0365363421196743 -0.025435674952313806 1.1537986276254326 -1.2324297702242806 0.7761473466711113 0.8799485068219668 -0.5873930067918902

0.3139747558740749 -1.6573144697633098 0.7038102951541025 -1.408818539999227 2.097157257533922 -0.7036366036514073 0.4160486916023455 0.8262172799352652 -1.4795358417499833 0.2852056896956041 -1.542169273346858

-1.3692080951184145 -0.18564381183893783 1.4773933766691916 1.566187371091047 2.2303361972935196 1.6867117590655034 -2.2562477027382437 -0.7074433661402227 0.31697668962943404 -0.7387985710568243 -1.1533917617505614

-1.2255743400186403 -1.0127411060516087 -1.3756205847124954 1.930937272879095 0.5512007768312437 -1.8860525843110458 1.83432047092595 1.7499230942835498 -0.05470238124314854 0.1415405841710963 0.5347734456158572

0.8685622790833061 1.0117880568953437 -1.0680283034993974 -0.6423950104042628 2.0957313900176207 -0.3292735667051877 0.4115339082100468 0.2448817017887727 -0.36690487429065827 1.0946609803320706 -1.2972731428065445

0.929738769296319 -0.8483315095032794 0.9886368647914796 -1.1490945738647198 0.48817906098502184 1.201937687948849 -1.8405795878382836 1.6127096671527423 -2.0480015245423417 -0.9757299992342688 0.5211781810863436

Weight of Layer 6

-1.1548299180301753 -1.6001306147116388 -2.387282014077577 -1.082677370520492 -0.013943138965433734 0.10533899958501511 0.5742412321742517 -1.5014155245560539 0.8057997937824102 1.8479652037781695 -0.23508934192649694
