Course 5, Week 4 Assignment: Transformers Architecture with TensorFlow

Transformer Network

Welcome to Week 4's assignment, the last assignment of Course 5 of the Deep Learning Specialization! And congratulations on making it to the last assignment of the entire Deep Learning Specialization - you're almost done!

Earlier in the course, you implemented sequential neural networks such as RNNs, GRUs, and LSTMs. In this notebook you'll explore the Transformer architecture, a neural network that takes advantage of parallel processing and allows you to substantially speed up the training process.

After this assignment you'll be able to:

- Create positional encodings to capture sequential relationships in data

- Calculate scaled dot-product self-attention with word embeddings

- Implement masked multi-head attention

- Build and train a Transformer model

For the last time, let's get started!

Packages

Run the following cell to load the packages you'll need.

import tensorflow as tf

import pandas as pd

import time

import numpy as np

import matplotlib.pyplot as plt

from tensorflow.keras.layers import Embedding, MultiHeadAttention, Dense, Input, Dropout, LayerNormalization

from transformers import DistilBertTokenizerFast #, TFDistilBertModel

from transformers import TFDistilBertForTokenClassification

from tqdm import tqdm_notebook as tqdm

1 - Positional Encoding

In sequence-to-sequence tasks, the relative order of your data is extremely important to its meaning. When you were training sequential neural networks such as RNNs, you fed your inputs into the network in order, so information about the order of your data was automatically available to your model. However, when you train a Transformer network using multi-head attention, you feed your data into the model all at once. This dramatically reduces training time, but the model no longer receives any information about the order of your data. This is where positional encoding is useful: you can explicitly encode the positions of your inputs and pass them into the network using these sine and cosine formulas:

$$
PE_{(pos, 2i)}= sin\left(\frac{pos}{{10000}^{\frac{2i}{d}}}\right) \tag{1}
$$

$$
PE_{(pos, 2i+1)}= cos\left(\frac{pos}{{10000}^{\frac{2i}{d}}}\right) \tag{2}
$$

- \(d\) is the dimension of the word embedding and positional encoding

- \(pos\) is the position of the word.

- \(i\) indexes the pairs of dimensions of the positional encoding. Dimensions \(2i\) (sine) and \(2i+1\) (cosine) share the same frequency.

To develop some intuition about positional encodings, you can think of them broadly as a feature that contains information about the relative positions of words. The sum of the positional encoding and the word embedding is ultimately what is fed into the model. If you simply hard-coded the positions, say by adding a matrix of 1's or whole numbers to the word embedding, the semantic meaning would be distorted. By contrast, the values of the sine and cosine equations are small (between -1 and 1). Adding the positional encoding therefore does not significantly distort the word embedding. Instead, it enriches the embedding with positional information. Using a combination of these two equations helps your Transformer network attend to the relative positions of your input data. This was a short discussion of positional encodings; to develop further intuition, check out the Positional Encoding Ungraded Lab.
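To make the two formulas concrete, here is a minimal NumPy sketch that evaluates them element by element for a single position and checks the value range described above. The helper name `positional_encoding_vector` is hypothetical and assumes an even `d`. It is a deliberately slow loop written for readability, not the vectorized version built in the exercises.

```python
import numpy as np

def positional_encoding_vector(pos, d):
    """Evaluate the sine/cosine formulas directly for a single position.

    Hypothetical helper for illustration; assumes d is even.
    """
    pe = np.zeros(d)
    for i in range(d // 2):
        angle = pos / 10000 ** (2 * i / d)  # inner term shared by the pair
        pe[2 * i] = np.sin(angle)           # even dimension 2i: sine
        pe[2 * i + 1] = np.cos(angle)       # odd dimension 2i+1: cosine
    return pe

# Stack the encodings for 50 positions with d = 16
pe = np.stack([positional_encoding_vector(p, 16) for p in range(50)])

# Every value lies in [-1, 1], so adding pe to an embedding is a bounded shift
print(pe.shape, pe.min() >= -1.0, pe.max() <= 1.0)
print(positional_encoding_vector(0, 4))  # [0. 1. 0. 1.]
```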

Note: In the lectures Andrew uses vertical vectors, but in this assignment all vectors are horizontal. All matrix multiplications should be adjusted accordingly.

1.1 - Sine and Cosine Angles

Notice that even though the sine and cosine positional encoding equations take in different arguments (2i versus 2i+1, or even versus odd numbers) the inner terms for both equations are the same: $$\theta(pos, i, d) = \frac{pos}{10000^{\frac{2i}{d}}} \tag{3}$$

Consider the inner term as you calculate the positional encoding for a word in a sequence.

\(PE_{(pos, 0)}= sin\left(\frac{pos}{{10000}^{\frac{0}{d}}}\right)\), since solving 2i = 0 gives i = 0

\(PE_{(pos, 1)}= cos\left(\frac{pos}{{10000}^{\frac{0}{d}}}\right)\), since solving 2i + 1 = 1 gives i = 0

The angle is the same for both! The angles for \(PE_{(pos, 2)}\) and \(PE_{(pos, 3)}\) are the same as well: for both, i = 1, so the inner term is \(\left(\frac{pos}{{10000}^{\frac{2}{d}}}\right)\). This relationship holds true for all paired sine and cosine curves:

| k | 0 | 1 | 2 | 3 | ... | d - 2 | d - 1 |
|---|---|---|---|---|---|---|---|
| encoding(0) = | [\(sin(\theta(0, 0, d))\) | \(cos(\theta(0, 0, d))\) | \(sin(\theta(0, 1, d))\) | \(cos(\theta(0, 1, d))\) | ... | \(sin(\theta(0, d/2 - 1, d))\) | \(cos(\theta(0, d/2 - 1, d))\)] |
| encoding(1) = | [\(sin(\theta(1, 0, d))\) | \(cos(\theta(1, 0, d))\) | \(sin(\theta(1, 1, d))\) | \(cos(\theta(1, 1, d))\) | ... | \(sin(\theta(1, d/2 - 1, d))\) | \(cos(\theta(1, d/2 - 1, d))\)] |
| ... | | | | | | | |
| encoding(pos) = | [\(sin(\theta(pos, 0, d))\) | \(cos(\theta(pos, 0, d))\) | \(sin(\theta(pos, 1, d))\) | \(cos(\theta(pos, 1, d))\) | ... | \(sin(\theta(pos, d/2 - 1, d))\) | \(cos(\theta(pos, d/2 - 1, d))\)] |
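The pairing in the table can be checked numerically. The following NumPy sketch builds the angle matrix of equation (3) with i = k // 2 for a small, arbitrarily chosen d = 8. It then confirms that columns 2i and 2i+1 hold identical angles.

```python
import numpy as np

d = 8
k = np.arange(d)                          # dimension index 0..d-1
i = k // 2                                # pair index: [0, 0, 1, 1, 2, 2, 3, 3]
pos = np.arange(4)[:, np.newaxis]         # positions as a column vector
theta = pos / np.power(10000, 2 * i / d)  # equation (3), shape (4, d)

# Columns 2i and 2i+1 hold the same angle, as the table shows
print(np.array_equal(theta[:, 0::2], theta[:, 1::2]))  # True
```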

Exercise 1 - get_angles

Implement the function get_angles() to calculate the possible angles for the sine and cosine positional encodings

Hints

- If k = [0, 1, 2, 3, 4, 5], then i must be i = [0, 0, 1, 1, 2, 2], that is, i = k//2

# UNQ_C1 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)

# GRADED FUNCTION get_angles

def get_angles(pos, k, d):
    """
    Get the angles for the positional encoding

    Arguments:
        pos -- Column vector containing the positions [[0], [1], ...,[N-1]]
        k -- Row vector containing the dimension span [[0, 1, 2, ..., d-1]]
        d(integer) -- Encoding size

    Returns:
        angles -- (pos, d) numpy array
    """
    # START CODE HERE
    # Get i from dimension span k
    i = k // 2
    # Calculate the angles using pos, i and d
    angles = pos / (np.power(10000, 2 * i / d))
    # END CODE HERE

    return angles

Then run the unit test:

from public_tests import *

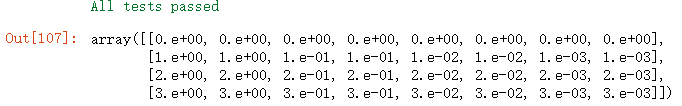

get_angles_test(get_angles)

# Example

position = 4

d_model = 8

pos_m = np.arange(position)[:, np.newaxis]

dims = np.arange(d_model)[np.newaxis, :]

get_angles(pos_m, dims, d_model)

1.2 - Sine and Cosine Positional Encodings

Now you can use the angles you computed to calculate the sine and cosine positional encodings.

$$
PE_{(pos, 2i)}= sin\left(\frac{pos}{{10000}^{\frac{2i}{d}}}\right)
$$

$$
PE_{(pos, 2i+1)}= cos\left(\frac{pos}{{10000}^{\frac{2i}{d}}}\right)
$$

Exercise 2 - positional_encoding

Implement the function positional_encoding() to calculate the sine and cosine positional encodings

Reminder: Use the sine equation when the dimension index \(k\) is even and the cosine equation when \(k\) is odd. Both use the same angle, with \(i = k//2\).

Additional Hints

- You may find np.newaxis useful depending on the implementation you choose.

# UNQ_C2 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)

# GRADED FUNCTION positional_encoding

def positional_encoding(positions, d):
    """
    Precomputes a matrix with all the positional encodings

    Arguments:
        positions (int) -- Maximum number of positions to be encoded (the number of words to encode)
        d (int) -- Encoding size (the dimension of each encoding)

    Returns:
        pos_encoding -- (1, positions, d) A matrix with the positional encodings
    """
    # START CODE HERE
    # initialize a matrix angle_rads of all the angles
    angle_rads = get_angles(np.arange(positions)[:, np.newaxis],  # column vector of positions 0..positions-1
                            np.arange(d)[np.newaxis, :],          # likewise, a row vector of dimensions 0..d-1
                            d)

    # apply sin to even indices in the array; 2i
    # slice syntax is seq[start:end:step], i.e. start at 0 with step 2;
    # the shapes on both sides of the assignment must match
    angle_rads[:, 0::2] = np.sin(angle_rads[:, 0::2])

    # apply cos to odd indices in the array; 2i+1
    angle_rads[:, 1::2] = np.cos(angle_rads[:, 1::2])
    # END CODE HERE

    pos_encoding = angle_rads[np.newaxis, ...]

    return tf.cast(pos_encoding, dtype=tf.float32)

Then run the unit test:

# UNIT TEST

positional_encoding_test(positional_encoding, get_angles)

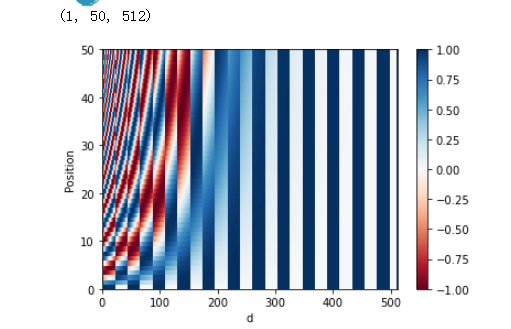

Nice work calculating the positional encodings! Now you can visualize them.

pos_encoding = positional_encoding(50, 512)

print (pos_encoding.shape)

plt.pcolormesh(pos_encoding[0], cmap='RdBu')

plt.xlabel('d')

plt.xlim((0, 512))

plt.ylabel('Position')

plt.colorbar()

plt.show()

Each row represents a positional encoding - notice how none of the rows are identical! You have created a unique positional encoding for each of the words.
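The uniqueness of the rows can also be checked numerically, along with a second property the heat map hints at. For sinusoidal encodings, the dot product of two encodings depends only on the offset between the positions, because sin(a)sin(b) + cos(a)cos(b) = cos(a - b) for each frequency pair. This is a NumPy-only sketch. The helper `sinusoidal_pe` is hypothetical and mirrors `positional_encoding()` without the TensorFlow cast.

```python
import numpy as np

def sinusoidal_pe(positions, d):
    # Same construction as positional_encoding(), kept in NumPy
    k = np.arange(d)[np.newaxis, :]
    pos = np.arange(positions)[:, np.newaxis]
    angles = pos / np.power(10000, 2 * (k // 2) / d)
    angles[:, 0::2] = np.sin(angles[:, 0::2])
    angles[:, 1::2] = np.cos(angles[:, 1::2])
    return angles

pe = sinusoidal_pe(50, 512)

# No two rows are identical
print(len(np.unique(pe.round(8), axis=0)) == pe.shape[0])  # True

# Dot products depend only on the offset: positions (3, 10) and (20, 27) are both 7 apart
print(np.isclose(pe[3] @ pe[10], pe[20] @ pe[27]))  # True
```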

2 - Masking

There are two types of masks that are useful when building your Transformer network: the padding mask and the look-ahead mask. Both help the softmax computation give the appropriate weights to the words in your input sentence.

2.1 - Padding Mask

Oftentimes your input sequence will exceed the maximum length of a sequence your network can process. Suppose the maximum length your model accepts is five, and it is fed the following sequences:

[["Do", "you", "know", "when", "Jane", "is", "going", "to", "visit", "Africa"],

["Jane", "visits", "Africa", "in", "September" ],

["Exciting", "!"]

]

which might get vectorized as:

[[ 71, 121, 4, 56, 99, 2344, 345, 1284, 15],

[ 56, 1285, 15, 181, 545],

[ 87, 600]

]

When passing sequences into a transformer model, it is important that they are of uniform length. You can achieve this by padding the sequence with zeros, and truncating sentences that exceed the maximum length of your model:

[[ 71, 121, 4, 56, 99],

[ 2344, 345, 1284, 15, 0],

[ 56, 1285, 15, 181, 545],

[ 87, 600, 0, 0, 0],

]
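The padded layout above can be reproduced with a short pure-Python sketch. The helper `chunk_and_pad` is hypothetical. It follows the example, where overflow tokens start a new row instead of being discarded. Many pipelines instead simply drop the overflow, so treat this as one reading of the example rather than the assignment's preprocessing code.

```python
def chunk_and_pad(sequences, max_len, pad_id=0):
    """Split each token-id list into rows of length max_len, zero-padding the last row."""
    rows = []
    for seq in sequences:
        for start in range(0, len(seq), max_len):
            chunk = seq[start:start + max_len]
            rows.append(chunk + [pad_id] * (max_len - len(chunk)))
    return rows

batch = [[71, 121, 4, 56, 99, 2344, 345, 1284, 15],
         [56, 1285, 15, 181, 545],
         [87, 600]]
for row in chunk_and_pad(batch, max_len=5):
    print(row)
```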

Sequences longer than the maximum length of five will be truncated, and zeros will be added to the truncated sequence to achieve uniform length. Similarly, zeros will be added as padding to sequences shorter than the maximum length. However, these zeros will affect the softmax calculation, and this is where a padding mask comes in handy! You will need to define a boolean mask that specifies which elements you must attend to (1) and which elements you must ignore (0). Later you will use that mask to set all the zeros in the sequence to a value close to negative infinity (-1e9). We'll implement this for you so you can get to the fun of building the Transformer network! Just make sure you go through the code so you can correctly implement padding when building your model.

After masking, your input should go from [87, 600, 0, 0, 0] to [87, 600, -1e9, -1e9, -1e9], so that when you take the softmax, the zeros don't affect the score.

The MultiHeadAttention layer implemented in Keras uses this masking logic.
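The effect of the -1e9 trick can be seen in a small NumPy sketch with a hand-written softmax (not the Keras implementation). For illustration only, the mask is derived from the values themselves. In a real model it is built from the token ids, while the scores are attention logits.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

scores = np.array([[7., 6., 0., 0., 1.],
                   [1., 2., 3., 0., 0.]])
mask = (scores != 0).astype(np.float32)   # 1 = attend, 0 = ignore (padding)

plain = softmax(scores)                            # padding still gets some weight
masked = softmax(scores + (1.0 - mask) * -1.0e9)   # padding weight becomes ~0
print(plain.round(3))
print(masked.round(3))
```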

def create_padding_mask(decoder_token_ids):
    """
    Creates a matrix mask for the padding cells

    Arguments:
        decoder_token_ids -- (n, m) matrix

    Returns:
        mask -- (n, 1, m) binary tensor: 1 for real tokens, 0 for padding
    """
    seq = 1 - tf.cast(tf.math.equal(decoder_token_ids, 0), tf.float32)

    # add extra dimensions to add the padding
    # to the attention logits.
    return seq[:, tf.newaxis, :]

Then try it on a small example:

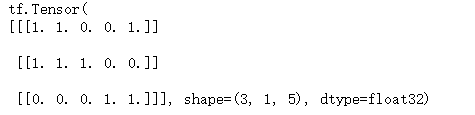

x = tf.constant([[7., 6., 0., 0., 1.], [1., 2., 3., 0., 0.], [0., 0., 0., 4., 5.]])

print(create_padding_mask(x))  # non-zero positions are marked 1, zero (padding) positions are marked 0

If we multiply (1 - mask) by -1e9 and add it to the sample input sequences, the zeros are essentially set to negative infinity. Notice the difference when taking the softmax of the original sequence and the masked sequence:

print(tf.keras.activations.softmax(x))

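print(tf.keras.activations.softmax(x + (1 - create_padding_mask(x)) * -1.0e9))  # (1 - mask) * -1e9 turns each padding position into a huge negative number

# Overall: the zero entries of x become a huge negative number, while the non-zero entries are essentially unchanged.
# Note: the mask has shape (3, 1, 5), so the sum broadcasts to (3, 3, 5); entry [i, i] is row i masked by its own padding.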

2.2 - Look-ahead Mask

The look-ahead mask follows similar intuition. In training, you will have access to the complete correct output of your training example. The look-ahead mask helps your model pretend that it correctly predicted a part of the output and see if, without looking ahead, it can correctly predict the next output.

For example, if the expected correct output is [1, 2, 3] and you wanted to see if given that the model correctly predicted the first value it could predict the second value, you would mask out the second and third values. So you would input the masked sequence [1, -1e9, -1e9] and see if it could generate [1, 2, -1e9].
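A look-ahead mask is a lower-triangular matrix of ones. Row t allows attention to positions 0..t only. NumPy's `np.tril` produces this pattern, the same one that `tf.linalg.band_part(ones, -1, 0)` keeps. The following is a small illustrative sketch.

```python
import numpy as np

seq_len = 4
# Lower-triangular ones: query position t may attend to key positions 0..t only
look_ahead = np.tril(np.ones((seq_len, seq_len)))
print(look_ahead)

# Each query position t sees exactly t + 1 keys
print(look_ahead.sum(axis=1))  # [1. 2. 3. 4.]
```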

Just because you've worked so hard, we'll also implement this mask for you. Again, take a close look at the code so you can effectively implement it later.

def create_look_ahead_mask(sequence_length):
    """
    Returns a lower triangular matrix filled with ones

    Arguments:
        sequence_length -- matrix size

    Returns:
        mask -- (1, size, size) tensor
    """
    mask = tf.linalg.band_part(tf.ones((1, sequence_length, sequence_length)), -1, 0)
    return mask

Then try it out:

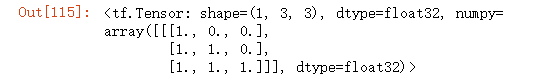

x = tf.random.uniform((1, 3))

temp = create_look_ahead_mask(x.shape[1])

temp

3 - Self-Attention

As the authors of the Transformers paper state, "Attention is All You Need".

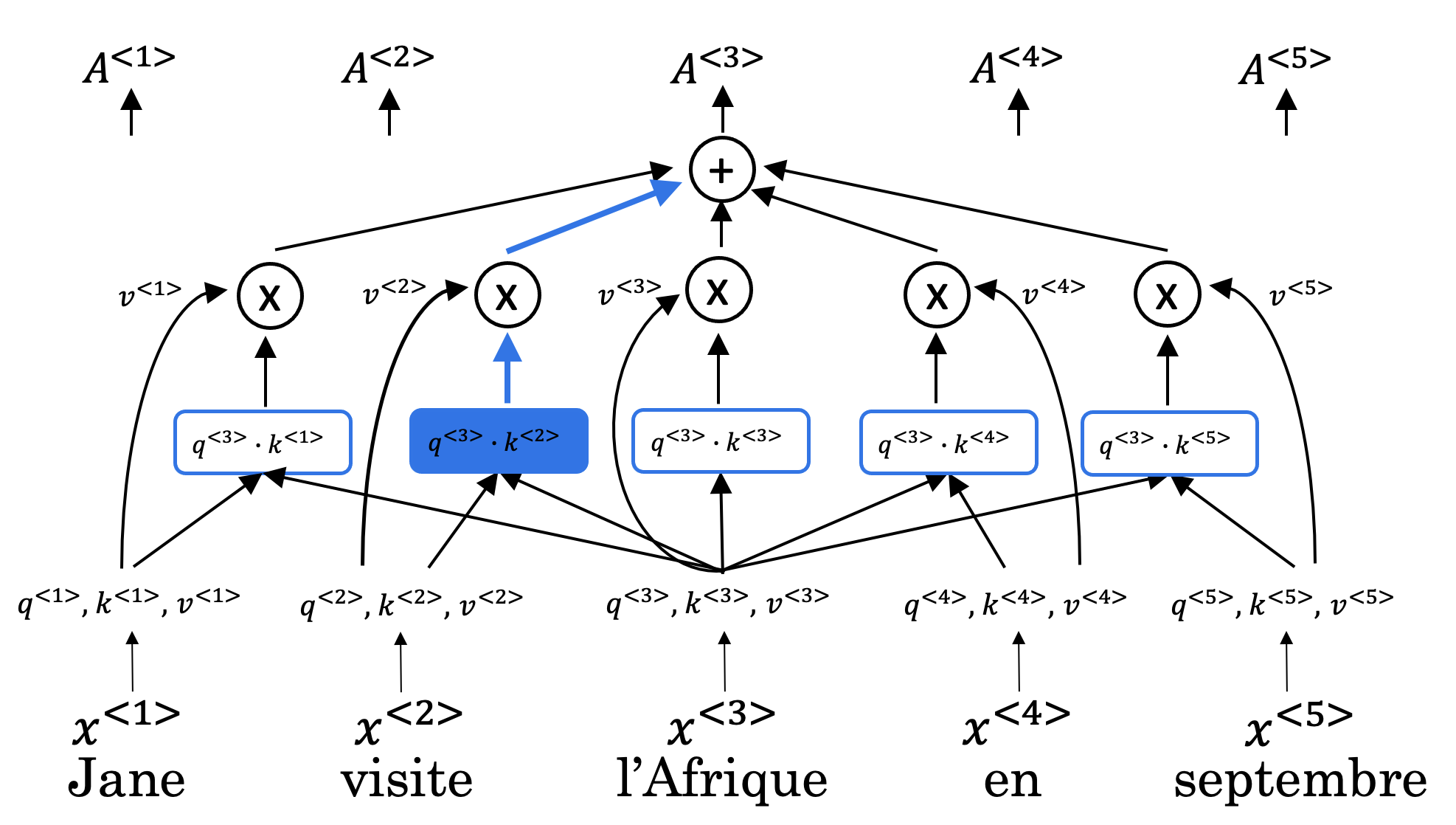

Figure 1: Self-Attention calculation visualization

The use of self-attention paired with traditional convolutional networks allows for parallelization, which speeds up training. You will implement scaled dot-product attention, which takes in a query, key, value, and a mask as inputs to return rich, attention-based vector representations of the words in your sequence. This type of self-attention can be mathematically expressed as:

$$
\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}} + M\right) V \tag{4}
$$

- \(Q\) is the matrix of queries

- \(K\) is the matrix of keys

- \(V\) is the matrix of values

- \(M\) is the optional mask you choose to apply

- \({d_k}\) is the dimension of the keys, which is used to scale everything down so the softmax doesn't explode
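The following NumPy sketch implements the attention formula above. It shows the shapes involved and that \(d_k\) is read from the last axis of the keys. The function name `attention_np` is hypothetical, and the random inputs are arbitrary. As in the exercise, the mask uses 1 for "attend" and 0 for "ignore", and it becomes the additive \(M\) through (1 - mask) * -1e9.

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def attention_np(q, k, v, mask=None):
    """softmax(Q K^T / sqrt(d_k) + M) V, with M built from a 1/0 mask."""
    d_k = k.shape[-1]                             # depth of the keys: the LAST axis
    logits = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)
    if mask is not None:
        logits = logits + (1.0 - mask) * -1e9     # 0 in mask -> roughly -infinity
    weights = softmax(logits, axis=-1)            # normalize over the keys
    return weights @ v, weights

rng = np.random.default_rng(0)
q = rng.normal(size=(3, 4))   # 3 queries, depth 4
k = rng.normal(size=(5, 4))   # 5 keys, depth 4
v = rng.normal(size=(5, 2))   # 5 values, depth_v 2
out, w = attention_np(q, k, v, mask=np.array([1., 1., 1., 0., 0.]))
print(out.shape, w.shape)     # (3, 2) (3, 5)
```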

Exercise 3 - scaled_dot_product_attention

Implement the function `scaled_dot_product_attention()` to create attention-based representations

Reminder: The boolean mask parameter can be passed in as None, or as either a padding or a look-ahead mask.

Multiply (1. - mask) by -1e9 before applying the softmax.

Additional Hints

- You may find tf.matmul useful for matrix multiplication.

# UNQ_C3 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)

# GRADED FUNCTION scaled_dot_product_attention

def scaled_dot_product_attention(q, k, v, mask):
    """
    Calculate the attention weights.
      q, k, v must have matching leading dimensions.
      k, v must have matching penultimate dimension, i.e.: seq_len_k = seq_len_v.
      The mask has different shapes depending on its type(padding or look ahead)
      but it must be broadcastable for addition.

    Arguments:
        q -- query shape == (..., seq_len_q, depth)
        k -- key shape == (..., seq_len_k, depth)
        v -- value shape == (..., seq_len_v, depth_v)
        mask: Float tensor with shape broadcastable
              to (..., seq_len_q, seq_len_k). Defaults to None.

    Returns:
        output -- attention output, shape (..., seq_len_q, depth_v)
        attention_weights -- shape (..., seq_len_q, seq_len_k)
    """
    # START CODE HERE
    matmul_qk = tf.matmul(q, k, transpose_b=True)  # (..., seq_len_q, seq_len_k)

    # scale matmul_qk
    # d_k is the depth of the keys, i.e. the LAST axis of k. k.shape[1] only happens
    # to work when k is 2-D and square (e.g. a (4, 4) test input).
    # Cast to float32 so that the division below does not raise a dtype error.
    dk = tf.cast(tf.shape(k)[-1], tf.float32)
    scaled_attention_logits = matmul_qk / tf.math.sqrt(dk)

    # add the mask to the scaled tensor.
    if mask is not None:  # Don't replace this None
        scaled_attention_logits += (1. - mask) * -1e9

    # softmax is normalized on the last axis (seq_len_k) so that the scores
    # add up to 1.
    attention_weights = tf.keras.activations.softmax(scaled_attention_logits, axis=-1)  # (..., seq_len_q, seq_len_k)

    output = tf.matmul(attention_weights, v)  # (..., seq_len_q, depth_v)
    # END CODE HERE

    return output, attention_weights

Then run the unit test:

# UNIT TEST

scaled_dot_product_attention_test(scaled_dot_product_attention)

Excellent work! You can now implement self-attention. With that, you can start building the encoder block!

4 - Encoder

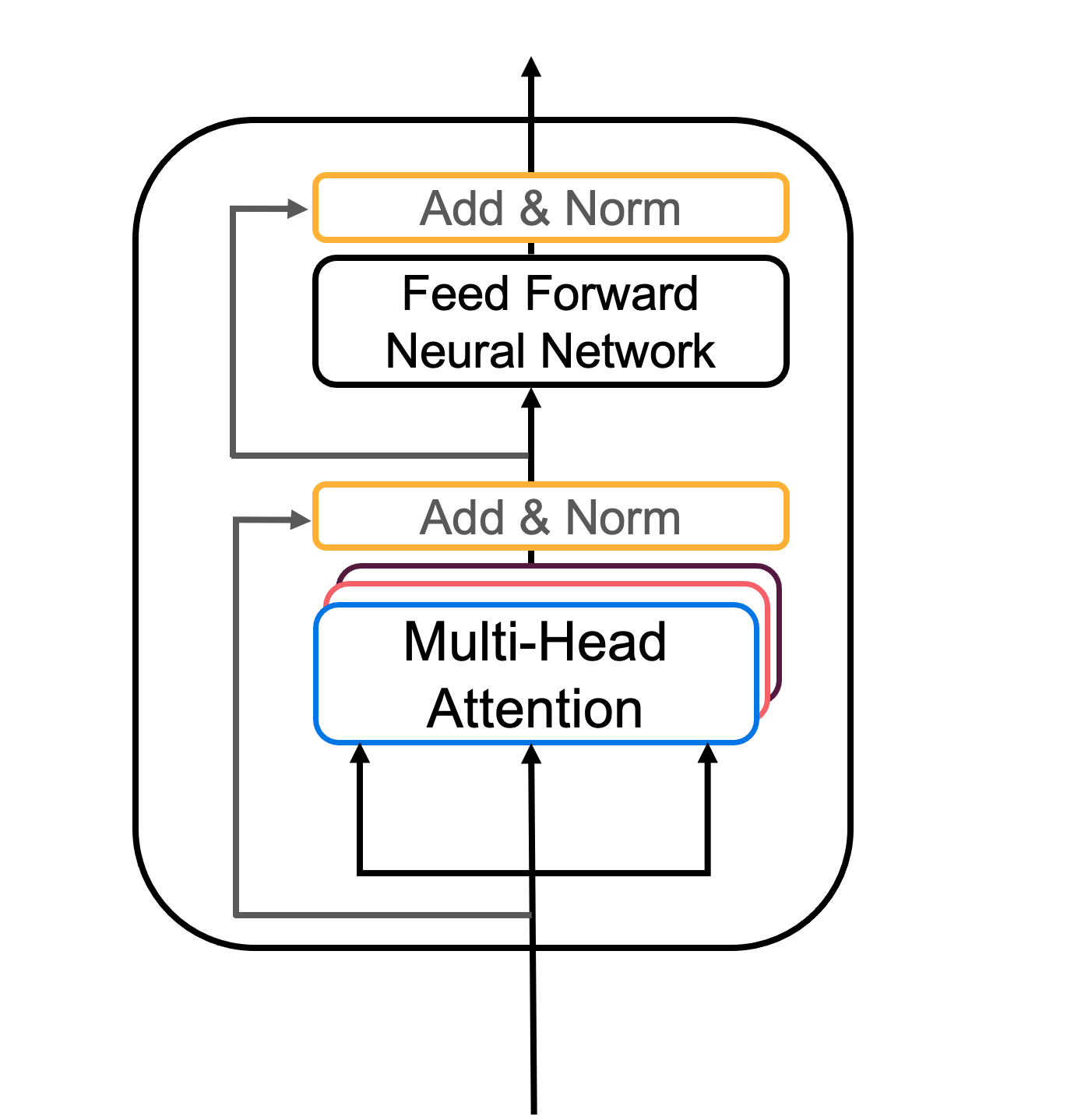

The Transformer Encoder layer pairs self-attention and convolutional neural network style of processing to improve the speed of training and passes K and V matrices to the Decoder, which you'll build later in the assignment. In this section of the assignment, you will implement the Encoder by pairing multi-head attention and a feed forward neural network (Figure 2a).

Figure 2a: Transformer encoder layer

- `MultiHeadAttention` you can think of as computing the self-attention several times to detect different features.
- Feed forward neural network contains two Dense layers which we'll implement as the function `FullyConnected`.

Your input sentence first passes through a multi-head attention layer, where the encoder looks at other words in the input sentence as it encodes a specific word. The outputs of the multi-head attention layer are then fed to a feed forward neural network. The exact same feed forward network is independently applied to each position.
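"Independently applied to each position" means that running the feed forward network on the whole sequence at once gives the same result as running it row by row. The following NumPy sketch checks this. The weights are random stand-ins for the two Dense layers (ReLU, then linear), and the sizes are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(1)
seq_len, d_model, d_ff = 6, 8, 32
x = rng.normal(size=(seq_len, d_model))

# Random stand-in weights for the two Dense layers
w1, b1 = rng.normal(size=(d_model, d_ff)), np.zeros(d_ff)
w2, b2 = rng.normal(size=(d_ff, d_model)), np.zeros(d_model)

def ffn(h):
    # Dense(d_ff, relu) followed by Dense(d_model)
    return np.maximum(0, h @ w1 + b1) @ w2 + b2

all_at_once = ffn(x)                                          # whole sequence
per_position = np.stack([ffn(x[t]) for t in range(seq_len)])  # one position at a time
print(np.allclose(all_at_once, per_position))  # True
```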

- For the `MultiHeadAttention` layer, you will use the Keras implementation. If you're curious about how to split the query matrix Q, key matrix K, and value matrix V into different heads, you can look through the implementation.
- You will also use the Sequential API with two dense layers to build the feed forward neural network layers.
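Many from-scratch implementations split a (batch, seq_len, d_model) tensor into heads with a reshape plus a transpose. The following NumPy sketch shows that idea. It is not necessarily what Keras does internally. The Keras layer projects Q, K and V to `num_heads` × `key_dim` with its own learned weights, which is why the layers here pass `key_dim=embedding_dim`. The helpers `split_heads` and `merge_heads` are hypothetical.

```python
import numpy as np

batch, seq_len, d_model, num_heads = 2, 5, 12, 3
depth = d_model // num_heads  # per-head depth; assumes d_model divides evenly
x = np.arange(batch * seq_len * d_model, dtype=float).reshape(batch, seq_len, d_model)

def split_heads(t):
    # (batch, seq_len, d_model) -> (batch, num_heads, seq_len, depth)
    t = t.reshape(batch, seq_len, num_heads, depth)
    return t.transpose(0, 2, 1, 3)

def merge_heads(t):
    # (batch, num_heads, seq_len, depth) -> (batch, seq_len, d_model)
    return t.transpose(0, 2, 1, 3).reshape(batch, seq_len, d_model)

heads = split_heads(x)
print(heads.shape)                            # (2, 3, 5, 4)
print(np.array_equal(merge_heads(heads), x))  # True
```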

def FullyConnected(embedding_dim, fully_connected_dim):
    return tf.keras.Sequential([
        tf.keras.layers.Dense(fully_connected_dim, activation='relu'),  # (batch_size, seq_len, dff)
        tf.keras.layers.Dense(embedding_dim)  # (batch_size, seq_len, d_model)
    ])

4.1 Encoder Layer

Now you can pair multi-head attention and feed forward neural network together in an encoder layer! You will also use residual connections and layer normalization to help speed up training (Figure 2a).
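Each residual connection plus layer normalization step computes LayerNorm(x + sublayer(x)). In this computation, each position's feature vector is normalized to zero mean and unit variance. The following NumPy sketch shows the step and omits the learnable scale and offset that Keras' `LayerNormalization` also applies. The `layer_norm` helper and the random inputs are illustrative only.

```python
import numpy as np

def layer_norm(h, eps=1e-6):
    # Normalize each position's feature vector (last axis); gamma/beta omitted
    mean = h.mean(axis=-1, keepdims=True)
    var = h.var(axis=-1, keepdims=True)
    return (h - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(2)
x = rng.normal(size=(4, 8))             # sublayer input: 4 positions, 8 features
sublayer_out = rng.normal(size=(4, 8))  # stand-in for the attention output
out = layer_norm(x + sublayer_out)      # residual connection, then normalization
print(out.mean(axis=-1).round(6), out.std(axis=-1).round(3))
```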

Exercise 4 - EncoderLayer

Implement EncoderLayer() using the call() method

In this exercise, you will implement one encoder block (Figure 2) using the call() method. The function should perform the following steps:

- You will pass the Q, V, K matrices and a boolean mask to a multi-head attention layer. Remember that to compute self-attention, Q, V and K should be the same. Keep the default values for `return_attention_scores` and `training`. You will also perform Dropout in this multi-head attention layer during training.
- Now add a skip connection by adding your original input `x` and the output of your multi-head attention layer.
- After adding the skip connection, pass the output through the first normalization layer.
- Finally, repeat the first three steps, but with the feed forward neural network with a dropout layer instead of the multi-head attention layer.
- The `__init__` method creates all the layers that will be accessed by the `call` method. Wherever you want to use a layer defined inside the `__init__` method, you will have to use the syntax `self.[insert layer name]`.
- You will find the documentation of MultiHeadAttention helpful. Note that if query, key and value are the same, then this function performs self-attention.

- The call arguments for `self.mha` are (where B is the batch size, T is the target sequence length, and S is the source sequence length):
  - `query`: Query Tensor of shape (B, T, dim).
  - `value`: Value Tensor of shape (B, S, dim).
  - `key`: Optional key Tensor of shape (B, S, dim). If not given, `value` is used for both `key` and `value`, which is the most common case.
  - `attention_mask`: a boolean mask of shape (B, T, S) that prevents attention to certain positions. The boolean mask specifies which query elements can attend to which key elements: 1 indicates attention and 0 indicates no attention. Broadcasting can happen for the missing batch dimensions and the head dimension.
  - `return_attention_scores`: A boolean to indicate whether the output should be (attention_output, attention_scores) if True, or attention_output if False. Defaults to False.
  - `training`: Python boolean indicating whether the layer should behave in training mode (adding dropout) or in inference mode (no dropout). Defaults to either using the training mode of the parent layer/model, or False (inference) if there is no parent layer.

# UNQ_C4 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)

# GRADED FUNCTION EncoderLayer

class EncoderLayer(tf.keras.layers.Layer):
    """
    The encoder layer is composed of a multi-head self-attention mechanism,
    followed by a simple, positionwise fully connected feed-forward network.
    This architecture includes a residual connection around each of the two
    sub-layers, followed by layer normalization.
    """
    def __init__(self, embedding_dim, num_heads, fully_connected_dim,
                 dropout_rate=0.1, layernorm_eps=1e-6):
        super(EncoderLayer, self).__init__()

        self.mha = MultiHeadAttention(num_heads=num_heads,
                                      key_dim=embedding_dim,
                                      dropout=dropout_rate)

        # two Dense layers (see FullyConnected above)
        self.ffn = FullyConnected(embedding_dim=embedding_dim,
                                  fully_connected_dim=fully_connected_dim)

        # LayerNormalization normalizes each position's feature vector independently
        self.layernorm1 = LayerNormalization(epsilon=layernorm_eps)
        self.layernorm2 = LayerNormalization(epsilon=layernorm_eps)

        self.dropout_ffn = Dropout(dropout_rate)

    def call(self, x, training, mask):
        """
        Forward pass for the Encoder Layer

        Arguments:
            x -- Tensor of shape (batch_size, input_seq_len, embedding_dim);
                 x is already a tensor, so no conversion is needed
            training -- Boolean, set to true to activate
                        the training mode for dropout layers
            mask -- Boolean mask to ensure that the padding is not
                    treated as part of the input
        Returns:
            encoder_layer_out -- Tensor of shape (batch_size, input_seq_len, embedding_dim)
        """
        # START CODE HERE
        # calculate self-attention using mha(~1 line). Dropout will be applied during training
        # The mha layer applies its own dropout (to the attention weights) when training=True,
        # so no separate Dropout layer is needed after it.
        attn_output = self.mha(query=x,
                               value=x,
                               key=x,
                               attention_mask=mask,
                               training=training)  # Self attention (batch_size, input_seq_len, embedding_dim)

        # apply layer normalization on sum of the input and the attention output to get the
        # output of the multi-head attention layer (~1 line)
        out1 = self.layernorm1(x + attn_output)  # (batch_size, input_seq_len, embedding_dim)

        # pass the output of the multi-head attention layer through a ffn (~1 line)
        ffn_output = self.ffn(out1)  # (batch_size, input_seq_len, embedding_dim)

        # apply dropout layer to ffn output during training (~1 line)
        ffn_output = self.dropout_ffn(ffn_output, training=training)

        # apply layer normalization on sum of the output from multi-head attention and ffn output to get the
        # output of the encoder layer (~1 line)
        encoder_layer_out = self.layernorm2(out1 + ffn_output)  # (batch_size, input_seq_len, embedding_dim)
        # END CODE HERE

        return encoder_layer_out

Then run the unit test:

# UNIT TEST

EncoderLayer_test(EncoderLayer)

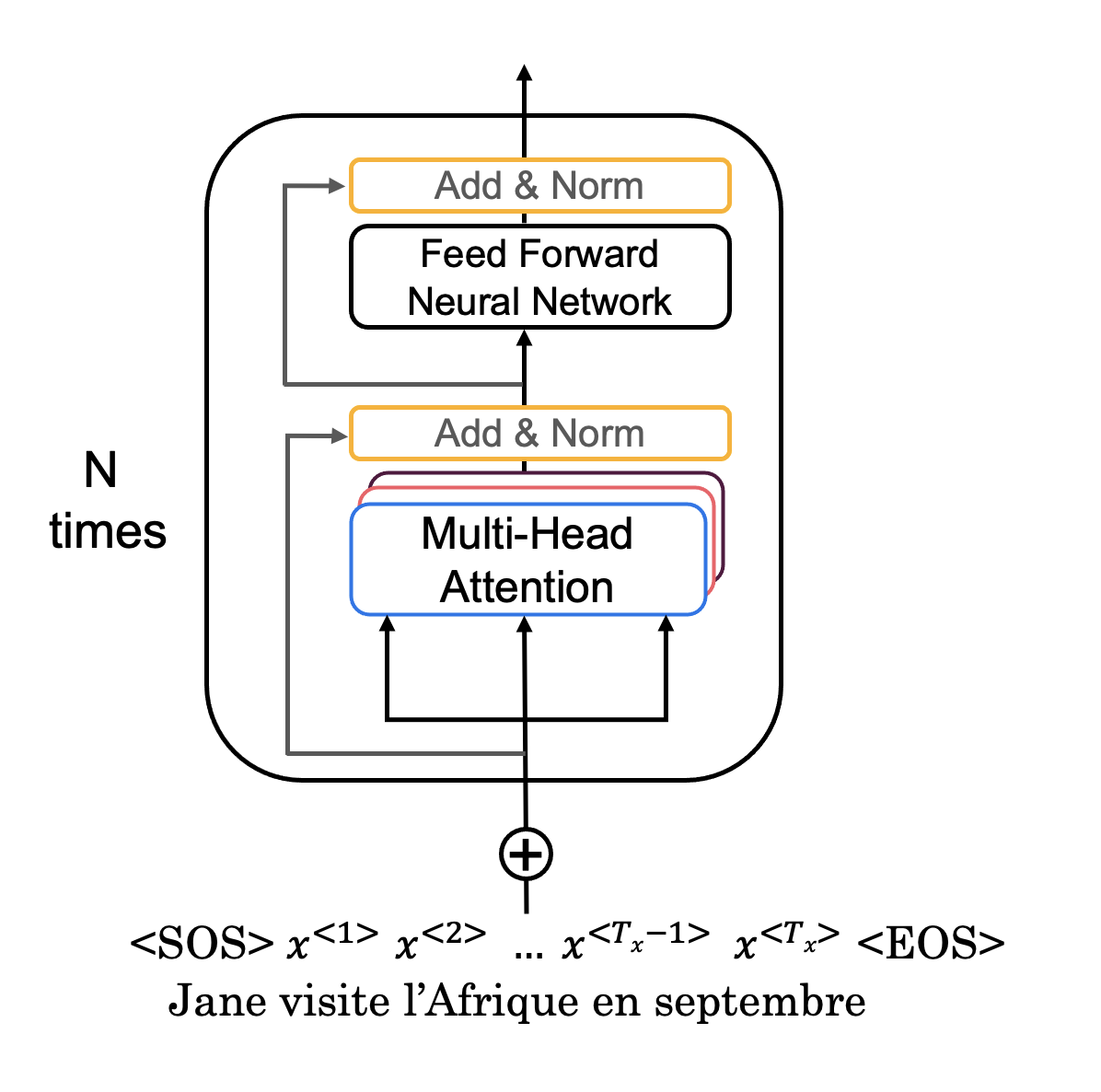

4.2 - Full Encoder

Awesome job! You have now successfully implemented positional encoding, self-attention, and an encoder layer, so give yourself a pat on the back. Now you're ready to build the full Transformer Encoder (Figure 2b), where you will embed your input and add the positional encodings you calculated. You will then feed your encoded embeddings to a stack of Encoder layers.

Figure 2b: Transformer Encoder

Exercise 5 - Encoder

Complete the Encoder() function using the call() method to embed your input, add positional encoding, and implement multiple encoder layers

In this exercise, you will initialize your Encoder with an Embedding layer, positional encoding, and multiple EncoderLayers. Your call() method will perform the following steps:

- Pass your input through the Embedding layer.
- Scale your embedding by multiplying it by the square root of your embedding dimension. Remember to cast the embedding dimension to data type `tf.float32` before computing the square root.
- Add the position encoding `self.pos_encoding[:, :seq_len, :]` to your embedding.
- Pass the encoded embedding through a dropout layer, remembering to use the `training` parameter to set the model training mode.
- Pass the output of the dropout layer through the stack of encoding layers using a for loop.
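The first three steps can be sketched in NumPy to make the shapes concrete. In this sketch, a random lookup table stands in for the Embedding layer and dropout is omitted. The vocabulary size, embedding size and token ids are made-up values.

```python
import numpy as np

rng = np.random.default_rng(3)
vocab_size, d_model, max_pos = 100, 16, 50

embedding_table = rng.normal(size=(vocab_size, d_model))  # stand-in for Embedding
token_ids = np.array([[71, 12, 4, 0, 0]])                  # (batch=1, seq_len=5)

# Sinusoidal positional encodings, shape (1, max_pos, d_model)
k = np.arange(d_model)[np.newaxis, :]
angles = np.arange(max_pos)[:, np.newaxis] / np.power(10000, 2 * (k // 2) / d_model)
pe = np.where(k % 2 == 0, np.sin(angles), np.cos(angles))[np.newaxis, ...]

seq_len = token_ids.shape[1]
x = embedding_table[token_ids]        # 1. look up embeddings: (1, 5, 16)
x = x * np.sqrt(np.float32(d_model))  # 2. scale by sqrt(d_model)
x = x + pe[:, :seq_len, :]            # 3. add positional encodings
print(x.shape)                        # (1, 5, 16)
```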

# UNQ_C5 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)

# GRADED FUNCTION

class Encoder(tf.keras.layers.Layer):
    """
    The entire Encoder starts by passing the input to an embedding layer
    and using positional encoding to then pass the output through a stack of
    encoder Layers
    """
    def __init__(self, num_layers, embedding_dim, num_heads, fully_connected_dim, input_vocab_size,
                 maximum_position_encoding, dropout_rate=0.1, layernorm_eps=1e-6):
        super(Encoder, self).__init__()

        self.embedding_dim = embedding_dim
        self.num_layers = num_layers

        self.embedding = Embedding(input_vocab_size, self.embedding_dim)
        self.pos_encoding = positional_encoding(maximum_position_encoding,
                                                self.embedding_dim)

        self.enc_layers = [EncoderLayer(embedding_dim=self.embedding_dim,
                                        num_heads=num_heads,
                                        fully_connected_dim=fully_connected_dim,
                                        dropout_rate=dropout_rate,
                                        layernorm_eps=layernorm_eps)
                           for _ in range(self.num_layers)]

        self.dropout = Dropout(dropout_rate)

    def call(self, x, training, mask):
        """
        Forward pass for the Encoder

        Arguments:
            x -- Tensor of shape (batch_size, input_seq_len)
            training -- Boolean, set to true to activate
                        the training mode for dropout layers
            mask -- Boolean mask to ensure that the padding is not
                    treated as part of the input
        Returns:
            x -- Tensor of shape (batch_size, input_seq_len, embedding_dim)
        """
        #mask = create_padding_mask(x)
        seq_len = tf.shape(x)[1]

        # START CODE HERE
        # Pass input through the Embedding layer
        x = self.embedding(x)  # (batch_size, input_seq_len, embedding_dim)
        # Scale embedding by multiplying it by the square root of the embedding dimension
        # (cast the dimension to tf.float32 first, as the instructions require)
        x *= tf.math.sqrt(tf.cast(self.embedding_dim, tf.float32))
        # Add the position encoding to embedding
        x += self.pos_encoding[:, :seq_len, :]
        # Pass the encoded embedding through a dropout layer
        x = self.dropout(x, training=training)
        # Pass the output through the stack of encoding layers
        for i in range(self.num_layers):
            x = self.enc_layers[i](x, training, mask)
        # END CODE HERE

        return x  # (batch_size, input_seq_len, embedding_dim)

Then run the unit test:

# UNIT TEST

Encoder_test(Encoder)

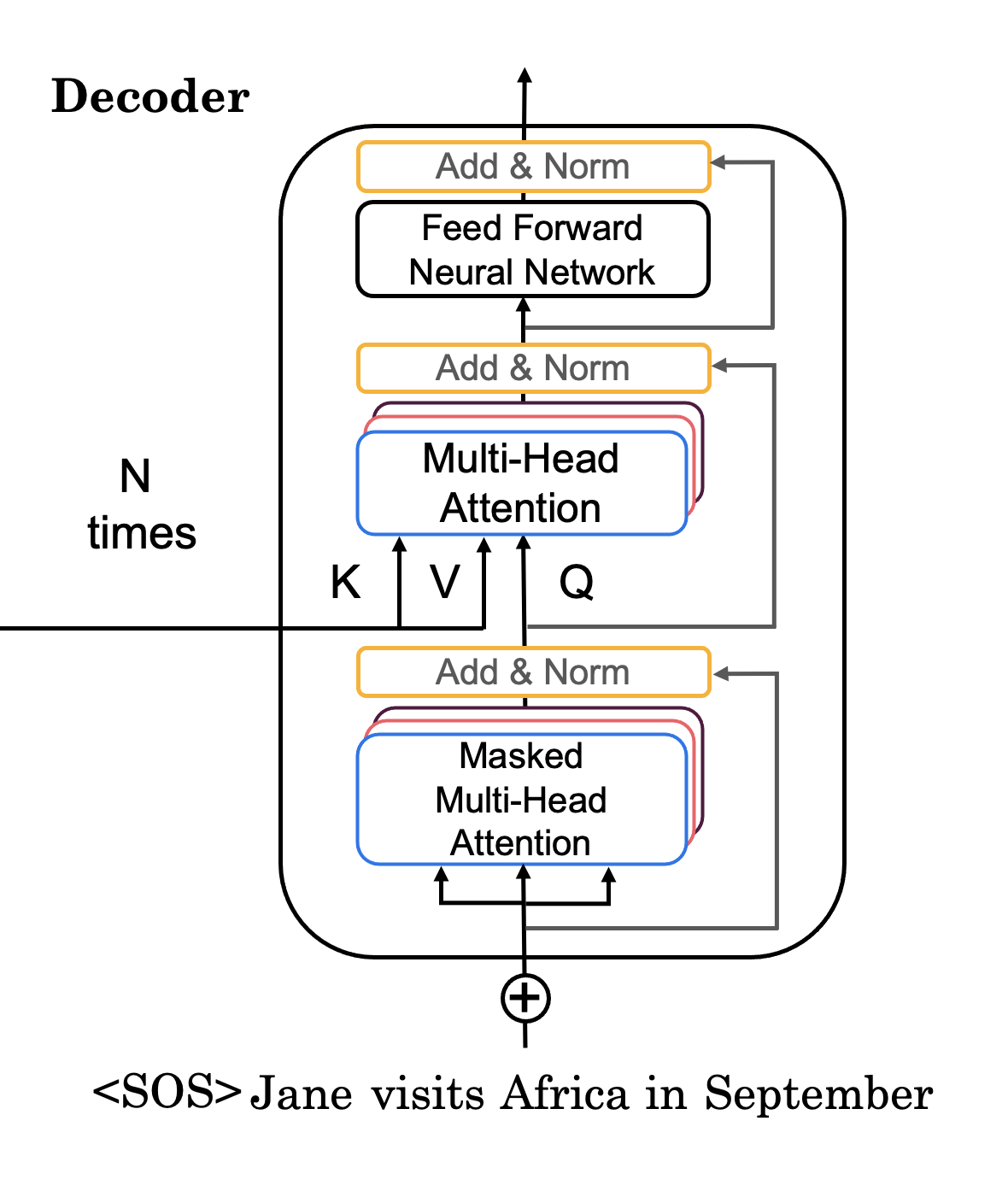

5 - Decoder

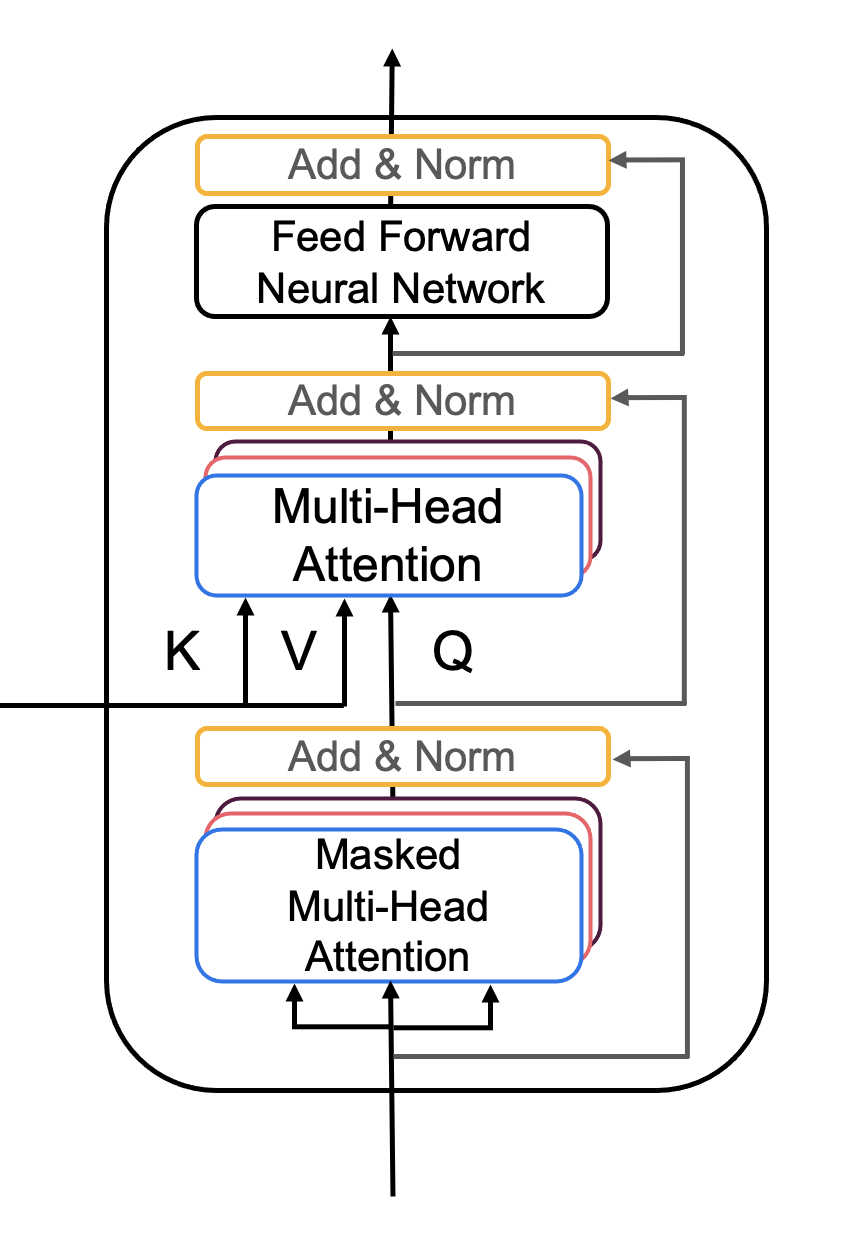

The Decoder layer takes the K and V matrices generated by the Encoder and computes the second multi-head attention layer with the Q matrix computed from the output sequence (Figure 3a).

Figure 3a: Transformer Decoder layer

5.1 - Decoder Layer

Again, you'll pair multi-head attention with a feed forward neural network, but this time you'll implement two multi-head attention layers. You will also use residual connections and layer normalization to help speed up training (Figure 3a).

Exercise 6 - DecoderLayer

Implement DecoderLayer() using the call() method

- Block 1 is a multi-head attention layer with a residual connection and a look-ahead mask. Like in the `EncoderLayer`, Dropout is defined within the multi-head attention layer.
- Block 2 will take into account the output of the Encoder, so the multi-head attention layer will receive K and V from the encoder, and Q from Block 1. You will then apply a normalization layer and a residual connection, just like you did before with the `EncoderLayer`.
- Finally, Block 3 is a feed forward neural network with dropout and normalization layers and a residual connection.
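In Block 2 the queries come from the decoder and the keys and values come from the encoder. The attention weights therefore have shape (target_seq_len, input_seq_len) rather than being square. The following single-head NumPy sketch shows those shapes. It skips the learned Q/K/V projections that the Keras layer applies, and its sizes are arbitrary.

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

rng = np.random.default_rng(4)
d_model = 8
enc_output = rng.normal(size=(7, d_model))  # S = 7 source positions (keys and values)
block1_out = rng.normal(size=(4, d_model))  # T = 4 target positions (queries)

logits = block1_out @ enc_output.T / np.sqrt(d_model)  # (T, S)
weights = softmax(logits)
context = weights @ enc_output                         # (T, d_model)
print(weights.shape, context.shape)                    # (4, 7) (4, 8)
```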

Additional Hints:

- The first two blocks are fairly similar to the EncoderLayer, except that you will return `attention_scores` when computing self-attention.
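The look-ahead mask in Block 1 is what lets the decoder train on the full target sequence without cheating. The output at position t cannot depend on any later target token. The following NumPy sketch checks this property on a single head without learned projections (x serves as Q, K and V). The helper `causal_self_attention` is hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def causal_self_attention(x):
    t = x.shape[0]
    mask = np.tril(np.ones((t, t)))  # look-ahead mask: 1 = attend, 0 = ignore
    logits = x @ x.T / np.sqrt(x.shape[-1]) + (1.0 - mask) * -1e9
    return softmax(logits) @ x

rng = np.random.default_rng(5)
x = rng.normal(size=(5, 8))
changed = x.copy()
changed[-1] = rng.normal(size=8)  # change only the last target token

a, b = causal_self_attention(x), causal_self_attention(changed)
# Earlier positions are unaffected by the change; only the last row can differ
print(np.allclose(a[:-1], b[:-1]))  # True
```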

# UNQ_C6 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)

# GRADED FUNCTION DecoderLayer

class DecoderLayer(tf.keras.layers.Layer):
    """
    The decoder layer is composed of two multi-head attention blocks,
    one that takes the new input and uses self-attention, and the other
    one that combines it with the output of the encoder, followed by a
    fully connected block.
    """
    def __init__(self, embedding_dim, num_heads, fully_connected_dim, dropout_rate=0.1, layernorm_eps=1e-6):
        super(DecoderLayer, self).__init__()

        self.mha1 = MultiHeadAttention(num_heads=num_heads,
                                       key_dim=embedding_dim,
                                       dropout=dropout_rate)

        self.mha2 = MultiHeadAttention(num_heads=num_heads,
                                       key_dim=embedding_dim,
                                       dropout=dropout_rate)

        self.ffn = FullyConnected(embedding_dim=embedding_dim,
                                  fully_connected_dim=fully_connected_dim)

        self.layernorm1 = LayerNormalization(epsilon=layernorm_eps)
        self.layernorm2 = LayerNormalization(epsilon=layernorm_eps)
        self.layernorm3 = LayerNormalization(epsilon=layernorm_eps)

        self.dropout_ffn = Dropout(dropout_rate)

    def call(self, x, enc_output, training, look_ahead_mask, padding_mask):
        """
        Forward pass for the Decoder Layer

        Arguments:
            x -- Tensor of shape (batch_size, target_seq_len, embedding_dim)
            enc_output -- Tensor of shape(batch_size, input_seq_len, embedding_dim)
            training -- Boolean, set to true to activate
                        the training mode for dropout layers
            look_ahead_mask -- Boolean mask for the target_input
            padding_mask -- Boolean mask for the second multihead attention layer
        Returns:
            out3 -- Tensor of shape (batch_size, target_seq_len, embedding_dim)
            attn_weights_block1 -- Tensor of shape(batch_size, num_heads, target_seq_len, target_seq_len)
            attn_weights_block2 -- Tensor of shape(batch_size, num_heads, target_seq_len, input_seq_len)
        """
        # START CODE HERE
        # enc_output.shape == (batch_size, input_seq_len, embedding_dim)

        # BLOCK 1
        # calculate self-attention and return attention scores as attn_weights_block1.
        # Dropout will be applied during training (~1 line).
        mult_attn_out1, attn_weights_block1 = self.mha1(query=x,
                                                        value=x,
                                                        key=x,
                                                        attention_mask=look_ahead_mask,
                                                        return_attention_scores=True,
                                                        training=training)  # (batch_size, target_seq_len, embedding_dim)

        # apply layer normalization (layernorm1) to the sum of the attention output and the input (~1 line)
        Q1 = self.layernorm1(x + mult_attn_out1)

        # BLOCK 2
        # calculate attention using the Q from the first block and K and V from the encoder output.
        # Dropout will be applied during training
        # Return attention scores as attn_weights_block2 (~1 line)
        mult_attn_out2, attn_weights_block2 = self.mha2(query=Q1,
                                                        value=enc_output,
                                                        key=enc_output,
                                                        attention_mask=padding_mask,
                                                        return_attention_scores=True,
                                                        training=training)  # (batch_size, target_seq_len, embedding_dim)

        # apply layer normalization (layernorm2) to the sum of the attention output and the output of the first block (~1 line)
        # This must be layernorm2: reusing layernorm1 would share one set of learned scale/offset weights between two sublayers
        mult_attn_out2 = self.layernorm2(Q1 + mult_attn_out2)  # (batch_size, target_seq_len, embedding_dim)

        # BLOCK 3
        # pass the output of the second block through a ffn
        ffn_output = self.ffn(mult_attn_out2)  # (batch_size, target_seq_len, embedding_dim)

        # apply a dropout layer to the ffn output
        ffn_output = self.dropout_ffn(ffn_output, training=training)

        # apply layer normalization (layernorm3) to the sum of the ffn output and the output of the second block
        out3 = self.layernorm3(mult_attn_out2 + ffn_output)  # (batch_size, target_seq_len, embedding_dim)
        # END CODE HERE

        return out3, attn_weights_block1, attn_weights_block2

Then run the unit test:

# UNIT TEST

DecoderLayer_test(DecoderLayer, create_look_ahead_mask)

5.2 - Full Decoder

You're almost there! Time to use your Decoder layer to build a full Transformer Decoder (Figure 3b). You will embed your output and add positional encodings. You will then feed your encoded embeddings to a stack of Decoder layers.

Figure 3b: Transformer Decoder

Exercise 7 - Decoder

Implement Decoder() using the call() method to embed your output, add positional encoding, and implement multiple decoder layers

In this exercise, you will initialize your Decoder with an Embedding layer, positional encoding, and multiple DecoderLayers. Your call() method will perform the following steps:

- Pass your generated output through the Embedding layer.
- Scale your embedding by multiplying it by the square root of your embedding dimension. Remember to cast the embedding dimension to data type `tf.float32` before computing the square root.
- Add the position encoding `self.pos_encoding[:, :seq_len, :]` to your embedding.
- Pass the encoded embedding through a dropout layer, remembering to use the `training` parameter to set the model training mode.
- Pass the output of the dropout layer through the stack of Decoding layers using a for loop.

# UNQ_C7 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)

# GRADED FUNCTION Decoder

class Decoder(tf.keras.layers.Layer):
    """
    The entire Decoder starts by passing the target input to an embedding layer
    and using positional encoding to then pass the output through a stack of
    decoder layers
    """
    def __init__(self, num_layers, embedding_dim, num_heads, fully_connected_dim, target_vocab_size,
                 maximum_position_encoding, dropout_rate=0.1, layernorm_eps=1e-6):
        super(Decoder, self).__init__()

        self.embedding_dim = embedding_dim
        self.num_layers = num_layers

        self.embedding = Embedding(target_vocab_size, self.embedding_dim)
        self.pos_encoding = positional_encoding(maximum_position_encoding, self.embedding_dim)

        self.dec_layers = [DecoderLayer(embedding_dim=self.embedding_dim,
                                        num_heads=num_heads,
                                        fully_connected_dim=fully_connected_dim,
                                        dropout_rate=dropout_rate,
                                        layernorm_eps=layernorm_eps)
                           for _ in range(self.num_layers)]
        self.dropout = Dropout(dropout_rate)

    def call(self, x, enc_output, training,
             look_ahead_mask, padding_mask):
        """
        Forward pass for the Decoder

        Arguments:
            x -- Tensor of shape (batch_size, target_seq_len) holding the token indices of the target sequence
            enc_output -- Tensor of shape (batch_size, input_seq_len, embedding_dim)
            training -- Boolean, set to true to activate
                        the training mode for dropout layers
            look_ahead_mask -- Boolean mask for the target_input
            padding_mask -- Boolean mask for the second multihead attention layer
        Returns:
            x -- Tensor of shape (batch_size, target_seq_len, embedding_dim)
            attention_weights -- Dictionary of tensors containing all the attention weights;
                                 block 1 (self-attention) weights have shape (batch_size, num_heads, target_seq_len, target_seq_len)
                                 and block 2 (encoder-decoder attention) weights have shape (batch_size, num_heads, target_seq_len, input_seq_len)
        """
        seq_len = tf.shape(x)[1]
        attention_weights = {}

        # START CODE HERE
        # create word embeddings
        x = self.embedding(x)  # (batch_size, target_seq_len, embedding_dim)

        # scale embeddings by multiplying by the square root of their dimension (cast to float32 first)
        x *= tf.math.sqrt(tf.cast(self.embedding_dim, tf.float32))

        # calculate positional encodings and add to word embedding
        x += self.pos_encoding[:, :seq_len, :]

        # apply a dropout layer to x
        x = self.dropout(x, training=training)

        # use a for loop to pass x through a stack of decoder layers and update attention_weights (~4 lines total)
        for i in range(self.num_layers):
            # pass x and the encoder output through a stack of decoder layers and save the attention weights
            # of block 1 and 2 (~1 line)
            x, block1, block2 = self.dec_layers[i](x, enc_output, training,
                                                   look_ahead_mask, padding_mask)

            # update attention_weights dictionary with the attention weights of block 1 and block 2
            attention_weights['decoder_layer{}_block1_self_att'.format(i+1)] = block1
            attention_weights['decoder_layer{}_block2_decenc_att'.format(i+1)] = block2
        # END CODE HERE

        # x.shape == (batch_size, target_seq_len, embedding_dim)
        return x, attention_weights

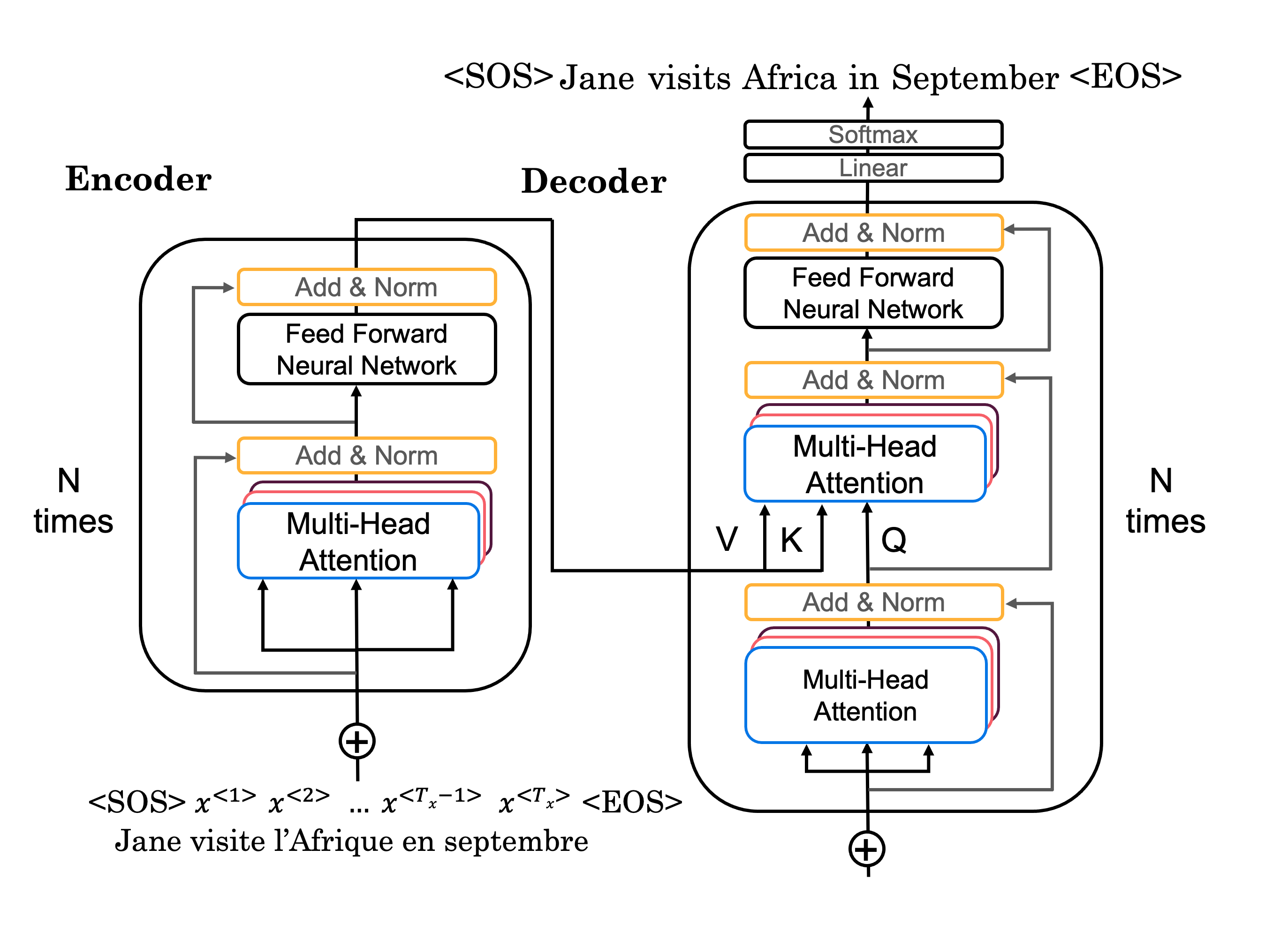

6 - Transformer

Phew! This has been quite the assignment, and now you've made it to your last exercise of the Deep Learning Specialization. Congratulations! You've done all the hard work; now it's time to put it all together.

Figure 4: Transformer

The flow of data through the Transformer architecture is as follows:

- First, your input passes through an Encoder, which is just the repeated Encoder layers that you implemented:

  - embedding and positional encoding of your input

  - multi-head attention on your input

  - a feed forward neural network to help detect features

- Then the predicted output passes through a Decoder, consisting of the Decoder layers that you implemented:

  - embedding and positional encoding of the output

  - multi-head attention on your generated output

  - multi-head attention with the Q from the first multi-head attention layer and the K and V from the Encoder

  - a feed forward neural network to help detect features

- Finally, after the Nth Decoder layer, a dense layer with a softmax activation is applied to generate a prediction for the next output in your sequence.
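The second attention block in each Decoder layer is where the Encoder and Decoder meet: Q comes from the decoder, while K and V come from the encoder output. The single-head NumPy sketch below uses random inputs and omits the learned Q/K/V projections and the multiple heads that MultiHeadAttention adds. It shows why these attention weights have shape (..., target_seq_len, input_seq_len).

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = q.shape[-1]
    logits = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)   # (batch, len_q, len_k)
    weights = softmax(logits, axis=-1)                   # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
batch_size, input_seq_len, target_seq_len, d = 2, 6, 4, 8
enc_output = rng.normal(size=(batch_size, input_seq_len, d))   # stands in for the Encoder output
dec_hidden = rng.normal(size=(batch_size, target_seq_len, d))  # stands in for decoder block 1 output

# Q from the decoder; K and V from the encoder output
out, weights = scaled_dot_product_attention(q=dec_hidden, k=enc_output, v=enc_output)
print(out.shape)      # (2, 4, 8)
print(weights.shape)  # (2, 4, 6)
```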

Exercise 8 - Transformer

Implement Transformer() using the call() method:

- Pass the input through the Encoder with the appropriate mask.

- Pass the encoder output and the target through the Decoder with the appropriate mask.

- Apply a linear transformation and a softmax to get a prediction.
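The last step projects each decoder position linearly onto the target vocabulary and then applies a softmax over the last axis. This is what Dense(target_vocab_size, activation='softmax') computes. The sketch below mimics that projection in NumPy with random, hypothetical weights `W` and `b`. As an assumed usage pattern that this assignment does not cover, it also picks the most probable token at the last position as a greedy next-token prediction.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

rng = np.random.default_rng(0)
batch_size, tar_seq_len, embedding_dim, target_vocab_size = 2, 4, 8, 10
dec_output = rng.normal(size=(batch_size, tar_seq_len, embedding_dim))  # stands in for the Decoder output

# Hypothetical weights of the final Dense(target_vocab_size, activation='softmax') layer
W = rng.normal(size=(embedding_dim, target_vocab_size))
b = np.zeros(target_vocab_size)

final_output = softmax(dec_output @ W + b)            # (batch_size, tar_seq_len, target_vocab_size)
next_token = final_output[:, -1, :].argmax(axis=-1)   # greedy pick at the last position, shape (batch_size,)
print(final_output.shape)  # (2, 4, 10)
```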

# UNQ_C8 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)

# GRADED FUNCTION Transformer

class Transformer(tf.keras.Model):
    """
    Complete transformer with an Encoder and a Decoder
    """
    def __init__(self, num_layers, embedding_dim, num_heads, fully_connected_dim, input_vocab_size,
                 target_vocab_size, max_positional_encoding_input,
                 max_positional_encoding_target, dropout_rate=0.1, layernorm_eps=1e-6):
        super(Transformer, self).__init__()

        self.encoder = Encoder(num_layers=num_layers,
                               embedding_dim=embedding_dim,
                               num_heads=num_heads,
                               fully_connected_dim=fully_connected_dim,
                               input_vocab_size=input_vocab_size,
                               maximum_position_encoding=max_positional_encoding_input,
                               dropout_rate=dropout_rate,
                               layernorm_eps=layernorm_eps)

        self.decoder = Decoder(num_layers=num_layers,
                               embedding_dim=embedding_dim,
                               num_heads=num_heads,
                               fully_connected_dim=fully_connected_dim,
                               target_vocab_size=target_vocab_size,
                               maximum_position_encoding=max_positional_encoding_target,
                               dropout_rate=dropout_rate,
                               layernorm_eps=layernorm_eps)

        self.final_layer = Dense(target_vocab_size, activation='softmax')

    def call(self, input_sentence, output_sentence, training, enc_padding_mask, look_ahead_mask, dec_padding_mask):
        """
        Forward pass for the entire Transformer

        Arguments:
            input_sentence -- Tensor of shape (batch_size, input_seq_len)
                              An array of the indexes of the words in the input sentence
            output_sentence -- Tensor of shape (batch_size, target_seq_len)
                               An array of the indexes of the words in the output sentence
            training -- Boolean, set to true to activate
                        the training mode for dropout layers
            enc_padding_mask -- Boolean mask to ensure that the padding is not
                                treated as part of the input
            look_ahead_mask -- Boolean mask for the target_input
            dec_padding_mask -- Boolean mask for the second multihead attention layer
        Returns:
            final_output -- Tensor of shape (batch_size, target_seq_len, target_vocab_size) holding the
                            softmax probabilities over the target vocabulary at each position
            attention_weights -- Dictionary of tensors containing all the attention weights for the decoder;
                                 self-attention weights have shape (batch_size, num_heads, target_seq_len, target_seq_len)
                                 and encoder-decoder attention weights have shape (batch_size, num_heads, target_seq_len, input_seq_len)
        """
        # START CODE HERE
        # call self.encoder with the appropriate arguments to get the encoder output
        enc_output = self.encoder(input_sentence, training, enc_padding_mask)  # (batch_size, inp_seq_len, embedding_dim)

        # call self.decoder with the appropriate arguments to get the decoder output
        # dec_output.shape == (batch_size, tar_seq_len, embedding_dim)
        dec_output, attention_weights = self.decoder(output_sentence, enc_output, training, look_ahead_mask, dec_padding_mask)

        # pass decoder output through a linear layer and softmax (~2 lines)
        final_output = self.final_layer(dec_output)  # (batch_size, tar_seq_len, target_vocab_size)
        # END CODE HERE

        return final_output, attention_weights

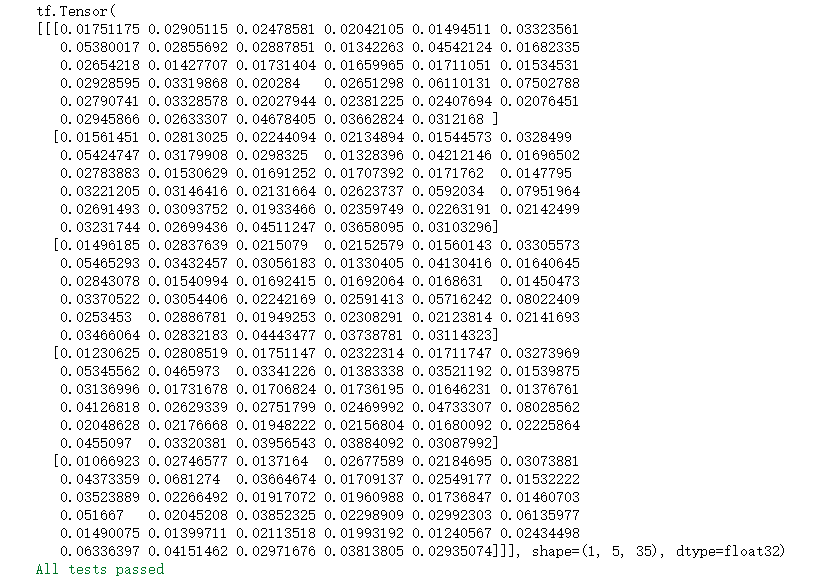

Then run the unit test:

# UNIT TEST

Transformer_test(Transformer, create_look_ahead_mask, create_padding_mask)

Conclusion

You've come to the end of the graded portion of the assignment. By now, you've:

- Created positional encodings to capture sequential relationships in data

- Calculated scaled dot-product self-attention with word embeddings

- Implemented masked multi-head attention

- Built and trained a Transformer model

What you should remember:

- The combination of self-attention and position-wise feed-forward (fully connected) layers allows training to be parallelized, which makes it faster.

- Self-attention is calculated using the generated query Q, key K, and value V matrices.

- Adding positional encoding to word embeddings is an effective way to include sequence information in self-attention calculations.

- Multi-head attention can help detect multiple features in your sentence.

- Masking stops the model from 'looking ahead' during training and from giving weight to the padding zeros added to shorter sentences.
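Both kinds of masking can be illustrated together in NumPy. In this sketch the hypothetical helpers `padding_mask` and `look_ahead_mask` use the convention 1 = may attend, 0 = blocked, and masked logits are pushed to -1e9 before the softmax. The notebook's create_padding_mask and create_look_ahead_mask may use a different convention, so check theirs before reusing this code with them. Token id 0 is assumed to be the padding id.

```python
import numpy as np

def padding_mask(token_ids, pad_id=0):
    """Hypothetical helper: 1.0 at real tokens, 0.0 at padding; shape (batch, 1, seq_len)."""
    return (token_ids != pad_id).astype(np.float32)[:, np.newaxis, :]

def look_ahead_mask(size):
    """Hypothetical helper: lower-triangular (size, size) mask, so position i sees j <= i."""
    return np.tril(np.ones((size, size), dtype=np.float32))

def masked_softmax(logits, mask):
    """Replace blocked logits with a large negative number, then apply the softmax."""
    logits = np.where(mask > 0, logits, -1e9)
    logits = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(logits)
    return e / e.sum(axis=-1, keepdims=True)

target = np.array([[4, 8, 2, 0]])                              # the last position is padding
mask = look_ahead_mask(4)[np.newaxis] * padding_mask(target)   # (1, 4, 4)
weights = masked_softmax(np.zeros((1, 4, 4)), mask)            # uniform logits for clarity
print(weights[0].round(3))
```

With uniform logits, each row spreads its weight evenly over the earlier, non-padding positions only, so row 1 is [0.5, 0.5, 0, 0] and the padded column always gets zero weight.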

Now that you have completed the Transformer assignment, make sure you check out the ungraded labs to apply the Transformer model to practical use cases such as Named Entity Recognition (NER) and Question Answering (QA).

Congratulations on finishing the Deep Learning Specialization!

This was the last graded assignment of the specialization. It is now time to celebrate all your hard work and dedication!

7 - References

The Transformer architecture was introduced by Vaswani et al. (2017).

- Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, Illia Polosukhin (2017). Attention Is All You Need
