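MATLAB Example Walkthrough: Motion-Based Multiple Object Tracking (Kalman-Filter Multi-Object Tracker)

Kalman-filter multi-object tracking against a static background

I have recently been studying multi-object tracking and read through the MathWorks documentation for Motion-Based Multiple Object Tracking.

The program comes from MATLAB's computer vision toolbox, the Computer Vision System Toolbox. The method detects and tracks multiple objects against a static background.

The program has two parts: 1. detecting moving objects in every frame;

2. associating the detected regions, in real time, with the same object over time.

The detection part uses background subtraction based on a Gaussian mixture model (GMM).

Reference: http://blog.pluskid.org/?p=39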

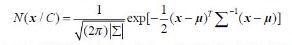

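A single Gaussian model classifies patterns using the probability density of a multivariate Gaussian distribution.

Here μ is replaced by the mean of the training samples, Σ by the sample covariance, and X is a d-dimensional sample vector. The Gaussian density formula then gives the probability that a sample belongs to the positive (or negative) class C.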
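For reference, the density described above can be written out as follows. This is the standard textbook form of the multivariate Gaussian in the notation of the paragraph above (μ the mean, Σ the covariance, X a d-dimensional sample), not a transcription of the figure.

```latex
N(X \mid \mu, \Sigma) = \frac{1}{(2\pi)^{d/2}\,|\Sigma|^{1/2}}
\exp\!\left(-\frac{1}{2}\,(X-\mu)^{\top}\,\Sigma^{-1}\,(X-\mu)\right)
```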

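A Gaussian mixture model (GMM), by contrast, assumes the data are generated from several Gaussian distributions. Each GMM is a linear superposition of K Gaussian components.

P(x) = Σ_k p(k) p(x|k), i.e. a weighted sum of the Gaussian components (the larger the weight p(k), the more likely a data point is to come from component k).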
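A minimal sketch of this weighted sum for a 1-D mixture may help. All numbers (weights, means, standard deviations, the query point) are illustrative assumptions, and the density is written out by hand so that no toolbox is needed.

```matlab
% Hypothetical 1-D mixture with K = 2 components (all values are illustrative).
weights = [0.3, 0.7];      % p(k), must sum to 1
mus     = [0.0, 4.0];      % component means
sigmas  = [1.0, 0.5];      % component standard deviations

% Gaussian density p(x|k), written out so that no toolbox is required.
gaussPdf = @(x, mu, sigma) exp(-0.5 * ((x - mu) ./ sigma).^2) ./ (sigma * sqrt(2 * pi));

x = 3.8;                                  % query point
pxGivenK = gaussPdf(x, mus, sigmas);      % 1-by-K vector of p(x|k)
px = sum(weights .* pxGivenK);            % P(x) = sum_k p(k) p(x|k)

% Posterior responsibility of each component for x (Bayes' rule).
responsibility = weights .* pxGivenK / px;
fprintf('P(x) = %.4f, responsibilities = [%.4f %.4f]\n', px, responsibility);
```

The responsibilities sum to 1, and for this query point nearly all of the mass falls on the second component, whose mean is closest to x.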

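In practice the data are known and the GMM parameters have to be estimated from them; here that means fitting a suitable GMM to the images.

For foreground detection, frames of the static background (about 50 frames; the demo below uses the first 40) are used to estimate the GMM parameters and build the background model. The classification threshold in the official example is 0.7; empirically it can be tuned within 0.7-0.75. This step separates foreground from background and outputs a binary foreground mask.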
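The idea of learning a background model from static frames and then thresholding new frames can be sketched with a deliberately simplified stand-in: one Gaussian per pixel instead of a mixture. This is not what `vision.ForegroundDetector` does internally; the synthetic frames and the threshold `k` are assumptions made only for illustration, and `k` is unrelated to the 0.7 ratio mentioned above.

```matlab
% Simplified stand-in: one Gaussian per pixel, NOT the GMM of vision.ForegroundDetector.
rng(0);                                       % reproducible synthetic data
numTrainingFrames = 50;                       % static background frames used for training
background = 0.5 + 0.01 * randn(4, 4, numTrainingFrames);  % synthetic grayscale frames

mu    = mean(background, 3);                  % per-pixel mean
sigma = std(background, 0, 3);                % per-pixel standard deviation

frame = mu;                                   % new frame identical to the background ...
frame(2, 3) = 0.9;                            % ... except for one "moving" pixel

k = 3;                                        % hypothetical threshold, in standard deviations
mask = abs(frame - mu) > k * sigma;           % binary foreground mask
disp(mask);
```

Only the altered pixel is flagged as foreground. A mixture model extends this by keeping several Gaussians per pixel, so that multi-modal backgrounds (flickering lights, swaying leaves) are not mistaken for motion.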

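Morphological operations are then applied, and blob analysis returns the centroids and bboxes of the moving regions, which completes the foreground detection part.

The tracking part uses a Kalman filter. The Kalman filter is a linear estimation algorithm that can relate bounding boxes (bboxes) across frames.

Tracking distinguishes five situations: 1. a new object appears; 2. an object is matched; 3. an object is occluded; 4. objects split; 5. an object disappears.

I won't reproduce the Kalman filter theory here; plenty of material is available online.

State equation: X(k+1) = A(k+1, k) X(k) + w(k), where X(k) = [x(k), y(k), w(k), h(k), v(k)], and x, y, w, h denote the bbox's horizontal coordinate, vertical coordinate, width, and height. (The w(k) and v(k) inside the state vector are state components and should not be confused with the noise terms of the same names.)

Observation equation: Z(k) = H(k) X(k) + v(k), where the noise terms w(k) and v(k) are uncorrelated Gaussian white noise.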
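The two equations can be made concrete with a small predict/correct loop. The sketch below assumes a simpler state than the one written above, namely a centroid with constant velocity, [x; y; vx; vy], which is the kind of model `configureKalmanFilter('ConstantVelocity', ...)` sets up in the demo. The matrices Q, R, the initial covariance, and the measurements are illustrative assumptions.

```matlab
% Assumed state: [x; y; vx; vy] (centroid position and velocity), dt = 1 frame.
A = [1 0 1 0; 0 1 0 1; 0 0 1 0; 0 0 0 1];   % state transition matrix
H = [1 0 0 0; 0 1 0 0];                     % only the centroid is observed
Q = 0.01 * eye(4);                          % process noise covariance (illustrative)
R = 1.0  * eye(2);                          % measurement noise covariance (illustrative)

x = [0; 0; 0; 0];                           % initial state (zeros, for simplicity)
P = 100 * eye(4);                           % large initial uncertainty

% Noise-free synthetic centroids: the object moves 2 px right and 1 px down per frame.
measurements = [2 4 6 8 10; 1 2 3 4 5];

for k = 1:size(measurements, 2)
    % Predict: X(k+1) = A X(k), with the covariance propagated through A.
    x = A * x;
    P = A * P * A' + Q;

    % Correct: blend the prediction with the measurement Z(k) = H X(k) + v(k).
    z = measurements(:, k);
    K = P * H' / (H * P * H' + R);          % Kalman gain
    x = x + K * (z - H * x);
    P = (eye(4) - K * H) * P;
end

predictedNext = H * (A * x);                % predicted centroid for the next frame
disp(predictedNext');
```

With these noise-free inputs the predicted centroid for the next frame should land close to [12 6], that is, the filter has picked up the object's velocity from positions alone.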

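With the observation and state equations defined, a Kalman filter can track the moving objects as follows:

1) Compute the feature information of each moving object (its centroid and bounding rectangle).

2) Initialize the Kalman filter with this feature information (it can be initialized to 0 at the start).

3) Use the Kalman filter to predict the corresponding object region in the next frame; when the next frame arrives, match objects within the predicted region.

4) If the match succeeds, update the Kalman filter.

Matching uses the Hungarian algorithm, which is introduced well here: http://blog.csdn.net/pi9nc/article/details/11848327

Here the Hungarian algorithm assigns the moving objects detected in the new frame to their corresponding tracks. It does so by minimizing the sum of Euclidean distances between the Kalman-predicted centroids and the detected centroids (the |distance| method used in the demo also takes the confidence of the prediction into account).

This takes two steps:

1. Compute the cost matrix of size [M N], where M is the number of tracks and N is the number of detected moving objects.

2. Solve the assignment problem defined by the cost matrix.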
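The two steps can be sketched on a toy problem. The centroids below are made-up values, the cost is plain Euclidean distance, and the assignment is solved by exhaustive search over permutations rather than by the Hungarian algorithm. Exhaustive search yields the same minimum-cost matching on such a tiny square problem, but it does not scale and has no equivalent of the demo's cost of non-assignment.

```matlab
% Illustrative data: M = 3 predicted track centroids, N = 3 detected centroids.
predicted = [10 10; 50 50; 90 20];
detected  = [52 49; 11 12; 88 23];

M = size(predicted, 1);
N = size(detected, 1);

% Step 1: cost matrix, cost(i, j) = Euclidean distance from track i to detection j.
cost = zeros(M, N);
for i = 1:M
    diffs = detected - repmat(predicted(i, :), N, 1);
    cost(i, :) = sqrt(sum(diffs.^2, 2))';
end

% Step 2: solve the assignment. Exhaustive search over permutations stands in
% for the Hungarian algorithm here; it only works for tiny, square problems.
candidates = perms(1:N);                  % each row assigns one detection per track
bestCost = inf;
bestAssignment = [];
for p = 1:size(candidates, 1)
    idx = sub2ind([M, N], 1:M, candidates(p, :));
    total = sum(cost(idx));
    if total < bestCost
        bestCost = total;
        bestAssignment = candidates(p, :);
    end
end

assignments = [(1:M)', bestAssignment'];  % [trackIdx, detectionIdx], as in the demo
disp(assignments);
```

The result pairs each track with its nearest detection (track 1 with detection 2, track 2 with detection 1, track 3 with detection 3), in the same two-column layout that `assignDetectionsToTracks` returns.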

That is the main idea. The MATLAB demo follows; give it a run.

- function multiObjectTracking()

- % create system objects used for reading video, detecting moving objects,

- % and displaying the results

- obj = setupSystemObjects(); % initialize the System objects

- tracks = initializeTracks(); % create an empty array of tracks

- nextId = 1; % ID of the next track

- % detect moving objects, and track them across video frames

- while ~isDone(obj.reader)

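- frame = readFrame(); % read one frame

- [centroids, bboxes, mask] = detectObjects(frame); % foreground detection

- predictNewLocationsOfTracks(); % Kalman prediction of each track's location

- [assignments, unassignedTracks, unassignedDetections] = ...

- detectionToTrackAssignment(); % match detections to tracks (Hungarian algorithm)

- updateAssignedTracks(); % update the tracks that received a detection

- updateUnassignedTracks(); % update the tracks that received none

- deleteLostTracks(); % delete lost tracks

- createNewTracks(); % create new tracks

- displayTrackingResults(); % display the results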

- end

- %% Create System Objects

- % Create System objects used for reading the video frames, detecting

- % foreground objects, and displaying results.

- function obj = setupSystemObjects()

- % Initialize Video I/O

- % Create objects for reading a video from a file, drawing the tracked

- % objects in each frame, and playing the video.

- % create a video file reader

- obj.reader = vision.VideoFileReader('atrium.avi'); % read the input video

- % create two video players, one to display the video,

- % and one to display the foreground mask

- obj.videoPlayer = vision.VideoPlayer('Position', [20, 400, 700, 400]); % create two player windows (position values assumed from the MathWorks example)

- obj.maskPlayer = vision.VideoPlayer('Position', [740, 400, 700, 400]);

- % Create system objects for foreground detection and blob analysis

- % The foreground detector is used to segment moving objects from

- % the background. It outputs a binary mask, where the pixel value

- % of 1 corresponds to the foreground and the value of 0 corresponds

- % to the background.

- obj.detector = vision.ForegroundDetector('NumGaussians', 3, ... % GMM foreground detection: 3 Gaussians, first 40 frames for training, threshold 0.7

- 'NumTrainingFrames', 40, 'MinimumBackgroundRatio', 0.7);

- % Connected groups of foreground pixels are likely to correspond to moving

- % objects. The blob analysis system object is used to find such groups

- % (called 'blobs' or 'connected components'), and compute their

- % characteristics, such as area, centroid, and the bounding box.

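- obj.blobAnalyser = vision.BlobAnalysis('BoundingBoxOutputPort', true, ... % output areas, centroids, and bounding boxes

- 'AreaOutputPort', true, 'CentroidOutputPort', true, ...

- 'MinimumBlobArea', 400); % minimum blob area assumed from the MathWorks example; tune per video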

- end

- %% Initialize Tracks

- % The |initializeTracks| function creates an array of tracks, where each

- % track is a structure representing a moving object in the video. The

- % purpose of the structure is to maintain the state of a tracked object.

- % The state consists of information used for detection to track assignment,

- % track termination, and display.

- %

- % The structure contains the following fields:

- %

- % * |id| : the integer ID of the track

- % * |bbox| : the current bounding box of the object; used

- % for display

- % * |kalmanFilter| : a Kalman filter object used for motion-based

- % tracking

- % * |age| : the number of frames since the track was first

- % detected

- % * |totalVisibleCount| : the total number of frames in which the track

- % was detected (visible)

- % * |consecutiveInvisibleCount| : the number of consecutive frames for

- % which the track was not detected (invisible).

- %

- % Noisy detections tend to result in short-lived tracks. For this reason,

- % the example only displays an object after it was tracked for some number

- % of frames. This happens when |totalVisibleCount| exceeds a specified

- % threshold.

- %

- % When no detections are associated with a track for several consecutive

- % frames, the example assumes that the object has left the field of view

- % and deletes the track. This happens when |consecutiveInvisibleCount|

- % exceeds a specified threshold. A track may also get deleted as noise if

- % it was tracked for a short time, and marked invisible for most of

- % the frames.

- function tracks = initializeTracks()

- % create an empty array of tracks

- tracks = struct(...

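- 'id', {}, ... % track ID

- 'bbox', {}, ... % bounding box

- 'kalmanFilter', {}, ... % Kalman filter of the track

- 'age', {}, ... % frames since the track was first detected

- 'totalVisibleCount', {}, ... % frames in which the track was detected

- 'consecutiveInvisibleCount', {}); % consecutive frames without a detection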

- end

- %% Read a Video Frame

- % Read the next video frame from the video file.

- function frame = readFrame()

- frame = obj.reader.step(); % step the reader to fetch the next frame

- end

- %% Detect Objects

- % The |detectObjects| function returns the centroids and the bounding boxes

- % of the detected objects. It also returns the binary mask, which has the

- % same size as the input frame. Pixels with a value of 1 correspond to the

- % foreground, and pixels with a value of 0 correspond to the background.

- %

- % The function performs motion segmentation using the foreground detector.

- % It then performs morphological operations on the resulting binary mask to

- % remove noisy pixels and to fill the holes in the remaining blobs.

- function [centroids, bboxes, mask] = detectObjects(frame)

- % detect foreground

- mask = obj.detector.step(frame);

- % apply morphological operations to remove noise and fill in holes

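- mask = imopen(mask, strel('rectangle', [3, 3])); % opening; element size assumed from the MathWorks example

- mask = imclose(mask, strel('rectangle', [15, 15])); % closing; element size assumed from the MathWorks example

- mask = imfill(mask, 'holes'); % fill holes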

- % perform blob analysis to find connected components

- [~, centroids, bboxes] = obj.blobAnalyser.step(mask);

- end

- %% Predict New Locations of Existing Tracks

- % Use the Kalman filter to predict the centroid of each track in the

- % current frame, and update its bounding box accordingly.

- function predictNewLocationsOfTracks()

- for i = 1:length(tracks)

- bbox = tracks(i).bbox;

- % predict the current location of the track

- predictedCentroid = predict(tracks(i).kalmanFilter); % predict the current location from the track's history

- % shift the bounding box so that its center is at

- % the predicted location

- predictedCentroid = int32(predictedCentroid) - bbox(3:4) / 2;

- tracks(i).bbox = [predictedCentroid, bbox(3:4)]; % predicted bbox for the current frame

- end

- end

- %% Assign Detections to Tracks

- % Assigning object detections in the current frame to existing tracks is

- % done by minimizing cost. The cost is defined as the negative

- % log-likelihood of a detection corresponding to a track.

- %

- % The algorithm involves two steps:

- %

- % Step 1: Compute the cost of assigning every detection to each track using

- % the |distance| method of the |vision.KalmanFilter| System object. The

- % cost takes into account the Euclidean distance between the predicted

- % centroid of the track and the centroid of the detection. It also includes

- % the confidence of the prediction, which is maintained by the Kalman

- % filter. The results are stored in an MxN matrix, where M is the number of

- % tracks, and N is the number of detections.

- %

- % Step 2: Solve the assignment problem represented by the cost matrix using

- % the |assignDetectionsToTracks| function. The function takes the cost

- % matrix and the cost of not assigning any detections to a track.

- %

- % The value for the cost of not assigning a detection to a track depends on

- % the range of values returned by the |distance| method of the

- % |vision.KalmanFilter|. This value must be tuned experimentally. Setting

- % it too low increases the likelihood of creating a new track, and may

- % result in track fragmentation. Setting it too high may result in a single

- % track corresponding to a series of separate moving objects.

- %

- % The |assignDetectionsToTracks| function uses the Munkres' version of the

- % Hungarian algorithm to compute an assignment which minimizes the total

- % cost. It returns an M x 2 matrix containing the corresponding indices of

- % assigned tracks and detections in its two columns. It also returns the

- % indices of tracks and detections that remained unassigned.

- function [assignments, unassignedTracks, unassignedDetections] = ...

- detectionToTrackAssignment()

- nTracks = length(tracks);

- nDetections = size(centroids, 1);

- % compute the cost of assigning each detection to each track

- cost = zeros(nTracks, nDetections);

- for i = 1:nTracks

- cost(i, :) = distance(tracks(i).kalmanFilter, centroids); % fill one row of the cost matrix

- end

- % solve the assignment problem

- costOfNonAssignment = 20; % assumed from the MathWorks example; must be tuned experimentally

- [assignments, unassignedTracks, unassignedDetections] = ...

- assignDetectionsToTracks(cost, costOfNonAssignment); % Hungarian (Munkres) assignment

- end

- %% Update Assigned Tracks

- % The |updateAssignedTracks| function updates each assigned track with the

- % corresponding detection. It calls the |correct| method of

- % |vision.KalmanFilter| to correct the location estimate. Next, it stores

- % the new bounding box, and increases the age of the track and the total

- % visible count by 1. Finally, the function sets the invisible count to 0.

- function updateAssignedTracks()

- numAssignedTracks = size(assignments, 1);

- for i = 1:numAssignedTracks

- trackIdx = assignments(i, 1);

- detectionIdx = assignments(i, 2);

- centroid = centroids(detectionIdx, :);

- bbox = bboxes(detectionIdx, :);

- % correct the estimate of the object's location

- % using the new detection

- correct(tracks(trackIdx).kalmanFilter, centroid);

- % replace predicted bounding box with detected

- % bounding box

- tracks(trackIdx).bbox = bbox;

- % update track's age

- tracks(trackIdx).age = tracks(trackIdx).age + 1;

- % update visibility

- tracks(trackIdx).totalVisibleCount = ...

- tracks(trackIdx).totalVisibleCount + 1;

- tracks(trackIdx).consecutiveInvisibleCount = 0;

- end

- end

- %% Update Unassigned Tracks

- % Mark each unassigned track as invisible, and increase its age by 1.

- function updateUnassignedTracks()

- for i = 1:length(unassignedTracks)

- ind = unassignedTracks(i);

- tracks(ind).age = tracks(ind).age + 1;

- tracks(ind).consecutiveInvisibleCount = ...

- tracks(ind).consecutiveInvisibleCount + 1;

- end

- end

- %% Delete Lost Tracks

- % The |deleteLostTracks| function deletes tracks that have been invisible

- % for too many consecutive frames. It also deletes recently created tracks

- % that have been invisible for too many frames overall.

- function deleteLostTracks()

- if isempty(tracks)

- return;

- end

- invisibleForTooLong = 10; % assumed value; tune experimentally

- ageThreshold = 8; % assumed value; tune experimentally

- % compute the fraction of the track's age for which it was visible

- ages = [tracks(:).age];

- totalVisibleCounts = [tracks(:).totalVisibleCount];

- visibility = totalVisibleCounts ./ ages;

- % find the indices of 'lost' tracks

- lostInds = (ages < ageThreshold & visibility < 0.6) | ...

- [tracks(:).consecutiveInvisibleCount] >= invisibleForTooLong;

- % delete lost tracks

- tracks = tracks(~lostInds);

- end

- %% Create New Tracks

- % Create new tracks from unassigned detections. Assume that any unassigned

- % detection is a start of a new track. In practice, you can use other cues

- % to eliminate noisy detections, such as size, location, or appearance.

- function createNewTracks()

- centroids = centroids(unassignedDetections, :);

- bboxes = bboxes(unassignedDetections, :);

- for i = 1:size(centroids, 1)

- centroid = centroids(i,:);

- bbox = bboxes(i, :);

- % create a Kalman filter object

- kalmanFilter = configureKalmanFilter('ConstantVelocity', ...

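- centroid, [200, 50], [100, 25], 100); % initial estimate error, motion noise, measurement noise (values assumed from the MathWorks example)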

- % create a new track

- newTrack = struct(...

- 'id', nextId, ...

- 'bbox', bbox, ...

- 'kalmanFilter', kalmanFilter, ...

- 'age', 1, ...

- 'totalVisibleCount', 1, ...

- 'consecutiveInvisibleCount', 0);

- % add it to the array of tracks

- tracks(end + 1) = newTrack;

- % increment the next id

- nextId = nextId + 1;

- end

- end

- %% Display Tracking Results

- % The |displayTrackingResults| function draws a bounding box and label ID

- % for each track on the video frame and the foreground mask. It then

- % displays the frame and the mask in their respective video players.

- function displayTrackingResults()

- % convert the frame and the mask to uint8 RGB

- frame = im2uint8(frame);

- mask = uint8(repmat(mask, [1, 1, 3])) .* 255;

- minVisibleCount = 8; % assumed value; tune experimentally

- if ~isempty(tracks)

- % noisy detections tend to result in short-lived tracks

- % only display tracks that have been visible for more than

- % a minimum number of frames.

- reliableTrackInds = ...

- [tracks(:).totalVisibleCount] > minVisibleCount;

- reliableTracks = tracks(reliableTrackInds);

- % display the objects. If an object has not been detected

- % in this frame, display its predicted bounding box.

- if ~isempty(reliableTracks)

- % get bounding boxes

- bboxes = cat(1, reliableTracks.bbox);

- % get ids

- ids = int32([reliableTracks(:).id]);

- % create labels for objects indicating the ones for

- % which we display the predicted rather than the actual

- % location

- labels = cellstr(int2str(ids'));

- predictedTrackInds = ...

- [reliableTracks(:).consecutiveInvisibleCount] > 0;

- isPredicted = cell(size(labels));

- isPredicted(predictedTrackInds) = {' predicted'};

- labels = strcat(labels, isPredicted);

- % draw on the frame

- frame = insertObjectAnnotation(frame, 'rectangle', ...

- bboxes, labels);

- % draw on the mask

- mask = insertObjectAnnotation(mask, 'rectangle', ...

- bboxes, labels);

- end

- end

- % display the mask and the frame

- obj.maskPlayer.step(mask);

- obj.videoPlayer.step(frame);

- end

- %% Summary

- % This example created a motion-based system for detecting and

- % tracking multiple moving objects. Try using a different video to see if

- % you are able to detect and track objects. Try modifying the parameters

- % for the detection, assignment, and deletion steps.

- %

- % The tracking in this example was solely based on motion with the

- % assumption that all objects move in a straight line with constant speed.

- % When the motion of an object significantly deviates from this model, the

- % example may produce tracking errors. Notice the mistake in tracking the

- % person labeled #, when he is occluded by the tree.

- %

- % The likelihood of tracking errors can be reduced by using a more complex

- % motion model, such as constant acceleration, or by using multiple Kalman

- % filters for every object. Also, you can incorporate other cues for

- % associating detections over time, such as size, shape, and color.

- displayEndOfDemoMessage(mfilename)

- end

