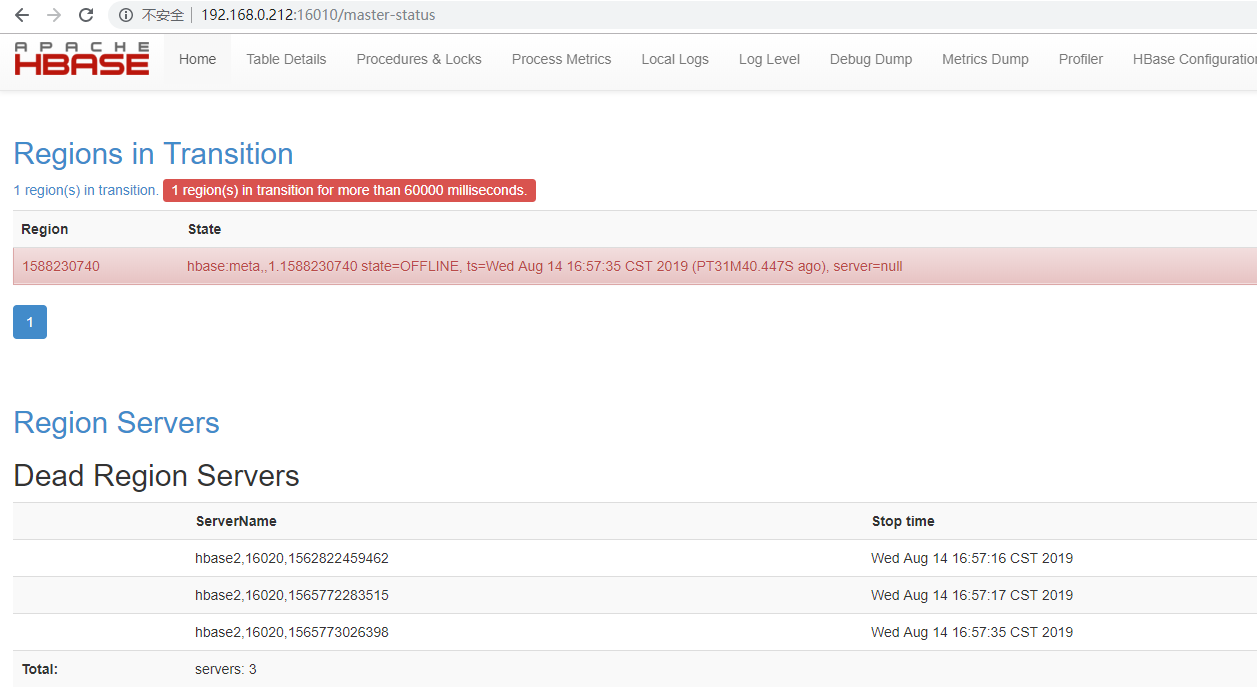

hbase报Dead Region Servers

问题描述:

16010端口启动成功,16020未启动。

hbase-root-regionserver-hbase2.log日志:

2019-08-14 16:45:10,552 WARN [Thread-37] hdfs.DFSClient: DataStreamer Exception

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /hbase/default/tsdb/3f2398c5b49b581c09687c49a739b007/recovered.edits/0000000000006253152-hbase2%2C16020%2C1562822459462.1565198820284.temp could only be written to 0 of the 1 minReplication nodes. There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:2121)

at org.apache.hadoop.hdfs.server.namenode.FSDirWriteFileOp.chooseTargetForNewBlock(FSDirWriteFileOp.java:295)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2702)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:875)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:561)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:523)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:991)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:872)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:818)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2678) at org.apache.hadoop.ipc.Client.call(Client.java:1476)

at org.apache.hadoop.ipc.Client.call(Client.java:1413)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)

at com.sun.proxy.$Proxy18.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:418)

at sun.reflect.GeneratedMethodAccessor7.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:191)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy19.addBlock(Unknown Source)

at sun.reflect.GeneratedMethodAccessor7.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hbase.fs.HFileSystem$1.invoke(HFileSystem.java:372)

at com.sun.proxy.$Proxy20.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.locateFollowingBlock(DFSOutputStream.java:1603)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1388)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:554)

2019-08-14 16:45:10,568 ERROR [RS_LOG_REPLAY_OPS-regionserver/hbase2:16020-1-Writer-2] wal.WALSplitter: Got while writing log entry to log

java.io.IOException: File /hbase/default/tsdb/3f2398c5b49b581c09687c49a739b007/recovered.edits/0000000000006253152-hbase2%2C16020%2C1562822459462.1565198820284.temp could only be written to 0 of the 1 minReplication nodes. There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:2121)

at org.apache.hadoop.hdfs.server.namenode.FSDirWriteFileOp.chooseTargetForNewBlock(FSDirWriteFileOp.java:295)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2702)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:875)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:561)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:523)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:991)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:872)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:818)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2678) at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:106)

at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:95)

at org.apache.hadoop.hbase.wal.WALSplitter$LogRecoveredEditsOutputSink.appendBuffer(WALSplitter.java:1601)

at org.apache.hadoop.hbase.wal.WALSplitter$LogRecoveredEditsOutputSink.append(WALSplitter.java:1559)

at org.apache.hadoop.hbase.wal.WALSplitter$WriterThread.writeBuffer(WALSplitter.java:1084)

at org.apache.hadoop.hbase.wal.WALSplitter$WriterThread.doRun(WALSplitter.java:1076)

at org.apache.hadoop.hbase.wal.WALSplitter$WriterThread.run(WALSplitter.java:1046)

16010上日志:

原因:参考网址https://issues.apache.org/jira/browse/HBASE-12426

Description

I initially had a set of 5 region servers which had a single table which was pre split into 30 regions and was evenly distributed to all the regions with data.I then went ahead and removed/decommissioned a coupe of region servers,so in the end I have 3 region servers.Ran hbase hbck and verified there were 0 inconsistencies.However when 'status' command is issued is from hbase shell it shows a dead region server and the same is displayed in master UI as well.Fail over of hbase master did not fix the issue.On investigation we could see some WAL entries which was still pointing to the old region server.

/hbase/WALs/myserver,60020,1406745344969-splitting After removing these orphan entries from hdfs and master failover the dead region servers went away.I wonder if this could have caused any replication issues in the cluster.

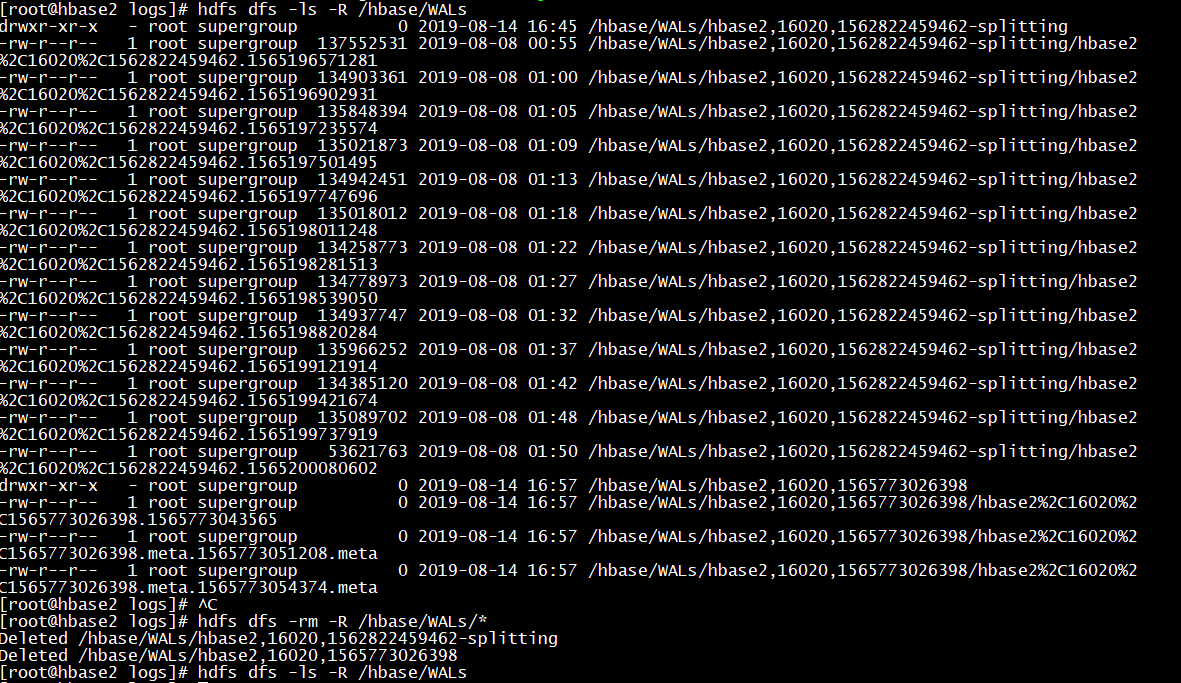

解决:删除/hbase/WALs上的分割文件

<!--查看--> hdfs dfs -ls -R /hbase/WALs <!--删除-->

hdfs dfs -rm -R /hbase/WALs/*

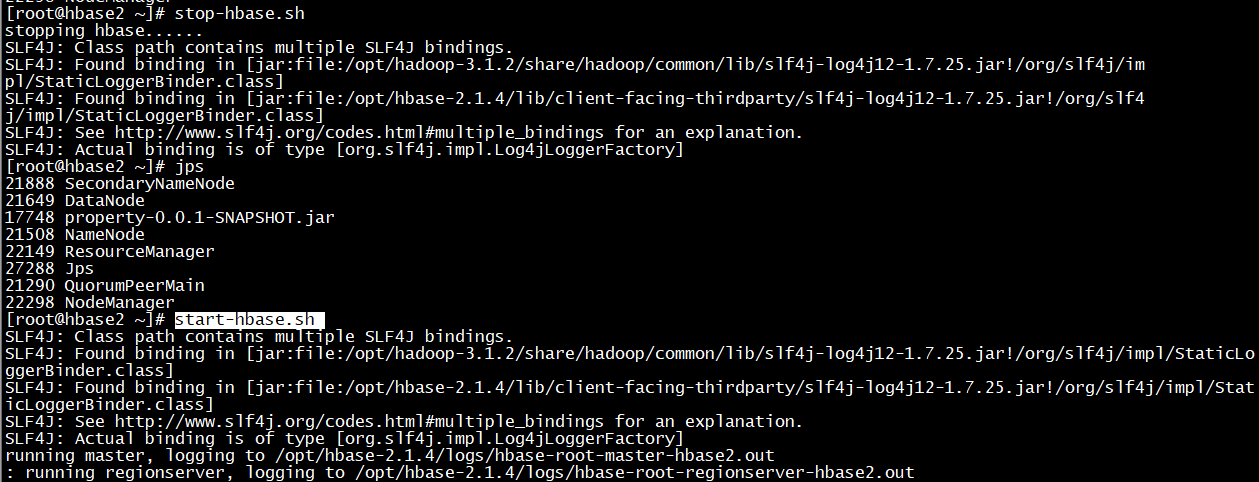

<!--重启hbase-->

stop-hbase.sh

start-hbase.sh

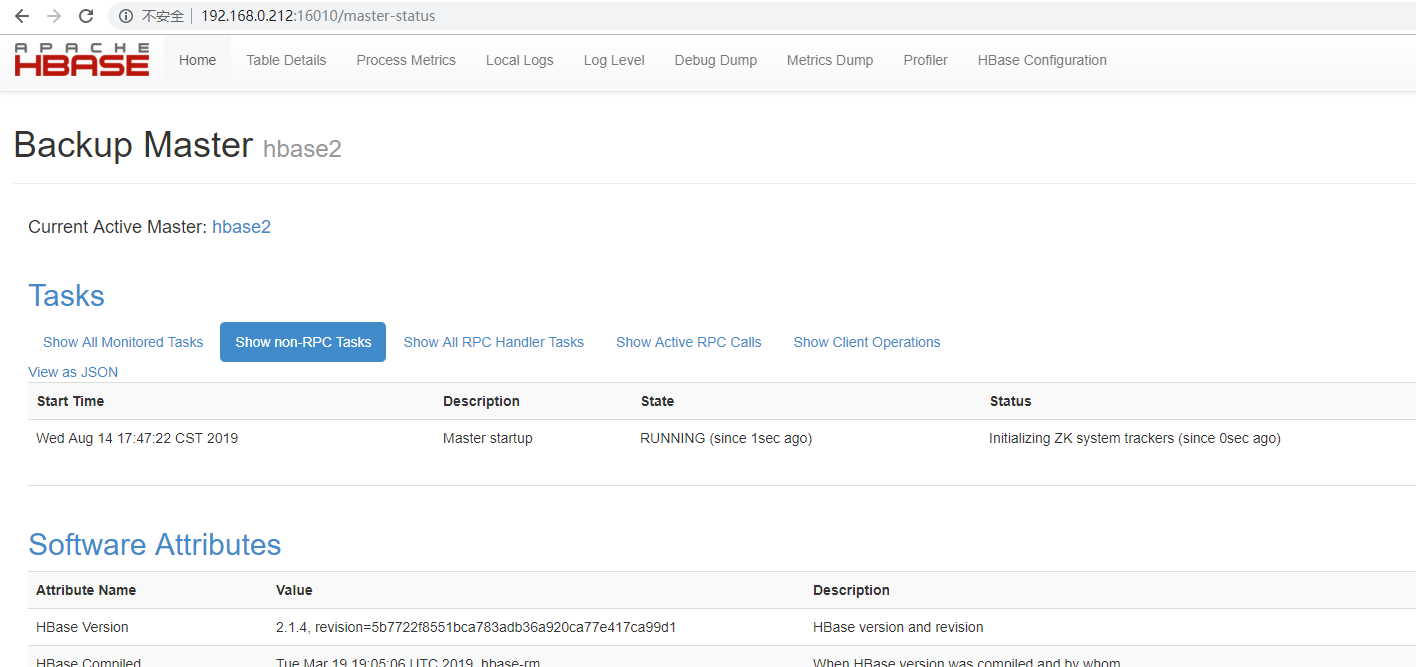

16010上验证:

hbase报Dead Region Servers的更多相关文章

- HBase管理与监控——Dead Region Servers

[问题描述] 在持续批量写入HBase的情况下,出现了Dead Region Servers的情况.集群会把dead掉节点上的region自动分发到另外2个节点上,集群还能继续运行,只是少了1个节点. ...

- 应用程序连接hbase报错:java.net.SocketTimeoutException: callTimeout=60000

背景说明: 今天对生产环境hbase增加了节点,下午的时候一个同事反馈,应用程序后台报错,如下: Tue Feb 26 17:35:35 CST 2019, null, java.net.Socket ...

- HBase原理–所有Region切分的细节都在这里了

本文由 网易云发布. 作者:范欣欣(本篇文章仅限内部分享,如需转载,请联系网易获取授权.) Region自动切分是HBase能够拥有良好扩张性的最重要因素之一,也必然是所有分布式系统追求无限 ...

- Eclipse连接HBase 报错:org.apache.hadoop.hbase.PleaseHoldException: Master is initializing

在eclipse中连接到HBase报错org.apache.hadoop.hbase.PleaseHoldException: Master is initializing,搜索了好久,网上其它人说的 ...

- hbase hbck及region RIT处理

hbase hbck主要用来检查hbase集群region的状态以及对有问题的region进行修复. hbase hbck :检查hbase所有表的一致性,如果正常,就会Print OK hbase ...

- hbase集群region数量和大小的影响

1.Region数量的影响 通常较少的region数量可使群集运行的更加平稳,官方指出每个RegionServer大约100个regions的时候效果最好,理由如下: 1)Hbase的一个特性MSLA ...

- 读者来信-5 | 如果你家HBase集群Region太多请点进来看看,这个问题你可能会遇到

前言:<读者来信>是HBase老店开设的一个问答专栏,旨在能为更多的小伙伴解决工作中常遇到的HBase相关的问题.老店会尽力帮大家解决这些问题或帮你发出求救贴,老店希望这会是一个互帮互助的 ...

- 读者来信 | 如果你家HBase集群Region太多请点进来看看,这个问题你可能会遇到

前言:<读者来信>是HBase老店开设的一个问答专栏,旨在能为更多的小伙伴解决工作中常遇到的HBase相关的问题.老店会尽力帮大家解决这些问题或帮你发出求救贴,老店希望这会是一个互帮互助的 ...

- java连接hbase报错

报错信息如下: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the va ...

随机推荐

- Git-第三篇廖雪峰Git教程学习笔记(2)回退修改,恢复文件

1.工作区 C:\fyliu\lfyTemp\gitLocalRepository\yangjie 2.版本库 我们使用git init命令创建的.git就是我们的版本库.Git的版本库里存了很多东西 ...

- 新接口注册LED字符驱动设备

#include <linux/init.h> // __init __exit #include <linux/module.h> // module_init module ...

- 靶形数独 (dfs+预处理+状态压缩)

#2591. 「NOIP2009」靶形数独 [题目描述] 小城和小华都是热爱数学的好学生,最近,他们不约而同地迷上了数独游戏,好胜的他们想用数独来一比高低.但普通的数独对他们来说都过于简单了,于是他们 ...

- c++知识点总结3

http://akaedu.github.io/book/ week1 引用:相当于变量的别名.下面r和n就相当于同一回事 ; int &r=n; 引用做函数参数: void swap(int ...

- CSS的重用

<!DOCTYPE html> <html lang="en"> <head> <meta charset="UTF-8&quo ...

- 前端:HTML5和CSS3新特性一览

转载:https://www.cnblogs.com/star91/p/5659134.html

- maven 打包Scala代码到jar包

idea的pom.xml文件配置 <dependencies> <dependency> <groupId>org.scala-lang</groupId&g ...

- ES6——面向对象-基础

面向对象原来写法 类和构造函数一样 属性和方法分开写的 // 老版本 function User(name, pass) { this.name = name this.pass = pass } U ...

- Sql Server 出现此数据库没有有效所有者问题

在新建数据库或附加数据库后,想添加关系表,结果出现下面的错误: 此数据库没有有效所有者,因此无法安装数据库关系图支持对象.若要继续,请首先使用“数据库属性”对话框的“文件”页或ALTER AUTHO ...

- java并发学习--第六章 线程之间的通信

一.等待通知机制wait()与notify() 在线程中除了线程同步机制外,还有一个最重要的机制就是线程之间的协调任务.比如说最常见的生产者与消费者模式,很明显如果要实现这个模式,我们需要创建两个线程 ...