MongoDB 3.4 High-Availability Cluster Setup (2): Replica Sets

Reposted from: http://www.lanceyan.com/tech/mongodb/mongodb_repset1.html

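The previous article, "MongoDB 3.4 High-Availability Cluster Setup (1): Master-Slave Mode", left several questions open.

- If the primary node goes down, can connections switch over automatically? At the moment the switch has to be done by hand.

- How do we relieve the primary node when its read and write load is too high?

- Every slave node holds a full copy of the database. Will the load on the slave nodes become too high?

- When the data load outgrows what the machines can handle, can the cluster scale out automatically?

This article resolves the first two of these questions and leaves the other two for later articles. NoSQL emerged to deal with large data volumes, scalability, performance, flexible data models, and high availability. A master-slave architecture alone falls well short of these goals, which is why MongoDB provides replica sets and sharding. This article covers replica sets.

MongoDB officially no longer recommends master-slave mode. The recommended replacement is the replica set.

So what is a replica set? World of Warcraft players will recognize the idea from dungeon instances, and the two concepts are close. At peak hours many players head for the same scene to fight monsters, and there end up being far too many players for too few monsters. To protect the player experience, the game gives each group of players its own copy of the same space with the same number of monsters. Each copied scene is an instance, and however many players there are, each group plays in its own instance without affecting the others. A MongoDB replica is much the same. Master-slave mode is effectively a single-replica setup, with poor scalability and fault tolerance. A replica set keeps multiple replicas, so if one goes down the others are still there, and it solves the first question above: when the primary goes down, the cluster switches over automatically. No wonder MongoDB recommends this mode.

In a replica set, the client connects to the set as a whole and does not care whether any particular machine is down. The primary handles all writes and, by default, all reads, while the secondaries continuously replicate its data. As soon as the primary goes down, the secondaries elect a new primary, and the application servers do not need to do anything.

After the primary goes down, the secondaries detect the failure through the heartbeat mechanism and start an election within the set, which automatically picks a new primary. That sounds impressive, so let's deploy one right away!

The official recommendation is at least three machines in a replica set, so we will configure and test with three.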
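
Why at least three? An election needs votes from a strict majority of the voting members. The sketch below is plain JavaScript (the language of the mongo shell) that works through the arithmetic. The function names `majority` and `faultTolerance` are illustrative helpers, not MongoDB APIs.

```javascript
// Votes needed to elect a primary: a strict majority of the voting members.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// How many voting members can be down while a primary can still be elected.
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

// Small table for typical replica set sizes.
var table = [2, 3, 4, 5].map(function (n) {
  return { members: n, majority: majority(n), faultTolerance: faultTolerance(n) };
});
```

With three members the set survives the loss of one node. A fourth member raises the majority to three without raising the fault tolerance, which is one reason odd member counts are usually preferred.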

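1. Prepare three machines: 10.202.11.117, 10.202.11.118, and 10.202.37.75. 10.202.11.117 is intended to be the primary of the replica set, and 10.202.11.118 and 10.202.37.75 are the secondaries.

2. Create the replica set test directory on each machine

Under the mongodb-3.4.2 directory, create replset/data

3. Download the MongoDB installation package

4. Start mongodb on each machine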

./mongod --dbpath=/home/appdeploy/dev/mongodb/mongodb-3.4.2/replset/data --port=27017 --fork --logpath=/home/appdeploy/dev/mongodb/mongodb-3.4.2/logs/mongodb.log --httpinterface --rest --replSet repset

The key parameter is --replSet repset.

The output shows that the replica set has no configuration yet and has not been initialized.

--29T10::59.878+ I REPL [initandlisten] Did not find local voted for document at startup.

--29T10::59.878+ I REPL [initandlisten] Did not find local replica set configuration document at startup; NoMatchingDocument: Did not find replica set configuration document in local.system.replset

5. Initialize the replica set

Log in to mongodb on any one of the three machines

# Switch to the admin database

use admin

# Define the replica set configuration variable. The _id "repset" here must match the --replSet repset parameter used above.

> config = { _id:"repset", members:[
... {_id:0,host:"10.202.11.117:27017"},
... {_id:1,host:"10.202.11.118:27017"},
... {_id:2,host:"10.202.37.75:27017"}]
... }
{
    "_id" : "repset",
    "members" : [
        {
            "_id" : 0,
            "host" : "10.202.11.117:27017"
        },
        {
            "_id" : 1,
            "host" : "10.202.11.118:27017"
        },
        {
            "_id" : 2,
            "host" : "10.202.37.75:27017"
        }
    ]
}

# Initialize the replica set with this configuration

> rs.initiate(config);

{ "ok" : 1 }

repset:OTHER>

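Note the change above: the shell prompt is now repset:OTHER>.

# Check the log. Once the replica set has started, 10.202.11.117 is the PRIMARY, and 10.202.11.118 and 10.202.37.75 are SECONDARY nodes.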

--29T11::07.678+ I REPL [rsSync] transition to SECONDARY

--29T11::09.470+ I NETWORK [thread1] connection accepted from 10.202.11.118: # ( connections now open)

--29T11::09.470+ I - [conn6] end connection 10.202.11.118: ( connections now open)

--29T11::09.483+ I NETWORK [thread1] connection accepted from 10.202.37.75: # ( connections now open)

--29T11::09.483+ I - [conn7] end connection 10.202.37.75: ( connections now open)

--29T11::09.775+ I NETWORK [thread1] connection accepted from 10.202.11.118: # ( connections now open)

--29T11::09.775+ I NETWORK [conn8] received client metadata from 10.202.11.118: conn8: { driver: { name: "NetworkInterfaceASIO-RS", version: "3.4.2" }, os: { type: "Linux", name: "CentOS release 6.6 (Final)", architecture: "x86_64", version: "Kernel 2.6.32-504.el6.x86_64" } }

--29T11::09.777+ I NETWORK [thread1] connection accepted from 10.202.11.118: # ( connections now open)

--29T11::09.778+ I NETWORK [conn9] received client metadata from 10.202.11.118: conn9: { driver: { name: "NetworkInterfaceASIO-RS", version: "3.4.2" }, os: { type: "Linux", name: "CentOS release 6.6 (Final)", architecture: "x86_64", version: "Kernel 2.6.32-504.el6.x86_64" } }

--29T11::10.153+ I NETWORK [thread1] connection accepted from 10.202.37.75: # ( connections now open)

--29T11::10.153+ I NETWORK [conn10] received client metadata from 10.202.37.75: conn10: { driver: { name: "NetworkInterfaceASIO-RS", version: "3.4.2" }, os: { type: "Linux", name: "CentOS release 6.6 (Final)", architecture: "x86_64", version: "Kernel 2.6.32-504.el6.x86_64" } }

--29T11::10.156+ I NETWORK [thread1] connection accepted from 10.202.37.75: # ( connections now open)

--29T11::10.157+ I NETWORK [conn11] received client metadata from 10.202.37.75: conn11: { driver: { name: "NetworkInterfaceASIO-RS", version: "3.4.2" }, os: { type: "Linux", name: "CentOS release 6.6 (Final)", architecture: "x86_64", version: "Kernel 2.6.32-504.el6.x86_64" } }

--29T11::12.676+ I REPL [ReplicationExecutor] Member 10.202.11.118: is now in state SECONDARY

--29T11::12.677+ I REPL [ReplicationExecutor] Member 10.202.37.75: is now in state SECONDARY

--29T11::14.778+ I - [conn9] end connection 10.202.11.118: ( connections now open)

--29T11::15.159+ I - [conn11] end connection 10.202.37.75: ( connections now open)

--29T11::17.996+ I REPL [ReplicationExecutor] Starting an election, since we've seen no PRIMARY in the past 10000ms

--29T11::17.996+ I REPL [ReplicationExecutor] conducting a dry run election to see if we could be elected

--29T11::17.996+ I REPL [ReplicationExecutor] VoteRequester(term dry run) received a yes vote from 10.202.11.118:; response message: { term: , voteGranted: true, reason: "", ok: 1.0 }

--29T11::17.997+ I REPL [ReplicationExecutor] dry election run succeeded, running for election

--29T11::18.039+ I ASIO [NetworkInterfaceASIO-Replication-] Connecting to 10.202.37.75:

--29T11::18.044+ I ASIO [NetworkInterfaceASIO-Replication-] Successfully connected to 10.202.37.75:

--29T11::18.100+ I REPL [ReplicationExecutor] VoteRequester(term ) received a yes vote from 10.202.11.118:; response message: { term: , voteGranted: true, reason: "", ok: 1.0 }

# Check the status of the cluster nodes

repset:SECONDARY> rs.status()

{

"set" : "repset",

"date" : ISODate("2017-03-29T03:33:14.286Z"),

"myState" : 1,

"term" : NumberLong(1),

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1490758388, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1490758388, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1490758388, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "10.202.11.117:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 644,

"optime" : {

"ts" : Timestamp(1490758388, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2017-03-29T03:33:08Z"),

"electionTime" : Timestamp(1490757918, 1),

"electionDate" : ISODate("2017-03-29T03:25:18Z"),

"configVersion" : 1,

"self" : true

},

{

"_id" : 1,

"name" : "10.202.11.118:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 486,

"optime" : {

"ts" : Timestamp(1490758388, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1490758388, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2017-03-29T03:33:08Z"),

"optimeDurableDate" : ISODate("2017-03-29T03:33:08Z"),

"lastHeartbeat" : ISODate("2017-03-29T03:33:14.282Z"),

"lastHeartbeatRecv" : ISODate("2017-03-29T03:33:13.087Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "10.202.11.117:27017",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "10.202.37.75:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 486,

"optime" : {

"ts" : Timestamp(1490758388, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1490758388, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2017-03-29T03:33:08Z"),

"optimeDurableDate" : ISODate("2017-03-29T03:33:08Z"),

"lastHeartbeat" : ISODate("2017-03-29T03:33:12.377Z"),

"lastHeartbeatRecv" : ISODate("2017-03-29T03:33:13.665Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "10.202.11.118:27017",

"configVersion" : 1

}

],

"ok" : 1

}

repset:PRIMARY>

The replica set is now up and running.
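
To check a status document like the one above without scanning it by eye, the members array can be reduced to a short summary. This is a sketch in plain JavaScript. `summarizeStatus` is a hypothetical helper, and `sampleStatus` is a trimmed copy of the output above so that the snippet also runs outside the shell.

```javascript
// Summarize an rs.status()-style document using only the fields shown in the
// output above: "name", "health", and "stateStr".
function summarizeStatus(status) {
  var summary = { primary: null, secondaries: [], unhealthy: [] };
  status.members.forEach(function (member) {
    if (member.health !== 1) {
      summary.unhealthy.push(member.name);
    } else if (member.stateStr === "PRIMARY") {
      summary.primary = member.name;
    } else if (member.stateStr === "SECONDARY") {
      summary.secondaries.push(member.name);
    }
  });
  return summary;
}

// Trimmed copy of the status document printed above.
var sampleStatus = {
  set: "repset",
  members: [
    { _id: 0, name: "10.202.11.117:27017", health: 1, stateStr: "PRIMARY" },
    { _id: 1, name: "10.202.11.118:27017", health: 1, stateStr: "SECONDARY" },
    { _id: 2, name: "10.202.37.75:27017", health: 1, stateStr: "SECONDARY" }
  ]
};

var summary = summarizeStatus(sampleStatus);
```

In the mongo shell the same helper could be applied to live data with `summarizeStatus(rs.status())`.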

6. Test replica set data replication

# Connect to a mongo shell on the primary, 10.202.11.117.

# Create the tong database.

# Insert data into the testdb collection.

repset:PRIMARY> use tong

switched to db tong

repset:PRIMARY> show collections;

repset:PRIMARY> show collections;

repset:PRIMARY> db.testdb.insert({"name":"shenzhen","addr":"nanshan"})

WriteResult({ "nInserted" : 1 })

repset:PRIMARY>

# On the secondaries 10.202.11.118 and 10.202.37.75, connect to mongodb and check whether the data has been replicated.

./mongo

# Switch to the tong database.

repset:SECONDARY> use tong

switched to db tong

repset:SECONDARY> db.testdb.find()

Error: error: {

"ok" : 0,

"errmsg" : "not master and slaveOk=false",

"code" : 13435,

"codeName" : "NotMasterNoSlaveOk"

}

repset:SECONDARY> rs.slaveOk();

repset:SECONDARY> db.testdb.find()

{ "_id" : ObjectId("58db2b6572cf3b348b3cc0f5"), "name" : "shenzhen", "addr" : "nanshan" }

repset:SECONDARY> show tables;

testdb

repset:SECONDARY>

7. Test replica set failover

First stop mongodb on the primary, 117. The logs on 118 and 75 show that after a round of voting, 75 is elected primary and 118 syncs its data from 75.

--29T11::15.315+ I NETWORK [conn8] received client metadata from 127.0.0.1: conn8: { application: { name: "MongoDB Shell" }, driver: { name: "MongoDB Internal Client", version: "3.4.2" }, os: { type: "Linux", name: "CentOS release 6.6 (Final)", architecture: "x86_64", version: "Kernel 2.6.32-504.el6.x86_64" } }

--29T11::58.446+ I - [conn7] end connection 10.202.11.117: ( connections now open)

--29T11::58.699+ I REPL [replication-] Choosing new sync source because our current sync source, 10.202.11.118:, has an OpTime ({ ts: Timestamp |, t: }) which is not ahead of ours ({ ts: Timestamp |, t: }), it does not have a sync source, and it's not the primary (sync source does not know the primary)

2017-03-29T11:38:58.699+0800 I REPL [replication-1] Canceling oplog query because we have to choose a new sync source. Current source: 10.202.11.118:27017, OpTime { ts: Timestamp 0|0, t: -1 }, its sync source index:-1

2017-03-29T11:38:58.699+0800 W REPL [rsBackgroundSync] Fetcher stopped querying remote oplog with error: InvalidSyncSource: sync source 10.202.11.118:27017 (last visible optime: { ts: Timestamp 0|0, t: -1 }; config version: 1; sync source index: -1; primary index: -1) is no longer valid

2017-03-29T11:38:58.700+0800 I REPL [rsBackgroundSync] could not find member to sync from

2017-03-29T11:38:58.700+0800 I ASIO [ReplicationExecutor] dropping unhealthy pooled connection to 10.202.11.117:27017

2017-03-29T11:38:58.700+0800 I ASIO [ReplicationExecutor] after drop, pool was empty, going to spawn some connections

2017-03-29T11:38:58.700+0800 I ASIO [NetworkInterfaceASIO-Replication-0] Connecting to 10.202.11.117:27017

2017-03-29T11:38:58.702+0800 I ASIO [NetworkInterfaceASIO-Replication-0] Failed to connect to 10.202.11.117:27017 - HostUnreachable: Connection refused

2017-03-29T11:38:58.702+0800 I REPL [ReplicationExecutor] Error in heartbeat request to 10.202.11.117:27017; HostUnreachable: Connection refused

Check the status of the whole cluster: 117 is now reported as unreachable.

# Check the status on 118

repset:SECONDARY> rs.status()

{

"set" : "repset",

"date" : ISODate("2017-03-29T03:43:17.178Z"),

"myState" : 2,

"term" : NumberLong(2),

"syncingTo" : "10.202.37.75:27017",

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1490758988, 1),

"t" : NumberLong(2)

},

"appliedOpTime" : {

"ts" : Timestamp(1490758988, 1),

"t" : NumberLong(2)

},

"durableOpTime" : {

"ts" : Timestamp(1490758988, 1),

"t" : NumberLong(2)

}

},

"members" : [

{

"_id" : 0,

"name" : "10.202.11.117:27017",

"health" : 0,

"state" : 8,

"stateStr" : "(not reachable/healthy)",

"uptime" : 0,

"optime" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"optimeDurable" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"optimeDate" : ISODate("1970-01-01T00:00:00Z"),

"optimeDurableDate" : ISODate("1970-01-01T00:00:00Z"),

"lastHeartbeat" : ISODate("2017-03-29T03:43:16.754Z"),

"lastHeartbeatRecv" : ISODate("2017-03-29T03:38:58.418Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "Connection refused",

"configVersion" : -1

},

{

"_id" : 1,

"name" : "10.202.11.118:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 1184,

"optime" : {

"ts" : Timestamp(1490758988, 1),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2017-03-29T03:43:08Z"),

"syncingTo" : "10.202.37.75:27017",

"configVersion" : 1,

"self" : true

},

{

"_id" : 2,

"name" : "10.202.37.75:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 1087,

"optime" : {

"ts" : Timestamp(1490758988, 1),

"t" : NumberLong(2)

},

"optimeDurable" : {

"ts" : Timestamp(1490758988, 1),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2017-03-29T03:43:08Z"),

"optimeDurableDate" : ISODate("2017-03-29T03:43:08Z"),

"lastHeartbeat" : ISODate("2017-03-29T03:43:16.624Z"),

"lastHeartbeatRecv" : ISODate("2017-03-29T03:43:16.271Z"),

"pingMs" : NumberLong(0),

"electionTime" : Timestamp(1490758748, 1),

"electionDate" : ISODate("2017-03-29T03:39:08Z"),

"configVersion" : 1

}

],

"ok" : 1

}

repset:SECONDARY>

Insert a new record on the new PRIMARY and see whether it is replicated to the SECONDARY.

# Log in to mongo on 10.202.37.75

./mongo

repset:PRIMARY> use tong

switched to db tong

repset:PRIMARY> db.testdb.find()

{ "_id" : ObjectId("58db2b6572cf3b348b3cc0f5"), "name" : "shenzhen", "addr" : "nanshan" }

repset:PRIMARY> db.testdb.insert({"name":"37.75 primary","addr":"75"})

WriteResult({ "nInserted" : 1 })

repset:PRIMARY> db.testdb.find()

{ "_id" : ObjectId("58db2b6572cf3b348b3cc0f5"), "name" : "shenzhen", "addr" : "nanshan" }

{ "_id" : ObjectId("58db312b200c0b77a06fc328"), "name" : "37.75 primary", "addr" : "75" }

repset:PRIMARY>

Check the replicated result on 10.202.11.118:

repset:SECONDARY> rs.slaveOk()

repset:SECONDARY> use tong

switched to db tong

repset:SECONDARY> db.testdb.find()

{ "_id" : ObjectId("58db2b6572cf3b348b3cc0f5"), "name" : "shenzhen", "addr" : "nanshan" }

{ "_id" : ObjectId("58db312b200c0b77a06fc328"), "name" : "37.75 primary", "addr" : "75" }

repset:SECONDARY>

Restart the original primary, 117. It rejoins as a SECONDARY, and 10.202.37.75 remains the PRIMARY.

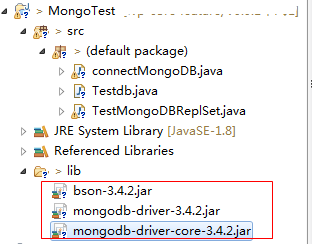
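
117 stays a SECONDARY because all three members have the same default priority, so the set has no reason to move the primary back. If 117 should be preferred as primary, one option is to raise its `priority` in the configuration. The following is a minimal sketch, assuming the usual `rs.conf()` and `rs.reconfig()` workflow on the current PRIMARY. `setPriority` is a hypothetical helper, `conf` is a stand-in for the real configuration, and the priority value 2 is only an example.

```javascript
// Set the priority of one member in a replica set configuration document.
// Mutates and returns the document, as is usual before calling rs.reconfig().
function setPriority(config, host, priority) {
  var found = false;
  config.members.forEach(function (member) {
    if (member.host === host) {
      member.priority = priority;
      found = true;
    }
  });
  if (!found) {
    throw new Error("no member with host " + host);
  }
  return config;
}

// Stand-in for the document returned by rs.conf(), so the sketch runs outside the shell.
var conf = {
  _id: "repset",
  members: [
    { _id: 0, host: "10.202.11.117:27017", priority: 1 },
    { _id: 1, host: "10.202.11.118:27017", priority: 1 },
    { _id: 2, host: "10.202.37.75:27017", priority: 1 }
  ]
};

setPriority(conf, "10.202.11.117:27017", 2);

// In the mongo shell, connected to the current PRIMARY, the equivalent would be:
//   rs.reconfig(setPriority(rs.conf(), "10.202.11.117:27017", 2))
```

After such a reconfiguration the set would be expected to elect 117 again once it has caught up, which briefly interrupts writes, so this is something to try on a test cluster first.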

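8. Test connecting to the replica set from a Java program. With three nodes, the client application can still read from and write to the replica set after one node goes down.

The required jars are:

bson-3.4.2.jar, mongodb-driver-3.4.2.jar, mongodb-driver-core-3.4.2.jar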

import java.util.ArrayList;
import java.util.List;

import com.mongodb.BasicDBObject;
import com.mongodb.DB;
import com.mongodb.DBCollection;
import com.mongodb.DBCursor;
import com.mongodb.DBObject;
import com.mongodb.MongoClient;
import com.mongodb.ServerAddress;

public class TestMongoDBReplSet {

    public static void main(String[] args) {
        try {
            // Seed list of replica set members
            List<ServerAddress> addresses = new ArrayList<ServerAddress>();
            ServerAddress address1 = new ServerAddress("10.202.11.117", 27017);
            ServerAddress address2 = new ServerAddress("10.202.37.75", 27017);
            ServerAddress address3 = new ServerAddress("10.202.11.118", 27017);
            addresses.add(address1);
            addresses.add(address2);
            addresses.add(address3);

            MongoClient client = new MongoClient(addresses);
            DB db = client.getDB("tong");
            DBCollection coll = db.getCollection("testdb");

            // Insert a document
            BasicDBObject object = new BasicDBObject();
            object.append("test", "value");
            coll.insert(object);

            // Read back every document in the collection
            DBCursor dbCursor = coll.find();
            while (dbCursor.hasNext()) {
                DBObject dbObject = dbCursor.next();
                System.out.println(dbObject.toString());
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}

Output:

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Cluster created with settings {hosts=[10.202.11.117:, 10.202.37.75:, 10.202.11.118:], mode=MULTIPLE, requiredClusterType=UNKNOWN, serverSelectionTimeout='30000 ms', maxWaitQueueSize=}

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Adding discovered server 10.202.11.117: to client view of cluster

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Adding discovered server 10.202.37.75: to client view of cluster

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Adding discovered server 10.202.11.118: to client view of cluster

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: No server chosen by WritableServerSelector from cluster description ClusterDescription{type=UNKNOWN, connectionMode=MULTIPLE, serverDescriptions=[ServerDescription{address=10.202.11.118:, type=UNKNOWN, state=CONNECTING}, ServerDescription{address=10.202.11.117:, type=UNKNOWN, state=CONNECTING}, ServerDescription{address=10.202.37.75:, type=UNKNOWN, state=CONNECTING}]}. Waiting for ms before timing out

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Opened connection [connectionId{localValue:, serverValue:}] to 10.202.37.75:

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Opened connection [connectionId{localValue:, serverValue:}] to 10.202.11.117:

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Opened connection [connectionId{localValue:, serverValue:}] to 10.202.11.118:

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Monitor thread successfully connected to server with description ServerDescription{address=10.202.11.117:, type=REPLICA_SET_SECONDARY, state=CONNECTED, ok=true, version=ServerVersion{versionList=[, , ]}, minWireVersion=, maxWireVersion=, maxDocumentSize=, roundTripTimeNanos=, setName='repset', canonicalAddress=10.202.11.117:, hosts=[10.202.37.75:, 10.202.11.117:, 10.202.11.118:], passives=[], arbiters=[], primary='10.202.37.75:27017', tagSet=TagSet{[]}, electionId=null, setVersion=, lastWriteDate=Wed Mar :: CST , lastUpdateTimeNanos=}

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Monitor thread successfully connected to server with description ServerDescription{address=10.202.37.75:, type=REPLICA_SET_PRIMARY, state=CONNECTED, ok=true, version=ServerVersion{versionList=[, , ]}, minWireVersion=, maxWireVersion=, maxDocumentSize=, roundTripTimeNanos=, setName='repset', canonicalAddress=10.202.37.75:, hosts=[10.202.37.75:, 10.202.11.117:, 10.202.11.118:], passives=[], arbiters=[], primary='10.202.37.75:27017', tagSet=TagSet{[]}, electionId=7fffffff0000000000000002, setVersion=, lastWriteDate=Wed Mar :: CST , lastUpdateTimeNanos=}

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Discovered cluster type of REPLICA_SET

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Monitor thread successfully connected to server with description ServerDescription{address=10.202.11.118:, type=REPLICA_SET_SECONDARY, state=CONNECTED, ok=true, version=ServerVersion{versionList=[, , ]}, minWireVersion=, maxWireVersion=, maxDocumentSize=, roundTripTimeNanos=, setName='repset', canonicalAddress=10.202.11.118:, hosts=[10.202.37.75:, 10.202.11.117:, 10.202.11.118:], passives=[], arbiters=[], primary='10.202.37.75:27017', tagSet=TagSet{[]}, electionId=null, setVersion=, lastWriteDate=Wed Mar :: CST , lastUpdateTimeNanos=}

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Setting max election id to 7fffffff0000000000000002 from replica set primary 10.202.37.75:

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Setting max set version to from replica set primary 10.202.37.75:

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Discovered replica set primary 10.202.37.75:

三月 , :: 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Opened connection [connectionId{localValue:, serverValue:}] to 10.202.37.75:

{ "_id" : { "$oid" : "58db2b6572cf3b348b3cc0f5"} , "name" : "shenzhen" , "addr" : "nanshan"}

{ "_id" : { "$oid" : "58db312b200c0b77a06fc328"} , "name" : "37.75 primary" , "addr" : "75"}

{ "_id" : { "$oid" : "58db5075524e6107003d9978"} , "test" : "value"}

Check the result from the mongo shell:

repset:SECONDARY> db.testdb.find()

{ "_id" : ObjectId("58db2b6572cf3b348b3cc0f5"), "name" : "shenzhen", "addr" : "nanshan" }

{ "_id" : ObjectId("58db312b200c0b77a06fc328"), "name" : "37.75 primary", "addr" : "75" }

{ "_id" : ObjectId("58db5075524e6107003d9978"), "test" : "value" }

repset:SECONDARY>
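
The seed list passed to MongoClient in the Java program can also be written as a single connection string, which is convenient for configuration files. The sketch below assembles one. `replicaSetUri` is a hypothetical helper, while `replicaSet` is a standard connection string option.

```javascript
// Build a MongoDB connection string from a seed list, a database name,
// and a map of connection string options.
function replicaSetUri(hosts, database, options) {
  var query = Object.keys(options).map(function (key) {
    return encodeURIComponent(key) + "=" + encodeURIComponent(options[key]);
  }).join("&");
  return "mongodb://" + hosts.join(",") + "/" + database + (query ? "?" + query : "");
}

var uri = replicaSetUri(
  ["10.202.11.117:27017", "10.202.37.75:27017", "10.202.11.118:27017"],
  "tong",
  { replicaSet: "repset" }
);
// uri is:
// mongodb://10.202.11.117:27017,10.202.37.75:27017,10.202.11.118:27017/tong?replicaSet=repset
```

Naming the set in the URI lets the driver check that the hosts it reaches really belong to repset. With the 3.4 Java driver such a string would typically be wrapped in a MongoClientURI and passed to the MongoClient constructor.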

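Failover now works well, so is this architecture good enough? There is still plenty to optimize. Take the second question from the beginning: how do we relieve the primary when its read and write load is too high? The usual answer is read/write splitting. How is that done with a MongoDB replica set?

Writes are usually less frequent than reads, so one primary handles the writes and the two secondaries handle the reads.

1. To read from a SECONDARY in the mongo shell, first enable slaveOk on that connection with rs.slaveOk().

2. In the program, direct read operations to the secondaries, as in the following code: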

import java.util.ArrayList;
import java.util.List;

import com.mongodb.BasicDBObject;
import com.mongodb.DB;
import com.mongodb.DBCollection;
import com.mongodb.DBObject;
import com.mongodb.MongoClient;
import com.mongodb.ReadPreference;
import com.mongodb.ServerAddress;

public class TestMongoDBReplSetReadSplit {

    public static void main(String[] args) {
        try {
            // Seed list of replica set members
            List<ServerAddress> addresses = new ArrayList<ServerAddress>();
            ServerAddress address1 = new ServerAddress("10.202.11.117", 27017);
            ServerAddress address2 = new ServerAddress("10.202.37.75", 27017);
            ServerAddress address3 = new ServerAddress("10.202.11.118", 27017);
            addresses.add(address1);
            addresses.add(address2);
            addresses.add(address3);

            MongoClient client = new MongoClient(addresses);
            DB db = client.getDB("tong");
            DBCollection coll = db.getCollection("testdb");

            BasicDBObject object = new BasicDBObject();
            object.append("test", "value");

            // Send this read to a secondary node
            ReadPreference preference = ReadPreference.secondary();
            DBObject dbObject = coll.findOne(object, null, preference);
            System.out.println(dbObject);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}

Output:

信息: Discovered replica set primary 10.202.37.75:27017

三月 29, 2017 2:18:34 下午 com.mongodb.diagnostics.logging.JULLogger log

信息: Opened connection [connectionId{localValue:4, serverValue:17}] to 10.202.11.118:27017

{ "_id" : { "$oid" : "58db5075524e6107003d9978"} , "test" : "value"}

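Besides secondary there are four other read preference modes, five in total: primary, primaryPreferred, secondary, secondaryPreferred, and nearest.

primary: the default. Reads go only to the primary.

primaryPreferred: reads go to the primary and fall back to a secondary only when the primary is unavailable.

secondary: reads go only to secondaries. The catch is that a secondary's data can be "older" than the primary's, because replication is asynchronous.

secondaryPreferred: reads go to secondaries first and fall back to the primary when no secondary is available.

nearest: reads go to the member with the lowest network latency, whether it is the primary or a secondary.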
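
The fallback rules of the five modes can be made concrete with a small model. This is a deliberately simplified sketch, not the driver's actual selection algorithm: real drivers also apply a latency window, tag sets, and staleness limits. `chooseServer` is a hypothetical function and the `pingMs` values are invented for illustration.

```javascript
// Simplified model of read preference modes: among the eligible members that
// are up, the one with the lowest latency wins.
function chooseServer(mode, servers) {
  var byLatency = function (a, b) { return a.pingMs - b.pingMs; };
  var up = servers.filter(function (s) { return s.up; });
  var primary = up.filter(function (s) { return s.type === "PRIMARY"; })[0] || null;
  var secondaries = up.filter(function (s) { return s.type === "SECONDARY"; }).sort(byLatency);
  var bestSecondary = secondaries[0] || null;

  switch (mode) {
    case "primary": return primary;
    case "primaryPreferred": return primary || bestSecondary;
    case "secondary": return bestSecondary;
    case "secondaryPreferred": return bestSecondary || primary;
    case "nearest": return up.slice().sort(byLatency)[0] || null;
    default: throw new Error("unknown read preference mode: " + mode);
  }
}

// Cluster state after the failover test; latencies are made up.
var servers = [
  { host: "10.202.37.75:27017", type: "PRIMARY", up: true, pingMs: 3 },
  { host: "10.202.11.117:27017", type: "SECONDARY", up: true, pingMs: 1 },
  { host: "10.202.11.118:27017", type: "SECONDARY", up: true, pingMs: 2 }
];

var forPrimary = chooseServer("primary", servers);     // the member on 10.202.37.75
var forSecondary = chooseServer("secondary", servers); // the member on 10.202.11.117
```

The model returns null when a mode has no eligible member, which corresponds to the driver failing the read after its server selection timeout.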

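With read/write splitting in place, read traffic is spread across the set and the load on the primary drops, which answers the question "how do we relieve the primary when its read and write load is too high?" But as more secondaries are added, the replication load on the primary grows. Is there a way around that? MongoDB has had a solution for a long time.

An arbiter stores no data and only takes part in the vote during failover, so it adds no replication load. The MongoDB developers clearly thought this through. Besides primary, secondary, and arbiter, a member can also be Secondary-Only, Hidden, Delayed, or Non-Voting.

Secondary-Only: cannot become primary and only ever serves as a secondary. This keeps a low-powered machine from being elected primary.

Hidden: invisible to client applications, so clients never read from it. It cannot become primary, but it can vote. Typically used for backups.

Delayed: replicates from the primary with a configured time delay. Mainly used for backups: with real-time replication, an accidental delete reaches the secondaries immediately and cannot be recovered from them.

Non-Voting: a secondary with no vote in elections, purely a data backup node.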
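
In the configuration document these member types are ordinary members with extra fields. The sketch below uses the MongoDB 3.4 field names `priority`, `hidden`, `slaveDelay` (in seconds), `votes`, and `arbiterOnly`. The hosts of members 3 to 5 are hypothetical placeholders, and the two helper functions are illustrative checks, not MongoDB APIs.

```javascript
// Replica set configuration sketch covering the member types described above.
var config = {
  _id: "repset",
  members: [
    { _id: 0, host: "10.202.11.117:27017" },
    { _id: 1, host: "10.202.37.75:27017" },
    // Secondary-Only: priority 0 means it can never be elected primary.
    { _id: 2, host: "10.202.11.118:27017", priority: 0 },
    // Hidden and Delayed combined: invisible to clients, applies changes one hour late.
    { _id: 3, host: "backup.example.net:27017", priority: 0, hidden: true, slaveDelay: 3600 },
    // Non-Voting: holds data but has no vote in elections.
    { _id: 4, host: "report.example.net:27017", priority: 0, votes: 0 },
    // Arbiter: votes in elections but stores no data.
    { _id: 5, host: "arbiter.example.net:27017", arbiterOnly: true }
  ]
};

// Count the members that vote (votes defaults to 1 when omitted).
function votingMembers(conf) {
  return conf.members.filter(function (m) { return m.votes !== 0; }).length;
}

// Hidden, delayed, and non-voting members must have priority 0.
function restrictedMembersAreUnelectable(conf) {
  return conf.members.every(function (m) {
    var restricted = m.hidden === true || m.slaveDelay > 0 || m.votes === 0;
    return !restricted || m.priority === 0;
  });
}
```

A document like this would be passed to rs.initiate() for a new set. On a running set, members are normally added one at a time with rs.add() and rs.addArb(). The example keeps five voting members so that the vote count stays odd.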

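At this point the replica set has resolved two of the questions:

- If the primary node goes down, can connections switch over automatically?

- How do we relieve the primary node when its read and write load is too high?

These two are left for later:

- Every slave node holds a full copy of the database. Will the load on the slave nodes become too high?

- When the data load outgrows what the machines can handle, can the cluster scale out automatically?

Setting up the replica set also raises some new questions:

- During failover, how is the new primary elected? Can we step in manually and take a given primary out of service?

- The official documentation says the number of members in a replica set should be odd. Why?

- How does a MongoDB replica set replicate data? What happens if replication falls behind? Can the data become inconsistent?

- Can MongoDB fail over for no apparent reason? What conditions trigger it? Frequent failovers could add load to the system.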

