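Distributed Storage Systems: Deploying a Ceph Cluster

In the previous post we looked at Ceph's basic architecture and its components (see https://www.cnblogs.com/qiuhom-1874/p/16720234.html for a refresher). Today we will deploy a Ceph cluster.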

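Deployment tools

1. ceph-deploy: Ceph's official deployment tool. It needs nothing but SSH access to the servers, with no extra agent. It can run entirely from your own workstation (for example the admin host), without any server, database, or similar infrastructure. It is not a general-purpose deployment tool: it handles only Ceph, so its feature set is much narrower than Ansible's or Puppet's. It can push configuration files, but it does not handle client configuration or client-side dependencies.

2. ceph-ansible: a set of Ansible playbooks and roles. Clone the project, adjust the required parameters, and it brings up a Ceph cluster, provided you are comfortable with Ansible. Project: https://github.com/ceph/ceph-ansible.

3. ceph-chef: Chef is an automation tool in the same category as Ansible and Puppet. You install Chef yourself and then write code to perform the deployment. ceph-chef is a ready-made project for deploying Ceph: download it, adjust the required parameters locally, and it brings up a Ceph cluster, provided you know Chef well. Project: https://github.com/ceph/ceph-chef.

4. puppet-ceph: as the name suggests, a Puppet module for deploying Ceph. Again, download it, adjust the required parameters, and it brings up a Ceph cluster.

Whichever tool you choose, you first need a solid understanding of the Ceph cluster architecture: its required components, how each one works, what it does, and how it is configured. You also need to be fluent with the automation tool itself to deploy a Ceph cluster well with it.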

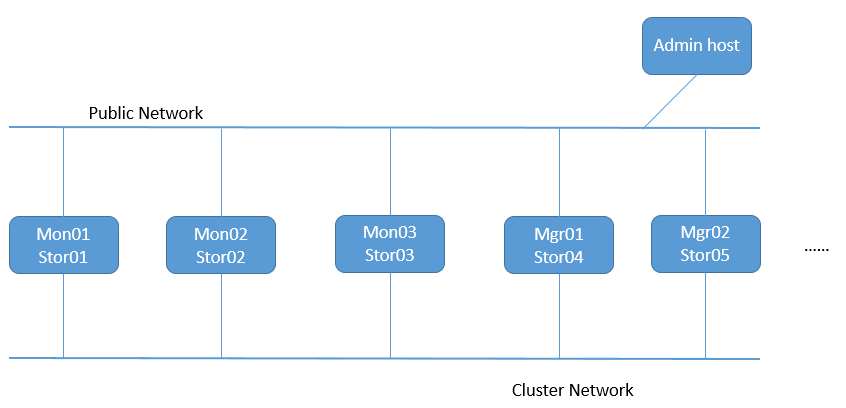

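Cluster network topology

Tip: The public network is the network clients use to reach the Ceph cluster. For a small cluster with few nodes and modest client traffic, a single public network is perfectly fine. The main reason for a separate cluster network is that internal cluster work (such as replication and recovery between OSDs) can otherwise interfere with clients reading and writing data. The cluster network is therefore a private network reserved for communication among the cluster's own components. The admin host used for the deployment only needs a connection to the public network to push configuration to the cluster.

Base environment for the Ceph cluster hosts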

| Host address | Role |
| --- | --- |
| public network: 192.168.0.70/24 | admin host |
| public network: 192.168.0.71/24, cluster network: 172.16.30.71/24 | mon01/stor01 |
| public network: 192.168.0.72/24, cluster network: 172.16.30.72/24 | mon02/stor02 |
| public network: 192.168.0.73/24, cluster network: 172.16.30.73/24 | mon03/stor03 |
| public network: 192.168.0.74/24, cluster network: 172.16.30.74/24 | mgr01/stor04 |
| public network: 192.168.0.75/24, cluster network: 172.16.30.75/24 | mgr02/stor05 |

Hostname resolution for all hosts

192.168.0.70 ceph-admin ceph-admin.ilinux.io

192.168.0.71 ceph-mon01 ceph-mon01.ilinux.io ceph-stor01 ceph-stor01.ilinux.io

192.168.0.72 ceph-mon02 ceph-mon02.ilinux.io ceph-stor02 ceph-stor02.ilinux.io

192.168.0.73 ceph-mon03 ceph-mon03.ilinux.io ceph-stor03 ceph-stor03.ilinux.io

192.168.0.74 ceph-mgr01 ceph-mgr01.ilinux.io ceph-stor04 ceph-stor04.ilinux.io

192.168.0.75 ceph-mgr02 ceph-mgr02.ilinux.io ceph-stor05 ceph-stor05.ilinux.io

172.16.30.71 ceph-mon01 ceph-mon01.ilinux.io ceph-stor01 ceph-stor01.ilinux.io

172.16.30.72 ceph-mon02 ceph-mon02.ilinux.io ceph-stor02 ceph-stor02.ilinux.io

172.16.30.73 ceph-mon03 ceph-mon03.ilinux.io ceph-stor03 ceph-stor03.ilinux.io

172.16.30.74 ceph-mgr01 ceph-mgr01.ilinux.io ceph-stor04 ceph-stor04.ilinux.io

172.16.30.75 ceph-mgr02 ceph-mgr02.ilinux.io ceph-stor05 ceph-stor05.ilinux.io
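ceph-deploy addresses every node by name, so it is worth confirming that all of these names resolve on each host before going further. Below is a minimal POSIX shell sketch. It assumes getent is available, which it is on CentOS 7. The function name check_names is introduced here for illustration.

```shell
#!/bin/sh
# check_names NAME...: print "ok NAME" for every name that resolves (via /etc/hosts or DNS),
# report the ones that do not on stderr, and return non-zero if any name fails.
check_names() {
    failed=0
    for name in "$@"; do
        if getent hosts "$name" >/dev/null; then
            echo "ok $name"
        else
            echo "MISSING $name" >&2
            failed=1
        fi
    done
    return "$failed"
}
```

Run it on every host, for example: check_names ceph-admin ceph-mon01 ceph-mon02 ceph-mon03 ceph-mgr01 ceph-mgr02 ceph-stor01 ceph-stor02 ceph-stor03 ceph-stor04 ceph-stor05. The .ilinux.io names can be checked the same way.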

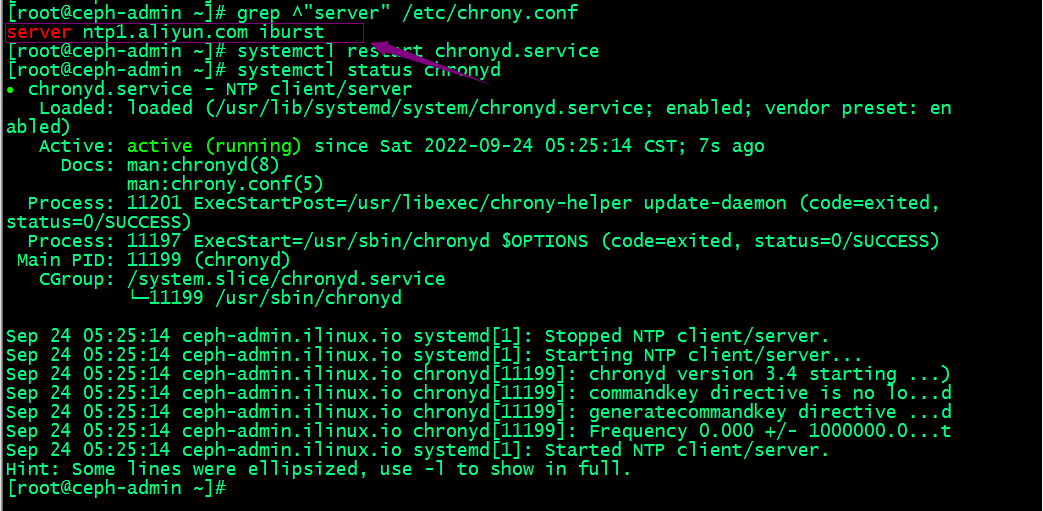

Configure NTP on every host so that all nodes keep their clocks precisely synchronized

[root@ceph-admin ~]# sed -i 's@^\(server \).*@\1ntp1.aliyun.com iburst@' /etc/chrony.conf

[root@ceph-admin ~]# systemctl restart chronyd

Tip: every server above needs a time server configured in /etc/chrony.conf (Alibaba Cloud's NTP server is recommended here). Then restart the chronyd service.

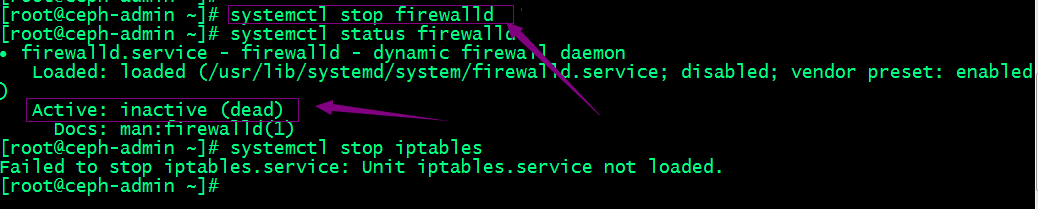

Stop and disable iptables or firewalld on every node

[root@ceph-admin ~]# systemctl stop firewalld

[root@ceph-admin ~]# systemctl disable firewalld

Tip: CentOS 7 does not install the iptables service by default, so disabling firewalld is enough.

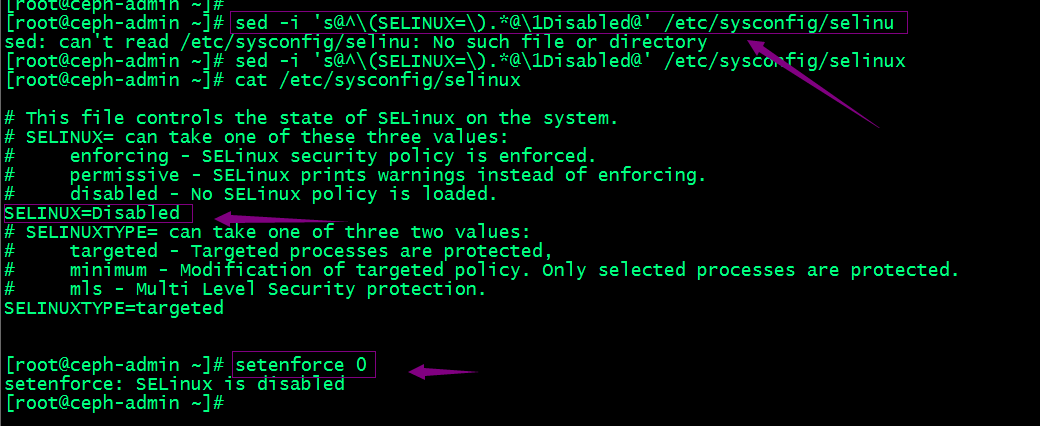

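Disable SELinux on every node

[root@ceph-admin ~]# sed -i 's@^\(SELINUX=\).*@\1disabled@' /etc/selinux/config

[root@ceph-admin ~]# setenforce 0

Tip: the sed command finds the line in the SELinux configuration file that starts with SELINUX= and replaces the whole line with SELINUX=disabled. This takes effect at the next boot, while setenforce 0 switches the running system to permissive mode immediately.

Edit /etc/selinux/config itself. On CentOS 7, /etc/sysconfig/selinux is only a symlink to it, and sed -i would replace the symlink with a regular file while leaving the real configuration unchanged.

With the base environment ready, we can start deploying Ceph.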
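The three steps above (time sync, firewall, SELinux) must be repeated on every node, so they can also be driven from the admin host over SSH. Below is a minimal POSIX shell sketch. It assumes root SSH access to each node at this stage (the cephadm user does not exist yet, so password prompts are expected) and the hostnames from the table above. prep_node and the DRY_RUN switch are names introduced here for illustration; DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# DRY_RUN=1 prints each command instead of running it (a switch added for this sketch)
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# prep_node HOST: apply this section's time, firewall and SELinux settings to HOST as root
prep_node() {
    host=$1
    # Point the chrony "server" lines at Alibaba Cloud's NTP server, then restart chronyd
    run ssh "root@$host" "sed -i 's@^\(server \).*@\1ntp1.aliyun.com iburst@' /etc/chrony.conf && systemctl restart chronyd"
    # Stop firewalld now and keep it from starting at boot
    run ssh "root@$host" "systemctl stop firewalld && systemctl disable firewalld"
    # Edit the real SELinux config (not the /etc/sysconfig/selinux symlink); setenforce
    # fails harmlessly when SELinux is already disabled, hence "|| true"
    run ssh "root@$host" "sed -i 's@^\(SELINUX=\).*@\1disabled@' /etc/selinux/config; setenforce 0 || true"
}
```

Usage: for h in ceph-admin ceph-mon01 ceph-mon02 ceph-mon03 ceph-mgr01 ceph-mgr02; do prep_node "$h"; done. Set DRY_RUN=1 first to review the exact commands before running them for real.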

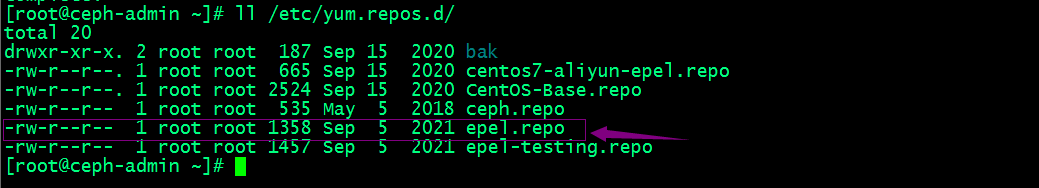

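Prepare the yum repository configuration

Tip: on the Alibaba Cloud mirror site, open the ceph directory and find the release you want to install, then locate its ceph-release package. Either download it or copy its download link, and install ceph-release on every node of the cluster.

Install the ceph-release package on every cluster node to generate the Ceph repository configuration

rpm -ivh https://mirrors.aliyun.com/ceph/rpm-mimic/el7/noarch/ceph-release-1-1.el7.noarch.rpm

Install epel-release on every cluster node to generate the EPEL repository configuration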

[root@ceph-admin ~]# yum install -y epel-release

The yum repository configuration for Ceph is now in place.
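Both repository packages have to be installed on every node. Below is a minimal POSIX shell sketch that does this from the admin host. It assumes root SSH access and uses the Mimic el7 package URL from the step above. setup_repos, CEPH_RELEASE_RPM and DRY_RUN are names introduced here for illustration.

```shell
#!/bin/sh
# DRY_RUN=1 prints each command instead of running it (a switch added for this sketch)
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Mimic/el7 release package from the Alibaba Cloud mirror used above
CEPH_RELEASE_RPM=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/noarch/ceph-release-1-1.el7.noarch.rpm

# setup_repos HOST: install ceph-release (skipped if already installed) and epel-release,
# then list the enabled repositories so the Ceph and EPEL entries can be checked by eye
setup_repos() {
    host=$1
    run ssh "root@$host" "rpm -q ceph-release || rpm -ivh $CEPH_RELEASE_RPM"
    run ssh "root@$host" "yum install -y epel-release"
    run ssh "root@$host" "yum repolist enabled"
}
```

The rpm -q guard makes the step safe to rerun: a second run on the same host skips the ceph-release install instead of failing on an already-installed package.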

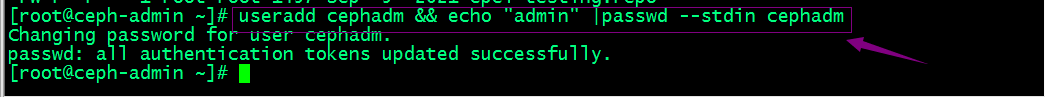

Create a dedicated user account for the Ceph deployment on every cluster node

[root@ceph-admin ~]# useradd cephadm && echo "admin" |passwd --stdin cephadm

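Tip: ceph-deploy must log in to each target Ceph node as a regular user, and that user needs passwordless sudo. This way, installing packages and generating configuration files never stops to ask for input.

Newer versions of ceph-deploy also accept the --username option to name a user with passwordless sudo (root included, although that is not recommended). With "ceph-deploy --username {username}", that user must be able to log in to every Ceph node over SSH with automatic (key-based) authentication, so that ceph-deploy does not stop midway to ask for a password.

Make sure the new cephadm user can run sudo without a password on every cluster node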

[root@ceph-admin ~]# echo "cephadm ALL = (root) NOPASSWD:ALL" |sudo tee /etc/sudoers.d/cephadm

[root@ceph-admin ~]# chmod 0440 /etc/sudoers.d/cephadm
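A malformed file in /etc/sudoers.d can break sudo altogether, so it is worth validating the file right after writing it. Below is a minimal POSIX shell sketch, run as root on each node. write_sudoers and its optional directory argument are introduced here for illustration; the directory argument makes it easy to try the function in a scratch directory. The visudo syntax check is skipped when visudo is not installed.

```shell
#!/bin/sh
# write_sudoers USER [DIR]: grant USER passwordless sudo via DIR/USER (default DIR: /etc/sudoers.d),
# set mode 0440 as sudo expects, and validate the syntax when visudo is available
write_sudoers() {
    user=$1
    dir=${2:-/etc/sudoers.d}
    file="$dir/$user"
    printf '%s ALL = (root) NOPASSWD:ALL\n' "$user" > "$file" || return 1
    chmod 0440 "$file" || return 1
    if command -v visudo >/dev/null 2>&1; then
        visudo -cf "$file" >/dev/null || { echo "invalid sudoers syntax in $file" >&2; return 1; }
    fi
}
```

On a node, write_sudoers cephadm produces the same file as the echo | tee and chmod commands above, plus the syntax check.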

Switch to the cephadm user and check its sudo privileges

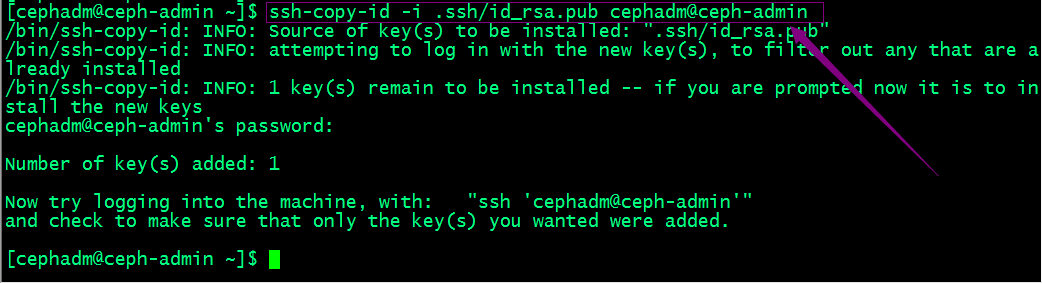

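Configure key-based SSH authentication for cephadm

Tip: switch to the cephadm user first, then generate the key pair.

Copy the public key to the admin host itself

Copy the local .ssh directory to the other hosts, into the cephadm user's home directory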

[cephadm@ceph-admin ~]$ scp -rp .ssh cephadm@ceph-mon01:/home/cephadm/

[cephadm@ceph-admin ~]$ scp -rp .ssh cephadm@ceph-mon02:/home/cephadm/

[cephadm@ceph-admin ~]$ scp -rp .ssh cephadm@ceph-mon03:/home/cephadm/

[cephadm@ceph-admin ~]$ scp -rp .ssh cephadm@ceph-mgr01:/home/cephadm/

[cephadm@ceph-admin ~]$ scp -rp .ssh cephadm@ceph-mgr02:/home/cephadm/

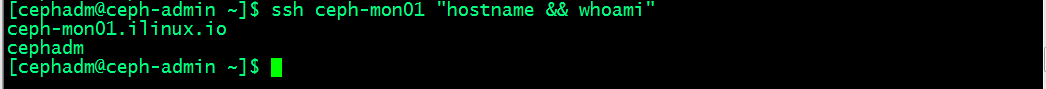

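Verify: connect to the cluster nodes remotely as cephadm and check that no password is required

Tip: if commands run remotely without a password prompt, passwordless login is working.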
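In the original, the key-generation and copy-to-self steps appear only as screenshots. The whole sequence can be scripted on the admin host. Below is a minimal POSIX shell sketch, run as cephadm on ceph-admin. It assumes the hostnames from the table above and an RSA key at ~/.ssh/id_rsa. setup_ssh_keys, verify_ssh, NODES and DRY_RUN are names introduced here for illustration.

verify_ssh uses ssh -o BatchMode=yes, the same option ceph-deploy uses in its own passwordless-SSH check. With it, a password prompt counts as a failure instead of hanging.

```shell
#!/bin/sh
# DRY_RUN=1 prints each command instead of running it (a switch added for this sketch)
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Hosts that receive cephadm's .ssh directory (from the host table above; split on spaces)
NODES="ceph-mon01 ceph-mon02 ceph-mon03 ceph-mgr01 ceph-mgr02"

# setup_ssh_keys: run as cephadm on ceph-admin
setup_ssh_keys() {
    # Generate an RSA key pair with an empty passphrase unless one already exists
    [ -f "$HOME/.ssh/id_rsa" ] || run ssh-keygen -t rsa -N '' -f "$HOME/.ssh/id_rsa"
    # Authorize the key for cephadm on this host itself (asks for cephadm's password once)
    run ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" cephadm@ceph-admin
    # Copy the whole .ssh directory (keys plus authorized_keys) to every node, keeping permissions
    for h in $NODES; do
        run scp -rp "$HOME/.ssh" "cephadm@$h:/home/cephadm/"
    done
}

# verify_ssh: BatchMode=yes makes ssh fail instead of prompting for a password
verify_ssh() {
    rc=0
    for h in $NODES; do
        run ssh -o BatchMode=yes "cephadm@$h" hostname || { echo "passwordless login failed: $h" >&2; rc=1; }
    done
    return "$rc"
}
```

Run setup_ssh_keys once (each scp still asks for that node's cephadm password), then verify_ssh. It should print each node's hostname and return 0.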

Install ceph-deploy on the admin host

[cephadm@ceph-admin ~]$ sudo yum update

[cephadm@ceph-admin ~]$ sudo yum install ceph-deploy python-setuptools python2-subprocess32

Verify that ceph-deploy was installed successfully

[cephadm@ceph-admin ~]$ ceph-deploy --version

2.0.1

[cephadm@ceph-admin ~]$

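Tip: if ceph-deploy prints its version, it was installed successfully.

Deploying the RADOS storage cluster

1. On the admin host, as cephadm, create a directory for the cluster's configuration files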

[cephadm@ceph-admin ~]$ mkdir ceph-cluster

[cephadm@ceph-admin ~]$ cd ceph-cluster

[cephadm@ceph-admin ceph-cluster]$ pwd

/home/cephadm/ceph-cluster

[cephadm@ceph-admin ceph-cluster]$

2. Initialize the first mon node

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy --help

usage: ceph-deploy [-h] [-v | -q] [--version] [--username USERNAME]

[--overwrite-conf] [--ceph-conf CEPH_CONF]

COMMAND ...

Easy Ceph deployment

-^-

/ \

|O o| ceph-deploy v2.0.1

).-.(

'/|||\`

| '|` |

'|`

Full documentation can be found at: http://ceph.com/ceph-deploy/docs

optional arguments:

-h, --help show this help message and exit

-v, --verbose be more verbose

-q, --quiet be less verbose

--version the current installed version of ceph-deploy

--username USERNAME the username to connect to the remote host

--overwrite-conf overwrite an existing conf file on remote host (if

present)

--ceph-conf CEPH_CONF

use (or reuse) a given ceph.conf file

commands:

COMMAND description

new Start deploying a new cluster, and write a

CLUSTER.conf and keyring for it.

install Install Ceph packages on remote hosts.

rgw Ceph RGW daemon management

mgr Ceph MGR daemon management

mds Ceph MDS daemon management

mon Ceph MON Daemon management

gatherkeys Gather authentication keys for provisioning new nodes.

disk Manage disks on a remote host.

osd Prepare a data disk on remote host.

repo Repo definition management

admin Push configuration and client.admin key to a remote

host.

config Copy ceph.conf to/from remote host(s)

uninstall Remove Ceph packages from remote hosts.

purgedata Purge (delete, destroy, discard, shred) any Ceph data

from /var/lib/ceph

purge Remove Ceph packages from remote hosts and purge all

data.

forgetkeys Remove authentication keys from the local directory.

pkg Manage packages on remote hosts.

calamari Install and configure Calamari nodes. Assumes that a

repository with Calamari packages is already

configured. Refer to the docs for examples

(http://ceph.com/ceph-deploy/docs/conf.html)

See 'ceph-deploy <command> --help' for help on a specific command

[cephadm@ceph-admin ceph-cluster]$

Tip: the help output shows that the new subcommand creates the configuration file and a keyring for a new cluster.

Check the usage of ceph-deploy new

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy new --help

usage: ceph-deploy new [-h] [--no-ssh-copykey] [--fsid FSID]

[--cluster-network CLUSTER_NETWORK]

[--public-network PUBLIC_NETWORK]

MON [MON ...]

Start deploying a new cluster, and write a CLUSTER.conf and keyring for it.

positional arguments:

MON initial monitor hostname, fqdn, or hostname:fqdn pair

optional arguments:

-h, --help show this help message and exit

--no-ssh-copykey do not attempt to copy SSH keys

--fsid FSID provide an alternate FSID for ceph.conf generation

--cluster-network CLUSTER_NETWORK

specify the (internal) cluster network

--public-network PUBLIC_NETWORK

specify the public network for a cluster

[cephadm@ceph-admin ceph-cluster]$

Tip: ceph-deploy new only needs the hostname of the initial monitor node(s), provided those hostnames resolve correctly.

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy new ceph-mon01

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy new ceph-mon01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] func : <function new at 0x7f0660799ed8>

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f065ff11b48>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] ssh_copykey : True

[ceph_deploy.cli][INFO ] mon : ['ceph-mon01']

[ceph_deploy.cli][INFO ] public_network : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster_network : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] fsid : None

[ceph_deploy.new][DEBUG ] Creating new cluster named ceph

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph-mon01][DEBUG ] connected to host: ceph-admin.ilinux.io

[ceph-mon01][INFO ] Running command: ssh -CT -o BatchMode=yes ceph-mon01

[ceph-mon01][DEBUG ] connection detected need for sudo

[ceph-mon01][DEBUG ] connected to host: ceph-mon01

[ceph-mon01][DEBUG ] detect platform information from remote host

[ceph-mon01][DEBUG ] detect machine type

[ceph-mon01][DEBUG ] find the location of an executable

[ceph-mon01][INFO ] Running command: sudo /usr/sbin/ip link show

[ceph-mon01][INFO ] Running command: sudo /usr/sbin/ip addr show

[ceph-mon01][DEBUG ] IP addresses found: [u'172.16.30.71', u'192.168.0.71']

[ceph_deploy.new][DEBUG ] Resolving host ceph-mon01

[ceph_deploy.new][DEBUG ] Monitor ceph-mon01 at 192.168.0.71

[ceph_deploy.new][DEBUG ] Monitor initial members are ['ceph-mon01']

[ceph_deploy.new][DEBUG ] Monitor addrs are ['192.168.0.71']

[ceph_deploy.new][DEBUG ] Creating a random mon key...

[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...

[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...

[cephadm@ceph-admin ceph-cluster]$

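Tip: the public network can be specified on the command line with --public-network, and the cluster network with --cluster-network. Alternatively, generate the configuration file first and edit it afterwards.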

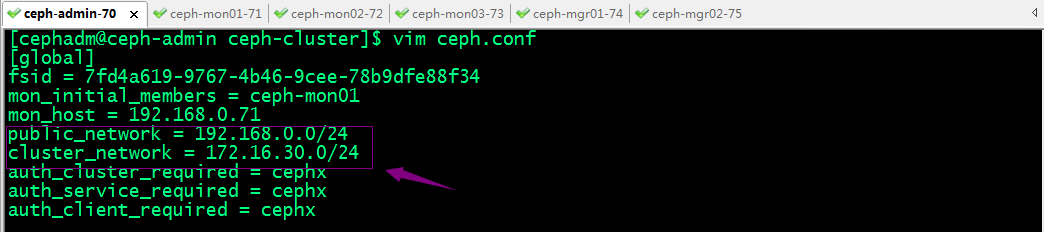

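3. Edit the configuration file to specify the cluster's public network and cluster network

Tip: edit the generated ceph.conf and set two options in the [global] section. Set public network to the subnet of the addresses used for client-facing traffic, and cluster network to the subnet of the addresses used for traffic between cluster nodes.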
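The edit can also be scripted. Below is a minimal POSIX shell sketch. It assumes the ceph.conf generated by ceph-deploy new contains only a [global] section, so appending at the end of the file places the new keys in [global]. Ceph treats spaces and underscores in option names as equivalent. add_networks is a name introduced here for illustration.

```shell
#!/bin/sh
# add_networks CONF PUBLIC CLUSTER: append the public/cluster network settings to CONF.
# Appending lands in [global] only because the freshly generated file has no other section;
# refuses to run if either setting is already present, to avoid duplicate keys.
add_networks() {
    conf=$1 public=$2 cluster=$3
    if grep -Eq '^[[:space:]]*(public|cluster)[ _]network' "$conf"; then
        echo "network settings already present in $conf" >&2
        return 1
    fi
    printf 'public network = %s\ncluster network = %s\n' "$public" "$cluster" >> "$conf"
}
```

In ~/ceph-cluster on the admin host, run add_networks ceph.conf 192.168.0.0/24 172.16.30.0/24. The equivalent one-step alternative is to pass the networks when creating the cluster: ceph-deploy new --public-network 192.168.0.0/24 --cluster-network 172.16.30.0/24 ceph-mon01 (both options appear in the help output above).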

4. Install Ceph on the cluster nodes

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy install ceph-mon01 ceph-mon02 ceph-mon03 ceph-mgr01 ceph-mgr02

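Tip: ceph-deploy connects to each Ceph node remotely to install packages and perform similar operations, so we only need to tell it which hosts to install on.

To install the Ceph packages on a cluster host by hand instead, run:

yum install ceph ceph-radosgw

Tip: this assumes the host's base environment is already set up: iptables/firewalld disabled, time synchronized, passwordless SSH, SELinux disabled, and so on. Above all, the Ceph and EPEL yum repositories must be configured correctly.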

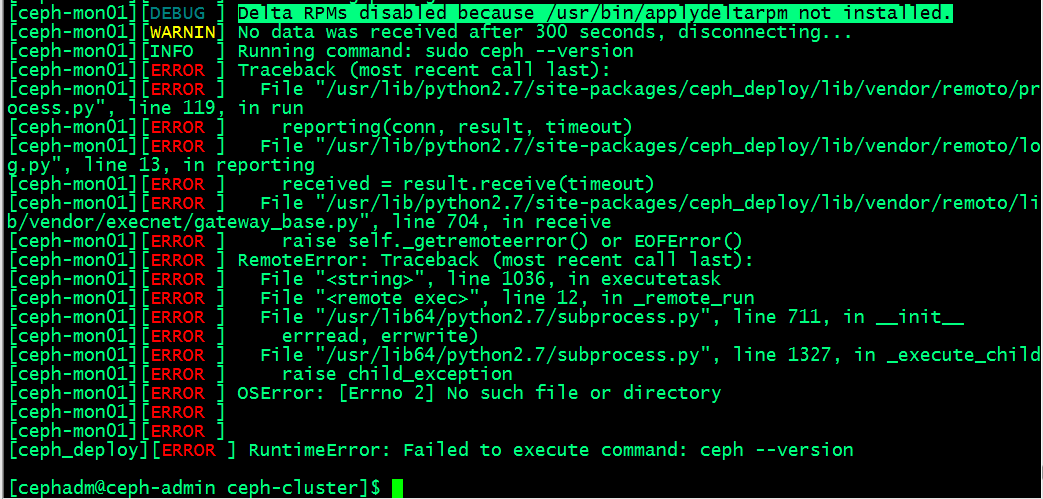

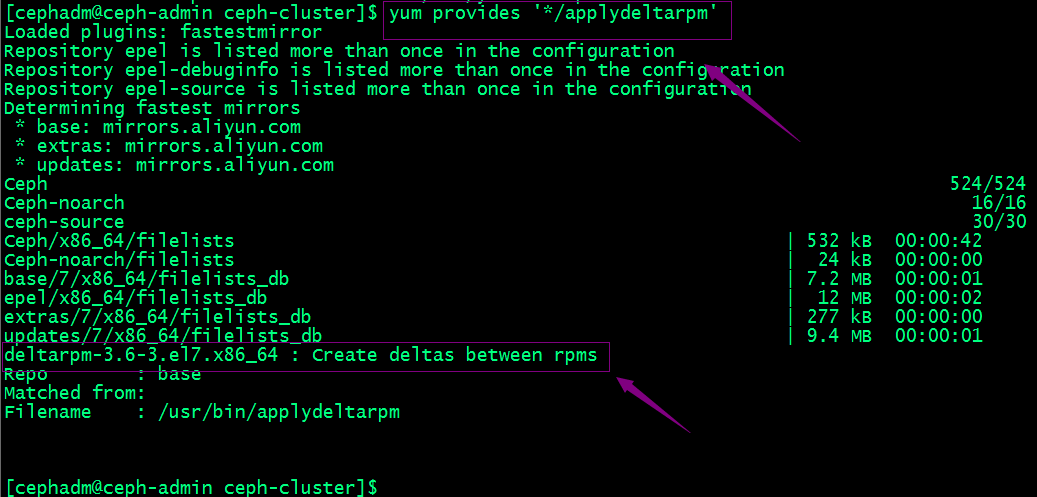

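Tip: the error says that applydeltarpm is not installed.

Find the package that provides applydeltarpm

Install the deltarpm package on every cluster node to fix the error above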

yum install -y deltarpm-3.6-3.el7.x86_64

Run the ceph-deploy installation from the admin host again

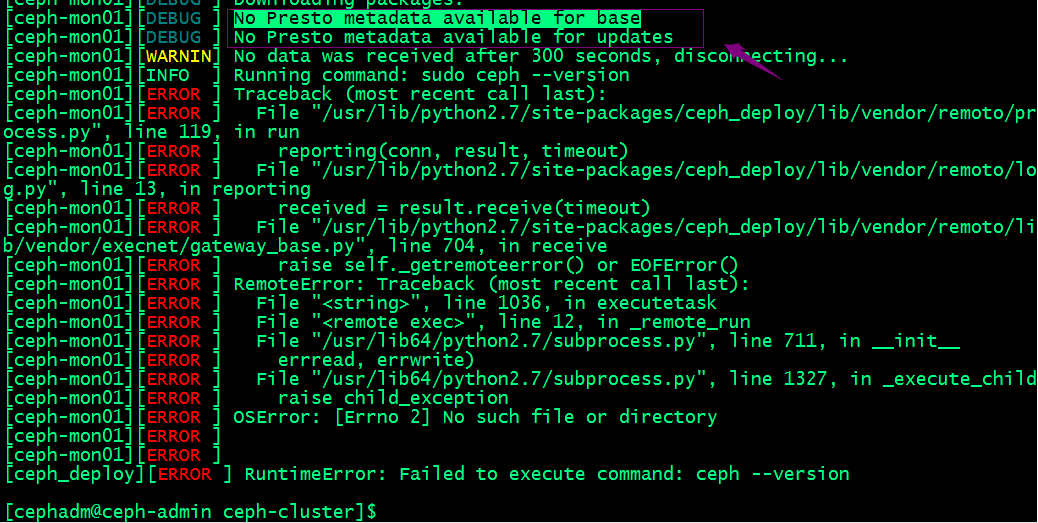

Tip: this time the error is that no presto metadata is available. The fix is to clear all yum caches and rebuild them.

yum clean all && yum makecache

Tip: run both fixes above on every cluster node.
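Both node-side fixes can be pushed from the admin host before retrying the installation. Below is a minimal POSIX shell sketch. It assumes cephadm's passwordless SSH and sudo are already in place. It installs the generic deltarpm package rather than the exact version named above, so yum picks whatever version the repositories offer. fix_yum, install_all and DRY_RUN are names introduced here for illustration.

```shell
#!/bin/sh
# DRY_RUN=1 prints each command instead of running it (a switch added for this sketch)
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# fix_yum HOST: apply both fixes from this section on HOST through cephadm's passwordless sudo
fix_yum() {
    host=$1
    # deltarpm provides applydeltarpm, the first missing piece reported above
    run ssh "cephadm@$host" "sudo yum install -y deltarpm"
    # Rebuild the yum metadata cache, the fix used above for the missing presto metadata
    run ssh "cephadm@$host" "sudo yum clean all && sudo yum makecache"
}

# install_all HOST...: fix yum on every host, then rerun the ceph-deploy installation
install_all() {
    for h in "$@"; do
        fix_yum "$h" || return 1
    done
    run ceph-deploy install "$@"
}
```

From ~/ceph-cluster on the admin host: install_all ceph-mon01 ceph-mon02 ceph-mon03 ceph-mgr01 ceph-mgr02.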

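Run the ceph-deploy installation from the admin host again

Tip: if the Ceph version is printed at the end, all the specified nodes have the packages the Ceph cluster needs.

5. Configure the initial MON node and gather all keys

Check the help for ceph-deploy mon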

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy mon --help

usage: ceph-deploy mon [-h] {add,create,create-initial,destroy} ...

Ceph MON Daemon management

positional arguments:

{add,create,create-initial,destroy}

add Add a monitor to an existing cluster:

ceph-deploy mon add node1

Or:

ceph-deploy mon add --address 192.168.1.10 node1

If the section for the monitor exists and defines a `mon addr` that

will be used, otherwise it will fallback by resolving the hostname to an

IP. If `--address` is used it will override all other options.

create Deploy monitors by specifying them like:

ceph-deploy mon create node1 node2 node3

If no hosts are passed it will default to use the

`mon initial members` defined in the configuration.

create-initial Will deploy for monitors defined in `mon initial

members`, wait until they form quorum and then

gatherkeys, reporting the monitor status along the

process. If monitors don't form quorum the command

will eventually time out.

destroy Completely remove Ceph MON from remote host(s)

optional arguments:

-h, --help show this help message and exit

[cephadm@ceph-admin ceph-cluster]$

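Tip: the four subcommands work as follows:

- add adds a monitor to an existing cluster.
- create deploys monitors on the given hosts (or, with no hosts, on those listed in mon initial members in the configuration).
- create-initial deploys the monitors listed in mon initial members, waits for them to form a quorum, and then gathers the keys.
- destroy completely removes a monitor from the given host(s).

Initialize the mon node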

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy mon create-initial

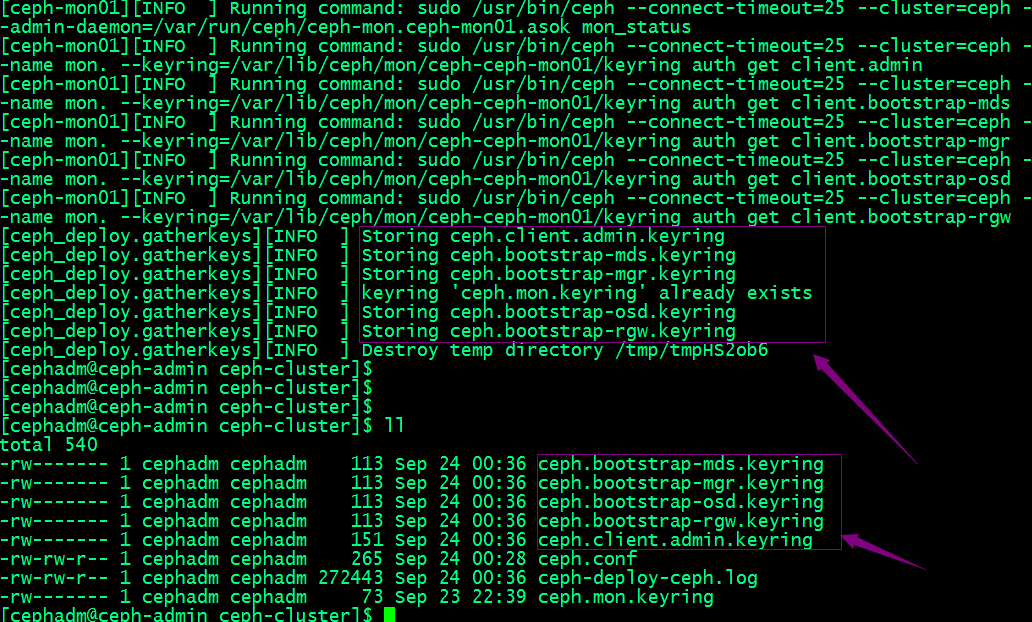

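Tip: the output shows that the command reads the mon nodes from the current configuration file and initializes them. Since we passed only ceph-mon01 to new, only mon01 is initialized here. The command also generates two kinds of keyrings in the current directory: the bootstrap keyrings for mds, mgr, osd, and rgw, and the admin keyring that clients use to connect to the Ceph cluster.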
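Before moving on, it is cheap to confirm that the expected keyrings are in the working directory. ceph.mon.keyring is written earlier by ceph-deploy new; the rest come from mon create-initial. Below is a minimal POSIX shell sketch. The file list reflects what ceph-deploy 2.0.1 normally produces and should be treated as an assumption for other versions. check_keyrings is a name introduced here for illustration.

```shell
#!/bin/sh
# check_keyrings [DIR]: verify that every expected keyring exists and is non-empty in DIR
# (default: the current directory, i.e. ~/ceph-cluster); returns non-zero if any is missing
check_keyrings() {
    dir=${1:-.}
    missing=0
    for f in ceph.client.admin.keyring ceph.mon.keyring \
             ceph.bootstrap-mds.keyring ceph.bootstrap-mgr.keyring \
             ceph.bootstrap-osd.keyring ceph.bootstrap-rgw.keyring; do
        if [ ! -s "$dir/$f" ]; then
            echo "missing or empty: $f" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Run check_keyrings inside ~/ceph-cluster. If it reports missing files, ceph-deploy gatherkeys ceph-mon01 (listed in the help output above) can fetch them again.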

6. Copy the configuration file and the admin key to the cluster nodes

Check the help for ceph-deploy admin

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy admin --help

usage: ceph-deploy admin [-h] HOST [HOST ...]

Push configuration and client.admin key to a remote host.

positional arguments:

HOST host to configure for Ceph administration

optional arguments:

-h, --help show this help message and exit

[cephadm@ceph-admin ceph-cluster]$

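Tip: ceph-deploy admin pushes the configuration file and the client admin keyring to the given cluster hosts. Afterwards, running the ceph command there no longer requires specifying the MON address and ceph.client.admin.keyring explicitly every time.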

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy admin ceph-mon01 ceph-mon02 ceph-mon03 ceph-stor04 ceph-stor05

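Tip: to push the configuration and admin key, list the target cluster hosts after the command; their hostnames must resolve.

One more point: every node in the cluster needs the configuration file, but the admin key usually only belongs on hosts where administrative commands will be run. That is what ceph-deploy config is for: it pushes only the configuration file, not the admin key.

Check the help for ceph-deploy config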

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy config --help

usage: ceph-deploy config [-h] {push,pull} ...

Copy ceph.conf to/from remote host(s)

positional arguments:

{push,pull}

push push Ceph config file to one or more remote hosts

pull pull Ceph config file from one or more remote hosts

optional arguments:

-h, --help show this help message and exit

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy config push --help

usage: ceph-deploy config push [-h] HOST [HOST ...]

positional arguments:

HOST host(s) to push the config file to

optional arguments:

-h, --help show this help message and exit

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy config pull --help

usage: ceph-deploy config pull [-h] HOST [HOST ...]

positional arguments:

HOST host(s) to pull the config file from

optional arguments:

-h, --help show this help message and exit

[cephadm@ceph-admin ceph-cluster]$

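Tip: ceph-deploy config has two subcommands. push sends the local configuration to the given hosts, and pull fetches the configuration from a remote host to the local machine.

Verify: check on mon01 that both the configuration file and the admin keyring were pushed

Tip: both files have arrived, but the cephadm user has no read permission on the admin keyring.

Grant the cephadm user read permission on the admin keyring

Tip: the permission change above has to be made on every node.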
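In the original, the permission command appears only in a screenshot. One common approach is a POSIX ACL, which gives cephadm read access without changing the keyring's owner or mode.

Below is a sketch of that approach. It assumes setfacl/getfacl (from the acl package) exist on the nodes and that cephadm already has passwordless SSH and sudo. grant_keyring_read, KEYRING and DRY_RUN are names introduced here for illustration.

```shell
#!/bin/sh
# DRY_RUN=1 prints each command instead of running it (a switch added for this sketch)
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

KEYRING=/etc/ceph/ceph.client.admin.keyring

# grant_keyring_read HOST...: add a read ACL entry for cephadm on the admin keyring
# on each host, then print the resulting ACL so the change can be checked
grant_keyring_read() {
    for h in "$@"; do
        run ssh "cephadm@$h" "sudo setfacl -m u:cephadm:r $KEYRING && getfacl $KEYRING" || return 1
    done
}
```

Usage: grant_keyring_read ceph-mon01 ceph-mon02 ceph-mon03 ceph-mgr01 ceph-mgr02. ceph-stor04 and ceph-stor05 are the same machines as the two mgr hosts. Afterwards, ceph -s run as cephadm on those hosts should no longer fail on keyring permissions.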

7. Configure the Manager nodes and start ceph-mgr (Luminous and later only)

Check the help for ceph-deploy mgr

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy mgr --help

usage: ceph-deploy mgr [-h] {create} ...

Ceph MGR daemon management

positional arguments:

{create}

create Deploy Ceph MGR on remote host(s)

optional arguments:

-h, --help show this help message and exit

[cephadm@ceph-admin ceph-cluster]$

Tip: the mgr subcommand has only one action, create, which deploys mgr daemons.

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy mgr create ceph-mgr01 ceph-mgr02

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy mgr create ceph-mgr01 ceph-mgr02

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] mgr : [('ceph-mgr01', 'ceph-mgr01'), ('ceph-mgr02', 'ceph-mgr02')]

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f58a0514950>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mgr at 0x7f58a0d8d230>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts ceph-mgr01:ceph-mgr01 ceph-mgr02:ceph-mgr02

[ceph-mgr01][DEBUG ] connection detected need for sudo

[ceph-mgr01][DEBUG ] connected to host: ceph-mgr01

[ceph-mgr01][DEBUG ] detect platform information from remote host

[ceph-mgr01][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to ceph-mgr01

[ceph-mgr01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-mgr01][WARNIN] mgr keyring does not exist yet, creating one

[ceph-mgr01][DEBUG ] create a keyring file

[ceph-mgr01][DEBUG ] create path recursively if it doesn't exist

[ceph-mgr01][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.ceph-mgr01 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-ceph-mgr01/keyring

[ceph-mgr01][INFO ] Running command: sudo systemctl enable ceph-mgr@ceph-mgr01

[ceph-mgr01][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@ceph-mgr01.service to /usr/lib/systemd/system/ceph-mgr@.service.

[ceph-mgr01][INFO ] Running command: sudo systemctl start ceph-mgr@ceph-mgr01

[ceph-mgr01][INFO ] Running command: sudo systemctl enable ceph.target

[ceph-mgr02][DEBUG ] connection detected need for sudo

[ceph-mgr02][DEBUG ] connected to host: ceph-mgr02

[ceph-mgr02][DEBUG ] detect platform information from remote host

[ceph-mgr02][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to ceph-mgr02

[ceph-mgr02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-mgr02][WARNIN] mgr keyring does not exist yet, creating one

[ceph-mgr02][DEBUG ] create a keyring file

[ceph-mgr02][DEBUG ] create path recursively if it doesn't exist

[ceph-mgr02][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.ceph-mgr02 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-ceph-mgr02/keyring

[ceph-mgr02][INFO ] Running command: sudo systemctl enable ceph-mgr@ceph-mgr02

[ceph-mgr02][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@ceph-mgr02.service to /usr/lib/systemd/system/ceph-mgr@.service.

[ceph-mgr02][INFO ] Running command: sudo systemctl start ceph-mgr@ceph-mgr02

[ceph-mgr02][INFO ] Running command: sudo systemctl enable ceph.target

[cephadm@ceph-admin ceph-cluster]$

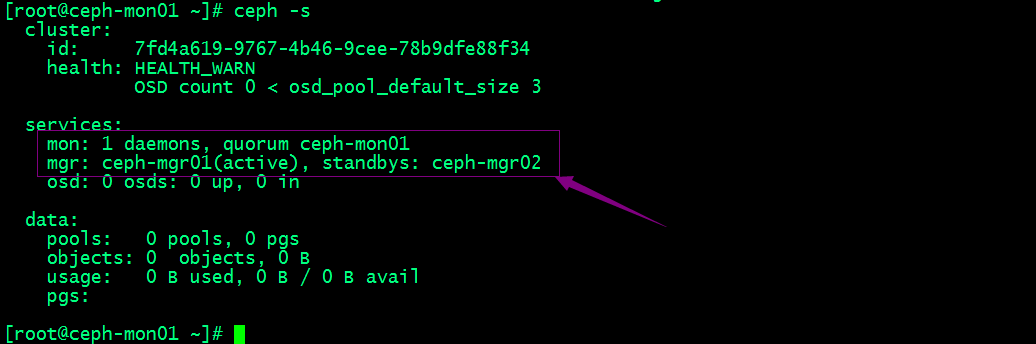

在集群节点上执行ceph -s来查看现在ceph集群的状态

提示:可以看到现在集群有一个mon节点和两个mgr节点;mgr01处于当前活跃状态,mgr02处于备用状态;对应没有osd,所以集群状态显示health warning;

向RADOS集群添加OSD

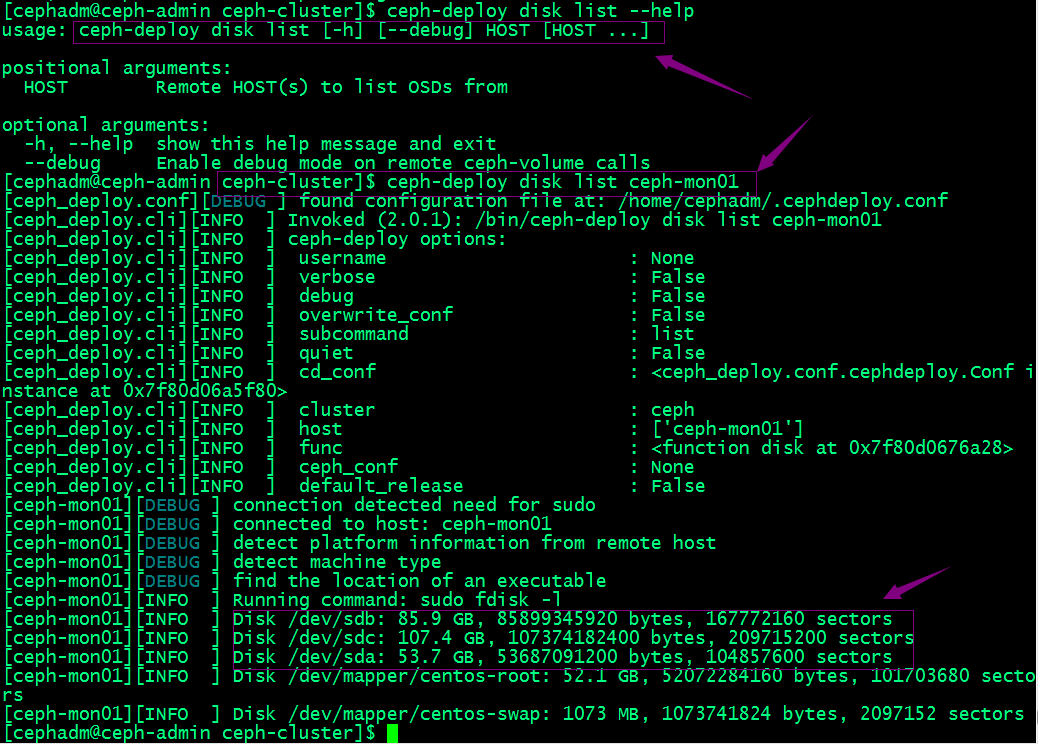

列出并擦净磁盘

查看ceph-deploy disk命令的帮助

提示:ceph-deploy disk命令有两个子命令,list表示列出对应主机上的磁盘;zap表示擦净对应主机上的磁盘;

擦净mon01的sdb和sdc

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy disk zap --help

usage: ceph-deploy disk zap [-h] [--debug] [HOST] DISK [DISK ...]

positional arguments:

HOST Remote HOST(s) to connect

DISK Disk(s) to zap

optional arguments:

-h, --help show this help message and exit

--debug Enable debug mode on remote ceph-volume calls

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy disk zap ceph-mon01 /dev/sdb /dev/sdc

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy disk zap ceph-mon01 /dev/sdb /dev/sdc

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : zap

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f35f8500f80>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : ceph-mon01

[ceph_deploy.cli][INFO ] func : <function disk at 0x7f35f84d1a28>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] disk : ['/dev/sdb', '/dev/sdc']

[ceph_deploy.osd][DEBUG ] zapping /dev/sdb on ceph-mon01

[ceph-mon01][DEBUG ] connection detected need for sudo

[ceph-mon01][DEBUG ] connected to host: ceph-mon01

[ceph-mon01][DEBUG ] detect platform information from remote host

[ceph-mon01][DEBUG ] detect machine type

[ceph-mon01][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph-mon01][DEBUG ] zeroing last few blocks of device

[ceph-mon01][DEBUG ] find the location of an executable

[ceph-mon01][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/sdb

[ceph-mon01][WARNIN] --> Zapping: /dev/sdb

[ceph-mon01][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table

[ceph-mon01][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/sdb bs=1M count=10 conv=fsync

[ceph-mon01][WARNIN] stderr: 10+0 records in

[ceph-mon01][WARNIN] 10+0 records out

[ceph-mon01][WARNIN] stderr: 10485760 bytes (10 MB) copied, 0.0721997 s, 145 MB/s

[ceph-mon01][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdb>

[ceph_deploy.osd][DEBUG ] zapping /dev/sdc on ceph-mon01

[ceph-mon01][DEBUG ] connection detected need for sudo

[ceph-mon01][DEBUG ] connected to host: ceph-mon01

[ceph-mon01][DEBUG ] detect platform information from remote host

[ceph-mon01][DEBUG ] detect machine type

[ceph-mon01][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph-mon01][DEBUG ] zeroing last few blocks of device

[ceph-mon01][DEBUG ] find the location of an executable

[ceph-mon01][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/sdc

[ceph-mon01][WARNIN] --> Zapping: /dev/sdc

[ceph-mon01][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table

[ceph-mon01][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/sdc bs=1M count=10 conv=fsync

[ceph-mon01][WARNIN] stderr: 10+0 records in

[ceph-mon01][WARNIN] 10+0 records out

[ceph-mon01][WARNIN] 10485760 bytes (10 MB) copied

[ceph-mon01][WARNIN] stderr: , 0.0849861 s, 123 MB/s

[ceph-mon01][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdc>

[cephadm@ceph-admin ceph-cluster]$

Tip: to zap disks, give the host followed by the devices. If a device held data before, you may need to run "ceph-volume lvm zap --destroy {DEVICE}" as root on that node instead.

Add OSDs

Check the help for ceph-deploy osd

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy osd --help

usage: ceph-deploy osd [-h] {list,create} ...

Create OSDs from a data disk on a remote host:

ceph-deploy osd create {node} --data /path/to/device

For bluestore, optional devices can be used::

ceph-deploy osd create {node} --data /path/to/data --block-db /path/to/db-device

ceph-deploy osd create {node} --data /path/to/data --block-wal /path/to/wal-device

ceph-deploy osd create {node} --data /path/to/data --block-db /path/to/db-device --block-wal /path/to/wal-device

For filestore, the journal must be specified, as well as the objectstore::

ceph-deploy osd create {node} --filestore --data /path/to/data --journal /path/to/journal

For data devices, it can be an existing logical volume in the format of:

vg/lv, or a device. For other OSD components like wal, db, and journal, it

can be logical volume (in vg/lv format) or it must be a GPT partition.

positional arguments:

{list,create}

list List OSD info from remote host(s)

create Create new Ceph OSD daemon by preparing and activating a

device

optional arguments:

-h, --help show this help message and exit

[cephadm@ceph-admin ceph-cluster]$

Tip: ceph-deploy osd has two subcommands: list shows the OSDs on a remote host, and create prepares and activates a device as a new Ceph OSD daemon.

Check the help for ceph-deploy osd create

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy osd create --help

usage: ceph-deploy osd create [-h] [--data DATA] [--journal JOURNAL]

[--zap-disk] [--fs-type FS_TYPE] [--dmcrypt]

[--dmcrypt-key-dir KEYDIR] [--filestore]

[--bluestore] [--block-db BLOCK_DB]

[--block-wal BLOCK_WAL] [--debug]

[HOST]

positional arguments:

HOST Remote host to connect

optional arguments:

-h, --help show this help message and exit

--data DATA The OSD data logical volume (vg/lv) or absolute path

to device

--journal JOURNAL Logical Volume (vg/lv) or path to GPT partition

--zap-disk DEPRECATED - cannot zap when creating an OSD

--fs-type FS_TYPE filesystem to use to format DEVICE (xfs, btrfs)

--dmcrypt use dm-crypt on DEVICE

--dmcrypt-key-dir KEYDIR

directory where dm-crypt keys are stored

--filestore filestore objectstore

--bluestore bluestore objectstore

--block-db BLOCK_DB bluestore block.db path

--block-wal BLOCK_WAL

bluestore block.wal path

--debug Enable debug mode on remote ceph-volume calls

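[cephadm@ceph-admin ceph-cluster]$

Tip: create lets you specify the data device, a journal device (filestore), and BlueStore's block.db and block.wal devices.

Add /dev/sdb on ceph-mon01 to the cluster as an OSD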

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy osd create ceph-mon01 --data /dev/sdb

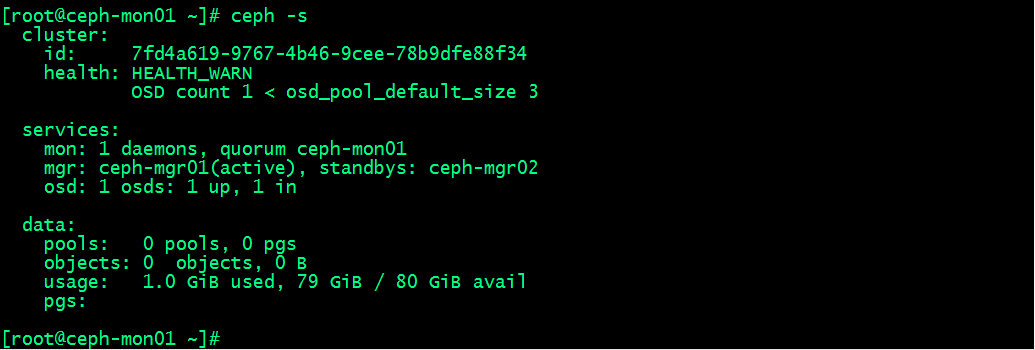

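Check the cluster status

Tip: the cluster now has one OSD up with 80 GB of storage, so the OSD we just added works. OSDs on the other hosts are added the same way: zap the disk first, then add it as an OSD.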
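The remaining OSDs follow the same zap-then-create pattern, so it can be wrapped in a small function. Below is a minimal POSIX shell sketch, run as cephadm in ~/ceph-cluster. It uses only the two ceph-deploy forms shown above. add_osds and DRY_RUN are names introduced here for illustration.

Zapping destroys everything on a disk. Never pass a disk that already backs an OSD, such as /dev/sdb on ceph-mon01 from the step above.

```shell
#!/bin/sh
# DRY_RUN=1 prints each command instead of running it (a switch added for this sketch)
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# add_osds HOST DISK...: zap the listed disks on HOST, then create one OSD per disk.
# WARNING: zap wipes the disks; never list a disk that already holds an OSD.
add_osds() {
    host=$1
    shift
    run ceph-deploy disk zap "$host" "$@" || return 1
    for d in "$@"; do
        run ceph-deploy osd create "$host" --data "$d" || return 1
    done
}
```

This sketch assumes every node has two data disks, /dev/sdb and /dev/sdc, which matches the ten OSDs (two per host) that appear later in this post. Under that assumption, the remaining OSDs would be added with:

- add_osds ceph-mon01 /dev/sdc
- add_osds ceph-mon02 /dev/sdb /dev/sdc, and the same for ceph-mon03, ceph-mgr01 and ceph-mgr02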

List the OSD information on a host

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy osd list ceph-mon01

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy osd list ceph-mon01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : list

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f01148f9128>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : ['ceph-mon01']

[ceph_deploy.cli][INFO ] func : <function osd at 0x7f011493d9b0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph-mon01][DEBUG ] connection detected need for sudo

[ceph-mon01][DEBUG ] connected to host: ceph-mon01

[ceph-mon01][DEBUG ] detect platform information from remote host

[ceph-mon01][DEBUG ] detect machine type

[ceph-mon01][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.osd][DEBUG ] Listing disks on ceph-mon01...

[ceph-mon01][DEBUG ] find the location of an executable

[ceph-mon01][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm list

[ceph-mon01][DEBUG ]

[ceph-mon01][DEBUG ]

[ceph-mon01][DEBUG ] ====== osd.0 =======

[ceph-mon01][DEBUG ]

[ceph-mon01][DEBUG ] [block] /dev/ceph-56cdba71-749f-4c01-8364-f5bdad0b8f8d/osd-block-538baff0-ed25-4e3f-9ed7-f228a7ca0086

[ceph-mon01][DEBUG ]

[ceph-mon01][DEBUG ] block device /dev/ceph-56cdba71-749f-4c01-8364-f5bdad0b8f8d/osd-block-538baff0-ed25-4e3f-9ed7-f228a7ca0086

[ceph-mon01][DEBUG ] block uuid 40cRBg-53ZO-Dbho-wWo6-gNcJ-ZJJi-eZC6Vt

[ceph-mon01][DEBUG ] cephx lockbox secret

[ceph-mon01][DEBUG ] cluster fsid 7fd4a619-9767-4b46-9cee-78b9dfe88f34

[ceph-mon01][DEBUG ] cluster name ceph

[ceph-mon01][DEBUG ] crush device class None

[ceph-mon01][DEBUG ] encrypted 0

[ceph-mon01][DEBUG ] osd fsid 538baff0-ed25-4e3f-9ed7-f228a7ca0086

[ceph-mon01][DEBUG ] osd id 0

[ceph-mon01][DEBUG ] type block

[ceph-mon01][DEBUG ] vdo 0

[ceph-mon01][DEBUG ] devices /dev/sdb

[cephadm@ceph-admin ceph-cluster]$

Tip: at this point all components of the RADOS cluster have been deployed.

Managing OSDs: viewing OSD information with the ceph command

1. Check OSD status

[root@ceph-mon01 ~]# ceph osd stat

10 osds: 10 up, 10 in; epoch: e56

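Tip: osds is the total number of OSDs in the cluster, up is the number of OSDs that are running, and in is the number of OSDs currently in the cluster (eligible to hold data).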
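A healthy cluster shows the same number in all three fields, which makes a simple scripted check possible. Below is a minimal POSIX shell sketch that parses the Mimic text format shown above. The text format can differ between releases, so for serious scripting "ceph osd stat -f json" is sturdier. osds_all_up_in is a name introduced here for illustration.

```shell
#!/bin/sh
# osds_all_up_in: read "ceph osd stat" text on stdin; succeed only if the total,
# up and in counts are equal (each count is the word just before "osds", "up", "in")
osds_all_up_in() {
    awk '{
        for (i = 2; i <= NF; i++) {
            if ($i ~ /^osds/) total = $(i - 1)
            else if ($i ~ /^up/) up = $(i - 1)
            else if ($i ~ /^in/) n_in = $(i - 1)
        }
    }
    END {
        ok = (total != "" && total == up && total == n_in)
        exit (ok ? 0 : 1)
    }'
}
```

Usage: ceph osd stat | osds_all_up_in && echo "all OSDs up and in". After marking an OSD out, as in the removal steps below, the check fails until the counts match again.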

2. List OSD IDs

[root@ceph-mon01 ~]# ceph osd ls

0

1

2

3

4

5

6

7

8

9

[root@ceph-mon01 ~]#

3. View the OSD map

[root@ceph-mon01 ~]# ceph osd dump

epoch 56

fsid 7fd4a619-9767-4b46-9cee-78b9dfe88f34

created 2022-09-24 00:36:13.639715

modified 2022-09-24 02:29:38.086464

flags sortbitwise,recovery_deletes,purged_snapdirs

crush_version 25

full_ratio 0.95

backfillfull_ratio 0.9

nearfull_ratio 0.85

require_min_compat_client jewel

min_compat_client jewel

require_osd_release mimic

pool 1 'testpool' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 16 pgp_num 16 last_change 42 flags hashpspool stripe_width 0

max_osd 10

osd.0 up in weight 1 up_from 55 up_thru 0 down_at 0 last_clean_interval [0,0) 192.168.0.71:6800/52355 172.16.30.71:6800/52355 172.16.30.71:6801/52355 192.168.0.71:6801/52355 exists,up bf3649af-e3f4-41a2-a5ce-8f1a316d344e

osd.1 up in weight 1 up_from 9 up_thru 42 down_at 0 last_clean_interval [0,0) 192.168.0.71:6802/49913 172.16.30.71:6802/49913 172.16.30.71:6803/49913 192.168.0.71:6803/49913 exists,up 7293a12a-7b4e-4c86-82dc-0acc15c3349e

osd.2 up in weight 1 up_from 13 up_thru 42 down_at 0 last_clean_interval [0,0) 192.168.0.72:6800/48196 172.16.30.72:6800/48196 172.16.30.72:6801/48196 192.168.0.72:6801/48196 exists,up 96c437c5-8e82-4486-910f-9e98d195e4f9

osd.3 up in weight 1 up_from 17 up_thru 55 down_at 0 last_clean_interval [0,0) 192.168.0.72:6802/48679 172.16.30.72:6802/48679 172.16.30.72:6803/48679 192.168.0.72:6803/48679 exists,up 4659d2a9-09c7-49d5-bce0-4d2e65f5198c

osd.4 up in weight 1 up_from 21 up_thru 55 down_at 0 last_clean_interval [0,0) 192.168.0.73:6800/48122 172.16.30.73:6800/48122 172.16.30.73:6801/48122 192.168.0.73:6801/48122 exists,up de019aa8-3d2a-4079-a99e-ec2da2d4edb9

osd.5 up in weight 1 up_from 25 up_thru 55 down_at 0 last_clean_interval [0,0) 192.168.0.73:6802/48601 172.16.30.73:6802/48601 172.16.30.73:6803/48601 192.168.0.73:6803/48601 exists,up 119c8748-af3b-4ac4-ac74-6171c90c82cc

osd.6 up in weight 1 up_from 29 up_thru 55 down_at 0 last_clean_interval [0,0) 192.168.0.74:6801/58248 172.16.30.74:6800/58248 172.16.30.74:6801/58248 192.168.0.74:6802/58248 exists,up 08d8dd8b-cdfe-4338-83c0-b1e2b5c2a799

osd.7 up in weight 1 up_from 33 up_thru 55 down_at 0 last_clean_interval [0,0) 192.168.0.74:6803/58727 172.16.30.74:6802/58727 172.16.30.74:6803/58727 192.168.0.74:6804/58727 exists,up 9de6cbd0-bb1b-49e9-835c-3e714a867393

osd.8 up in weight 1 up_from 37 up_thru 42 down_at 0 last_clean_interval [0,0) 192.168.0.75:6800/48268 172.16.30.75:6800/48268 172.16.30.75:6801/48268 192.168.0.75:6801/48268 exists,up 63aaa0b8-4e52-4d74-82a8-fbbe7b48c837

osd.9 up in weight 1 up_from 41 up_thru 42 down_at 0 last_clean_interval [0,0) 192.168.0.75:6802/48751 172.16.30.75:6802/48751 172.16.30.75:6803/48751 192.168.0.75:6803/48751 exists,up 6bf3204a-b64c-4808-a782-434a93ac578c

[root@ceph-mon01 ~]#

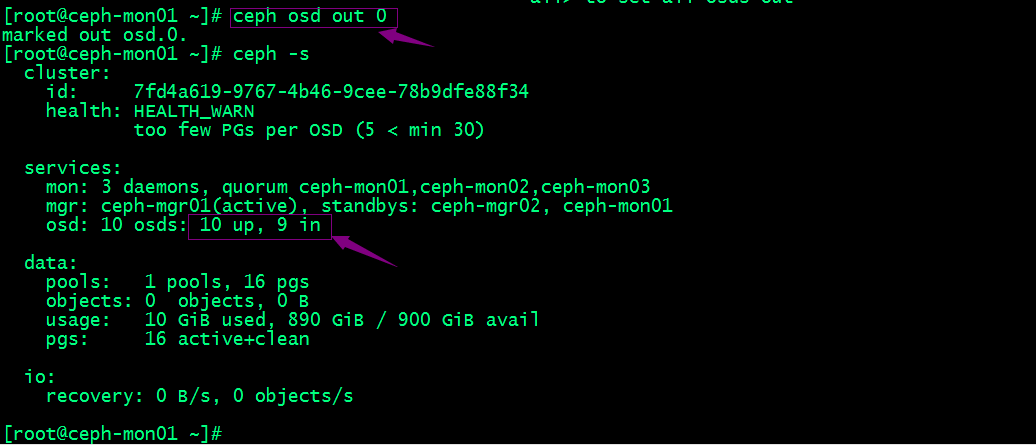

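Removing an OSD

1. Mark the device out

Tip: after osd.0 is marked out, the cluster status shows only 9 OSDs in.

2. Stop the daemon

Tip: stop the ceph-osd@{osd-num} service on the host that runs the OSD. Afterwards, the cluster status shows only 9 OSDs up.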

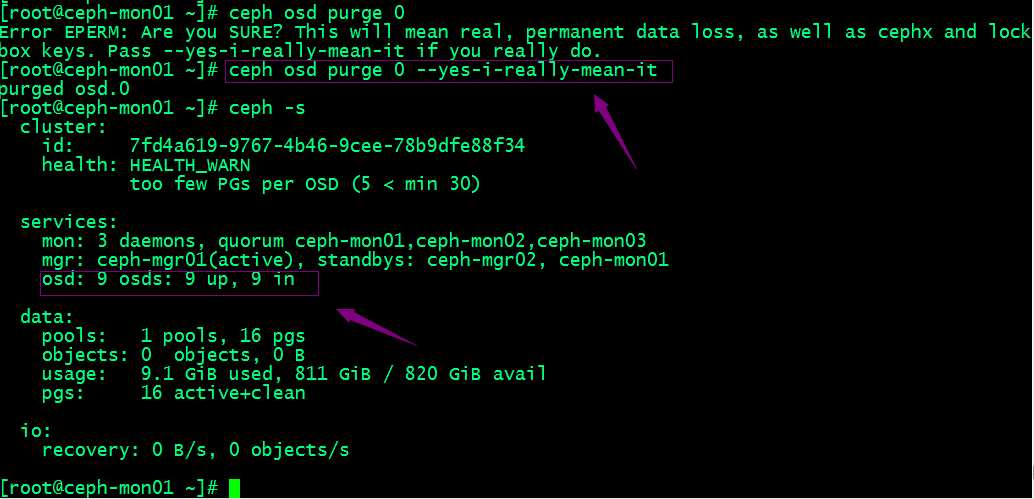

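3. Remove the device

Tip: after the OSD is removed, the cluster status lists only 9 OSDs. If ceph.conf contains an OSD section like the one below, the administrator should delete it by hand after removing the OSD.

[osd.1]

host = {hostname}

For releases before Luminous, however, the administrator has to remove the OSD manually with the following steps, in order:

1. Remove the device from the CRUSH map: ceph osd crush remove {name}

2. Delete the OSD's authentication key: ceph auth del osd.{osd-num}

3. Finally, remove the OSD: ceph osd rm {osd-num}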
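In the original, the commands for steps 1 to 3 appear only in screenshots. For Luminous and later, a sequence along the following lines is typical:

- ceph osd purge folds the three pre-Luminous steps listed above into one command.
- The wait loop on ceph osd safe-to-destroy follows the pattern in the upstream OSD replacement documentation.

The sketch assumes it runs where the admin keyring is readable and that the OSD's host is reachable as cephadm. remove_osd and DRY_RUN are names introduced here for illustration.

```shell
#!/bin/sh
# DRY_RUN=1 prints each command instead of running it (a switch added for this sketch)
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# remove_osd ID HOST: take osd.ID out of service and delete it (Luminous and later)
remove_osd() {
    id=$1
    host=$2
    # 1. Mark it out so its placement groups migrate to the remaining OSDs
    run ceph osd out "$id" || return 1
    # Wait until Ceph reports that destroying it can no longer lose data
    until run ceph osd safe-to-destroy "osd.$id"; do sleep 10; done
    # 2. Stop the daemon on the host that runs it
    run ssh "cephadm@$host" "sudo systemctl stop ceph-osd@$id" || return 1
    # 3. Remove it from the CRUSH map, delete its auth key and remove the OSD entry in one step
    run ceph osd purge "$id" --yes-i-really-mean-it
}
```

Usage: remove_osd 0 ceph-mon01 removes osd.0, which lives on ceph-mon01 according to the OSD dump above. Remember to delete any matching [osd.N] section from ceph.conf afterwards.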

Testing object upload and download

1. Create a storage pool with pg_num and pgp_num both set to 16

[root@ceph-mon01 ~]# ceph osd pool create testpool 16 16

pool 'testpool' created

[root@ceph-mon01 ~]# ceph osd pool ls

testpool

[root@ceph-mon01 ~]#

2. Upload a file to testpool

[root@ceph-mon01 ~]# rados put test /etc/issue -p testpool

[root@ceph-mon01 ~]# rados ls -p testpool

test

[root@ceph-mon01 ~]#

Tip: we uploaded /etc/issue to the testpool pool as an object named test, and the object now shows up in testpool, so the upload worked.

3. Find where an object in the pool is stored

[root@ceph-mon01 ~]# ceph osd map testpool test

osdmap e44 pool 'testpool' (1) object 'test' -> pg 1.40e8aab5 (1.5) -> up ([4,0,6], p4) acting ([4,0,6], p4)

[root@ceph-mon01 ~]#

Tip: the test object in testpool is stored on OSDs 4, 0, and 6, with osd.4 as the primary (p4).
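Part of this output can be checked by hand. Ceph first hashes the object name with the pool's object hash (rjenkins, per the pool line in the ceph osd dump output above) to get the raw value 0x40e8aab5. It then folds that value into one of the pool's 16 PGs with its "stable mod" rule, giving PG 1.5. Only the final step, PG to OSDs ([4,0,6]), is done by CRUSH, which is not reproduced here.

The POSIX shell sketch below reimplements just the folding step, taking the hash from the output rather than computing it. pg_mask and pg_of are names introduced here for illustration.

```shell
#!/bin/sh
# pg_mask N: smallest value of the form 2^k - 1 that covers 0..N-1 (the pg_num mask)
pg_mask() {
    m=1
    while [ "$m" -lt "$1" ]; do m=$((m * 2)); done
    echo $((m - 1))
}

# pg_of POOL_ID HASH PG_NUM: fold a raw object hash into a PG id such as "1.5".
# Stable mod: keep the low bits selected by the mask; if that lands at or past
# PG_NUM, drop the mask's top bit instead. PG numbers are printed in hex, as Ceph does.
pg_of() {
    pool=$1
    hash=$(($2))
    pg_num=$3
    mask=$(pg_mask "$pg_num")
    if [ $((hash & mask)) -lt "$pg_num" ]; then
        ps=$((hash & mask))
    else
        ps=$((hash & (mask >> 1)))
    fi
    printf '%s.%x\n' "$pool" "$ps"
}
```

pg_of 1 0x40e8aab5 16 prints 1.5, matching the "(1.5)" in the output above. When pg_num is a power of two, as here, the rule reduces to keeping the hash's low bits. The second branch only matters for other pg_num values; for example, pg_of 1 0xd 12 prints 1.5.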

4. Download the object to a local file

[root@ceph-mon01 ~]# ls

[root@ceph-mon01 ~]# rados get test test-down -p testpool

[root@ceph-mon01 ~]# ls

test-down

[root@ceph-mon01 ~]# cat test-down

\S

Kernel \r on an \m

[root@ceph-mon01 ~]#

5. Delete the object

[root@ceph-mon01 ~]# rados rm test -p testpool

[root@ceph-mon01 ~]# rados ls -p testpool

[root@ceph-mon01 ~]#
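Steps 2 to 5 can be folded into a quick self-check that an object reads back byte-for-byte identical to what was written. This is a minimal sketch, assuming the `rados` CLI and a usable keyring on the node (as on ceph-mon01 above). `rados_roundtrip` is a hypothetical helper. It removes the object on success and leaves it in place on a mismatch so it can be inspected.

```bash
#!/usr/bin/env bash
# Write a local file as a RADOS object, read it back, compare, then clean up.
# Usage: rados_roundtrip <pool> <object-name> <local-file>
rados_roundtrip() {
  local pool="$1" obj="$2" src="$3"
  local copy
  copy="$(mktemp)"
  rados -p "$pool" put "$obj" "$src" || { rm -f "$copy"; return 1; }
  rados -p "$pool" get "$obj" "$copy" || { rm -f "$copy"; return 1; }
  if ! cmp -s "$src" "$copy"; then
    # Keep the object in the pool for inspection.
    echo "MISMATCH: $obj differs from $src" >&2
    rm -f "$copy"
    return 1
  fi
  echo "OK: $obj round-trips intact"
  rados -p "$pool" rm "$obj"
  rm -f "$copy"
}

# Example: rados_roundtrip testpool test /etc/issue
```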

6. Delete the pool

[root@ceph-mon01 ~]# ceph osd pool rm testpool

Error EPERM: WARNING: this will *PERMANENTLY DESTROY* all data stored in pool testpool. If you are *ABSOLUTELY CERTAIN* that is what you want, pass the pool name *twice*, followed by --yes-i-really-really-mean-it.

[root@ceph-mon01 ~]# ceph osd pool rm testpool --yes-i-really-really-mean-it.

Error EPERM: WARNING: this will *PERMANENTLY DESTROY* all data stored in pool testpool. If you are *ABSOLUTELY CERTAIN* that is what you want, pass the pool name *twice*, followed by --yes-i-really-really-mean-it.

[root@ceph-mon01 ~]#

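Tip: because deleting a pool permanently destroys its data, Ceph refuses such operations by default. The error above asks for the pool name to be given twice; the second attempt still fails because the name was passed only once (and a stray period was appended to the flag). Even with the correct syntax, the deletion is only allowed after the administrator enables pool deletion (the mon_allow_pool_delete option, for example in ceph.conf).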
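Below is a sketch of a deletion that passes both of Ceph's checks: the pool name given twice with the exact `--yes-i-really-really-mean-it` flag, and the `mon_allow_pool_delete` option enabled. The option can also be set under `[mon]` in ceph.conf, as the tip suggests (pushed with `ceph-deploy --overwrite-conf config push <hosts>` and followed by a monitor restart); this sketch instead assumes Mimic or later, where `ceph config set` changes it at runtime. The `run`/`delete_pool` names and `DRY_RUN` are hypothetical, and the script turns the option back off afterwards.

```bash
#!/usr/bin/env bash
# Sketch: delete a pool, temporarily enabling pool deletion.
# DRY_RUN=1 prints the commands instead of running them.

run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

delete_pool() {
  local pool="$1" rc=0
  # Allow pool deletion at runtime (centralized config, Mimic and later).
  run ceph config set mon mon_allow_pool_delete true || return
  # The pool name must be given twice, and the flag must match exactly.
  run ceph osd pool rm "$pool" "$pool" --yes-i-really-really-mean-it || rc=$?
  # Re-enable the safety switch even if the deletion failed.
  run ceph config set mon mon_allow_pool_delete false
  return "$rc"
}

# Example: DRY_RUN=1 delete_pool testpool
```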

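Expanding the Ceph cluster

Adding mon nodes

A Ceph storage cluster needs at least one Ceph Monitor and one Ceph Manager to run. In production, for high availability, a Ceph storage cluster usually runs several monitors so that the failure of a single monitor does not bring down the whole storage cluster. Ceph uses the Paxos algorithm, which needs a majority of the monitors (more than n/2, where n is the total number of monitors) to form a quorum. Although not required, an odd number of monitors is usually better. The command "ceph-deploy mon add {ceph-nodes}" adds one monitor node to the cluster at a time.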

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy mon add ceph-mon02

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy mon add ceph-mon03
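The majority rule above is easy to tabulate. This is a minimal sketch of the arithmetic (the helper names are hypothetical): a quorum needs floor(n/2) + 1 monitors, so n monitors survive n - (floor(n/2) + 1) failures. Going from 3 to 4 monitors raises the quorum size without tolerating any extra failure, which is why odd counts are preferred.

```bash
#!/usr/bin/env bash
# Paxos quorum arithmetic for n monitors:
#   quorum size        = floor(n / 2) + 1   (strictly more than n/2)
#   failures tolerated = n - quorum size
quorum_size() { echo $(( $1 / 2 + 1 )); }
tolerated_failures() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5; do
  printf 'mons=%d quorum=%d tolerates=%d\n' "$n" "$(quorum_size "$n")" "$(tolerated_failures "$n")"
done
# mons=1 quorum=1 tolerates=0
# mons=2 quorum=2 tolerates=0
# mons=3 quorum=2 tolerates=1
# mons=4 quorum=3 tolerates=1
# mons=5 quorum=3 tolerates=2
```

With the three monitors added here, the cluster keeps its quorum if any one of them fails.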

Check the monitor and quorum status

[root@ceph-mon01 ~]# ceph quorum_status --format json-pretty

{

"election_epoch": 12,

"quorum": [

0,

1,

2

],

"quorum_names": [

"ceph-mon01",

"ceph-mon02",

"ceph-mon03"

],

"quorum_leader_name": "ceph-mon01",

"monmap": {

"epoch": 3,

"fsid": "7fd4a619-9767-4b46-9cee-78b9dfe88f34",

"modified": "2022-09-24 01:56:24.196075",

"created": "2022-09-24 00:36:13.210155",

"features": {

"persistent": [

"kraken",

"luminous",

"mimic",

"osdmap-prune"

],

"optional": []

},

"mons": [

{

"rank": 0,

"name": "ceph-mon01",

"addr": "192.168.0.71:6789/0",

"public_addr": "192.168.0.71:6789/0"

},

{

"rank": 1,

"name": "ceph-mon02",

"addr": "192.168.0.72:6789/0",

"public_addr": "192.168.0.72:6789/0"

},

{

"rank": 2,

"name": "ceph-mon03",

"addr": "192.168.0.73:6789/0",

"public_addr": "192.168.0.73:6789/0"

}

]

}

}

[root@ceph-mon01 ~]#

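Tip: there are now 3 mon nodes, all 3 of them are in the quorum (ranks 0, 1 and 2), and ceph-mon01 is the leader.

Adding mgr nodes

Ceph Manager daemons run in "Active/Standby" mode. Deploying additional ceph-mgr daemons ensures that if the active node or its ceph-mgr daemon fails, one of the standby instances can take over its tasks without interrupting service. The command "ceph-deploy mgr create {new-manager-nodes}" can add several Manager nodes at once.

Add ceph-mon01 as a mgr node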

[cephadm@ceph-admin ceph-cluster]$ ceph-deploy mgr create ceph-mon01

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadm/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy mgr create ceph-mon01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] mgr : [('ceph-mon01', 'ceph-mon01')]

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fba72e66950>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mgr at 0x7fba736df230>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts ceph-mon01:ceph-mon01

[ceph-mon01][DEBUG ] connection detected need for sudo

[ceph-mon01][DEBUG ] connected to host: ceph-mon01

[ceph-mon01][DEBUG ] detect platform information from remote host

[ceph-mon01][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to ceph-mon01

[ceph-mon01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-mon01][WARNIN] mgr keyring does not exist yet, creating one

[ceph-mon01][DEBUG ] create a keyring file

[ceph-mon01][DEBUG ] create path recursively if it doesn't exist

[ceph-mon01][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.ceph-mon01 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-ceph-mon01/keyring

[ceph-mon01][INFO ] Running command: sudo systemctl enable ceph-mgr@ceph-mon01

[ceph-mon01][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@ceph-mon01.service to /usr/lib/systemd/system/ceph-mgr@.service.

[ceph-mon01][INFO ] Running command: sudo systemctl start ceph-mgr@ceph-mon01

[ceph-mon01][INFO ] Running command: sudo systemctl enable ceph.target

[cephadm@ceph-admin ceph-cluster]$

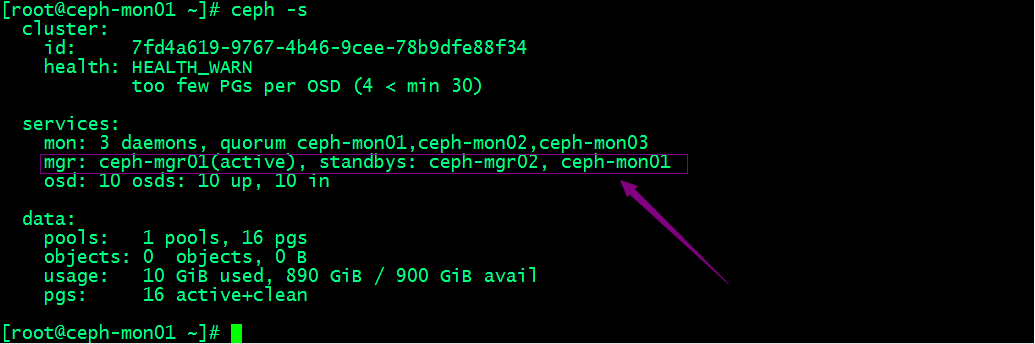

Check the cluster status

Tip: the cluster now has 3 mgr daemons, and the newly added ceph-mon01 runs as a standby. This completes the RADOS cluster: it now has 3 mon nodes, 3 mgr nodes and 10 OSDs.

