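Docker error: the br-xxxx bridge created automatically by Docker-Compose conflicts with a LAN subnet

Problem description:

When I deployed Harbor on the intranet with docker-compose, it automatically created a bridge network that conflicted with an intranet subnet (172.18.0.0/16). Docker's default network mode is bridge, and the default bridge docker0 uses 172.17.0.1/16.

As more services are deployed with docker-compose up -d, the automatically created bridges use the following ranges in turn: 172.18.x.x, 172.19.x.x, and so on.

An intranet subnet happened to be 172.18.x.x as well. As a result, this machine could not reach the hosts in 172.18.x.x at all, because the kernel sent that traffic to the local bridge instead of the LAN.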
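To make the overlap concrete, here is a minimal sketch that tests whether an address falls inside a CIDR block using only POSIX shell arithmetic. The helper names ip_to_int and ip_in_cidr are hypothetical and are not part of Docker or iproute2.

```shell
# Hypothetical helpers (not part of Docker): test whether an IPv4 address
# falls inside a CIDR block, using only POSIX shell arithmetic.

# Convert a dotted-quad address such as 172.18.5.145 to a 32-bit integer.
ip_to_int() {
    # The subshell keeps the IFS change and positional parameters local.
    ( IFS=.; set -- $1; echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 )) )
}

# Succeed (exit status 0) when address $1 lies inside CIDR block $2.
ip_in_cidr() {
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "${2%/*}") & mask )) ]
}
```

For example, ip_in_cidr 172.18.5.145 172.18.0.0/16 succeeds, which is exactly the conflict described here, while the same address checked against 172.31.0.0/16 fails.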

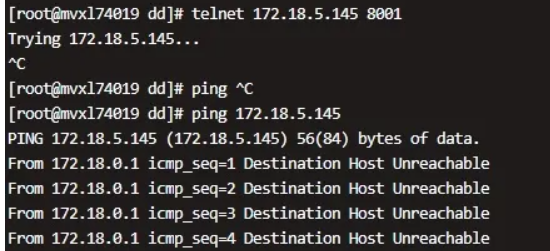

Symptoms:

Neither telnet nor ping to the target host works.

Solution

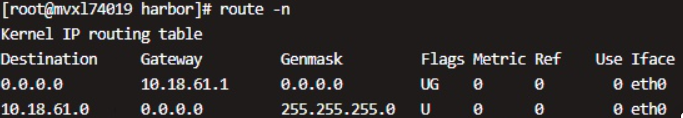

1. Check the routing table:

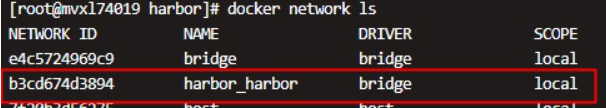

2. List the Docker networks:
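docker network ls prints only the ID, name, driver and scope, so the subnet of each network has to come from docker network inspect. A small sketch follows; the function name list_docker_subnets is a hypothetical wrapper, while the docker subcommands and the .IPAM.Config / .Subnet template fields are standard.

```shell
# Print "<name> <subnet>..." for every Docker network on this host.
# Hypothetical wrapper; it needs access to a running Docker daemon.
list_docker_subnets() {
    docker network ls -q | while read -r id; do
        docker network inspect \
            -f '{{.Name}} {{range .IPAM.Config}}{{.Subnet}} {{end}}' "$id"
    done
}
```

Calling list_docker_subnets and comparing each subnet with the LAN ranges shows which bridge is the one in conflict.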

3. Stop the docker-compose application

# docker-compose down

4. Edit the daemon.json file

The next time Docker starts, docker0 will become 172.31.0.1/24, and the bridges created automatically by docker-compose will also use 172.31.x.x/24.

# cat /etc/docker/daemon.json

{

"debug" : true,

"default-address-pools" : [

{

"base" : "172.31.0.0/16",

"size" : 24

}

]

}
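To see what this pool provides, the sketch below computes how many networks a base/size pair can hand out and which subnet sits at a given position. The function names pool_capacity and pool_subnet are hypothetical, and the assumption that networks are taken from the pool in order is based on the addresses shown in step 7 (172.31.0.x for docker0, 172.31.1.x for the next bridge).

```shell
# Hypothetical helpers for reasoning about "default-address-pools".

# Number of networks a pool can hand out: 2^(size - base prefix length).
# $1 = base CIDR, $2 = size
pool_capacity() {
    echo $(( 1 << ($2 - ${1#*/}) ))
}

# Print the n-th (0-based) subnet carved from the pool.
# $1 = base CIDR, $2 = size, $3 = index
pool_subnet() {
    start=$( IFS=.; set -- ${1%/*}; echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 )) )
    n=$(( start + ($3 << (32 - $2)) ))
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))/$2"
}
```

With base 172.31.0.0/16 and size 24, pool_capacity reports 256 networks, and pool_subnet with index 1 gives 172.31.1.0/24. Each such network holds at most 254 usable addresses, which is worth keeping in mind if a single compose project runs many containers.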

5. Remove the original, conflicting bridge

# docker network ls

NETWORK ID NAME DRIVER SCOPE

e4c5724969c9 bridge bridge local

b3cd674d3894 harbor_harbor bridge local

7f20b3d56275 host host local

7b5f3000115b none null local

# docker network rm b3cd674d3894

b3cd674d3894

6. Restart the Docker service

# systemctl restart docker

7. Check ip a and the routing table

# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a3:f4:69 brd ff:ff:ff:ff:ff:ff

inet 10.18.61.80/24 brd 10.18.61.255 scope global eth0

valid_lft forever preferred_lft forever

64: br-6a82e7536981: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:4f:5a:cc:53 brd ff:ff:ff:ff:ff:ff

inet 172.31.1.1/24 brd 172.31.1.255 scope global br-6a82e7536981

valid_lft forever preferred_lft forever

65: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:91:20:87:bd brd ff:ff:ff:ff:ff:ff

inet 172.31.0.1/24 brd 172.31.0.255 scope global docker0

valid_lft forever preferred_lft forever

# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.18.61.1 0.0.0.0 UG 0 0 0 eth0

10.18.61.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 eth0

172.31.0.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

172.31.1.0 0.0.0.0 255.255.255.0 U 0 0 0 br-6a82e7536981

# docker network ls

NETWORK ID NAME DRIVER SCOPE

27b40217b79c bridge bridge local

6a82e7536981 harbor_harbor bridge local

7f20b3d56275 host host local

7b5f3000115b none null local

As shown, both 64: br-6a82e7536981 and 65: docker0 are now in 172.31.x.x, so the configuration has taken effect.

8. Start docker-compose

# docker-compose up -d

If the following errors appear:

Starting log ... error

Starting registry ... error

Starting registryctl ... error

Starting postgresql ... error

Starting portal ... error

Starting redis ... error

Starting core ... error

Starting jobservice ... error

Starting proxy ... error

ERROR: for log Cannot start service log: network b3cd674d38943c91c439ea8eafc0ecc4cea6d4e0df875d930e8342f6d678d135 not found

ERROR: No containers to start

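This happens because the containers were not switched over automatically: they still reference the original NETWORK ID (b3cd674d3....), which no longer exists.

The containers need to be attached to the new bridge: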

# docker network ls

NETWORK ID NAME DRIVER SCOPE

27b40217b79c bridge bridge local

6a82e7536981 harbor_harbor bridge local

7f20b3d56275 host host local

7b5f3000115b none null local

# docker network connect network_name container_name

[root@mvxl74019 harbor]# docker network connect harbor_harbor nginx

[root@mvxl74019 harbor]# docker network connect harbor_harbor harbor-jobservice

[root@mvxl74019 harbor]# docker network connect harbor_harbor harbor-core

[root@mvxl74019 harbor]# docker network connect harbor_harbor registryctl

[root@mvxl74019 harbor]# docker network connect harbor_harbor registry

[root@mvxl74019 harbor]# docker network connect harbor_harbor redis

[root@mvxl74019 harbor]# docker network connect harbor_harbor harbor-db

[root@mvxl74019 harbor]# docker network connect harbor_harbor harbor-portal

[root@mvxl74019 harbor]# docker network connect harbor_harbor harbor-log

[root@mvxl74019 harbor]# docker-compose up -d

Starting log ... done

Starting registry ... done

Starting registryctl ... done

Starting postgresql ... done

Starting portal ... done

Starting redis ... done

Starting core ... done

Starting jobservice ... done

Starting proxy ... done
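The nine docker network connect commands in the session above can be collapsed into a loop. This is only a sketch: the variable HARBOR_CONTAINERS and the function connect_all are hypothetical names, and the container list is copied from this particular Harbor deployment, so it may differ on other installations.

```shell
# Container names as they appear in the session above (deployment-specific).
HARBOR_CONTAINERS="nginx harbor-jobservice harbor-core registryctl registry redis harbor-db harbor-portal harbor-log"

# Attach every listed container to the network named in $1.
connect_all() {
    network=$1
    for c in $HARBOR_CONTAINERS; do
        docker network connect "$network" "$c" || echo "failed to connect $c" >&2
    done
}
```

Running connect_all harbor_harbor and then docker-compose up -d reproduces the manual steps. A failure for one container is reported on stderr without stopping the loop.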

9. Check that the services are running normally

# docker-compose ps

Name Command State Ports

---------------------------------------------------------------------------------------------

harbor-core /harbor/entrypoint.sh Up (healthy)

harbor-db /docker-entrypoint.sh Up (healthy)

harbor-jobservice /harbor/entrypoint.sh Up (healthy)

harbor-log /bin/sh -c /usr/local/bin/ ... Up (healthy) 127.0.0.1:1514->10514/tcp

harbor-portal nginx -g daemon off; Up (healthy)

nginx nginx -g daemon off; Up (healthy) 0.0.0.0:80->8080/tcp

redis redis-server /etc/redis.conf Up (healthy)

registry /home/harbor/entrypoint.sh Up (healthy)

registryctl /home/harbor/start.sh Up (healthy)

10. Try again to telnet to the target IP: 172.18.5.145

Both telnet and ping now succeed.
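Besides telnet and ping, the kernel can be asked directly which interface it would use for the target address. The sketch below wraps ip route get from iproute2; the function name route_dev is hypothetical, and the sed expression assumes the usual "... dev <interface> ..." layout of that command's output.

```shell
# Print the outgoing interface the kernel would pick for address $1.
# Hypothetical wrapper around "ip route get" (iproute2).
route_dev() {
    ip route get "$1" | sed -n 's/.* dev \([^ ]*\).*/\1/p' | head -n 1
}
```

While the conflict exists, route_dev 172.18.5.145 would be expected to name a br-xxxx bridge. After the fix it should name the physical interface (eth0 on this machine).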

Note: this document is reposted from https://zhuanlan.zhihu.com/p/379305319 and is for learning purposes only.
