JD Cloud Developers | IoT Operations - How to Deploy a Highly Available K8S Cluster

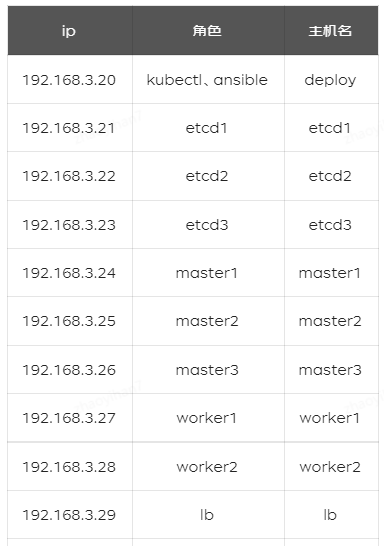

Environment

Preparation

Configure Ansible (run on the deploy host)

    # ssh-keygen
    # for i in 192.168.3.{21..28}; do ssh-copy-id -i ~/.ssh/id_rsa.pub $i; done

    [root@deploy ~]# cat /etc/ansible/hosts
    [etcd]
    192.168.3.21
    192.168.3.22
    192.168.3.23

    [k8s-master]
    192.168.3.24
    192.168.3.25
    192.168.3.26

    [k8s-worker]
    192.168.3.27
    192.168.3.28

    [k8s:children]
    k8s-master
    k8s-worker
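The inventory follows a regular address plan, so it can also be generated rather than typed by hand. The sketch below is one way to do that; `print_group` and `gen_inventory` are hypothetical helper names, not part of Ansible, and the ranges are simply the ones used in this article.

```shell
#!/bin/bash
# Sketch: print an Ansible INI inventory for this article's address plan.
# print_group and gen_inventory are hypothetical helpers, not Ansible features.
print_group() {
  local name=$1 prefix=$2 first=$3 last=$4 i
  echo "[$name]"
  for ((i = first; i <= last; i++)); do
    echo "$prefix.$i"
  done
}

gen_inventory() {
  print_group etcd 192.168.3 21 23
  print_group k8s-master 192.168.3 24 26
  print_group k8s-worker 192.168.3 27 28
  # Parent group that contains both Kubernetes groups.
  printf '[k8s:children]\nk8s-master\nk8s-worker\n'
}

gen_inventory
```

Redirecting the output to /etc/ansible/hosts and then running `ansible all -m ping` is a quick way to confirm that every host is reachable over SSH.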

Tune the host configuration

Disable the firewall and SELinux

    # ansible all -m shell -a "systemctl stop firewalld && systemctl disable firewalld"
    # ansible all -m shell -a "sed -i 's/^SELINUX=.*/SELINUX=disabled/g' /etc/selinux/config"

The change to /etc/selinux/config takes effect after the next reboot.

Adjust limits

Disable swap

    # ansible k8s -m shell -a "swapoff -a"
    # ansible k8s -m shell -a "yes | cp /etc/fstab /etc/fstab_bak"
    # ansible k8s -m shell -a "cat /etc/fstab_bak | grep -v swap > /etc/fstab"
    # ansible k8s -m shell -a "echo vm.swappiness = 0 >> /etc/sysctl.d/k8s.conf"
    # ansible k8s -m shell -a "sysctl -p /etc/sysctl.d/k8s.conf"
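`grep -v swap` drops every line that contains the word "swap", including comments or mount points that merely mention it. A more conservative variant, offered here as an alternative sketch rather than the author's method, comments out only active entries whose filesystem type is `swap`. `comment_out_swap` is a hypothetical helper name.

```shell
#!/bin/bash
# Sketch: comment out active swap entries in fstab-formatted text read from stdin.
# A line counts as a swap entry when it is not already a comment
# and its third field (the filesystem type) is exactly "swap".
comment_out_swap() {
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }'
}
```

On a node this could be used as `comment_out_swap < /etc/fstab_bak > /etc/fstab`, which keeps the original entry visible for later reference.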

Configure IPVS

    # cat /root/ipvs.sh
    #!/bin/bash
    yum -y install ipvsadm ipset
    #### Create the ipvs module script
    cat > /etc/sysconfig/modules/ipvs.modules << EOF
    #!/bin/bash
    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    modprobe -- nf_conntrack_ipv4
    EOF
    #### Run the script and verify the result
    chmod 755 /etc/sysconfig/modules/ipvs.modules
    bash /etc/sysconfig/modules/ipvs.modules
    lsmod | grep -e ip_vs -e nf_conntrack_ipv4

    # ansible k8s -m script -a "/root/ipvs.sh"

Configure bridge forwarding rules

    # cat sysctl.sh
    #!/bin/bash
    # This overwrites /etc/sysctl.d/k8s.conf, so vm.swappiness is repeated here.
    cat > /etc/sysctl.d/k8s.conf << EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    vm.swappiness = 0
    EOF
    cat <<EOF | tee /etc/modules-load.d/crio.conf
    overlay
    br_netfilter
    EOF
    modprobe overlay
    modprobe br_netfilter
    sysctl --system

    # ansible k8s -m script -a "/root/sysctl.sh"

Set up the etcd cluster

Generate certificates (run on the Ansible host)

    # curl -o /usr/bin/cfssl https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
    # curl -o /usr/bin/cfssljson https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
    # curl -o /usr/bin/cfssl-certinfo https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
    # chmod +x /usr/bin/cfssl*

Create the CA configuration file

    # mkdir -p /root/ssl
    # cd /root/ssl
    # cat > ca-config.json <<EOF
    {
      "signing": {
        "default": {
          "expiry": "876000h"
        },
        "profiles": {
          "etcd": {
            "usages": ["signing", "key encipherment", "server auth", "client auth"],
            "expiry": "876000h"
          }
        }
      }
    }
    EOF

Create the CA certificate signing request

    # cat > ca-csr.json <<EOF
    {
      "CN": "etcd",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "beijing",
          "L": "beijing",
          "O": "jdt",
          "OU": "iot"
        }
      ]
    }
    EOF

Generate the CA certificate and private key

    # cfssl gencert -initca ca-csr.json | cfssljson -bare ca

Create the TLS certificate request for etcd

    # cat > etcd-csr.json <<EOF
    {
      "CN": "etcd",
      "hosts": [
        "192.168.3.21",
        "192.168.3.22",
        "192.168.3.23",
        "192.168.3.24",
        "192.168.3.25",
        "192.168.3.26",
        "etcd1",
        "etcd2",
        "etcd3",
        "master1",
        "master2",
        "master3"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "beijing",
          "L": "beijing",
          "O": "jdt",
          "OU": "iot"
        }
      ]
    }
    EOF
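A repeated or mistyped address in the `hosts` list is easy to miss, and it ends up in the certificate's subject alternative names. The sketch below lists any value that appears more than once. `dup_hosts` is a hypothetical helper, and it assumes the `hosts` array contains only simple quoted strings, as it does in this article.

```shell
#!/bin/bash
# Sketch: list values that appear more than once in the "hosts" array of a cfssl CSR file.
# dup_hosts is a hypothetical helper; it assumes the array holds only simple quoted strings.
dup_hosts() {
  tr -d '\n' < "$1" |
    grep -o '"hosts"[[:space:]]*:[[:space:]]*\[[^]]*]' |  # isolate the hosts array
    grep -o '"[^"]*"' |                                   # one quoted string per line
    sed -e '1d' -e 's/"//g' |                             # drop the "hosts" key, strip quotes
    sort | uniq -d                                        # keep only repeated values
}
```

Running `dup_hosts etcd-csr.json` before `cfssl gencert` should print nothing when every entry is unique.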

Generate the etcd certificate and private key, then distribute them

    # cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=etcd etcd-csr.json | cfssljson -bare etcd
    # ansible etcd -m copy -a "src=/root/ssl/ dest=/export/Data/certs/"

Install and configure etcd

Create the data directory

    # ansible etcd -m shell -a "mkdir -p /export/Data/etcd_data"

Download etcd and distribute it

    # wget https://github.com/etcd-io/etcd/releases/download/v3.5.1/etcd-v3.5.1-linux-amd64.tar.gz
    # tar xf etcd-v3.5.1-linux-amd64.tar.gz && cd etcd-v3.5.1-linux-amd64
    # ansible etcd -m copy -a "src=etcd dest=/usr/bin/"
    # ansible etcd -m copy -a "src=etcdutl dest=/usr/bin/"
    # ansible etcd -m copy -a "src=etcdctl dest=/usr/bin/"
    # ansible etcd -m shell -a "chmod +x /usr/bin/etcd*"

Configure etcd

    # cat etcd_config.sh
    #!/bin/bash
    # PEER_NAME: host name or domain name of this node. It must match one of the
    #   member names in ETCD_CLUSTER, so the hosts are named etcd1, etcd2, and etcd3.
    # PRIVATE_IP: IP address of this node (used to generate the unit file below)
    # ETCD_CLUSTER: list of all members (format: name=https://ip:port per node;
    #   the names are arbitrary but should be recognizable)
    # ETCD_INITIAL_CLUSTER_TOKEN: token of this etcd cluster; identical on every member
    interface_name=`cat /proc/net/dev | sed -n '3,$p' | awk -F ':' {'print $1'} | grep -E "^ " | grep -v lo | head -n1`
    ipaddr=`ip a | grep $interface_name | awk '{print $2}' | awk -F"/" '{print $1}' | awk -F':' '{print $NF}'`
    export PEER_NAME=`hostname`
    export PRIVATE_IP=`echo $ipaddr | tr -d '\r'`
    export ETCD_CLUSTER="etcd1=https://192.168.3.21:2380,etcd2=https://192.168.3.22:2380,etcd3=https://192.168.3.23:2380"
    export ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-1"
    cat > /etc/systemd/system/etcd.service <<EOF
    [Unit]
    Description=etcd
    Documentation=https://github.com/coreos/etcd
    Conflicts=etcd.service

    [Service]
    Type=notify
    Restart=always
    RestartSec=5s
    LimitNOFILE=65536
    TimeoutStartSec=0
    ExecStart=/usr/bin/etcd --name ${PEER_NAME} \
      --data-dir /export/Data/etcd_data \
      --listen-client-urls https://${PRIVATE_IP}:2379 \
      --advertise-client-urls https://${PRIVATE_IP}:2379 \
      --listen-peer-urls https://${PRIVATE_IP}:2380 \
      --initial-advertise-peer-urls https://${PRIVATE_IP}:2380 \
      --cert-file=/export/Data/certs/etcd.pem \
      --key-file=/export/Data/certs/etcd-key.pem \
      --client-cert-auth \
      --trusted-ca-file=/export/Data/certs/ca.pem \
      --peer-cert-file=/export/Data/certs/etcd.pem \
      --peer-key-file=/export/Data/certs/etcd-key.pem \
      --peer-client-cert-auth \
      --peer-trusted-ca-file=/export/Data/certs/ca.pem \
      --initial-cluster ${ETCD_CLUSTER} \
      --initial-cluster-token ${ETCD_INITIAL_CLUSTER_TOKEN} \
      --initial-cluster-state new

    [Install]
    WantedBy=multi-user.target
    EOF

    # ansible etcd -m script -a "/root/etcd_config.sh"
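The `--initial-cluster` value is a comma-separated list of `name=peer-url` pairs, and each member's `--name` has to appear in it. As a sketch of how that string can be assembled from a list of members instead of being typed by hand (`build_initial_cluster` is a hypothetical helper; port 2380 is the peer port used in this article):

```shell
#!/bin/bash
# Sketch: build the --initial-cluster value from "name=ip" pairs.
# build_initial_cluster is a hypothetical helper; 2380 is the peer port used in this article.
build_initial_cluster() {
  local pair out=""
  for pair in "$@"; do
    # ${pair%%=*} is the member name, ${pair#*=} is its IP address.
    out+="${out:+,}${pair%%=*}=https://${pair#*=}:2380"
  done
  echo "$out"
}

build_initial_cluster etcd1=192.168.3.21 etcd2=192.168.3.22 etcd3=192.168.3.23
```

The printed string can be assigned to `ETCD_CLUSTER` in the configuration script.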

Start etcd

    # ansible etcd -m shell -a "systemctl daemon-reload"
    # ansible etcd -m service -a 'name=etcd state=started'
    # ansible etcd -m shell -a "systemctl enable etcd"

Verify etcd

Note: run this on the Ansible host, which needs etcdctl installed.

    # cat check_etcd.sh
    #!/bin/bash
    HOST1=192.168.3.21
    HOST2=192.168.3.22
    HOST3=192.168.3.23
    ENDPOINTS=$HOST1:2379,$HOST2:2379,$HOST3:2379
    # Certificate verification is enabled, so every command needs the certificates
    KEY="--cacert=/root/ssl/ca.pem \
    --cert=/root/ssl/etcd.pem \
    --key=/root/ssl/etcd-key.pem"
    # Cluster health
    etcdctl --endpoints=$ENDPOINTS $KEY endpoint health
    # Cluster status
    etcdctl --endpoints=$ENDPOINTS $KEY --write-out=table endpoint status
    # Cluster members
    etcdctl --endpoints=$ENDPOINTS $KEY member list -w table

    # sh check_etcd.sh
    192.168.3.22:2379 is healthy: successfully committed proposal: took = 6.670434ms
    192.168.3.23:2379 is healthy: successfully committed proposal: took = 7.021894ms
    192.168.3.21:2379 is healthy: successfully committed proposal: took = 6.938656ms
    +-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    |     ENDPOINT      |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
    +-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    | 192.168.3.21:2379 | a30c90f91c6bc0bf |  3.5.1  |   20 kB |     false |      false |         2 |         23 |                 23 |        |
    | 192.168.3.22:2379 | 877407b6419f0fed |  3.5.1  |   20 kB |      true |      false |         2 |         23 |                 23 |        |
    | 192.168.3.23:2379 | 75b3a36457698e9a |  3.5.1  |   37 kB |     false |      false |         2 |         23 |                 23 |        |
    +-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    +------------------+---------+-------+---------------------------+---------------------------+------------+
    |        ID        | STATUS  | NAME  |        PEER ADDRS         |       CLIENT ADDRS        | IS LEARNER |
    +------------------+---------+-------+---------------------------+---------------------------+------------+
    | 75b3a36457698e9a | started | etcd3 | https://192.168.3.23:2380 | https://192.168.3.23:2379 |      false |
    | 877407b6419f0fed | started | etcd2 | https://192.168.3.22:2380 | https://192.168.3.22:2379 |      false |
    | a30c90f91c6bc0bf | started | etcd1 | https://192.168.3.21:2380 | https://192.168.3.21:2379 |      false |
    +------------------+---------+-------+---------------------------+---------------------------+------------+
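For unattended runs it can help to turn the health output into an exit status. The sketch below counts the `is healthy` lines in the plain-text output shown above and fails when fewer than the expected number are present. `require_healthy` is a hypothetical helper, not an etcdctl feature.

```shell
#!/bin/bash
# Sketch: succeed only if at least N endpoints report "is healthy" in the text read from stdin.
# require_healthy is a hypothetical helper that parses the plain-text health output.
require_healthy() {
  local want=$1 got
  # grep -c prints 0 and exits non-zero when nothing matches; "|| true" keeps the count.
  got=$(grep -c ' is healthy' || true)
  echo "healthy endpoints: $got/$want"
  [ "$got" -ge "$want" ]
}
```

Inside check_etcd.sh this could be used as `etcdctl --endpoints=$ENDPOINTS $KEY endpoint health 2>&1 | require_healthy 3`; the `2>&1` is there in case the health messages are written to stderr.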

Install and configure CRI-O

Install CRI-O

    # cat get_cri-o.sh
    #!/bin/bash
    VERSION=1.22
    sudo curl -L -o /etc/yum.repos.d/devel:kubic:libcontainers:stable.repo https://download.opensuse.org/repositories/devel:kubic:libcontainers:stable/CentOS_7/devel:kubic:libcontainers:stable.repo
    sudo curl -L -o /etc/yum.repos.d/devel:kubic:libcontainers:stable:cri-o:${VERSION}.repo https://download.opensuse.org/repositories/devel:kubic:libcontainers:stable:cri-o:${VERSION}/CentOS_7/devel:kubic:libcontainers:stable:cri-o:${VERSION}.repo

    # ansible k8s -m script -a "/root/get_cri-o.sh"
    # ansible k8s -m yum -a "name=cri-o,cri-tools state=latest"
    # ansible k8s -m shell -a "sudo systemctl enable --now crio"

Change the CRI-O storage path

The inner double quotes are escaped so that the path stays a quoted string in storage.conf.

    # ansible k8s -m shell -a "sed -i -e 's?^graphroot =.*?graphroot = \"/export/Data/containers/storage\"?g' /etc/containers/storage.conf"

Configure the cgroup manager

    # cat 02-cgroup-manager.conf
    [crio.runtime]
    conmon_cgroup = "pod"
    cgroup_manager = "systemd"

    # ansible k8s -m copy -a "src=02-cgroup-manager.conf dest=/etc/crio/crio.conf.d/"

Configure a registry mirror

    # cat images_mirr.sh
    #!/bin/bash
    cat >> /etc/containers/registries.conf << EOF
    [[registry]]
    prefix = "docker.io"
    location = "hub-mirror.c.163.com"

    [[registry.mirror]]
    prefix = "docker.io"
    location = "hub-mirror.c.163.com"
    EOF

    # ansible k8s -m script -a "/root/images_mirr.sh"
    # ansible k8s -m service -a 'name=crio state=restarted'

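Configure the load balancer (LB)

On a public cloud, use the provider's load balancer instead.

A highly available LB setup will be covered in a later update; nginx is used as a stand-in for now.

Run the following on the LB node.

    [root@lb ~]# yum -y install epel-release.noarch
    [root@lb ~]# yum -y install nginx nginx-mod-stream

Add the following to the nginx configuration file.

    stream {
        log_format main '$remote_addr [$time_local] '
                        '$protocol $status $bytes_sent $bytes_received '
                        '$session_time';
        server {
            listen 16443;
            proxy_pass kubeapi;
            access_log /var/log/nginx/access.log main;
        }
        upstream kubeapi {
            server 192.168.3.24:6443;
            server 192.168.3.25:6443;
            server 192.168.3.26:6443;
        }
    }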
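The `upstream` block lists every control-plane node, so it has to change whenever a master is added or removed. As a sketch, the block can be rendered from a list of addresses. `render_upstream` is a hypothetical helper; 6443 is the kube-apiserver port used in this article.

```shell
#!/bin/bash
# Sketch: print an nginx upstream block for a list of control-plane IPs.
# render_upstream is a hypothetical helper; 6443 is the kube-apiserver port used in this article.
render_upstream() {
  local name=$1 ip
  shift
  echo "upstream $name {"
  for ip in "$@"; do
    echo "    server $ip:6443;"
  done
  echo "}"
}

render_upstream kubeapi 192.168.3.24 192.168.3.25 192.168.3.26
```

After changing the configuration, `nginx -t` checks the syntax before nginx is restarted.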

Deploy Kubernetes

Install kubeadm and kubelet

    # cat kube.sh
    #!/bin/bash
    cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
    exclude=kubelet kubeadm kubectl
    EOF
    yum install -y kubelet-1.22.3-0 kubeadm-1.22.3-0 kubectl-1.22.3-0 --disableexcludes=kubernetes
    sudo systemctl enable --now kubelet

    # ansible k8s -m script -a "/root/kube.sh"

Distribute the etcd certificates

    # ansible k8s -m shell -a "mkdir -p /export/Data/certs/"

Configure kubelet

    # cat kubelet_conf.sh
    #!/bin/bash
    cat > /etc/sysconfig/kubelet <<EOF
    KUBELET_EXTRA_ARGS=--container-runtime=remote --cgroup-driver=systemd --container-runtime-endpoint='unix:///var/run/crio/crio.sock' --runtime-request-timeout=5m
    EOF

    # ansible k8s -m script -a "/root/kubelet_conf.sh"
    # ansible k8s -m service -a 'name=kubelet state=restarted'

Initialize the first master node

    # cat kubeadm_config.yaml
    apiVersion: kubeadm.k8s.io/v1beta2
    kind: ClusterConfiguration
    kubernetesVersion: v1.22.3
    imageRepository: registry.aliyuncs.com/google_containers
    controlPlaneEndpoint: "192.168.3.29:16443"
    networking:
      serviceSubnet: "10.96.0.0/16"
      podSubnet: "172.16.0.0/16"
      dnsDomain: "cluster.local"
    dns:
      type: "CoreDNS"
    etcd:
      external:
        endpoints:
        - https://192.168.3.21:2379
        - https://192.168.3.22:2379
        - https://192.168.3.23:2379
        caFile: /export/Data/certs/ca.pem
        certFile: /export/Data/certs/etcd.pem
        keyFile: /export/Data/certs/etcd-key.pem
    ---
    apiVersion: kubelet.config.k8s.io/v1beta1
    kind: KubeletConfiguration
    cgroupDriver: systemd
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    mode: ipvs

    # ansible 192.168.3.24 -m copy -a "src=kubeadm_config.yaml dest=/root"
    # ansible k8s -m copy -a "src=/root/ssl/ dest=/export/Data/certs/"
    # ansible 192.168.3.24 -m shell -a "kubeadm init --config=/root/kubeadm_config.yaml --upload-certs"
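`serviceSubnet` and `podSubnet` must not overlap with each other, and they should also stay clear of the node network. The sketch below checks two IPv4 CIDR blocks for overlap by comparing both network addresses under the shorter prefix. `ip_to_int` and `cidr_overlap` are hypothetical helper names, and the code assumes well-formed dotted-quad input without leading zeros.

```shell
#!/bin/bash
# Sketch: check whether two IPv4 CIDR blocks overlap.
# ip_to_int and cidr_overlap are hypothetical helpers; input is assumed well-formed.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

cidr_overlap() {
  local ip1=${1%/*} len1=${1#*/} ip2=${2%/*} len2=${2#*/}
  # Two blocks overlap exactly when they agree under the shorter (less specific) prefix.
  local len=$(( len1 < len2 ? len1 : len2 ))
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip1") & mask )) -eq $(( $(ip_to_int "$ip2") & mask )) ]
}

if cidr_overlap 10.96.0.0/16 172.16.0.0/16; then
  echo "serviceSubnet and podSubnet overlap"
else
  echo "serviceSubnet and podSubnet are disjoint"
fi
```

The same function can be pointed at the node network once its prefix length is known; the article does not state it, so it is left out here.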

Join the second master node

Note: the token, CA certificate hash, and certificate key come from the output of the previous step.

    # ansible 192.168.3.25 -m shell -a "kubeadm join 192.168.3.29:16443 --token de4x51.d923b7l0tbi0692t --discovery-token-ca-cert-hash sha256:b1a8f00caed912ac083d10d8ecd1e92ddf6870c768f91d4e43c91c2614e24e1a --control-plane --certificate-key 0b34ca2ebd85f99ff66b2f57b80708e2ac0368880da52a802e3feb01852f2d81"

Join the third master node

    # ansible 192.168.3.26 -m shell -a "kubeadm join 192.168.3.29:16443 --token de4x51.d923b7l0tbi0692t --discovery-token-ca-cert-hash sha256:b1a8f00caed912ac083d10d8ecd1e92ddf6870c768f91d4e43c91c2614e24e1a --control-plane --certificate-key 0b34ca2ebd85f99ff66b2f57b80708e2ac0368880da52a802e3feb01852f2d81"

Join the worker nodes

    # ansible k8s-worker -m shell -a "kubeadm join 192.168.3.29:16443 --token de4x51.d923b7l0tbi0692t --discovery-token-ca-cert-hash sha256:b1a8f00caed912ac083d10d8ecd1e92ddf6870c768f91d4e43c91c2614e24e1a"
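The join arguments are long and are usually copied by hand from the `kubeadm init` output, so a quick format check before handing them to Ansible can catch truncated values. This is only a sketch; `valid_token` and `valid_ca_hash` are hypothetical helpers that check the shape of the values, not whether the cluster still accepts them.

```shell
#!/bin/bash
# Sketch: sanity-check kubeadm join arguments before passing them to Ansible.
# valid_token and valid_ca_hash are hypothetical helpers; they check format only.
valid_token() {
  # Bootstrap tokens have the form <6 chars>.<16 chars>, lowercase letters and digits only.
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

valid_ca_hash() {
  # --discovery-token-ca-cert-hash takes "sha256:" followed by 64 hex digits.
  [[ $1 =~ ^sha256:[0-9a-f]{64}$ ]]
}

valid_token "de4x51.d923b7l0tbi0692t" && echo "token format ok"
valid_ca_hash "sha256:b1a8f00caed912ac083d10d8ecd1e92ddf6870c768f91d4e43c91c2614e24e1a" && echo "hash format ok"
```

Bootstrap tokens expire after 24 hours by default. If a node is added later, `kubeadm token create --print-join-command` on a master prints a fresh join command, and `kubeadm init phase upload-certs --upload-certs` produces a new certificate key for control-plane joins.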

Set up kubectl

    # mkdir -p $HOME/.kube
    # scp root@192.168.3.24:/etc/kubernetes/admin.conf $HOME/.kube/config
    # scp root@192.168.3.24:/usr/bin/kubectl /usr/bin/kubectl

Verify the nodes

    [root@deploy ~]# kubectl get node
    NAME      STATUS   ROLES                  AGE     VERSION
    master1   Ready    control-plane,master   41m     v1.22.3
    master2   Ready    control-plane,master   13m     v1.22.3
    master3   Ready    control-plane,master   12m     v1.22.3
    worker1   Ready    <none>                 9m18s   v1.22.3
    worker2   Ready    <none>                 9m19s   v1.22.3
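To check the node list from a script rather than by eye, the STATUS column can be filtered. The sketch below prints the name of every node whose status is not exactly `Ready`; `not_ready_nodes` is a hypothetical helper that reads the default `kubectl get node` table from stdin.

```shell
#!/bin/bash
# Sketch: list nodes whose STATUS column is not exactly "Ready".
# not_ready_nodes is a hypothetical helper; it reads `kubectl get node` output from stdin
# and skips the header row.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Used as `kubectl get node | not_ready_nodes`, it prints nothing for the output above. A cordoned node shows `Ready,SchedulingDisabled` and would therefore be listed as well.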

Deploy the network plugin

Modify the configuration (calico-etcd.yaml)

    ---
    # Source: calico/templates/calico-etcd-secrets.yaml
    # The following contains k8s Secrets for use with a TLS enabled etcd cluster.
    # For information on populating Secrets, see http://kubernetes.io/docs/user-guide/secrets/
    apiVersion: v1
    kind: Secret
    type: Opaque
    metadata:
      name: calico-etcd-secrets
      namespace: kube-system
    data:
      # Populate the following with etcd TLS configuration if desired, but leave blank if
      # not using TLS for etcd.
      # The keys below should be uncommented and the values populated with the base64
      # encoded contents of each file that would be associated with the TLS data.
      # Example command for encoding a file contents: cat <file> | base64 -w 0
      etcd-key: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBNkZJNE91OUl4WU01L2IxUVBHa2RWQUF0UHc1QzA1b0MwN1Z0WGhWaWZDUzYwWitsCjV3aFRBRGRITVpvajhzdXB3dGU2a3IyUnpOYjluM2xGb2lqb0MxMjV4LzUvd2xZcWYyOWt1WTJrQzd4RlBwUFgKdHhwUmlUMEVFV09vTGVyQ202Y3RmMVhjR1RxTHBsZXorVTBCSGNRY0FoUnc3NFFHK1ByU3pDbzV5UDhGWTFHSQo0UWhHWkxyWnZXT0JJZGcyWjFGOHpvSVdVVE1QdWxrQ0Z6WHNDeENFVXY1TXFybjZsN0RZcWJ5K2drZG5qb2U3CklhQXp0UW5CZFVOMmEvdHdXSVE4S3YvSU15TTduT0plL29POG5rdDY4d2h6V1Ftc3p2VkdpSDI1SWhwUUxub3UKcmp1TEhBRERCaS9RM1JuWXdVZWZYTGVOZ1FoTmJYZXE0eGVuT1FJREFRQUJBb0lCQVFETVNjaHlZb211VFFlSQpqWmxwbGRFWlZaSnorVGxnVXZTYmI5VTlQemE4RFp4TnlzSWJGMkhOTmM2ZjJuZ3ovMDFITFdZOXRQN3BqaisxCnBQRkxlQWNjUDQyblJLN1psK1dFNjlJNXJFaU5uVCtTbUhTKzZTQzd1bkRDVGN6TW03d0hIWW5QaUJPa0I2eFgKV0pYRTZpYktJdkd5RG9HRXpLZEk5MTYzODRXZXJKejJneEhDMEZzUGcxOEJPV0NjMmM3SkN5UmdhOXl6Sk90UQpISWw5N0svNStNRXZpSFI0emhqV3hiTXNyVW55MllMQ1hPeW9reTBzVzN0U2RLbmZBUUs2RkJueWxFN3VVQnNXCm1XK2o3Kzl6ZUJGUVdRMlBGS1J4Q1o4amtYSSt1a1NhVzBoTER6b0d6OHBweno5UWNHMWVCOURqTFE5UHFCZDYKbTFkcnovU0JBb0dCQVBqY2x3TlEvOVFFUWhMc1Q3Nkw1OFpZQnBkQ2RUZTFMbG1vVWVIeDQveStJYlYwSkQ2eQpvRmpFbGEzZm1UOHA3THJGMXFQZjlNWmsrUmtRa3VjOGcyQUlyUXp0cmtrUnQxWmVSWHBhMHIzMU53emlEdTU3ClpLNnVWamM4S0NKYlV6S3NNcGZUajdRemFLelZNSFI3aWgxdzZOUWYxS0JNdG94Q3Rtc2MrdjBwQW9HQkFPNzgKSytxd3BJbWpCd2dXZm01NitSKzFzWXkrclZOMk1kZU9QdmRFTzRyQzlHSGlWT1VaaEM0WXlNRnJNOEh4TFd5RQo0eVpsMVpvNXZYVG5ha0JFUWxHU2E4L1NnMkZkY0tJdnNyTG9RUnBMTEhWMHo4b1E0Q2JWTW1GZkgwMDBMK1dICnBYYXNYaWlBdkcrdmxxOGk2Z1U2OGRnOFc2akFUeTZTTUNQTDBZdVJBb0dBU2h4L1NIaU54MWtCU0Z0aG9EQlAKOU14d0lnbWptTlIzR2pJN09GdHQ5dTIwWWpKVlBPcTdQOVJEY3dWY3dPZStYUnpmdit2SkhIQWprcWhSNTFVcApGcWRleWJQYXJGMy9TRlJJd3BoYm5FQnpoWDJvenJLbW1ETEk4Q2dWRjY0MHg2bHFZN2FZWENUWExtbEt4ZFdvCm12M3VDSVgyTDBySkxsb0xzemh0TW9rQ2dZQjJOdUw2YW5wWll2MDljUE1GYjJyLzFuNkhJbUxXWUNiemUzZUcKRklobmNWdzFkeUdMV2YzYVY0UW11UUtYTXRmSFVFeVVWOWM3UE1pTXBWUVhpaXhMOFdQSEgxakJ0dGphUVVIaAo0YVVpZm9EMWNOekFGV3pyaUpZdE9FSmhqQ2tOSHZZb0o4ZER2YnA0ZktESzdUaFpjZmpqZjZmUFo2RkRaaWpOCjdDb3hJUUtCZ1FDWTRWTDM5cG5KZVFQMFhYc1dHVnhVN2Z5Szh0YVFJVnk4Y0tXeVBGdXNaYklXSVM0eU5ENW8KWFI5cHZGYjdkbmQzMnJXaFNKeWZJVm9ZQWhMTXpyd2dBdnF5Q1J4MXdEU2NqdnRFbCs5ZUF1SWIrUFNYZEQ2NgpRMnFyWEttMjNlem0yVkpUL2MxWHlOb2FDYVExb1BPK1BBTDMxZkxiUklLdUZrUEMzVTRZSFE9PQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=
      etcd-cert: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUVLRENDQXhDZ0F3SUJBZ0lVWkRmczR0UGR0dVJGZEZGRHM0MHBRcWp0VUNjd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1hERUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjJKbGFXcHBibWN4RURBT0JnTlZCQWNUQjJKbAphV3BwYm1jeEREQUtCZ05WQkFvVEEycGtkREVNTUFvR0ExVUVDeE1EYVc5ME1RMHdDd1lEVlFRREV3UmxkR05rCk1DQVhEVEl4TVRFeE56RXhOVGd3TUZvWUR6SXhNakV4TURJME1URTFPREF3V2pCY01Rc3dDUVlEVlFRR0V3SkQKVGpFUU1BNEdBMVVFQ0JNSFltVnBhbWx1WnpFUU1BNEdBMVVFQnhNSFltVnBhbWx1WnpFTU1Bb0dBMVVFQ2hNRAphbVIwTVF3d0NnWURWUVFMRXdOcGIzUXhEVEFMQmdOVkJBTVRCR1YwWTJRd2dnRWlNQTBHQ1NxR1NJYjNEUUVCCkFRVUFBNElCRHdBd2dnRUtBb0lCQVFEb1VqZzY3MGpGZ3puOXZWQThhUjFVQUMwL0RrTFRtZ0xUdFcxZUZXSjgKSkxyUm42WG5DRk1BTjBjeG1pUHl5Nm5DMTdxU3ZaSE0xdjJmZVVXaUtPZ0xYYm5IL24vQ1ZpcC9iMlM1amFRTAp2RVUrazllM0dsR0pQUVFSWTZndDZzS2JweTEvVmR3Wk9vdW1WN1A1VFFFZHhCd0NGSER2aEFiNCt0TE1Lam5JCi93VmpVWWpoQ0Vaa3V0bTlZNEVoMkRablVYek9naFpSTXcrNldRSVhOZXdMRUlSUy9reXF1ZnFYc05pcHZMNkMKUjJlT2g3c2hvRE8xQ2NGMVEzWnIrM0JZaER3cS84Z3pJenVjNGw3K2c3eWVTM3J6Q0hOWkNhek85VWFJZmJraQpHbEF1ZWk2dU80c2NBTU1HTDlEZEdkakJSNTljdDQyQkNFMXRkNnJqRjZjNUFnTUJBQUdqZ2Q4d2dkd3dEZ1lEClZSMFBBUUgvQkFRREFnV2dNQjBHQTFVZEpRUVdNQlFHQ0NzR0FRVUZCd01CQmdnckJnRUZCUWNEQWpBTUJnTlYKSFJNQkFmOEVBakFBTUIwR0ExVWREZ1FXQkJUSGNlT1BOZm50WnUxR3hZMEtzcmttQmsyQVpUQWZCZ05WSFNNRQpHREFXZ0JUS0JxL29EV3p0TE5HSDNzcnVvY0IrckI1akp6QmRCZ05WSFJFRVZqQlVnZ1ZsZEdOa01ZSUZaWFJqClpES0NCV1YwWTJRemdnZHRZWE4wWlhJeGdnZHRZWE4wWlhJeWdnZHRZWE4wWlhJemh3VEFxQU1WaHdUQXFBTVcKaHdUQXFBTVhod1RBcUFNWWh3VEFxQU1YaHdUQXFBTWFNQTBHQ1NxR1NJYjNEUUVCQ3dVQUE0SUJBUUEvSU9NbgpIMkZYWmVqYU1DNHhlTjdVRmVoaTNGQndjbGNXcUtLU3J2VHhYT1RsMjZOVzhRd2h1SGc3RHNrQkN3UEhXL0s3ClJqdllRNHlEbVB0Q0JHbDE0K3hnMmxYcnhuY0Zzd1N0dFoxcDV1UjNWVFFlNlFDS3ZsNGMyWXNHQzZEU3d2dE4KK041SVFkVVhvalhJTVhkWXVzZS90Qk42b2xjMkdvVFJQV0lCU2FHODhBejd4em5VNThiZXZzN28vU1ZtS2pxZgpTVVA2U3FZeHlPaUtDNWs5cC9qOU42MnN0ZmJURmRxN1JYQ2p0OVl6Q3QwNWg4QW1wLzNmdStYZkhCQTRjYjN1ClJUNTdjZVlXdkIzSEtMMFFFNWNOUjRLNWlXa01LUi94YnNzZlNxSWFPTVF6Q29sWjF3dFZPendaNGZsZUkrVUUKYTFpQUF4K1IxNkNCeG4xZgotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==
      etcd-ca: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURyRENDQXBTZ0F3SUJBZ0lVTGpLRjE2cDVteXhiWkZFRWNUMi9sSDhGTVdrd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1hERUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjJKbGFXcHBibWN4RURBT0JnTlZCQWNUQjJKbAphV3BwYm1jeEREQUtCZ05WQkFvVEEycGtkREVNTUFvR0ExVUVDeE1EYVc5ME1RMHdDd1lEVlFRREV3UmxkR05rCk1CNFhEVEl4TVRFeE56RXhOVEl3TUZvWERUSTJNVEV4TmpFeE5USXdNRm93WERFTE1Ba0dBMVVFQmhNQ1EwNHgKRURBT0JnTlZCQWdUQjJKbGFXcHBibWN4RURBT0JnTlZCQWNUQjJKbGFXcHBibWN4RERBS0JnTlZCQW9UQTJwawpkREVNTUFvR0ExVUVDeE1EYVc5ME1RMHdDd1lEVlFRREV3UmxkR05rTUlJQklqQU5CZ2txaGtpRzl3MEJBUUVGCkFBT0NBUThBTUlJQkNnS0NBUUVBeXE2Zm4ycWtlVzJOS3RqREJiMmg5Q1lzZWpBWnIwTmlOWGRPZy8rL3FRNk8KbUNMR1pLU3picUlVY05NRUQvSk5tbWF4UGlPUVNLYmFBUlZscWFKS1ZMVXUxMmo2S1NPUi9KR1hOcnF5ZjM4RQpvREE0R29jdHdtWkI3Rzdrdk1PdXFRWXRMKzQxR0hJYmZsaHFXci9zQ2hEM1E3VlJyaWVMYW9CVFpFMFpEUWVDCis5KzNLcDNqYmEyeWZUNU85K3F4WlFya0xBeE5GR21KUVBzT2ZhTnJjU1p6YTVBc0sybE9MNXAveExGRm0yQU8KanVVSE8yMnBKL0NjMDBPanNveUFnVE5jdVJmaDNuNjdXbllyYVV1RXVhU0RheEZBWlk4bGlhdXFHbndlV296VAp0Q3pVcEUrbW9RTzVqL0o0UksrNm94OTJlZWpLQm1hY3VTM1JNeTFPQVFJREFRQUJvMll3WkRBT0JnTlZIUThCCkFmOEVCQU1DQVFZd0VnWURWUjBUQVFIL0JBZ3dCZ0VCL3dJQkFqQWRCZ05WSFE0RUZnUVV5Z2F2NkExczdTelIKaDk3SzdxSEFmcXdlWXljd0h3WURWUjBqQkJnd0ZvQVV5Z2F2NkExczdTelJoOTdLN3FIQWZxd2VZeWN3RFFZSgpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFEQ1huN3h0VnFjT2ptTUcvUFpHYWp5UEdVTlFsKzNwaFhnYm1vZmtQMFNoCkJlVTZXbTNkOHB4WThrR0xTT2gxSzl1MlA3RnUyTzY3M21pMkdSOEJPT3NLWS9UK0p0RmlmenlmY3VGMjQ4L3QKcFFjRkNUOGw1Wjc1bXcySTRORXZJU1pnU3piWVN0SXdPU01FcFBJZWRRNzY2QUxtVGVwZXNuVll3K2U2dGlBUgpmS0lqakJEeGcrd1B4b2tseWNxWXRCQVA5dDRKMVk4Vkt6VENNelorcllweWRNNWlIaElFK3VYTlhqSXJTMURMCmxNN3JSS2crblRnRk9ma2dPQ3FaTmlZMTFzdzNWQnJUOW4rM1BhTzB2ekZqbXcrUVNxTVpTclJUS1d1V2xaSkcKT2JiUXUvcGhKdk9hZ2NBbUVXRGorNUdaZTVwS29neXlIdUVSVW0wSzYwST0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=
    ---
    # Source: calico/templates/calico-config.yaml
    # This ConfigMap is used to configure a self-hosted Calico installation.
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: calico-config
      namespace: kube-system
    data:
      # Configure this with the location of your etcd cluster.
      etcd_endpoints: "https://192.168.3.21:2379,https://192.168.3.22:2379,https://192.168.3.23:2379"
      # If you're using TLS enabled etcd uncomment the following.
      # You must also populate the Secret below with these files.
      etcd_ca: "/calico-secrets/etcd-ca" # "/calico-secrets/etcd-ca"
      etcd_cert: "/calico-secrets/etcd-cert" # "/calico-secrets/etcd-cert"
      etcd_key: "/calico-secrets/etcd-key" # "/calico-secrets/etcd-key"
      # Typha is disabled.
      typha_service_name: "none"
      # Configure the backend to use.
      calico_backend: "vxlan"
      # Configure the MTU to use for workload interfaces and tunnels.
      # By default, MTU is auto-detected, and explicitly setting this field should not be required.
      # You can override auto-detection by providing a non-zero value.
      veth_mtu: "0"
      # The CNI network configuration to install on each node. The special
      # values in this config will be automatically populated.
      cni_network_config: |-
        {
          "name": "k8s-pod-network",
          "cniVersion": "0.3.1",
          "plugins": [
            {
              "type": "calico",
              "log_level": "info",
              "log_file_path": "/var/log/calico/cni/cni.log",
              "etcd_endpoints": "__ETCD_ENDPOINTS__",
              "etcd_key_file": "__ETCD_KEY_FILE__",
              "etcd_cert_file": "__ETCD_CERT_FILE__",
              "etcd_ca_cert_file": "__ETCD_CA_CERT_FILE__",
              "mtu": __CNI_MTU__,
              "ipam": {
                "type": "calico-ipam"
              },
              "policy": {
                "type": "k8s"
              },
              "kubernetes": {
                "kubeconfig": "__KUBECONFIG_FILEPATH__"
              }
            },
            {
              "type": "portmap",
              "snat": true,
              "capabilities": {"portMappings": true}
            },
            {
              "type": "bandwidth",
              "capabilities": {"bandwidth": true}
            }
          ]
        }
    ---
    # Source: calico/templates/calico-kube-controllers-rbac.yaml
    # Include a clusterrole for the kube-controllers component,
    # and bind it to the calico-kube-controllers serviceaccount.
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: calico-kube-controllers
    rules:
      # Pods are monitored for changing labels.
      # The node controller monitors Kubernetes nodes.
      # Namespace and serviceaccount labels are used for policy.
      - apiGroups: [""]
        resources:
          - pods
          - nodes
          - namespaces
          - serviceaccounts
        verbs:
          - watch
          - list
          - get
      # Watch for changes to Kubernetes NetworkPolicies.
      - apiGroups: ["networking.k8s.io"]
        resources:
          - networkpolicies
        verbs:
          - watch
          - list
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: calico-kube-controllers
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: calico-kube-controllers
    subjects:
    - kind: ServiceAccount
      name: calico-kube-controllers
      namespace: kube-system
    ---
    ---
    # Source: calico/templates/calico-node-rbac.yaml
    # Include a clusterrole for the calico-node DaemonSet,
    # and bind it to the calico-node serviceaccount.
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: calico-node
    rules:
      # The CNI plugin needs to get pods, nodes, and namespaces.
      - apiGroups: [""]
        resources:
          - pods
          - nodes
          - namespaces
        verbs:
          - get
      # EndpointSlices are used for Service-based network policy rule
      # enforcement.
      - apiGroups: ["discovery.k8s.io"]
        resources:
          - endpointslices
        verbs:
          - watch
          - list
      - apiGroups: [""]
        resources:
          - endpoints
          - services
        verbs:
          # Used to discover service IPs for advertisement.
          - watch
          - list
      # Pod CIDR auto-detection on kubeadm needs access to config maps.
      - apiGroups: [""]
        resources:
          - configmaps
        verbs:
          - get
      - apiGroups: [""]
        resources:
          - nodes/status
        verbs:
          # Needed for clearing NodeNetworkUnavailable flag.
          - patch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: calico-node
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: calico-node
    subjects:
    - kind: ServiceAccount
      name: calico-node
      namespace: kube-system
    ---
    # Source: calico/templates/calico-node.yaml
    # This manifest installs the calico-node container, as well
    # as the CNI plugins and network config on
    # each master and worker node in a Kubernetes cluster.
    kind: DaemonSet
    apiVersion: apps/v1
    metadata:
      name: calico-node
      namespace: kube-system
      labels:
        k8s-app: calico-node
    spec:
      selector:
        matchLabels:
          k8s-app: calico-node
      updateStrategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
      template:
        metadata:
          labels:
            k8s-app: calico-node
        spec:
          nodeSelector:
            kubernetes.io/os: linux
          hostNetwork: true
          tolerations:
            # Make sure calico-node gets scheduled on all nodes.
            - effect: NoSchedule
              operator: Exists
            # Mark the pod as a critical add-on for rescheduling.
            - key: CriticalAddonsOnly
              operator: Exists
            - effect: NoExecute
              operator: Exists
          serviceAccountName: calico-node
          # Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force
          # deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.
          terminationGracePeriodSeconds: 0
          priorityClassName: system-node-critical
          initContainers:
            # This container installs the CNI binaries
            # and CNI network config file on each node.
            - name: install-cni
              image: docker.mirrors.ustc.edu.cn/calico/cni:v3.21.0
              command: ["/opt/cni/bin/install"]
              envFrom:
              - configMapRef:
                  # Allow KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT to be overridden for eBPF mode.
                  name: kubernetes-services-endpoint
                  optional: true
              env:
                # Name of the CNI config file to create.
                - name: CNI_CONF_NAME
                  value: "10-calico.conflist"
                # The CNI network config to install on each node.
                - name: CNI_NETWORK_CONFIG
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: cni_network_config
                # The location of the etcd cluster.
                - name: ETCD_ENDPOINTS
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_endpoints
                # CNI MTU Config variable
                - name: CNI_MTU
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: veth_mtu
                # Prevents the container from sleeping forever.
                - name: SLEEP
                  value: "false"
              volumeMounts:
                - mountPath: /host/opt/cni/bin
                  name: cni-bin-dir
                - mountPath: /host/etc/cni/net.d
                  name: cni-net-dir
                - mountPath: /calico-secrets
                  name: etcd-certs
              securityContext:
                privileged: true
            # Adds a Flex Volume Driver that creates a per-pod Unix Domain Socket to allow Dikastes
            # to communicate with Felix over the Policy Sync API.
            - name: flexvol-driver
              image: docker.mirrors.ustc.edu.cn/calico/pod2daemon-flexvol:v3.21.0
              volumeMounts:
              - name: flexvol-driver-host
                mountPath: /host/driver
              securityContext:
                privileged: true
          containers:
            # Runs calico-node container on each Kubernetes node. This
            # container programs network policy and routes on each
            # host.
            - name: calico-node
              image: docker.mirrors.ustc.edu.cn/calico/node:v3.21.0
              envFrom:
              - configMapRef:
                  # Allow KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT to be overridden for eBPF mode.
                  name: kubernetes-services-endpoint
                  optional: true
              env:
                # The location of the etcd cluster.
                - name: ETCD_ENDPOINTS
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_endpoints
                # Location of the CA certificate for etcd.
                - name: ETCD_CA_CERT_FILE
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_ca
                # Location of the client key for etcd.
                - name: ETCD_KEY_FILE
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_key
                # Location of the client certificate for etcd.
                - name: ETCD_CERT_FILE
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_cert
                # Set noderef for node controller.
                - name: CALICO_K8S_NODE_REF
                  valueFrom:
                    fieldRef:
                      fieldPath: spec.nodeName
                # Choose the backend to use.
                - name: CALICO_NETWORKING_BACKEND
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: calico_backend
                # Cluster type to identify the deployment type
                - name: CLUSTER_TYPE
                  value: "k8s,bgp"
                # Auto-detect the BGP IP address.
                - name: IP
                  value: "autodetect"
                # Enable IPIP
                - name: CALICO_IPV4POOL_IPIP
                  value: "Never"
                # Enable or Disable VXLAN on the default IP pool.
                - name: CALICO_IPV4POOL_VXLAN
                  value: "Always"
                # Set MTU for tunnel device used if ipip is enabled
                - name: FELIX_IPINIPMTU
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: veth_mtu
                # Set MTU for the VXLAN tunnel device.
                - name: FELIX_VXLANMTU
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: veth_mtu
                # Set MTU for the Wireguard tunnel device.
                - name: FELIX_WIREGUARDMTU
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: veth_mtu
                # The default IPv4 pool to create on startup if none exists. Pod IPs will be
                # chosen from this range. Changing this value after installation will have
                # no effect. This should fall within `--cluster-cidr`.
                - name: CALICO_IPV4POOL_CIDR
                  value: "172.16.0.0/16"
                # Disable file logging so `kubectl logs` works.
                - name: CALICO_DISABLE_FILE_LOGGING
                  value: "true"
                # Set Felix endpoint to host default action to ACCEPT.
                - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
                  value: "ACCEPT"
                # Disable IPv6 on Kubernetes.
                - name: FELIX_IPV6SUPPORT
                  value: "false"
                - name: FELIX_HEALTHENABLED
                  value: "true"
              securityContext:
                privileged: true
              resources:
                requests:
                  cpu: 250m
              lifecycle:
                preStop:
                  exec:
                    command:
                    - /bin/calico-node
                    - -shutdown
              livenessProbe:
                exec:
                  command:
                  - /bin/calico-node
                  - -felix-live
                  #- -bird-live
                periodSeconds: 10
                initialDelaySeconds: 10
                failureThreshold: 6
                timeoutSeconds: 10
              readinessProbe:
                exec:
                  command:
                  - /bin/calico-node
                  - -felix-ready
                  #- -bird-ready
                periodSeconds: 10
                timeoutSeconds: 10
              volumeMounts:
                # For maintaining CNI plugin API credentials.
                - mountPath: /host/etc/cni/net.d
                  name: cni-net-dir
                  readOnly: false
                - mountPath: /lib/modules
                  name: lib-modules
                  readOnly: true
                - mountPath: /run/xtables.lock
                  name: xtables-lock
                  readOnly: false
                - mountPath: /var/run/calico
                  name: var-run-calico
                  readOnly: false
                - mountPath: /var/lib/calico
                  name: var-lib-calico
                  readOnly: false
                - mountPath: /calico-secrets
                  name: etcd-certs
                - name: policysync
                  mountPath: /var/run/nodeagent
                # For eBPF mode, we need to be able to mount the BPF filesystem at /sys/fs/bpf so we mount in the
                # parent directory.
                - name: sysfs
                  mountPath: /sys/fs/
                  # Bidirectional means that, if we mount the BPF filesystem at /sys/fs/bpf it will propagate to the host.
                  # If the host is known to mount that filesystem already then Bidirectional can be omitted.
                  mountPropagation: Bidirectional
                - name: cni-log-dir
                  mountPath: /var/log/calico/cni
                  readOnly: true
          volumes:
            # Used by calico-node.
            - name: lib-modules
              hostPath:
                path: /lib/modules
            - name: var-run-calico
              hostPath:
                path: /var/run/calico
            - name: var-lib-calico
              hostPath:
                path: /var/lib/calico
            - name: xtables-lock
              hostPath:
                path: /run/xtables.lock
                type: FileOrCreate
            - name: sysfs
              hostPath:
                path: /sys/fs/
                type: DirectoryOrCreate
            # Used to install CNI.
            - name: cni-bin-dir
              hostPath:
                path: /opt/cni/bin
            - name: cni-net-dir
              hostPath:
                path: /etc/cni/net.d
            # Used to access CNI logs.
            - name: cni-log-dir
              hostPath:
                path: /var/log/calico/cni
            # Mount in the etcd TLS secrets with mode 400.
            # See https://kubernetes.io/docs/concepts/configuration/secret/
            - name: etcd-certs
              secret:
                secretName: calico-etcd-secrets
                defaultMode: 0400
            # Used to create per-pod Unix Domain Sockets
            - name: policysync
              hostPath:
                type: DirectoryOrCreate
                path: /var/run/nodeagent
            # Used to install Flex Volume Driver
            - name: flexvol-driver-host
              hostPath:
                type: DirectoryOrCreate
                path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec/nodeagent~uds
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: calico-node
      namespace: kube-system
    ---
    # Source: calico/templates/calico-kube-controllers.yaml
    # See https://github.com/projectcalico/kube-controllers
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: calico-kube-controllers
      namespace: kube-system
      labels:
        k8s-app: calico-kube-controllers
    spec:
      # The controllers can only have a single active instance.
      replicas: 1
      selector:
        matchLabels:
          k8s-app: calico-kube-controllers
      strategy:
        type: Recreate
      template:
        metadata:
          name: calico-kube-controllers
          namespace: kube-system
          labels:
            k8s-app: calico-kube-controllers
        spec:
          nodeSelector:
            kubernetes.io/os: linux
          tolerations:
            # Mark the pod as a critical add-on for rescheduling.
            - key: CriticalAddonsOnly
              operator: Exists
            - key: node-role.kubernetes.io/master
              effect: NoSchedule
          serviceAccountName: calico-kube-controllers
          priorityClassName: system-cluster-critical
          # The controllers must run in the host network namespace so that
          # it isn't governed by policy that would prevent it from working.
          hostNetwork: true
          containers:
            - name: calico-kube-controllers
              image: docker.mirrors.ustc.edu.cn/calico/kube-controllers:v3.21.0
              env:
                # The location of the etcd cluster.
                - name: ETCD_ENDPOINTS
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_endpoints
                # Location of the CA certificate for etcd.
                - name: ETCD_CA_CERT_FILE
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_ca
                # Location of the client key for etcd.
                - name: ETCD_KEY_FILE
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_key
                # Location of the client certificate for etcd.
                - name: ETCD_CERT_FILE
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_cert
                # Choose which controllers to run.
                - name: ENABLED_CONTROLLERS
                  value: policy,namespace,serviceaccount,workloadendpoint,node
              volumeMounts:
                # Mount in the etcd TLS secrets.
                - mountPath: /calico-secrets
                  name: etcd-certs
              livenessProbe:
                exec:
                  command:
                  - /usr/bin/check-status
                  - -l
                periodSeconds: 10
                initialDelaySeconds: 10
                failureThreshold: 6
                timeoutSeconds: 10
              readinessProbe:
                exec:
                  command:
                  - /usr/bin/check-status
                  - -r
                periodSeconds: 10
          volumes:
            # Mount in the etcd TLS secrets with mode 400.
            # See https://kubernetes.io/docs/concepts/configuration/secret/
            - name: etcd-certs
              secret:
                secretName: calico-etcd-secrets
                defaultMode: 0440
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: calico-kube-controllers
      namespace: kube-system
    ---
    # This manifest creates a Pod Disruption Budget for Controller to allow K8s Cluster Autoscaler to evict
    apiVersion: policy/v1beta1
    kind: PodDisruptionBudget
    metadata:
      name: calico-kube-controllers
      namespace: kube-system
      labels:
        k8s-app: calico-kube-controllers
    spec:
      maxUnavailable: 1
      selector:
        matchLabels:
          k8s-app: calico-kube-controllers
    ---
    # Source: calico/templates/calico-typha.yaml
    ---
    # Source: calico/templates/configure-canal.yaml
    ---
    # Source: calico/templates/kdd-crds.yaml
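The three values in the `calico-etcd-secrets` Secret are the base64-encoded contents of the etcd key, certificate, and CA files, as the manifest's own comment describes. A small sketch of producing such a value (`secret_value` is a hypothetical helper; `-w 0` is the GNU coreutils option that disables line wrapping):

```shell
#!/bin/bash
# Sketch: print the base64 encoding of a file on a single line,
# which is the form the Secret's data fields expect.
# secret_value is a hypothetical helper; -w 0 (GNU coreutils) disables line wrapping.
secret_value() {
  base64 -w 0 < "$1"
}
```

With the files generated earlier in /root/ssl, `secret_value /root/ssl/etcd-key.pem` yields the value for `etcd-key`, `secret_value /root/ssl/etcd.pem` for `etcd-cert`, and `secret_value /root/ssl/ca.pem` for `etcd-ca`.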

Install Calico

# kubectl apply -f calico-etcd.yaml

Verify the cluster

[root@deploy ~]# kubectl get pod -n kube-system
NAME                                      READY   STATUS    RESTARTS   AGE
calico-kube-controllers-9767fc4b9-tk9fb   1/1     Running   0          6m56s
calico-node-5mc9h                         1/1     Running   0          6m56s
calico-node-dswmp                         1/1     Running   0          6m56s
calico-node-qht2s                         1/1     Running   0          6m56s
calico-node-sdrcg                         1/1     Running   0          6m56s
calico-node-x58lj                         1/1     Running   0          6m56s
coredns-7f6cbbb7b8-fc8rd                  1/1     Running   0          61m
coredns-7f6cbbb7b8-qvw2m                  1/1     Running   0          61m
kube-apiserver-master1                    1/1     Running   2          94m
kube-apiserver-master2                    1/1     Running   0          66m
kube-apiserver-master3                    1/1     Running   0          64m
kube-controller-manager-master1           1/1     Running   2          94m
kube-controller-manager-master2           1/1     Running   0          66m
kube-controller-manager-master3           1/1     Running   0          64m
kube-proxy-bscfn                          1/1     Running   0          62m
kube-proxy-f2fpb                          1/1     Running   0          64m
kube-proxy-kt7nl                          1/1     Running   0          66m
kube-proxy-lzww8                          1/1     Running   0          62m
kube-proxy-zn6gj                          1/1     Running   2          94m
kube-scheduler-master1                    1/1     Running   2          94m
kube-scheduler-master2                    1/1     Running   0          66m
kube-scheduler-master3                    1/1     Running   0          64m
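With this many pods, scanning the listing by eye is error-prone. The check can be sketched as a small filter over the command output. The function name not_ready_pods is hypothetical (it is not part of the article or of kubectl); it only assumes the default column layout shown above, where READY is the second column and STATUS the third.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the article): read `kubectl get pod` output on
# stdin and print the name of every pod that is not fully ready and Running.
not_ready_pods() {
  awk 'NR > 1 {
         split($2, ready, "/")   # READY column has the form ready/total
         if ($3 != "Running" || ready[1] != ready[2]) print $1
       }'
}
```

On the deploy host it could be used as: kubectl get pod -n kube-system | not_ready_pods. Empty output would mean every listed pod is Running with all containers ready.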

Problems and solutions

1. The kubelet log reports: failed to get cgroup stats for "/system.slice/kubelet.service"

11月 18 09:00:42 master1 kubelet[2424]: E1118 09:00:42.948672 2424 summary_sys_containers.go:47] "Failed to get system container stats" err="failed to get cgroup stats for \"/system.slice/kubelet.service\": failed to get container info for \"/system.slice/kubelet.service\": unknown container \"/system.slice/kubelet.service\"" containerName="/system.slice/kubelet.service"
11月 18 09:00:52 master1 kubelet[2424]: E1118 09:00:52.956142 2424 summary_sys_containers.go:47] "Failed to get system container stats" err="failed to get cgroup stats for \"/system.slice/kubelet.service\": failed to get container info for \"/system.slice/kubelet.service\": unknown container \"/system.slice/kubelet.service\"" containerName="/system.slice/kubelet.service"
11月 18 09:01:02 master1 kubelet[2424]: E1118 09:01:02.961022 2424 summary_sys_containers.go:47] "Failed to get system container stats" err="failed to get cgroup stats for \"/system.slice/kubelet.service\": failed to get container info for \"/system.slice/kubelet.service\": unknown container \"/system.slice/kubelet.service\"" containerName="/system.slice/kubelet.service"
11月 18 09:01:12 master1 kubelet[2424]: E1118 09:01:12.966033 2424 summary_sys_containers.go:47] "Failed to get system container stats" err="failed to get cgroup stats for \"/system.slice/kubelet.service\": failed to get container info for \"/system.slice/kubelet.service\": unknown container \"/system.slice/kubelet.service\"" containerName="/system.slice/kubelet.service"
11月 18 09:01:22 master1 kubelet[2424]: E1118 09:01:22.970644 2424 summary_sys_containers.go:47] "Failed to get system container stats" err="failed to get cgroup stats for \"/system.slice/kubelet.service\": failed to get container info for \"/system.slice/kubelet.service\": unknown container \"/system.slice/kubelet.service\"" containerName="/system.slice/kubelet.service"

Solution

Add CPUAccounting=true and MemoryAccounting=true to the [Service] section of the kubelet systemd drop-in file:

[root@master2 ~]# cat /lib/systemd/system/kubelet.service.d/10-kubeadm.conf
# Note: This dropin only works with kubeadm and kubelet v1.11+
[Service]
CPUAccounting=true
MemoryAccounting=true
Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"
Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"
# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically
EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env
# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use
# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.
EnvironmentFile=-/etc/sysconfig/kubelet
ExecStart=
ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS
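The edit above has to be repeated on every node that shows the error, so it may be worth scripting. The following is a minimal sketch, not the author's procedure: the function name enable_accounting is hypothetical, and it assumes GNU sed (as shipped on CentOS) and a file that contains a [Service] header on its own line.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the article): add CPUAccounting=true and
# MemoryAccounting=true right after the [Service] header of a systemd unit
# or drop-in file, skipping any setting that is already present.
# Assumes GNU sed (one-line form of the "a" command and -i without a suffix).
enable_accounting() {
  local file="$1"
  grep -q '^CPUAccounting=true' "$file" ||
    sed -i '/^\[Service\]/a CPUAccounting=true' "$file"
  grep -q '^MemoryAccounting=true' "$file" ||
    sed -i '/^\[Service\]/a MemoryAccounting=true' "$file"
}
```

It would be called with the drop-in path shown above, for example enable_accounting /lib/systemd/system/kubelet.service.d/10-kubeadm.conf. systemd only picks up the change after systemctl daemon-reload followed by systemctl restart kubelet.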

2. kubectl get cs reports: dial tcp 127.0.0.1:10251: connect: connection refused

[root@deploy ~]# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS      MESSAGE                         ERROR
scheduler            Unhealthy   Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused
etcd-1               Healthy     {"health":"true","reason":""}
controller-manager   Healthy     ok
etcd-0               Healthy     {"health":"true","reason":""}
etcd-2               Healthy     {"health":"true","reason":""}

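Solution

Comment out the --port=0 flag in the kube-scheduler static Pod manifest. With --port=0 the scheduler does not listen on the insecure port 10251, which is the port kubectl get cs probes.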

[root@master1 ~]# cat /etc/kubernetes/manifests/kube-scheduler.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    component: kube-scheduler
    tier: control-plane
  name: kube-scheduler
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-scheduler
    - --authentication-kubeconfig=/etc/kubernetes/scheduler.conf
    - --authorization-kubeconfig=/etc/kubernetes/scheduler.conf
    - --bind-address=127.0.0.1
    - --kubeconfig=/etc/kubernetes/scheduler.conf
    - --leader-elect=true
    # - --port=0
    image: registry.aliyuncs.com/google_containers/kube-scheduler:v1.22.3
    imagePullPolicy: IfNotPresent

[root@deploy ~]# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS    MESSAGE                         ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-1               Healthy   {"health":"true","reason":""}
etcd-2               Healthy   {"health":"true","reason":""}
etcd-0               Healthy   {"health":"true","reason":""}
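The manifest edit for the scheduler can also be scripted, which helps if it has to be applied on each master. This is a sketch under assumptions rather than the author's exact steps: the function name comment_out_port_zero is hypothetical, and GNU sed is assumed for the -i and -E options.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the article): comment out the "- --port=0"
# argument in a kube-scheduler manifest, keeping the original indentation.
# A line that is already commented out no longer matches, so the function
# is safe to run more than once. Assumes GNU sed.
comment_out_port_zero() {
  local manifest="$1"
  sed -i -E 's/^([[:space:]]*)(- --port=0[[:space:]]*)$/\1# \2/' "$manifest"
}
```

For example: comment_out_port_zero /etc/kubernetes/manifests/kube-scheduler.yaml. The kubelet watches the static Pod manifest directory and recreates the kube-scheduler Pod after the file changes, so no separate restart command should be needed.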

Author: 宗庄凯
