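Image segmentation experiment: building the FCN dataset, defining the network model, and training the network (the dataset and model files are provided for reference)

Paper: "Fully Convolutional Networks for Semantic Segmentation"

Code: Caffe implementation of FCN

Dataset: Pascal VOC

I. Dataset preparation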

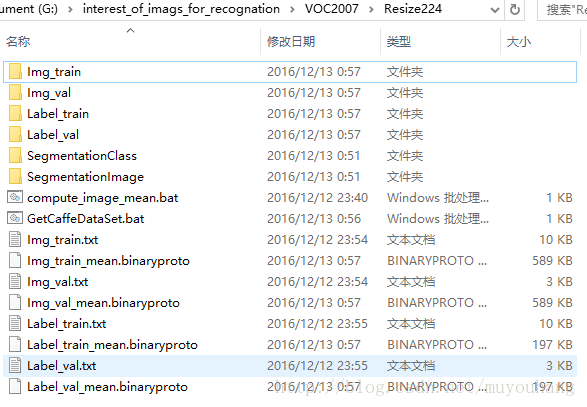

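After downloading the Pascal VOC data, build the image dataset and the label dataset used for segmentation, in LMDB or LevelDB format. It is best to resize the images first, padding them rather than stretching them.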
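
The padded resize mentioned above can be sketched as a small geometry calculation. This is only an illustration under assumptions: the target size of 224 is inferred from the `Resize224` folder name used later in the post, and the function name `letterbox_geometry` is hypothetical.

```python
def letterbox_geometry(width, height, target=224):
    """Return (new_w, new_h, pad_left, pad_top) for an aspect-preserving resize.

    The image is scaled so that its longer side equals `target`, then centered
    on a target x target canvas; the remaining border is padding.
    """
    scale = target / max(width, height)
    new_w = max(1, round(width * scale))
    new_h = max(1, round(height * scale))
    pad_left = (target - new_w) // 2
    pad_top = (target - new_h) // 2
    return new_w, new_h, pad_left, pad_top


if __name__ == "__main__":
    # A 500x375 image is scaled to 224x168 and padded by 28 px above and below.
    print(letterbox_geometry(500, 375))  # (224, 168, 0, 28)
```

When the same geometry is applied to the label images, nearest-neighbor interpolation should be used so that class values are never blended into values that belong to no class.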

1. Folder layout

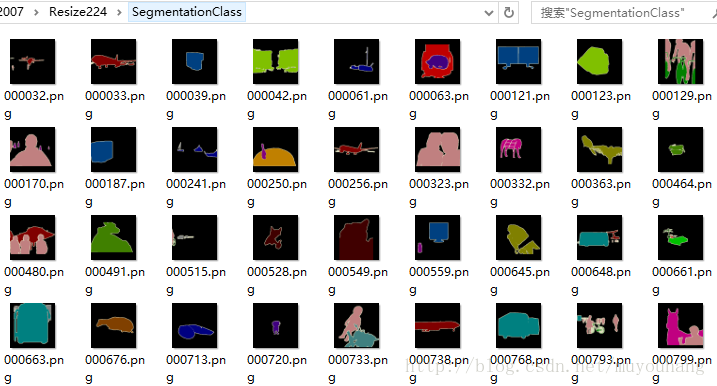

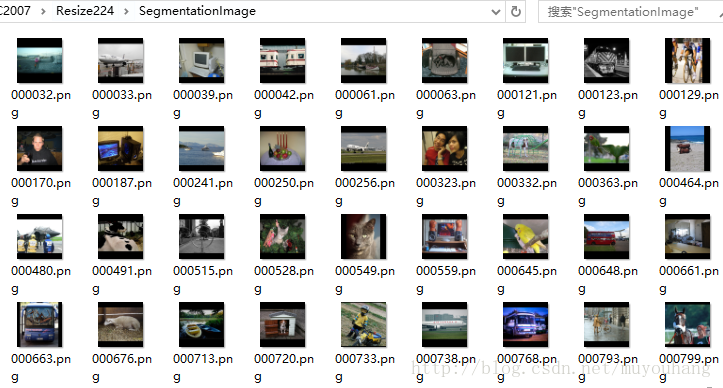

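The dataset folder contains the original images and the label images (see the screenshots in this section).

Next, build the corresponding LMDB files. All images can be split into train and val sets at a 4:1 ratio. Each txt file only needs to list the image paths. No label column is needed, because in segmentation the label of an image is itself an image, and the pairing is specified in the Caffe network definition.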

Img_train.txt

SegmentationImage/002120.png

SegmentationImage/002132.png

SegmentationImage/002142.png

SegmentationImage/002212.png

SegmentationImage/002234.png

SegmentationImage/002260.png

SegmentationImage/002266.png

SegmentationImage/002268.png

SegmentationImage/002273.png

SegmentationImage/002281.png

SegmentationImage/002284.png

SegmentationImage/002293.png

SegmentationImage/002361.png

Label_train.txt

SegmentationClass/002120.png

SegmentationClass/002132.png

SegmentationClass/002142.png

SegmentationClass/002212.png

SegmentationClass/002234.png

SegmentationClass/002260.png

SegmentationClass/002266.png

SegmentationClass/002268.png

SegmentationClass/002273.png

SegmentationClass/002281.png

SegmentationClass/002284.png

SegmentationClass/002293.png
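
The 4:1 split and the two list files can be sketched as follows. This is a minimal illustration, not the script used in the post: the helper names `split_train_val` and `list_lines` and the fixed random seed are assumptions. The key point is that both lists are generated from the same ordered stems, so line N of the image list always matches line N of the label list.

```python
import random


def split_train_val(stems, val_fraction=0.2, seed=0):
    """Shuffle image stems and split them into (train, val) at roughly 4:1."""
    stems = sorted(stems)
    random.Random(seed).shuffle(stems)
    n_val = int(len(stems) * val_fraction)
    return stems[n_val:], stems[:n_val]


def list_lines(stems, folder, ext=".png"):
    """Format one relative path per line, with no label column."""
    return [f"{folder}/{stem}{ext}" for stem in stems]


if __name__ == "__main__":
    stems = ["002120", "002132", "002142", "002212", "002234"]
    train, val = split_train_val(stems)
    # The same stem order is used for both lists so image and label stay paired.
    print("\n".join(list_lines(train, "SegmentationImage")))
    print("\n".join(list_lines(train, "SegmentationClass")))
```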

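Note: you have to generate the labels yourself from the ground-truth images under SegmentationClass. The pixel color of each class is as follows:

| Class | R | G | B |
| --- | --- | --- | --- |
| background | 0 | 0 | 0 |
| aeroplane | 128 | 0 | 0 |
| bicycle | 0 | 128 | 0 |
| bird | 128 | 128 | 0 |
| boat | 0 | 0 | 128 |
| bottle | 128 | 0 | 128 |
| bus | 0 | 128 | 128 |
| car | 128 | 128 | 128 |
| cat | 64 | 0 | 0 |
| chair | 192 | 0 | 0 |
| cow | 64 | 128 | 0 |
| diningtable | 192 | 128 | 0 |
| dog | 64 | 0 | 128 |
| horse | 192 | 0 | 128 |
| motorbike | 64 | 128 | 128 |
| person | 192 | 128 | 128 |
| pottedplant | 0 | 64 | 0 |
| sheep | 128 | 64 | 0 |
| sofa | 0 | 192 | 0 |
| train | 128 | 192 | 0 |
| tvmonitor | 0 | 64 | 128 |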

Process the ground-truth images in the dataset to generate the label images used for training.

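Note that the label files must be single-channel grayscale images. Otherwise training fails because the output of the score layer and the label data differ in shape, a mismatch caused by the number of channels.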
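
The color-to-label conversion can be sketched like this. It is a minimal pure-Python illustration that works on rows of (R, G, B) tuples; reading and writing the actual PNG files is left out. Two assumptions are made here: class indices follow the order of the color table (0 for background through 20 for tvmonitor), and any pixel whose color matches no class, such as the (224, 224, 192) object-boundary color in VOC annotations, is mapped to 255.

```python
# Pascal VOC colors in class-index order (0 = background, ..., 20 = tvmonitor).
VOC_COLORS = [
    (0, 0, 0), (128, 0, 0), (0, 128, 0), (128, 128, 0), (0, 0, 128),
    (128, 0, 128), (0, 128, 128), (128, 128, 128), (64, 0, 0), (192, 0, 0),
    (64, 128, 0), (192, 128, 0), (64, 0, 128), (192, 0, 128), (64, 128, 128),
    (192, 128, 128), (0, 64, 0), (128, 64, 0), (0, 192, 0), (128, 192, 0),
    (0, 64, 128),
]
COLOR_TO_INDEX = {color: index for index, color in enumerate(VOC_COLORS)}
IGNORE_INDEX = 255  # assumption: value used for pixels that match no class color


def rgb_to_label(rgb_rows):
    """Map rows of (R, G, B) pixels to rows of class indices (one channel)."""
    return [
        [COLOR_TO_INDEX.get(tuple(pixel), IGNORE_INDEX) for pixel in row]
        for row in rgb_rows
    ]


if __name__ == "__main__":
    tiny = [[(0, 0, 0), (128, 0, 0)], [(192, 128, 128), (224, 224, 192)]]
    print(rgb_to_label(tiny))  # [[0, 1], [15, 255]]
```

The result has exactly one value per pixel, which is what a single-channel grayscale label image stores.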

Then generate the LMDB files, and the dataset is ready.
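
One possible way to generate the LMDB files is Caffe's `convert_imageset` tool. The sketch below only builds the command lines and does not run them. The root folder `data/` and the helper name `convert_imageset_cmd` are hypothetical, and it assumes that your build of the tool accepts list lines without a label column, as described above; if it does not, appending a dummy `0` to each line is a common workaround. Shuffling is deliberately not enabled, so that the image and label databases keep the same order.

```python
def convert_imageset_cmd(root, list_file, db_name, gray=False):
    """Build the argument list for Caffe's convert_imageset tool."""
    cmd = ["convert_imageset", "--backend=lmdb"]
    if gray:
        cmd.append("--gray")  # read images as single-channel grayscale
    # Positional arguments: image root folder, list file, output database.
    cmd += [root, list_file, db_name]
    return cmd


if __name__ == "__main__":
    # Color images for the data layer, grayscale labels for the label layer.
    print(" ".join(convert_imageset_cmd("data/", "Img_train.txt", "Img_train")))
    print(" ".join(convert_imageset_cmd("data/", "Label_train.txt", "Label_train", gray=True)))
```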

II. Network model definition

The main concern here is the data input: specify the data and label layers as follows.

    layer {
      name: "data"
      type: "Data"
      top: "data"
      include {
        phase: TRAIN
      }
      transform_param {
        scale: 0.00390625
        mean_file: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Img_train_mean.binaryproto"
      }
      data_param {
        source: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Img_train"
        batch_size:
        backend: LMDB
      }
    }
    layer {
      name: "label"
      type: "Data"
      top: "label"
      include {
        phase: TRAIN
      }
      transform_param {
        scale: 0.00390625
        mean_file: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Label_train_mean.binaryproto"
      }
      data_param {
        source: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Label_train"
        batch_size:
        backend: LMDB
      }
    }
    layer {
      name: "data"
      type: "Data"
      top: "data"
      include {
        phase: TEST
      }
      transform_param {
        scale: 0.00390625
        mean_file: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Img_val_mean.binaryproto"
      }
      data_param {
        source: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Img_val"
        batch_size:
        backend: LMDB
      }
    }
    layer {
      name: "label"
      type: "Data"
      top: "label"
      include {
        phase: TEST
      }
      transform_param {
        scale: 0.00390625
        mean_file: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Label_val_mean.binaryproto"
      }
      data_param {
        source: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Label_val"
        batch_size:
        backend: LMDB
      }
    }
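
One point that may be worth checking in the label layers is the effect of `transform_param`. The sketch below assumes that the label LMDB stores raw class indices (0 to 20), that Caffe's data transformer computes (value - mean) * scale per pixel, and that the softmax loss casts each label value to an integer. The mean value of 3.0 is purely hypothetical. Under those assumptions every label would collapse to class 0, so dropping `scale` and `mean_file` from the label layers may be safer.

```python
def transform(value, mean, scale):
    """Mimic the per-pixel transform: subtract the mean, then multiply by scale."""
    return (value - mean) * scale


if __name__ == "__main__":
    scale = 0.00390625  # 1/256, the value used in the transform_param blocks
    mean = 3.0          # hypothetical mean value for a label pixel
    for class_index in (0, 1, 15, 20):
        scaled = transform(class_index, mean, scale)
        # int() truncates toward zero, like a C++ static_cast<int>.
        print(class_index, "->", scaled, "->", int(scaled))
```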

III. Network training

It is best to fine-tune from pretrained weights; otherwise the loss decreases too slowly.
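
Fine-tuning with the `caffe` command-line tool amounts to passing a pretrained model through the `--weights` flag. The sketch below only builds the command line. The file names `solver.prototxt` and `pretrained.caffemodel` and the helper name `caffe_train_cmd` are hypothetical, since the post does not name the solver file or the pretrained model.

```python
def caffe_train_cmd(solver, weights=None, gpu="0"):
    """Build the argument list for `caffe train`, optionally with initial weights."""
    cmd = ["caffe", "train", f"--solver={solver}", f"--gpu={gpu}"]
    if weights:
        # --weights copies parameters into layers whose names match the model file.
        cmd.append(f"--weights={weights}")
    return cmd


if __name__ == "__main__":
    print(" ".join(caffe_train_cmd("solver.prototxt", "pretrained.caffemodel")))
```

Layers whose names do not appear in the pretrained model keep their initial values, so only the matching layers start from pretrained parameters.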

Log file created at: // ::

Running on machine: DESKTOP

Log line format: [IWEF]mmdd hh:mm:ss.uuuuuu threadid file:line] msg

I1213 ::07.177220 caffe.cpp:] Using GPUs

I1213 ::07.436894 caffe.cpp:] GPU : GeForce GTX

I1213 ::07.758122 common.cpp:] System entropy source not available, using fallback algorithm to generate seed instead.

I1213 ::07.758623 solver.cpp:] Initializing solver from parameters:

test_iter:

test_interval:

base_lr: 1e-

display:

max_iter:

lr_policy: "fixed"

momentum: 0.95

weight_decay: 0.0005

snapshot:

snapshot_prefix: "FCN"

solver_mode: GPU

device_id:

net: "train_val.prototxt"

train_state {

level:

stage: ""

}

iter_size:

I1213 ::07.759624 solver.cpp:] Creating training net from net file: train_val.prototxt

I1213 ::07.760124 net.cpp:] The NetState phase () differed from the phase () specified by a rule in layer data

I1213 ::07.760124 net.cpp:] The NetState phase () differed from the phase () specified by a rule in layer label

I1213 ::07.760124 net.cpp:] The NetState phase () differed from the phase () specified by a rule in layer accuracy

I1213 ::07.761126 net.cpp:] Initializing net from parameters:

state {

phase: TRAIN

level:

stage: ""

}

layer {

name: "data"

type: "Data"

top: "data"

include {

phase: TRAIN

}

transform_param {

scale: 0.00390625

mean_file: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Img_train_mean.binaryproto"

}

data_param {

source: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Img_train"

batch_size:

backend: LMDB

}

}

layer {

name: "label"

type: "Data"

top: "label"

include {

phase: TRAIN

}

transform_param {

scale: 0.00390625

mean_file: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Label_train_mean.binaryproto"

}

data_param {

source: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Label_train"

batch_size:

backend: LMDB

}

}

layer {

name: "conv1_1"

type: "Convolution"

bottom: "data"

top: "conv1_1"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu1_1"

type: "ReLU"

bottom: "conv1_1"

top: "conv1_1"

}

layer {

name: "conv1_2"

type: "Convolution"

bottom: "conv1_1"

top: "conv1_2"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu1_2"

type: "ReLU"

bottom: "conv1_2"

top: "conv1_2"

}

layer {

name: "pool1"

type: "Pooling"

bottom: "conv1_2"

top: "pool1"

pooling_param {

pool: MAX

kernel_size:

stride:

}

}

layer {

name: "conv2_1"

type: "Convolution"

bottom: "pool1"

top: "conv2_1"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu2_1"

type: "ReLU"

bottom: "conv2_1"

top: "conv2_1"

}

layer {

name: "conv2_2"

type: "Convolution"

bottom: "conv2_1"

top: "conv2_2"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu2_2"

type: "ReLU"

bottom: "conv2_2"

top: "conv2_2"

}

layer {

name: "pool2"

type: "Pooling"

bottom: "conv2_2"

top: "pool2"

pooling_param {

pool: MAX

kernel_size:

stride:

}

}

layer {

name: "conv3_1"

type: "Convolution"

bottom: "pool2"

top: "conv3_1"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu3_1"

type: "ReLU"

bottom: "conv3_1"

top: "conv3_1"

}

layer {

name: "conv3_2"

type: "Convolution"

bottom: "conv3_1"

top: "conv3_2"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu3_2"

type: "ReLU"

bottom: "conv3_2"

top: "conv3_2"

}

layer {

name: "conv3_3"

type: "Convolution"

bottom: "conv3_2"

top: "conv3_3"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu3_3"

type: "ReLU"

bottom: "conv3_3"

top: "conv3_3"

}

layer {

name: "pool3"

type: "Pooling"

bottom: "conv3_3"

top: "pool3"

pooling_param {

pool: MAX

kernel_size:

stride:

}

}

layer {

name: "conv4_1"

type: "Convolution"

bottom: "pool3"

top: "conv4_1"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu4_1"

type: "ReLU"

bottom: "conv4_1"

top: "conv4_1"

}

layer {

name: "conv4_2"

type: "Convolution"

bottom: "conv4_1"

top: "conv4_2"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu4_2"

type: "ReLU"

bottom: "conv4_2"

top: "conv4_2"

}

layer {

name: "conv4_3"

type: "Convolution"

bottom: "conv4_2"

top: "conv4_3"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu4_3"

type: "ReLU"

bottom: "conv4_3"

top: "conv4_3"

}

layer {

name: "pool4"

type: "Pooling"

bottom: "conv4_3"

top: "pool4"

pooling_param {

pool: MAX

kernel_size:

stride:

}

}

layer {

name: "conv5_1"

type: "Convolution"

bottom: "pool4"

top: "conv5_1"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu5_1"

type: "ReLU"

bottom: "conv5_1"

top: "conv5_1"

}

layer {

name: "conv5_2"

type: "Convolution"

bottom: "conv5_1"

top: "conv5_2"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu5_2"

type: "ReLU"

bottom: "conv5_2"

top: "conv5_2"

}

layer {

name: "conv5_3"

type: "Convolution"

bottom: "conv5_2"

top: "conv5_3"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu5_3"

type: "ReLU"

bottom: "conv5_3"

top: "conv5_3"

}

layer {

name: "pool5"

type: "Pooling"

bottom: "conv5_3"

top: "pool5"

pooling_param {

pool: MAX

kernel_size:

stride:

}

}

layer {

name: "fc6"

type: "Convolution"

bottom: "pool5"

top: "fc6"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu6"

type: "ReLU"

bottom: "fc6"

top: "fc6"

}

layer {

name: "drop6"

type: "Dropout"

bottom: "fc6"

top: "fc6"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc7"

type: "Convolution"

bottom: "fc6"

top: "fc7"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu7"

type: "ReLU"

bottom: "fc7"

top: "fc7"

}

layer {

name: "drop7"

type: "Dropout"

bottom: "fc7"

top: "fc7"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "score_fr"

type: "Convolution"

bottom: "fc7"

top: "score_fr"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

}

}

layer {

name: "upscore2"

type: "Deconvolution"

bottom: "score_fr"

top: "upscore2"

param {

lr_mult:

}

convolution_param {

num_output:

bias_term: false

kernel_size:

stride:

}

}

layer {

name: "score_pool4"

type: "Convolution"

bottom: "pool4"

top: "score_pool4"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

}

}

layer {

name: "score_pool4c"

type: "Crop"

bottom: "score_pool4"

bottom: "upscore2"

top: "score_pool4c"

crop_param {

axis:

offset:

}

}

layer {

name: "fuse_pool4"

type: "Eltwise"

bottom: "upscore2"

bottom: "score_pool4c"

top: "fuse_pool4"

eltwise_param {

operation: SUM

}

}

layer {

name: "upscore_pool4"

type: "Deconvolution"

bottom: "fuse_pool4"

top: "upscore_pool4"

param {

lr_mult:

}

convolution_param {

num_output:

bias_term: false

kernel_size:

stride:

}

}

layer {

name: "score_pool3"

type: "Convolution"

bottom: "pool3"

top: "score_pool3"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

}

}

layer {

name: "score_pool3c"

type: "Crop"

bottom: "score_pool3"

bottom: "upscore_pool4"

top: "score_pool3c"

crop_param {

axis:

offset:

}

}

layer {

name: "fuse_pool3"

type: "Eltwise"

bottom: "upscore_pool4"

bottom: "score_pool3c"

top: "fuse_pool3"

eltwise_param {

operation: SUM

}

}

layer {

name: "upscore8"

type: "Deconvolution"

bottom: "fuse_pool3"

top: "upscore8"

param {

lr_mult:

}

convolution_param {

num_output:

bias_term: false

kernel_size:

stride:

}

}

layer {

name: "score"

type: "Crop"

bottom: "upscore8"

bottom: "data"

top: "score"

crop_param {

axis:

offset:

}

}

layer {

name: "loss"

type: "SoftmaxWithLoss"

bottom: "score"

bottom: "label"

top: "loss"

loss_param {

ignore_label:

normalize: false

}

}

I1213 ::07.787643 layer_factory.hpp:] Creating layer data

I1213 ::07.788645 common.cpp:] System entropy source not available, using fallback algorithm to generate seed instead.

I1213 ::07.789145 net.cpp:] Creating Layer data

I1213 ::07.789645 net.cpp:] data -> data

I1213 ::07.790145 common.cpp:] System entropy source not available, using fallback algorithm to generate seed instead.

I1213 ::07.790145 data_transformer.cpp:] Loading mean file from: G:/interest_of_imags_for_recognation/VOC2007/Resize224/Img_train_mean.binaryproto

I1213 ::07.791647 db_lmdb.cpp:] Opened lmdb G:/interest_of_imags_for_recognation/VOC2007/Resize224/Img_train

I1213 ::07.841182 data_layer.cpp:] output data size: ,,,

I1213 ::07.846186 net.cpp:] Setting up data

I1213 ::07.846688 net.cpp:] Top shape: ()

I1213 ::07.849189 common.cpp:] System entropy source not available, using fallback algorithm to generate seed instead.

I1213 ::07.849689 net.cpp:] Memory required for data:

I1213 ::07.852190 layer_factory.hpp:] Creating layer data_data_0_split

I1213 ::07.853691 net.cpp:] Creating Layer data_data_0_split

I1213 ::07.855195 net.cpp:] data_data_0_split <- data

I1213 ::07.856194 net.cpp:] data_data_0_split -> data_data_0_split_0

I1213 ::07.857697 net.cpp:] data_data_0_split -> data_data_0_split_1

I1213 ::07.858695 net.cpp:] Setting up data_data_0_split

I1213 ::07.859695 net.cpp:] Top shape: ()

I1213 ::07.862702 net.cpp:] Top shape: ()

I1213 ::07.864199 net.cpp:] Memory required for data:

I1213 ::07.865211 layer_factory.hpp:] Creating layer label

I1213 ::07.866701 net.cpp:] Creating Layer label

I1213 ::07.867712 net.cpp:] label -> label

I1213 ::07.869706 common.cpp:] System entropy source not available, using fallback algorithm to generate seed instead.

I1213 ::07.870203 data_transformer.cpp:] Loading mean file from: G:/interest_of_imags_for_recognation/VOC2007/Resize224/Label_train_mean.binaryproto

I1213 ::07.873206 db_lmdb.cpp:] Opened lmdb G:/interest_of_imags_for_recognation/VOC2007/Resize224/Label_train

I1213 ::07.875710 data_layer.cpp:] output data size: ,,,

I1213 ::07.877709 net.cpp:] Setting up label

I1213 ::07.879212 net.cpp:] Top shape: ()

I1213 ::07.881211 common.cpp:] System entropy source not available, using fallback algorithm to generate seed instead.

I1213 ::07.882211 net.cpp:] Memory required for data:

I1213 ::07.883713 layer_factory.hpp:] Creating layer conv1_1

I1213 ::07.884716 net.cpp:] Creating Layer conv1_1

I1213 ::07.885215 net.cpp:] conv1_1 <- data_data_0_split_0

I1213 ::07.886214 net.cpp:] conv1_1 -> conv1_1

I1213 ::08.172420 net.cpp:] Setting up conv1_1

I1213 ::08.172919 net.cpp:] Top shape: ()

I1213 ::08.173419 net.cpp:] Memory required for data:

I1213 ::08.173919 layer_factory.hpp:] Creating layer relu1_1

I1213 ::08.173919 net.cpp:] Creating Layer relu1_1

I1213 ::08.173919 net.cpp:] relu1_1 <- conv1_1

I1213 ::08.174420 net.cpp:] relu1_1 -> conv1_1 (in-place)

I1213 ::08.174921 net.cpp:] Setting up relu1_1

I1213 ::08.175420 net.cpp:] Top shape: ()

I1213 ::08.175921 net.cpp:] Memory required for data:

I1213 ::08.175921 layer_factory.hpp:] Creating layer conv1_2

I1213 ::08.176421 net.cpp:] Creating Layer conv1_2

I1213 ::08.176421 net.cpp:] conv1_2 <- conv1_1

I1213 ::08.176421 net.cpp:] conv1_2 -> conv1_2

I1213 ::08.178923 net.cpp:] Setting up conv1_2

I1213 ::08.179424 net.cpp:] Top shape: ()

I1213 ::08.179424 net.cpp:] Memory required for data:

I1213 ::08.179424 layer_factory.hpp:] Creating layer relu1_2

I1213 ::08.179424 net.cpp:] Creating Layer relu1_2

I1213 ::08.180424 net.cpp:] relu1_2 <- conv1_2

I1213 ::08.180424 net.cpp:] relu1_2 -> conv1_2 (in-place)

I1213 ::08.180924 net.cpp:] Setting up relu1_2

I1213 ::08.181426 net.cpp:] Top shape: ()

I1213 ::08.181426 net.cpp:] Memory required for data:

I1213 ::08.181426 layer_factory.hpp:] Creating layer pool1

I1213 ::08.181426 net.cpp:] Creating Layer pool1

I1213 ::08.182425 net.cpp:] pool1 <- conv1_2

I1213 ::08.182425 net.cpp:] pool1 -> pool1

I1213 ::08.182425 net.cpp:] Setting up pool1

I1213 ::08.183426 net.cpp:] Top shape: ()

I1213 ::08.183426 net.cpp:] Memory required for data:

I1213 ::08.183426 layer_factory.hpp:] Creating layer conv2_1

I1213 ::08.183926 net.cpp:] Creating Layer conv2_1

I1213 ::08.183926 net.cpp:] conv2_1 <- pool1

I1213 ::08.183926 net.cpp:] conv2_1 -> conv2_1

I1213 ::08.189931 net.cpp:] Setting up conv2_1

I1213 ::08.189931 net.cpp:] Top shape: ()

I1213 ::08.190433 net.cpp:] Memory required for data:

I1213 ::08.190932 layer_factory.hpp:] Creating layer relu2_1

I1213 ::08.191432 net.cpp:] Creating Layer relu2_1

I1213 ::08.191432 net.cpp:] relu2_1 <- conv2_1

I1213 ::08.191432 net.cpp:] relu2_1 -> conv2_1 (in-place)

I1213 ::08.192433 net.cpp:] Setting up relu2_1

I1213 ::08.192934 net.cpp:] Top shape: ()

I1213 ::08.192934 net.cpp:] Memory required for data:

I1213 ::08.193434 layer_factory.hpp:] Creating layer conv2_2

I1213 ::08.193434 net.cpp:] Creating Layer conv2_2

I1213 ::08.194434 net.cpp:] conv2_2 <- conv2_1

I1213 ::08.194434 net.cpp:] conv2_2 -> conv2_2

I1213 ::08.197937 net.cpp:] Setting up conv2_2

I1213 ::08.197937 net.cpp:] Top shape: ()

I1213 ::08.198437 net.cpp:] Memory required for data:

I1213 ::08.198437 layer_factory.hpp:] Creating layer relu2_2

I1213 ::08.198437 net.cpp:] Creating Layer relu2_2

I1213 ::08.199439 net.cpp:] relu2_2 <- conv2_2

I1213 ::08.199439 net.cpp:] relu2_2 -> conv2_2 (in-place)

I1213 ::08.199939 net.cpp:] Setting up relu2_2

I1213 ::08.200939 net.cpp:] Top shape: ()

I1213 ::08.200939 net.cpp:] Memory required for data:

I1213 ::08.200939 layer_factory.hpp:] Creating layer pool2

I1213 ::08.200939 net.cpp:] Creating Layer pool2

I1213 ::08.202940 net.cpp:] pool2 <- conv2_2

I1213 ::08.203441 net.cpp:] pool2 -> pool2

I1213 ::08.203441 net.cpp:] Setting up pool2

I1213 ::08.203441 net.cpp:] Top shape: ()

I1213 ::08.203441 net.cpp:] Memory required for data:

I1213 ::08.203941 layer_factory.hpp:] Creating layer conv3_1

I1213 ::08.203941 net.cpp:] Creating Layer conv3_1

I1213 ::08.203941 net.cpp:] conv3_1 <- pool2

I1213 ::08.207443 net.cpp:] conv3_1 -> conv3_1

I1213 ::08.214949 net.cpp:] Setting up conv3_1

I1213 ::08.214949 net.cpp:] Top shape: ()

I1213 ::08.215450 net.cpp:] Memory required for data:

I1213 ::08.215450 layer_factory.hpp:] Creating layer relu3_1

I1213 ::08.215450 net.cpp:] Creating Layer relu3_1

I1213 ::08.216450 net.cpp:] relu3_1 <- conv3_1

I1213 ::08.216450 net.cpp:] relu3_1 -> conv3_1 (in-place)

I1213 ::08.217952 net.cpp:] Setting up relu3_1

I1213 ::08.219452 net.cpp:] Top shape: ()

I1213 ::08.219452 net.cpp:] Memory required for data:

I1213 ::08.219452 layer_factory.hpp:] Creating layer conv3_2

I1213 ::08.220453 net.cpp:] Creating Layer conv3_2

I1213 ::08.222455 net.cpp:] conv3_2 <- conv3_1

I1213 ::08.222455 net.cpp:] conv3_2 -> conv3_2

I1213 ::08.227458 net.cpp:] Setting up conv3_2

I1213 ::08.227458 net.cpp:] Top shape: ()

I1213 ::08.228458 net.cpp:] Memory required for data:

I1213 ::08.228458 layer_factory.hpp:] Creating layer relu3_2

I1213 ::08.228458 net.cpp:] Creating Layer relu3_2

I1213 ::08.228958 net.cpp:] relu3_2 <- conv3_2

I1213 ::08.229960 net.cpp:] relu3_2 -> conv3_2 (in-place)

I1213 ::08.230460 net.cpp:] Setting up relu3_2

I1213 ::08.230959 net.cpp:] Top shape: ()

I1213 ::08.230959 net.cpp:] Memory required for data:

I1213 ::08.231461 layer_factory.hpp:] Creating layer conv3_3

I1213 ::08.231961 net.cpp:] Creating Layer conv3_3

I1213 ::08.231961 net.cpp:] conv3_3 <- conv3_2

I1213 ::08.231961 net.cpp:] conv3_3 -> conv3_3

I1213 ::08.240967 net.cpp:] Setting up conv3_3

I1213 ::08.240967 net.cpp:] Top shape: ()

I1213 ::08.241467 net.cpp:] Memory required for data:

I1213 ::08.241467 layer_factory.hpp:] Creating layer relu3_3

I1213 ::08.242468 net.cpp:] Creating Layer relu3_3

I1213 ::08.242468 net.cpp:] relu3_3 <- conv3_3

I1213 ::08.242468 net.cpp:] relu3_3 -> conv3_3 (in-place)

I1213 ::08.243969 net.cpp:] Setting up relu3_3

I1213 ::08.244971 net.cpp:] Top shape: ()

I1213 ::08.244971 net.cpp:] Memory required for data:

I1213 ::08.244971 layer_factory.hpp:] Creating layer pool3

I1213 ::08.245970 net.cpp:] Creating Layer pool3

I1213 ::08.245970 net.cpp:] pool3 <- conv3_3

I1213 ::08.245970 net.cpp:] pool3 -> pool3

I1213 ::08.245970 net.cpp:] Setting up pool3

I1213 ::08.246471 net.cpp:] Top shape: ()

I1213 ::08.246471 net.cpp:] Memory required for data:

I1213 ::08.246471 layer_factory.hpp:] Creating layer pool3_pool3_0_split

I1213 ::08.246471 net.cpp:] Creating Layer pool3_pool3_0_split

I1213 ::08.246971 net.cpp:] pool3_pool3_0_split <- pool3

I1213 ::08.247473 net.cpp:] pool3_pool3_0_split -> pool3_pool3_0_split_0

I1213 ::08.247473 net.cpp:] pool3_pool3_0_split -> pool3_pool3_0_split_1

I1213 ::08.247473 net.cpp:] Setting up pool3_pool3_0_split

I1213 ::08.247473 net.cpp:] Top shape: ()

I1213 ::08.247473 net.cpp:] Top shape: ()

I1213 ::08.247473 net.cpp:] Memory required for data:

I1213 ::08.249974 layer_factory.hpp:] Creating layer conv4_1

I1213 ::08.249974 net.cpp:] Creating Layer conv4_1

I1213 ::08.249974 net.cpp:] conv4_1 <- pool3_pool3_0_split_0

I1213 ::08.249974 net.cpp:] conv4_1 -> conv4_1

I1213 ::08.260982 net.cpp:] Setting up conv4_1

I1213 ::08.261482 net.cpp:] Top shape: ()

I1213 ::08.262984 net.cpp:] Memory required for data:

I1213 ::08.262984 layer_factory.hpp:] Creating layer relu4_1

I1213 ::08.266486 net.cpp:] Creating Layer relu4_1

I1213 ::08.266985 net.cpp:] relu4_1 <- conv4_1

I1213 ::08.266985 net.cpp:] relu4_1 -> conv4_1 (in-place)

I1213 ::08.269989 net.cpp:] Setting up relu4_1

I1213 ::08.269989 net.cpp:] Top shape: ()

I1213 ::08.270488 net.cpp:] Memory required for data:

I1213 ::08.270488 layer_factory.hpp:] Creating layer conv4_2

I1213 ::08.270488 net.cpp:] Creating Layer conv4_2

I1213 ::08.272990 net.cpp:] conv4_2 <- conv4_1

I1213 ::08.275492 net.cpp:] conv4_2 -> conv4_2

I1213 ::08.287000 net.cpp:] Setting up conv4_2

I1213 ::08.287000 net.cpp:] Top shape: ()

I1213 ::08.287500 net.cpp:] Memory required for data:

I1213 ::08.287500 layer_factory.hpp:] Creating layer relu4_2

I1213 ::08.287500 net.cpp:] Creating Layer relu4_2

I1213 ::08.288501 net.cpp:] relu4_2 <- conv4_2

I1213 ::08.288501 net.cpp:] relu4_2 -> conv4_2 (in-place)

I1213 ::08.289504 net.cpp:] Setting up relu4_2

I1213 ::08.290503 net.cpp:] Top shape: ()

I1213 ::08.290503 net.cpp:] Memory required for data:

I1213 ::08.290503 layer_factory.hpp:] Creating layer conv4_3

I1213 ::08.290503 net.cpp:] Creating Layer conv4_3

I1213 ::08.291503 net.cpp:] conv4_3 <- conv4_2

I1213 ::08.291503 net.cpp:] conv4_3 -> conv4_3

I1213 ::08.301012 net.cpp:] Setting up conv4_3

I1213 ::08.301512 net.cpp:] Top shape: ()

I1213 ::08.303011 net.cpp:] Memory required for data:

I1213 ::08.303011 layer_factory.hpp:] Creating layer relu4_3

I1213 ::08.306015 net.cpp:] Creating Layer relu4_3

I1213 ::08.307015 net.cpp:] relu4_3 <- conv4_3

I1213 ::08.307515 net.cpp:] relu4_3 -> conv4_3 (in-place)

I1213 ::08.309517 net.cpp:] Setting up relu4_3

I1213 ::08.312518 net.cpp:] Top shape: ()

I1213 ::08.312518 net.cpp:] Memory required for data:

I1213 ::08.313519 layer_factory.hpp:] Creating layer pool4

I1213 ::08.313519 net.cpp:] Creating Layer pool4

I1213 ::08.313519 net.cpp:] pool4 <- conv4_3

I1213 ::08.313519 net.cpp:] pool4 -> pool4

I1213 ::08.314019 net.cpp:] Setting up pool4

I1213 ::08.314019 net.cpp:] Top shape: ()

I1213 ::08.314019 net.cpp:] Memory required for data:

I1213 ::08.314019 layer_factory.hpp:] Creating layer pool4_pool4_0_split

I1213 ::08.314019 net.cpp:] Creating Layer pool4_pool4_0_split

I1213 ::08.315521 net.cpp:] pool4_pool4_0_split <- pool4

I1213 ::08.315521 net.cpp:] pool4_pool4_0_split -> pool4_pool4_0_split_0

I1213 ::08.315521 net.cpp:] pool4_pool4_0_split -> pool4_pool4_0_split_1

I1213 ::08.316522 net.cpp:] Setting up pool4_pool4_0_split

I1213 ::08.316522 net.cpp:] Top shape: ()

I1213 ::08.317023 net.cpp:] Top shape: ()

I1213 ::08.317023 net.cpp:] Memory required for data:

I1213 ::08.317023 layer_factory.hpp:] Creating layer conv5_1

I1213 ::08.317523 net.cpp:] Creating Layer conv5_1

I1213 ::08.318022 net.cpp:] conv5_1 <- pool4_pool4_0_split_0

I1213 ::08.318522 net.cpp:] conv5_1 -> conv5_1

I1213 ::08.326529 net.cpp:] Setting up conv5_1

I1213 ::08.327530 net.cpp:] Top shape: ()

I1213 ::08.327530 net.cpp:] Memory required for data:

I1213 ::08.327530 layer_factory.hpp:] Creating layer relu5_1

I1213 ::08.327530 net.cpp:] Creating Layer relu5_1

I1213 ::08.327530 net.cpp:] relu5_1 <- conv5_1

I1213 ::08.328531 net.cpp:] relu5_1 -> conv5_1 (in-place)

I1213 ::08.329530 net.cpp:] Setting up relu5_1

I1213 ::08.330030 net.cpp:] Top shape: ()

I1213 ::08.330030 net.cpp:] Memory required for data:

I1213 ::08.330030 layer_factory.hpp:] Creating layer conv5_2

I1213 ::08.330030 net.cpp:] Creating Layer conv5_2

I1213 ::08.331032 net.cpp:] conv5_2 <- conv5_1

I1213 ::08.331032 net.cpp:] conv5_2 -> conv5_2

I1213 ::08.339037 net.cpp:] Setting up conv5_2

I1213 ::08.339539 net.cpp:] Top shape: ()

I1213 ::08.339539 net.cpp:] Memory required for data:

I1213 ::08.339539 layer_factory.hpp:] Creating layer relu5_2

I1213 ::08.340538 net.cpp:] Creating Layer relu5_2

I1213 ::08.340538 net.cpp:] relu5_2 <- conv5_2

I1213 ::08.340538 net.cpp:] relu5_2 -> conv5_2 (in-place)

I1213 ::08.341539 net.cpp:] Setting up relu5_2

I1213 ::08.342039 net.cpp:] Top shape: ()

I1213 ::08.342039 net.cpp:] Memory required for data:

I1213 ::08.342039 layer_factory.hpp:] Creating layer conv5_3

I1213 ::08.342039 net.cpp:] Creating Layer conv5_3

I1213 ::08.342039 net.cpp:] conv5_3 <- conv5_2

I1213 ::08.342540 net.cpp:] conv5_3 -> conv5_3

I1213 ::08.348544 net.cpp:] Setting up conv5_3

I1213 ::08.348544 net.cpp:] Top shape: ()

I1213 ::08.349545 net.cpp:] Memory required for data:

I1213 ::08.349545 layer_factory.hpp:] Creating layer relu5_3

I1213 ::08.349545 net.cpp:] Creating Layer relu5_3

I1213 ::08.350545 net.cpp:] relu5_3 <- conv5_3

I1213 ::08.350545 net.cpp:] relu5_3 -> conv5_3 (in-place)

I1213 ::08.352046 net.cpp:] Setting up relu5_3

I1213 ::08.352547 net.cpp:] Top shape: ()

I1213 ::08.352547 net.cpp:] Memory required for data:

I1213 ::08.352547 layer_factory.hpp:] Creating layer pool5

I1213 ::08.352547 net.cpp:] Creating Layer pool5

I1213 ::08.353049 net.cpp:] pool5 <- conv5_3

I1213 ::08.353049 net.cpp:] pool5 -> pool5

I1213 ::08.353049 net.cpp:] Setting up pool5

I1213 ::08.353548 net.cpp:] Top shape: ()

I1213 ::08.353548 net.cpp:] Memory required for data:

I1213 ::08.354048 layer_factory.hpp:] Creating layer fc6

I1213 ::08.354048 net.cpp:] Creating Layer fc6

I1213 ::08.354048 net.cpp:] fc6 <- pool5

I1213 ::08.354048 net.cpp:] fc6 -> fc6

I1213 ::08.565698 net.cpp:] Setting up fc6

I1213 ::08.566198 net.cpp:] Top shape: ()

I1213 ::08.566699 net.cpp:] Memory required for data:

I1213 ::08.567199 layer_factory.hpp:] Creating layer relu6

I1213 ::08.567700 net.cpp:] Creating Layer relu6

I1213 ::08.567700 net.cpp:] relu6 <- fc6

I1213 ::08.568200 net.cpp:] relu6 -> fc6 (in-place)

I1213 ::08.568701 net.cpp:] Setting up relu6

I1213 ::08.569201 net.cpp:] Top shape: ()

I1213 ::08.569701 net.cpp:] Memory required for data:

I1213 ::08.569701 layer_factory.hpp:] Creating layer drop6

I1213 ::08.569701 net.cpp:] Creating Layer drop6

I1213 ::08.570201 net.cpp:] drop6 <- fc6

I1213 ::08.570703 net.cpp:] drop6 -> fc6 (in-place)

I1213 ::08.571703 net.cpp:] Setting up drop6

I1213 ::08.572703 net.cpp:] Top shape: ()

I1213 ::08.573204 net.cpp:] Memory required for data:

I1213 ::08.573204 layer_factory.hpp:] Creating layer fc7

I1213 ::08.573204 net.cpp:] Creating Layer fc7

I1213 ::08.573704 net.cpp:] fc7 <- fc6

I1213 ::08.574204 net.cpp:] fc7 -> fc7

I1213 ::08.610230 net.cpp:] Setting up fc7

I1213 ::08.610730 net.cpp:] Top shape: ()

I1213 ::08.611232 net.cpp:] Memory required for data:

I1213 ::08.611732 layer_factory.hpp:] Creating layer relu7

I1213 ::08.612232 net.cpp:] Creating Layer relu7

I1213 ::08.612232 net.cpp:] relu7 <- fc7

I1213 ::08.612232 net.cpp:] relu7 -> fc7 (in-place)

I1213 ::08.612732 net.cpp:] Setting up relu7

I1213 ::08.613232 net.cpp:] Top shape: ()

I1213 ::08.613232 net.cpp:] Memory required for data:

I1213 ::08.613232 layer_factory.hpp:] Creating layer drop7

I1213 ::08.613232 net.cpp:] Creating Layer drop7

I1213 ::08.613732 net.cpp:] drop7 <- fc7

I1213 ::08.614733 net.cpp:] drop7 -> fc7 (in-place)

I1213 ::08.615234 net.cpp:] Setting up drop7

I1213 ::08.615734 net.cpp:] Top shape: ()

I1213 ::08.616235 net.cpp:] Memory required for data:

I1213 ::08.616235 layer_factory.hpp:] Creating layer score_fr

I1213 ::08.616235 net.cpp:] Creating Layer score_fr

I1213 ::08.616235 net.cpp:] score_fr <- fc7

I1213 ::08.617235 net.cpp:] score_fr -> score_fr

I1213 ::08.619237 net.cpp:] Setting up score_fr

I1213 ::08.619237 net.cpp:] Top shape: ()

I1213 ::08.619237 net.cpp:] Memory required for data:

I1213 ::08.619237 layer_factory.hpp:] Creating layer upscore2

I1213 ::08.620237 net.cpp:] Creating Layer upscore2

I1213 ::08.620237 net.cpp:] upscore2 <- score_fr

I1213 ::08.620237 net.cpp:] upscore2 -> upscore2

I1213 ::08.621739 net.cpp:] Setting up upscore2

I1213 ::08.622740 net.cpp:] Top shape: ()

I1213 ::08.622740 net.cpp:] Memory required for data:

I1213 ::08.622740 layer_factory.hpp:] Creating layer upscore2_upscore2_0_split

I1213 ::08.622740 net.cpp:] Creating Layer upscore2_upscore2_0_split

I1213 ::08.623240 net.cpp:] upscore2_upscore2_0_split <- upscore2

I1213 ::08.623740 net.cpp:] upscore2_upscore2_0_split -> upscore2_upscore2_0_split_0

I1213 ::08.623740 net.cpp:] upscore2_upscore2_0_split -> upscore2_upscore2_0_split_1

I1213 ::08.623740 net.cpp:] Setting up upscore2_upscore2_0_split

I1213 ::08.627243 net.cpp:] Top shape: ()

I1213 ::08.628244 net.cpp:] Top shape: ()

I1213 ::08.628744 net.cpp:] Memory required for data:

I1213 ::08.628744 layer_factory.hpp:] Creating layer score_pool4

I1213 ::08.630245 net.cpp:] Creating Layer score_pool4

I1213 ::08.631748 net.cpp:] score_pool4 <- pool4_pool4_0_split_1

I1213 ::08.631748 net.cpp:] score_pool4 -> score_pool4

I1213 ::08.634748 net.cpp:] Setting up score_pool4

I1213 ::08.634748 net.cpp:] Top shape: ()

I1213 ::08.636250 net.cpp:] Memory required for data:

I1213 ::08.636250 layer_factory.hpp:] Creating layer score_pool4c

I1213 ::08.636250 net.cpp:] Creating Layer score_pool4c

I1213 ::08.636250 net.cpp:] score_pool4c <- score_pool4

I1213 ::08.637750 net.cpp:] score_pool4c <- upscore2_upscore2_0_split_0

I1213 ::08.637750 net.cpp:] score_pool4c -> score_pool4c

I1213 ::08.637750 net.cpp:] Setting up score_pool4c

I1213 ::08.638751 net.cpp:] Top shape: ()

I1213 ::08.638751 net.cpp:] Memory required for data:

I1213 ::08.639251 layer_factory.hpp:] Creating layer fuse_pool4

I1213 ::08.639251 net.cpp:] Creating Layer fuse_pool4

I1213 ::08.640751 net.cpp:] fuse_pool4 <- upscore2_upscore2_0_split_1

I1213 ::08.645756 net.cpp:] fuse_pool4 <- score_pool4c

I1213 ::08.645756 net.cpp:] fuse_pool4 -> fuse_pool4

I1213 ::08.646756 net.cpp:] Setting up fuse_pool4

I1213 ::08.646756 net.cpp:] Top shape: ()

I1213 ::08.646756 net.cpp:] Memory required for data:

I1213 ::08.646756 layer_factory.hpp:] Creating layer upscore_pool4

I1213 ::08.647258 net.cpp:] Creating Layer upscore_pool4

I1213 ::08.647258 net.cpp:] upscore_pool4 <- fuse_pool4

I1213 ::08.647758 net.cpp:] upscore_pool4 -> upscore_pool4

I1213 ::08.649258 net.cpp:] Setting up upscore_pool4

I1213 ::08.649760 net.cpp:] Top shape: ()

I1213 ::08.650259 net.cpp:] Memory required for data:

I1213 ::08.650259 layer_factory.hpp:] Creating layer upscore_pool4_upscore_pool4_0_split

I1213 ::08.650259 net.cpp:] Creating Layer upscore_pool4_upscore_pool4_0_split

I1213 ::08.651260 net.cpp:] upscore_pool4_upscore_pool4_0_split <- upscore_pool4

I1213 ::08.651260 net.cpp:] upscore_pool4_upscore_pool4_0_split -> upscore_pool4_upscore_pool4_0_split_0

I1213 ::08.651260 net.cpp:] upscore_pool4_upscore_pool4_0_split -> upscore_pool4_upscore_pool4_0_split_1

I1213 ::08.652261 net.cpp:] Setting up upscore_pool4_upscore_pool4_0_split

I1213 ::08.652261 net.cpp:] Top shape: ()

I1213 ::08.652261 net.cpp:] Top shape: ()

I1213 ::08.652261 net.cpp:] Memory required for data:

I1213 ::08.652261 layer_factory.hpp:] Creating layer score_pool3

I1213 ::08.653261 net.cpp:] Creating Layer score_pool3

I1213 ::08.656764 net.cpp:] score_pool3 <- pool3_pool3_0_split_1

I1213 ::08.656764 net.cpp:] score_pool3 -> score_pool3

I1213 ::08.659765 net.cpp:] Setting up score_pool3

I1213 ::08.660266 net.cpp:] Top shape: ()

I1213 ::08.666271 net.cpp:] Memory required for data:

I1213 ::08.666271 layer_factory.hpp:] Creating layer score_pool3c

I1213 ::08.666271 net.cpp:] Creating Layer score_pool3c

I1213 ::08.666771 net.cpp:] score_pool3c <- score_pool3

I1213 ::08.667271 net.cpp:] score_pool3c <- upscore_pool4_upscore_pool4_0_split_0

I1213 ::08.667271 net.cpp:] score_pool3c -> score_pool3c

I1213 ::08.667271 net.cpp:] Setting up score_pool3c

I1213 ::08.667271 net.cpp:] Top shape: ()

I1213 ::08.667271 net.cpp:] Memory required for data:

I1213 ::08.667271 layer_factory.hpp:] Creating layer fuse_pool3

I1213 ::08.667271 net.cpp:] Creating Layer fuse_pool3

I1213 ::08.668272 net.cpp:] fuse_pool3 <- upscore_pool4_upscore_pool4_0_split_1

I1213 ::08.668772 net.cpp:] fuse_pool3 <- score_pool3c

I1213 ::08.669273 net.cpp:] fuse_pool3 -> fuse_pool3

I1213 ::08.669273 net.cpp:] Setting up fuse_pool3

I1213 ::08.670274 net.cpp:] Top shape: ()

I1213 ::08.670274 net.cpp:] Memory required for data:

I1213 ::08.670274 layer_factory.hpp:] Creating layer upscore8

I1213 ::08.670274 net.cpp:] Creating Layer upscore8

I1213 ::08.670274 net.cpp:] upscore8 <- fuse_pool3

I1213 ::08.670274 net.cpp:] upscore8 -> upscore8

I1213 ::08.671274 net.cpp:] Setting up upscore8

I1213 ::08.671775 net.cpp:] Top shape: ()

I1213 ::08.671775 net.cpp:] Memory required for data:

I1213 ::08.671775 layer_factory.hpp:] Creating layer score

I1213 ::08.671775 net.cpp:] Creating Layer score

I1213 ::08.671775 net.cpp:] score <- upscore8

I1213 ::08.672274 net.cpp:] score <- data_data_0_split_1

I1213 ::08.673275 net.cpp:] score -> score

I1213 ::08.673275 net.cpp:] Setting up score

I1213 ::08.674276 net.cpp:] Top shape: ()

I1213 ::08.674276 net.cpp:] Memory required for data:

I1213 ::08.674276 layer_factory.hpp:] Creating layer loss

I1213 ::08.674276 net.cpp:] Creating Layer loss

I1213 ::08.675277 net.cpp:] loss <- score

I1213 ::08.675277 net.cpp:] loss <- label

I1213 ::08.675277 net.cpp:] loss -> loss

I1213 ::08.675277 layer_factory.hpp:] Creating layer loss

I1213 ::08.678781 net.cpp:] Setting up loss

I1213 ::08.679280 net.cpp:] Top shape: ()

I1213 ::08.679780 net.cpp:] with loss weight

I1213 ::08.680280 net.cpp:] Memory required for data:

I1213 ::08.680280 net.cpp:] loss needs backward computation.

I1213 ::08.680280 net.cpp:] score needs backward computation.

I1213 ::08.680280 net.cpp:] upscore8 needs backward computation.

I1213 ::08.680280 net.cpp:] fuse_pool3 needs backward computation.

I1213 ::08.680280 net.cpp:] score_pool3c needs backward computation.

I1213 ::08.680280 net.cpp:] score_pool3 needs backward computation.

I1213 ::08.682281 net.cpp:] upscore_pool4_upscore_pool4_0_split needs backward computation.

I1213 ::08.682782 net.cpp:] upscore_pool4 needs backward computation.

I1213 ::08.682782 net.cpp:] fuse_pool4 needs backward computation.

I1213 ::08.682782 net.cpp:] score_pool4c needs backward computation.

I1213 ::08.683282 net.cpp:] score_pool4 needs backward computation.

I1213 ::08.683282 net.cpp:] upscore2_upscore2_0_split needs backward computation.

I1213 ::08.683282 net.cpp:] upscore2 needs backward computation.

I1213 ::08.683282 net.cpp:] score_fr needs backward computation.

I1213 ::08.683282 net.cpp:] drop7 needs backward computation.

I1213 ::08.683784 net.cpp:] relu7 needs backward computation.

I1213 ::08.683784 net.cpp:] fc7 needs backward computation.

I1213 ::08.683784 net.cpp:] drop6 needs backward computation.

I1213 ::08.683784 net.cpp:] relu6 needs backward computation.

I1213 ::08.684284 net.cpp:] fc6 needs backward computation.

I1213 ::08.684284 net.cpp:] pool5 needs backward computation.

I1213 ::08.684284 net.cpp:] relu5_3 needs backward computation.

I1213 ::08.684783 net.cpp:] conv5_3 needs backward computation.

I1213 ::08.685284 net.cpp:] relu5_2 needs backward computation.

I1213 ::08.685784 net.cpp:] conv5_2 needs backward computation.

I1213 ::08.686285 net.cpp:] relu5_1 needs backward computation.

I1213 ::08.686285 net.cpp:] conv5_1 needs backward computation.

I1213 ::08.686285 net.cpp:] pool4_pool4_0_split needs backward computation.

I1213 ::08.686285 net.cpp:] pool4 needs backward computation.

I1213 ::08.687286 net.cpp:] relu4_3 needs backward computation.

I1213 ::08.687286 net.cpp:] conv4_3 needs backward computation.

I1213 ::08.687286 net.cpp:] relu4_2 needs backward computation.

I1213 ::08.687286 net.cpp:] conv4_2 needs backward computation.

I1213 ::08.687286 net.cpp:] relu4_1 needs backward computation.

I1213 ::08.688787 net.cpp:] conv4_1 needs backward computation.

I1213 ::08.688787 net.cpp:] pool3_pool3_0_split needs backward computation.

I1213 ::08.688787 net.cpp:] pool3 needs backward computation.

I1213 ::08.689286 net.cpp:] relu3_3 needs backward computation.

I1213 ::08.690287 net.cpp:] conv3_3 needs backward computation.

I1213 ::08.690287 net.cpp:] relu3_2 needs backward computation.

I1213 ::08.690287 net.cpp:] conv3_2 needs backward computation.

I1213 ::08.690287 net.cpp:] relu3_1 needs backward computation.

I1213 ::08.691288 net.cpp:] conv3_1 needs backward computation.

I1213 ::08.691288 net.cpp:] pool2 needs backward computation.

I1213 ::08.691288 net.cpp:] relu2_2 needs backward computation.

I1213 ::08.691288 net.cpp:] conv2_2 needs backward computation.

I1213 ::08.691788 net.cpp:] relu2_1 needs backward computation.

I1213 ::08.692291 net.cpp:] conv2_1 needs backward computation.

I1213 ::08.692790 net.cpp:] pool1 needs backward computation.

I1213 ::08.692790 net.cpp:] relu1_2 needs backward computation.

I1213 ::08.692790 net.cpp:] conv1_2 needs backward computation.

I1213 ::08.693289 net.cpp:] relu1_1 needs backward computation.

I1213 ::08.693289 net.cpp:] conv1_1 needs backward computation.

I1213 ::08.693289 net.cpp:] label does not need backward computation.

I1213 ::08.693789 net.cpp:] data_data_0_split does not need backward computation.

I1213 ::08.694290 net.cpp:] data does not need backward computation.

I1213 ::08.694290 net.cpp:] This network produces output loss

I1213 ::08.694290 net.cpp:] Network initialization done.
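The backward-computation lines above are a quick way to confirm that gradients reach every layer down to conv1_1, and that only the input layers are skipped. As a minimal sketch (the function name `split_by_backward` and the sample text are illustrative assumptions, not part of Caffe), such a log could be summarized like this:

```python
import re

# Matches "<layer> needs backward computation." and
# "<layer> does not need backward computation." after the "file:line]" prefix.
# It works on the number-stripped lines shown here as well as on full glog lines.
BACKWARD_RE = re.compile(r"\]\s+(\S+) (needs|does not need) backward computation\.")


def split_by_backward(log_text):
    """Return (layers_needing_backward, layers_skipped) in log order."""
    needs_backward, skipped = [], []
    for line in log_text.splitlines():
        match = BACKWARD_RE.search(line)
        if match is None:
            continue  # not a backward-computation line
        target = needs_backward if match.group(2) == "needs" else skipped
        target.append(match.group(1))
    return needs_backward, skipped


# A few lines copied from the log above, used as sample input.
sample_log = """\
I1213 ::08.680280 net.cpp:] loss needs backward computation.
I1213 ::08.693289 net.cpp:] conv1_1 needs backward computation.
I1213 ::08.693289 net.cpp:] label does not need backward computation.
I1213 ::08.694290 net.cpp:] data does not need backward computation.
I1213 ::08.694290 net.cpp:] Network initialization done.
"""

needs_backward, skipped = split_by_backward(sample_log)
print("needs backward:", needs_backward)
print("skipped:", skipped)
```

If a layer that should be fine-tuned shows up in the skipped list, the likely places to check are its `lr_mult` settings and whether it is actually connected to the loss.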

I1213 ::08.695791 solver.cpp:] Creating test net (#) specified by net file: train_val.prototxt

I1213 ::08.695791 net.cpp:] The NetState phase () differed from the phase () specified by a rule in layer data

I1213 ::08.698796 net.cpp:] The NetState phase () differed from the phase () specified by a rule in layer label

I1213 ::08.699795 net.cpp:] Initializing net from parameters:

state {

phase: TEST

}

layer {

name: "data"

type: "Data"

top: "data"

include {

phase: TEST

}

transform_param {

scale: 0.00390625

mean_file: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Img_val_mean.binaryproto"

}

data_param {

source: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Img_val"

batch_size:

backend: LMDB

}

}

layer {

name: "label"

type: "Data"

top: "label"

include {

phase: TEST

}

transform_param {

scale: 0.00390625

mean_file: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Label_val_mean.binaryproto"

}

data_param {

source: "G:/interest_of_imags_for_recognation/VOC2007/Resize224/Label_val"

batch_size:

backend: LMDB

}

}

layer {

name: "conv1_1"

type: "Convolution"

bottom: "data"

top: "conv1_1"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu1_1"

type: "ReLU"

bottom: "conv1_1"

top: "conv1_1"

}

layer {

name: "conv1_2"

type: "Convolution"

bottom: "conv1_1"

top: "conv1_2"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu1_2"

type: "ReLU"

bottom: "conv1_2"

top: "conv1_2"

}

layer {

name: "pool1"

type: "Pooling"

bottom: "conv1_2"

top: "pool1"

pooling_param {

pool: MAX

kernel_size:

stride:

}

}

layer {

name: "conv2_1"

type: "Convolution"

bottom: "pool1"

top: "conv2_1"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu2_1"

type: "ReLU"

bottom: "conv2_1"

top: "conv2_1"

}

layer {

name: "conv2_2"

type: "Convolution"

bottom: "conv2_1"

top: "conv2_2"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu2_2"

type: "ReLU"

bottom: "conv2_2"

top: "conv2_2"

}

layer {

name: "pool2"

type: "Pooling"

bottom: "conv2_2"

top: "pool2"

pooling_param {

pool: MAX

kernel_size:

stride:

}

}

layer {

name: "conv3_1"

type: "Convolution"

bottom: "pool2"

top: "conv3_1"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu3_1"

type: "ReLU"

bottom: "conv3_1"

top: "conv3_1"

}

layer {

name: "conv3_2"

type: "Convolution"

bottom: "conv3_1"

top: "conv3_2"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu3_2"

type: "ReLU"

bottom: "conv3_2"

top: "conv3_2"

}

layer {

name: "conv3_3"

type: "Convolution"

bottom: "conv3_2"

top: "conv3_3"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu3_3"

type: "ReLU"

bottom: "conv3_3"

top: "conv3_3"

}

layer {

name: "pool3"

type: "Pooling"

bottom: "conv3_3"

top: "pool3"

pooling_param {

pool: MAX

kernel_size:

stride:

}

}

layer {

name: "conv4_1"

type: "Convolution"

bottom: "pool3"

top: "conv4_1"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu4_1"

type: "ReLU"

bottom: "conv4_1"

top: "conv4_1"

}

layer {

name: "conv4_2"

type: "Convolution"

bottom: "conv4_1"

top: "conv4_2"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu4_2"

type: "ReLU"

bottom: "conv4_2"

top: "conv4_2"

}

layer {

name: "conv4_3"

type: "Convolution"

bottom: "conv4_2"

top: "conv4_3"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu4_3"

type: "ReLU"

bottom: "conv4_3"

top: "conv4_3"

}

layer {

name: "pool4"

type: "Pooling"

bottom: "conv4_3"

top: "pool4"

pooling_param {

pool: MAX

kernel_size:

stride:

}

}

layer {

name: "conv5_1"

type: "Convolution"

bottom: "pool4"

top: "conv5_1"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu5_1"

type: "ReLU"

bottom: "conv5_1"

top: "conv5_1"

}

layer {

name: "conv5_2"

type: "Convolution"

bottom: "conv5_1"

top: "conv5_2"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu5_2"

type: "ReLU"

bottom: "conv5_2"

top: "conv5_2"

}

layer {

name: "conv5_3"

type: "Convolution"

bottom: "conv5_2"

top: "conv5_3"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu5_3"

type: "ReLU"

bottom: "conv5_3"

top: "conv5_3"

}

layer {

name: "pool5"

type: "Pooling"

bottom: "conv5_3"

top: "pool5"

pooling_param {

pool: MAX

kernel_size:

stride:

}

}

layer {

name: "fc6"

type: "Convolution"

bottom: "pool5"

top: "fc6"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu6"

type: "ReLU"

bottom: "fc6"

top: "fc6"

}

layer {

name: "drop6"

type: "Dropout"

bottom: "fc6"

top: "fc6"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "fc7"

type: "Convolution"

bottom: "fc6"

top: "fc7"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

stride:

}

}

layer {

name: "relu7"

type: "ReLU"

bottom: "fc7"

top: "fc7"

}

layer {

name: "drop7"

type: "Dropout"

bottom: "fc7"

top: "fc7"

dropout_param {

dropout_ratio: 0.5

}

}

layer {

name: "score_fr"

type: "Convolution"

bottom: "fc7"

top: "score_fr"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

}

}

layer {

name: "upscore2"

type: "Deconvolution"

bottom: "score_fr"

top: "upscore2"

param {

lr_mult:

}

convolution_param {

num_output:

bias_term: false

kernel_size:

stride:

}

}

layer {

name: "score_pool4"

type: "Convolution"

bottom: "pool4"

top: "score_pool4"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

}

}

layer {

name: "score_pool4c"

type: "Crop"

bottom: "score_pool4"

bottom: "upscore2"

top: "score_pool4c"

crop_param {

axis:

offset:

}

}

layer {

name: "fuse_pool4"

type: "Eltwise"

bottom: "upscore2"

bottom: "score_pool4c"

top: "fuse_pool4"

eltwise_param {

operation: SUM

}

}

layer {

name: "upscore_pool4"

type: "Deconvolution"

bottom: "fuse_pool4"

top: "upscore_pool4"

param {

lr_mult:

}

convolution_param {

num_output:

bias_term: false

kernel_size:

stride:

}

}

layer {

name: "score_pool3"

type: "Convolution"

bottom: "pool3"

top: "score_pool3"

param {

lr_mult:

decay_mult:

}

param {

lr_mult:

decay_mult:

}

convolution_param {

num_output:

pad:

kernel_size:

}

}

layer {

name: "score_pool3c"

type: "Crop"

bottom: "score_pool3"

bottom: "upscore_pool4"

top: "score_pool3c"

crop_param {

axis:

offset:

}

}

layer {

name: "fuse_pool3"

type: "Eltwise"

bottom: "upscore_pool4"

bottom: "score_pool3c"

top: "fuse_pool3"

eltwise_param {

operation: SUM

}

}

layer {

name: "upscore8"

type: "Deconvolution"

bottom: "fuse_pool3"

top: "upscore8"

param {

lr_mult:

}

convolution_param {

num_output:

bias_term: false

kernel_size:

stride:

}

}

layer {

name: "score"

type: "Crop"

bottom: "upscore8"

bottom: "data"

top: "score"

crop_param {

axis:

offset:

}

}

layer {

name: "accuracy"

type: "Accuracy"

bottom: "score"

bottom: "label"

top: "accuracy"

include {

phase: TEST

}

}

layer {

name: "loss"

type: "SoftmaxWithLoss"

bottom: "score"

bottom: "label"

top: "loss"

loss_param {

ignore_label:

normalize: false

}

}
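In the definition dumped above, each skip branch is fused in two steps: a Crop layer trims the pooled score map to the size of the upsampled map (score_pool4 to score_pool4c, score_pool3 to score_pool3c), and an Eltwise layer with operation SUM adds the two. The following is a rough pure-Python sketch of that idea on a single 2-D map. The map sizes, the values, and the offset are made-up illustration values, not the ones used by this network, and the helper names `crop_like` and `eltwise_sum` are hypothetical.

```python
def crop_like(feature, ref, offset):
    """Crop a 2-D map to the height and width of `ref`, starting at `offset`.

    This mirrors the idea of Caffe's Crop layer: the first input is cut
    down to the spatial size of the second input.
    """
    height, width = len(ref), len(ref[0])
    if offset + height > len(feature) or offset + width > len(feature[0]):
        raise ValueError("crop window exceeds the feature map")
    return [row[offset:offset + width] for row in feature[offset:offset + height]]


def eltwise_sum(a, b):
    """Element-wise sum of two 2-D maps of identical shape (Eltwise SUM)."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("shapes differ; crop one input first")
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


# Hypothetical inputs: a 4x4 pooled score map and a 2x2 upsampled score map.
score_pool4 = [[r * 4 + c for c in range(4)] for r in range(4)]
upscore2 = [[10, 10], [10, 10]]

score_pool4c = crop_like(score_pool4, upscore2, offset=1)  # assumed offset
fuse_pool4 = eltwise_sum(upscore2, score_pool4c)

print(score_pool4c)
print(fuse_pool4)
```

The shape check in `eltwise_sum` reflects why the Crop layer is needed at all: an element-wise sum only works when both inputs have exactly the same dimensions.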

I1213 ::08.702296 layer_factory.hpp:] Creating layer data

I1213 ::08.703297 net.cpp:] Creating Layer data

I1213 ::08.704798 net.cpp:] data -> data

I1213 ::08.705298 common.cpp:] System entropy source not available, using fallback algorithm to generate seed instead.

I1213 ::08.706300 data_transformer.cpp:] Loading mean file from: G:/interest_of_imags_for_recognation/VOC2007/Resize224/Img_val_mean.binaryproto

I1213 ::08.707300 db_lmdb.cpp:] Opened lmdb G:/interest_of_imags_for_recognation/VOC2007/Resize224/Img_val

I1213 ::08.709803 data_layer.cpp:] output data size: ,,,

I1213 ::08.715806 net.cpp:] Setting up data

I1213 ::08.716306 net.cpp:] Top shape: ()

I1213 ::08.716807 net.cpp:] Memory required for data:

I1213 ::08.716807 layer_factory.hpp:] Creating layer data_data_0_split

I1213 ::08.717808 common.cpp:] System entropy source not available, using fallback algorithm to generate seed instead.

I1213 ::08.718808 net.cpp:] Creating Layer data_data_0_split

I1213 ::08.720309 net.cpp:] data_data_0_split <- data

I1213 ::08.722811 net.cpp:] data_data_0_split -> data_data_0_split_0

I1213 ::08.723311 net.cpp:] data_data_0_split -> data_data_0_split_1

I1213 ::08.723311 net.cpp:] Setting up data_data_0_split

I1213 ::08.723811 net.cpp:] Top shape: ()

I1213 ::08.724812 net.cpp:] Top shape: ()

I1213 ::08.724812 net.cpp:] Memory required for data:

I1213 ::08.724812 layer_factory.hpp:] Creating layer label

I1213 ::08.725312 net.cpp:] Creating Layer label

I1213 ::08.725813 net.cpp:] label -> label

I1213 ::08.727314 data_transformer.cpp:] Loading mean file from: G:/interest_of_imags_for_recognation/VOC2007/Resize224/Label_val_mean.binaryproto

I1213 ::08.727814 common.cpp:] System entropy source not available, using fallback algorithm to generate seed instead.

I1213 ::08.730816 db_lmdb.cpp:] Opened lmdb G:/interest_of_imags_for_recognation/VOC2007/Resize224/Label_val

I1213 ::08.731317 data_layer.cpp:] output data size: ,,,

I1213 ::08.733319 net.cpp:] Setting up label

I1213 ::08.733319 net.cpp:] Top shape: ()

I1213 ::08.734318 common.cpp:] System entropy source not available, using fallback algorithm to generate seed instead.

I1213 ::08.734819 net.cpp:] Memory required for data:

I1213 ::08.736820 layer_factory.hpp:] Creating layer label_label_0_split

I1213 ::08.737321 net.cpp:] Creating Layer label_label_0_split

I1213 ::08.738822 net.cpp:] label_label_0_split <- label

I1213 ::08.739823 net.cpp:] label_label_0_split -> label_label_0_split_0

I1213 ::08.739823 net.cpp:] label_label_0_split -> label_label_0_split_1

I1213 ::08.740324 net.cpp:] Setting up label_label_0_split

I1213 ::08.740823 net.cpp:] Top shape: ()

I1213 ::08.741324 net.cpp:] Top shape: ()

I1213 ::08.742324 net.cpp:] Memory required for data:

I1213 ::08.743825 layer_factory.hpp:] Creating layer conv1_1

I1213 ::08.744827 net.cpp:] Creating Layer conv1_1

I1213 ::08.745326 net.cpp:] conv1_1 <- data_data_0_split_0

I1213 ::08.746327 net.cpp:] conv1_1 -> conv1_1

I1213 ::08.749830 net.cpp:] Setting up conv1_1

I1213 ::08.749830 net.cpp:] Top shape: ()

I1213 ::08.750830 net.cpp:] Memory required for data:

I1213 ::08.751332 layer_factory.hpp:] Creating layer relu1_1

I1213 ::08.751832 net.cpp:] Creating Layer relu1_1

I1213 ::08.752832 net.cpp:] relu1_1 <- conv1_1

I1213 ::08.753332 net.cpp:] relu1_1 -> conv1_1 (in-place)

I1213 ::08.756836 net.cpp:] Setting up relu1_1

I1213 ::08.757336 net.cpp:] Top shape: ()

I1213 ::08.757835 net.cpp:] Memory required for data:

I1213 ::08.760339 layer_factory.hpp:] Creating layer conv1_2

I1213 ::08.761338 net.cpp:] Creating Layer conv1_2

I1213 ::08.761838 net.cpp:] conv1_2 <- conv1_1

I1213 ::08.762339 net.cpp:] conv1_2 -> conv1_2

I1213 ::08.767343 net.cpp:] Setting up conv1_2

I1213 ::08.767843 net.cpp:] Top shape: ()

I1213 ::08.768343 net.cpp:] Memory required for data:

I1213 ::08.769345 layer_factory.hpp:] Creating layer relu1_2

I1213 ::08.769845 net.cpp:] Creating Layer relu1_2

I1213 ::08.771845 net.cpp:] relu1_2 <- conv1_2

I1213 ::08.772346 net.cpp:] relu1_2 -> conv1_2 (in-place)

I1213 ::08.775348 net.cpp:] Setting up relu1_2

I1213 ::08.775849 net.cpp:] Top shape: ()

I1213 ::08.776350 net.cpp:] Memory required for data:

I1213 ::08.777349 layer_factory.hpp:] Creating layer pool1

I1213 ::08.778350 net.cpp:] Creating Layer pool1

I1213 ::08.778851 net.cpp:] pool1 <- conv1_2

I1213 ::08.779851 net.cpp:] pool1 -> pool1

I1213 ::08.780853 net.cpp:] Setting up pool1

I1213 ::08.781352 net.cpp:] Top shape: ()

I1213 ::08.782353 net.cpp:] Memory required for data:

I1213 ::08.782853 layer_factory.hpp:] Creating layer conv2_1

I1213 ::08.783854 net.cpp:] Creating Layer conv2_1

I1213 ::08.784854 net.cpp:] conv2_1 <- pool1

I1213 ::08.785356 net.cpp:] conv2_1 -> conv2_1

I1213 ::08.791860 net.cpp:] Setting up conv2_1

I1213 ::08.791860 net.cpp:] Top shape: ()

I1213 ::08.792861 net.cpp:] Memory required for data:

I1213 ::08.793861 layer_factory.hpp:] Creating layer relu2_1

I1213 ::08.794363 net.cpp:] Creating Layer relu2_1

I1213 ::08.794862 net.cpp:] relu2_1 <- conv2_1

I1213 ::08.795362 net.cpp:] relu2_1 -> conv2_1 (in-place)

I1213 ::08.796363 net.cpp:] Setting up relu2_1

I1213 ::08.796363 net.cpp:] Top shape: ()

I1213 ::08.796864 net.cpp:] Memory required for data:

I1213 ::08.797363 layer_factory.hpp:] Creating layer conv2_2

I1213 ::08.797864 net.cpp:] Creating Layer conv2_2

I1213 ::08.798364 net.cpp:] conv2_2 <- conv2_1

I1213 ::08.798864 net.cpp:] conv2_2 -> conv2_2

I1213 ::08.802367 net.cpp:] Setting up conv2_2

I1213 ::08.802367 net.cpp:] Top shape: ()

I1213 ::08.803367 net.cpp:] Memory required for data:

I1213 ::08.803869 layer_factory.hpp:] Creating layer relu2_2

I1213 ::08.804869 net.cpp:] Creating Layer relu2_2

I1213 ::08.807371 net.cpp:] relu2_2 <- conv2_2

I1213 ::08.808372 net.cpp:] relu2_2 -> conv2_2 (in-place)

I1213 ::08.809875 net.cpp:] Setting up relu2_2

I1213 ::08.810374 net.cpp:] Top shape: ()

I1213 ::08.810874 net.cpp:] Memory required for data:

I1213 ::08.811373 layer_factory.hpp:] Creating layer pool2

I1213 ::08.811874 net.cpp:] Creating Layer pool2

I1213 ::08.812374 net.cpp:] pool2 <- conv2_2

I1213 ::08.812875 net.cpp:] pool2 -> pool2

I1213 ::08.813375 net.cpp:] Setting up pool2

I1213 ::08.813875 net.cpp:] Top shape: ()

I1213 ::08.814376 net.cpp:] Memory required for data:

I1213 ::08.814877 layer_factory.hpp:] Creating layer conv3_1

I1213 ::08.815376 net.cpp:] Creating Layer conv3_1

I1213 ::08.815877 net.cpp:] conv3_1 <- pool2

I1213 ::08.816377 net.cpp:] conv3_1 -> conv3_1

I1213 ::08.819380 net.cpp:] Setting up conv3_1

I1213 ::08.819380 net.cpp:] Top shape: ()

I1213 ::08.819880 net.cpp:] Memory required for data:

I1213 ::08.822382 layer_factory.hpp:] Creating layer relu3_1

I1213 ::08.823384 net.cpp:] Creating Layer relu3_1

I1213 ::08.823884 net.cpp:] relu3_1 <- conv3_1

I1213 ::08.824383 net.cpp:] relu3_1 -> conv3_1 (in-place)

I1213 ::08.826386 net.cpp:] Setting up relu3_1

I1213 ::08.826886 net.cpp:] Top shape: ()

I1213 ::08.827386 net.cpp:] Memory required for data:

I1213 ::08.828387 layer_factory.hpp:] Creating layer conv3_2

I1213 ::08.828887 net.cpp:] Creating Layer conv3_2

I1213 ::08.829887 net.cpp:] conv3_2 <- conv3_1

I1213 ::08.830387 net.cpp:] conv3_2 -> conv3_2

I1213 ::08.838393 net.cpp:] Setting up conv3_2

I1213 ::08.838393 net.cpp:] Top shape: ()

I1213 ::08.838893 net.cpp:] Memory required for data:

I1213 ::08.840395 layer_factory.hpp:] Creating layer relu3_2

I1213 ::08.840894 net.cpp:] Creating Layer relu3_2

I1213 ::08.841395 net.cpp:] relu3_2 <- conv3_2

I1213 ::08.841895 net.cpp:] relu3_2 -> conv3_2 (in-place)

I1213 ::08.842896 net.cpp:] Setting up relu3_2

I1213 ::08.842896 net.cpp:] Top shape: ()

I1213 ::08.843397 net.cpp:] Memory required for data:

I1213 ::08.844398 layer_factory.hpp:] Creating layer conv3_3

I1213 ::08.844898 net.cpp:] Creating Layer conv3_3

I1213 ::08.845397 net.cpp:] conv3_3 <- conv3_2

I1213 ::08.845899 net.cpp:] conv3_3 -> conv3_3

I1213 ::08.850401 net.cpp:] Setting up conv3_3

I1213 ::08.850401 net.cpp:] Top shape: ()

I1213 ::08.851402 net.cpp:] Memory required for data:

I1213 ::08.851903 layer_factory.hpp:] Creating layer relu3_3

I1213 ::08.852403 net.cpp:] Creating Layer relu3_3

I1213 ::08.852903 net.cpp:] relu3_3 <- conv3_3

I1213 ::08.853404 net.cpp:] relu3_3 -> conv3_3 (in-place)

I1213 ::08.854964 net.cpp:] Setting up relu3_3

I1213 ::08.855406 net.cpp:] Top shape: ()

I1213 ::08.855906 net.cpp:] Memory required for data:

I1213 ::08.856405 layer_factory.hpp:] Creating layer pool3

I1213 ::08.856906 net.cpp:] Creating Layer pool3

I1213 ::08.857406 net.cpp:] pool3 <- conv3_3

I1213 ::08.857906 net.cpp:] pool3 -> pool3

I1213 ::08.858907 net.cpp:] Setting up pool3

I1213 ::08.858907 net.cpp:] Top shape: ()

I1213 ::08.859908 net.cpp:] Memory required for data:

I1213 ::08.860409 layer_factory.hpp:] Creating layer pool3_pool3_0_split

I1213 ::08.860909 net.cpp:] Creating Layer pool3_pool3_0_split

I1213 ::08.860909 net.cpp:] pool3_pool3_0_split <- pool3

I1213 ::08.861409 net.cpp:] pool3_pool3_0_split -> pool3_pool3_0_split_0

I1213 ::08.861910 net.cpp:] pool3_pool3_0_split -> pool3_pool3_0_split_1

I1213 ::08.862910 net.cpp:] Setting up pool3_pool3_0_split

I1213 ::08.862910 net.cpp:] Top shape: ()

I1213 ::08.863410 net.cpp:] Top shape: ()

I1213 ::08.863910 net.cpp:] Memory required for data:

I1213 ::08.864411 layer_factory.hpp:] Creating layer conv4_1

I1213 ::08.864912 net.cpp:] Creating Layer conv4_1

I1213 ::08.865412 net.cpp:] conv4_1 <- pool3_pool3_0_split_0

I1213 ::08.865913 net.cpp:] conv4_1 -> conv4_1

I1213 ::08.871917 net.cpp:] Setting up conv4_1

I1213 ::08.871917 net.cpp:] Top shape: ()

I1213 ::08.872918 net.cpp:] Memory required for data:

I1213 ::08.873417 layer_factory.hpp:] Creating layer relu4_1

I1213 ::08.874919 net.cpp:] Creating Layer relu4_1

I1213 ::08.875419 net.cpp:] relu4_1 <- conv4_1

I1213 ::08.875921 net.cpp:] relu4_1 -> conv4_1 (in-place)

I1213 ::08.877421 net.cpp:] Setting up relu4_1

I1213 ::08.877421 net.cpp:] Top shape: ()

I1213 ::08.877921 net.cpp:] Memory required for data:

I1213 ::08.878422 layer_factory.hpp:] Creating layer conv4_2

I1213 ::08.878922 net.cpp:] Creating Layer conv4_2

I1213 ::08.879422 net.cpp:] conv4_2 <- conv4_1

I1213 ::08.879923 net.cpp:] conv4_2 -> conv4_2

I1213 ::08.885927 net.cpp:] Setting up conv4_2

I1213 ::08.885927 net.cpp:] Top shape: ()

I1213 ::08.886929 net.cpp:] Memory required for data:

I1213 ::08.886929 layer_factory.hpp:] Creating layer relu4_2

I1213 ::08.886929 net.cpp:] Creating Layer relu4_2

I1213 ::08.886929 net.cpp:] relu4_2 <- conv4_2

I1213 ::08.886929 net.cpp:] relu4_2 -> conv4_2 (in-place)

I1213 ::08.887929 net.cpp:] Setting up relu4_2

I1213 ::08.888429 net.cpp:] Top shape: ()

I1213 ::08.888929 net.cpp:] Memory required for data:

I1213 ::08.890933 layer_factory.hpp:] Creating layer conv4_3

I1213 ::08.891433 net.cpp:] Creating Layer conv4_3

I1213 ::08.891433 net.cpp:] conv4_3 <- conv4_2

I1213 ::08.891433 net.cpp:] conv4_3 -> conv4_3

I1213 ::08.897935 net.cpp:] Setting up conv4_3

I1213 ::08.897935 net.cpp:] Top shape: ()

I1213 ::08.898437 net.cpp:] Memory required for data:

I1213 ::08.898936 layer_factory.hpp:] Creating layer relu4_3

I1213 ::08.899437 net.cpp:] Creating Layer relu4_3

I1213 ::08.899937 net.cpp:] relu4_3 <- conv4_3

I1213 ::08.900437 net.cpp:] relu4_3 -> conv4_3 (in-place)

I1213 ::08.901938 net.cpp:] Setting up relu4_3

I1213 ::08.902438 net.cpp:] Top shape: ()

I1213 ::08.902938 net.cpp:] Memory required for data:

I1213 ::08.903439 layer_factory.hpp:] Creating layer pool4

I1213 ::08.903939 net.cpp:] Creating Layer pool4

I1213 ::08.904940 net.cpp:] pool4 <- conv4_3

I1213 ::08.907443 net.cpp:] pool4 -> pool4

I1213 ::08.907443 net.cpp:] Setting up pool4

I1213 ::08.907943 net.cpp:] Top shape: ()

I1213 ::08.908443 net.cpp:] Memory required for data:

I1213 ::08.908443 layer_factory.hpp:] Creating layer pool4_pool4_0_split

I1213 ::08.908443 net.cpp:] Creating Layer pool4_pool4_0_split

I1213 ::08.908943 net.cpp:] pool4_pool4_0_split <- pool4

I1213 ::08.909443 net.cpp:] pool4_pool4_0_split -> pool4_pool4_0_split_0

I1213 ::08.909945 net.cpp:] pool4_pool4_0_split -> pool4_pool4_0_split_1

I1213 ::08.910444 net.cpp:] Setting up pool4_pool4_0_split

I1213 ::08.910944 net.cpp:] Top shape: ()

I1213 ::08.911445 net.cpp:] Top shape: ()

I1213 ::08.911445 net.cpp:] Memory required for data:

I1213 ::08.911445 layer_factory.hpp:] Creating layer conv5_1

I1213 ::08.911945 net.cpp:] Creating Layer conv5_1

I1213 ::08.912446 net.cpp:] conv5_1 <- pool4_pool4_0_split_0

I1213 ::08.912946 net.cpp:] conv5_1 -> conv5_1

I1213 ::08.919451 net.cpp:] Setting up conv5_1

I1213 ::08.919451 net.cpp:] Top shape: ()

I1213 ::08.919951 net.cpp:] Memory required for data:

I1213 ::08.922454 layer_factory.hpp:] Creating layer relu5_1

I1213 ::08.922953 net.cpp:] Creating Layer relu5_1

I1213 ::08.923954 net.cpp:] relu5_1 <- conv5_1

I1213 ::08.923954 net.cpp:] relu5_1 -> conv5_1 (in-place)

I1213 ::08.924454 net.cpp:] Setting up relu5_1

I1213 ::08.924955 net.cpp:] Top shape: ()

I1213 ::08.925456 net.cpp:] Memory required for data:

I1213 ::08.925956 layer_factory.hpp:] Creating layer conv5_2

I1213 ::08.926456 net.cpp:] Creating Layer conv5_2

I1213 ::08.926956 net.cpp:] conv5_2 <- conv5_1

I1213 ::08.927458 net.cpp:] conv5_2 -> conv5_2

I1213 ::08.933961 net.cpp:] Setting up conv5_2

I1213 ::08.933961 net.cpp:] Top shape: ()

I1213 ::08.934463 net.cpp:] Memory required for data:

I1213 ::08.934962 layer_factory.hpp:] Creating layer relu5_2

I1213 ::08.935462 net.cpp:] Creating Layer relu5_2

I1213 ::08.938464 net.cpp:] relu5_2 <- conv5_2

I1213 ::08.938464 net.cpp:] relu5_2 -> conv5_2 (in-place)

I1213 ::08.939966 net.cpp:] Setting up relu5_2

I1213 ::08.940466 net.cpp:] Top shape: ()

I1213 ::08.940966 net.cpp:] Memory required for data:

I1213 ::08.941467 layer_factory.hpp:] Creating layer conv5_3

I1213 ::08.942467 net.cpp:] Creating Layer conv5_3

I1213 ::08.942467 net.cpp:] conv5_3 <- conv5_2

I1213 ::08.942467 net.cpp:] conv5_3 -> conv5_3

I1213 ::08.948472 net.cpp:] Setting up conv5_3

I1213 ::08.948472 net.cpp:] Top shape: ()

I1213 ::08.948973 net.cpp:] Memory required for data:

I1213 ::08.949973 layer_factory.hpp:] Creating layer relu5_3

I1213 ::08.950474 net.cpp:] Creating Layer relu5_3

I1213 ::08.950973 net.cpp:] relu5_3 <- conv5_3

I1213 ::08.950973 net.cpp:] relu5_3 -> conv5_3 (in-place)

I1213 ::08.951975 net.cpp:] Setting up relu5_3

I1213 ::08.952976 net.cpp:] Top shape: ()

I1213 ::08.952976 net.cpp:] Memory required for data:

I1213 ::08.952976 layer_factory.hpp:] Creating layer pool5

I1213 ::08.952976 net.cpp:] Creating Layer pool5

I1213 ::08.952976 net.cpp:] pool5 <- conv5_3

I1213 ::08.953476 net.cpp:] pool5 -> pool5

I1213 ::08.953476 net.cpp:] Setting up pool5

I1213 ::08.954977 net.cpp:] Top shape: ()

I1213 ::08.955476 net.cpp:] Memory required for data:

I1213 ::08.955977 layer_factory.hpp:] Creating layer fc6

I1213 ::08.956979 net.cpp:] Creating Layer fc6

I1213 ::08.957479 net.cpp:] fc6 <- pool5

I1213 ::08.957979 net.cpp:] fc6 -> fc6

I1213 ::09.144121 net.cpp:] Setting up fc6

I1213 ::09.144121 net.cpp:] Top shape: ()

I1213 ::09.144611 net.cpp:] Memory required for data:

I1213 ::09.145612 layer_factory.hpp:] Creating layer relu6

I1213 ::09.146113 net.cpp:] Creating Layer relu6

I1213 ::09.146613 net.cpp:] relu6 <- fc6

I1213 ::09.147114 net.cpp:] relu6 -> fc6 (in-place)

I1213 ::09.148114 net.cpp:] Setting up relu6

I1213 ::09.148114 net.cpp:] Top shape: ()

I1213 ::09.148614 net.cpp:] Memory required for data:

I1213 ::09.149114 layer_factory.hpp:] Creating layer drop6

I1213 ::09.149616 net.cpp:] Creating Layer drop6

I1213 ::09.150615 net.cpp:] drop6 <- fc6

I1213 ::09.151116 net.cpp:] drop6 -> fc6 (in-place)

I1213 ::09.151617 net.cpp:] Setting up drop6

I1213 ::09.152117 net.cpp:] Top shape: ()

I1213 ::09.153617 net.cpp:] Memory required for data:

I1213 ::09.154119 layer_factory.hpp:] Creating layer fc7

I1213 ::09.154618 net.cpp:] Creating Layer fc7

I1213 ::09.155119 net.cpp:] fc7 <- fc6

I1213 ::09.155619 net.cpp:] fc7 -> fc7

I1213 ::09.190145 net.cpp:] Setting up fc7

I1213 ::09.190145 net.cpp:] Top shape: ()

I1213 ::09.191145 net.cpp:] Memory required for data:

I1213 ::09.191645 layer_factory.hpp:] Creating layer relu7

I1213 ::09.192145 net.cpp:] Creating Layer relu7

I1213 ::09.192646 net.cpp:] relu7 <- fc7

I1213 ::09.193145 net.cpp:] relu7 -> fc7 (in-place)

I1213 ::09.194146 net.cpp:] Setting up relu7

I1213 ::09.194146 net.cpp:] Top shape: ()

I1213 ::09.194648 net.cpp:] Memory required for data:

I1213 ::09.195147 layer_factory.hpp:] Creating layer drop7

I1213 ::09.195647 net.cpp:] Creating Layer drop7

I1213 ::09.196148 net.cpp:] drop7 <- fc7

I1213 ::09.196648 net.cpp:] drop7 -> fc7 (in-place)

I1213 ::09.197149 net.cpp:] Setting up drop7

I1213 ::09.197649 net.cpp:] Top shape: ()

I1213 ::09.198149 net.cpp:] Memory required for data:

I1213 ::09.198650 layer_factory.hpp:] Creating layer score_fr

I1213 ::09.200150 net.cpp:] Creating Layer score_fr

I1213 ::09.200651 net.cpp:] score_fr <- fc7

I1213 ::09.201653 net.cpp:] score_fr -> score_fr

I1213 ::09.203654 net.cpp:] Setting up score_fr

I1213 ::09.204154 net.cpp:] Top shape: ()

I1213 ::09.204654 net.cpp:] Memory required for data:

I1213 ::09.205155 layer_factory.hpp:] Creating layer upscore2

I1213 ::09.205656 net.cpp:] Creating Layer upscore2

I1213 ::09.206156 net.cpp:] upscore2 <- score_fr

I1213 ::09.207156 net.cpp:] upscore2 -> upscore2

I1213 ::09.207656 net.cpp:] Setting up upscore2

I1213 ::09.208156 net.cpp:] Top shape: ()

I1213 ::09.208657 net.cpp:] Memory required for data:

I1213 ::09.209157 layer_factory.hpp:] Creating layer upscore2_upscore2_0_split

I1213 ::09.209657 net.cpp:] Creating Layer upscore2_upscore2_0_split

I1213 ::09.210157 net.cpp:] upscore2_upscore2_0_split <- upscore2

I1213 ::09.210659 net.cpp:] upscore2_upscore2_0_split -> upscore2_upscore2_0_split_0

I1213 ::09.211158 net.cpp:] upscore2_upscore2_0_split -> upscore2_upscore2_0_split_1

I1213 ::09.211659 net.cpp:] Setting up upscore2_upscore2_0_split

I1213 ::09.212159 net.cpp:] Top shape: ()

I1213 ::09.212661 net.cpp:] Top shape: ()

I1213 ::09.213160 net.cpp:] Memory required for data:

I1213 ::09.213660 layer_factory.hpp:] Creating layer score_pool4

I1213 ::09.214160 net.cpp:] Creating Layer score_pool4

I1213 ::09.216163 net.cpp:] score_pool4 <- pool4_pool4_0_split_1

I1213 ::09.216663 net.cpp:] score_pool4 -> score_pool4

I1213 ::09.219164 net.cpp:] Setting up score_pool4

I1213 ::09.219666 net.cpp:] Top shape: ()

I1213 ::09.220165 net.cpp:] Memory required for data:

I1213 ::09.220665 layer_factory.hpp:] Creating layer score_pool4c

I1213 ::09.221166 net.cpp:] Creating Layer score_pool4c

I1213 ::09.221667 net.cpp:] score_pool4c <- score_pool4

I1213 ::09.222167 net.cpp:] score_pool4c <- upscore2_upscore2_0_split_0

I1213 ::09.222667 net.cpp:] score_pool4c -> score_pool4c

I1213 ::09.223167 net.cpp:] Setting up score_pool4c