Research on Loading Data into Coherence - Invocation Service

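This post verifies the third approach. As before, the data to be loaded is split across all storage nodes. The difference is that the load is driven through the InvocationService provided by the storage nodes rather than through PreloadRequest.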

The principle is shown in the figure.

Prerequisites:

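- All keys to be loaded must be known in advance.

- The keys must be divided according to the number of storage nodes. Here this is done by the method `Map<Member, List<String>> divideWork(Set members)`, which takes the Coherence storage members and returns a map keyed by member, whose value is the list of entry keys assigned to that member.

- The loading itself is done by the run method of MyLoadInvocable, which loads the data on each node. MyLoadInvocable must extend AbstractInvocable and implement PortableObject. I don't know why; when I tried implementing Serializable instead, I got an error.

- While splitting the keys, I found that the List<String> values in the map were overwritten by later keys: every map entry referred to the same list object, which was then cleared and refilled. Creating a new List each time one is put into the map fixed this.

- No CacheLoader or CacheStore implementation is needed.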
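The key-splitting step described above can be sketched in plain Java, independent of Coherence. This is a minimal sketch, not the author's `divideWork`: the generic helper `KeyDivider.divide` is hypothetical and assumes the member list and the full key list are already in memory. Each member gets `keys.size() / members.size()` keys, the last member also takes the remainder, and a new list is created per member, which is the fix for the overwriting problem noted in the list.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical helper: splits keys into one contiguous chunk per member. */
class KeyDivider {
    static <M, K> Map<M, List<K>> divide(List<M> members, List<K> keys) {
        if (members.isEmpty()) {
            throw new IllegalArgumentException("no storage members");
        }
        Map<M, List<K>> work = new LinkedHashMap<M, List<K>>();
        int perMember = keys.size() / members.size();
        Iterator<K> it = keys.iterator();
        for (int m = 0; m < members.size(); m++) {
            boolean last = (m == members.size() - 1);
            // A fresh list per member: reusing one list and clearing it after
            // map.put(...) would leave every map value pointing at the same object.
            List<K> chunk = new ArrayList<K>();
            while (it.hasNext() && (last || chunk.size() < perMember)) {
                chunk.add(it.next());
            }
            work.put(members.get(m), chunk);
        }
        return work;
    }

    public static void main(String[] args) {
        Map<String, List<String>> work = divide(
                Arrays.asList("node1", "node2", "node3"),
                Arrays.asList("p1", "p2", "p3", "p4", "p5", "p6", "p7"));
        for (Map.Entry<String, List<String>> e : work.entrySet()) {
            System.out.println(e.getKey() + " -> " + e.getValue());
        }
    }
}
```

With 7 keys and 3 members, `main` prints `node1 -> [p1, p2]`, `node2 -> [p3, p4]` and `node3 -> [p5, p6, p7]`.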

Person.java

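```java
package dataload;

import java.io.Serializable;

public class Person implements Serializable {
    private String Id;
    private String Firstname;
    private String Lastname;
    private String Address;

    public void setId(String Id) { this.Id = Id; }
    public String getId() { return Id; }
    public void setFirstname(String Firstname) { this.Firstname = Firstname; }
    public String getFirstname() { return Firstname; }
    public void setLastname(String Lastname) { this.Lastname = Lastname; }
    public String getLastname() { return Lastname; }
    public void setAddress(String Address) { this.Address = Address; }
    public String getAddress() { return Address; }

    public Person() {
    }

    public Person(String sId, String sFirstname, String sLastname, String sAddress) {
        Id = sId;
        Firstname = sFirstname;
        Lastname = sLastname;
        Address = sAddress;
    }
}
```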

MyLoadInvocable.java

This is the loading task: its run method is executed on each storage node and loads that node's share of the data.

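```java
package dataload;

import com.tangosol.io.pof.PofReader;
import com.tangosol.io.pof.PofWriter;
import com.tangosol.io.pof.PortableObject;
import com.tangosol.net.AbstractInvocable;
import com.tangosol.net.CacheFactory;
import com.tangosol.net.NamedCache;
import java.io.IOException;
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.util.ArrayList;
import java.util.Hashtable;
import java.util.List;
import javax.naming.Context;
import javax.naming.InitialContext;
import javax.sql.DataSource;

// Runs on one storage member and loads the keys assigned to that member.
public class MyLoadInvocable extends AbstractInvocable implements PortableObject {
    private List<String> m_memberKeys;
    private String m_cache;

    public MyLoadInvocable() {
        // no-arg constructor required for POF deserialization
    }

    public MyLoadInvocable(List<String> memberKeys, String cache) {
        m_memberKeys = memberKeys;
        m_cache = cache;
    }

    public Connection getConnection() throws Exception {
        Hashtable<String, String> ht = new Hashtable<String, String>();
        // JNDI settings were lost from the original listing; placeholders below.
        ht.put(Context.INITIAL_CONTEXT_FACTORY, "<initial-context-factory>");
        ht.put(Context.PROVIDER_URL, "<provider-url>");
        DataSource ds = (DataSource) new InitialContext(ht).lookup("<datasource-jndi-name>");
        return ds.getConnection();
    }

    public void run() {
        NamedCache cache = CacheFactory.getCache(m_cache);
        Connection con = null;
        try {
            con = getConnection();
            // Column names assumed to match the Person fields
            PreparedStatement stmt = con.prepareStatement(
                    "select id, firstname, lastname, address from persons where id = ?");
            for (int i = 0; i < m_memberKeys.size(); i++) {
                String id = m_memberKeys.get(i);
                stmt.setString(1, id);
                ResultSet rs = stmt.executeQuery();
                if (rs.next()) {
                    cache.put(id, new Person(rs.getString("id"), rs.getString("firstname"),
                            rs.getString("lastname"), rs.getString("address")));
                }
                rs.close();
            }
            stmt.close();
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            if (con != null) {
                try { con.close(); } catch (Exception ignored) { }
            }
        }
    }

    @SuppressWarnings("unchecked")
    public void readExternal(PofReader in) throws IOException {
        m_memberKeys = (List<String>) in.readCollection(0, new ArrayList<String>());
        m_cache = in.readString(1);
    }

    public void writeExternal(PofWriter out) throws IOException {
        out.writeCollection(0, m_memberKeys);
        out.writeString(1, m_cache);
    }
}
```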
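The run loop above writes each entry with its own `cache.put`. A common refinement for bulk loading is to buffer entries and write them with `putAll`, so that a batch goes to the cache in fewer network calls. The sketch below shows only that buffering idea: `BufferedLoader.load` is a hypothetical helper, `lookup` stands in for the per-key JDBC query, and the target is a plain `java.util.Map`. Since a Coherence `NamedCache` is also a `java.util.Map`, the cache obtained in `run()` could be passed as `target`.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical helper: looks up each key and writes results to the target in putAll batches. */
class BufferedLoader {
    static <V> int load(List<String> keys, Function<String, V> lookup,
                        Map<String, V> target, int bufferSize) {
        Map<String, V> buffer = new HashMap<String, V>();
        int loaded = 0;
        for (String key : keys) {
            V value = lookup.apply(key);  // stand-in for the per-key database query
            if (value == null) {
                continue;                 // no row for this key
            }
            buffer.put(key, value);
            if (buffer.size() >= bufferSize) {
                target.putAll(buffer);    // one bulk write instead of bufferSize single puts
                loaded += buffer.size();
                buffer.clear();
            }
        }
        if (!buffer.isEmpty()) {          // flush the last, partially filled batch
            target.putAll(buffer);
            loaded += buffer.size();
        }
        return loaded;
    }

    public static void main(String[] args) {
        Map<String, String> cache = new HashMap<String, String>();
        int n = load(Arrays.asList("p1", "p2", "p3"), k -> "person " + k, cache, 2);
        System.out.println(n + " loaded");
    }
}
```

`main` prints `3 loaded`: one `putAll` of two entries, then a final `putAll` of the remaining one. The buffer size is a tuning choice, trading memory on the storage node against the number of cache calls.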

LoaderUsingEP.java

The loading client: it splits the keys into slices, looks up the InvocationService and dispatches the loading tasks.

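```java
package dataload;

import com.tangosol.net.CacheFactory;
import com.tangosol.net.DistributedCacheService;
import com.tangosol.net.InvocationService;
import com.tangosol.net.Member;
import com.tangosol.net.NamedCache;
import java.sql.Connection;
import java.sql.ResultSet;
import java.sql.Statement;
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.Hashtable;
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.Set;
import javax.naming.Context;
import javax.naming.InitialContext;
import javax.sql.DataSource;

public class LoaderUsingEP {
    private Connection m_con;

    public Connection getConnection() throws Exception {
        Hashtable<String, String> ht = new Hashtable<String, String>();
        // JNDI settings were lost from the original listing; placeholders below.
        ht.put(Context.INITIAL_CONTEXT_FACTORY, "<initial-context-factory>");
        ht.put(Context.PROVIDER_URL, "<provider-url>");
        DataSource ds = (DataSource) new InitialContext(ht).lookup("<datasource-jndi-name>");
        m_con = ds.getConnection();
        return m_con;
    }

    // Storage-enabled members of the cache's partitioned service
    protected Set getStorageMembers(NamedCache cache) {
        return ((DistributedCacheService) cache.getCacheService()).getOwnershipEnabledMembers();
    }

    // Splits all ids evenly across the members; the last member also takes the remainder
    protected Map<Member, List<String>> divideWork(Set members) {
        Map<Member, List<String>> mapWork = new HashMap<Member, List<String>>();
        Iterator i = members.iterator();
        int membercount = members.size();
        try {
            Statement st = getConnection().createStatement();
            ResultSet rs0 = st.executeQuery("select count(*) from persons");
            rs0.next();
            int onecount = rs0.getInt(1) / membercount;
            rs0.close();

            List<String> list = new ArrayList<String>();
            int currentworker = 0;
            ResultSet rs1 = st.executeQuery("select id from persons");
            while (rs1.next()) {
                list.add(rs1.getString("id"));
                if (list.size() == onecount && currentworker < membercount - 1) {
                    // Put a copy: putting `list` itself and then clearing it would
                    // leave every map value pointing at the same, overwritten list.
                    mapWork.put((Member) i.next(), new ArrayList<String>(list));
                    list.clear();
                    currentworker++;
                }
            }
            rs1.close();
            mapWork.put((Member) i.next(), list); // remaining keys go to the last member
            st.close();
        } catch (Exception e) {
            e.printStackTrace();
        }
        for (Map.Entry<Member, List<String>> entry : mapWork.entrySet()) {
            System.out.println(entry.getKey() + ": " + entry.getValue().size() + " keys");
        }
        return mapWork;
    }

    public void load() {
        // Cache and service names were lost from the original listing; placeholders below.
        NamedCache cache = CacheFactory.getCache("<cache-name>");
        Set members = getStorageMembers(cache);
        Map<Member, List<String>> mapWork = divideWork(members);
        InvocationService service =
                (InvocationService) CacheFactory.getService("<invocation-service-name>");
        for (Map.Entry<Member, List<String>> entry : mapWork.entrySet()) {
            Member member = entry.getKey();
            List<String> memberKeys = entry.getValue();
            MyLoadInvocable task = new MyLoadInvocable(memberKeys, cache.getCacheName());
            // Run the task only on the member that was assigned these keys
            service.execute(task, Collections.singleton(member), null);
        }
    }

    public static void main(String[] args) {
        new LoaderUsingEP().load();
    }
}
```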
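The InvocationService itself needs a running cluster, so the fan-out that the client performs can only be simulated here. In this plain-Java sketch (all names hypothetical), threads from an `ExecutorService` stand in for storage members and a `ConcurrentHashMap` stands in for the `NamedCache`. Each "member" loads only the keys assigned to it and records its own name as the value, so the result shows which slice each member loaded.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Hypothetical simulation of the scatter load: one task per "member", each loading its own keys. */
class ScatterLoadDemo {
    static Map<String, String> scatterLoad(Map<String, List<String>> work) {
        // Stand-in for the cache: key -> name of the member that loaded it
        Map<String, String> cache = new ConcurrentHashMap<String, String>();
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, work.size()));
        try {
            List<Future<?>> pending = new ArrayList<Future<?>>();
            for (Map.Entry<String, List<String>> entry : work.entrySet()) {
                String member = entry.getKey();
                List<String> keys = entry.getValue();
                // Stand-in for sending one task to exactly one member
                pending.add(pool.submit(() -> {
                    for (String key : keys) {
                        cache.put(key, member);
                    }
                }));
            }
            for (Future<?> f : pending) {
                try {
                    f.get(); // wait until this "member" has finished its slice
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException(e);
                } catch (ExecutionException e) {
                    throw new IllegalStateException(e.getCause());
                }
            }
        } finally {
            pool.shutdown();
        }
        return cache;
    }

    public static void main(String[] args) {
        Map<String, List<String>> work = new LinkedHashMap<String, List<String>>();
        work.put("node1", Arrays.asList("p1", "p2"));
        work.put("node2", Arrays.asList("p3", "p4", "p5"));
        Map<String, String> cache = scatterLoad(work);
        System.out.println(cache.size() + " entries loaded: " + cache);
    }
}
```

Waiting on every future corresponds to the synchronous `InvocationService.query`, which blocks until the targeted members respond. `InvocationService.execute` instead returns immediately and can report completion through an optional `InvocationObserver`.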

Cache configuration required on the client

storage-override-client.xml

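```xml
<?xml version="1.0"?>
<cache-config>
  <!-- ... -->
  <caching-schemes>
    <!-- ... -->
    <read-write-backing-map-scheme>
      <internal-cache-scheme>
        <!-- ... -->
      </internal-cache-scheme>
      <cachestore-scheme>
        <!-- ... -->
      </cachestore-scheme>
      <listener/>
    </read-write-backing-map-scheme>
    <!-- ... -->
    <invocation-scheme>
      <!-- ... -->
    </invocation-scheme>
  </caching-schemes>
</cache-config>
```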

Cache configuration for the storage nodes

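```xml
<?xml version="1.0"?>
<cache-config>
  <!-- ... -->
  <caching-schemes>
    <!-- ... -->
    <read-write-backing-map-scheme>
      <internal-cache-scheme>
        <!-- ... -->
      </internal-cache-scheme>
      <cachestore-scheme>
        <!-- ... -->
      </cachestore-scheme>
      <listener/>
    </read-write-backing-map-scheme>
    <!-- ... -->
    <invocation-scheme>
      <!-- ... -->
    </invocation-scheme>
  </caching-schemes>
</cache-config>
```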

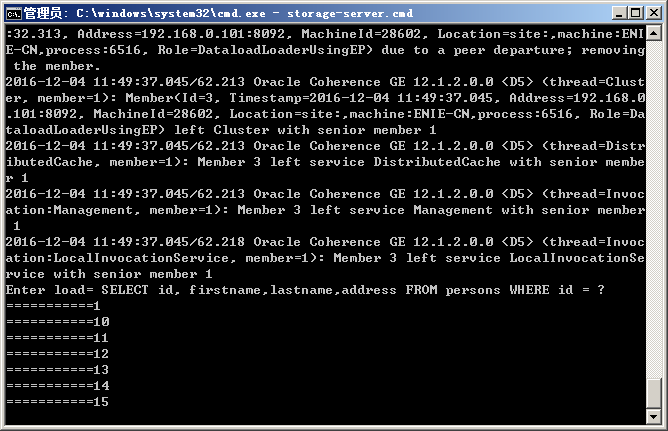

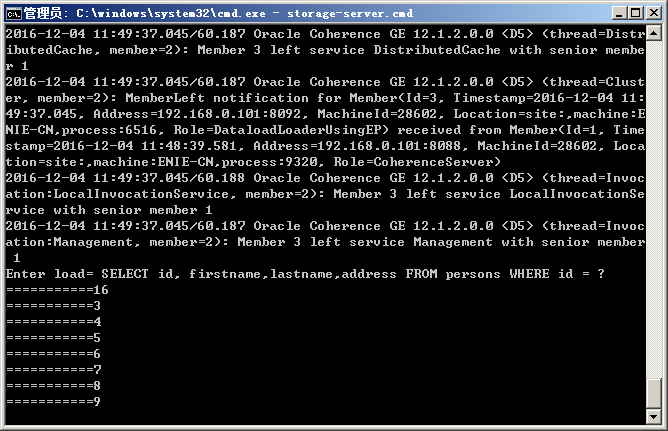

Output

As the output shows, the data was loaded in slices, one per storage node.
