prometheus + influxdb + grafana + mysql

Preface

This article describes how to install InfluxDB as the persistent storage for Prometheus and MySQL as the persistent storage for Grafana.

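I. Install the Go environment

If you already have a Go environment, you can build the remote_storage_adapter plugin yourself; obtaining that plugin is the only reason to install Go. If you do not have a Go environment, you can download the plugin from the link I shared.

Link: https://pan.baidu.com/s/1DJpoYDOIfCeAFC6UGY22Xg Extraction code: uj42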

1. Download

wget https://storage.googleapis.com/golang/go1.8.3.linux-amd64.tar.gz

2. Install

tar -C /usr/local -xzf go1.8.3.linux-amd64.tar.gz

Add the environment variables

vim /etc/profile

export GOROOT=/usr/local/go

export GOBIN=$GOROOT/bin

export GOPKG=$GOROOT/pkg/tool/linux_amd64

export GOARCH=amd64

export GOOS=linux

export GOPATH=/go

export PATH=$PATH:$GOBIN:$GOPKG:$GOPATH/bin

source /etc/profile

go get -d -v

II. Install InfluxDB

1. Download and install

wget https://dl.influxdata.com/influxdb/releases/influxdb-1.5.2.x86_64.rpm

sudo yum localinstall influxdb-1.5.2.x86_64.rpm

2. Start InfluxDB

systemctl start influxdb

systemctl enable influxdb

To start it in the foreground instead of as a service:

influxd

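To specify a configuration file, use the --config option; see the help output for details.

3. View the related configuration

After installation, the following files are in /usr/bin:

influxd InfluxDB server

influx InfluxDB command-line client

influx_inspect inspection tool

influx_stress stress-testing tool

influx_tsm database conversion tool (converts databases from the b1 or bz1 format to the tsm1 format)

The following directories are under /var/lib/influxdb/:

data stores the final persisted data; files end in .tsm

meta stores database metadata

wal stores write-ahead log files

Configuration file path: /etc/influxdb/influxdb.conf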

4. Create the database for Prometheus through the HTTP API

To open the database shell:

influx

To create a prometheus database through the HTTP API:

curl -XPOST http://localhost:8086/query --data-urlencode "q=CREATE DATABASE prometheus"
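To confirm that the database was created, the same HTTP endpoint can be asked for SHOW DATABASES. The sketch below assumes InfluxDB 1.x on localhost:8086 without authentication; the helper name has_database is made up for illustration and only does a plain substring match on the JSON response.

```shell
# has_database JSON NAME
# Succeeds when NAME appears as a row value (["NAME"]) in the JSON
# returned by a SHOW DATABASES query. Hypothetical helper, not part of InfluxDB.
has_database() {
  printf '%s' "$1" | grep -qF "[\"$2\"]"
}

# Example use against a running server (assumed to listen on localhost:8086):
#   resp=$(curl -sG http://localhost:8086/query --data-urlencode "q=SHOW DATABASES")
#   has_database "$resp" prometheus && echo "prometheus database exists"
```

A substring match is enough for a quick check; a JSON parser would be the more robust choice in a real script.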

III. Install Prometheus

1. Download

https://prometheus.io/download/

2. Extract and install

tar xf prometheus-2.8.0.linux-amd64.tar.gz

mv prometheus-2.8.0.linux-amd64 /usr/local/prometheus

cd /usr/local/prometheus

./prometheus --version

IV. Prepare remote_storage_adapter

Obtain a remote_storage_adapter executable (the source is on GitHub) and start it. Run ./remote_storage_adapter -h to see the available options (bind port, InfluxDB settings, and so on). The following command starts an adapter that connects InfluxDB and Prometheus: ./remote_storage_adapter --influxdb-url=http://localhost:8086/ --influxdb.database=prometheus --influxdb.retention-policy=autogen. The adapter listens on port 9201 by default.

1. Build the plugin

/usr/local/go/bin/go get github.com/prometheus/prometheus/documentation/examples/remote_storage/remote_storage_adapter

2. Use the plugin

./remote_storage_adapter --influxdb-url=http://127.0.0.1:8086/ --influxdb.database="prometheus" --influxdb.retention-policy=autogen

3. Edit the Prometheus configuration file

vim prometheus.yml

Add:

#Remote write configuration (for Graphite, OpenTSDB, or InfluxDB).

remote_write:

- url: "http://localhost:9201/write"

# Remote read configuration (for InfluxDB only at the moment).

remote_read:

- url: "http://localhost:9201/read"
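A quick way to double-check the edit is to list the remote endpoints that the file now declares. This is only a sketch: remote_urls is a hypothetical helper, and it assumes the simple layout shown above (top-level remote_write and remote_read keys, one url per list entry). It is not a YAML parser.

```shell
# remote_urls FILE
# Print "section url" pairs for entries under remote_write / remote_read.
remote_urls() {
  awk '
    /^remote_(write|read):/ { section = $1; sub(/:$/, "", section); next }
    /^[^ #-]/               { section = "" }   # any other top-level key ends the section
    section != "" && /url:/ {
      url = $0
      sub(/^.*url:[ ]*/, "", url)   # drop everything up to the value
      gsub(/"/, "", url)            # strip the surrounding quotes
      print section, url
    }
  ' "$1"
}

# Example use:
#   remote_urls /usr/local/prometheus/prometheus.yml
```

Both URLs should point at the adapter's port (9201 in this setup), not at InfluxDB's own port 8086.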

4. Start Prometheus

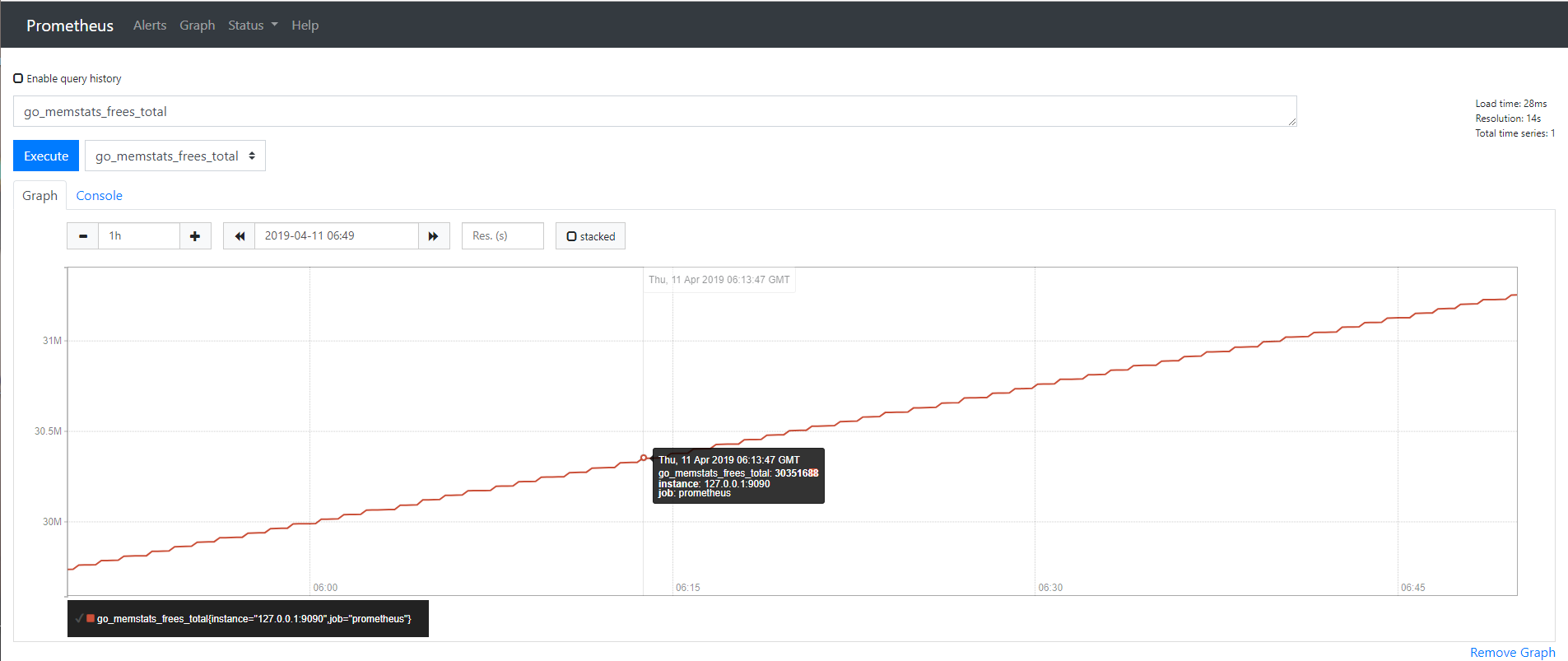

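./prometheus

5. Check whether data is being reported

At this point only the Prometheus server itself is being monitored.

6. Inspect the InfluxDB database contents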

> show databases;

name: databases

name

----

_internal

mydb

prometheus

> use prometheus

Using database prometheus

> SHOW MEASUREMENTS

name: measurements

name

----

go_gc_duration_seconds

go_gc_duration_seconds_count

go_gc_duration_seconds_sum

go_goroutines

go_info

go_memstats_alloc_bytes

go_memstats_alloc_bytes_total

go_memstats_buck_hash_sys_bytes

go_memstats_frees_total

go_memstats_gc_cpu_fraction

go_memstats_gc_sys_bytes

go_memstats_heap_alloc_bytes

go_memstats_heap_idle_bytes

go_memstats_heap_inuse_bytes

go_memstats_heap_objects

go_memstats_heap_released_bytes

go_memstats_heap_sys_bytes

go_memstats_last_gc_time_seconds

go_memstats_lookups_total

go_memstats_mallocs_total

go_memstats_mcache_inuse_bytes

go_memstats_mcache_sys_bytes

go_memstats_mspan_inuse_bytes

go_memstats_mspan_sys_bytes

go_memstats_next_gc_bytes

go_memstats_other_sys_bytes

go_memstats_stack_inuse_bytes

go_memstats_stack_sys_bytes

go_memstats_sys_bytes

go_threads

net_conntrack_dialer_conn_attempted_total

net_conntrack_dialer_conn_closed_total

net_conntrack_dialer_conn_established_total

net_conntrack_dialer_conn_failed_total

net_conntrack_listener_conn_accepted_total

net_conntrack_listener_conn_closed_total

process_cpu_seconds_total

process_max_fds

process_open_fds

process_resident_memory_bytes

process_start_time_seconds

process_virtual_memory_bytes

process_virtual_memory_max_bytes

prometheus_api_remote_read_queries

prometheus_build_info

prometheus_config_last_reload_success_timestamp_seconds

prometheus_config_last_reload_successful

prometheus_engine_queries

prometheus_engine_queries_concurrent_max

prometheus_engine_query_duration_seconds

prometheus_engine_query_duration_seconds_count

prometheus_engine_query_duration_seconds_sum

prometheus_http_request_duration_seconds_bucket

prometheus_http_request_duration_seconds_count

prometheus_http_request_duration_seconds_sum

prometheus_http_response_size_bytes_bucket

prometheus_http_response_size_bytes_count

prometheus_http_response_size_bytes_sum

prometheus_notifications_alertmanagers_discovered

prometheus_notifications_dropped_total

prometheus_notifications_queue_capacity

prometheus_notifications_queue_length

prometheus_remote_storage_dropped_samples_total

prometheus_remote_storage_enqueue_retries_total

prometheus_remote_storage_failed_samples_total

prometheus_remote_storage_highest_timestamp_in_seconds

prometheus_remote_storage_pending_samples

prometheus_remote_storage_queue_highest_sent_timestamp_seconds

prometheus_remote_storage_remote_read_queries

prometheus_remote_storage_retried_samples_total

prometheus_remote_storage_samples_in_total

prometheus_remote_storage_sent_batch_duration_seconds_bucket

prometheus_remote_storage_sent_batch_duration_seconds_count

prometheus_remote_storage_sent_batch_duration_seconds_sum

prometheus_remote_storage_shard_capacity

prometheus_remote_storage_shards

prometheus_remote_storage_succeeded_samples_total

prometheus_rule_evaluation_duration_seconds_count

prometheus_rule_evaluation_duration_seconds_sum

prometheus_rule_evaluation_failures_total

prometheus_rule_evaluations_total

prometheus_rule_group_duration_seconds_count

prometheus_rule_group_duration_seconds_sum

prometheus_rule_group_iterations_missed_total

prometheus_rule_group_iterations_total

prometheus_sd_azure_refresh_duration_seconds_count

prometheus_sd_azure_refresh_duration_seconds_sum

prometheus_sd_azure_refresh_failures_total

prometheus_sd_consul_rpc_duration_seconds_count

prometheus_sd_consul_rpc_duration_seconds_sum

prometheus_sd_consul_rpc_failures_total

prometheus_sd_discovered_targets

prometheus_sd_dns_lookup_failures_total

prometheus_sd_dns_lookups_total

prometheus_sd_ec2_refresh_duration_seconds_count

prometheus_sd_ec2_refresh_duration_seconds_sum

prometheus_sd_ec2_refresh_failures_total

prometheus_sd_file_read_errors_total

prometheus_sd_file_scan_duration_seconds_count

prometheus_sd_file_scan_duration_seconds_sum

prometheus_sd_gce_refresh_duration_count

prometheus_sd_gce_refresh_duration_sum

prometheus_sd_gce_refresh_failures_total

prometheus_sd_kubernetes_cache_last_resource_version

prometheus_sd_kubernetes_cache_list_duration_seconds_count

prometheus_sd_kubernetes_cache_list_duration_seconds_sum

prometheus_sd_kubernetes_cache_list_items_count

prometheus_sd_kubernetes_cache_list_items_sum

prometheus_sd_kubernetes_cache_list_total

prometheus_sd_kubernetes_cache_short_watches_total

prometheus_sd_kubernetes_cache_watch_duration_seconds_count

prometheus_sd_kubernetes_cache_watch_duration_seconds_sum

prometheus_sd_kubernetes_cache_watch_events_count

prometheus_sd_kubernetes_cache_watch_events_sum

prometheus_sd_kubernetes_cache_watches_total

prometheus_sd_kubernetes_events_total

prometheus_sd_marathon_refresh_duration_seconds_count

prometheus_sd_marathon_refresh_duration_seconds_sum

prometheus_sd_marathon_refresh_failures_total

prometheus_sd_openstack_refresh_duration_seconds_count

prometheus_sd_openstack_refresh_duration_seconds_sum

prometheus_sd_openstack_refresh_failures_total

prometheus_sd_received_updates_total

prometheus_sd_triton_refresh_duration_seconds_count

prometheus_sd_triton_refresh_duration_seconds_sum

prometheus_sd_triton_refresh_failures_total

prometheus_sd_updates_total

prometheus_target_interval_length_seconds

prometheus_target_interval_length_seconds_count

prometheus_target_interval_length_seconds_sum

prometheus_target_scrape_pool_reloads_failed_total

prometheus_target_scrape_pool_reloads_total

prometheus_target_scrape_pool_sync_total

prometheus_target_scrape_pools_failed_total

prometheus_target_scrape_pools_total

prometheus_target_scrapes_exceeded_sample_limit_total

prometheus_target_scrapes_sample_duplicate_timestamp_total

prometheus_target_scrapes_sample_out_of_bounds_total

prometheus_target_scrapes_sample_out_of_order_total

prometheus_target_sync_length_seconds

prometheus_target_sync_length_seconds_count

prometheus_target_sync_length_seconds_sum

prometheus_template_text_expansion_failures_total

prometheus_template_text_expansions_total

prometheus_treecache_watcher_goroutines

prometheus_treecache_zookeeper_failures_total

prometheus_tsdb_blocks_loaded

prometheus_tsdb_checkpoint_creations_failed_total

prometheus_tsdb_checkpoint_creations_total

prometheus_tsdb_checkpoint_deletions_failed_total

prometheus_tsdb_checkpoint_deletions_total

prometheus_tsdb_compaction_chunk_range_seconds_bucket

prometheus_tsdb_compaction_chunk_range_seconds_count

prometheus_tsdb_compaction_chunk_range_seconds_sum

prometheus_tsdb_compaction_chunk_samples_bucket

prometheus_tsdb_compaction_chunk_samples_count

prometheus_tsdb_compaction_chunk_samples_sum

prometheus_tsdb_compaction_chunk_size_bytes_bucket

prometheus_tsdb_compaction_chunk_size_bytes_count

prometheus_tsdb_compaction_chunk_size_bytes_sum

prometheus_tsdb_compaction_duration_seconds_bucket

prometheus_tsdb_compaction_duration_seconds_count

prometheus_tsdb_compaction_duration_seconds_sum

prometheus_tsdb_compaction_populating_block

prometheus_tsdb_compactions_failed_total

prometheus_tsdb_compactions_total

prometheus_tsdb_compactions_triggered_total

prometheus_tsdb_head_active_appenders

prometheus_tsdb_head_chunks

prometheus_tsdb_head_chunks_created_total

prometheus_tsdb_head_chunks_removed_total

prometheus_tsdb_head_gc_duration_seconds

prometheus_tsdb_head_gc_duration_seconds_count

prometheus_tsdb_head_gc_duration_seconds_sum

prometheus_tsdb_head_max_time

prometheus_tsdb_head_max_time_seconds

prometheus_tsdb_head_min_time

prometheus_tsdb_head_min_time_seconds

prometheus_tsdb_head_samples_appended_total

prometheus_tsdb_head_series

prometheus_tsdb_head_series_created_total

prometheus_tsdb_head_series_not_found_total

prometheus_tsdb_head_series_removed_total

prometheus_tsdb_head_truncations_failed_total

prometheus_tsdb_head_truncations_total

prometheus_tsdb_lowest_timestamp

prometheus_tsdb_lowest_timestamp_seconds

prometheus_tsdb_reloads_failures_total

prometheus_tsdb_reloads_total

prometheus_tsdb_size_retentions_total

prometheus_tsdb_storage_blocks_bytes

prometheus_tsdb_symbol_table_size_bytes

prometheus_tsdb_time_retentions_total

prometheus_tsdb_tombstone_cleanup_seconds_bucket

prometheus_tsdb_tombstone_cleanup_seconds_count

prometheus_tsdb_tombstone_cleanup_seconds_sum

prometheus_tsdb_vertical_compactions_total

prometheus_tsdb_wal_completed_pages_total

prometheus_tsdb_wal_corruptions_total

prometheus_tsdb_wal_fsync_duration_seconds_count

prometheus_tsdb_wal_fsync_duration_seconds_sum

prometheus_tsdb_wal_page_flushes_total

prometheus_tsdb_wal_truncate_duration_seconds_count

prometheus_tsdb_wal_truncate_duration_seconds_sum

prometheus_tsdb_wal_truncations_failed_total

prometheus_tsdb_wal_truncations_total

prometheus_wal_watcher_current_segment

prometheus_wal_watcher_record_decode_failures_total

prometheus_wal_watcher_records_read_total

prometheus_wal_watcher_samples_sent_pre_tailing_total

promhttp_metric_handler_requests_in_flight

promhttp_metric_handler_requests_total

scrape_duration_seconds

scrape_samples_post_metric_relabeling

scrape_samples_scraped

up

7. Add a node to Prometheus

Download

wget https://github.com/prometheus/node_exporter/releases/download/v0.17.0/node_exporter-0.17.0.linux-amd64.tar.gz

Install the agent

tar xf node_exporter-0.17.0.linux-amd64.tar.gz

cd node_exporter-0.17.0.linux-amd64

./node_exporter

Register the node with Prometheus

vim prometheus.yml

Add the following under scrape_configs:

  - job_name: 'linux-node'

    static_configs:

      - targets: ['10.10.25.149:9100']

        labels:

          instance: node1

Restart Prometheus.
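To confirm that the exporter answers on the target address, its metrics page can be fetched directly. A rough sketch, assuming the exporter started above is reachable at 10.10.25.149:9100; count_samples is an illustrative helper that counts the sample lines of the text exposition format read from standard input.

```shell
# count_samples
# Read Prometheus text-format metrics on stdin and print how many
# sample lines there are (lines that are neither blank nor comments).
count_samples() {
  grep -v -e '^#' -e '^[[:space:]]*$' | wc -l | tr -d ' '
}

# Example use (address taken from the scrape config above):
#   curl -s http://10.10.25.149:9100/metrics | count_samples
```

A result of 0 usually means the exporter is not running or the port is blocked.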

8. Run Prometheus as a system service

cat>/lib/systemd/system/prometheus.service<<EOF

[Service]

Restart=on-failure

WorkingDirectory=/usr/local/prometheus/

ExecStart=/usr/local/prometheus/prometheus --config.file=/usr/local/prometheus/prometheus.yml

[Install]

WantedBy=multi-user.target

EOF

chmod 644 /lib/systemd/system/prometheus.service

systemctl daemon-reload

systemctl enable prometheus

systemctl start prometheus

systemctl status prometheus

9. Run the agent as a system service

cat>/lib/systemd/system/node_exporter.service<<EOF

[Service]

Restart=on-failure

WorkingDirectory=/root/node_exporter-0.17.0.linux-amd64

ExecStart=/root/node_exporter-0.17.0.linux-amd64/node_exporter

[Install]

WantedBy=multi-user.target

EOF

chmod 644 /lib/systemd/system/node_exporter.service

systemctl daemon-reload

systemctl enable node_exporter

systemctl start node_exporter

systemctl status node_exporter

10. Register remote_storage_adapter as a system service

cat>/lib/systemd/system/remote_storage_adapter.service<<EOF

[Service]

Restart=on-failure

WorkingDirectory=/root/

ExecStart=/root/remote_storage_adapter --influxdb-url=http://127.0.0.1:8086/ --influxdb.database="prometheus" --influxdb.retention-policy=autogen

[Install]

WantedBy=multi-user.target

EOF

chmod 644 /lib/systemd/system/remote_storage_adapter.service

systemctl daemon-reload

systemctl enable remote_storage_adapter

systemctl start remote_storage_adapter
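The three unit files in steps 8 to 10 differ only in their working directory and start command, so they could be generated by one small function. This is a sketch under that assumption; render_unit and its argument order are invented for illustration.

```shell
# render_unit WORKDIR COMMAND...
# Print a systemd unit with the same two sections used in steps 8 to 10.
# Hypothetical helper: the name and argument order are for illustration only.
render_unit() {
  workdir=$1
  shift
  cat <<EOF
[Service]
Restart=on-failure
WorkingDirectory=$workdir
ExecStart=$*

[Install]
WantedBy=multi-user.target
EOF
}

# Example use (as root):
#   render_unit /usr/local/prometheus/ /usr/local/prometheus/prometheus \
#     --config.file=/usr/local/prometheus/prometheus.yml \
#     > /lib/systemd/system/prometheus.service
```

After writing a unit this way, the same chmod, daemon-reload, enable and start commands apply.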

systemctl status remote_storage_adapter

V. Install Grafana

1. Download

wget https://dl.grafana.com/oss/release/grafana-6.0.2-1.x86_64.rpm

2. Install

yum install grafana-6.0.2-1.x86_64.rpm

systemctl start grafana-server

systemctl enable grafana-server

grafana-server -v

grafana-server listens on port 3000.

3. Access grafana-server

http://ServerIP:3000

The default username and password are admin / admin.

VI. Install MySQL

1. Add the repository

rpm -Uvh http://dev.mysql.com/get/mysql-community-release-el7-5.noarch.rpm

yum repolist enabled | grep "mysql.*-community.*"

2. Install MySQL 5.6

yum -y install mysql-community-server

3. Start MySQL and apply basic security settings

systemctl enable mysqld

systemctl start mysqld

systemctl status mysqld

mysql_secure_installation

Set a root password and answer Y to every prompt.

4. Create the grafana database

create database grafana;

create user grafana@'%' IDENTIFIED by 'grafana';

grant all on grafana.* to grafana@'%';

flush privileges;

VII. Change Grafana's default database and configure Grafana

1. Edit the configuration file to connect to MySQL

vim /etc/grafana/grafana.ini

[database]

type = mysql

host = 127.0.0.1:3306

name = grafana

user = grafana

password =grafana

url = mysql://grafana:grafana@localhost:3306/grafana

[session]

provider = mysql

provider_config = `grafana:grafana@tcp(127.0.0.1:3306)/grafana`
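The [database] url and the [session] provider_config carry the same credentials in two different syntaxes, so a typo in one of them is easy to miss. The sketch below builds both strings from one set of values; mysql_url and mysql_dsn are hypothetical helper names, and the values are inserted verbatim, so passwords with special characters would need escaping.

```shell
# mysql_url USER PASSWORD HOST:PORT DATABASE
# Print the URL form used by "url =" in the [database] section.
mysql_url() {
  printf 'mysql://%s:%s@%s/%s\n' "$1" "$2" "$3" "$4"
}

# mysql_dsn USER PASSWORD HOST:PORT DATABASE
# Print the user:password@tcp(host:port)/database form used by
# "provider_config =" in the [session] section.
mysql_dsn() {
  printf '%s:%s@tcp(%s)/%s\n' "$1" "$2" "$3" "$4"
}

# Example use with the values from this article:
#   mysql_url grafana grafana localhost:3306 grafana
#   mysql_dsn grafana grafana 127.0.0.1:3306 grafana
```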

2. Restart Grafana

systemctl restart grafana-server

3. Access Grafana

http://serverip:3000

4. Inspect the database

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| grafana |

| mysql |

| performance_schema |

+--------------------+

mysql> use grafana

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+--------------------------+

| Tables_in_grafana |

+--------------------------+

| alert |

| alert_notification |

| alert_notification_state |

| annotation |

| annotation_tag |

| api_key |

| dashboard |

| dashboard_acl |

| dashboard_provisioning |

| dashboard_snapshot |

| dashboard_tag |

| dashboard_version |

| data_source |

| login_attempt |

| migration_log |

| org |

| org_user |

| playlist |

| playlist_item |

| plugin_setting |

| preferences |

| quota |

| server_lock |

| session |

| star |

| tag |

| team |

| team_member |

| temp_user |

| test_data |

| user |

| user_auth |

| user_auth_token |

+--------------------------+

33 rows in set (0.00 sec)

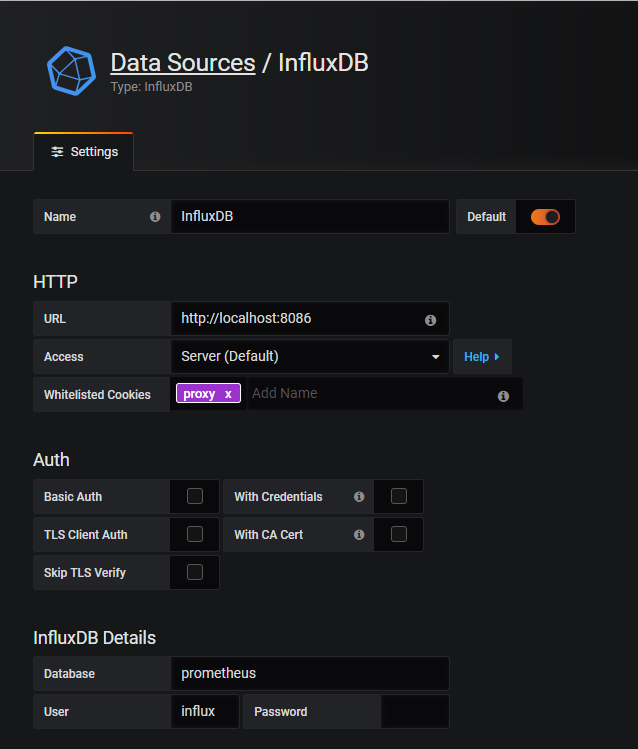

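5. Configure Grafana and add a data source

Because InfluxDB is used as the persistent storage for Prometheus, the data source added here is InfluxDB. No password is set on InfluxDB, so the password field is left empty.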
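The same data source can also be created without the web UI through Grafana's HTTP API. The following is a sketch rather than a tested procedure: it assumes Grafana on localhost:3000 with the default admin/admin login, the data source name prometheus-influx is arbitrary, and influx_datasource_json is a hypothetical helper that only prints the request body.

```shell
# influx_datasource_json NAME URL DATABASE
# Print a JSON body describing an InfluxDB data source.
# Values are inserted verbatim, so they must not contain quotes or backslashes.
influx_datasource_json() {
  printf '{"name":"%s","type":"influxdb","access":"proxy","url":"%s","database":"%s"}\n' "$1" "$2" "$3"
}

# Example use (assumed host, port and credentials):
#   influx_datasource_json prometheus-influx http://localhost:8086 prometheus |
#     curl -s -u admin:admin -H 'Content-Type: application/json' \
#       -X POST --data-binary @- http://localhost:3000/api/datasources
```

No user or password fields are included because InfluxDB has no password in this setup.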
