二进制部署K8S-3核心插件部署

二进制部署K8S-3核心插件部署

5.1. CNI网络插件

kubernetes设计了网络模型,但是pod之间通信的具体实现交给了CNI往插件。常用的CNI网络插件有:Flannel 、Calico、Canal、Contiv等,其中Flannel和Calico占比接近80%,Flannel占比略多于Calico。本次部署使用Flannel作为网络插件。涉及的机器 hdss7-21,hdss7-22

kubernetes设计了网络模型,但是pod之间通信的具体实现交给了CNI往插件。跨宿主机之间的pod是无法互通的,所以要使用 flannel插件。

host-gw 模式,需要宿主机在同一二层网络下,同一网关,是资源利用率最高的。

vxlan 模式

混合模式

5.1.1. 安装Flannel

github地址:https://github.com/coreos/flannel/releases

涉及的机器 hdss7-21,hdss7-22

[root@hdss7-21 ~]# cd /opt/src/

[root@hdss7-21 src]# wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz

[root@hdss7-21 src]# mkdir /opt/release/flannel-v0.11.0 # 因为flannel压缩包内部没有套目录

[root@hdss7-21 src]# tar -xf flannel-v0.11.0-linux-amd64.tar.gz -C /opt/release/flannel-v0.11.0

[root@hdss7-21 src]# ln -s /opt/release/flannel-v0.11.0 /opt/apps/flannel

[root@hdss7-21 src]# ll /opt/apps/flannel

lrwxrwxrwx 1 root root 28 Jan 9 22:33 /opt/apps/flannel -> /opt/release/flannel-v0.11.0

5.1.2. 拷贝证书

# flannel 需要以客户端的身份访问etcd,需要相关证书

[root@hdss7-21 src]# mkdir /opt/apps/flannel/certs

[root@hdss7-200 ~]# cd /opt/certs/

[root@hdss7-200 certs]# for i in 21 22;do scp ca.pem client-key.pem client.pem hdss7-$i:/opt/apps/flannel/certs/;done

5.1.3. 创建启动脚本

涉及的机器 hdss7-21,hdss7-22

[root@hdss7-21 src]# vim /opt/apps/flannel/subnet.env # 创建子网信息,7-22的subnet需要修改

FLANNEL_NETWORK=172.7.0.0/16

FLANNEL_SUBNET=172.7.21.1/24

FLANNEL_MTU=1500

FLANNEL_IPMASQ=false

[root@hdss7-21 src]# /opt/apps/etcd/etcdctl set /coreos.com/network/config '{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}'

[root@hdss7-21 src]# /opt/apps/etcd/etcdctl get /coreos.com/network/config # 只需要在一台etcd机器上设置就可以了

{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}

# public-ip 为本机IP,iface 为当前宿主机对外网卡

[root@hdss7-21 src]# vim /opt/apps/flannel/flannel-startup.sh

#!/bin/sh

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/apps/flannel/flanneld \

--public-ip=10.4.7.21 \

--etcd-endpoints=https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \

--etcd-keyfile=./certs/client-key.pem \

--etcd-certfile=./certs/client.pem \

--etcd-cafile=./certs/ca.pem \

--iface=ens32 \

--subnet-file=./subnet.env \

--healthz-port=2401

[root@hdss7-21 src]# chmod u+x /opt/apps/flannel/flannel-startup.sh

[root@hdss7-21 src]# vim /etc/supervisord.d/flannel.ini

[program:flanneld-7-21]

command=/opt/apps/flannel/flannel-startup.sh

numprocs=1

directory=/opt/apps/flannel

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/flanneld/flanneld.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=5

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

killasgroup=true

stopasgroup=true

[root@hdss7-21 src]# mkdir -p /data/logs/flanneld/

[root@hdss7-21 src]# supervisorctl update

flanneld-7-21: added process group

[root@hdss7-21 src]# supervisorctl status

etcd-server-7-21 RUNNING pid 1058, uptime -1 day, 16:33:25

flanneld-7-21 RUNNING pid 13154, uptime 0:00:30

kube-apiserver-7-21 RUNNING pid 1061, uptime -1 day, 16:33:25

kube-controller-manager-7-21 RUNNING pid 1068, uptime -1 day, 16:33:25

kube-kubelet-7-21 RUNNING pid 1052, uptime -1 day, 16:33:25

kube-proxy-7-21 RUNNING pid 1082, uptime -1 day, 16:33:25

kube-scheduler-7-21 RUNNING pid 1089, uptime -1 day, 16:33:25

5.1.4. 验证跨网络访问

[root@hdss7-21 src]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-ds-7db29 1/1 Running 1 2d 172.7.22.2 hdss7-22.host.com <none> <none>

nginx-ds-vvsz7 1/1 Running 1 2d 172.7.21.2 hdss7-21.host.com <none> <none>

[root@hdss7-21 src]# curl -I 172.7.22.2

HTTP/1.1 200 OK

Server: nginx/1.17.6

Date: Thu, 09 Jan 2020 14:55:21 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 19 Nov 2019 12:50:08 GMT

Connection: keep-alive

ETag: "5dd3e500-264"

Accept-Ranges: bytes

5.1.5. 解决pod间IP透传问题

所有Node上操作,即优化NAT网络

# 从pod a跨宿主机访问pod b时,在pod b中能看到的地址为 pod a 宿主机地址

[root@nginx-ds-jdp7q /]# tail -f /usr/local/nginx/logs/access.log

10.4.7.22 - - [13/Jan/2020:13:13:39 +0000] "GET / HTTP/1.1" 200 12 "-" "curl/7.29.0"

10.4.7.22 - - [13/Jan/2020:13:14:27 +0000] "GET / HTTP/1.1" 200 12 "-" "curl/7.29.0"

10.4.7.22 - - [13/Jan/2020:13:54:20 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.29.0"

10.4.7.22 - - [13/Jan/2020:13:54:25 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.29.0"

[root@hdss7-21 ~]# iptables-save |grep POSTROUTING|grep docker # 引发问题的规则

-A POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE

[root@hdss7-21 ~]# yum install -y iptables-services

[root@hdss7-21 ~]# systemctl start iptables.service ; systemctl enable iptables.service

# 需要处理的规则:

[root@hdss7-21 ~]# iptables-save |grep POSTROUTING|grep docker

-A POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE

[root@hdss7-21 ~]# iptables-save | grep -i reject

-A INPUT -j REJECT --reject-with icmp-host-prohibited

-A FORWARD -j REJECT --reject-with icmp-host-prohibited

# 处理方式:

[root@hdss7-21 ~]# iptables -t nat -D POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE

[root@hdss7-21 ~]# iptables -t nat -I POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE

[root@hdss7-21 ~]# iptables -t filter -D INPUT -j REJECT --reject-with icmp-host-prohibited

[root@hdss7-21 ~]# iptables -t filter -D FORWARD -j REJECT --reject-with icmp-host-prohibited

[root@hdss7-21 ~]# iptables-save > /etc/sysconfig/iptables

# 注意重启一次docker

systemctl restart docker

# 此时跨宿主机访问pod时,显示pod的IP

[root@nginx-ds-jdp7q /]# tail -f /usr/local/nginx/logs/access.log

172.7.22.2 - - [13/Jan/2020:14:15:39 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.29.0"

172.7.22.2 - - [13/Jan/2020:14:15:47 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.29.0"

172.7.22.2 - - [13/Jan/2020:14:15:48 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.29.0"

172.7.22.2 - - [13/Jan/2020:14:15:48 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.29.0"

5.2. CoreDNS

CoreDNS用于实现 service --> cluster IP 的DNS解析。以容器的方式交付到k8s集群,由k8s自行管理,降低人为操作的复杂度。

在k8s集群中pod的IP是不断的变化的,使用service通过标签选择器关联一组pod。

抽象出集群网络,通过相对固定的“集群IP”使服务接入点固定。

CoreDNS只负责把服务名(Service)和集群网络IP(ClusterIP)管理起来。

5.2.1. 配置yaml文件库

在hdss7-200中配置yaml文件库,后期通过Http方式去使用yaml清单文件。

- 配置nginx虚拟主机( hdss7-200 )

[root@hdss7-200 ~]# vim /etc/nginx/conf.d/k8s-yaml.od.com.conf

server {

listen 80;

server_name k8s-yaml.od.com;

location / {

autoindex on;

default_type text/plain;

root /data/k8s-yaml;

}

}

[root@hdss7-200 ~]# mkdir /data/k8s-yaml;

[root@hdss7-200 ~]# nginx -qt && nginx -s reload

- 配置dns解析(hdss7-11)

[root@hdss7-11 ~]# vim /var/named/od.com.zone

[root@hdss7-11 ~]# cat /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2020011301 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

$TTL 60 ; 1 minute

dns A 10.4.7.11

harbor A 10.4.7.200

k8s-yaml A 10.4.7.200

[root@hdss7-11 ~]# systemctl restart named

5.2.2. coredns的资源清单文件

清单文件存放到 hdss7-200:/data/k8s-yaml/coredns/coredns_1.6.1/

- rbac.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: coredns

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

kubernetes.io/bootstrapping: rbac-defaults

addonmanager.kubernetes.io/mode: Reconcile

name: system:coredns

rules:

- apiGroups:

- ""

resources:

- endpoints

- services

- pods

- namespaces

verbs:

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

addonmanager.kubernetes.io/mode: EnsureExists

name: system:coredns

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:coredns

subjects:

- kind: ServiceAccount

name: coredns

namespace: kube-system

- configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: coredns

namespace: kube-system

data:

Corefile: |

.:53 {

errors

log

health

ready

kubernetes cluster.local 192.168.0.0/16

# 上级dns

forward . 10.4.7.11

cache 30

loop

reload

loadbalance

}

- deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: coredns

namespace: kube-system

labels:

k8s-app: coredns

kubernetes.io/name: "CoreDNS"

spec:

replicas: 1

selector:

matchLabels:

k8s-app: coredns

template:

metadata:

labels:

k8s-app: coredns

spec:

priorityClassName: system-cluster-critical

serviceAccountName: coredns

containers:

- name: coredns

image: harbor.od.com/public/coredns:v1.6.1

args:

- -conf

- /etc/coredns/Corefile

volumeMounts:

- name: config-volume

mountPath: /etc/coredns

ports:

- containerPort: 53

name: dns

protocol: UDP

- containerPort: 53

name: dns-tcp

protocol: TCP

- containerPort: 9153

name: metrics

protocol: TCP

livenessProbe:

httpGet:

path: /health

port: 8080

scheme: HTTP

initialDelaySeconds: 60

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 5

dnsPolicy: Default

volumes:

- name: config-volume

configMap:

name: coredns

items:

- key: Corefile

path: Corefile

- service.yaml

apiVersion: v1

kind: Service

metadata:

name: coredns

namespace: kube-system

labels:

k8s-app: coredns

kubernetes.io/cluster-service: "true"

kubernetes.io/name: "CoreDNS"

spec:

selector:

k8s-app: coredns

clusterIP: 192.168.0.2

ports:

- name: dns

port: 53

protocol: UDP

- name: dns-tcp

port: 53

- name: metrics

port: 9153

protocol: TCP

5.2.3. 交付coredns到K8s

# 准备镜像

[root@hdss7-200 ~]# docker pull coredns/coredns:1.6.1

[root@hdss7-200 ~]# docker image tag coredns/coredns:1.6.1 harbor.od.com/public/coredns:v1.6.1

[root@hdss7-200 ~]# docker image push harbor.od.com/public/coredns:v1.6.1

# 交付coredns

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/coredns/coredns_1.6.1/rbac.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/coredns/coredns_1.6.1/configmap.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/coredns/coredns_1.6.1/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/coredns/coredns_1.6.1/service.yaml

[root@hdss7-21 ~]# kubectl get all -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/coredns-6b6c4f9648-4vtcl 1/1 Running 0 38s 172.7.21.3 hdss7-21.host.com <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/coredns ClusterIP 192.168.0.2 <none> 53/UDP,53/TCP,9153/TCP 29s k8s-app=coredns

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/coredns 1/1 1 1 39s coredns harbor.od.com/public/coredns:v1.6.1 k8s-app=coredns

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/coredns-6b6c4f9648 1 1 1 39s coredns harbor.od.com/public/coredns:v1.6.1 k8s-app=coredns,pod-template-hash=6b6c4f9648

5.2.4. 测试dns

# 创建service

[root@hdss7-21 ~]# kubectl create deployment nginx-web --image=harbor.od.com/public/nginx:src_1.14.2

[root@hdss7-21 ~]# kubectl expose deployment nginx-web --port=80 --target-port=80

[root@hdss7-21 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 192.168.0.1 <none> 443/TCP 8d

nginx-web ClusterIP 192.168.164.230 <none> 80/TCP 8s

# 测试DNS,集群外必须使用FQDN(Fully Qualified Domain Name),全域名

[root@hdss7-21 ~]# dig -t A nginx-web.default.svc.cluster.local @192.168.0.2 +short # 内网解析OK

192.168.164.230

[root@hdss7-21 ~]# dig -t A www.baidu.com @192.168.0.2 +short # 外网解析OK

www.a.shifen.com.

180.101.49.11

180.101.49.12

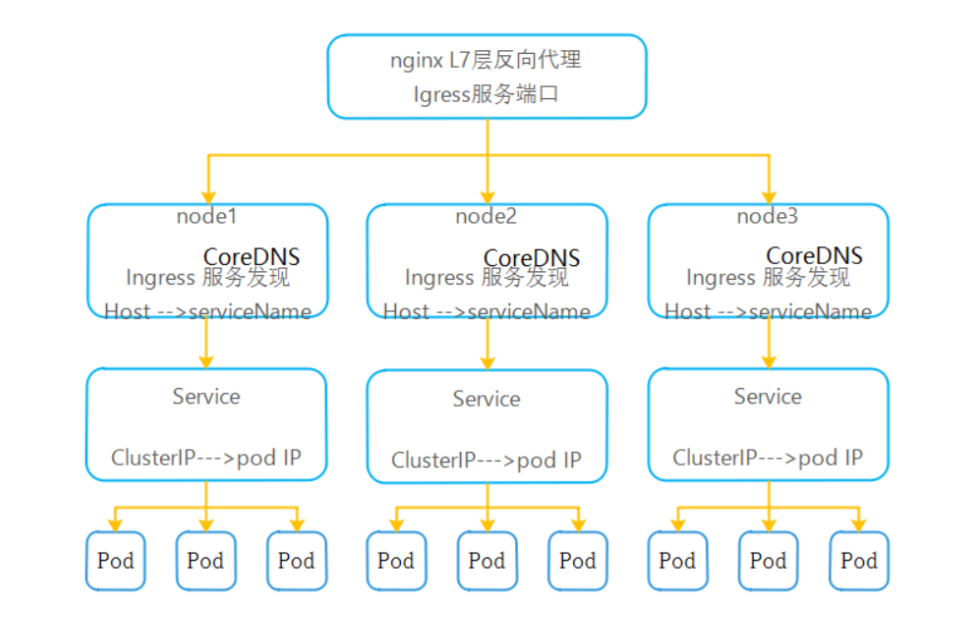

5.3. Ingress-Controller

service是将一组pod管理起来,提供了一个cluster ip和service name的统一访问入口,屏蔽了pod的ip变化。 ingress 是一种基于七层的流量转发策略,即将符合条件的域名或者location流量转发到特定的service上,而ingress仅仅是一种规则,k8s内部并没有自带代理程序完成这种规则转发。

ingress-controller 是一个代理服务器,将ingress的规则能真正实现的方式,常用的有 nginx,traefik,haproxy。但是在k8s集群中,建议使用traefik,性能比haroxy强大,更新配置不需要重载服务,是首选的ingress-controller。github地址:https://github.com/containous/traefik

使得k8s集群内部的服务,能够被外部访问。

NodePort

使用这种方式,无法使用kube-proxy的ipvs模型,只能使用iptables模型。

使用ingress资源

Ingress只能调度并暴露7层应用,特指http和https。

Ingress是K8S API的标准资源类型之一,也是一种核心资源,它其实就是一组基于域名和url路径,把用户的请求转发给指定的service资源的规则。

可以将集群外部的的请求流量转发至集群内部,从而实现“服务暴露”

Ingress控制器是能够为Ingress资源监听某套接字,然后根据Ingress规则匹配机制路由调度流量的一个组件。

kubectl describe pod traefik-ingress-2tr49 -n kube-system

5.3.1. 配置traefik资源清单

清单文件存放到 hdss7-200:/data/k8s-yaml/traefik/traefik_1.7.2

- rbac.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: traefik-ingress-controller

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRole

metadata:

name: traefik-ingress-controller

rules:

- apiGroups:

- ""

resources:

- services

- endpoints

- secrets

verbs:

- get

- list

- watch

- apiGroups:

- extensions

resources:

- ingresses

verbs:

- get

- list

- watch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: traefik-ingress-controller

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: traefik-ingress-controller

subjects:

- kind: ServiceAccount

name: traefik-ingress-controller

namespace: kube-system

- daemonset.yaml

apiVersion: extensions/v1beta1

kind: DaemonSet

metadata:

name: traefik-ingress

namespace: kube-system

labels:

k8s-app: traefik-ingress

spec:

template:

metadata:

labels:

k8s-app: traefik-ingress

name: traefik-ingress

spec:

serviceAccountName: traefik-ingress-controller

terminationGracePeriodSeconds: 60

containers:

- image: harbor.od.com/public/traefik:v1.7.2

name: traefik-ingress

ports:

- name: controller

containerPort: 80

hostPort: 81

- name: admin-web

containerPort: 8080

securityContext:

capabilities:

drop:

- ALL

add:

- NET_BIND_SERVICE

args:

- --api

- --kubernetes

- --logLevel=INFO

- --insecureskipverify=true

- --kubernetes.endpoint=https://10.4.7.10:7443

- --accesslog

- --accesslog.filepath=/var/log/traefik_access.log

- --traefiklog

- --traefiklog.filepath=/var/log/traefik.log

- --metrics.prometheus

- service.yaml

kind: Service

apiVersion: v1

metadata:

name: traefik-ingress-service

namespace: kube-system

spec:

selector:

k8s-app: traefik-ingress

ports:

- protocol: TCP

port: 80

name: controller

- protocol: TCP

port: 8080

name: admin-web

- ingress.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: traefik-web-ui

namespace: kube-system

annotations:

kubernetes.io/ingress.class: traefik

spec:

rules:

- host: traefik.od.com

http:

paths:

- path: /

backend:

serviceName: traefik-ingress-service

servicePort: 8080

- 准备镜像

[root@hdss7-200 traefik_1.7.2]# docker pull traefik:v1.7.2-alpine

[root@hdss7-200 traefik_1.7.2]# docker image tag traefik:v1.7.2-alpine harbor.od.com/public/traefik:v1.7.2

[root@hdss7-200 traefik_1.7.2]# docker push harbor.od.com/public/traefik:v1.7.2

5.3.2. 交付traefik到k8s

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/traefik_1.7.2/rbac.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/traefik_1.7.2/daemonset.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/traefik_1.7.2/service.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/traefik_1.7.2/ingress.yaml

[root@hdss7-21 ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-6b6c4f9648-4vtcl 1/1 Running 1 24h 172.7.21.3 hdss7-21.host.com <none> <none>

traefik-ingress-4gm4w 1/1 Running 0 77s 172.7.21.5 hdss7-21.host.com <none> <none>

traefik-ingress-hwr2j 1/1 Running 0 77s 172.7.22.3 hdss7-22.host.com <none> <none>

[root@hdss7-21 ~]# kubectl get ds -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

traefik-ingress 2 2 2 2 2 <none> 107s

5.3.3. 配置外部nginx负载均衡

- 在hdss7-11,hdss7-12 配置nginx L7转发

[root@hdss7-11 ~]# vim /etc/nginx/conf.d/od.com.conf

server {

server_name *.od.com;

location / {

proxy_pass http://default_backend_traefik;

proxy_set_header Host $http_host;

proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;

}

}

upstream default_backend_traefik {

# 所有的nodes都放到upstream中

server 10.4.7.21:81 max_fails=3 fail_timeout=10s;

server 10.4.7.22:81 max_fails=3 fail_timeout=10s;

}

[root@hdss7-11 ~]# nginx -tq && nginx -s reload

- 配置dns解析

[root@hdss7-11 ~]# vim /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2020011302 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

$TTL 60 ; 1 minute

dns A 10.4.7.11

harbor A 10.4.7.200

k8s-yaml A 10.4.7.200

traefik A 10.4.7.10

[root@hdss7-11 ~]# systemctl restart named

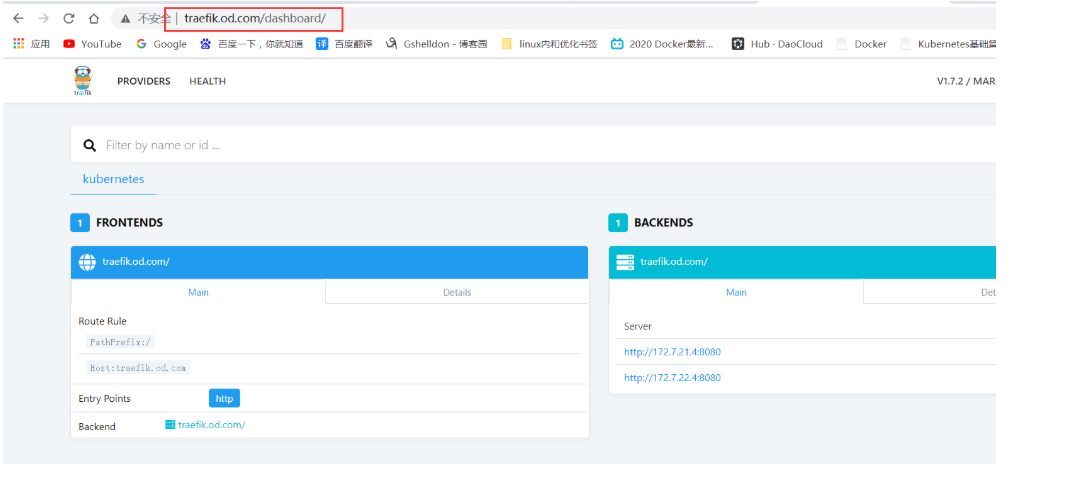

- 查看traefik网页

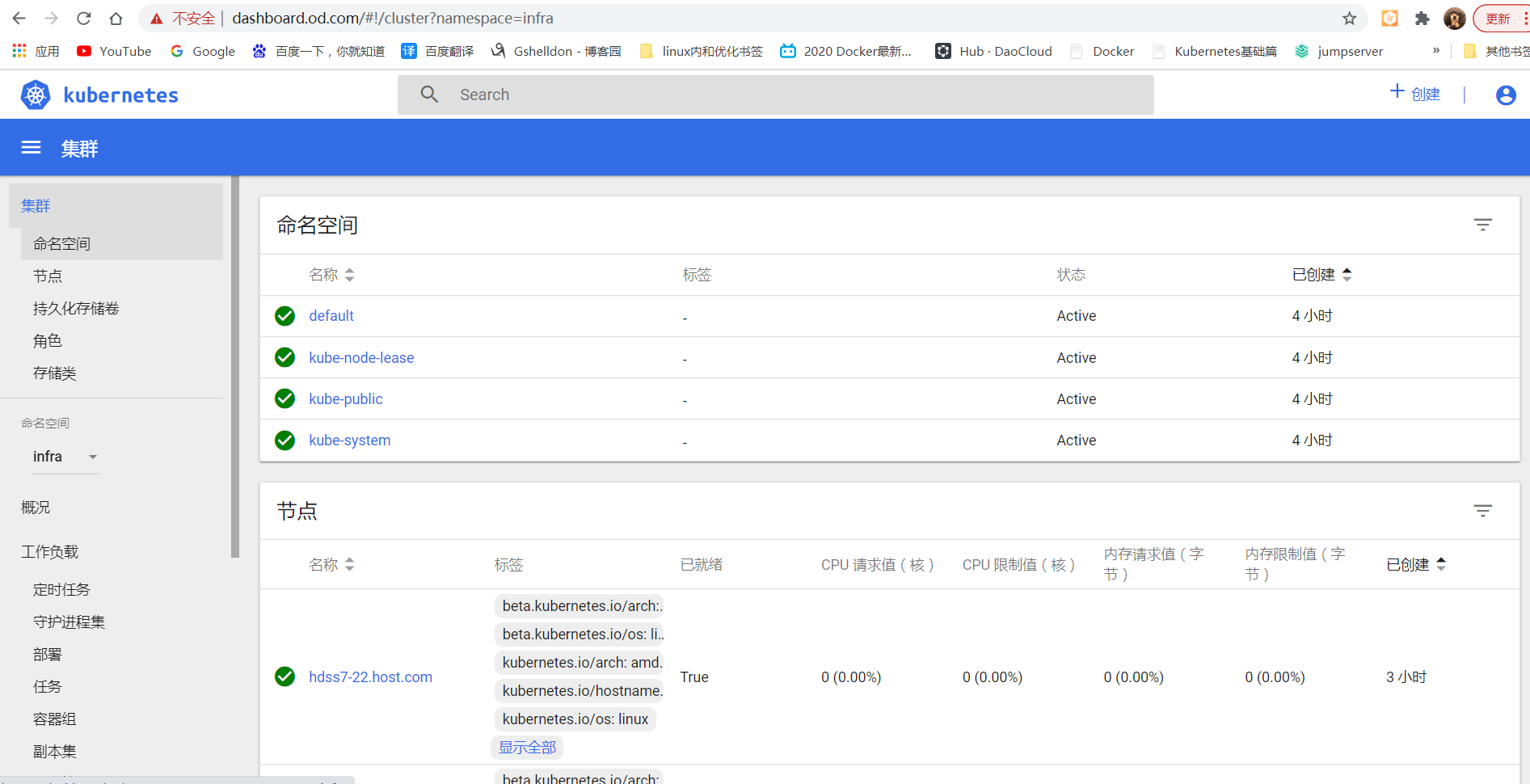

5.4. dashboard

5.4.1. 配置资源清单

清单文件存放到 hdss7-200:/data/k8s-yaml/dashboard/dashboard_1.10.1

- 准备镜像

# 镜像准备

# 因不可描述原因,无法访问k8s.gcr.io,改成registry.aliyuncs.com/google_containers

[root@hdss7-200 ~]# docker image pull registry.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1

[root@hdss7-200 ~]# docker image tag f9aed6605b81 harbor.od.com/public/kubernetes-dashboard-amd64:v1.10.1

[root@hdss7-200 ~]# docker image push harbor.od.com/public/kubernetes-dashboard-amd64:v1.10.1

- rbac.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

addonmanager.kubernetes.io/mode: Reconcile

name: kubernetes-dashboard-admin

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard-admin

namespace: kube-system

labels:

k8s-app: kubernetes-dashboard

addonmanager.kubernetes.io/mode: Reconcile

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard-admin

namespace: kube-system

- deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: kubernetes-dashboard

namespace: kube-system

labels:

k8s-app: kubernetes-dashboard

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

spec:

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ''

spec:

priorityClassName: system-cluster-critical

containers:

- name: kubernetes-dashboard

image: harbor.od.com/public/kubernetes-dashboard-amd64:v1.10.1

resources:

limits:

cpu: 100m

memory: 300Mi

requests:

cpu: 50m

memory: 100Mi

ports:

- containerPort: 8443

protocol: TCP

args:

# PLATFORM-SPECIFIC ARGS HERE

- --auto-generate-certificates

volumeMounts:

- name: tmp-volume

mountPath: /tmp

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

volumes:

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard-admin

tolerations:

- key: "CriticalAddonsOnly"

operator: "Exists"

- service.yaml

apiVersion: v1

kind: Service

metadata:

name: kubernetes-dashboard

namespace: kube-system

labels:

k8s-app: kubernetes-dashboard

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

spec:

selector:

k8s-app: kubernetes-dashboard

ports:

- port: 443

targetPort: 8443

- ingress.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: kubernetes-dashboard

namespace: kube-system

annotations:

kubernetes.io/ingress.class: traefik

spec:

rules:

- host: dashboard.od.com

http:

paths:

- backend:

serviceName: kubernetes-dashboard

servicePort: 443

5.4.2. 交付dashboard到k8s

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/dashboard_1.10.1/rbac.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/dashboard_1.10.1/deployment.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/dashboard_1.10.1/service.yaml

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/dashboard_1.10.1/ingress.yaml

5.4.3. 配置DNS解析

[root@hdss7-11 ~]# vim /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2020011303 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

$TTL 60 ; 1 minute

dns A 10.4.7.11

harbor A 10.4.7.200

k8s-yaml A 10.4.7.200

traefik A 10.4.7.10

dashboard A 10.4.7.10

[root@hdss7-11 ~]# systemctl restart named.service

5.4.4. 签发SSL证书

[root@hdss7-200 ~]# cd /opt/certs/

[root@hdss7-200 certs]# (umask 077; openssl genrsa -out dashboard.od.com.key 2048)

[root@hdss7-200 certs]# openssl req -new -key dashboard.od.com.key -out dashboard.od.com.csr -subj "/CN=dashboard.od.com/C=CN/ST=BJ/L=Beijing/O=OldboyEdu/OU=ops"

[root@hdss7-200 certs]# openssl x509 -req -in dashboard.od.com.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out dashboard.od.com.crt -days 3650

[root@hdss7-200 certs]# ll dashboard.od.com.*

-rw-r--r-- 1 root root 1196 Jan 29 20:52 dashboard.od.com.crt

-rw-r--r-- 1 root root 1005 Jan 29 20:51 dashboard.od.com.csr

-rw------- 1 root root 1675 Jan 29 20:51 dashboard.od.com.key

[root@hdss7-200 certs]# for i in 11 12;do ssh hdss7-$i mkdir /etc/nginx/certs/;scp dashboard.od.com.key dashboard.od.com.crt hdss7-$i:/etc/nginx/certs/ ;done

5.4.5. 配置Nginx

# hdss7-11和hdss7-12都需要操作

[root@hdss7-11 ~]# vim /etc/nginx/conf.d/dashborad.conf

server {

listen 80;

server_name dashboard.od.com;

rewrite ^(.*)$ https://${server_name}$1 permanent;

}

server {

listen 443 ssl;

server_name dashboard.od.com;

ssl_certificate "certs/dashboard.od.com.crt";

ssl_certificate_key "certs/dashboard.od.com.key";

ssl_session_cache shared:SSL:1m;

ssl_session_timeout 10m;

ssl_ciphers HIGH:!aNULL:!MD5;

ssl_prefer_server_ciphers on;

location / {

proxy_pass http://default_backend_traefik;

proxy_set_header Host $http_host;

proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;

}

}

[root@hdss7-11 ~]# nginx -t && nginx -s reload

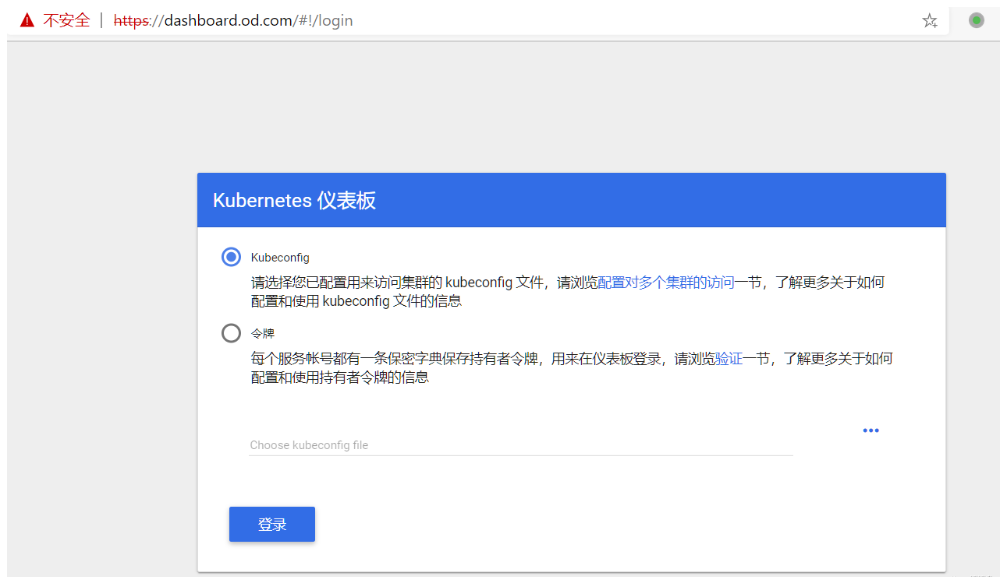

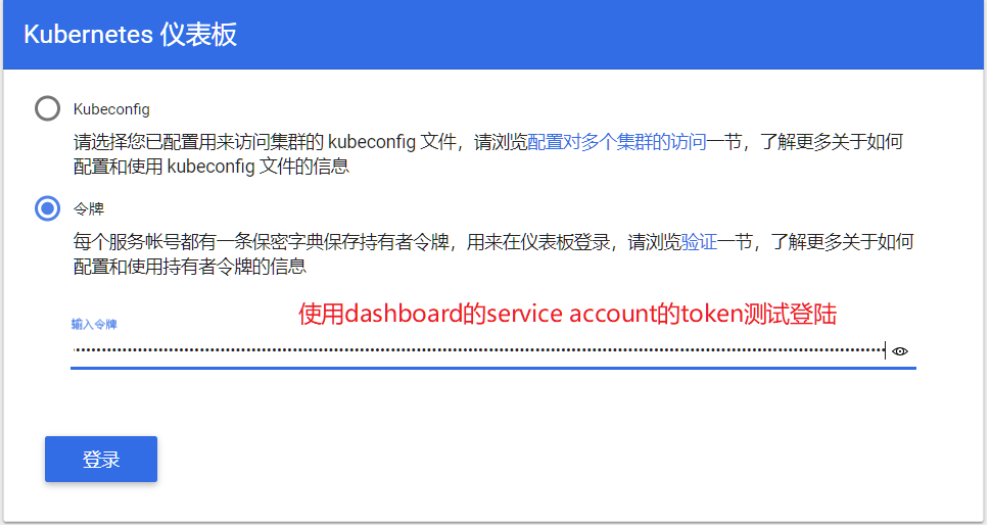

5.4.6. 测试token登陆

[root@hdss7-21 ~]# kubectl get secret -n kube-system|grep kubernetes-dashboard

kubernetes-dashboard-token-hr5rj kubernetes.io/service-account-token 3 17m

[root@hdss7-21 ~]# kubectl describe secret kubernetes-dashboard-admin-token-bsfv7 -n kube-system|grep token

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1ocjVyaiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImZhNzAxZTRmLWVjMGItNDFkNS04NjdmLWY0MGEwYmFkMjFmNSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.SDUZEkH_N0B6rjm6bW_jN03F4pHCPafL3uKD2HU0ksM0oenB2425jxvfi16rUbTRCsfcGqYXRrE2x15gpb03fb3jJy-IhnInUnPrw6ZwEdqWagen_Z4tdFhUgCpdjdShHy40ZPfql_iuVKbvv7ASt8w8v13Ar3FxztyDyLScVO3rNEezT7JUqMI4yj5LYQ0IgpSXoH12tlDSTyX8Rk2a_3QlOM_yT5GB_GEZkwIESttQKVr7HXSCrQ2tEdYA4cYO2AbF1NgAo_CVBNNvZLvdDukWiQ_b5zwOiO0cUbbiu46x_p6gjNWzVb7zHNro4gh0Shr4hIhiRQot2DJ-sq94Ag

二进制部署K8S-3核心插件部署的更多相关文章

- k8s之Dashboard插件部署及使用

k8s之Dashboard插件部署及使用 目录 k8s之Dashboard插件部署及使用 1. Dashboard介绍 2. 服务器环境 3. 在K8S工具目录中创建dashboard工作目录 4. ...

- Elasticsearch学习随笔(一)--原理理解与5.0核心插件部署过程

最近由于要涉及一些安全运维的工作,最近在研究Elasticsearch,为ELK做相关的准备.于是把自己学习的一些随笔分享给大家,进行学习,在部署常用插件的时候由于是5.0版本的Elasticsear ...

- K8S(03)核心插件-Flannel网络插件

系列文章说明 本系列文章,可以基本算是 老男孩2019年王硕的K8S周末班课程 笔记,根据视频来看本笔记最好,否则有些地方会看不明白 需要视频可以联系我 K8S核心网络插件Flannel 目录 系列文 ...

- K8S(05)核心插件-ingress(服务暴露)控制器-traefik

K8S核心插件-ingress(服务暴露)控制器-traefik 1 K8S两种服务暴露方法 前面通过coredns在k8s集群内部做了serviceNAME和serviceIP之间的自动映射,使得不 ...

- K8S(04)核心插件-coredns服务

K8S核心插件-coredns服务 目录 K8S核心插件-coredns服务 1 coredns用途 1.1 为什么需要服务发现 2 coredns的部署 2.1 获取coredns的docker镜像 ...

- 二进制部署k8s

一.二进制部署 k8s集群 1)参考文章 博客: https://blog.qikqiak.com 文章: https://www.qikqiak.com/post/manual-install-hi ...

- 【原】二进制部署 k8s 1.18.3

二进制部署 k8s 1.18.3 1.相关前置信息 1.1 版本信息 kube_version: v1.18.3 etcd_version: v3.4.9 flannel: v0.12.0 cored ...

- 二进制方法-部署k8s集群部署1.18版本

二进制方法-部署k8s集群部署1.18版本 1. 前置知识点 1.1 生产环境可部署kubernetes集群的两种方式 目前生产部署Kubernetes集群主要有两种方式 kuberadm Kubea ...

- 6、二进制安装K8s之部署kubectl

二进制安装K8s之部署kubectl 我们把k8s-master 也设置成node,所以先master上面部署node,在其他机器上部署node也适用,更换名称即可. 1.在所有worker node ...

随机推荐

- [贪心]P1049 装箱问题

装箱问题 题目描述 有一个箱子容量为V(正整数,0≤V≤20000),同时有n个物品(0<n≤30),每个物品有一个体积(正整数). 要求n个物品中,任取若干个装入箱内,使箱子的剩余空间为最小. ...

- C# Linq 延迟查询的执行

在定义linq查询表达式时,查询是不会执行,查询会在迭代数据项时运行.它使用yield return 语句返回谓词为true的元素. var names = new List<string> ...

- HashMap、ConcurrentHashMap 1.7和1.8对比

本篇内容是学习的记录,可能会有所不足. 一:JDK1.7中的HashMap JDK1.7的hashMap是由数组 + 链表组成 /** 1 << 4,表示1,左移4位,变成10000,即1 ...

- Day13_70_join()

join() 方法 * 合并线程 join()线程合并方法出现在哪,就会和哪个线程合并 (此处是thread和主线程合并), * 合并之后变成了单线程,主线程需要等thread线程执行完毕后再执行,两 ...

- Java代理简述

1.什么是代理? 对类或对象(目标对象)进行增强功能,最终形成一个新的代理对象,(Spring Framework中)当应用调用该对象(目标对象)的方法时,实际调用的是代理对象增强后的方法,比如对功能 ...

- Nest 中处理 XML 类型的请求与响应

公众号及小程序的微信接口是通过 xml 格式进行数据交换的. 比如接收普通消息的接口: 当普通微信用户向公众账号发消息时,微信服务器将 POST 消息的 XML 数据包到开发者填写的 URL 上. - ...

- Mybatis(一)Porxy动态代理和sql解析替换

JDK常用核心原理 概述 在 Mybatis 中,常用的作用就是讲数据库中的表的字段映射为对象的属性,在进入Mybatis之前,原生的 JDBC 有几个步骤:导入 JDBC 驱动包,通过 Driver ...

- 04- 移动APP功能测试要点以及具体业务流程测试

5.离线测试: 离线是应用程序在本地的客户端会缓存一部分数据以供程序下次调用. 1.对于一些程序,需要在登录进来后,这是没有网络的情况下可以浏览本地数据. 2.对于无网络时,刷新获取新数据时,不能获取 ...

- 关于Number、parseInt、isNaN转化参数

1.首先,关于NaN的相等判断 alert(NaN==NaN) //返回的是false: 2.isNaN 确定这个参数是否是数值或者是否可以被转化为数值:NaN是not a number 的缩写,所以 ...

- 【swagger】 swagger-ui的升级版swagger-bootstrap-ui

swagger-bootstrap-ui是基于swagger-ui做了一些优化拓展: swagger-ui的界面: swagger-bootstrap-ui界面: 相比于原生的swagger-ui , ...