ceph-csi Source Code Analysis (4): rbd driver controllerserver

For source code analysis of other parts of ceph-csi, see this post: kubernetes ceph-csi analysis index

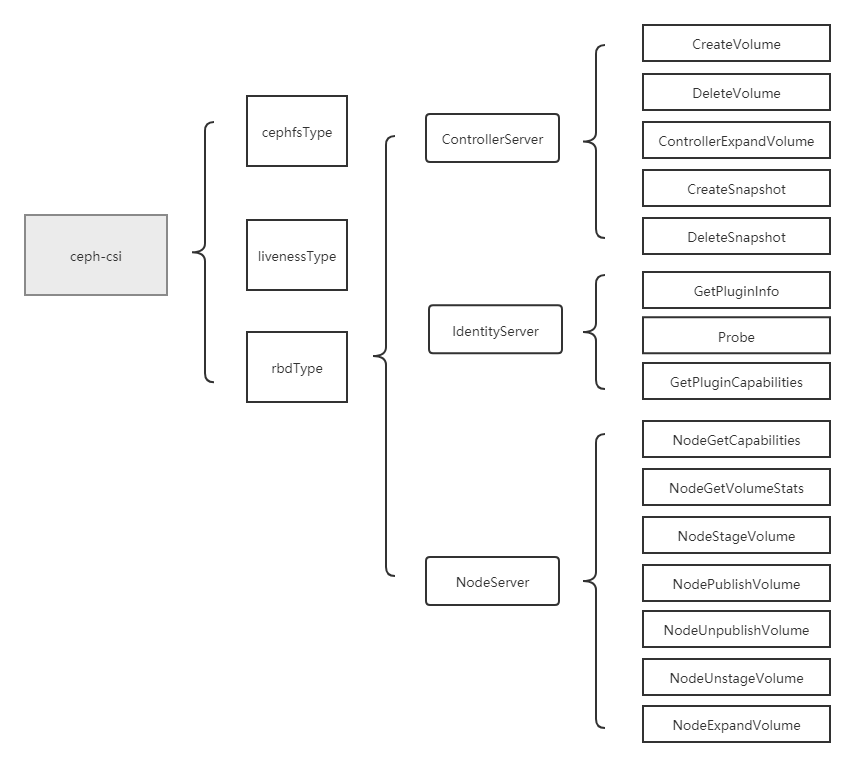

When the ceph-csi component is started with driver type rbd, it starts the rbd driver services. Depending on the controllerserver and nodeserver flags, it then starts either ControllerServer with IdentityServer, or NodeServer with IdentityServer.

Based on tag v3.0.0

https://github.com/ceph/ceph-csi/releases/tag/v3.0.0

The rbd driver analysis is split into four parts: service entry point, controllerserver, nodeserver, and IdentityServer.

This part covers controllerserver. It implements CreateVolume, DeleteVolume, ControllerExpandVolume, CreateSnapshot and DeleteSnapshot. The analysis here focuses on CreateVolume, DeleteVolume and ControllerExpandVolume.

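controllerserver analysis

(1) CreateVolume

Overview

Calls the Ceph storage backend to create a volume (an rbd image).

CreateVolume creates the volume in backend.

Rough steps:

(1) Check whether the driver supports volume creation; if not, return an error immediately;

(2) Build the Ceph credentials and generate the volume ID;

(3) Call the Ceph storage backend to create the volume.

Three creation sources:

(1) Clone from an existing image;

(2) Create an image from a snapshot;

(3) Create a brand-new image.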
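The choice among the three sources can be sketched as a small dispatch function. This is only an illustration: the `volumeSource` type and `pickSource` function are hypothetical names, and the ordering (snapshot first, then parent image, then new image) is taken from the if/else chain in createBackingImage, which this post analyzes later.

```go
package main

import "fmt"

// volumeSource is a hypothetical enum used only in this sketch; ceph-csi
// itself branches on whether rbdSnap and parentVol are nil.
type volumeSource int

const (
	sourceNew volumeSource = iota
	sourceSnapshot
	sourceClone
)

// String makes the enum printable.
func (s volumeSource) String() string {
	switch s {
	case sourceSnapshot:
		return "create image from snapshot"
	case sourceClone:
		return "clone from existing image"
	default:
		return "create new image"
	}
}

// pickSource mirrors the order of the if/else chain in createBackingImage:
// a snapshot source is checked first, then a parent volume, and a brand-new
// image is the fallback.
func pickSource(hasSnapshot, hasParent bool) volumeSource {
	switch {
	case hasSnapshot:
		return sourceSnapshot
	case hasParent:
		return sourceClone
	default:
		return sourceNew
	}
}

func main() {
	fmt.Println(pickSource(true, false))
	fmt.Println(pickSource(false, true))
	fmt.Println(pickSource(false, false))
}
```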

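CreateVolume

Main flow:

(1) Check whether the driver supports volume creation; if not, return an error immediately;

(2) Build the Ceph credentials from the secret (the secret is passed in by the external-provisioner component);

(3) Process the request parameters and convert them into an rbdVol struct: use the clusterID request parameter to look up the matching monitor information in the local Ceph configuration file, and assign both clusterID and monitors to rbdVol; check the imageFeatures request parameter (only layering is supported); use the VolumeCapabilities request parameter to decide whether the in-use check (the multi-node attach check) is enabled when the volume is mounted; the volume size must be a whole number, so a fractional size is rounded up;

(4) Check and acquire the lock (a volume with a given name can undergo only one of create, delete, or expand at a time; this lock enforces the mutual exclusion);

(5) Connect to the Ceph cluster;

(6) Generate RbdImageName and ReservedID, then generate the volume ID;

(7) Call createBackingImage to create the image;

(8) Release the lock.

(Cloning from an existing image and creating an image from a snapshot are skipped for now.)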
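The mutual exclusion in step (4) relies on a non-blocking "try" lock keyed by volume name: a second request for the same name is rejected (with codes.Aborted in CreateVolume) instead of waiting. A minimal sketch of such a lock follows. The `volumeLocks` type here is a simplified stand-in written for this post, not the actual implementation behind cs.VolumeLocks.

```go
package main

import (
	"fmt"
	"sync"
)

// volumeLocks is a simplified, hypothetical stand-in for cs.VolumeLocks:
// a mutex-protected set of names that currently have an operation in flight.
type volumeLocks struct {
	mu    sync.Mutex
	locks map[string]struct{}
}

func newVolumeLocks() *volumeLocks {
	return &volumeLocks{locks: make(map[string]struct{})}
}

// TryAcquire returns false immediately when the name is already held,
// rather than blocking until it becomes free.
func (vl *volumeLocks) TryAcquire(name string) bool {
	vl.mu.Lock()
	defer vl.mu.Unlock()
	if _, held := vl.locks[name]; held {
		return false
	}
	vl.locks[name] = struct{}{}
	return true
}

// Release frees the name so that a later operation can acquire it.
func (vl *volumeLocks) Release(name string) {
	vl.mu.Lock()
	defer vl.mu.Unlock()
	delete(vl.locks, name)
}

func main() {
	vl := newVolumeLocks()
	fmt.Println(vl.TryAcquire("pvc-demo")) // first request gets the lock
	fmt.Println(vl.TryAcquire("pvc-demo")) // concurrent request is rejected
	vl.Release("pvc-demo")
	fmt.Println(vl.TryAcquire("pvc-demo")) // free again after Release
}
```

Rejecting instead of blocking fits the CSI model, where the caller is expected to retry a failed request later.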

//ceph-csi/internal/rbd/controllerserver.go

func (cs *ControllerServer) CreateVolume(ctx context.Context, req *csi.CreateVolumeRequest) (*csi.CreateVolumeResponse, error) {

// (1) Validate the request parameters;

if err := cs.validateVolumeReq(ctx, req); err != nil {

return nil, err

}

// (2) Build the Ceph credentials from the secret;

cr, err := util.NewUserCredentials(req.GetSecrets())

if err != nil {

return nil, status.Error(codes.Internal, err.Error())

}

defer cr.DeleteCredentials()

// (3) Convert the request parameters into an rbdVol struct;

rbdVol, err := cs.parseVolCreateRequest(ctx, req)

if err != nil {

return nil, err

}

defer rbdVol.Destroy()

// Existence and conflict checks

if acquired := cs.VolumeLocks.TryAcquire(req.GetName()); !acquired {

klog.Errorf(util.Log(ctx, util.VolumeOperationAlreadyExistsFmt), req.GetName())

return nil, status.Errorf(codes.Aborted, util.VolumeOperationAlreadyExistsFmt, req.GetName())

}

defer cs.VolumeLocks.Release(req.GetName())

// (4) Connect to the Ceph cluster;

err = rbdVol.Connect(cr)

if err != nil {

klog.Errorf(util.Log(ctx, "failed to connect to volume %v: %v"), rbdVol.RbdImageName, err)

return nil, status.Error(codes.Internal, err.Error())

}

parentVol, rbdSnap, err := checkContentSource(ctx, req, cr)

if err != nil {

return nil, err

}

found, err := rbdVol.Exists(ctx, parentVol)

if err != nil {

return nil, getGRPCErrorForCreateVolume(err)

}

if found {

if rbdSnap != nil {

// check if image depth is reached limit and requires flatten

err = checkFlatten(ctx, rbdVol, cr)

if err != nil {

return nil, err

}

}

return buildCreateVolumeResponse(ctx, req, rbdVol)

}

err = validateRequestedVolumeSize(rbdVol, parentVol, rbdSnap, cr)

if err != nil {

return nil, err

}

err = flattenParentImage(ctx, parentVol, cr)

if err != nil {

return nil, err

}

// (5) Generate the rbd image name and the volume ID;

err = reserveVol(ctx, rbdVol, rbdSnap, cr)

if err != nil {

return nil, status.Error(codes.Internal, err.Error())

}

defer func() {

if err != nil {

if !errors.Is(err, ErrFlattenInProgress) {

errDefer := undoVolReservation(ctx, rbdVol, cr)

if errDefer != nil {

klog.Warningf(util.Log(ctx, "failed undoing reservation of volume: %s (%s)"), req.GetName(), errDefer)

}

}

}

}()

// (6) Call createBackingImage to create the image.

err = cs.createBackingImage(ctx, cr, rbdVol, parentVol, rbdSnap)

if err != nil {

if errors.Is(err, ErrFlattenInProgress) {

return nil, status.Error(codes.Aborted, err.Error())

}

return nil, err

}

volumeContext := req.GetParameters()

volumeContext["pool"] = rbdVol.Pool

volumeContext["journalPool"] = rbdVol.JournalPool

volumeContext["imageName"] = rbdVol.RbdImageName

volume := &csi.Volume{

VolumeId: rbdVol.VolID,

CapacityBytes: rbdVol.VolSize,

VolumeContext: volumeContext,

ContentSource: req.GetVolumeContentSource(),

}

if rbdVol.Topology != nil {

volume.AccessibleTopology =

[]*csi.Topology{

{

Segments: rbdVol.Topology,

},

}

}

return &csi.CreateVolumeResponse{Volume: volume}, nil

}

1 reserveVol

reserveVol generates RbdImageName and ReservedID, and then the volume ID. It calls j.ReserveName to obtain the image name and util.GenerateVolID to generate the volume ID.

//ceph-csi/internal/rbd/rbd_journal.go

// reserveVol is a helper routine to request a rbdVolume name reservation and generate the

// volume ID for the generated name.

func reserveVol(ctx context.Context, rbdVol *rbdVolume, rbdSnap *rbdSnapshot, cr *util.Credentials) error {

var (

err error

)

err = updateTopologyConstraints(rbdVol, rbdSnap)

if err != nil {

return err

}

journalPoolID, imagePoolID, err := util.GetPoolIDs(ctx, rbdVol.Monitors, rbdVol.JournalPool, rbdVol.Pool, cr)

if err != nil {

return err

}

kmsID := ""

if rbdVol.Encrypted {

kmsID = rbdVol.KMS.GetID()

}

j, err := volJournal.Connect(rbdVol.Monitors, cr)

if err != nil {

return err

}

defer j.Destroy()

// Generate the rbd image name

rbdVol.ReservedID, rbdVol.RbdImageName, err = j.ReserveName(

ctx, rbdVol.JournalPool, journalPoolID, rbdVol.Pool, imagePoolID,

rbdVol.RequestName, rbdVol.NamePrefix, "", kmsID)

if err != nil {

return err

}

// Generate the volume ID

rbdVol.VolID, err = util.GenerateVolID(ctx, rbdVol.Monitors, cr, imagePoolID, rbdVol.Pool,

rbdVol.ClusterID, rbdVol.ReservedID, volIDVersion)

if err != nil {

return err

}

util.DebugLog(ctx, "generated Volume ID (%s) and image name (%s) for request name (%s)",

rbdVol.VolID, rbdVol.RbdImageName, rbdVol.RequestName)

return nil

}

1.1 GenerateVolID

GenerateVolID calls vi.ComposeCSIID() to generate the volume ID.

//ceph-csi/internal/util/util.go

func GenerateVolID(ctx context.Context, monitors string, cr *Credentials, locationID int64, pool, clusterID, objUUID string, volIDVersion uint16) (string, error) {

var err error

if locationID == InvalidPoolID {

locationID, err = GetPoolID(monitors, cr, pool)

if err != nil {

return "", err

}

}

// generate the volume ID to return to the CO system

vi := CSIIdentifier{

LocationID: locationID,

EncodingVersion: volIDVersion,

ClusterID: clusterID,

ObjectUUID: objUUID,

}

volID, err := vi.ComposeCSIID()

return volID, err

}

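vi.ComposeCSIID() is the method that builds the volume ID. The ID is the concatenation csi_id_version + length of clusterID + clusterID + poolID + ObjectUUID, joined by '-'. The fixed-width fields and the separators add up to 64 bytes; the clusterID itself comes on top of that, so the full ID is 64 bytes plus len(clusterID).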

//ceph-csi/internal/util/volid.go

/*

ComposeCSIID composes a CSI ID from passed in parameters.

Version 1 of the encoding scheme is as follows,

[csi_id_version=1:4byte] + [-:1byte]

[length of clusterID=1:4byte] + [-:1byte]

[clusterID:36bytes (MAX)] + [-:1byte]

[poolID:16bytes] + [-:1byte]

[ObjectUUID:36bytes]

Total of constant field lengths, including '-' field separators would hence be,

4+1+4+1+1+16+1+36 = 64

*/

func (ci CSIIdentifier) ComposeCSIID() (string, error) {

buf16 := make([]byte, 2)

buf64 := make([]byte, 8)

if (knownFieldSize + len(ci.ClusterID)) > maxVolIDLen {

return "", errors.New("CSI ID encoding length overflow")

}

if len(ci.ObjectUUID) != uuidSize {

return "", errors.New("CSI ID invalid object uuid")

}

binary.BigEndian.PutUint16(buf16, ci.EncodingVersion)

versionEncodedHex := hex.EncodeToString(buf16)

binary.BigEndian.PutUint16(buf16, uint16(len(ci.ClusterID)))

clusterIDLength := hex.EncodeToString(buf16)

binary.BigEndian.PutUint64(buf64, uint64(ci.LocationID))

poolIDEncodedHex := hex.EncodeToString(buf64)

return strings.Join([]string{versionEncodedHex, clusterIDLength, ci.ClusterID,

poolIDEncodedHex, ci.ObjectUUID}, "-"), nil

}
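To make the encoding concrete, here is a standalone sketch that repeats the same hex encoding and join on sample values. The function name `composeID`, the cluster ID, the pool ID and the UUID are all made up for illustration, and the length checks of the real method are left out.

```go
package main

import (
	"encoding/binary"
	"encoding/hex"
	"fmt"
	"strings"
)

// composeID repeats the encoding steps of ComposeCSIID for illustration only.
// It skips the overflow and UUID-length validation of the real method.
func composeID(version uint16, clusterID string, poolID int64, objectUUID string) string {
	buf16 := make([]byte, 2)
	buf64 := make([]byte, 8)

	// 2 bytes, hex-encoded into 4 characters
	binary.BigEndian.PutUint16(buf16, version)
	versionHex := hex.EncodeToString(buf16)

	// length of the cluster ID, again 4 hex characters
	binary.BigEndian.PutUint16(buf16, uint16(len(clusterID)))
	clusterIDLenHex := hex.EncodeToString(buf16)

	// 8 bytes, hex-encoded into 16 characters
	binary.BigEndian.PutUint64(buf64, uint64(poolID))
	poolIDHex := hex.EncodeToString(buf64)

	return strings.Join([]string{versionHex, clusterIDLenHex, clusterID, poolIDHex, objectUUID}, "-")
}

func main() {
	// All values below are sample inputs, not taken from a real cluster.
	id := composeID(1, "ceph-cluster-1", 2, "5e6f7a8b-1234-4abc-9def-0123456789ab")
	fmt.Println(id)
	// prints 0001-000e-ceph-cluster-1-0000000000000002-5e6f7a8b-1234-4abc-9def-0123456789ab
	fmt.Println(len(id))
	// prints 78, i.e. 64 fixed bytes plus the 14-byte cluster ID
}
```

Because the cluster ID length is embedded in the second field, a decoder can split the ID back into its parts even when the cluster ID itself contains '-' characters.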

2 createBackingImage

Main flow:

(1) Call createImage to create the image;

(2) Call j.StoreImageID to store the image ID and related information in the omap.

//ceph-csi/internal/rbd/controllerserver.go

func (cs *ControllerServer) createBackingImage(ctx context.Context, cr *util.Credentials, rbdVol, parentVol *rbdVolume, rbdSnap *rbdSnapshot) error {

var err error

var j = &journal.Connection{}

j, err = volJournal.Connect(rbdVol.Monitors, cr)

if err != nil {

return status.Error(codes.Internal, err.Error())

}

defer j.Destroy()

// nolint:gocritic // this ifElseChain can not be rewritten to a switch statement

if rbdSnap != nil {

if err = cs.OperationLocks.GetRestoreLock(rbdSnap.SnapID); err != nil {

klog.Error(util.Log(ctx, err.Error()))

return status.Error(codes.Aborted, err.Error())

}

defer cs.OperationLocks.ReleaseRestoreLock(rbdSnap.SnapID)

err = cs.createVolumeFromSnapshot(ctx, cr, rbdVol, rbdSnap.SnapID)

if err != nil {

return err

}

util.DebugLog(ctx, "created volume %s from snapshot %s", rbdVol.RequestName, rbdSnap.RbdSnapName)

} else if parentVol != nil {

if err = cs.OperationLocks.GetCloneLock(parentVol.VolID); err != nil {

klog.Error(util.Log(ctx, err.Error()))

return status.Error(codes.Aborted, err.Error())

}

defer cs.OperationLocks.ReleaseCloneLock(parentVol.VolID)

return rbdVol.createCloneFromImage(ctx, parentVol)

} else {

err = createImage(ctx, rbdVol, cr)

if err != nil {

klog.Errorf(util.Log(ctx, "failed to create volume: %v"), err)

return status.Error(codes.Internal, err.Error())

}

}

util.DebugLog(ctx, "created volume %s backed by image %s", rbdVol.RequestName, rbdVol.RbdImageName)

defer func() {

if err != nil {

if !errors.Is(err, ErrFlattenInProgress) {

if deleteErr := deleteImage(ctx, rbdVol, cr); deleteErr != nil {

klog.Errorf(util.Log(ctx, "failed to delete rbd image: %s with error: %v"), rbdVol, deleteErr)

}

}

}

}()

err = rbdVol.getImageID()

if err != nil {

klog.Errorf(util.Log(ctx, "failed to get volume id %s: %v"), rbdVol, err)

return status.Error(codes.Internal, err.Error())

}

err = j.StoreImageID(ctx, rbdVol.JournalPool, rbdVol.ReservedID, rbdVol.ImageID, cr)

if err != nil {

klog.Errorf(util.Log(ctx, "failed to reserve volume %s: %v"), rbdVol, err)

return status.Error(codes.Internal, err.Error())

}

if rbdSnap != nil {

err = rbdVol.flattenRbdImage(ctx, cr, false, rbdHardMaxCloneDepth, rbdSoftMaxCloneDepth)

if err != nil {

klog.Errorf(util.Log(ctx, "failed to flatten image %s: %v"), rbdVol, err)

return err

}

}

if rbdVol.Encrypted {

err = rbdVol.ensureEncryptionMetadataSet(rbdImageRequiresEncryption)

if err != nil {

klog.Errorf(util.Log(ctx, "failed to save encryption status, deleting image %s: %s"),

rbdVol, err)

return status.Error(codes.Internal, err.Error())

}

}

return nil

}

2.1 StoreImageID

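StoreImageID calls setOMapKeys to write the ImageID into the omap of the object named after the ReservedID (cephUUIDDirectoryPrefix + reservedUUID), under the key csiImageIDKey.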

//ceph-csi/internal/journal/voljournal.go

// StoreImageID stores the image ID in omap.

func (conn *Connection) StoreImageID(ctx context.Context, pool, reservedUUID, imageID string, cr *util.Credentials) error {

err := setOMapKeys(ctx, conn, pool, conn.config.namespace, conn.config.cephUUIDDirectoryPrefix+reservedUUID,

map[string]string{conn.config.csiImageIDKey: imageID})

if err != nil {

return err

}

return nil

}

//ceph-csi/internal/journal/omap.go

func setOMapKeys(

ctx context.Context,

conn *Connection,

poolName, namespace, oid string, pairs map[string]string) error {

// fetch and configure the rados ioctx

ioctx, err := conn.conn.GetIoctx(poolName)

if err != nil {

return omapPoolError(err)

}

defer ioctx.Destroy()

if namespace != "" {

ioctx.SetNamespace(namespace)

}

bpairs := make(map[string][]byte, len(pairs))

for k, v := range pairs {

bpairs[k] = []byte(v)

}

err = ioctx.SetOmap(oid, bpairs)

if err != nil {

klog.Errorf(

util.Log(ctx, "failed setting omap keys (pool=%q, namespace=%q, name=%q, pairs=%+v): %v"),

poolName, namespace, oid, pairs, err)

return err

}

util.DebugLog(ctx, "set omap keys (pool=%q, namespace=%q, name=%q): %+v)",

poolName, namespace, oid, pairs)

return nil

}

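(2) DeleteVolume

Overview

Calls the Ceph storage backend to delete a volume (an rbd image).

DeleteVolume deletes the volume in backend and removes the volume metadata from store

Rough steps:

(1) Check whether the driver supports volume deletion; if not, return an error immediately;

(2) Check whether the image is still in use (whether it has watchers);

(3) If it is not in use, call the Ceph storage backend to delete the image.

DeleteVolume

Main flow:

(1) Check whether the driver supports volume deletion; if not, return an error immediately;

(2) Build the Ceph credentials from the secret (the secret is passed in by the external-provisioner component);

(3) Get the volumeID from the request;

(4) Convert the request parameters into an rbdVol struct;

(5) Check whether the rbd image is still in use; if it is, return an error;

(6) Delete the temporary rbd image (the temporary image created by a clone operation);

(7) Delete the rbd image.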

//ceph-csi/internal/rbd/controllerserver.go

func (cs *ControllerServer) DeleteVolume(ctx context.Context, req *csi.DeleteVolumeRequest) (*csi.DeleteVolumeResponse, error) {

// (1) Validate the request parameters;

if err := cs.Driver.ValidateControllerServiceRequest(csi.ControllerServiceCapability_RPC_CREATE_DELETE_VOLUME); err != nil {

klog.Errorf(util.Log(ctx, "invalid delete volume req: %v"), protosanitizer.StripSecrets(req))

return nil, err

}

// (2) Build the Ceph credentials from the secret;

cr, err := util.NewUserCredentials(req.GetSecrets())

if err != nil {

return nil, status.Error(codes.Internal, err.Error())

}

defer cr.DeleteCredentials()

// (3) Get the volumeID from the request;

// For now the image get unconditionally deleted, but here retention policy can be checked

volumeID := req.GetVolumeId()

if volumeID == "" {

return nil, status.Error(codes.InvalidArgument, "empty volume ID in request")

}

if acquired := cs.VolumeLocks.TryAcquire(volumeID); !acquired {

klog.Errorf(util.Log(ctx, util.VolumeOperationAlreadyExistsFmt), volumeID)

return nil, status.Errorf(codes.Aborted, util.VolumeOperationAlreadyExistsFmt, volumeID)

}

defer cs.VolumeLocks.Release(volumeID)

// lock out volumeID for clone and expand operation

if err = cs.OperationLocks.GetDeleteLock(volumeID); err != nil {

klog.Error(util.Log(ctx, err.Error()))

return nil, status.Error(codes.Aborted, err.Error())

}

defer cs.OperationLocks.ReleaseDeleteLock(volumeID)

rbdVol := &rbdVolume{}

defer rbdVol.Destroy()

// (4) Convert the request parameters into an rbdVol struct;

rbdVol, err = genVolFromVolID(ctx, volumeID, cr, req.GetSecrets())

if err != nil {

if errors.Is(err, util.ErrPoolNotFound) {

klog.Warningf(util.Log(ctx, "failed to get backend volume for %s: %v"), volumeID, err)

return &csi.DeleteVolumeResponse{}, nil

}

// if error is ErrKeyNotFound, then a previous attempt at deletion was complete

// or partially complete (image and imageOMap are garbage collected already), hence return

// success as deletion is complete

if errors.Is(err, util.ErrKeyNotFound) {

klog.Warningf(util.Log(ctx, "Failed to volume options for %s: %v"), volumeID, err)

return &csi.DeleteVolumeResponse{}, nil

}

// All errors other than ErrImageNotFound should return an error back to the caller

if !errors.Is(err, ErrImageNotFound) {

return nil, status.Error(codes.Internal, err.Error())

}

// If error is ErrImageNotFound then we failed to find the image, but found the imageOMap

// to lead us to the image, hence the imageOMap needs to be garbage collected, by calling

// unreserve for the same

if acquired := cs.VolumeLocks.TryAcquire(rbdVol.RequestName); !acquired {

klog.Errorf(util.Log(ctx, util.VolumeOperationAlreadyExistsFmt), rbdVol.RequestName)

return nil, status.Errorf(codes.Aborted, util.VolumeOperationAlreadyExistsFmt, rbdVol.RequestName)

}

defer cs.VolumeLocks.Release(rbdVol.RequestName)

if err = undoVolReservation(ctx, rbdVol, cr); err != nil {

return nil, status.Error(codes.Internal, err.Error())

}

return &csi.DeleteVolumeResponse{}, nil

}

defer rbdVol.Destroy()

// lock out parallel create requests against the same volume name as we

// cleanup the image and associated omaps for the same

if acquired := cs.VolumeLocks.TryAcquire(rbdVol.RequestName); !acquired {

klog.Errorf(util.Log(ctx, util.VolumeOperationAlreadyExistsFmt), rbdVol.RequestName)

return nil, status.Errorf(codes.Aborted, util.VolumeOperationAlreadyExistsFmt, rbdVol.RequestName)

}

defer cs.VolumeLocks.Release(rbdVol.RequestName)

// (5) Check whether the rbd image is still in use; if it is, return an error;

inUse, err := rbdVol.isInUse()

if err != nil {

klog.Errorf(util.Log(ctx, "failed getting information for image (%s): (%s)"), rbdVol, err)

return nil, status.Error(codes.Internal, err.Error())

}

if inUse {

klog.Errorf(util.Log(ctx, "rbd %s is still being used"), rbdVol)

return nil, status.Errorf(codes.Internal, "rbd %s is still being used", rbdVol.RbdImageName)

}

// (6) Delete the temporary rbd image;

// delete the temporary rbd image created as part of volume clone during

// create volume

tempClone := rbdVol.generateTempClone()

err = deleteImage(ctx, tempClone, cr)

if err != nil {

// return error if it is not ErrImageNotFound

if !errors.Is(err, ErrImageNotFound) {

klog.Errorf(util.Log(ctx, "failed to delete rbd image: %s with error: %v"),

tempClone, err)

return nil, status.Error(codes.Internal, err.Error())

}

}

// (7) Delete the rbd image.

// Deleting rbd image

util.DebugLog(ctx, "deleting image %s", rbdVol.RbdImageName)

if err = deleteImage(ctx, rbdVol, cr); err != nil {

klog.Errorf(util.Log(ctx, "failed to delete rbd image: %s with error: %v"),

rbdVol, err)

return nil, status.Error(codes.Internal, err.Error())

}

if err = undoVolReservation(ctx, rbdVol, cr); err != nil {

klog.Errorf(util.Log(ctx, "failed to remove reservation for volume (%s) with backing image (%s) (%s)"),

rbdVol.RequestName, rbdVol.RbdImageName, err)

return nil, status.Error(codes.Internal, err.Error())

}

if rbdVol.Encrypted {

if err = rbdVol.KMS.DeletePassphrase(rbdVol.VolID); err != nil {

klog.Warningf(util.Log(ctx, "failed to clean the passphrase for volume %s: %s"), rbdVol.VolID, err)

}

}

return &csi.DeleteVolumeResponse{}, nil

}

isInUse

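isInUse lists the watchers of the image to decide whether it is in use. Because isInUse itself opens the image, there is always at least one watcher: it returns false when exactly one watcher is present, and true otherwise.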

//ceph-csi/internal/rbd/rbd_util.go

func (rv *rbdVolume) isInUse() (bool, error) {

image, err := rv.open()

if err != nil {

if errors.Is(err, ErrImageNotFound) || errors.Is(err, util.ErrPoolNotFound) {

return false, err

}

// any error should assume something else is using the image

return true, err

}

defer image.Close()

watchers, err := image.ListWatchers()

if err != nil {

return false, err

}

// because we opened the image, there is at least one watcher

return len(watchers) != 1, nil

}

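(3) ControllerExpandVolume

Overview

Calls the Ceph storage backend to expand a volume (an rbd image).

ControllerExpandVolume expand RBD Volumes on demand based on resizer request.

Rough steps:

(1) Check whether the driver supports volume expansion; if not, return an error immediately;

(2) Call the Ceph storage backend to expand the image.

In practice, volume expansion takes two major steps. First, the external-resizer component calls the ceph-csi instance running as rbdType-ControllerServer to perform ControllerExpandVolume, which expands the underlying storage. Second, kubelet calls the ceph-csi instance running as rbdType-NodeServer to perform NodeExpandVolume, which propagates the new size of the rbd image to the rbd/nbd device and grows the xfs/ext filesystem (when the volume mode is block, no node-side expansion is needed).

ControllerExpandVolume

Main flow:

(1) Check whether the driver supports volume expansion; if not, return an error immediately;

(2) Build the Ceph credentials from the secret (the secret is passed in by the external-resizer component);

(3) Convert the request parameters into an rbdVol struct;

(4) Call rbdVol.resize to expand the image.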

func (cs *ControllerServer) ControllerExpandVolume(ctx context.Context, req *csi.ControllerExpandVolumeRequest) (*csi.ControllerExpandVolumeResponse, error) {

if err := cs.Driver.ValidateControllerServiceRequest(csi.ControllerServiceCapability_RPC_EXPAND_VOLUME); err != nil {

klog.Errorf(util.Log(ctx, "invalid expand volume req: %v"), protosanitizer.StripSecrets(req))

return nil, err

}

volID := req.GetVolumeId()

if volID == "" {

return nil, status.Error(codes.InvalidArgument, "volume ID cannot be empty")

}

capRange := req.GetCapacityRange()

if capRange == nil {

return nil, status.Error(codes.InvalidArgument, "capacityRange cannot be empty")

}

// lock out parallel requests against the same volume ID

if acquired := cs.VolumeLocks.TryAcquire(volID); !acquired {

klog.Errorf(util.Log(ctx, util.VolumeOperationAlreadyExistsFmt), volID)

return nil, status.Errorf(codes.Aborted, util.VolumeOperationAlreadyExistsFmt, volID)

}

defer cs.VolumeLocks.Release(volID)

// lock out volumeID for clone and delete operation

if err := cs.OperationLocks.GetExpandLock(volID); err != nil {

klog.Error(util.Log(ctx, err.Error()))

return nil, status.Error(codes.Aborted, err.Error())

}

defer cs.OperationLocks.ReleaseExpandLock(volID)

cr, err := util.NewUserCredentials(req.GetSecrets())

if err != nil {

return nil, status.Error(codes.Internal, err.Error())

}

defer cr.DeleteCredentials()

rbdVol := &rbdVolume{}

defer rbdVol.Destroy()

rbdVol, err = genVolFromVolID(ctx, volID, cr, req.GetSecrets())

if err != nil {

// nolint:gocritic // this ifElseChain can not be rewritten to a switch statement

if errors.Is(err, ErrImageNotFound) {

err = status.Errorf(codes.NotFound, "volume ID %s not found", volID)

} else if errors.Is(err, util.ErrPoolNotFound) {

klog.Errorf(util.Log(ctx, "failed to get backend volume for %s: %v"), volID, err)

err = status.Errorf(codes.NotFound, err.Error())

} else {

err = status.Errorf(codes.Internal, err.Error())

}

return nil, err

}

defer rbdVol.Destroy()

if rbdVol.Encrypted {

return nil, status.Errorf(codes.InvalidArgument, "encrypted volumes do not support resize (%s)",

rbdVol)

}

// always round up the request size in bytes to the nearest MiB/GiB

volSize := util.RoundOffBytes(req.GetCapacityRange().GetRequiredBytes())

// resize volume if required

nodeExpansion := false

if rbdVol.VolSize < volSize {

util.DebugLog(ctx, "rbd volume %s size is %v,resizing to %v", rbdVol, rbdVol.VolSize, volSize)

rbdVol.VolSize = volSize

nodeExpansion = true

err = rbdVol.resize(ctx, cr)

if err != nil {

klog.Errorf(util.Log(ctx, "failed to resize rbd image: %s with error: %v"), rbdVol, err)

return nil, status.Error(codes.Internal, err.Error())

}

}

return &csi.ControllerExpandVolumeResponse{

CapacityBytes: rbdVol.VolSize,

NodeExpansionRequired: nodeExpansion,

}, nil

}
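The size handling at the end of ControllerExpandVolume can be sketched as follows. The rounding rule in `roundOffBytes` is an assumption based on the code comment "round up the request size in bytes to the nearest MiB/GiB" (sizes below 1 GiB go up to the next MiB, larger sizes up to the next GiB); it is not a copy of util.RoundOffBytes. `planExpand` is a hypothetical helper that only exists in this sketch.

```go
package main

import "fmt"

const (
	miB int64 = 1 << 20
	giB int64 = 1 << 30
)

// roundOffBytes is an assumed approximation of util.RoundOffBytes:
// round up to a whole MiB below 1 GiB, and to a whole GiB otherwise.
func roundOffBytes(b int64) int64 {
	unit := giB
	if b < giB {
		unit = miB
	}
	return (b + unit - 1) / unit * unit
}

// planExpand returns the size the volume should have after the request and
// whether a resize (and therefore a node-side expansion) is needed. A request
// that does not exceed the current size leaves the volume untouched.
func planExpand(currentSize, requestedBytes int64) (int64, bool) {
	target := roundOffBytes(requestedBytes)
	if currentSize < target {
		return target, true
	}
	return currentSize, false
}

func main() {
	size, grow := planExpand(1*giB, 1*giB+1)
	fmt.Println(size/giB, grow) // prints: 2 true

	size, grow = planExpand(2*giB, 1*giB)
	fmt.Println(size/giB, grow) // prints: 2 false
}
```

The second call shows why the RPC is safe to repeat: when the image is already large enough, nothing is resized and NodeExpansionRequired stays false.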

rbdVol.resize

Builds the rbd resize command and executes it.

func (rv *rbdVolume) resize(ctx context.Context, cr *util.Credentials) error {

mon := rv.Monitors

volSzMiB := fmt.Sprintf("%dM", util.RoundOffVolSize(rv.VolSize))

args := []string{"resize", rv.String(), "--size", volSzMiB, "--id", cr.ID, "-m", mon, "--keyfile=" + cr.KeyFile}

_, stderr, err := util.ExecCommand(ctx, "rbd", args...)

if err != nil {

return fmt.Errorf("failed to resize rbd image (%w), command output: %s", err, stderr)

}

return nil

}

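This completes the analysis of the rbd driver controllerserver. A summary follows.

rbd driver controllerserver analysis summary

(1) controllerserver implements CreateVolume, DeleteVolume, ControllerExpandVolume, CreateSnapshot and DeleteSnapshot. This post analyzed CreateVolume, DeleteVolume and ControllerExpandVolume, which do the following:

CreateVolume: calls the Ceph storage backend to create a volume (an rbd image).

DeleteVolume: calls the Ceph storage backend to delete a volume (an rbd image).

ControllerExpandVolume: calls the Ceph storage backend to expand a volume (an rbd image).

(2) Volume expansion takes two major steps. First, the external-resizer component calls the ceph-csi instance running as rbdType-ControllerServer to perform ControllerExpandVolume, which expands the underlying storage. Second, kubelet calls the ceph-csi instance running as rbdType-NodeServer to perform NodeExpandVolume, which propagates the new size of the rbd image to the rbd/nbd device and grows the xfs/ext filesystem (when the volume mode is block, no node-side expansion is needed).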
