LVM Study Notes

LVM Logical Volume Manager

Volume management creates a layer of abstraction over physical storage, allowing you to create logical storage volumes.

Logical volumes provide the following advantages over using physical storage directly:

Flexible capacity

When using logical volumes, file systems can extend across multiple disks, since you can aggregate disks and partitions into a single logical volume.

Resizeable storage pools

You can extend logical volumes or reduce logical volumes in size with simple software commands, without reformatting and repartitioning the underlying disk devices.

Online data relocation

To deploy newer, faster, or more resilient storage subsystems, you can move data while your system is active. Data can be rearranged on disks while the disks are in use. For example, you can empty a hot-swappable disk before removing it.

Convenient device naming

Logical storage volumes can be managed in user-defined groups, which you can name according to your convenience.

Disk striping

You can create a logical volume that stripes data across two or more disks. This can dramatically increase throughput.

Mirroring volumes

Logical volumes provide a convenient way to configure a mirror for your data.

Volume Snapshots

Using logical volumes, you can take device snapshots for consistent backups or to test the effect of changes without affecting the real data.

LVM

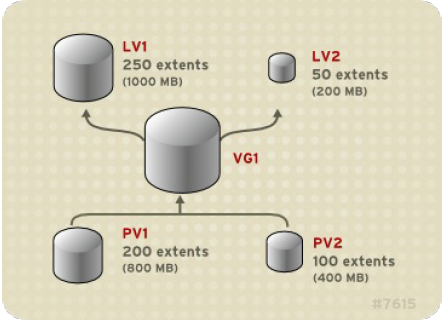

The underlying physical storage unit of an LVM logical volume is a block device such as a partition or whole disk.

This device is initialized as an LVM physical volume (PV).

To create an LVM logical volume, the physical volumes are combined into a volume group (VG).

This creates a pool of disk space out of which LVM logical volumes (LVs) can be allocated.

CLVM

The Clustered Logical Volume Manager (CLVM) is a set of clustering extensions to LVM.

These extensions allow a cluster of computers to manage shared storage (for example, on a SAN) using LVM.

In order to use CLVM, the High Availability Add-On and Resilient Storage Add-On software, including the clvmd daemon, must be running.

The clvmd daemon is the key clustering extension to LVM.

The clvmd daemon runs in each cluster computer and distributes LVM metadata updates in a cluster, presenting each cluster computer with the same view of the logical volumes.

LVM Components : Physical Volumes

The underlying physical storage unit of an LVM logical volume is a block device such as a partition or whole disk.

To use the device for an LVM logical volume the device must be initialized as a physical volume (PV).

Initializing a block device as a physical volume places a label near the start of the device.

By default, the LVM label is placed in the second 512-byte sector.

An LVM label provides correct identification and device ordering for a physical device, since devices can come up in any order when the system is booted. An LVM label remains persistent across reboots and throughout a cluster.

The LVM label identifies the device as an LVM physical volume. It contains a random unique identifier (the UUID) for the physical volume. It also stores the size of the block device in bytes, and it records where the LVM metadata will be stored on the device.

The LVM metadata contains the configuration details of the LVM volume groups on your system.

By default, an identical copy of the metadata is maintained in every metadata area in every physical volume within the volume group. LVM metadata is small and stored as ASCII.

LVM allows you to create physical volumes out of disk partitions.

It is generally recommended that you create a single partition that covers the whole disk to label as an LVM physical volume for the following reasons:

–Administrative convenience: It is easier to keep track of the hardware in a system if each real disk only appears once. This becomes particularly true if a disk fails. In addition, multiple physical volumes on a single disk may cause a kernel warning about unknown partition types at boot-up.

–Striping performance: LVM cannot tell that two physical volumes are on the same physical disk. If you create a striped logical volume when two physical volumes are on the same physical disk, the stripes could be on different partitions on the same disk. This would result in a decrease in performance rather than an increase.

LVM Components : Volume Groups

Physical volumes are combined into volume groups (VGs).

This creates a pool of disk space out of which logical volumes can be allocated.

Within a volume group, the disk space available for allocation is divided into units of a fixed-size called extents.

An extent is the smallest unit of space that can be allocated.

Within a physical volume, extents are referred to as physical extents.

A logical volume is allocated into logical extents of the same size as the physical extents.

The extent size is thus the same for all logical volumes in the volume group.

The volume group maps the logical extents to physical extents.

LVM Components : LVM Logical Volumes

In LVM, a volume group is divided up into logical volumes.

There are three types of LVM logical volumes:

linear volumes, striped volumes, and mirrored volumes

Linear Volumes

A linear volume aggregates space from one or more physical volumes into one logical volume.

For example, if you have two 60GB disks, you can create a 120GB logical volume. The physical storage is concatenated.

Creating a linear volume assigns a range of physical extents to an area of a logical volume in order.

For example, logical extents 1 to 99 could map to one physical volume and logical extents 100 to 198 could map to a second physical volume.

The physical volumes that make up a logical volume do not have to be the same size.

You can configure more than one linear logical volume of whatever size you require from the pool of physical extents.
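The linear mapping described above can be sketched in a few lines of Python. This is an illustration only, not LVM's implementation: the `Segment` type and `map_extent` function are hypothetical names, the device names are made up, and extents are numbered from 0 here.

```python
from typing import NamedTuple

class Segment(NamedTuple):
    """A contiguous run of logical extents backed by one physical volume."""
    le_start: int   # first logical extent of the segment
    count: int      # number of extents in the segment
    pv: str         # physical volume backing the segment (hypothetical name)
    pe_start: int   # first physical extent used on that PV

def map_extent(segments, le):
    """Return (pv, physical_extent) for logical extent `le`."""
    for seg in segments:
        if seg.le_start <= le < seg.le_start + seg.count:
            return seg.pv, seg.pe_start + (le - seg.le_start)
    raise ValueError(f"logical extent {le} is outside the volume")

# Two physical volumes concatenated into one linear volume of 198 extents.
linear_lv = [
    Segment(le_start=0, count=99, pv="/dev/sda1", pe_start=0),
    Segment(le_start=99, count=99, pv="/dev/sdb1", pe_start=0),
]

print(map_extent(linear_lv, 98))   # ('/dev/sda1', 98)
print(map_extent(linear_lv, 99))   # ('/dev/sdb1', 0)
```

The two segments do not need the same length, which mirrors the point that the physical volumes behind a linear volume can differ in size.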

Striped Logical Volumes

When you write data to an LVM logical volume, the file system lays the data out across the underlying physical volumes.

You can control the way the data is written to the physical volumes by creating a striped logical volume.

For large sequential reads and writes, this can improve the efficiency of the data I/O.

Striping enhances performance by writing data to a predetermined number of physical volumes in round robin fashion.

–the first stripe of data is written to PV1

–the second stripe of data is written to PV2

–the third stripe of data is written to PV3

–the fourth stripe of data is written to PV1
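The round-robin placement listed above can be modelled with simple modular arithmetic. The following Python sketch is an assumption-laden illustration rather than LVM code: `stripe_target` and `locate` are hypothetical helper names, and the 64 KiB stripe size is just an example value.

```python
def stripe_target(stripe_index, pvs):
    """Return the PV that receives the given stripe (0-based index)."""
    return pvs[stripe_index % len(pvs)]

def locate(offset, stripe_size, pvs):
    """Map a byte offset in the logical volume to (pv, byte offset on that PV)."""
    stripe_index = offset // stripe_size
    row = stripe_index // len(pvs)          # how many full rounds came before
    pv = stripe_target(stripe_index, pvs)
    return pv, row * stripe_size + offset % stripe_size

pvs = ["PV1", "PV2", "PV3"]
layout = [stripe_target(i, pvs) for i in range(4)]
print(layout)  # ['PV1', 'PV2', 'PV3', 'PV1']

STRIPE = 64 * 1024  # example stripe size in bytes
print(locate(3 * STRIPE + 10, STRIPE, pvs))  # ('PV1', 65546)
```

Consecutive stripes land on different devices, which is why large sequential I/O can be served by several disks at once.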

In a striped logical volume, the size of the stripe cannot exceed the size of an extent.

Striped logical volumes can be extended by concatenating another set of devices onto the end of the first set.

In order to extend a striped logical volume, however, there must be enough free space on the underlying physical volumes that make up the volume group to support the stripe.

For example, if you have a two-way stripe that uses up an entire volume group, adding a single physical volume to the volume group will not enable you to extend the stripe.

Instead, you must add at least two physical volumes to the volume group.

Mirrored Logical Volumes

A mirror maintains identical copies of data on different devices.

When data is written to one device, it is written to a second device as well, mirroring the data.

This provides protection for device failures. When one leg of a mirror fails, the logical volume becomes a linear volume and can still be accessed.

LVM supports mirrored volumes. When you create a mirrored logical volume, LVM ensures that data written to an underlying physical volume is mirrored onto a separate physical volume.

With LVM, you can create mirrored logical volumes with multiple mirrors.

An LVM mirror divides the device being copied into regions that are typically 512KB in size.

LVM maintains a small log which it uses to keep track of which regions are in sync with the mirror or mirrors.

This log can be kept on disk, which will keep it persistent across reboots, or it can be maintained in memory.
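As a rough mental model of the region log, the sketch below tracks which 512 KB regions are out of sync after a write. It is a simplified illustration in Python, not the on-disk or in-memory format LVM uses; the `MirrorLog` class and its method names are hypothetical.

```python
REGION_SIZE = 512 * 1024  # bytes, the typical region size mentioned above

class MirrorLog:
    """Toy model: remember which regions still need to be copied to the mirror."""

    def __init__(self, device_size):
        # Number of regions, rounding up for a partial last region.
        self.regions = -(-device_size // REGION_SIZE)
        self.out_of_sync = set()

    def mark_write(self, offset, length):
        """Record that a write touched every region in [offset, offset + length)."""
        first = offset // REGION_SIZE
        last = (offset + length - 1) // REGION_SIZE
        self.out_of_sync.update(range(first, last + 1))

    def mark_synced(self, region):
        """Record that a region has been copied to all mirror legs."""
        self.out_of_sync.discard(region)

    def in_sync(self):
        return not self.out_of_sync

log = MirrorLog(device_size=10 * 1024 * 1024)         # 10 MiB device, 20 regions
log.mark_write(offset=500 * 1024, length=24 * 1024)   # write crosses a region boundary
print(sorted(log.out_of_sync))  # [0, 1]
```

Only the regions recorded in the log need to be resynchronized, so a persistent log avoids a full resync after a reboot.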

RAID Logical Volumes

Thinly-Provisioned Logical Volumes (Thin Volumes)

Logical volumes can be thinly provisioned. This allows you to create logical volumes that are larger than the available extents.

By using thin provisioning, a storage administrator can over-commit the physical storage, often avoiding the need to purchase additional storage.

For example, if ten users each request a 100GB file system for their application, the storage administrator can create what appears to be a 100GB file system for each user but which is backed by less actual storage that is used only when needed.

When using thin provisioning, it is important that the storage administrator monitor the storage pool and add more capacity if it starts to become full.
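The over-commitment in the ten-user example can be put into numbers. The Python sketch below is illustrative only: the 400 GiB pool size, the 80% alert threshold, and the helper names are assumptions, not values from LVM or from the text above.

```python
GIB = 1024 ** 3

def overcommit_ratio(virtual_sizes, pool_size):
    """Total virtual size promised to users divided by the real pool size."""
    return sum(virtual_sizes) / pool_size

def pool_needs_attention(used, pool_size, threshold=0.8):
    """True when pool usage reaches the (assumed) alert threshold."""
    return used / pool_size >= threshold

virtual = [100 * GIB] * 10      # ten users, 100 GiB each
pool = 400 * GIB                # assumed physical pool size

print(overcommit_ratio(virtual, pool))            # 2.5
print(pool_needs_attention(330 * GIB, pool))      # True
```

A ratio above 1 means the pool cannot hold every volume at full size, which is why monitoring pool usage matters.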

To make sure that all available space can be used, LVM supports data discard. This allows for re-use of the space that was formerly used by a discarded file or other block range.

Snapshot Volumes

The LVM snapshot feature provides the ability to create virtual images of a device at a particular instant without causing a service interruption.

When a change is made to the original device (the origin) after a snapshot is taken, the snapshot feature makes a copy of the changed data area as it was prior to the change so that it can reconstruct the state of the device.

Because a snapshot copies only the data areas that change after the snapshot is created, the snapshot feature requires a minimal amount of storage.
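The copy-on-write behaviour described above can be illustrated with a small in-memory model. This Python sketch is a teaching aid under simplifying assumptions (fixed-size blocks held in a list, hypothetical `Origin` and `Snapshot` classes); it does not reflect how the device-mapper snapshot target stores its data.

```python
class Snapshot:
    """Holds only the blocks that changed on the origin after the snapshot."""

    def __init__(self, origin):
        self.origin = origin
        self.cow = {}  # block index -> contents at snapshot time

    def read(self, index):
        # Unchanged blocks are read straight from the origin.
        return self.cow.get(index, self.origin.blocks[index])

class Origin:
    def __init__(self, blocks):
        self.blocks = list(blocks)
        self.snapshots = []

    def snapshot(self):
        snap = Snapshot(self)
        self.snapshots.append(snap)
        return snap

    def write(self, index, data):
        # Save the old contents once per snapshot before overwriting.
        for snap in self.snapshots:
            snap.cow.setdefault(index, self.blocks[index])
        self.blocks[index] = data

origin = Origin(["a", "b", "c"])
snap = origin.snapshot()
origin.write(1, "B")

print(origin.blocks)                        # ['a', 'B', 'c']
print([snap.read(i) for i in range(3)])     # ['a', 'b', 'c']
print(len(snap.cow))                        # 1
```

Only one block was copied, which shows why a snapshot needs storage proportional to the amount of change rather than to the size of the origin.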

You can use the --merge option of the lvconvert command to merge a snapshot into its origin volume.

One use for this feature is to perform system rollback if you have lost data or files or otherwise need to restore your system to a previous state.

Thinly-Provisioned Snapshot Volumes

Thin snapshot volumes allow many virtual devices to be stored on the same data volume. This simplifies administration and allows for the sharing of data between snapshot volumes.

As for all LVM snapshot volumes, as well as all thin volumes, thin snapshot volumes are not supported across the nodes in a cluster.

A thin snapshot volume can reduce disk usage when there are multiple snapshots of the same origin volume.

Thin snapshot volumes can be used as a logical volume origin for another snapshot.

A snapshot of a thin logical volume also creates a thin logical volume.

You cannot change the chunk size of a thin pool.

You cannot limit the size of a thin snapshot volume.

LVM Administration Overview

The following is a summary of the steps to perform to create an LVM logical volume.

Initialize the partitions you will use for the LVM volume as physical volumes (this labels them).

Create a volume group.

Create a logical volume.

After creating the logical volume you can create and mount the file system. The examples in this document use GFS2 file systems.

Create a GFS2 file system on the logical volume with the mkfs.gfs2 command.

Create a new mount point with the mkdir command. In a clustered system, create the mount point on all nodes in the cluster.

Mount the file system. You may want to add a line to the fstab file for each node in the system.

To grow a file system on a logical volume, perform the following steps:

Make a new physical volume.

Extend the volume group that contains the logical volume with the file system you are growing to include the new physical volume.

Extend the logical volume to include the new physical volume.

Grow the file system.
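The four steps above map onto four commands. The Python sketch below only builds and prints the command lines so they can be reviewed before anything is run; the device, volume group, logical volume, and mount point names are hypothetical, `lvextend -l +100%FREE` is one of several ways to use the new space, and `gfs2_grow` is chosen on the assumption that the file system is GFS2, as in the examples in this document.

```python
import shlex

def grow_commands(new_device, vg, lv, mount_point):
    """Return the command sequence for growing a GFS2 file system on vg/lv."""
    return [
        ["pvcreate", new_device],                        # make a new physical volume
        ["vgextend", vg, new_device],                    # add it to the volume group
        ["lvextend", "-l", "+100%FREE", f"{vg}/{lv}"],   # grow the LV into the free space
        ["gfs2_grow", mount_point],                      # grow the mounted GFS2 file system
    ]

commands = grow_commands("/dev/sdf1", "vg1", "lv1", "/mnt/data")
for cmd in commands:
    print(shlex.join(cmd))
```

For other file systems the last command would differ, so treat that line as a placeholder for the appropriate grow tool.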

Logical Volume Backup

Metadata backups and archives are automatically created on every volume group and logical volume configuration change unless disabled in the lvm.conf file.

By default, the metadata backup is stored in the /etc/lvm/backup directory and the metadata archives are stored in the /etc/lvm/archive directory.

A daily system backup should include the contents of the /etc/lvm directory in the backup.

You can manually back up the metadata to the /etc/lvm/backup directory with the vgcfgbackup command. You can restore metadata with the vgcfgrestore command.

root:/etc/lvm/backup# cat cinder-volumes

# Generated by LVM2 version 2.02.98(2) (2012-10-15): Mon Jul 7 03:34:24 2014

contents = "Text Format Volume Group" version = 1

description = "Created *after* executing 'lvextend -L 20G /dev/cinder-volumes/lv_cliu8_test'"

creation_host = "escto-bj-hp-z620" # Linux escto-bj-hp-z620 3.13.0-27-generic #50-Ubuntu SMP Thu May 15 18:06:16 UTC 2014 x86_64 creation_time = 1404675264 # Mon Jul 7 03:34:24 2014

cinder-volumes { id = "86NpeF-RjB1-vBGd-8LDO-N1nJ-ssK7-862yFH" seqno = 15 format = "lvm2" # informational status = ["RESIZEABLE", "READ", "WRITE"] flags = [] extent_size = 8192 # 4 Megabytes max_lv = 0 max_pv = 0 metadata_copies = 0

physical_volumes {

pv0 { id = "anOUYC-Ia27-xkf2-A6rY-ALPP-N6dF-pHp523" device = "/dev/sdc" # Hint only

status = ["ALLOCATABLE"] flags = [] dev_size = 500118192 # 238.475 Gigabytes pe_start = 2048 pe_count = 61049 # 238.473 Gigabytes } }

logical_volumes {

volume-f6ba87f7-d0b6-4fdb-ac82-346371e78c48 { id = "PKRR9B-nUCI-Ob1Y-U97W-b1Rh-RhuH-Ow4h5P" status = ["READ", "WRITE", "VISIBLE"] flags = [] creation_host = "escto-bj-hp-z620" creation_time = 1404253343 # 2014-07-02 06:22:23 +0800 segment_count = 1

segment1 { start_extent = 0 extent_count = 2816 # 11 Gigabytes

type = "striped" stripe_count = 1 # linear

stripes = [ "pv0", 0 ] } }

_snapshot-138e677d-721a-435e-b945-fbd6009f3b2a { id = "uTu3GB-J1Nb-1G6Z-xroP-nUmk-394l-KHVYIk" status = ["READ", "WRITE"] flags = [] creation_host = "escto-bj-hp-z620" creation_time = 1404299680 # 2014-07-02 19:14:40 +0800 segment_count = 1

segment1 { start_extent = 0 extent_count = 2816 # 11 Gigabytes

type = "striped" stripe_count = 1 # linear

stripes = [ "pv0", 2816 ] } }

lv_cliu8_test { id = "Fg1uLV-Rdfc-p33c-1m03-GMrn-QgJ4-mpMelP" status = ["READ", "WRITE", "VISIBLE"] flags = [] creation_host = "escto-bj-hp-z620" creation_time = 1404314427 # 2014-07-02 23:20:27 +0800 segment_count = 2

segment1 { start_extent = 0 extent_count = 3584 # 14 Gigabytes

type = "striped" stripe_count = 1 # linear

stripes = [ "pv0", 5632 ] } segment2 { start_extent = 3584 extent_count = 1536 # 6 Gigabytes

type = "striped" stripe_count = 1 # linear

stripes = [ "pv0", 27648 ] } }

volume-640a10f7-3965-4a47-9641-002a94526444 { id = "WGmGTD-vzFQ-hwld-Lg4T-jVtl-i6I2-U8igvq" status = ["READ", "WRITE", "VISIBLE"] flags = [] creation_host = "escto-bj-hp-z620" creation_time = 1404318851 # 2014-07-03 00:34:11 +0800 segment_count = 1

segment1 { start_extent = 0 extent_count = 6144 # 24 Gigabytes

type = "striped" stripe_count = 1 # linear

stripes = [ "pv0", 9216 ] } }

_snapshot-ed57ce28-612a-4290-985e-098852abd628 { id = "Q3YJ7c-gSy6-e32M-2Aq6-2GX4-Nqb5-CPhFPe" status = ["READ", "WRITE"] flags = [] creation_host = "escto-bj-hp-z620" creation_time = 1404319228 # 2014-07-03 00:40:28 +0800 segment_count = 1

segment1 { start_extent = 0 extent_count = 6144 # 24 Gigabytes

type = "striped" stripe_count = 1 # linear

stripes = [ "pv0", 15360 ] } }

_snapshot-16664480-56b6-431f-8cd2-399d57a8bb52 { id = "dcNCnP-dQN5-CxFh-C40y-wIq3-u26p-jW0r9X" status = ["READ", "WRITE"] flags = [] creation_host = "escto-bj-hp-z620" creation_time = 1404390011 # 2014-07-03 20:20:11 +0800 segment_count = 1

segment1 { start_extent = 0 extent_count = 6144 # 24 Gigabytes

type = "striped" stripe_count = 1 # linear

stripes = [ "pv0", 21504 ] } }

snapshot0 { id = "H6qrZu-ue6Y-ySuV-pECo-eXTS-Bon2-0y3pws" status = ["READ", "WRITE", "VISIBLE"] flags = [] creation_host = "escto-bj-hp-z620" creation_time = 1404299680 # 2014-07-02 19:14:40 +0800 segment_count = 1

segment1 { start_extent = 0 extent_count = 2816 # 11 Gigabytes

type = "snapshot" chunk_size = 8 origin = "volume-f6ba87f7-d0b6-4fdb-ac82-346371e78c48" cow_store = "_snapshot-138e677d-721a-435e-b945-fbd6009f3b2a" } }

snapshot1 { id = "cmdtmf-J8fh-rJ6b-o4xi-BLko-CRcd-oiN4cH" status = ["READ", "WRITE", "VISIBLE"] flags = [] creation_host = "escto-bj-hp-z620" creation_time = 1404319228 # 2014-07-03 00:40:28 +0800 segment_count = 1

segment1 { start_extent = 0 extent_count = 6144 # 24 Gigabytes

type = "snapshot" chunk_size = 8 origin = "volume-640a10f7-3965-4a47-9641-002a94526444" cow_store = "_snapshot-ed57ce28-612a-4290-985e-098852abd628" } }

snapshot2 { id = "FOHJ2Y-d7lc-xAhe-d3C5-liho-xAc9-FEQdUj" status = ["READ", "WRITE", "VISIBLE"] flags = [] creation_host = "escto-bj-hp-z620" creation_time = 1404390011 # 2014-07-03 20:20:11 +0800 segment_count = 1

segment1 { start_extent = 0 extent_count = 6144 # 24 Gigabytes

type = "snapshot" chunk_size = 8 origin = "volume-640a10f7-3965-4a47-9641-002a94526444" cow_store = "_snapshot-16664480-56b6-431f-8cd2-399d57a8bb52" } } } }

root:/etc/lvm/archive# ls

cinder-volumes_00000-1814477754.vg cinder-volumes_00006-533729372.vg escto-bj-hp-z620-vg_00000-915060969.vg cinder-volumes_00001-1145348940.vg cinder-volumes_00007-1811105450.vg escto-bj-hp-z620-vg_00001-1174812246.vg cinder-volumes_00002-758695712.vg cinder-volumes_00008-215202875.vg escto-bj-hp-z620-vg_00002-1166612034.vg cinder-volumes_00003-858285105.vg cinder-volumes_00009-134406032.vg VolGroup00_00000-1367027080.vg cinder-volumes_00004-552493463.vg cinder-volumes_00010-1177484978.vg VolGroup00_00001-87410705.vg cinder-volumes_00005-760124492.vg cinder-volumes_00011-1529598199.vg

root:/etc/lvm/archive# cat cinder-volumes_00000-1814477754.vg

# Generated by LVM2 version 2.02.98(2) (2012-10-15): Wed Jul 2 06:13:04 2014

contents = "Text Format Volume Group" version = 1

description = "Created *before* executing 'vgcreate cinder-volumes /dev/sdc'"

creation_host = "escto-bj-hp-z620" # Linux escto-bj-hp-z620 3.13.0-27-generic #50-Ubuntu SMP Thu May 15 18:06:16 UTC 2014 x86_64 creation_time = 1404252784 # Wed Jul 2 06:13:04 2014

cinder-volumes { id = "86NpeF-RjB1-vBGd-8LDO-N1nJ-ssK7-862yFH" seqno = 0 format = "lvm2" # informational status = ["RESIZEABLE", "READ", "WRITE"] flags = [] extent_size = 8192 # 4 Megabytes max_lv = 0 max_pv = 0 metadata_copies = 0

physical_volumes {

pv0 { id = "anOUYC-Ia27-xkf2-A6rY-ALPP-N6dF-pHp523" device = "/dev/sdc" # Hint only

status = ["ALLOCATABLE"] flags = [] dev_size = 500118192 # 238.475 Gigabytes pe_start = 2048 pe_count = 61049 # 238.473 Gigabytes } }

}
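The size comments in the metadata above follow from the fact that extent_size and dev_size are expressed in 512-byte sectors. The short Python check below reproduces a few of them; the helper names are hypothetical and the values are taken from the dump.

```python
SECTOR = 512  # bytes per sector, the unit used by extent_size and dev_size

def extent_bytes(extent_size_sectors):
    """Size of one extent in bytes."""
    return extent_size_sectors * SECTOR

def size_gib(extent_count, extent_size_sectors):
    """Size in GiB of a run of extents."""
    return extent_count * extent_bytes(extent_size_sectors) / 1024 ** 3

extent_size = 8192  # from "extent_size = 8192"

print(extent_bytes(extent_size) // 1024 ** 2)      # 4      (MiB per extent)
print(size_gib(2816, extent_size))                 # 11.0   (extent_count = 2816)
print(size_gib(3584 + 1536, extent_size))          # 20.0   (lv_cliu8_test after lvextend -L 20G)
print(round(size_gib(61049, extent_size), 3))      # 238.473 (pe_count = 61049)
```

The third line also shows how the two segments of lv_cliu8_test add up to the 20G requested by the lvextend command recorded in the description field.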

The Metadata Daemon (lvmetad)

LVM can optionally use a central metadata cache, implemented through a daemon (lvmetad) and a udev rule. The metadata daemon has two main purposes: It improves performance of LVM commands and it allows udev to automatically activate logical volumes or entire volume groups as they become available to the system.

LVM Administration with CLI Commands

Physical Volume Administration

The following command initializes /dev/sdd, /dev/sde, and /dev/sdf as LVM physical volumes.

# pvcreate /dev/sdd /dev/sde /dev/sdf

You can scan for block devices that may be used as physical volumes with the lvmdiskscan command.

The pvs command provides physical volume information in a configurable form, displaying one line per physical volume.

The pvdisplay command provides a verbose multi-line output for each physical volume.

The pvscan command scans all supported LVM block devices in the system for physical volumes.

You can prevent allocation of physical extents on the free space of one or more physical volumes with the pvchange command.

# pvchange -x n /dev/sdk1

If you need to change the size of an underlying block device for any reason, use the pvresize command to update LVM with the new size.

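If a device is no longer required for use by LVM, you can remove the LVM label with the pvremove command.

# pvremove /dev/ram15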

Labels on physical volume "/dev/ram15" successfully wiped

Volume Group Administration

The following command creates a volume group named vg1 that contains physical volumes /dev/sdd1 and /dev/sde1.

# vgcreate vg1 /dev/sdd1 /dev/sde1

When physical volumes are used to create a volume group, its disk space is divided into 4MB extents, by default.

LVM volume groups and underlying logical volumes are included in the device special file directory tree in the /dev directory with the following layout:

/dev/vg/lv/

The following command adds the physical volume /dev/sdf1 to the volume group vg1.

# vgextend vg1 /dev/sdf1

There are two commands you can use to display properties of LVM volume groups: vgs and vgdisplay.

The vgscan command, which scans all the disks for volume groups and rebuilds the LVM cache file, also displays the volume groups.

This builds the LVM cache in the /etc/lvm/cache/.cache file, which maintains a listing of current LVM devices.

The following command removes the physical volume /dev/hda1 from the volume group my_volume_group.

# vgreduce my_volume_group /dev/hda1

The following example deactivates the volume group my_volume_group.

# vgchange -a n my_volume_group

To remove a volume group that contains no logical volumes, use the vgremove command.

# vgremove officevg

Volume group "officevg" successfully removed

The following example splits off the new volume group smallvg from the original volume group bigvg.

# vgsplit bigvg smallvg /dev/ram15

Volume group "smallvg" successfully split from "bigvg"

The following command merges the inactive volume group my_vg into the active or inactive volume group databases, giving verbose runtime information.

# vgmerge -v databases my_vg

You can manually back up the metadata to the /etc/lvm/backup directory with the vgcfgbackup command.

The vgcfgrestore command restores the metadata of a volume group from the archive to all the physical volumes in the volume group.

Either of the following commands renames the existing volume group vg02 to my_volume_group:

# vgrename /dev/vg02 /dev/my_volume_group

# vgrename vg02 my_volume_group

Moving a Volume Group to Another System

To move a volume group from one system to another, perform the following steps:

Make sure that no users are accessing files on the active volumes in the volume group, then unmount the logical volumes.

Use the -a n argument of the vgchange command to mark the volume group as inactive, which prevents any further activity on the volume group.

Use the vgexport command to export the volume group. This prevents it from being accessed by the system from which you are removing it.

After you export the volume group, the physical volume will show up as being in an exported volume group when you execute the pvscan command, as in the following example.

# pvscan

PV /dev/sda1 is in exported VG myvg [17.15 GB / 7.15 GB free]

PV /dev/sdc1 is in exported VG myvg [17.15 GB / 15.15 GB free]

PV /dev/sdd1 is in exported VG myvg [17.15 GB / 15.15 GB free]

...

When the system is next shut down, you can unplug the disks that constitute the volume group and connect them to the new system.

When the disks are plugged into the new system, use the vgimport command to import the volume group, making it accessible to the new system.

Activate the volume group with the -a y argument of the vgchange command.

Mount the file system to make it available for use.

To recreate a volume group directory and logical volume special files, use the vgmknodes command. This command checks the LVM2 special files in the /dev directory that are needed for active logical volumes.

Logical Volume Administration

Creating Linear Logical Volumes

The following command creates a 1500 MB linear logical volume named testlv in the volume group testvg, creating the block device /dev/testvg/testlv.

# lvcreate -L 1500 -n testlv testvg

The following command creates a logical volume called mylv that uses 60% of the total space in volume group testvg.

# lvcreate -l 60%VG -n mylv testvg

The following command creates a logical volume called yourlv that uses all of the unallocated space in the volume group testvg.

# lvcreate -l 100%FREE -n yourlv testvg

The following command creates a logical volume named testlv in volume group testvg allocated from the physical volume /dev/sdg1.

# lvcreate -L 1500 -n testlv testvg /dev/sdg1

The following example creates a linear logical volume out of extents 0 through 24 of physical volume /dev/sda1 and extents 50 through 124 of physical volume /dev/sdb1 in volume group testvg.

# lvcreate -l 100 -n testlv testvg /dev/sda1:0-24 /dev/sdb1:50-124

The following example creates a linear logical volume out of extents 0 through 25 of physical volume /dev/sda1 and then continues laying out the logical volume at extent 100.

# lvcreate -l 100 -n testlv testvg /dev/sda1:0-25:100-
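The extent-range arguments in the two commands above can be checked with a small parser. This Python sketch handles only the two forms that appear in these examples (`start-end` and the open-ended `start-`); `parse_pv_spec` and `extent_count` are hypothetical helpers, not part of any LVM tooling.

```python
def parse_pv_spec(spec):
    """Split '/dev/sda1:0-25:100-' into (device, [(start, end), ...]).

    An open-ended range such as '100-' is returned with end=None.
    """
    device, *parts = spec.split(":")
    ranges = []
    for part in parts:
        start, sep, end = part.partition("-")
        if not sep:
            raise ValueError(f"unsupported range form: {part!r}")
        ranges.append((int(start), int(end) if end else None))
    return device, ranges

def extent_count(ranges):
    """Count the extents in a list of closed (start, end) ranges, inclusive."""
    if any(end is None for _, end in ranges):
        raise ValueError("open-ended range has no fixed length")
    return sum(end - start + 1 for start, end in ranges)

_, first = parse_pv_spec("/dev/sda1:0-24")
_, second = parse_pv_spec("/dev/sdb1:50-124")
print(extent_count(first) + extent_count(second))   # 100

print(parse_pv_spec("/dev/sda1:0-25:100-"))
# ('/dev/sda1', [(0, 25), (100, None)])
```

The first result confirms that extents 0 through 24 plus 50 through 124 add up to the 100 extents requested with -l 100.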

Creating Striped Volumes

The following command creates a striped logical volume across 2 physical volumes with a stripe of 64kB. The logical volume is 50 gigabytes in size, is named gfslv, and is carved out of volume group vg0.

# lvcreate -L 50G -i2 -I64 -n gfslv vg0

The following command creates a striped volume 100 extents in size that stripes across two physical volumes, is named stripelv, and is in volume group testvg. The stripe will use extents 0-49 of /dev/sda1 and extents 50-99 of /dev/sdb1.

# lvcreate -l 100 -i2 -n stripelv testvg /dev/sda1:0-49 /dev/sdb1:50-99

Using default stripesize 64.00 KB

Logical volume "stripelv" created

Creating Mirrored Volumes

The following command creates a mirrored logical volume with a single mirror. The volume is 50 gigabytes in size, is named mirrorlv, and is carved out of volume group vg0:

# lvcreate -L 50G -m1 -n mirrorlv vg0

The following command creates a mirrored logical volume from the volume group bigvg. The logical volume is named ondiskmirvol and has a single mirror. The volume is 12MB in size and keeps the mirror log in memory.

# lvcreate -L 12MB -m1 --mirrorlog core -n ondiskmirvol bigvg

Logical volume "ondiskmirvol" created

The following command creates a mirrored logical volume with a single mirror for which the mirror log is on the same device as one of the mirror legs. In this example, the volume group vg0 consists of only two devices. This command creates a 500 MB volume named mirrorlv in the vg0 volume group.

# lvcreate -L 500M -m1 -n mirrorlv --alloc anywhere vg0

To create a mirror log that is itself mirrored, you can specify the --mirrorlog mirrored argument. The following command creates a mirrored logical volume from the volume group bigvg. The logical volume is named twologvol and has a single mirror. The volume is 12MB in size and the mirror log is mirrored, with each log kept on a separate device.

# lvcreate -L 12MB -m1 --mirrorlog mirrored -n twologvol bigvg

Logical volume "twologvol" created

The following command creates a mirrored logical volume with a single mirror and a single log that is not mirrored. The volume is 500 MB in size, it is named mirrorlv, and it is carved out of volume group vg0. The first leg of the mirror is on device /dev/sda1, the second leg of the mirror is on device /dev/sdb1, and the mirror log is on /dev/sdc1.

# lvcreate -L 500M -m1 -n mirrorlv vg0 /dev/sda1 /dev/sdb1 /dev/sdc1

The following command creates a mirrored logical volume with a single mirror. The volume is 500 MB in size, it is named mirrorlv, and it is carved out of volume group vg0. The first leg of the mirror is on extents 0 through 499 of device /dev/sda1, the second leg of the mirror is on extents 0 through 499 of device /dev/sdb1, and the mirror log starts on extent 0 of device /dev/sdc1. These are 1MB extents. If any of the specified extents have already been allocated, they will be ignored.

# lvcreate -L 500M -m1 -n mirrorlv vg0 /dev/sda1:0-499 /dev/sdb1:0-499 /dev/sdc1:0

Mirrored Logical Volume Failure Policy

You can define how a mirrored logical volume behaves in the event of a device failure with the mirror_image_fault_policy and mirror_log_fault_policy parameters in the activation section of the lvm.conf file.

When these parameters are set to remove, the system attempts to remove the faulty device and run without it.

When this parameter is set to allocate, the system attempts to remove the faulty device and tries to allocate space on a new device to be a replacement for the failed device; this policy acts like the remove policy if no suitable device and space can be allocated for the replacement.
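The difference between the two policies, including the fallback of allocate to remove, can be summarized as a small decision function. This is a Python paraphrase of the text above for illustration; `handle_device_failure` and the returned action strings are hypothetical and do not correspond to LVM internals.

```python
def handle_device_failure(policy, replacement_available):
    """Return the action taken for a failed mirror device under a given policy."""
    if policy == "allocate" and replacement_available:
        return "remove faulty device and allocate a replacement"
    if policy in ("remove", "allocate"):
        # "allocate" behaves like "remove" when no suitable space is found.
        return "remove faulty device and run without it"
    raise ValueError(f"unknown policy: {policy!r}")

for policy, spare in [("remove", True), ("allocate", True), ("allocate", False)]:
    print(policy, spare, "->", handle_device_failure(policy, spare))
```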

The following command splits off a new logical volume named copy from the mirrored logical volume vg/lv. The new logical volume contains two mirror legs. In this example, LVM selects which devices to split off.

# lvconvert --splitmirrors 2 --name copy vg/lv

You can specify which devices to split off. The following command splits off a new logical volume named copy from the mirrored logical volume vg/lv. The new logical volume contains two mirror legs consisting of devices /dev/sdc1 and /dev/sde1.

# lvconvert --splitmirrors 2 --name copy vg/lv /dev/sd[ce]1

Repairing a Mirrored Logical Device

You can use the lvconvert --repair command to repair a mirror after a disk failure. This brings the mirror back into a consistent state.

The following command converts the linear logical volume vg00/lvol1 to a mirrored logical volume.

# lvconvert -m1 vg00/lvol1

The following command converts the mirrored logical volume vg00/lvol1 to a linear logical volume, removing the mirror leg.

# lvconvert -m0 vg00/lvol1

The following example adds an additional mirror leg to the existing logical volume vg00/lvol1. This example shows the configuration of the volume before and after the lvconvert command changed the volume to a volume with two mirror legs.

# lvs -a -o name,copy_percent,devices vg00

LV Copy% Devices

lvol1 100.00 lvol1_mimage_0(0),lvol1_mimage_1(0)

[lvol1_mimage_0] /dev/sda1(0)

[lvol1_mimage_1] /dev/sdb1(0)

[lvol1_mlog] /dev/sdd1(0)

# lvconvert -m 2 vg00/lvol1

vg00/lvol1: Converted: 13.0%

vg00/lvol1: Converted: 100.0%

Logical volume lvol1 converted.

# lvs -a -o name,copy_percent,devices vg00

LV Copy% Devices

lvol1 100.00 lvol1_mimage_0(0),lvol1_mimage_1(0),lvol1_mimage_2(0)

[lvol1_mimage_0] /dev/sda1(0)

[lvol1_mimage_1] /dev/sdb1(0)

[lvol1_mimage_2] /dev/sdc1(0)

[lvol1_mlog] /dev/sdd1(0)

Creating Thinly-Provisioned Logical Volumes

To create a thin volume, you perform the following tasks:

Create a volume group with the vgcreate command.

Create a thin pool with the lvcreate command.

Create a thin volume in the thin pool with the lvcreate command.

The following command uses the -T option of the lvcreate command to create a thin pool named mythinpool that is in the volume group vg001 and that is 100M in size.

# lvcreate -L 100M -T vg001/mythinpool

Rounding up size to full physical extent 4.00 MiB

Logical volume "mythinpool" created

# lvs

LV VG Attr LSize Pool Origin Data% Move Log Copy% Convert

mythinpool vg001 twi-a-tz 100.00m 0.00

The following command uses the -T option of the lvcreate command to create a thin volume named thinvolume in the thin pool vg001/mythinpool. Note that in this case you are specifying a virtual size for the volume that is greater than the size of the pool that contains it.

# lvcreate -V1G -T vg001/mythinpool -n thinvolume

Logical volume "thinvolume" created

# lvs

LV VG Attr LSize Pool Origin Data% Move Log Copy% Convert

mythinpool vg001 twi-a-tz 100.00m 0.00

thinvolume vg001 Vwi-a-tz 1.00g mythinpool 0.00

Striping is supported for pool creation. The following command creates a 100M thin pool named pool in volume group vg00 with two 64 kB stripes and a chunk size of 256 kB. It also creates a 1T thin volume, vg00/thin_lv.

# lvcreate -i 2 -I 64 -c 256 -L100M -T vg00/pool -V 1T --name thin_lv

The following command resizes an existing thin pool that is 100M in size by extending it another 100M.

# lvextend -L+100M vg001/mythinpool

Extending logical volume mythinpool to 200.00 MiB

Logical volume mythinpool successfully resized

# lvs

LV VG Attr LSize Pool Origin Data% Move Log Copy% Convert

mythinpool vg001 twi-a-tz 200.00m 0.00

thinvolume vg001 Vwi-a-tz 1.00g mythinpool 0.00

The following example converts the existing logical volume lv1 in volume group vg001 to a thin pool volume and converts the existing logical volume lv2 in volume group vg001 to the metadata volume for that thin pool volume.

# lvconvert --thinpool vg001/lv1 --poolmetadata vg001/lv2

Converted vg001/lv1 to thin pool.

Creating Snapshot Volumes

Use the -s argument of the lvcreate command to create a snapshot volume. A snapshot volume is writable.

The following command creates a snapshot logical volume that is 100 MB in size named /dev/vg00/snap. This creates a snapshot of the origin logical volume named /dev/vg00/lvol1.

# lvcreate --size 100M --snapshot --name snap /dev/vg00/lvol1
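A classic (non-thin) snapshot like this one works by copy-on-write: the 100M is not a full copy of the origin, but space for preserving blocks of the origin before they are overwritten. The toy model below illustrates the idea only. The class ToySnapshot and its methods are hypothetical, blocks are plain list items, and writes to the snapshot itself are left out.

```python
class ToySnapshot:
    """Copy-on-write snapshot of a list of blocks (toy model, not LVM code)."""

    def __init__(self, origin, capacity):
        self.origin = origin      # shared reference to the live origin blocks
        self.capacity = capacity  # how many changed blocks can be preserved
        self.saved = {}           # block index -> contents at snapshot time

    def write_origin(self, index, data):
        # Preserve the old contents once, before the first overwrite.
        if index not in self.saved:
            if len(self.saved) >= self.capacity:
                raise RuntimeError("snapshot space exhausted")
            self.saved[index] = self.origin[index]
        self.origin[index] = data

    def read(self, index):
        # Snapshot view: the preserved copy if the block changed, else the origin.
        return self.saved.get(index, self.origin[index])


origin = ["a", "b", "c"]
snap = ToySnapshot(origin, capacity=2)
snap.write_origin(1, "B")
print(origin)                            # live data after the write
print([snap.read(i) for i in range(3)])  # point-in-time view
```

The capacity limit stands in for the snapshot size: once more of the origin has changed than the snapshot can hold, the snapshot can no longer represent the original state.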

The following example shows the status of the logical volume /dev/new_vg/lvol0, for which a snapshot volume /dev/new_vg/newvgsnap1 has been created.

#lvdisplay /dev/new_vg/lvol0

--- Logical volume ---

LV Name /dev/new_vg/lvol0

VG Name new_vg

LV UUID LBy1Tz-sr23-OjsI-LT03-nHLC-y8XW-EhCl78

LV Write Access read/write

LV snapshot status source of

/dev/new_vg/newvgsnap1 [active]

LV Status available

# open 0

LV Size 52.00 MB

Current LE 13

Segments 1

Allocation inherit

Read ahead sectors 0

Block device 253:2
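The LV Size and Current LE fields in this output are related through the extent size of the volume group. As a small worked example (the function name extent_size is hypothetical):

```python
def extent_size(lv_size, current_le):
    """Size of one logical extent, in the same unit as lv_size."""
    return lv_size / current_le

# Values from the lvdisplay output above: LV Size 52.00 MB, Current LE 13.
print(extent_size(52.00, 13))
```

Dividing the size by the number of extents gives 4 MB per extent for this volume group.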

Creating Thinly-Provisioned Snapshot Volumes

The following command creates a thinly-provisioned snapshot volume named mysnapshot1 of the thinly-provisioned logical volume vg001/thinvolume.

#lvcreate -s --name mysnapshot1 vg001/thinvolume

Logical volume "mysnapshot1" created

# lvs

LV VG Attr LSize Pool Origin Data% Move Log Copy% Convert

mysnapshot1 vg001 Vwi-a-tz 1.00g mythinpool thinvolume 0.00

mythinpool vg001 twi-a-tz 100.00m 0.00

thinvolume vg001 Vwi-a-tz 1.00g mythinpool 0.00

The following command creates a thin snapshot volume of the read-only inactive volume origin_volume.

# lvcreate -s --thinpool vg001/pool origin_volume --name mythinsnap

You can create a second thinly-provisioned snapshot volume of the first snapshot volume, as in the following command.

# lvcreate -s vg001/mythinsnap --name my2ndthinsnap

The following command merges snapshot volume vg00/lvol1_snap into its origin.

# lvconvert --merge vg00/lvol1_snap

The following command reduces the size of logical volume lvol1 in volume group vg00 by 3 logical extents.

# lvreduce -l -3 vg00/lvol1

The following command changes the permission on volume lvol1 in volume group vg00 to be read-only.

# lvchange -pr vg00/lvol1

Major and minor device numbers are allocated dynamically at module load. Some applications work best if the block device is always activated with the same device (major and minor) number. You can specify these with the lvcreate and the lvchange commands by using the following arguments:

--persistent y --major major --minor minor

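The following command removes the logical volume /dev/testvg/testlv. Because the volume is still active, lvremove asks for confirmation first.

#lvremove /dev/testvg/testlv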

Do you really want to remove active logical volume "testlv"? [y/n]:y

Logical volume "testlv" successfully removed

There are three commands you can use to display properties of LVM logical volumes: lvs, lvdisplay, and lvscan.

Growing Logical Volumes

The following command extends the logical volume /dev/myvg/homevol to 12 gigabytes.

#lvextend -L12G /dev/myvg/homevol

lvextend -- extending logical volume "/dev/myvg/homevol" to 12 GB

lvextend -- doing automatic backup of volume group "myvg"

lvextend -- logical volume "/dev/myvg/homevol" successfully extended

The following command adds another gigabyte to the logical volume /dev/myvg/homevol.

#lvextend -L+1G /dev/myvg/homevol

lvextend -- extending logical volume "/dev/myvg/homevol" to 13 GB

lvextend -- doing automatic backup of volume group "myvg"

lvextend -- logical volume "/dev/myvg/homevol" successfully extended
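The two commands differ only in the leading plus sign: -L12G sets an absolute size, while -L+1G is relative to the current size. The sketch below models that distinction. It is an illustration only: apply_size_arg is a hypothetical helper, it understands only sizes given in G, and the starting size of 8 GB is an assumed value that does not come from the example.

```python
def apply_size_arg(current_gib, arg):
    """Apply an lvextend-style -L argument such as '12G' or '+1G'."""
    if not arg.upper().endswith("G"):
        raise ValueError("this sketch only understands sizes in G")
    number = arg[:-1]
    if number.startswith("+"):
        return current_gib + float(number[1:])  # relative: grow by this amount
    return float(number)                         # absolute: grow to this size

size = apply_size_arg(8, "12G")     # assumed 8 GB start, absolute target
print(size)
size = apply_size_arg(size, "+1G")  # relative increase
print(size)
```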

For example, consider a volume group vg that consists of two underlying physical volumes, as displayed with the following vgs command.

#vgs

VG #PV #LV #SN Attr VSize VFree

vg 2 0 0 wz--n- 271.31G 271.31G

You can create a stripe using the entire amount of space in the volume group.

#lvcreate -n stripe1 -L 271.31G -i 2 vg

Using default stripesize 64.00 KB

Rounding up size to full physical extent 271.31 GB

Logical volume "stripe1" created

#lvs -a -o +devices

LV VG Attr LSize Origin Snap% Move Log Copy% Devices

stripe1 vg -wi-a- 271.31G /dev/sda1(0),/dev/sdb1(0)

Note that the volume group now has no more free space.

#vgs

VG #PV #LV #SN Attr VSize VFree

vg 2 1 0 wz--n- 271.31G 0

The following command adds another physical volume to the volume group, which then has 135G of additional space.

#vgextend vg /dev/sdc1

Volume group "vg" successfully extended

#vgs

VG #PV #LV #SN Attr VSize VFree

vg 3 1 0 wz--n- 406.97G 135.66G

At this point you cannot extend the striped logical volume to the full size of the volume group, because two underlying devices are needed in order to stripe the data.

#lvextend vg/stripe1 -L 406G

Using stripesize of last segment 64.00 KB

Extending logical volume stripe1 to 406.00 GB

Insufficient suitable allocatable extents for logical volume stripe1: 34480 more required

To extend the striped logical volume, add another physical volume and then extend the logical volume. In this example, with two physical volumes added to the volume group, the logical volume can be extended to the full size of the volume group.

#vgextend vg /dev/sdd1

Volume group "vg" successfully extended

#vgs

VG #PV #LV #SN Attr VSize VFree

vg 4 1 0 wz--n- 542.62G 271.31G

#lvextend vg/stripe1 -L 542G

Using stripesize of last segment 64.00 KB

Extending logical volume stripe1 to 542.00 GB

Logical volume stripe1 successfully resized
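The reason the first attempt fails and the second succeeds can be sketched as a simple feasibility check: each stripe needs its share of the new extents on a different physical volume. This is a simplified model, not LVM's allocator. The function name can_extend_striped is hypothetical, and the extent counts are approximations derived from the rounded sizes above, assuming 4 MiB extents.

```python
def can_extend_striped(free_extents, stripes, extra_extents):
    """Check whether `stripes` distinct PVs can each hold an equal share."""
    per_stripe = -(-extra_extents // stripes)  # ceiling division
    usable = [pv for pv, free in free_extents.items() if free >= per_stripe]
    return len(usable) >= stripes

# After adding /dev/sdc1 only: one PV with free space, two stripes needed.
three_pvs = {"sda1": 0, "sdb1": 0, "sdc1": 34729}
print(can_extend_striped(three_pvs, 2, 34480))

# After also adding /dev/sdd1: two PVs with free space.
four_pvs = {"sda1": 0, "sdb1": 0, "sdc1": 34729, "sdd1": 34729}
print(can_extend_striped(four_pvs, 2, 69297))
```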

The following example extends a mirrored logical volume that was created without the --nosync option, indicating that the mirror was synchronized when it was created. This example, however, specifies that the mirror not be synchronized when the volume is extended. Note that the volume has an attribute of "m", but after executing the lvextend command with the --nosync option the volume has an attribute of "M".

#lvs vg

LV VG Attr LSize Pool Origin Snap% Move Log Copy% Convert

lv vg mwi-a-m- 20.00m lv_mlog 100.00

#lvextend -L +5G vg/lv --nosync

Extending 2 mirror images.

Extending logical volume lv to 5.02 GiB

Logical volume lv successfully resized

#lvs vg

LV VG Attr LSize Pool Origin Snap% Move Log Copy% Convert

lv vg Mwi-a-m- 5.02g lv_mlog 100.00
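The "m" and "M" mentioned here are the first character of the Attr column, which encodes the volume type. The sketch below decodes that character for the attribute strings that appear in this document. The table is a partial subset based on the lvs man page, and volume_type is a hypothetical helper.

```python
# Partial mapping of the first lv_attr character (volume type).
VOLUME_TYPE = {
    "m": "mirrored",
    "M": "mirrored without initial sync",
    "r": "raid",
    "R": "raid without initial sync",
    "s": "snapshot",
    "o": "origin",
    "t": "thin pool",
    "V": "thin volume",
    "-": "no special type",
}

def volume_type(attr):
    """Describe the volume type encoded in the first character of lv_attr."""
    return VOLUME_TYPE.get(attr[0], "not covered by this sketch")

for attr in ("mwi-a-m-", "Mwi-a-m-", "twi-a-tz", "Vwi-a-tz"):
    print(attr, "->", volume_type(attr))
```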

RAID Logical Volumes

The following command creates a RAID5 array (3 stripes + 1 implicit parity drive) named my_lv in the volume group my_vg that is 1G in size. Note that you specify the number of stripes just as you do for an LVM striped volume; the correct number of parity drives is added automatically.

# lvcreate --type raid5 -i 3 -L 1G -n my_lv my_vg

The following command creates a 2-way RAID10 array with 2 stripes that is 10G in size with a maximum recovery rate of 128 kiB/sec/device. The array is named my_lv and is in the volume group my_vg.

# lvcreate --type raid10 -i 2 -m 1 -L 10G --maxrecoveryrate 128 -n my_lv my_vg
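The number of devices each of these arrays needs follows from the -i and -m values. The sketch below does that arithmetic. The function name raid_devices is hypothetical, and mirrors counts additional copies in the same way -m does.

```python
PARITY_DEVICES = {"raid5": 1, "raid6": 2}

def raid_devices(raid_type, stripes=1, mirrors=0):
    """Return how many devices a RAID logical volume of this shape spans."""
    if raid_type in PARITY_DEVICES:
        return stripes + PARITY_DEVICES[raid_type]  # data stripes plus parity
    if raid_type == "raid10":
        return stripes * (mirrors + 1)              # every stripe is mirrored
    if raid_type == "raid1":
        return mirrors + 1                          # original plus extra copies
    raise ValueError(f"unsupported type in this sketch: {raid_type}")

print(raid_devices("raid5", stripes=3))              # --type raid5 -i 3
print(raid_devices("raid10", stripes=2, mirrors=1))  # --type raid10 -i 2 -m 1
```

Both commands above therefore need a volume group with at least four physical volumes that have free space.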

Converting a Linear Device to a RAID Device

The following command converts the linear logical volume my_lv in volume group my_vg to a 2-way RAID1 array.

# lvconvert --type raid1 -m 1 my_vg/my_lv

Since RAID logical volumes are composed of metadata and data subvolume pairs, when you convert a linear device to a RAID1 array, a new metadata subvolume is created and associated with the original logical volume on (one of) the same physical volumes that the linear volume is on. The additional images are added in metadata/data subvolume pairs. For example, if the original device is as follows:

#lvs -a -o name,copy_percent,devices my_vg

LV Copy% Devices

my_lv /dev/sde1(0)

After conversion to a 2-way RAID1 array the device contains the following data and metadata subvolume pairs:

#lvconvert --type raid1 -m 1 my_vg/my_lv

#lvs -a -o name,copy_percent,devices my_vg

LV Copy% Devices

my_lv 6.25 my_lv_rimage_0(0),my_lv_rimage_1(0)

[my_lv_rimage_0] /dev/sde1(0)

[my_lv_rimage_1] /dev/sdf1(1)

[my_lv_rmeta_0] /dev/sde1(256)

[my_lv_rmeta_1] /dev/sdf1(0)
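The subvolume names in this output follow a regular pattern: one rimage (data) and one rmeta (metadata) subvolume per image. As a small illustration of that naming, based only on the output shown here (raid1_sublvs is a hypothetical helper):

```python
def raid1_sublvs(lv_name, images):
    """List the data and metadata subvolume names for a RAID1 volume."""
    return [
        f"{lv_name}_{kind}_{index}"
        for kind in ("rimage", "rmeta")
        for index in range(images)
    ]

# A 2-way RAID1 array, as created by lvconvert --type raid1 -m 1.
for name in raid1_sublvs("my_lv", 2):
    print(name)
```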

Converting an LVM RAID1 Logical Volume to an LVM Linear Logical Volume

The following example displays an existing LVM RAID1 logical volume.

#lvs -a -o name,copy_percent,devices my_vg

LV Copy% Devices

my_lv 100.00 my_lv_rimage_0(0),my_lv_rimage_1(0)

[my_lv_rimage_0] /dev/sde1(1)

[my_lv_rimage_1] /dev/sdf1(1)

[my_lv_rmeta_0] /dev/sde1(0)

[my_lv_rmeta_1] /dev/sdf1(0)

The following command converts the LVM RAID1 logical volume my_vg/my_lv to an LVM linear device.

#lvconvert -m0 my_vg/my_lv

#lvs -a -o name,copy_percent,devices my_vg

LV Copy% Devices

my_lv /dev/sde1(1)

When you convert an LVM RAID1 logical volume to an LVM linear volume, you can specify which physical volumes to remove. The following example shows the layout of an LVM RAID1 logical volume made up of two images: /dev/sda1 and /dev/sdb1. In this example, the lvconvert command specifies that you want to remove /dev/sda1, leaving /dev/sdb1 as the physical volume that makes up the linear device.

#lvs -a -o name,copy_percent,devices my_vg

LV Copy% Devices

my_lv 100.00 my_lv_rimage_0(0),my_lv_rimage_1(0)

[my_lv_rimage_0] /dev/sda1(1)

[my_lv_rimage_1] /dev/sdb1(1)

[my_lv_rmeta_0] /dev/sda1(0)

[my_lv_rmeta_1] /dev/sdb1(0)

#lvconvert -m0 my_vg/my_lv /dev/sda1

#lvs -a -o name,copy_percent,devices my_vg

LV Copy% Devices

my_lv /dev/sdb1(1)

Converting a Mirrored LVM Device to a RAID1 Device

The following example shows the layout of a mirrored logical volume my_vg/my_lv.

#lvs -a -o name,copy_percent,devices my_vg

LV Copy% Devices

my_lv 15.20 my_lv_mimage_0(0),my_lv_mimage_1(0)

[my_lv_mimage_0] /dev/sde1(0)

[my_lv_mimage_1] /dev/sdf1(0)

[my_lv_mlog] /dev/sdd1(0)

The following command converts the mirrored logical volume my_vg/my_lv to a RAID1 logical volume.

#lvconvert --type raid1 my_vg/my_lv

#lvs -a -o name,copy_percent,devices my_vg

LV Copy% Devices

my_lv 100.00 my_lv_rimage_0(0),my_lv_rimage_1(0)

[my_lv_rimage_0] /dev/sde1(0)

[my_lv_rimage_1] /dev/sdf1(0)

[my_lv_rmeta_0] /dev/sde1(125)

[my_lv_rmeta_1] /dev/sdf1(125)

Reposted from: http://www.cnblogs.com/popsuper1982/p/3854000.html
