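Using TensorFlow for Text Processing: Doc2Vec Sentiment Analysis

Code source: TensorFlow Machine Learning Cookbook (Chinese edition, translated by 曾益强, September 2017), Chapter 7: Natural Language Processing

Code: https://github.com/nfmcclure/tensorflow-cookbook

Data: http://www.cs.cornell.edu/people/pabo/movie-review-data/rt-polaritydata.tar.gz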

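Word2vec models the contextual relationships between words, but it does not account for the relationship between a word and the document that contains it.

Doc2vec is one extension of word2vec that takes the document's influence into account.

Algorithm idea

Introduce a document embedding and use it together with the word embeddings to help determine the sentiment of a document.

How are the two combined?

1. Add the document embedding to the word embeddings (this requires the document embedding to be the same size as the word embedding)

2. Append the document embedding after the word embeddings (this increases the number of variables)

In general, for small datasets, adding the two embeddings is the better choice. The code in this post nevertheless uses concatenation (note concatenated_size in the model parameters).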
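The two ways of combining the embeddings can be sketched with NumPy. This is a minimal illustration rather than the book's code: the sizes and the random vectors are assumptions chosen only to show the shape constraints.

```python
import numpy as np

rng = np.random.default_rng(0)

window_size = 3
embedding_size = 4       # word embedding size (illustrative value)
doc_embedding_size = 4   # must equal embedding_size for the additive variant

# Hypothetical embeddings for one training example: 3 window words + 1 document
word_vectors = rng.uniform(-1.0, 1.0, size=(window_size, embedding_size))
doc_vector = rng.uniform(-1.0, 1.0, size=(doc_embedding_size,))

# Both variants first sum the word embeddings over the window
word_sum = word_vectors.sum(axis=0)

# Option 1: add the document embedding (sizes must match)
added = word_sum + doc_vector

# Option 2: append the document embedding (sizes may differ)
concatenated = np.concatenate([word_sum, doc_vector])

print(added.shape)         # (4,)
print(concatenated.shape)  # (8,)
```

With concatenation, every layer that consumes the combined vector (the NCE weights and the logistic regression weights) needs embedding_size + doc_embedding_size inputs, which is where the extra variables come from.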

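Steps

- Prepare and preprocess the data

- Compute the document embeddings and word embeddings

- Train a logistic regression model

1. Data preparation and preprocessing

1.1 Import the required packages

1.2 Load the data

1.3 Declare the model parameters

1.4 Normalize the reviews and make sure each review is longer than the chosen window size

1.5 Build the word dictionary (no document dictionary is needed, because every document already has a unique index)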

import tensorflow as tf
import matplotlib.pyplot as plt
import numpy as np
import random
import os
import pickle
import string
import requests
import collections
import io
import tarfile
import urllib.request
import text_helpers
from nltk.corpus import stopwords
from tensorflow.python.framework import ops

ops.reset_default_graph()
os.chdir(os.path.dirname(os.path.realpath(__file__)))

# Make a saving directory if it doesn't exist
data_folder_name = 'temp'
if not os.path.exists(data_folder_name):
    os.makedirs(data_folder_name)

# Start a graph session
sess = tf.Session()

# Declare model parameters
batch_size = 500
vocabulary_size = 7500
generations = 100000
model_learning_rate = 0.001

embedding_size = 200  # Word embedding size
doc_embedding_size = 100  # Document embedding size
concatenated_size = embedding_size + doc_embedding_size

num_sampled = int(batch_size/2)  # Number of negative examples to sample.
window_size = 3  # How many words to consider to the left.

# Add checkpoints to training
save_embeddings_every = 5000
print_valid_every = 5000
print_loss_every = 100

# Declare stop words
stops = stopwords.words('english')

# We pick a few test words for validation.
valid_words = ['love', 'hate', 'happy', 'sad', 'man', 'woman']
# Later we will have to transform these into indices

# Load the movie review data
print('Loading Data')
texts, target = text_helpers.load_movie_data(data_folder_name)

# Normalize text
print('Normalizing Text Data')
texts = text_helpers.normalize_text(texts, stops)

# Texts must contain more than window_size (3) words
target = [target[ix] for ix, x in enumerate(texts) if len(x.split()) > window_size]
texts = [x for x in texts if len(x.split()) > window_size]
assert(len(target)==len(texts))

# Build our data set and dictionaries
print('Creating Dictionary')
word_dictionary = text_helpers.build_dictionary(texts, vocabulary_size)
word_dictionary_rev = dict(zip(word_dictionary.values(), word_dictionary.keys()))
text_data = text_helpers.text_to_numbers(texts, word_dictionary)

# Get validation word keys (the words used for validation)
valid_examples = [word_dictionary[x] for x in valid_words]
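The text_helpers module is not shown in this post. As a rough sketch of what normalize_text, build_dictionary and text_to_numbers might do, the functions below are illustrative assumptions and not the module's actual code. In particular, the 'RARE' token at index 0 and the exact normalization rules are assumed here.

```python
import collections
import string


def normalize_text(texts, stops):
    """Lowercase, strip punctuation and digits, drop stop words."""
    normalized = []
    for text in texts:
        text = text.lower()
        text = ''.join(c for c in text if c not in string.punctuation)
        text = ''.join(c for c in text if c not in string.digits)
        words = [w for w in text.split() if w not in stops]
        normalized.append(' '.join(words))
    return normalized


def build_dictionary(sentences, vocabulary_size):
    """Map the most frequent words to indices; index 0 is kept for rare words."""
    words = [w for s in sentences for w in s.split()]
    counts = collections.Counter(words).most_common(vocabulary_size - 1)
    word_dict = {'RARE': 0}
    for word, _ in counts:
        word_dict[word] = len(word_dict)
    return word_dict


def text_to_numbers(sentences, word_dict):
    """Replace every word by its index, falling back to 0 for unknown words."""
    return [[word_dict.get(w, 0) for w in s.split()] for s in sentences]


sample_texts = ['I LOVE this movie, love it!', 'I hate this film.']
sample_stops = ['i', 'this', 'it']
clean = normalize_text(sample_texts, sample_stops)
sample_dict = build_dictionary(clean, vocabulary_size=3)
sample_numbers = text_to_numbers(clean, sample_dict)
print(clean)
print(sample_numbers)
```

Because words outside the vocabulary all map to one index, a validation word such as 'love' must be frequent enough to make it into the dictionary, otherwise the word_dictionary[x] lookup above raises a KeyError.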

2. Compute the document embeddings and word embeddings

2.1 Define the document embeddings and word embeddings

print('Creating Model')

# Define Embeddings:

embeddings = tf.Variable(tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))

doc_embeddings = tf.Variable(tf.random_uniform([len(texts), doc_embedding_size], -1.0, 1.0))

# NCE loss parameters

nce_weights = tf.Variable(tf.truncated_normal([vocabulary_size, concatenated_size], stddev=1.0 / np.sqrt(concatenated_size)))

nce_biases = tf.Variable(tf.zeros([vocabulary_size]))

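2.2 Declare placeholders for the doc2vec indices and the target word index (the input width is the window size plus 1, because of the extra document index)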

# Create data/target placeholders

x_inputs = tf.placeholder(tf.int32, shape=[None, window_size + 1]) # plus 1 for doc index

y_target = tf.placeholder(tf.int32, shape=[None, 1])

valid_dataset = tf.constant(valid_examples, dtype=tf.int32)

2.3 Why the input gains an extra document-index column when batches are generated

# Same as for skip-gram and CBOW

>>> rand_sentence=[2520, 1421, 146, 1215, 5, 468, 12, 14, 18, 20]

>>> window_size = 3

>>> window_sequences = [rand_sentence[max((ix-window_size),0):(ix+window_size+1)] for ix, x in enumerate(rand_sentence)]

>>> label_indices = [ix if ix<window_size else window_size for ix,x in enumerate(window_sequences)]

>>> window_sequences

[[2520, 1421, 146, 1215], [2520, 1421, 146, 1215, 5], [2520, 1421, 146, 1215, 5, 468], [2520, 1421, 146, 1215, 5, 468, 12], [1421, 146, 1215, 5, 468, 12, 14],

[146, 1215, 5, 468, 12, 14, 18], [1215, 5, 468, 12, 14, 18, 20], [5, 468, 12, 14, 18, 20], [468, 12, 14, 18, 20], [12, 14, 18, 20]]

>>> label_indices

[0, 1, 2, 3, 3, 3, 3, 3, 3, 3]

# Doc2vec inputs: the left-window words, with the next word as the target

>>> batch_and_labels = [(rand_sentence[i:i+window_size], rand_sentence[i+window_size]) for i in range(0, len(rand_sentence)-window_size)]

>>> batch_and_labels

[([2520, 1421, 146], 1215), ([1421, 146, 1215], 5), ([146, 1215, 5], 468), ([1215, 5, 468], 12), ([5, 468, 12], 14), ([468, 12, 14], 18), ([12, 14, 18], 20)]

>>> batch, labels = [list(x) for x in zip(*batch_and_labels)]

>>> batch

[[2520, 1421, 146], [1421, 146, 1215], [146, 1215, 5], [1215, 5, 468], [5, 468, 12], [468, 12, 14], [12, 14, 18]]

>>> labels

[1215, 5, 468, 12, 14, 18, 20]

# 155 stands for rand_sentence_ix; append the document index to each input

>>> batch = [x + [155] for x in batch]

>>> batch

[[2520, 1421, 146, 155], [1421, 146, 1215, 155], [146, 1215, 5, 155], [1215, 5, 468, 155], [5, 468, 12, 155], [468, 12, 14, 155], [12, 14, 18, 155]]
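The interactive steps above can be collected into one small function. This is a sketch for a single sentence only; the function name doc2vec_examples is hypothetical, and the real text_helpers.generate_batch_data additionally samples sentences at random and fills a batch of batch_size rows.

```python
def doc2vec_examples(sentence, doc_index, window_size):
    """Build doc2vec training examples from one sentence.

    Each input holds window_size left-context word indices followed by the
    document index; the matching label is the word right after the window.
    """
    inputs, labels = [], []
    for i in range(len(sentence) - window_size):
        inputs.append(sentence[i:i + window_size] + [doc_index])
        labels.append(sentence[i + window_size])
    return inputs, labels


rand_sentence = [2520, 1421, 146, 1215, 5, 468, 12, 14, 18, 20]
batch, labels = doc2vec_examples(rand_sentence, doc_index=155, window_size=3)
print(batch[0], labels[0])  # [2520, 1421, 146, 155] 1215
```

Every row has window_size + 1 entries, which matches the shape of the x_inputs placeholder declared in step 2.2.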

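2.4 Create the embedding function: sum the word embeddings, then concatenate the document embedding

# Sum the word embeddings over the window, then concatenate the document embedding
embed = tf.zeros([batch_size, embedding_size])
for element in range(window_size):
    embed += tf.nn.embedding_lookup(embeddings, x_inputs[:, element])

# The last column of x_inputs holds the document index
doc_indices = tf.slice(x_inputs, [0,window_size],[batch_size,1])
doc_embed = tf.nn.embedding_lookup(doc_embeddings,doc_indices)

# Concatenate embeddings (keyword arguments, because the positional order of tf.concat changed in TensorFlow 1.0)
final_embed = tf.concat(axis=1, values=[embed, tf.squeeze(doc_embed)])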

Function references: tf.slice, tf.concat, tf.squeeze

A worked example of the computation above

In [1]: import tensorflow as tf
In [2]: batch_size=4
In [3]: embedding_size=2
In [4]: window_size=3
In [5]: vocabulary_size=5
In [6]: embeddings = tf.Variable(tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))
In [7]: x_inputs = tf.constant([[1,2,3,4],[2,1,3,4],[0,1,2,4],[0,2,1,4]])
In [8]: doc_embedding_size=6
In [9]: doc_embeddings=tf.Variable(tf.random_uniform([5, doc_embedding_size], -1.0, 1.0))

# Enter the operations from step 2.4 here (a session named sess is also assumed to exist)

In [17]: init = tf.global_variables_initializer()
In [18]: sess.run(init)
In [19]: sess.run(embeddings)
Out[19]:
array([[ 0.98145223, -0.83131742],
       [-0.52276659, -0.29783845],
       [ 0.46809649,  0.044348  ],
       [ 0.31654406, -0.46324134],
       [ 0.75828886, -0.99072552]], dtype=float32)

In [20]: sess.run(doc_embeddings)
Out[20]:
array([[ 0.9781754 , -0.06880736, -0.2043252 ,  0.26498127, -0.5746839 ,
         0.98072433],
       [-0.38463736,  0.79673767,  0.43830776,  0.85574818,  0.56155062,
        -0.18029761],
       [-0.72975278,  0.39068675, -0.72180915, -0.04128242,  0.00453711,
         0.35815096],
       [ 0.66498852,  0.07083178, -0.42824841, -0.12427211,  0.35502028,
         0.92180991],
       [ 0.64659524, -0.57290649,  0.76603436, -0.20811057, -0.10866618,
         0.52539349]], dtype=float32)

In [22]: sess.run(x_inputs)
Out[22]:
array([[1, 2, 3, 4],
       [2, 1, 3, 4],
       [0, 1, 2, 4],
       [0, 2, 1, 4]], dtype=int32)

In [23]: sess.run(embed)
Out[23]:
array([[ 0.26187396, -0.71673179],
       [ 0.26187396, -0.71673179],
       [ 0.92678213, -1.08480787],
       [ 0.92678213, -1.08480787]], dtype=float32)

In [24]: sess.run(doc_indices)
Out[24]:
array([[4],
       [4],
       [4],
       [4]], dtype=int32)

In [25]: sess.run(doc_embed)
Out[25]:
array([[[ 0.64659524, -0.57290649,  0.76603436, -0.20811057, -0.10866618,
          0.52539349]],
       [[ 0.64659524, -0.57290649,  0.76603436, -0.20811057, -0.10866618,
          0.52539349]],
       [[ 0.64659524, -0.57290649,  0.76603436, -0.20811057, -0.10866618,
          0.52539349]],
       [[ 0.64659524, -0.57290649,  0.76603436, -0.20811057, -0.10866618,
          0.52539349]]], dtype=float32)
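The same lookup, sum, squeeze and concatenate sequence can be checked outside TensorFlow. The NumPy sketch below reuses the word embeddings and the document row 4 printed in the session; the other document rows are never looked up in this example, so they are left as zeros here, which is an assumption made only to keep the snippet short.

```python
import numpy as np

window_size = 3

# Word embeddings copied from Out[19]
embeddings = np.array([[ 0.98145223, -0.83131742],
                       [-0.52276659, -0.29783845],
                       [ 0.46809649,  0.044348  ],
                       [ 0.31654406, -0.46324134],
                       [ 0.75828886, -0.99072552]], dtype=np.float32)

# Only document 4 is referenced by x_inputs; rows 0-3 are placeholders (zeros)
doc_embeddings = np.zeros((5, 6), dtype=np.float32)
doc_embeddings[4] = [0.64659524, -0.57290649, 0.76603436,
                     -0.20811057, -0.10866618, 0.52539349]

x_inputs = np.array([[1, 2, 3, 4],
                     [2, 1, 3, 4],
                     [0, 1, 2, 4],
                     [0, 2, 1, 4]])

# Look up the window words and sum them: shape (4, 2)
embed = embeddings[x_inputs[:, :window_size]].sum(axis=1)

# Keep the last column as a (4, 1) slice, as tf.slice does
doc_indices = x_inputs[:, window_size:window_size + 1]

# Indexing with a (4, 1) array gives shape (4, 1, 6), hence the squeeze
doc_embed = doc_embeddings[doc_indices]
final_embed = np.concatenate([embed, np.squeeze(doc_embed, axis=1)], axis=1)

print(embed.shape, doc_embed.shape, final_embed.shape)  # (4, 2) (4, 1, 6) (4, 8)
```

The extra middle axis in doc_embed is why the original code calls tf.squeeze before tf.concat: both inputs must be rank 2 to be joined along axis 1.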

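2.5 Declare the loss and the optimizer

# Get loss from prediction (keyword arguments, because TensorFlow 1.0 swapped the order of labels and inputs)
loss = tf.reduce_mean(tf.nn.nce_loss(weights=nce_weights, biases=nce_biases,
                                     labels=y_target, inputs=final_embed,
                                     num_sampled=num_sampled, num_classes=vocabulary_size))

# Create optimizer
optimizer = tf.train.GradientDescentOptimizer(learning_rate=model_learning_rate)
train_step = optimizer.minimize(loss)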

2.6 Declare the cosine similarity for the validation words

# Cosine similarity between words

norm = tf.sqrt(tf.reduce_sum(tf.square(embeddings), 1, keep_dims=True))

normalized_embeddings = embeddings / norm

valid_embeddings = tf.nn.embedding_lookup(normalized_embeddings, valid_dataset)

similarity = tf.matmul(valid_embeddings, normalized_embeddings, transpose_b=True)
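To see what the similarity matrix is used for, here is a NumPy sketch of the same computation followed by the nearest-neighbor lookup that the training loop performs. The function name nearest_neighbors and the toy embedding matrix are illustrative assumptions.

```python
import numpy as np


def nearest_neighbors(embeddings, query_indices, top_k):
    """Return the indices of the top_k most cosine-similar rows per query."""
    # Normalize every row to unit length so a dot product is a cosine similarity
    norm = np.sqrt(np.sum(np.square(embeddings), axis=1, keepdims=True))
    normalized = embeddings / norm
    similarity = normalized[query_indices] @ normalized.T
    # Sort in descending order; position 0 is the query itself, so skip it
    order = np.argsort(-similarity, axis=1)
    return order[:, 1:top_k + 1]


toy_embeddings = np.array([[1.0, 0.0],
                           [2.0, 0.1],
                           [0.0, 1.0],
                           [-1.0, 0.0]])
neighbors = nearest_neighbors(toy_embeddings, [0], top_k=2)
print(neighbors)
```

Row 1 points in almost the same direction as row 0 despite being twice as long, so it is ranked first; normalizing by the row norm is what makes the comparison independent of vector length.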

2.7 Save the embeddings and train

# Create model saving operation
saver = tf.train.Saver({"embeddings": embeddings, "doc_embeddings": doc_embeddings})

# Add variable initializer (tf.initialize_all_variables is deprecated).
init = tf.global_variables_initializer()
sess.run(init)

# Run the doc2vec model.
print('Starting Training')
loss_vec = []
loss_x_vec = []
for i in range(generations):
    batch_inputs, batch_labels = text_helpers.generate_batch_data(text_data, batch_size,
                                                                  window_size, method='doc2vec')
    feed_dict = {x_inputs : batch_inputs, y_target : batch_labels}

    # Run the train step
    sess.run(train_step, feed_dict=feed_dict)

    # Return the loss
    if (i+1) % print_loss_every == 0:
        loss_val = sess.run(loss, feed_dict=feed_dict)
        loss_vec.append(loss_val)
        loss_x_vec.append(i+1)
        print('Loss at step {} : {}'.format(i+1, loss_val))

    # Validation: print the validation words and their top 5 related words
    if (i+1) % print_valid_every == 0:
        sim = sess.run(similarity, feed_dict=feed_dict)
        for j in range(len(valid_words)):
            valid_word = word_dictionary_rev[valid_examples[j]]
            top_k = 5  # number of nearest neighbors
            nearest = (-sim[j, :]).argsort()[1:top_k+1]
            log_str = "Nearest to {}:".format(valid_word)
            for k in range(top_k):
                close_word = word_dictionary_rev[nearest[k]]
                log_str = '{} {},'.format(log_str, close_word)
            print(log_str)

    # Save dictionary + embeddings
    if (i+1) % save_embeddings_every == 0:
        # Save vocabulary dictionary
        with open(os.path.join(data_folder_name,'movie_vocab.pkl'), 'wb') as f:
            pickle.dump(word_dictionary, f)

        # Save embeddings
        model_checkpoint_path = os.path.join(os.getcwd(),data_folder_name,'doc2vec_movie_embeddings.ckpt')
        save_path = saver.save(sess, model_checkpoint_path)
        print('Model saved in file: {}'.format(save_path))

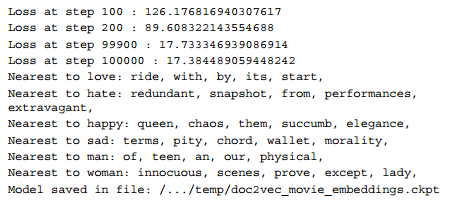

结果展示:

3. Train the logistic regression model

3.1 Parameters

# Start logistic model-------------------------

max_words = 20

logistic_batch_size = 500

3.2 Split the dataset

# Split dataset into train and test sets

# Need to keep the indices sorted to keep track of document index

train_indices = np.sort(np.random.choice(len(target), round(0.8*len(target)), replace=False))

test_indices = np.sort(np.array(list(set(range(len(target))) - set(train_indices))))

texts_train = [x for ix, x in enumerate(texts) if ix in train_indices]

texts_test = [x for ix, x in enumerate(texts) if ix in test_indices]

target_train = np.array([x for ix, x in enumerate(target) if ix in train_indices])

target_test = np.array([x for ix, x in enumerate(target) if ix in test_indices])
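The list comprehensions above test `ix in train_indices` once per document, which scans the index array every time. A possible alternative, sketched here on toy data, is to index directly with the sorted index arrays; np.setdiff1d returns the remaining indices already sorted. The variable names mirror the original, while the ten dummy documents and the fixed seed are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Ten dummy documents with alternating labels (illustrative data)
texts = ['review {}'.format(i) for i in range(10)]
target = [i % 2 for i in range(10)]

# Sorted 80/20 split; sorting keeps positions aligned with document indices
train_indices = np.sort(rng.choice(len(target), round(0.8 * len(target)), replace=False))
test_indices = np.setdiff1d(np.arange(len(target)), train_indices)

# Direct indexing instead of a membership test per element
texts_train = [texts[ix] for ix in train_indices]
texts_test = [texts[ix] for ix in test_indices]
target_train = np.array(target)[train_indices]
target_test = np.array(target)[test_indices]

print(len(texts_train), len(texts_test))  # 8 2
```

Keeping both index arrays sorted matters later: row k of the training data corresponds to document train_indices[k], which is how the document embedding is looked up during logistic training.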

3.3 Represent each review with 20 words

# Convert texts to lists of indices

text_data_train = np.array(text_helpers.text_to_numbers(texts_train, word_dictionary))

text_data_test = np.array(text_helpers.text_to_numbers(texts_test, word_dictionary))

# Pad/crop movie reviews to specific length

text_data_train = np.array([x[0:max_words] for x in [y+[0]*max_words for y in text_data_train]])

text_data_test = np.array([x[0:max_words] for x in [y+[0]*max_words for y in text_data_test]])
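The pad/crop expression is compact but easy to misread: it appends max_words zeros to every review and then keeps only the first max_words entries. A small helper makes that explicit; the name pad_or_crop is hypothetical and not part of the original code.

```python
def pad_or_crop(indices, max_words, pad_value=0):
    """Return exactly max_words indices: crop long reviews, zero-pad short ones."""
    return (indices + [pad_value] * max_words)[:max_words]


short_review = pad_or_crop([5, 3], max_words=4)
long_review = pad_or_crop([1, 2, 3, 4, 5, 6, 7], max_words=4)
print(short_review)  # [5, 3, 0, 0]
print(long_review)   # [1, 2, 3, 4]
```

After this step every review has the same length, so the reviews can be stacked into a rectangular array and fed to a placeholder of fixed width.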

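3.4 Create another embedding function. The earlier one combined a three-word window with a document index to predict the next word; this one is similar, except that it operates on 20-word reviews.

# Define logistic embedding lookup (needed if we have two different batch sizes)
# Note: log_x_inputs is the placeholder declared in step 3.5; declare it before running this block
# Add together element embeddings in window:
log_embed = tf.zeros([logistic_batch_size, embedding_size])
for element in range(max_words):
    log_embed += tf.nn.embedding_lookup(embeddings, log_x_inputs[:, element])

log_doc_indices = tf.slice(log_x_inputs, [0,max_words],[logistic_batch_size,1])
log_doc_embed = tf.nn.embedding_lookup(doc_embeddings,log_doc_indices)

# Concatenate embeddings (keyword arguments, because the positional order of tf.concat changed in TensorFlow 1.0)
log_final_embed = tf.concat(axis=1, values=[log_embed, tf.squeeze(log_doc_embed)])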

3.5 Build the training graph for the logistic regression model

# Define Logistic placeholders
log_x_inputs = tf.placeholder(tf.int32, shape=[None, max_words + 1])  # plus 1 for doc index
log_y_target = tf.placeholder(tf.int32, shape=[None, 1])

# Define model:
# Create variables for logistic regression
A = tf.Variable(tf.random_normal(shape=[concatenated_size,1]))
b = tf.Variable(tf.random_normal(shape=[1,1]))

# Declare logistic model (sigmoid in loss function)
model_output = tf.add(tf.matmul(log_final_embed, A), b)

# Declare loss function (Cross Entropy loss); TensorFlow 1.x requires keyword arguments here
logistic_loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(
    logits=model_output, labels=tf.cast(log_y_target, tf.float32)))

# Actual Prediction
prediction = tf.round(tf.sigmoid(model_output))
predictions_correct = tf.cast(tf.equal(prediction, tf.cast(log_y_target, tf.float32)), tf.float32)
accuracy = tf.reduce_mean(predictions_correct)

# Declare optimizer (only A and b are trained; the embeddings stay fixed)
logistic_opt = tf.train.GradientDescentOptimizer(learning_rate=0.01)
logistic_train_step = logistic_opt.minimize(logistic_loss, var_list=[A, b])
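The loss and accuracy defined above can be reproduced in NumPy to make the formulas concrete. This sketch uses the numerically stable form max(x, 0) - x*z + log(1 + exp(-|x|)) for logits x and labels z, which is algebraically equal to the usual cross-entropy; the sample logits and labels are made up for illustration.

```python
import numpy as np


def sigmoid_cross_entropy(logits, labels):
    """Element-wise cross-entropy between sigmoid(logits) and binary labels."""
    # Stable form: avoids overflow in exp() for large positive or negative logits
    return np.maximum(logits, 0) - logits * labels + np.log1p(np.exp(-np.abs(logits)))


def binary_accuracy(logits, labels):
    """Fraction of examples where round(sigmoid(logit)) equals the label."""
    predictions = np.round(1.0 / (1.0 + np.exp(-logits)))
    return np.mean(predictions == labels)


sample_logits = np.array([2.0, -1.0, 0.5])
sample_labels = np.array([1.0, 0.0, 0.0])

stable_loss = sigmoid_cross_entropy(sample_logits, sample_labels)

# Naive formula for comparison: -(z*log(p) + (1-z)*log(1-p))
p = 1.0 / (1.0 + np.exp(-sample_logits))
naive_loss = -(sample_labels * np.log(p) + (1 - sample_labels) * np.log(1 - p))

print(np.allclose(stable_loss, naive_loss))
print(binary_accuracy(sample_logits, sample_labels))
```

The third example has a positive logit but label 0, so it is the one misclassified and the accuracy comes out as two thirds.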

3.6 Training

# Initialize only the logistic regression variables; running a global
# initializer again here would reset the embeddings trained in step 2
init = tf.variables_initializer([A, b])
sess.run(init)

# Start Logistic Regression
print('Starting Logistic Doc2Vec Model Training')
train_loss = []
test_loss = []
train_acc = []
test_acc = []
i_data = []
for i in range(10000):
    rand_index = np.random.choice(text_data_train.shape[0], size=logistic_batch_size)
    rand_x = text_data_train[rand_index]
    # Append review index at the end of text data
    rand_x_doc_indices = train_indices[rand_index]
    rand_x = np.hstack((rand_x, np.transpose([rand_x_doc_indices])))
    rand_y = np.transpose([target_train[rand_index]])

    feed_dict = {log_x_inputs : rand_x, log_y_target : rand_y}
    sess.run(logistic_train_step, feed_dict=feed_dict)

    # Only record loss and accuracy every 100 generations
    if (i+1)%100==0:
        rand_index_test = np.random.choice(text_data_test.shape[0], size=logistic_batch_size)
        rand_x_test = text_data_test[rand_index_test]
        # Append review index at the end of text data
        rand_x_doc_indices_test = test_indices[rand_index_test]
        rand_x_test = np.hstack((rand_x_test, np.transpose([rand_x_doc_indices_test])))
        rand_y_test = np.transpose([target_test[rand_index_test]])

        test_feed_dict = {log_x_inputs: rand_x_test, log_y_target: rand_y_test}

        i_data.append(i+1)

        train_loss_temp = sess.run(logistic_loss, feed_dict=feed_dict)
        train_loss.append(train_loss_temp)

        test_loss_temp = sess.run(logistic_loss, feed_dict=test_feed_dict)
        test_loss.append(test_loss_temp)

        train_acc_temp = sess.run(accuracy, feed_dict=feed_dict)
        train_acc.append(train_acc_temp)

        test_acc_temp = sess.run(accuracy, feed_dict=test_feed_dict)
        test_acc.append(test_acc_temp)

    if (i+1)%500==0:
        acc_and_loss = [i+1, train_loss_temp, test_loss_temp, train_acc_temp, test_acc_temp]
        acc_and_loss = [np.round(x,2) for x in acc_and_loss]
        print('Generation # {}. Train Loss (Test Loss): {:.2f} ({:.2f}). Train Acc (Test Acc): {:.2f} ({:.2f})'.format(*acc_and_loss))
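The step in the loop that appends the review index is worth isolating, because it relies on train_indices being aligned with the rows of text_data_train. The toy arrays below are assumptions for illustration; only the np.hstack and np.transpose pattern is taken from the loop above.

```python
import numpy as np

# Three padded reviews with max_words = 3 (illustrative values)
text_data_train = np.array([[4, 7, 0],
                            [9, 2, 5],
                            [1, 0, 0]])

# Hypothetical original document indices of those three training reviews
train_indices = np.array([10, 42, 57])

# Pick rows 2 and 0 as a mini-batch
rand_index = np.array([2, 0])
rand_x = text_data_train[rand_index]

# Row k of the training data belongs to document train_indices[k]
rand_x_doc_indices = train_indices[rand_index]

# np.transpose([v]) turns a length-n vector into an (n, 1) column
rand_x = np.hstack((rand_x, np.transpose([rand_x_doc_indices])))

print(rand_x.shape)  # (2, 4)
```

The resulting width is max_words + 1, matching the log_x_inputs placeholder, and the last column selects the row of doc_embeddings that was learned for that exact review.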

Results:

For the visualization code, see: Using TensorFlow for Text Processing: Word2Vec Prediction
