6.824 Lab 3: Fault-tolerant Key/Value Service 3A


Due Part A: Mar 13 23:59

Due Part B: Apr 10 23:59

Introduction

In this lab you will build a fault-tolerant key/value storage service using your Raft library from lab 2. Your key/value service will be a replicated state machine, consisting of several key/value servers that use Raft to maintain replication. Your key/value service should continue to process client requests as long as a majority of the servers are alive and can communicate, in spite of other failures or network partitions.

The service supports three operations: Put(key, value), Append(key, arg), and Get(key). It maintains a simple database of key/value pairs. Put() replaces the value for a particular key in the database, Append(key, arg) appends arg to key's value, and Get() fetches the current value for a key. An Append to a non-existent key should act like Put. Each client talks to the service through a Clerk with Put/Append/Get methods. A Clerk manages RPC interactions with the servers.
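The three operations can be modeled with a plain Go map. The sketch below illustrates a single node only, not the replicated service, and the `store` type and its methods are hypothetical names. It also shows why an Append to a non-existent key acts like Put: reading a missing key from a Go map yields the empty string.

```go
package main

import "fmt"

// store is a hypothetical single-node key/value state machine used only to
// illustrate the Put/Append/Get semantics.
type store struct {
	db map[string]string
}

func newStore() *store {
	return &store{db: make(map[string]string)}
}

// Put replaces the value for key.
func (s *store) Put(key, value string) {
	s.db[key] = value
}

// Append appends arg to key's value. A missing key reads as "" (the map's
// zero value), so appending to a non-existent key behaves like Put.
func (s *store) Append(key, arg string) {
	s.db[key] += arg
}

// Get returns the current value, or "" if the key does not exist.
func (s *store) Get(key string) string {
	return s.db[key]
}

func main() {
	s := newStore()
	s.Append("a", "x") // same effect as Put("a", "x")
	s.Append("a", "y")
	s.Put("b", "1")
	fmt.Println(s.Get("a"), s.Get("b"), s.Get("missing") == "") // xy 1 true
}
```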

Your service must provide strong consistency to application calls to the Clerk Get/Put/Append methods. Here's what we mean by strong consistency. If called one at a time, the Get/Put/Append methods should act as if the system had only one copy of its state, and each call should observe the modifications to the state implied by the preceding sequence of calls. For concurrent calls, the return values and final state must be the same as if the operations had executed one at a time in some order. Calls are concurrent if they overlap in time, for example if client X calls Clerk.Put(), then client Y calls Clerk.Append(), and then client X's call returns. Furthermore, a call must observe the effects of all calls that have completed before the call starts (so we are technically asking for linearizability).
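To make the definition concrete, the sketch below brute-forces the check for a tiny single-key history. It assumes that each completed call is recorded with a call time, a return time, and (for Get) the value it returned. It then searches for one total order that respects real time (if A returns before B is called, A must come first) and reproduces every observed Get result. The `op` type, `linearizable`, and `canGoNext` are hypothetical names. The search is exponential, so it only suits a handful of operations. The example history is the concurrent Put/Append case described above.

```go
package main

import "fmt"

// op is one completed Clerk call on a single key (hypothetical record format).
type op struct {
	kind  string // "Put", "Append", or "Get"
	arg   string // value passed to Put/Append
	out   string // value returned by Get
	start int    // time the call was made
	end   int    // time the call returned
}

// linearizable reports whether some total order of ops respects real-time
// order and makes every Get return the value it actually observed.
// Brute force: exponential in len(ops), fine only for tiny histories.
func linearizable(ops []op) bool {
	used := make([]bool, len(ops))
	var search func(state string, done int) bool
	search = func(state string, done int) bool {
		if done == len(ops) {
			return true
		}
		for i := range ops {
			if used[i] || !canGoNext(ops, used, i) {
				continue
			}
			next := state
			switch ops[i].kind {
			case "Put":
				next = ops[i].arg
			case "Append":
				next = state + ops[i].arg
			case "Get":
				if ops[i].out != state {
					continue // this Get cannot be placed here
				}
			}
			used[i] = true
			if search(next, done+1) {
				return true
			}
			used[i] = false // backtrack
		}
		return false
	}
	return search("", 0) // the key starts out missing, i.e. ""
}

// canGoNext reports whether ops[i] may be placed next: no other pending op
// returned before ops[i] was called.
func canGoNext(ops []op, used []bool, i int) bool {
	for j := range ops {
		if j != i && !used[j] && ops[j].end < ops[i].start {
			return false
		}
	}
	return true
}

func main() {
	h := []op{
		{kind: "Put", arg: "1", start: 0, end: 10},   // client X
		{kind: "Append", arg: "2", start: 2, end: 5}, // client Y, overlaps X
		{kind: "Get", out: "12", start: 11, end: 12}, // after both returned
	}
	fmt.Println(linearizable(h)) // true: Put, Append, Get
	h[2].out = "2"
	fmt.Println(linearizable(h)) // false: no valid order yields "2"
}
```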

Strong consistency is convenient for applications because it means that, informally, all clients see the same state and they all see the latest state. Providing strong consistency is relatively easy for a single server. It is harder if the service is replicated, since all servers must choose the same execution order for concurrent requests, and must avoid replying to clients using state that isn't up to date.

This lab has two parts. In part A, you will implement the service without worrying that the Raft log can grow without bound. In part B, you will implement snapshots (Section 7 in the paper), which will allow Raft to garbage collect old log entries. Please submit each part by the respective deadline.

- This lab doesn't require you to write much code, but you will most likely spend a substantial amount of time thinking and staring at debugging logs to figure out why your implementation doesn't work. Debugging will be more challenging than in the Raft lab because there are more components that run asynchronously with respect to each other. Start early.

- You should reread the extended Raft paper, in particular Sections 7 and 8. For a wider perspective, have a look at Chubby, Raft Made Live, Spanner, Zookeeper, Harp, Viewstamped Replication, and Bolosky et al.

- You are allowed to add fields to the Raft ApplyMsg, and to add fields to Raft RPCs such as AppendEntries. But be sure that your code continues to pass the Lab 2 tests.

Getting Started

Do a git pull to get the latest lab software.

We supply you with skeleton code and tests in src/kvraft. You will need to modify kvraft/client.go, kvraft/server.go, and perhaps kvraft/common.go.

To get up and running, execute the following commands:

```shell
$ cd ~/6.824
$ git pull
...
$ cd src/kvraft
$ GOPATH=~/6.824
$ export GOPATH
$ go test
...
$
```

Part A: Key/value service without log compaction

Each of your key/value servers ("kvservers") will have an associated Raft peer. Clerks send Put(), Append(), and Get() RPCs to the kvserver whose associated Raft is the leader. The kvserver code submits the Put/Append/Get operation to Raft, so that the Raft log holds a sequence of Put/Append/Get operations. All of the kvservers execute operations from the Raft log in order, applying the operations to their key/value databases; the intent is for the servers to maintain identical replicas of the key/value database.
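Replication rests on deterministic replay. If every kvserver applies the same committed operations in the same order, the databases end up identical. In the minimal sketch below, Raft is replaced by a pre-agreed slice, and `logEntry` and `apply` are hypothetical names. The second print shows that order matters. That is why all servers must follow the Raft log order rather than the order in which requests happened to arrive.

```go
package main

import (
	"fmt"
	"reflect"
)

// logEntry is a hypothetical committed Raft log entry carrying one operation.
type logEntry struct {
	Name  string // "Put", "Append", or "Get"
	Key   string
	Value string
}

// apply executes committed entries in log order against a fresh database.
// Get entries do not modify state.
func apply(entries []logEntry) map[string]string {
	db := make(map[string]string)
	for _, e := range entries {
		switch e.Name {
		case "Put":
			db[e.Key] = e.Value
		case "Append":
			db[e.Key] += e.Value
		}
	}
	return db
}

func main() {
	committed := []logEntry{
		{"Put", "x", "a"},
		{"Append", "x", "b"},
		{"Get", "x", ""},
		{"Put", "y", "1"},
	}
	// Three replicas replay the same log and reach the same state.
	r1, r2, r3 := apply(committed), apply(committed), apply(committed)
	fmt.Println(reflect.DeepEqual(r1, r2) && reflect.DeepEqual(r2, r3), r1["x"]) // true ab

	// The same entries in a different order can give a different result.
	reordered := []logEntry{committed[1], committed[0], committed[2], committed[3]}
	fmt.Println(apply(reordered)["x"]) // a
}
```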

A Clerk sometimes doesn't know which kvserver is the Raft leader. If the Clerk sends an RPC to the wrong kvserver, or if it cannot reach the kvserver, the Clerk should retry by sending to a different kvserver. If the key/value service commits the operation to its Raft log (and hence applies the operation to the key/value state machine), the leader reports the result to the Clerk by responding to its RPC. If the operation failed to commit (for example, if the leader was replaced), the server reports an error, and the Clerk retries with a different server.
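The Clerk's retry behavior can be sketched as a loop. It starts at the last known leader and moves to the next server whenever the call fails or the reply says wrong leader. To keep the sketch self-contained, the RPC is replaced by a hypothetical `callFn` value; in the lab it would be `ck.servers[i].Call("KVServer.Get", &args, &reply)`. Remembering `leaderId` between calls means later requests usually reach the leader on the first try.

```go
package main

import "fmt"

// callFn stands in for one RPC attempt (hypothetical signature). ok == false
// models a lost request or reply; wrongLeader models a non-leader reply.
type callFn func(server int, key string) (ok bool, wrongLeader bool, value string)

type clerk struct {
	nservers int
	leaderId int // server that answered as leader last time
	call     callFn
}

// get retries forever: it starts at the remembered leader and moves on to the
// next server after each failed or wrong-leader attempt.
func (ck *clerk) get(key string) string {
	for {
		ok, wrongLeader, value := ck.call(ck.leaderId, key)
		if ok && !wrongLeader {
			return value // leaderId is kept for the next request
		}
		ck.leaderId = (ck.leaderId + 1) % ck.nservers
	}
}

func main() {
	attempts := 0
	ck := &clerk{nservers: 3, call: func(server int, key string) (bool, bool, string) {
		attempts++
		if server != 2 {
			return true, true, "" // reachable, but not the leader
		}
		return true, false, "v"
	}}
	v := ck.get("k")
	fmt.Println(v, ck.leaderId, attempts) // v 2 3
	v = ck.get("k")
	fmt.Println(v, attempts) // v 4: the remembered leader answers first
}
```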

Your first task is to implement a solution that works when there are no dropped messages and no failed servers.

You'll need to add RPC-sending code to the Clerk Put/Append/Get methods in client.go, and implement PutAppend() and Get() RPC handlers in server.go. These handlers should enter an Op in the Raft log using Start(); you should fill in the Op struct definition in server.go so that it describes a Put/Append/Get operation. Each server should execute Op commands as Raft commits them, i.e. as they appear on the applyCh. An RPC handler should notice when Raft commits its Op, and then reply to the RPC.
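One way to let a handler notice when Raft commits its Op is a per-index channel. The handler records the index returned by `Start()`, and the goroutine reading applyCh sends whatever op it applied at that index to that channel. The sketch assumes a fake, always-leader Raft that commits immediately, so `fakeRaft`, `server`, `submit`, and the 500 ms `waitTimeout` are all hypothetical. The handler holds the server lock across `Start()` so the applier cannot apply the entry before the waiter is registered. That is only safe because the fake `Start()` never waits for the applier. With a real Raft it is exactly the kind of kvserver/Raft deadlock the hints warn about.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// waitTimeout is an assumed value chosen only for this sketch.
const waitTimeout = 500 * time.Millisecond

// Op identifies one client request (fields mirror the Op struct in this post).
type Op struct {
	Name, Key, Value string
	ClientId         int64
	RequestId        int
}

// ApplyMsg is a cut-down stand-in for raft.ApplyMsg.
type ApplyMsg struct {
	Command      Op
	CommandIndex int
}

// fakeRaft (hypothetical) commits every command at once, in order; applyCh is buffered.
type fakeRaft struct {
	mu      sync.Mutex
	last    int
	applyCh chan ApplyMsg
}

func (rf *fakeRaft) Start(cmd Op) (index int, isLeader bool) {
	rf.mu.Lock()
	defer rf.mu.Unlock()
	rf.last++
	rf.applyCh <- ApplyMsg{Command: cmd, CommandIndex: rf.last}
	return rf.last, true
}

type server struct {
	mu      sync.Mutex
	rf      *fakeRaft
	db      map[string]string
	waiters map[int]chan Op // log index -> channel of the handler waiting on it
}

// applier keeps reading applyCh, applies each op, and wakes its waiter.
func (kv *server) applier() {
	for msg := range kv.rf.applyCh {
		kv.mu.Lock()
		op := msg.Command
		switch op.Name {
		case "Put":
			kv.db[op.Key] = op.Value
		case "Append":
			kv.db[op.Key] += op.Value
		}
		if ch, ok := kv.waiters[msg.CommandIndex]; ok {
			ch <- op // capacity 1 and one send per index, so this never blocks
			delete(kv.waiters, msg.CommandIndex)
		}
		kv.mu.Unlock()
	}
}

// submit enters op into the log and waits for the entry at its index. It
// returns false if another op took that index or on timeout (Clerk retries).
func (kv *server) submit(op Op) bool {
	kv.mu.Lock() // held across Start so the applier cannot skip our waiter
	index, isLeader := kv.rf.Start(op)
	if !isLeader {
		kv.mu.Unlock()
		return false
	}
	ch := make(chan Op, 1)
	kv.waiters[index] = ch
	kv.mu.Unlock()
	select {
	case got := <-ch:
		return got.ClientId == op.ClientId && got.RequestId == op.RequestId
	case <-time.After(waitTimeout):
		kv.mu.Lock()
		delete(kv.waiters, index)
		kv.mu.Unlock()
		return false
	}
}

func main() {
	kv := &server{
		rf:      &fakeRaft{applyCh: make(chan ApplyMsg, 64)},
		db:      make(map[string]string),
		waiters: make(map[int]chan Op),
	}
	go kv.applier()
	ok := kv.submit(Op{Name: "Put", Key: "k", Value: "v", ClientId: 1, RequestId: 1})
	kv.mu.Lock()
	fmt.Println(ok, kv.db["k"]) // true v
	kv.mu.Unlock()
}
```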

You have completed this task when you reliably pass the first test in the test suite: "One client". You may also find that you can pass the "concurrent clients" test, depending on how sophisticated your implementation is.

Your kvservers should not directly communicate; they should only interact with each other through the Raft log.

- After calling Start(), your kvservers will need to wait for Raft to complete agreement. Commands that have been agreed upon arrive on the applyCh. You should think carefully about how to arrange your code so that it will keep reading applyCh, while PutAppend() and Get() handlers submit commands to the Raft log using Start(). It is easy to achieve deadlock between the kvserver and its Raft library.

- Your solution needs to handle the case in which a leader has called Start() for a Clerk's RPC, but loses its leadership before the request is committed to the log. In this case you should arrange for the Clerk to re-send the request to other servers until it finds the new leader. One way to do this is for the server to detect that it has lost leadership, by noticing that a different request has appeared at the index returned by Start(), or that Raft's term has changed. If the ex-leader is partitioned by itself, it won't know about new leaders; but any client in the same partition won't be able to talk to a new leader either, so it's OK in this case for the server and client to wait indefinitely until the partition heals.

- You will probably have to modify your Clerk to remember which server turned out to be the leader for the last RPC, and send the next RPC to that server first. This will avoid wasting time searching for the leader on every RPC, which may help you pass some of the tests quickly enough.

- A kvserver should not complete a Get() RPC if it is not part of a majority (so that it does not serve stale data). A simple solution is to enter every Get() (as well as each Put() and Append()) in the Raft log. You don't have to implement the optimization for read-only operations that is described in Section 8.

- It's best to add locking from the start because the need to avoid deadlocks sometimes affects overall code design. Check that your code is race-free using go test -race.
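The leadership-loss hint above can be sketched as follows. The handler waits for whatever request is committed at its index. It also polls Raft's state and gives up as soon as the term differs from the one `Start()` returned, or as soon as the server is no longer leader. `reqID`, `stateFn`, `waitCommit`, and the 10 ms polling interval are assumptions for this sketch. In the lab, the term and leader flag would come from Raft's `GetState()`.

```go
package main

import (
	"fmt"
	"time"
)

// reqID identifies a client request, like ClientId/RequestId in the post's Op.
type reqID struct {
	ClientId  int64
	RequestId int
}

// stateFn stands in for raft's GetState() (hypothetical in this sketch).
type stateFn func() (term int, isLeader bool)

// waitCommit waits for the request committed at the index returned by
// Start(). It returns true only if that entry is our own request. It returns
// false as soon as Raft's term differs from startTerm or this server stops
// being leader; false just means "retry elsewhere", and duplicate detection
// makes that retry safe.
func waitCommit(applied <-chan reqID, me reqID, startTerm int, state stateFn) bool {
	ticker := time.NewTicker(10 * time.Millisecond)
	defer ticker.Stop()
	for {
		select {
		case got := <-applied:
			return got == me // a different request means our entry was overwritten
		case <-ticker.C:
			if term, isLeader := state(); term != startTerm || !isLeader {
				return false
			}
		}
	}
}

func main() {
	me := reqID{ClientId: 7, RequestId: 1}
	leader := func() (int, bool) { return 3, true }

	ch := make(chan reqID, 1)
	ch <- me
	fmt.Println(waitCommit(ch, me, 3, leader)) // true: our op committed

	ch <- reqID{ClientId: 9, RequestId: 5}
	fmt.Println(waitCommit(ch, me, 3, leader)) // false: another op took the index

	moved := func() (int, bool) { return 4, false }
	fmt.Println(waitCommit(make(chan reqID), me, 3, moved)) // false: term changed
}
```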

In the face of unreliable connections and server failures, a Clerk may send an RPC multiple times until it finds a kvserver that replies positively. If a leader fails just after committing an entry to the Raft log, the Clerk may not receive a reply, and thus may re-send the request to another leader. Each call to Clerk.Put() or Clerk.Append() should result in just a single execution, so you will have to ensure that the re-send doesn't result in the servers executing the request twice.

Add code to cope with duplicate Clerk requests, including situations where the Clerk sends a request to a kvserver leader in one term, times out waiting for a reply, and re-sends the request to a new leader in another term. The request should always execute just once. Your code should pass the go test -run 3A tests.

- You will need to uniquely identify client operations to ensure that the key/value service executes each one just once.

- Your scheme for duplicate detection should free server memory quickly, for example by having each RPC imply that the client has seen the reply for its previous RPC. It's OK to assume that a client will make only one call into a Clerk at a time.
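Here is a minimal duplicate table, assuming each Clerk numbers its requests 1, 2, 3, … and has at most one call outstanding. A new request number implies the client has seen the previous reply, so one entry per client is enough. That entry is overwritten on every new request, which keeps server memory proportional to the number of clients. The table is consulted while applying committed log entries, so every replica makes the same decision. `kvState`, `lastReply`, and `applyOnce` are hypothetical names. The table also stores the last result, so a retried Get can be answered with the value it originally read.

```go
package main

import "fmt"

// lastReply is the only per-client state kept for duplicate detection.
type lastReply struct {
	seq   int    // highest request number applied for this client
	value string // result of that request, e.g. for a retried Get
}

type kvState struct {
	db   map[string]string
	last map[int64]lastReply // one entry per client, overwritten each time
}

// applyOnce runs for every committed log entry, in log order, on every
// replica, so all replicas make the same duplicate decisions.
func (s *kvState) applyOnce(clientId int64, seq int, name, key, value string) string {
	if r, ok := s.last[clientId]; ok && seq <= r.seq {
		// Already executed. With one outstanding call per client, seq < r.seq
		// means the client already has that reply and will ignore this one.
		return r.value
	}
	switch name {
	case "Put":
		s.db[key] = value
	case "Append":
		s.db[key] += value
	}
	result := s.db[key]
	s.last[clientId] = lastReply{seq: seq, value: result}
	return result
}

func main() {
	s := &kvState{db: map[string]string{}, last: map[int64]lastReply{}}
	s.applyOnce(1, 1, "Append", "k", "x")
	s.applyOnce(1, 1, "Append", "k", "x") // re-sent after a lost reply: not executed
	s.applyOnce(1, 2, "Append", "k", "y")
	fmt.Println(s.db["k"], len(s.last)) // xy 1
}
```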

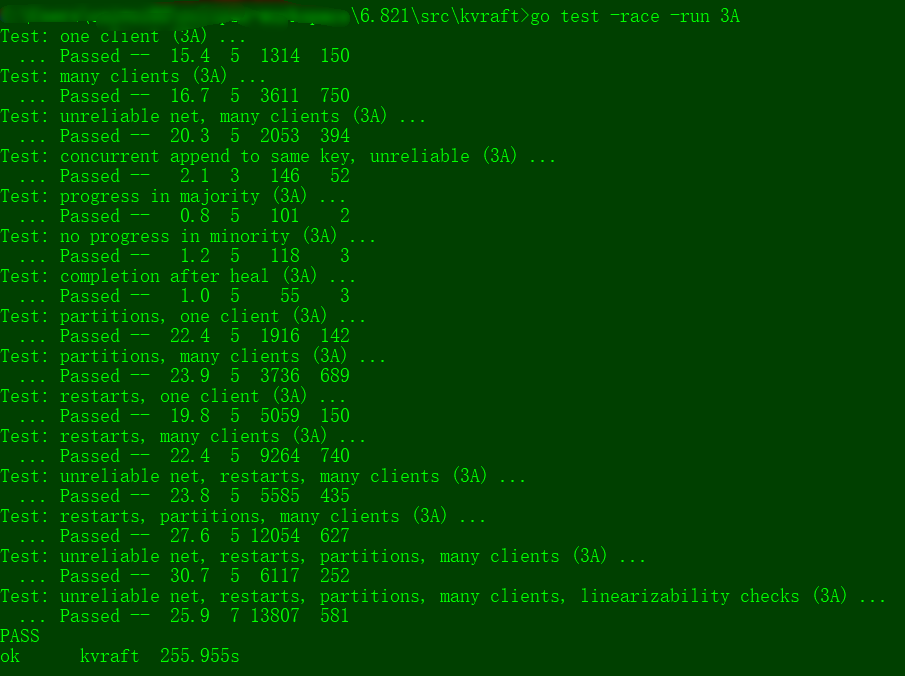

Your code should now pass the Lab 3A tests, like this:

```
$ go test -run 3A
Test: one client (3A) ...
  ... Passed -- 15.1 5 12882 2587
Test: many clients (3A) ...
  ... Passed -- 15.3 5 9678 3666
Test: unreliable net, many clients (3A) ...
  ... Passed -- 17.1 5 4306 1002
Test: concurrent append to same key, unreliable (3A) ...
  ... Passed -- 0.8 3 128 52
Test: progress in majority (3A) ...
  ... Passed -- 0.9 5 58 2
Test: no progress in minority (3A) ...
  ... Passed -- 1.0 5 54 3
Test: completion after heal (3A) ...
  ... Passed -- 1.0 5 59 3
Test: partitions, one client (3A) ...
  ... Passed -- 22.6 5 10576 2548
Test: partitions, many clients (3A) ...
  ... Passed -- 22.4 5 8404 3291
Test: restarts, one client (3A) ...
  ... Passed -- 19.7 5 13978 2821
Test: restarts, many clients (3A) ...
  ... Passed -- 19.2 5 10498 4027
Test: unreliable net, restarts, many clients (3A) ...
  ... Passed -- 20.5 5 4618 997
Test: restarts, partitions, many clients (3A) ...
  ... Passed -- 26.2 5 9816 3907
Test: unreliable net, restarts, partitions, many clients (3A) ...
  ... Passed -- 29.0 5 3641 708
Test: unreliable net, restarts, partitions, many clients, linearizability checks (3A) ...
  ... Passed -- 26.5 7 10199 997
PASS
ok  kvraft  237.352s
```

The numbers after each Passed are real time in seconds, number of peers, number of RPCs sent (including client RPCs), and number of key/value operations executed (Clerk Get/Put/Append calls).
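As a worked example, the "one client" line `... Passed -- 15.1 5 12882 2587` reads as 15.1 seconds of real time, 5 peers, 12882 RPCs, and 2587 Clerk operations. The helper below splits such a line into those four fields. It assumes exactly the format shown in the sample output, and `testStats` and `parsePassed` are hypothetical names. It uses `strings.Cut`, which requires Go 1.18 or later.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// testStats holds the four numbers printed after "Passed --".
type testStats struct {
	Seconds float64 // real time
	Peers   int     // number of peers
	RPCs    int     // RPCs sent, including client RPCs
	Ops     int     // Clerk Get/Put/Append calls executed
}

// parsePassed parses a line such as "... Passed -- 15.1 5 12882 2587".
func parsePassed(line string) (testStats, error) {
	var st testStats
	_, rest, found := strings.Cut(line, "Passed --")
	if !found {
		return st, fmt.Errorf("not a Passed line: %q", line)
	}
	f := strings.Fields(rest)
	if len(f) != 4 {
		return st, fmt.Errorf("want 4 fields, got %d", len(f))
	}
	var err error
	if st.Seconds, err = strconv.ParseFloat(f[0], 64); err != nil {
		return st, err
	}
	for i, p := range []*int{&st.Peers, &st.RPCs, &st.Ops} {
		if *p, err = strconv.Atoi(f[i+1]); err != nil {
			return st, err
		}
	}
	return st, nil
}

func main() {
	st, err := parsePassed("  ... Passed -- 15.1 5 12882 2587")
	fmt.Println(st, err) // {15.1 5 12882 2587} <nil>
}
```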

Handin procedure for lab 3A

Before submitting, please run the tests for part A one final time. Some bugs may not appear on every run, so run the tests multiple times.

Submit your code via the class's submission website, located at https://6824.scripts.mit.edu/2020/handin.py/.

You may use your MIT Certificate or request an API key via email to log in for the first time. Once you are logged in, your API key (XXX) is displayed; you can use it to upload the lab from the console as follows:

```shell
$ cd "$GOPATH"
$ echo "XXX" > api.key
$ make lab3a
```

Check the submission website to make sure it sees your submission!

You may submit multiple times. We will use the timestamp of your last submission for the purpose of calculating late days. Your grade is determined by the score your solution reliably achieves when we run the tester on our test machines.

My homework code:

server.go

```go
package raftkv

import (
	"labgob"
	"labrpc"
	"log"
	"raft"
	"sync"
	"time"
)

const Debug = 0

func DPrintf(format string, a ...interface{}) (n int, err error) {
	if Debug > 0 {
		log.Printf(format, a...)
	}
	return
}

type Op struct {
	// Your definitions here.
	// Field names must start with capital letters,
	// otherwise RPC will break.
	Key       string
	Value     string
	Name      string // "Get", "Put" or "Append"
	ClientId  int64
	RequestId int
}

type KVServer struct {
	mu      sync.Mutex
	me      int
	rf      *raft.Raft
	applyCh chan raft.ApplyMsg

	maxraftstate int // snapshot if log grows this big

	// Your definitions here.
	db                   map[string]string         // 3A: the key/value state machine
	dispatcher           map[int]chan Notification // 3A: log index -> waiting RPC handler
	lastAppliedRequestId map[int64]int             // 3A: client id -> last applied request id
}

// 3A: sent by the apply loop to the handler waiting on a log index,
// identifying which request was actually committed at that index.
type Notification struct {
	ClientId  int64
	RequestId int
}

func (kv *KVServer) Get(args *GetArgs, reply *GetReply) {
	// Your code here.
	op := Op{
		Key:       args.Key,
		Name:      "Get",
		ClientId:  args.ClientId,
		RequestId: args.RequestId,
	}
	// wait for the op to be applied,
	// or for a leader change (the log entry is overwritten and never applied).
	// 500 ms is an assumed timeout; the original number did not survive.
	reply.WrongLeader = kv.waitApplying(op, 500*time.Millisecond)
	if reply.WrongLeader == false {
		kv.mu.Lock()
		value, ok := kv.db[args.Key]
		kv.mu.Unlock()
		if ok {
			reply.Value = value
			return
		}
		// not found
		reply.Err = ErrNoKey
	}
}

func (kv *KVServer) PutAppend(args *PutAppendArgs, reply *PutAppendReply) {
	// Your code here.
	op := Op{
		Key:       args.Key,
		Value:     args.Value,
		Name:      args.Op,
		ClientId:  args.ClientId,
		RequestId: args.RequestId,
	}
	// wait for the op to be applied,
	// or for a leader change (the log entry is overwritten and never applied).
	// Same assumed 500 ms timeout as in Get.
	reply.WrongLeader = kv.waitApplying(op, 500*time.Millisecond)
}

//
// the tester calls Kill() when a KVServer instance won't
// be needed again. you are not required to do anything
// in Kill(), but it might be convenient to (for example)
// turn off debug output from this instance.
//
func (kv *KVServer) Kill() {
	kv.rf.Kill()
	// Your code here, if desired.
}

//
// servers[] contains the ports of the set of
// servers that will cooperate via Raft to
// form the fault-tolerant key/value service.
// me is the index of the current server in servers[].
// the k/v server should store snapshots through the underlying Raft
// implementation, which should call persister.SaveStateAndSnapshot() to
// atomically save the Raft state along with the snapshot.
// the k/v server should snapshot when Raft's saved state exceeds maxraftstate bytes,
// in order to allow Raft to garbage-collect its log. if maxraftstate is -1,
// you don't need to snapshot.
// StartKVServer() must return quickly, so it should start goroutines
// for any long-running work.
//
func StartKVServer(servers []*labrpc.ClientEnd, me int, persister *raft.Persister, maxraftstate int) *KVServer {
	// call labgob.Register on structures you want
	// Go's RPC library to marshall/unmarshall.
	labgob.Register(Op{})

	kv := new(KVServer)
	kv.me = me
	kv.maxraftstate = maxraftstate

	// You may need initialization code here.
	kv.db = make(map[string]string)
	kv.dispatcher = make(map[int]chan Notification)
	kv.lastAppliedRequestId = make(map[int64]int)

	kv.applyCh = make(chan raft.ApplyMsg)
	kv.rf = raft.Make(servers, me, persister, kv.applyCh)

	// You may need initialization code here.
	go func() {
		for msg := range kv.applyCh {
			if msg.CommandValid == false {
				continue
			}
			op := msg.Command.(Op)
			DPrintf("kvserver %d start applying command %s at index %d, request id %d, client id %d",
				kv.me, op.Name, msg.CommandIndex, op.RequestId, op.ClientId)
			kv.mu.Lock()
			// Execute each request at most once, but still notify the waiting
			// handler below, so a committed duplicate is answered right away
			// instead of only after the handler's timeout.
			if !kv.isDuplicateRequest(op.ClientId, op.RequestId) {
				switch op.Name {
				case "Put":
					kv.db[op.Key] = op.Value
				case "Append":
					kv.db[op.Key] += op.Value
					// Get() does not need to modify db, skip
				}
				kv.lastAppliedRequestId[op.ClientId] = op.RequestId
			}
			if ch, ok := kv.dispatcher[msg.CommandIndex]; ok {
				notify := Notification{
					ClientId:  op.ClientId,
					RequestId: op.RequestId,
				}
				ch <- notify
			}
			kv.mu.Unlock()
			DPrintf("kvserver %d applied command %s at index %d, request id %d, client id %d",
				kv.me, op.Name, msg.CommandIndex, op.RequestId, op.ClientId)
		}
	}()

	return kv
}

// should be called with lock
func (kv *KVServer) isDuplicateRequest(clientId int64, requestId int) bool {
	appliedRequestId, ok := kv.lastAppliedRequestId[clientId]
	if ok == false || requestId > appliedRequestId {
		return false
	}
	return true
}

func (kv *KVServer) waitApplying(op Op, timeout time.Duration) bool {
	// return common part of GetReply and PutAppendReply
	// i.e., WrongLeader
	index, _, isLeader := kv.rf.Start(op)
	if isLeader == false {
		return true
	}

	var wrongLeader bool

	kv.mu.Lock()
	if _, ok := kv.dispatcher[index]; !ok {
		// capacity 1, so the apply loop's send (made while holding kv.mu)
		// does not block
		kv.dispatcher[index] = make(chan Notification, 1)
	}
	ch := kv.dispatcher[index]
	kv.mu.Unlock()

	select {
	case notify := <-ch:
		if notify.ClientId != op.ClientId || notify.RequestId != op.RequestId {
			// a different request was committed at this index: leader has changed
			wrongLeader = true
		} else {
			wrongLeader = false
		}
	case <-time.After(timeout):
		// the notification may have been missed (e.g. the op was applied
		// before ch was registered), so check whether it was applied anyway
		kv.mu.Lock()
		if kv.isDuplicateRequest(op.ClientId, op.RequestId) {
			wrongLeader = false
		} else {
			wrongLeader = true
		}
		kv.mu.Unlock()
	}
	DPrintf("kvserver %d got %s() RPC, insert op %+v at %d, reply WrongLeader = %v",
		kv.me, op.Name, op, index, wrongLeader)

	kv.mu.Lock()
	delete(kv.dispatcher, index)
	kv.mu.Unlock()
	return wrongLeader
}
```

client.go

```go
package raftkv

import (
	"crypto/rand"
	"labrpc"
	"math/big"
)

type Clerk struct {
	servers []*labrpc.ClientEnd
	// You will have to modify this struct.
	leaderId      int   // server that answered as leader last time
	clientId      int64 // random id identifying this Clerk
	lastRequestId int   // id of the last request that completed
}

func nrand() int64 {
	max := big.NewInt(int64(1) << 62)
	bigx, _ := rand.Int(rand.Reader, max)
	x := bigx.Int64()
	return x
}

func MakeClerk(servers []*labrpc.ClientEnd) *Clerk {
	ck := new(Clerk)
	ck.servers = servers
	// You'll have to add code here.
	ck.clientId = nrand() // in the real world the id could be a unique ip:port
	return ck
}

//
// fetch the current value for a key.
// returns "" if the key does not exist.
// keeps trying forever in the face of all other errors.
//
// you can send an RPC with code like this:
// ok := ck.servers[i].Call("KVServer.Get", &args, &reply)
//
// the types of args and reply (including whether they are pointers)
// must match the declared types of the RPC handler function's
// arguments. and reply must be passed as a pointer.
//
func (ck *Clerk) Get(key string) string {
	// You will have to modify this function.
	requestId := ck.lastRequestId + 1
	for {
		args := GetArgs{
			Key:       key,
			ClientId:  ck.clientId,
			RequestId: requestId,
		}
		var reply GetReply
		ok := ck.servers[ck.leaderId].Call("KVServer.Get", &args, &reply)
		if ok == false || reply.WrongLeader == true {
			ck.leaderId = (ck.leaderId + 1) % len(ck.servers)
			continue
		}
		// request is sent successfully
		ck.lastRequestId = requestId
		return reply.Value
	}
}

//
// shared by Put and Append.
//
// you can send an RPC with code like this:
// ok := ck.servers[i].Call("KVServer.PutAppend", &args, &reply)
//
// the types of args and reply (including whether they are pointers)
// must match the declared types of the RPC handler function's
// arguments. and reply must be passed as a pointer.
//
func (ck *Clerk) PutAppend(key string, value string, op string) {
	// You will have to modify this function.
	requestId := ck.lastRequestId + 1
	for {
		args := PutAppendArgs{
			Key:       key,
			Value:     value,
			Op:        op,
			ClientId:  ck.clientId,
			RequestId: requestId,
		}
		var reply PutAppendReply
		ok := ck.servers[ck.leaderId].Call("KVServer.PutAppend", &args, &reply)
		if ok == false || reply.WrongLeader == true {
			ck.leaderId = (ck.leaderId + 1) % len(ck.servers)
			continue
		}
		// request is sent successfully
		ck.lastRequestId = requestId
		return
	}
}

func (ck *Clerk) Put(key string, value string) {
	ck.PutAppend(key, value, "Put")
}

func (ck *Clerk) Append(key string, value string) {
	ck.PutAppend(key, value, "Append")
}
```

common.go

```go
package raftkv

const (
	OK       = "OK"
	ErrNoKey = "ErrNoKey"
)

type Err string

// Put or Append
type PutAppendArgs struct {
	Key   string
	Value string
	Op    string // "Put" or "Append"
	// You'll have to add definitions here.
	// Field names must start with capital letters,
	// otherwise RPC will break.
	ClientId  int64
	RequestId int
}

type PutAppendReply struct {
	WrongLeader bool
	Err         Err
}

type GetArgs struct {
	Key string
	// You'll have to add definitions here.
	ClientId  int64
	RequestId int
}

type GetReply struct {
	WrongLeader bool
	Err         Err
	Value       string
}
```

```shell
$ go test -run 3A
$ go test -race -run 3A
```
