Introducing Deep Reinforcement

The manuscript of Deep Reinforcement Learning is available now! It makes significant improvements to Deep Reinforcement Learning: An Overview, which has received 100+ citations, by extending its latest version more than one year ago from 70 pages to 150 pages.

It draws a big picture of deep reinforcement learning (RL) with many details. It covers contemporary work in historical contexts. It endeavours to answer the following questions: 1) Why deep? 2) What is the state of the art? and, 3) What are the issues, and potential solutions? It attempts to help those who want to get more familiar with deep RL, and to serve as a reference for people interested in this fascinating area, like professors, researchers, students, engineers, managers, investors, etc. Shortcomings and mistakes are inevitable; comments and criticisms are welcome.

The manuscript introduces AI, machine learning, and deep learning briefly, and provides a mini tutorial for reinforcement learning. The following figure illustrates relationships among these concepts, with major contents for machine learning and AI .Deep reinforcement learning is reinforcement learning integrated with deep learning, or deep artificial neural networks. A blog is dedicated to Resources for Deep Reinforcement Learning.

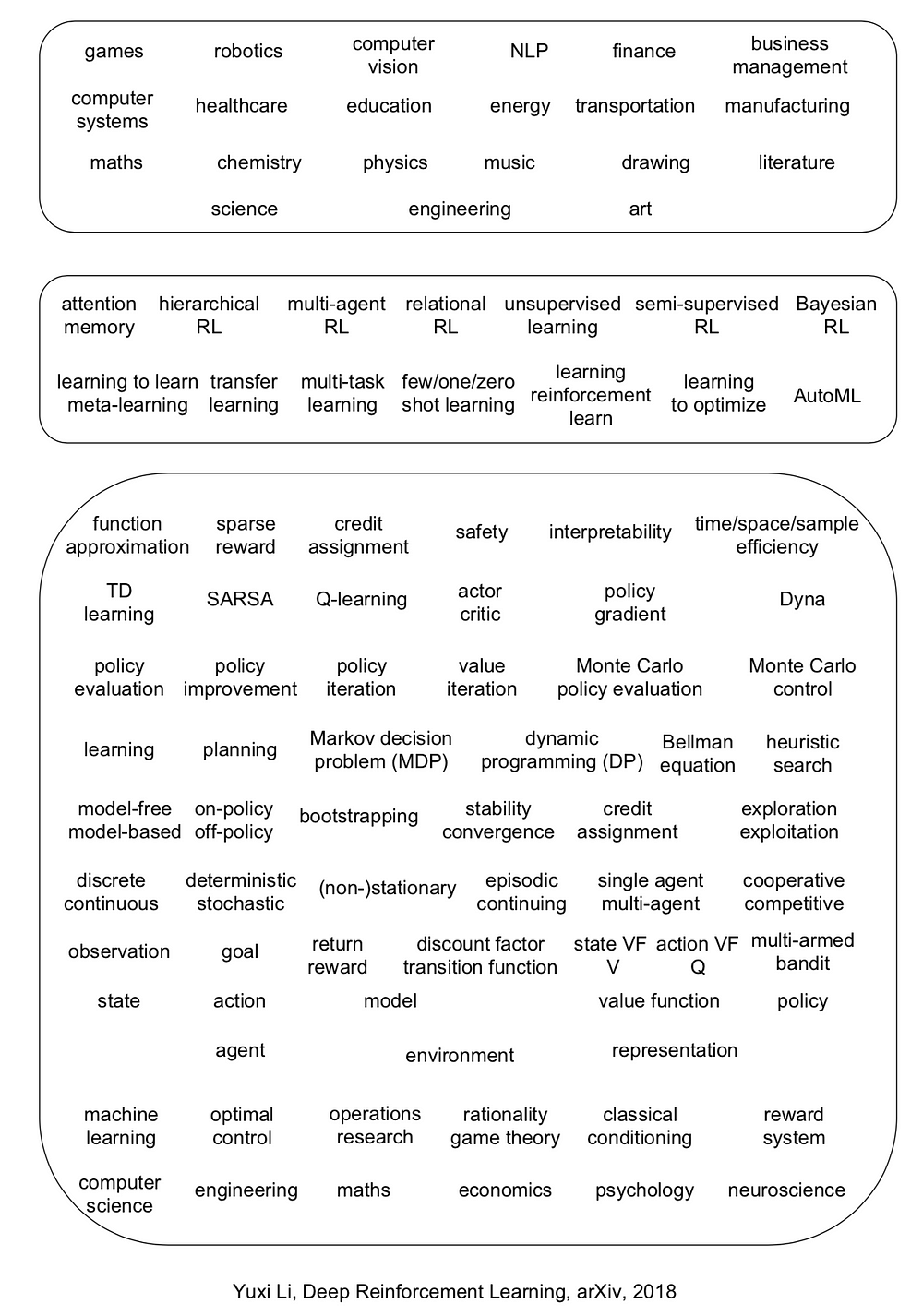

The manuscript covers six core elements: value function, policy, reward, model, exploration vs. exploitation, and representation; six important mechanisms: attention and memory, unsupervised learning, hierarchical RL, multi-agent RL, relational RL, and learning to learn; and twelve applications: games, robotics, natural language processing (NLP), computer vision, finance, business management, healthcare, education, energy, transportation, computer systems, and, science, engineering, and art.

Deep reinforcement learning has made exceptional achievements, e.g., DQN applying to Atari games ignited this wave of deep RL, and AlphaGo (Zero) and DeepStack set landmarks for AI. Deep RL has many newly invented algorithms/architectures, e.g., DQN, A3C, TRPO, PPO, DDPG, Trust-PCL, GPS, UNREAL, DNC, etc. Moreover, deep RL has been enjoying very abound and diverse applications, e.g., Capture the Flag, Dota 2, StarCraft II, robotics, character animation, conversational AI, neural architecture design (AutoML), data center cooling, recommender systems, data augmentation, model compression, combinatorial optimization, program synthesis, theorem proving, medical imaging, music, and chemical retrosynthesis, so on and so forth. A blog is dedicated to Reinforcement Learning applications.

In general, RL is probably helpful, if a problem can be regarded as or transformed to a sequential decision making problem, and states, actions, maybe rewards, can be constructed; sometimes the problem may not appear as an RL problem on the surface. Roughly speaking, if a task involves some manual designed “strategy”, then there is a chance for reinforcement learning to help. Creativity would push the frontiers of deep RL further with respect to core elements, important mechanisms, and applications.

Albeit being so successful, deep RL encounters many issues, like credit assignment, sparse reward, sample efficiency, instability, divergence, interpretability, safety, etc.; even reproducibility is an issue.

Six research directions are proposed as both challenges and opporrtunities. There are already some progress in these directions, e.g., Dopamine, TStarBots, MOREL, GQN, visual reasoning, neural-symbolic learning, UPN, causal InfoGAN, meta-gradient RL, along with many applications as above.

- systematic, comparative study of deep RL algorithms

- “solve” multi-agent problems

- learn from entities, but not just raw inputs

- design an optimal representation for RL

- AutoRL

- develop killer applications for (deep) RL

It is desirable to integrate RL more deeply with AI, with more intelligence in the end-to-end mapping from raw inputs to decisions, to incorporate knowledge, to have common sense, to be more efficient, to be more interpretable, and to avoid obvious mistakes, etc., rather than working as a blackbox.

Deep learning and reinforcement learning, being selected as one of the MIT Technology Review 10 Breakthrough Technologies in 2013 and 2017 respectively, will play their crucial roles in achieving artificial general intelligence. David Silver proposed a conjecture: artificial intelligence = reinforcement learning + deep learning (AI = RL + DL). We will see both deep learning and reinforcement learning prospering in the coming years and beyond. Deep learning is exploding. It is the right time to nurture, educate and lead the market for reinforcement learning.

Deep learning, in this third wave of AI, will have deeper influences, as we have already seen from its many achievements. Reinforcement learning, as a more general learning and decision making paradigm, will deeply influence deep learning, machine learning, and artificial intelligence in general.

Introducing Deep Reinforcement的更多相关文章

- 论文笔记之:Asynchronous Methods for Deep Reinforcement Learning

Asynchronous Methods for Deep Reinforcement Learning ICML 2016 深度强化学习最近被人发现貌似不太稳定,有人提出很多改善的方法,这些方法有很 ...

- (转) Playing FPS games with deep reinforcement learning

Playing FPS games with deep reinforcement learning 博文转自:https://blog.acolyer.org/2016/11/23/playing- ...

- (zhuan) Deep Reinforcement Learning Papers

Deep Reinforcement Learning Papers A list of recent papers regarding deep reinforcement learning. Th ...

- Learning Roadmap of Deep Reinforcement Learning

1. 知乎上关于DQN入门的系列文章 1.1 DQN 从入门到放弃 DQN 从入门到放弃1 DQN与增强学习 DQN 从入门到放弃2 增强学习与MDP DQN 从入门到放弃3 价值函数与Bellman ...

- (转) Deep Reinforcement Learning: Playing a Racing Game

Byte Tank Posts Archive Deep Reinforcement Learning: Playing a Racing Game OCT 6TH, 2016 Agent playi ...

- 论文笔记之:Dueling Network Architectures for Deep Reinforcement Learning

Dueling Network Architectures for Deep Reinforcement Learning ICML 2016 Best Paper 摘要:本文的贡献点主要是在 DQN ...

- getting started with building a ROS simulation platform for Deep Reinforcement Learning

Apparently, this ongoing work is to make a preparation for futural research on Deep Reinforcement Le ...

- (转) Deep Reinforcement Learning: Pong from Pixels

Andrej Karpathy blog About Hacker's guide to Neural Networks Deep Reinforcement Learning: Pong from ...

- 论文笔记之:Deep Reinforcement Learning with Double Q-learning

Deep Reinforcement Learning with Double Q-learning Google DeepMind Abstract 主流的 Q-learning 算法过高的估计在特 ...

随机推荐

- 浏览器兼容性工具 Spoon Browser Sandbox

1.Spoon Browser Sandbox 勺浏览器沙箱 主流浏览器多(IE.Chrome.FireFox.Safari.Opera),浏览器又有很多版本:保证网页在主流浏览器中很好的显示,不可能 ...

- UI基础:UITableView表视图

表视图 UITableView,iOS中最重要的视图,随处可见. 表视图通常用来管理一组具有相同数据结构的数据. UITableView继承于UIScrollView,所以可以滚动 表视图的每条数据都 ...

- 读博 在没有导师PUSH的情况下该何去何从?

读博已有两月之久,与导师也是仅有的一面之缘,短短数分钟谈话大致总结便是看看基础知识,再然后就没有什么了,突然之间有些小懵逼.突然间感慨这就是我的博士生涯的生活,这就没有啦,以后就这么过啦?在读博士之前 ...

- OK335xS-Android mkmmc-android-ubifs.sh hacking

#/******************************************************************************* # * OK335xS-Androi ...

- 转:三种状态对象的使用及区别(Application,Session,Cookie)

Application状态对象 Application 对象是HttpApplication 类的实例,将在客户端第一期从某个特定的ASP.NET应用程序虚拟目录中请求任何URL 资源时创建.对于We ...

- SUST OJ 1671: 数字拼图

1671: 数字拼图 时间限制: 1 Sec 内存限制: 16 MB提交: 34 解决: 19[提交][状态][讨论版] 题目描述 拼图游戏即在任意一个N*N(N>1)的拼图中,会把一张完整 ...

- Battle City 优先队列+bfs

Many of us had played the game "Battle city" in our childhood, and some people (like me) e ...

- Vue2.x directive自定义指令

directive自定义指令 除了默认设置的核心指令( v-model 和 v-show ),Vue 也允许注册自定义指令. 注意,在 Vue2.0 里面,代码复用的主要形式和抽象是组件——然而,有的 ...

- windows 版 nginx 运行错误的一些解决方法

1. 关于文件夹的中文的问题. 错误的截图如下: 看得到这个 failed (1113: No mapping for the Unicode character exists in the targ ...

- NOSQL之MONGODB

MongoDB 基于分布式文件存储的数据库.由 C++ 语言编写.旨在为 WEB 应用提供可扩展的高性能数据存储解决方案,它是一个介于关系数据库和非关系数据库之间的产品,是非关系数据库当中功能最丰富, ...