Appendix 014. Kubernetes Prometheus+Grafana+EFK+Kibana+GlusterFS Integrated Solution

1 GlusterFS Storage Cluster Deployment

1.1 Architecture Overview

1.2 Planning

| Host | IP | Disk | Notes |
| --- | --- | --- | --- |
| k8smaster01 | 172.24.8.71 | - | Kubernetes master node; Heketi host |
| k8smaster02 | 172.24.8.72 | - | Kubernetes master node; Heketi host |
| k8smaster03 | 172.24.8.73 | - | Kubernetes master node; Heketi host |
| k8snode01 | 172.24.8.74 | sdb | Kubernetes worker node; GlusterFS node 01 |
| k8snode02 | 172.24.8.75 | sdb | Kubernetes worker node; GlusterFS node 02 |
| k8snode03 | 172.24.8.76 | sdb | Kubernetes worker node; GlusterFS node 03 |

1.3 Install GlusterFS

1.4 Add Nodes to the Trusted Pool

1.5 Install Heketi

1.6 Configure Heketi

    {
      "_port_comment": "Heketi Server Port Number",
      "port": "",
      "_use_auth": "Enable JWT authorization. Please enable for deployment",
      "use_auth": true,
      "_jwt": "Private keys for access",
      "jwt": {
        "_admin": "Admin has access to all APIs",
        "admin": {
          "key": "admin123"
        },
        "_user": "User only has access to /volumes endpoint",
        "user": {
          "key": "xianghy"
        }
      },
      "_glusterfs_comment": "GlusterFS Configuration",
      "glusterfs": {
        "_executor_comment": [
          "Execute plugin. Possible choices: mock, ssh",
          "mock: This setting is used for testing and development.",
          " It will not send commands to any node.",
          "ssh: This setting will notify Heketi to ssh to the nodes.",
          " It will need the values in sshexec to be configured.",
          "kubernetes: Communicate with GlusterFS containers over",
          " Kubernetes exec api."
        ],
        "executor": "ssh",
        "_sshexec_comment": "SSH username and private key file information",
        "sshexec": {
          "keyfile": "/etc/heketi/heketi_key",
          "user": "root",
          "port": "",
          "fstab": "/etc/fstab"
        },
        "_db_comment": "Database file name",
        "db": "/var/lib/heketi/heketi.db",
        "_loglevel_comment": [
          "Set log level. Choices are:",
          " none, critical, error, warning, info, debug",
          "Default is warning"
        ],
        "loglevel" : "warning"
      }
    }
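With `use_auth` set to `true`, every Heketi API request has to carry a JWT signed with one of the keys in the `jwt` section. The following is a minimal sketch of how such a token could be built by hand, assuming the claim set described in the Heketi API documentation (`iss`, `iat`, `exp`, and `qsh` as the SHA-256 hash of `METHOD&path`) and HS256 signing. The helper names `b64url` and `heketi_token` and the `/clusters` path are illustrative choices, not part of this configuration; verify the claims against the Heketi version in use.

```python
import base64
import hashlib
import hmac
import json
import time


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWT requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def heketi_token(user: str, key: str, method: str, path: str, ttl: int = 60) -> str:
    """Build an HS256-signed JWT for a single Heketi request (hypothetical helper)."""
    now = int(time.time())
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {
        "iss": user,        # "admin" or "user", matching the jwt section
        "iat": now,
        "exp": now + ttl,
        # Assumption: qsh binds the token to one request, sha256("METHOD&path")
        "qsh": hashlib.sha256(f"{method}&{path}".encode()).hexdigest(),
    }
    signing_input = ".".join(
        b64url(json.dumps(part, separators=(",", ":")).encode())
        for part in (header, claims)
    )
    signature = hmac.new(key.encode(), signing_input.encode(), hashlib.sha256).digest()
    return f"{signing_input}.{b64url(signature)}"


# Admin key taken from the configuration above.
token = heketi_token("admin", "admin123", "GET", "/clusters")
print("Authorization: bearer " + token)
```

In day-to-day use `heketi-cli` builds this token itself from the user name and key, so the sketch is mainly useful for understanding what the two keys are for.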

1.7 Configure Passwordless SSH (Key-Based Login)

1.8 Start Heketi

1.9 Configure the Heketi Topology

    {
      "clusters": [
        {
          "nodes": [
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "k8snode01"
                  ],
                  "storage": [
                    "172.24.8.74"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/sdb"
              ]
            },
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "k8snode02"
                  ],
                  "storage": [
                    "172.24.8.75"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/sdb"
              ]
            },
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "k8snode03"
                  ],
                  "storage": [
                    "172.24.8.76"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/sdb"
              ]
            }
          ]
        }
      ]
    }
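The topology file repeats the same structure for every node, so it can be generated rather than typed by hand. The sketch below rebuilds the JSON above from the worker nodes in the planning table; the `NODES` list and the `build_topology` helper are illustrative names, and a single zone is assumed as in the original file.

```python
import json

# Worker nodes from the planning table: (management hostname, storage IP, raw devices)
NODES = [
    ("k8snode01", "172.24.8.74", ["/dev/sdb"]),
    ("k8snode02", "172.24.8.75", ["/dev/sdb"]),
    ("k8snode03", "172.24.8.76", ["/dev/sdb"]),
]


def build_topology(nodes, zone=1):
    """Return a Heketi topology dict with one cluster containing all given nodes."""
    return {
        "clusters": [
            {
                "nodes": [
                    {
                        "node": {
                            "hostnames": {"manage": [host], "storage": [ip]},
                            "zone": zone,
                        },
                        "devices": list(devices),
                    }
                    for host, ip, devices in nodes
                ]
            }
        ]
    }


topology = build_topology(NODES)
print(json.dumps(topology, indent=2))
```

Redirecting the output to a file gives something that can be passed to `heketi-cli topology load --json=<file>`. Adding a fourth node or a second disk then means changing one line in `NODES`.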

1.10 Cluster Management and Testing

1.11 Create the StorageClass

    apiVersion: v1
    kind: Secret
    metadata:
      name: heketi-secret
      namespace: heketi
    data:
      key: YWRtaW4xMjM=
    type: kubernetes.io/glusterfs
    ---
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: ghstorageclass
    parameters:
      resturl: "http://172.24.8.71:8080"
      clusterid: "ad0f81f75f01d01ebd6a21834a2caa30"
      restauthenabled: "true"
      restuser: "admin"
      secretName: "heketi-secret"
      secretNamespace: "heketi"
      volumetype: "replicate:3"
    provisioner: kubernetes.io/glusterfs
    reclaimPolicy: Delete
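The `key` field of the Secret is not an arbitrary string: values under `data` must be base64-encoded, and here it is the encoded form of the Heketi admin key (`jwt.admin.key` in section 1.6). A small sketch showing the relationship:

```python
import base64

admin_key = "admin123"  # jwt.admin.key from the Heketi configuration in 1.6
encoded = base64.b64encode(admin_key.encode("utf-8")).decode("ascii")
print(encoded)  # prints YWRtaW4xMjM=

# Decoding the value stored in the Secret gives back the same key.
decoded = base64.b64decode("YWRtaW4xMjM=").decode("utf-8")
print(decoded)  # prints admin123
```

If the admin key in the Heketi configuration changes, the Secret has to be regenerated the same way, otherwise volume provisioning through the StorageClass fails authentication.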

2 Cluster Monitoring with Metrics Server

2.1 Enable the Aggregation Layer

2.2 Obtain the Deployment Files

    ……
            image: mirrorgooglecontainers/metrics-server-amd64:v0.3.6    # switched to a mirror reachable from mainland China
            command:
            - /metrics-server
            - --metric-resolution=30s
            - --kubelet-insecure-tls
            - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP    # add the command block above
    ……

2.3 Deploy

2.4 Verify

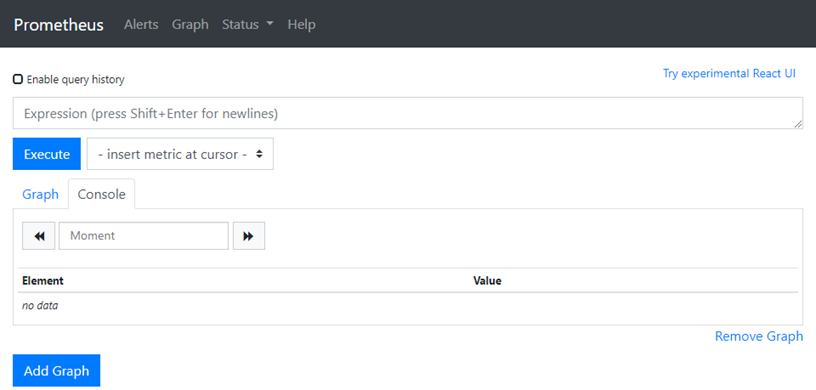

3 Prometheus Deployment

3.1 Obtain the Deployment Files

3.2 Create the Namespace

    apiVersion: v1
    kind: Namespace
    metadata:
      name: monitoring

3.3 Create the RBAC Resources

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: prometheus
    rules:
    - apiGroups: [""]
      resources:
      - nodes
      - nodes/proxy
      - services
      - endpoints
      - pods
      verbs: ["get", "list", "watch"]
    - apiGroups:
      - extensions
      resources:
      - ingresses
      verbs: ["get", "list", "watch"]
    - nonResourceURLs: ["/metrics"]
      verbs: ["get"]
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: prometheus
      namespace: monitoring    # only the namespace needs to be changed
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
    - kind: ServiceAccount
      name: prometheus
      namespace: monitoring    # only the namespace needs to be changed

3.4 Create the Prometheus ConfigMap

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: prometheus-server-conf
      labels:
        name: prometheus-server-conf
      namespace: monitoring    # change the namespace
    ……

3.5 Create the PersistentVolumeClaim

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: prometheus-pvc
      namespace: monitoring
      annotations:
        volume.beta.kubernetes.io/storage-class: ghstorageclass
    spec:
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 5Gi

3.6 Deploy Prometheus

    apiVersion: apps/v1    # apps/v1beta2 is no longer served as of Kubernetes 1.16
    kind: Deployment
    metadata:
      labels:
        name: prometheus-deployment
      name: prometheus-server
      namespace: monitoring
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: prometheus-server
      template:
        metadata:
          labels:
            app: prometheus-server
        spec:
          containers:
          - name: prometheus-server
            image: prom/prometheus:v2.14.0
            command:
            - "/bin/prometheus"
            args:
            - "--config.file=/etc/prometheus/prometheus.yml"
            - "--storage.tsdb.path=/prometheus/"
            - "--storage.tsdb.retention=72h"
            ports:
            - containerPort: 9090
              protocol: TCP
            volumeMounts:
            - name: prometheus-config-volume
              mountPath: /etc/prometheus/
            - name: prometheus-storage-volume
              mountPath: /prometheus/
          serviceAccountName: prometheus
          imagePullSecrets:
          - name: regsecret
          volumes:
          - name: prometheus-config-volume
            configMap:
              defaultMode: 420
              name: prometheus-server-conf
          - name: prometheus-storage-volume
            persistentVolumeClaim:
              claimName: prometheus-pvc

3.7 Create the Prometheus Service

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: prometheus-service
      name: prometheus-service
      namespace: monitoring
    spec:
      type: NodePort
      selector:
        app: prometheus-server
      ports:
      - port: 9090
        targetPort: 9090
        nodePort: 30001

3.8 Verify Prometheus
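Because the Service is of type NodePort, Prometheus should be reachable on port 30001 of any cluster node. One way to check it without a browser is the Prometheus HTTP API's instant-query endpoint, `/api/v1/query`. The sketch below assumes k8smaster01 (172.24.8.71) as the entry point; `instant_query_url`, `run_query`, and `main` are illustrative helper names.

```python
import json
import urllib.parse
import urllib.request

# Assumption: a NodePort Service answers on every node; this IP is k8smaster01.
PROMETHEUS_URL = "http://172.24.8.71:30001"


def instant_query_url(base_url: str, promql: str) -> str:
    """Build the URL for the Prometheus instant-query endpoint."""
    query = urllib.parse.urlencode({"query": promql})
    return f"{base_url.rstrip('/')}/api/v1/query?{query}"


def run_query(base_url: str, promql: str, timeout: float = 5.0) -> list:
    """Run an instant query and return the list of result samples."""
    with urllib.request.urlopen(instant_query_url(base_url, promql), timeout=timeout) as resp:
        body = json.load(resp)
    if body.get("status") != "success":
        raise RuntimeError(f"query failed: {body}")
    return body["data"]["result"]


def main() -> None:
    """Print each scrape target's job label and its `up` value (1 = reachable)."""
    for sample in run_query(PROMETHEUS_URL, "up"):
        print(sample["metric"].get("job"), sample["value"][1])


# Only the URL is printed here; call main() from a host that can reach the cluster.
print(instant_query_url(PROMETHEUS_URL, "up"))
```

An empty result list from `main()` usually means the ConfigMap contains no working scrape jobs, while a connection error points at the Service or the Pod itself.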

4 Grafana Deployment

4.1 Obtain the Deployment Files

4.2 Create the PersistentVolumeClaim

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: grafana-data-pvc
      namespace: monitoring
      annotations:
        volume.beta.kubernetes.io/storage-class: ghstorageclass
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 5Gi

4.3 Deploy Grafana

    apiVersion: apps/v1    # extensions/v1beta1 Deployments are no longer served as of Kubernetes 1.16
    kind: Deployment
    metadata:
      name: monitoring-grafana
      namespace: monitoring
    spec:
      replicas: 1
      selector:              # apps/v1 requires an explicit selector matching the Pod labels
        matchLabels:
          k8s-app: grafana
      template:
        metadata:
          labels:
            task: monitoring
            k8s-app: grafana
        spec:
          containers:
          - name: grafana
            image: grafana/grafana:6.5.0
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 3000
              protocol: TCP
            volumeMounts:
            - mountPath: /var/lib/grafana
              name: grafana-storage
            env:
            - name: INFLUXDB_HOST
              value: monitoring-influxdb
            - name: GF_SERVER_HTTP_PORT
              value: ""
            - name: GF_AUTH_BASIC_ENABLED
              value: "false"
            - name: GF_AUTH_ANONYMOUS_ENABLED
              value: "true"
            - name: GF_AUTH_ANONYMOUS_ORG_ROLE
              value: Admin
            - name: GF_SERVER_ROOT_URL
              value: /
            readinessProbe:
              httpGet:
                path: /login
                port: 3000
          volumes:
          - name: grafana-storage
            persistentVolumeClaim:
              claimName: grafana-data-pvc
          nodeSelector:
            node-role.kubernetes.io/master: "true"
          tolerations:
          - key: "node-role.kubernetes.io/master"
            effect: "NoSchedule"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        kubernetes.io/cluster-service: 'true'
        kubernetes.io/name: monitoring-grafana
      annotations:
        prometheus.io/scrape: 'true'
        prometheus.io/tcp-probe: 'true'
        prometheus.io/tcp-probe-port: '80'
      name: monitoring-grafana
      namespace: monitoring
    spec:
      type: NodePort
      ports:
      - port: 80
        targetPort: 3000
        nodePort: 30002
      selector:
        k8s-app: grafana

4.4 Verify Grafana

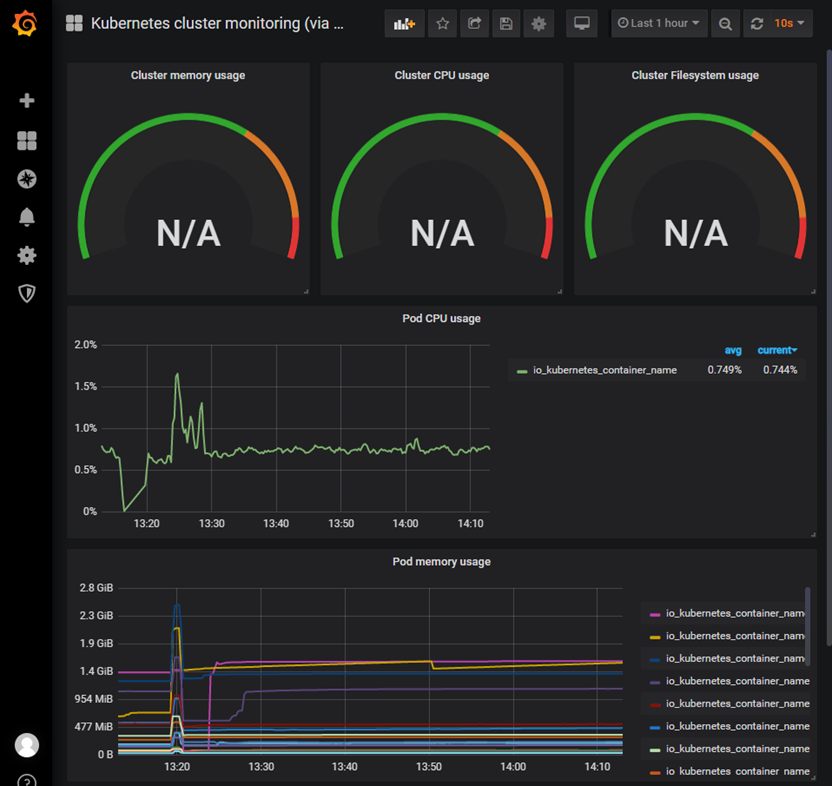

4.5 Configure Grafana

- Add a data source: omitted

- Create users: omitted
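The data source step can also be done through Grafana's HTTP API (`POST /api/datasources`) instead of the web UI. The sketch below is one possible way to do it, assuming Grafana is reached through NodePort 30002 on k8smaster01 and that Prometheus is addressed by its in-cluster Service name; the data source name `Prometheus` and the helper names are illustrative. No credentials are sent because the Deployment in 4.3 enables anonymous access with the Admin role.

```python
import json
import urllib.request

# Assumptions: NodePort 30002 on k8smaster01 for Grafana, and the in-cluster
# DNS name of the prometheus-service Service (namespace monitoring, port 9090).
GRAFANA_URL = "http://172.24.8.71:30002"
PROMETHEUS_IN_CLUSTER = "http://prometheus-service.monitoring.svc:9090"


def datasource_payload(name: str, url: str) -> dict:
    """Request body for Grafana's POST /api/datasources call."""
    return {
        "name": name,
        "type": "prometheus",
        "url": url,
        "access": "proxy",  # the Grafana backend, not the browser, talks to Prometheus
        "isDefault": True,
    }


def add_datasource(grafana_url: str, payload: dict, timeout: float = 5.0) -> dict:
    """Create the data source and return Grafana's JSON response."""
    request = urllib.request.Request(
        f"{grafana_url.rstrip('/')}/api/datasources",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)


# Only the payload is printed here; call add_datasource(GRAFANA_URL, payload)
# from a host that can reach the cluster.
payload = datasource_payload("Prometheus", PROMETHEUS_IN_CLUSTER)
print(json.dumps(payload, indent=2))
```

Using `access: proxy` with the Service DNS name keeps the data source working regardless of which node the browser connects to.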

4.6 View the Monitoring Dashboards
