A True Enterprise Offline Deployment of a CDH 5.16.1 Cluster

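I. Preparation

1. The offline deployment consists of three parts:

- Offline deployment of MySQL

- Offline deployment of CM (Cloudera Manager)

- Offline source for the Parcel files

2. Planning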

| Node | MySQL | Parcel offline source | CM service processes | Big data components |
|---|---|---|---|---|
| hadoop001 | MySQL | Parcel | Activity Monitor | NN RM DN NM |
| hadoop002 | | | Alert Publisher, Event Server | DN NM |
| hadoop003 | | | Host Monitor, Service Monitor | DN NM |

3. Download sources:

Address: http://archive.cloudera.com/cdh5/

CM:

http://archive.cloudera.com/cm5/cm/5/cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz

Parcel:

http://archive.cloudera.com/cdh5/parcels/5.16.1/CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel

http://archive.cloudera.com/cdh5/parcels/5.16.1/CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha1

http://archive.cloudera.com/cdh5/parcels/5.16.1/manifest.json

JDK:

https://www.oracle.com/technetwork/java/javase/downloads/java-archive-javase8-2177648.html

MySQL

https://dev.mysql.com/downloads/mysql/5.7.html#downloads

MySQL jdbc jar

http://central.maven.org/maven2/mysql/mysql-connector-java/5.1.47/mysql-connector-java-5.1.47.jar

After downloading, rename the jar to drop the version number:

mv mysql-connector-java-5.1.47.jar mysql-connector-java.jar

Files to download:

CM: cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz

Parcel: CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel, CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha1, manifest.json

JDK: jdk-8u202-linux-x64.tar.gz

MySQL: mysql-5.7.26-el7-x86_64.tar.gz

MySQL jdbc jar: mysql-connector-java-5.1.47.jar (renamed to mysql-connector-java.jar)
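Since the target hosts have no internet access, it can help to confirm that the bundle is complete before copying it over. The following is a minimal sketch, not part of the original procedure: `required_files` and `check_bundle` are hypothetical helper names, and the JDK and MySQL archives are left out because their file names depend on the build you downloaded.

```shell
# File names taken from the download list; JDK/MySQL archives are omitted
# because their names vary with the version chosen.
required_files() {
    cat <<'EOF'
cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz
CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel
CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha1
manifest.json
mysql-connector-java.jar
EOF
}

# Print each required file missing from directory $1;
# return 1 if anything is missing, 0 otherwise.
check_bundle() {
    dir=$1
    missing=0
    for f in $(required_files); do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $f"
            missing=1
        fi
    done
    return "$missing"
}

# Usage (not run here):
#   check_bundle /root/cdh5.16.1 && echo "bundle complete"
```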

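II. Cluster Node Initialization

1. Purchase three pay-as-you-go virtual machines in the Alibaba Cloud Shanghai region

CentOS 7.2, with a minimum configuration of 2 cores and 8 GB of memory

2. Configure the hosts file on your own laptop or desktop

macOS: /etc/hosts

Windows: C:\Windows\System32\drivers\etc\hosts

Public addresses: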

10.10.10.1 hadoop001

10.10.10.2 hadoop002

10.10.10.3 hadoop003

3. Configure the hosts file on all nodes

Private (intranet) addresses:

echo "172.19.7.96 hadoop001">> /etc/hosts

echo "172.19.7.98 hadoop002">> /etc/hosts

echo "172.19.7.97 hadoop003">> /etc/hosts

4. Stop the firewall on all nodes and flush its rules

systemctl stop firewalld

systemctl disable firewalld

iptables -F

5. Disable SELinux on all nodes

vi /etc/selinux/config

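Change SELINUX=enforcing to SELINUX=disabled

A reboot is required for the change to take effect

6. Set a consistent time zone and synchronize clocks on all nodes

6.1. Time zone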

[root@hadoop001 ~]# date

Sat May 11 10:07:53 CST 2019

[root@hadoop001 ~]# timedatectl

Local time: Sat 2019-05-11 10:10:31 CST

Universal time: Sat 2019-05-11 02:10:31 UTC

RTC time: Sat 2019-05-11 10:10:29

Time zone: Asia/Shanghai (CST, +0800)

NTP enabled: yes

NTP synchronized: yes

RTC in local TZ: yes

DST active: n/a

View the command help:

[root@hadoop001 ~]# timedatectl --help

timedatectl [OPTIONS...] COMMAND ...

Query or change system time and date settings.

-h --help Show this help message

--version Show package version

--no-pager Do not pipe output into a pager

--no-ask-password Do not prompt for password

-H --host=[USER@]HOST Operate on remote host

-M --machine=CONTAINER Operate on local container

--adjust-system-clock Adjust system clock when changing local RTC mode

Commands:

status Show current time settings

set-time TIME Set system time

set-timezone ZONE Set system time zone

list-timezones Show known time zones

set-local-rtc BOOL Control whether RTC is in local time

set-ntp BOOL Control whether NTP is enabled

List the available time zones:

[root@hadoop001 ~]# timedatectl list-timezones

Africa/Abidjan

Africa/Accra

Africa/Addis_Ababa

Africa/Algiers

Africa/Asmara

Africa/Bamako

Set the Asia/Shanghai time zone on all nodes:

[root@hadoop001 ~]# timedatectl set-timezone Asia/Shanghai

[root@hadoop002 ~]# timedatectl set-timezone Asia/Shanghai

[root@hadoop003 ~]# timedatectl set-timezone Asia/Shanghai

6.2. Time synchronization

Install ntp on all nodes:

[root@hadoop001 ~]# yum install -y ntp

Choose hadoop001 as the ntp master node:

[root@hadoop001 ~]# vi /etc/ntp.conf

#time

server 0.asia.pool.ntp.org

server 1.asia.pool.ntp.org

server 2.asia.pool.ntp.org

server 3.asia.pool.ntp.org

#Fall back to the local hardware clock when the external time sources are unavailable

server 127.127.1.0 iburst #local clock

#Network whose machines are allowed to synchronize time from this node

restrict 172.19.7.0 mask 255.255.255.0 nomodify notrap

Start ntpd and check its status:

[root@hadoop001 ~]# systemctl start ntpd

[root@hadoop001 ~]# systemctl status ntpd

● ntpd.service - Network Time Service

Loaded: loaded (/usr/lib/systemd/system/ntpd.service; enabled; vendor preset: disabled)

Active: active (running) since Sat 2019-05-11 10:15:00 CST; 11min ago

Main PID: 18518 (ntpd)

CGroup: /system.slice/ntpd.service

└─18518 /usr/sbin/ntpd -u ntp:ntp -g

May 11 10:15:00 hadoop001 systemd[1]: Starting Network Time Service...

May 11 10:15:00 hadoop001 ntpd[18518]: proto: precision = 0.088 usec

May 11 10:15:00 hadoop001 ntpd[18518]: 0.0.0.0 c01d 0d kern kernel time sync enabled

May 11 10:15:00 hadoop001 systemd[1]: Started Network Time Service.

Verify:

[root@hadoop001 ~]# ntpq -p

remote refid st t when poll reach delay offset jitter

==============================================================================

LOCAL(0) .LOCL. 10 l 726 64 0 0.000 0.000 0.000

On the other (slave) nodes, stop and disable the ntpd service, then sync from hadoop001:

[root@hadoop002 ~]# systemctl stop ntpd

[root@hadoop002 ~]# systemctl disable ntpd

Removed symlink /etc/systemd/system/multi-user.target.wants/ntpd.service.

[root@hadoop002 ~]# /usr/sbin/ntpdate hadoop001

11 May 10:29:22 ntpdate[9370]: adjust time server 172.19.7.96 offset 0.000867 sec

#Sync time from hadoop001 every day at midnight

[root@hadoop002 ~]# crontab -e

00 00 * * * /usr/sbin/ntpdate hadoop001
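`crontab -e` is interactive, which is awkward to repeat on every slave node. A minimal sketch of a non-interactive alternative follows; `merge_cron_entry` is a hypothetical helper name, and the approach simply rewrites the crontab text so the entry appears exactly once.

```shell
# Read an existing crontab on stdin and print it with exactly one copy
# of the entry given as $1 (any identical existing line is dropped first).
merge_cron_entry() {
    entry=$1
    # -v: invert, -x: whole-line match, -F: fixed string.
    # grep exits 1 when nothing is printed, which is not an error here.
    grep -vxF -- "$entry" || true
    printf '%s\n' "$entry"
}

# Usage on hadoop002 / hadoop003 (not run here):
#   crontab -l 2>/dev/null \
#     | merge_cron_entry '00 00 * * * /usr/sbin/ntpdate hadoop001' \
#     | crontab -
```

Running the pipeline twice leaves a single entry, so it is safe to re-run.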

[root@hadoop003 ~]# systemctl stop ntpd

[root@hadoop003 ~]# systemctl disable ntpd

Removed symlink /etc/systemd/system/multi-user.target.wants/ntpd.service.

[root@hadoop003 ~]# /usr/sbin/ntpdate hadoop001

11 May 10:29:22 ntpdate[9370]: adjust time server 172.19.7.96 offset 0.000867 sec

#Sync time from hadoop001 every day at midnight

[root@hadoop003 ~]# crontab -e

00 00 * * * /usr/sbin/ntpdate hadoop001

7. Deploy the JDK on all cluster nodes

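mkdir /usr/java

#Adjust the archive and directory names below if you downloaded a different JDK 8 build

tar -xzvf jdk-8u45-linux-x64.tar.gz -C /usr/java/

#Be sure to fix the owner and group

chown -R root:root /usr/java/jdk1.8.0_45

echo "export JAVA_HOME=/usr/java/jdk1.8.0_45" >> /etc/profile

#Single quotes keep ${JAVA_HOME} and ${PATH} unexpanded until /etc/profile is sourced

echo 'export PATH=${JAVA_HOME}/bin:${PATH}' >> /etc/profile

source /etc/profile

which java

8. Offline deployment of MySQL 5.7 on the hadoop001 node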

8.1 Extract the archive and create directories

#Extract (adjust the archive name to the MySQL 5.7 build you downloaded)

[root@hadoop001 cdh5.16.1]# tar xzvf mysql-5.7.11-linux-glibc2.5-x86_64.tar.gz -C /usr/local/

#Change directory

[root@hadoop001 cdh5.16.1]# cd /usr/local/

#Rename the directory to mysql

[root@hadoop001 local]# mv mysql-5.7.11-linux-glibc2.5-x86_64/ mysql

#Create directories

[root@hadoop001 local]# mkdir mysql/arch mysql/data mysql/tmp

8.2 Create my.cnf

rm /etc/my.cnf

vim /etc/my.cnf

[client]

port = 3306

socket = /usr/local/mysql/data/mysql.sock

default-character-set=utf8mb4

[mysqld]

port = 3306

socket = /usr/local/mysql/data/mysql.sock

skip-slave-start

skip-external-locking

key_buffer_size = 256M

sort_buffer_size = 2M

read_buffer_size = 2M

read_rnd_buffer_size = 4M

query_cache_size= 32M

max_allowed_packet = 16M

myisam_sort_buffer_size=128M

tmp_table_size=32M

table_open_cache = 512

thread_cache_size = 8

wait_timeout = 86400

interactive_timeout = 86400

max_connections = 600

# Try number of CPU's*2 for thread_concurrency

#thread_concurrency = 32

#isolation level and default engine

default-storage-engine = INNODB

transaction-isolation = READ-COMMITTED

server-id = 1739

basedir = /usr/local/mysql

datadir = /usr/local/mysql/data

pid-file = /usr/local/mysql/data/hostname.pid

#open performance schema

log-warnings

sysdate-is-now

binlog_format = ROW

log_bin_trust_function_creators=1

log-error = /usr/local/mysql/data/hostname.err

log-bin = /usr/local/mysql/arch/mysql-bin

expire_logs_days = 7

innodb_write_io_threads=16

relay-log = /usr/local/mysql/relay_log/relay-log

relay-log-index = /usr/local/mysql/relay_log/relay-log.index

relay_log_info_file= /usr/local/mysql/relay_log/relay-log.info

log_slave_updates=1

gtid_mode=OFF

enforce_gtid_consistency=OFF

# slave

slave-parallel-type=LOGICAL_CLOCK

slave-parallel-workers=4

master_info_repository=TABLE

relay_log_info_repository=TABLE

relay_log_recovery=ON

#other logs

#general_log =1

#general_log_file = /usr/local/mysql/data/general_log.err

#slow_query_log=1

#slow_query_log_file=/usr/local/mysql/data/slow_log.err

#for replication slave

sync_binlog = 500

#for innodb options

innodb_data_home_dir = /usr/local/mysql/data/

innodb_data_file_path = ibdata1:1G;ibdata2:1G:autoextend

innodb_log_group_home_dir = /usr/local/mysql/arch

innodb_log_files_in_group = 4

innodb_log_file_size = 1G

innodb_log_buffer_size = 200M

#Adjust the pool size according to production needs

innodb_buffer_pool_size = 2G

#innodb_additional_mem_pool_size = 50M #deprecated in 5.6

tmpdir = /usr/local/mysql/tmp

innodb_lock_wait_timeout = 1000

#innodb_thread_concurrency = 0

innodb_flush_log_at_trx_commit = 2

innodb_locks_unsafe_for_binlog=1

#innodb io features: add for mysql5.5.8

performance_schema

innodb_read_io_threads=4

innodb-write-io-threads=4

innodb-io-capacity=200

#purge threads change default(0) to 1 for purge

innodb_purge_threads=1

innodb_use_native_aio=on

#case-sensitive file names and separate tablespace

innodb_file_per_table = 1

lower_case_table_names=1

[mysqldump]

quick

max_allowed_packet = 128M

[mysql]

no-auto-rehash

default-character-set=utf8mb4

[mysqlhotcopy]

interactive-timeout

[myisamchk]

key_buffer_size = 256M

sort_buffer_size = 256M

read_buffer = 2M

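write_buffer = 2M

8.3 Create the user group and user

[root@hadoop001 local]# groupadd -g 101 dba

[root@hadoop001 local]# useradd -u 514 -g dba -G root -d /usr/local/mysql mysqladmin

[root@hadoop001 local]# id mysqladmin

uid=514(mysqladmin) gid=101(dba) groups=101(dba),0(root)

## Usually there is no need to set a password for mysqladmin; switch to it from root or from an LDAP user via sudo

8.4 Copy the environment configuration files

Copy the environment configuration files into the home directory of the mysqladmin user, so that its personal environment variables can be configured in the next step

cp /etc/skel/.* /usr/local/mysql

8.5 Configure environment variables

[root@hadoop001 local]# vi mysql/.bash_profile

# .bash_profile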

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

export MYSQL_BASE=/usr/local/mysql

export PATH=${MYSQL_BASE}/bin:$PATH

unset USERNAME

#stty erase ^H

# set umask to 022

umask 022

PS1=`uname -n`":"'$USER'":"'$PWD'":>"; export PS1

8.6 Set ownership and permissions, then switch to the mysqladmin user to install

[root@hadoop001 local]# chown mysqladmin:dba /etc/my.cnf

[root@hadoop001 local]# chmod 640 /etc/my.cnf

[root@hadoop001 local]# chown -R mysqladmin:dba /usr/local/mysql

[root@hadoop001 local]# chmod -R 755 /usr/local/mysql

8.7 Configure the service and enable start on boot

[root@hadoop001 local]# cd /usr/local/mysql

#Copy the service script into init.d and rename it to mysql

[root@hadoop001 mysql]# cp support-files/mysql.server /etc/rc.d/init.d/mysql

#Make it executable

[root@hadoop001 mysql]# chmod +x /etc/rc.d/init.d/mysql

#Remove the service

[root@hadoop001 mysql]# chkconfig --del mysql

#Add the service

[root@hadoop001 mysql]# chkconfig --add mysql

[root@hadoop001 mysql]# chkconfig --level 345 mysql on

8.8 Install libaio and initialize the MySQL database

[root@hadoop001 mysql]# yum -y install libaio

[root@hadoop001 mysql]# su - mysqladmin

Last login: Tue May 28 17:04:49 CST 2019 on pts/0

hadoop001:mysqladmin:/usr/local/mysql:>bin/mysqld \
> --defaults-file=/etc/my.cnf \
> --user=mysqladmin \
> --basedir=/usr/local/mysql/ \
> --datadir=/usr/local/mysql/data/ \
> --initialize

If --initialize-insecure is used instead of --initialize, a root@localhost account with an empty password is created; otherwise a root@localhost account with a generated password is created, and that password is written directly to the log-error file. (In 5.6 it was placed in ~/.mysql_secret, which is more hidden and can be confusing if you are not familiar with it.)
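Because `--initialize` writes the generated password to the error log, it can be extracted without reading the log by eye. A minimal sketch, assuming the log line has the same wording as the MySQL 5.7 message quoted in this article; `temp_root_password` is a hypothetical helper name.

```shell
# Print the temporary root password recorded in the MySQL error log $1.
# If the data directory was initialized more than once, the last match wins.
temp_root_password() {
    sed -n 's/^.*A temporary password is generated for root@localhost: //p' "$1" \
        | tail -n 1
}

# Usage (not run here), with the log-error path from my.cnf:
#   temp_root_password /usr/local/mysql/data/hostname.err
```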

8.9 Find the temporary password

#Show the password

hadoop001:mysqladmin:/usr/local/mysql/data:>cat hostname.err |grep password

2019-05-28T09:28:40.447701Z 1 [Note] A temporary password is generated for root@localhost: J=<z#diyC4fh

8.10 Start MySQL

hadoop001:mysqladmin:/usr/local/mysql:>/usr/local/mysql/bin/mysqld_safe --defaults-file=/etc/my.cnf &

[1] 21740

hadoop001:mysqladmin:/usr/local/mysql:>2019-05-28T09:38:16.127060Z mysqld_safe Logging to '/usr/local/mysql/data/hostname.err'.

2019-05-28T09:38:16.196799Z mysqld_safe Starting mysqld daemon with databases from /usr/local/mysql/data

#Press Enter twice

##Exit the mysqladmin user

##Look up the mysql process ID

[root@hadoop001 mysql]# ps -ef|grep mysql

mysqlad+ 21740 1 0 17:38 pts/0 00:00:00 /bin/sh /usr/local/mysql/bin/mysqld_safe --defaults-file=/etc/my.cnf

mysqlad+ 22557 21740 0 17:38 pts/0 00:00:00 /usr/local/mysql/bin/mysqld --defaults-file=/etc/my.cnf --basedir=/usr/local/mysql --datadir=/usr/local/mysql/data --plugin-dir=/usr/local/mysql/lib/plugin --log-error=/usr/local/mysql/data/hostname.err --pid-file=/usr/local/mysql/data/hostname.pid --socket=/usr/local/mysql/data/mysql.sock --port=3306

root 22609 9194 0 17:39 pts/0 00:00:00 grep --color=auto mysql

##Look up the mysql port by its process ID

[root@hadoop001 mysql]# netstat -nlp|grep 22557

#Switch to mysqladmin

[root@hadoop001 mysql]# su - mysqladmin

Last login: Tue May 28 17:24:45 CST 2019 on pts/0

hadoop001:mysqladmin:/usr/local/mysql:>

##Check whether mysql is running

hadoop001:mysqladmin:/usr/local/mysql:>service mysql status

MySQL running (22557)[ OK ]

8.11 Log in and change the user password

#Initial password

hadoop001:mysqladmin:/usr/local/mysql:>mysql -uroot -p'J=<z#diyC4fh'

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 2

Server version: 5.7.11-log

Copyright (c) 2000, 2016, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

#Reset the password

mysql> alter user root@localhost identified by 'ruozedata123';

mysql> GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY 'ruozedata123';

#Reload privileges

mysql> flush privileges;

8.12 Restart
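One way to do the restart is through the init script registered as the `mysql` service in step 8.7. The sketch below is an assumption about how this step could be run, not the author's recorded commands; `run`, `restart_mysql` and `DRY_RUN` are hypothetical names, and the sketch defaults to a dry run that only prints the commands.

```shell
# Print commands when DRY_RUN=1 (the default here); execute them when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Restart MySQL through the init script installed in step 8.7, then show its status.
restart_mysql() {
    run service mysql restart
    run service mysql status
}

# Usage (not run here):
#   DRY_RUN=0; restart_mysql
```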

9. Create the CDH metadata database and user (cmf), and the database and user for the amon service

create database cmf DEFAULT CHARACTER SET utf8;

create database amon DEFAULT CHARACTER SET utf8;

grant all on cmf.* TO 'cmf'@'%' IDENTIFIED BY 'Ruozedata123456!';

grant all on amon.* TO 'amon'@'%' IDENTIFIED BY 'Ruozedata123456!';

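flush privileges;

10. Deploy the MySQL jdbc jar on the hadoop001 node

mkdir -p /usr/share/java/

cp mysql-connector-java.jar /usr/share/java/

III. CDH Deployment

1. Offline deployment of the CM server and agents

1.1. Create the directory and extract the archive on all nodes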

mkdir /opt/cloudera-manager

tar -zxvf cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz -C /opt/cloudera-manager/

1.2. On all nodes, edit the agent configuration to point at the server node hadoop001

sed -i "s/server_host=localhost/server_host=hadoop001/g" /opt/cloudera-manager/cm-5.16.1/etc/cloudera-scm-agent/config.ini
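The `sed` above only matches when the current value is exactly `localhost`, so it silently does nothing on a second run or on a file that was already edited. A minimal sketch of a variant that replaces whatever value is present and then verifies the result; `set_server_host` is a hypothetical helper name, and GNU `sed -i` (as shipped with CentOS) is assumed.

```shell
# Point the agent config file $1 at Cloudera Manager server $2,
# regardless of the current server_host value, then confirm the change.
set_server_host() {
    sed -i "s/^server_host=.*/server_host=$2/" "$1" &&
        grep -q "^server_host=$2\$" "$1"
}

# Usage on every node (not run here):
#   set_server_host /opt/cloudera-manager/cm-5.16.1/etc/cloudera-scm-agent/config.ini hadoop001
```

The function returns non-zero if no `server_host=` line exists, which makes a mistyped path or an unexpected file layout visible.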

1.3. On the master node, edit the server configuration:

vi /opt/cloudera-manager/cm-5.16.1/etc/cloudera-scm-server/db.properties

com.cloudera.cmf.db.type=mysql

com.cloudera.cmf.db.host=hadoop001

com.cloudera.cmf.db.name=cmf

com.cloudera.cmf.db.user=cmf

com.cloudera.cmf.db.password=Ruozedata123456!

com.cloudera.cmf.db.setupType=EXTERNAL
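If you prefer to script this step rather than edit the file in `vi`, the same six properties can be written from variables. A minimal sketch; `write_db_properties` is a hypothetical helper name, and it overwrites the target file, so anything else in the file is lost.

```shell
# Write the six db.properties keys shown above to file $1.
# Arguments: file, db host, db name, db user, db password.
write_db_properties() {
    file=$1
    host=$2
    name=$3
    user=$4
    password=$5
    cat > "$file" <<EOF
com.cloudera.cmf.db.type=mysql
com.cloudera.cmf.db.host=$host
com.cloudera.cmf.db.name=$name
com.cloudera.cmf.db.user=$user
com.cloudera.cmf.db.password=$password
com.cloudera.cmf.db.setupType=EXTERNAL
EOF
}

# Usage on hadoop001 (not run here):
#   write_db_properties /opt/cloudera-manager/cm-5.16.1/etc/cloudera-scm-server/db.properties \
#       hadoop001 cmf cmf 'Ruozedata123456!'
```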

1.4. Create the user on all nodes

useradd --system --home=/opt/cloudera-manager/cm-5.16.1/run/cloudera-scm-server/ --no-create-home --shell=/bin/false --comment "Cloudera SCM User" cloudera-scm

1.5. Change the owner and group of the directory

chown -R cloudera-scm:cloudera-scm /opt/cloudera-manager

2. Deploy the offline parcel source on the hadoop001 node

2.1. Deploy the offline parcel source

$ mkdir -p /opt/cloudera/parcel-repo

$ ll

total 3081664

-rw-r--r-- 1 root root 2127506677 May 9 18:04 CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel

-rw-r--r-- 1 root root 41 May 9 18:03 CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha1

-rw-r--r-- 1 root root 841524318 May 9 18:03 cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz

-rw-r--r-- 1 root root 185515842 Aug 10 2017 jdk-8u144-linux-x64.tar.gz

-rw-r--r-- 1 root root 66538 May 9 18:03 manifest.json

-rw-r--r-- 1 root root 989495 May 25 2017 mysql-connector-java.jar

$ cp CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel /opt/cloudera/parcel-repo/

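#When copying, be sure to rename the file to drop the trailing 1 (.sha1 becomes .sha); otherwise CM considers the parcel above incompletely downloaded during deployment and keeps downloading it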

$ cp CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha1 /opt/cloudera/parcel-repo/CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha

$ cp manifest.json /opt/cloudera/parcel-repo/
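A corrupted or truncated parcel is another way to end up with CM stuck in the download stage, so it may be worth checking the copied parcel against its `.sha` file. A minimal sketch using `sha1sum`; `parcel_sha_matches` is a hypothetical helper name.

```shell
# Return 0 when the SHA-1 of parcel file $1 equals the hash stored in file $2.
parcel_sha_matches() {
    actual=$(sha1sum "$1" | awk '{print $1}')
    expected=$(awk 'NR==1 {print $1}' "$2")
    [ "$actual" = "$expected" ]
}

# Usage on hadoop001 (not run here):
#   cd /opt/cloudera/parcel-repo
#   parcel_sha_matches CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel \
#       CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha && echo "parcel OK"
```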

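2.2. Change the owner and group of the directory

$ chown -R cloudera-scm:cloudera-scm /opt/cloudera/

3. On all nodes, create the software installation directory and set its owner and group

mkdir -p /opt/cloudera/parcels

chown -R cloudera-scm:cloudera-scm /opt/cloudera/

4. Start the Server on the hadoop001 node

4.1. Start the server

/opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-server start

4.2. In the Alibaba Cloud web console, open port 7180 in the firewall for the hadoop001 node

4.3. Wait about 1 minute, then open http://hadoop001:7180 (username/password: admin/admin)

4.4. If the page does not open, check the server log and troubleshoot carefully based on the errors it reports

5. Start the Agent on all nodes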

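/opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-agent start

6. From here on, everything is done in the web UI

http://hadoop001:7180/

Username/password: admin/admin

7. Welcome to Cloudera Manager: End User License Terms and Conditions. Tick the checkbox.

8. Welcome to Cloudera Manager: Which edition do you want to deploy? Choose the free Cloudera Express edition.

9. Thank you for choosing Cloudera Manager and CDH

10. Specify hosts for your CDH cluster installation. Choose [Currently Managed Hosts] and select all of them.

11. Select the repository

12. Cluster installation: installing the selected Parcel

If the local offline parcel source is configured correctly, the "Download" stage completes almost instantly; the remaining stages depend on the number of nodes and the internal network conditions.

13. Inspect hosts for correctness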

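13.1. It is recommended to set /proc/sys/vm/swappiness to at most 10.

The swappiness value controls how aggressively the operating system swaps memory;

swappiness=0: use physical memory as much as possible before turning to swap space;

swappiness=100: use the swap partition aggressively and move data from memory to swap early;

On a mixed-use server, disabling swap completely is not recommended; try lowering swappiness instead.

Temporary adjustment:

sysctl vm.swappiness=10

Permanent adjustment: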

cat << EOF >> /etc/sysctl.conf

# Adjust swappiness value

vm.swappiness=10

EOF
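Appending with a heredoc adds a duplicate line every time the step is repeated. A minimal sketch of an idempotent alternative that rewrites an existing key or appends it once; `set_conf_value` is a hypothetical helper name, GNU `sed -i` is assumed, and the key is used as a regular expression, so the dot in `vm.swappiness` matches loosely.

```shell
# Set key $2 to value $3 in sysctl-style file $1:
# rewrite the line if the key is already present, otherwise append it.
set_conf_value() {
    file=$1
    key=$2
    value=$3
    if grep -q "^[[:space:]]*$key[[:space:]]*=" "$file" 2>/dev/null; then
        sed -i "s|^[[:space:]]*$key[[:space:]]*=.*|$key=$value|" "$file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# Usage on every node (not run here):
#   set_conf_value /etc/sysctl.conf vm.swappiness 10 && sysctl -p
```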

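13.2. Transparent Huge Page compaction is enabled and can cause significant performance problems; disabling this setting is recommended.

Temporary adjustment: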

echo never > /sys/kernel/mm/transparent_hugepage/defrag

echo never > /sys/kernel/mm/transparent_hugepage/enabled
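To confirm that the change took effect, read the files back: the kernel marks the active mode with square brackets, for example `always madvise [never]`. A minimal sketch that extracts the bracketed word; `thp_mode` is a hypothetical helper name.

```shell
# Print the active mode (the bracketed word) from a transparent_hugepage sysfs file $1.
thp_mode() {
    sed -n 's/.*\[\([^]]*\)\].*/\1/p' "$1"
}

# Usage (not run here):
#   thp_mode /sys/kernel/mm/transparent_hugepage/enabled
#   thp_mode /sys/kernel/mm/transparent_hugepage/defrag
```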

Permanent adjustment:

cat << EOF >> /etc/rc.d/rc.local

# Disable transparent_hugepage

echo never > /sys/kernel/mm/transparent_hugepage/defrag

echo never > /sys/kernel/mm/transparent_hugepage/enabled

EOF

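# On CentOS 7.x, the "/etc/rc.d/rc.local" file must be given execute permission

chmod +x /etc/rc.d/rc.local

14. Custom services: choose to deploy the Zookeeper, HDFS, and Yarn services

15. Customize role assignments

16. Database setup

17. Review changes; the defaults are fine

18. First run

19. Congratulations!

20. Home page

IV. Errors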

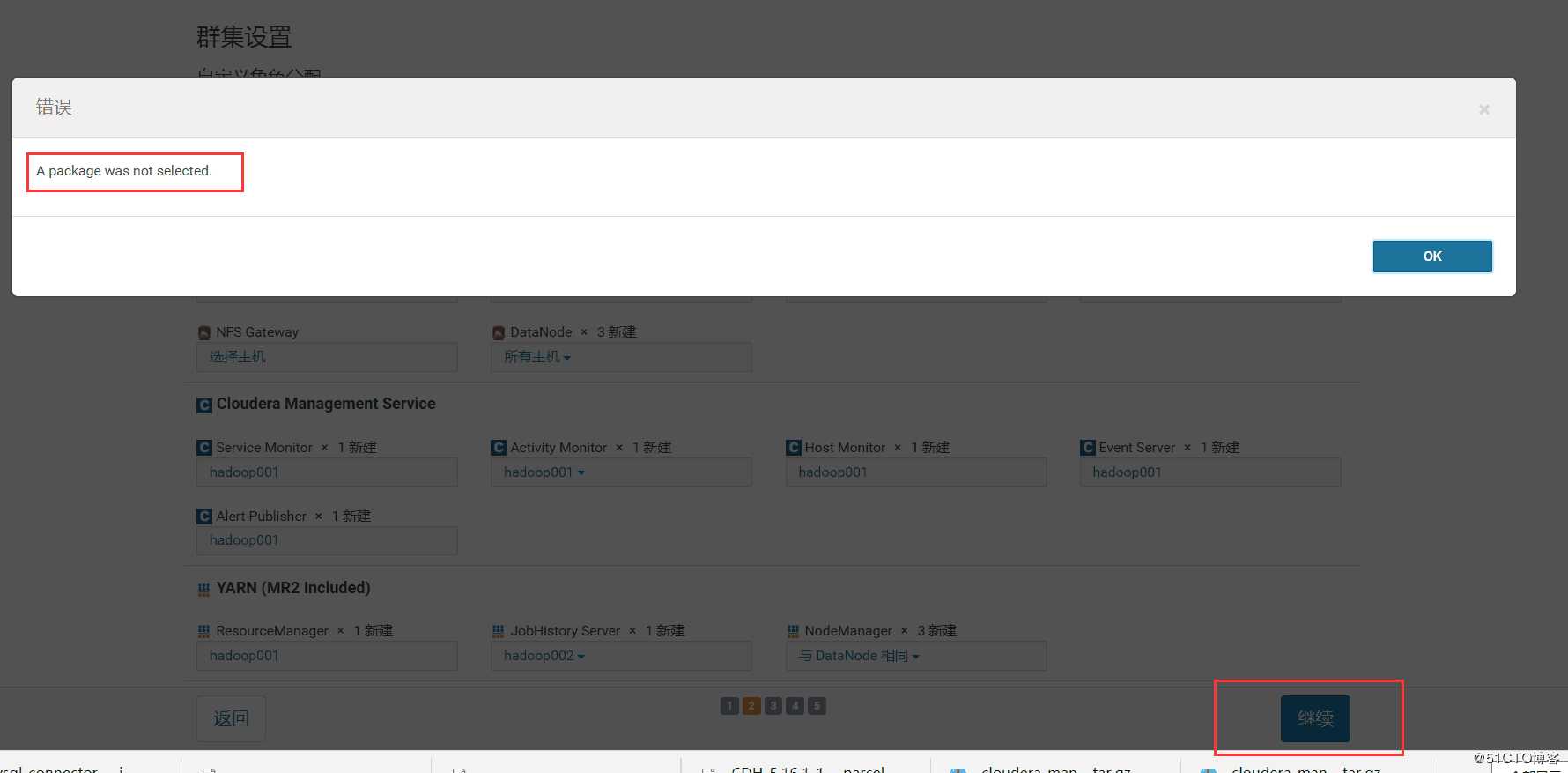

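1. An error occurs while testing the connection on the database setup page

Error message:

ERROR 226616765@scm-web-17:com.cloudera.server.web.common.JsonResponse:

JsonResponse created with throwable: com.cloudera.server.web.cmf.MessageException:

A package was not selected.

Cause:

The connection test was taking a long time, so I clicked the back button to reload the page, after which the "package was not selected" exception appeared.

Solution:

Go back to the page where the big data components are selected and repeat the steps from there; the connection test then succeeds.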
