9.3 Collecting logs on k8s with ELK

Data flow:

logfile -> filebeat -> kafka (depends on zookeeper) -> logstash -> elasticsearch -> kibana

1. Deploy the ZooKeeper cluster

1.1 Host and IP plan

| hostname | IP |
|---|---|
| zk-kfk-1 | 192.168.2.26 |
| zk-kfk-2 | 192.168.2.27 |
| zk-kfk-3 | 192.168.2.28 |

Perform the following installation and configuration steps on all three servers.

1.2 Install JDK 11

apt install openjdk-11-jdk -y

1.3 Download the package

wget https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/zookeeper-3.7.0/apache-zookeeper-3.7.0-bin.tar.gz

1.4 Deploy and configure

tar xf apache-zookeeper-3.7.0-bin.tar.gz -C /usr/local/

cd /usr/local

ln -s apache-zookeeper-3.7.0-bin/ zookeeper

cd zookeeper/conf/

cp zoo_sample.cfg zoo.cfg

mkdir -p /data/zookeeper-data

# The edited configuration file, shown with comments and blank lines stripped:

root@zk-kfk-1:/usr/local/zookeeper/conf# sed -e '/^#/d' -e '/^$/d' zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper-data

clientPort=2181

maxClientCnxns=150

autopurge.snapRetainCount=3

autopurge.purgeInterval=1

server.1=192.168.2.26:2888:3888

server.2=192.168.2.27:2888:3888

server.3=192.168.2.28:2888:3888
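One step the configuration above relies on but does not show: in replicated mode ZooKeeper reads its own server ID from a file named `myid` inside `dataDir`, and that ID has to match the N of the host's `server.N` line. The following is a minimal sketch, assuming the `server.N` entries listed above; `zk_myid_for_ip` is a made-up helper name, not part of ZooKeeper.

```shell
#!/bin/sh
# Print the N of the "server.N=<ip>:2888:3888" line that matches the given IP.
# zk_myid_for_ip is a hypothetical helper name used only for this sketch.
zk_myid_for_ip() {
    ip="$1"
    cfg="$2"
    # Escape the dots so they match literally in the sed pattern.
    esc=$(printf '%s' "$ip" | sed 's/\./\\./g')
    sed -n "s/^server\.\([0-9][0-9]*\)=${esc}:.*/\1/p" "$cfg"
}

# Demo against a throwaway copy of the server list from zoo.cfg above.
demo_cfg=$(mktemp)
cat > "$demo_cfg" <<'EOF'
server.1=192.168.2.26:2888:3888
server.2=192.168.2.27:2888:3888
server.3=192.168.2.28:2888:3888
EOF

zk_myid_for_ip 192.168.2.27 "$demo_cfg"   # prints 2
rm -f "$demo_cfg"

# On a real node (assumption: run as root, paths as used in this section):
#   zk_myid_for_ip 192.168.2.27 /usr/local/zookeeper/conf/zoo.cfg > /data/zookeeper-data/myid
```

Each of the three hosts would end up with a different single-digit `myid` (1, 2 or 3) before the service is started.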

1.5 Start the service and check its status

# /usr/local/zookeeper/bin/zkServer.sh start

root@zk-kfk-1:# /usr/local/zookeeper/bin/zkServer.sh status

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

root@zk-kfk-2:# /usr/local/zookeeper/bin/zkServer.sh status

...

Mode: follower

root@zk-kfk-3:# /usr/local/zookeeper/bin/zkServer.sh status

...

Mode: leader
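A healthy three-node ensemble should report exactly one `leader` and two `follower` nodes, as in the output above. The sketch below counts `Mode:` lines in collected `zkServer.sh status` output; how the output is gathered from the three hosts (for example over SSH) is left open, and `count_mode` is a made-up helper name.

```shell
#!/bin/sh
# Count how many "Mode: <role>" lines appear on stdin.
# grep -c exits non-zero when the count is 0, so "|| true" keeps the function from failing.
count_mode() {
    role="$1"
    grep -c "^Mode: ${role}\$" || true
}

# Sample input: the Mode lines shown above for zk-kfk-1, zk-kfk-2 and zk-kfk-3.
status_output='Mode: follower
Mode: follower
Mode: leader'

leaders=$(printf '%s\n' "$status_output" | count_mode leader)
followers=$(printf '%s\n' "$status_output" | count_mode follower)

if [ "$leaders" -eq 1 ] && [ "$followers" -eq 2 ]; then
    echo "ensemble OK: 1 leader, 2 followers"
else
    echo "unexpected ensemble state: leaders=$leaders followers=$followers"
fi
```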

2. Deploy Kafka

Kafka reuses the three ZooKeeper servers. Run the following steps on all three.

2.1 Download and extract the package

# Download

wget https://mirrors.tuna.tsinghua.edu.cn/apache/kafka/3.0.0/kafka_2.13-3.0.0.tgz

# Extract

tar xf kafka_2.13-3.0.0.tgz -C /usr/local/

cd /usr/local

ln -s kafka_2.13-3.0.0/ kafka

# Create the data directory

mkdir -p /data/kafka-logs

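2.2 Edit the configuration file

The values below are for zk-kfk-1. broker.id must be unique on each broker, and listeners must use each node's own IP.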

# vim /usr/local/kafka/config/server.properties

broker.id=26

listeners=PLAINTEXT://192.168.2.26:9092

log.dirs=/data/kafka-logs

zookeeper.connect=192.168.2.26:2181,192.168.2.27:2181,192.168.2.28:2181
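Only `broker.id` and `listeners` differ between the three brokers. The sketch below prints the per-node values, assuming that the ID is the last octet of the node's IP. The source shows only `broker.id=26` on 192.168.2.26, so the IDs 27 and 28 are an extrapolation, and `kafka_node_settings` is a made-up helper name.

```shell
#!/bin/sh
# Print the two per-node lines of server.properties for a given broker IP.
# Assumption: broker.id is the last octet of the IP (only 26 is confirmed by the source).
kafka_node_settings() {
    ip="$1"
    last_octet=${ip##*.}    # strip everything up to and including the last dot
    printf 'broker.id=%s\n' "$last_octet"
    printf 'listeners=PLAINTEXT://%s:9092\n' "$ip"
}

for ip in 192.168.2.26 192.168.2.27 192.168.2.28; do
    echo "# $ip"
    kafka_node_settings "$ip"
done
```

`log.dirs` and `zookeeper.connect` stay identical on all three nodes.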

2.3 Start the service

cd /usr/local/kafka && ./bin/kafka-server-start.sh -daemon config/server.properties

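The following invocation with absolute paths fails. Note that it also passes --daemon instead of -daemon; the flag the working command uses is -daemon, so the double dash, rather than the absolute path, is the likely cause: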

# /usr/local/kafka/bin/kafka-server-start.sh --daemon /usr/local/kafka/config/server.properties

[2021-10-28 08:51:14,097] INFO Registered kafka:type=kafka.Log4jController MBean (kafka.utils.Log4jControllerRegistration$)

[2021-10-28 08:51:14,455] ERROR Exiting Kafka due to fatal exception (kafka.Kafka$)

...
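The two start commands in this step differ in more than the path: the failing one passes `--daemon`, the working one `-daemon`. The sketch below is a simplified model, not the actual `kafka-server-start.sh`, of a start script that recognizes only `-daemon` as its first argument. Under that reading, `--daemon` is handed on as if it were the properties file, which would explain the fatal exception. `classify_first_arg` is a made-up name.

```shell
#!/bin/sh
# Simplified model of first-argument handling; NOT the real kafka-server-start.sh.
classify_first_arg() {
    case "$1" in
        -daemon) echo "daemon-flag" ;;   # recognized and consumed by the script
        *)       echo "config-file" ;;   # anything else is passed on as the properties file
    esac
}

classify_first_arg -daemon     # prints daemon-flag
classify_first_arg --daemon    # prints config-file
```

If this model matches the installed script, `/usr/local/kafka/bin/kafka-server-start.sh -daemon /usr/local/kafka/config/server.properties` would be worth trying before concluding that absolute paths are the problem.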

3. Deploy the Elasticsearch cluster

Reuse the three ZooKeeper/Kafka servers.

3.1 Download the package

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.15.1-amd64.deb

3.2 Install

dpkg -i elasticsearch-7.15.1-amd64.deb

3.3 Edit the configuration

On the other two nodes, set node.name and network.host to that node's own values.

# Configuration for ES node-1

root@zk-kfk-1:/data# cat /etc/elasticsearch/elasticsearch.yml

cluster.name: k8s-elk

node.name: node-1

path.data: /data/es/data

path.logs: /data/es/logs

network.host: 192.168.2.26

http.port: 9200

discovery.seed_hosts: ["192.168.2.26", "192.168.2.27","192.168.2.28"]

cluster.initial_master_nodes: ["192.168.2.26", "192.168.2.27","192.168.2.28"]

action.destructive_requires_name: true

# Create the data and log directories

mkdir -p /data/es/data

mkdir -p /data/es/logs

# Set the directory ownership

chown -R elasticsearch:elasticsearch /data/es/

3.4 Start the service

systemctl start elasticsearch.service

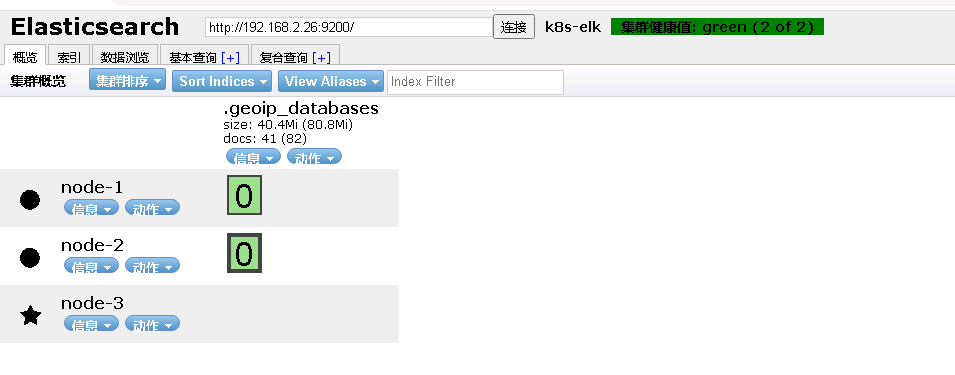
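Cluster state can also be checked from the shell through the `_cluster/health` API. The sketch below pulls the `status` field out of the JSON with `sed`; the sample response is a hand-written, abbreviated example rather than captured output, and `es_health_status` is a made-up helper name.

```shell
#!/bin/sh
# Read a _cluster/health JSON document on stdin and print the value of "status".
es_health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Hand-written sample, abbreviated; a real response has more fields.
sample='{"cluster_name":"k8s-elk","status":"green","number_of_nodes":3}'
printf '%s\n' "$sample" | es_health_status    # prints green

# Against a live node (assumes the node from section 3.3 is reachable):
#   curl -s http://192.168.2.26:9200/_cluster/health | es_health_status
```

A status of `green` means all primary and replica shards are allocated; `yellow` or `red` right after startup usually means nodes are still joining.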

3.5 Check the ES cluster status with elasticsearch-head

For loading the elasticsearch-head extension in Chrome, see:

https://blog.csdn.net/xiaolinzi176/article/details/105187311

4. Run filebeat in the pod to collect logs and ship them to Kafka

This setup runs filebeat and nginx in the same container.

4.1 Build the image

Dockerfile

FROM nginx:1.21.1

COPY nginx.conf /etc/nginx

COPY default.conf /etc/nginx/conf.d

COPY filebeat-7.15.1-amd64.deb /root

COPY start_filebeat.sh /docker-entrypoint.d

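# The official nginx image links its log files to stdout/stderr; remove them so nginx writes real files that filebeat can read
RUN rm -f /var/log/nginx/* \
    && dpkg -i /root/filebeat-7.15.1-amd64.deb \
    && rm -f /root/filebeat-7.15.1-amd64.deb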

COPY filebeat.yml /etc/filebeat

filebeat.yml configuration file

filebeat.inputs:

- type: log

  paths:

    - /var/log/nginx/access.log

  tail_files: true

  tags: ["nginx-access"]

  fields:

    log_topics: nginx-access

setup.kibana:

  host: "192.168.2.29:5601"

output.kafka:

  enabled: true

  hosts: ["192.168.2.26:9092","192.168.2.27:9092","192.168.2.28:9092"]

  topic: '%{[fields][log_topics]}'

filebeat start script

cat start_filebeat.sh

#!/bin/bash

nohup /usr/share/filebeat/bin/filebeat -c /etc/filebeat/filebeat.yml --path.home /usr/share/filebeat --path.config /etc/filebeat --path.data /var/lib/filebeat --path.logs /var/log/filebeat 2>&1 &

Files in the image build directory

filebeat-7.15.1-amd64.deb can be downloaded from the official website.

total 35016

drwxr-xr-x 2 root root 4096 Oct 29 14:42 ./

drwxr-xr-x 4 root root 4096 Oct 28 18:59 ../

-rwxr-xr-x 1 root root 137 Oct 29 14:39 build_command.sh*

-rw-r--r-- 1 root root 619 Oct 28 18:59 default.conf

-rw-r--r-- 1 root root 317 Oct 29 14:42 Dockerfile

-rw-r--r-- 1 root root 35821726 Oct 28 18:59 filebeat-7.15.1-amd64.deb

-rw-r--r-- 1 root root 518 Oct 29 14:27 filebeat.yml

-rw-r--r-- 1 root root 1270 Oct 28 19:01 nginx.conf

-rwxr-xr-x 1 root root 207 Oct 29 11:24 start_filebeat.sh*

build_command.sh script

#!/bin/bash

TAG=$1

docker build -t 192.168.1.110/web/nginx-filebeat:${TAG} .

sleep 1

docker push 192.168.1.110/web/nginx-filebeat:${TAG}
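The build script takes the tag from `$1` and does not check that it is set, so an empty argument would produce an invalid image reference. The sketch below falls back to a generated date-time tag. The `YYYY.MM.DD-HHMMSS` format is an assumption based on the single example tag used in this section, and `make_tag` and `resolve_tag` are made-up names. It only prints the image name; it does not call docker.

```shell
#!/bin/sh
# Generate a tag such as 2021.10.29-144201 from the current local time.
make_tag() {
    date +%Y.%m.%d-%H%M%S
}

# Use the given tag if it is non-empty, otherwise generate one.
resolve_tag() {
    if [ -n "$1" ]; then
        printf '%s\n' "$1"
    else
        make_tag
    fi
}

TAG=$(resolve_tag "${1:-}")
echo "would build 192.168.1.110/web/nginx-filebeat:${TAG}"
```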

Build the image and push it to the local Harbor registry

# ./build_command.sh 2021.10.29-144201

# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

192.168.1.110/web/nginx-filebeat 2021.10.29-144201 8f011ec312e5 4 hours ago 312MB

Test with docker run

# docker run -d -p 81:80 192.168.1.110/web/nginx-filebeat:2021.10.29-144201

root@k8-deploy:~/k8s-yaml/web/nginx_2/dockerfile# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

40dff03b2e4a 192.168.1.110/web/nginx-filebeat:2021.10.29-144201 "/docker-entrypoint.…" 4 hours ago Up 4 hours 0.0.0.0:81->80/tcp exciting_perlman

Enter the container and check the nginx logs

root@40dff03b2e4a:/# tail -f /var/log/nginx/*.log

==> /var/log/nginx/access.log <==

29/Oct/2021:06:44:57 +0000|172.17.0.1|localhost|GET / HTTP/1.1|200|612|0.000|905|76|-|-|curl/7.68.0

29/Oct/2021:06:51:43 +0000|127.0.0.1|localhost|GET / HTTP/1.1|200|612|0.000|905|73|-|-|curl/7.64.0

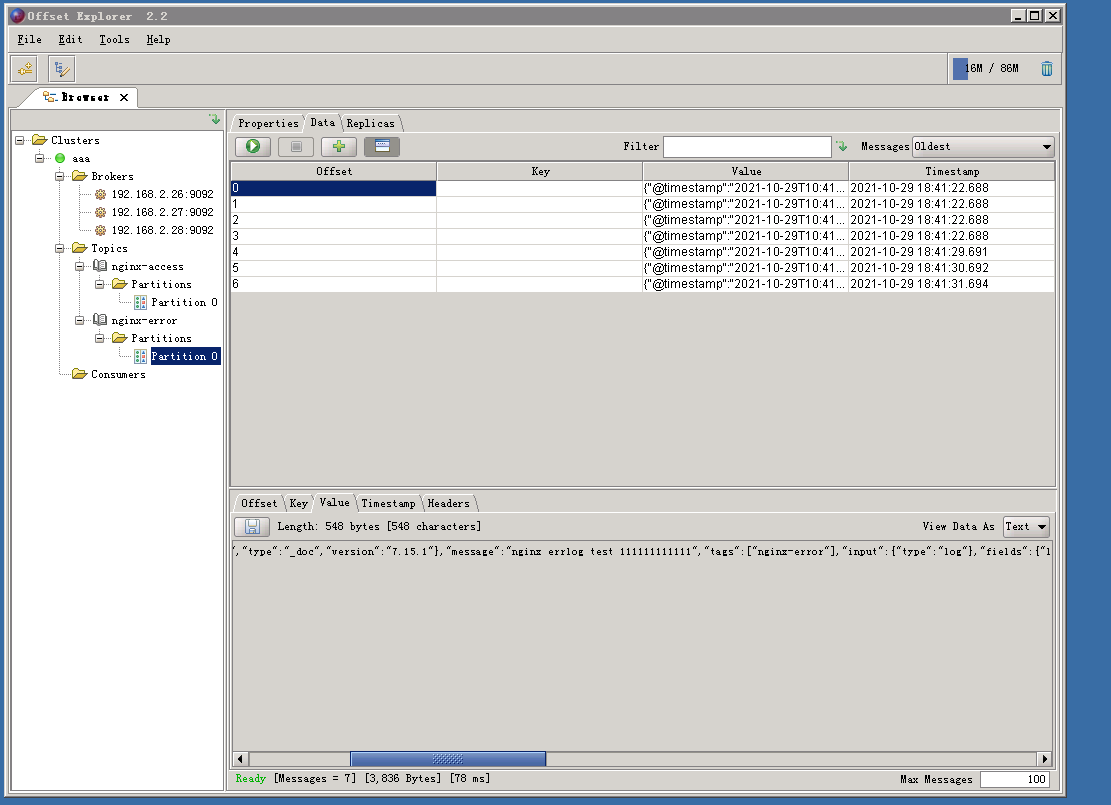

Use a Kafka client tool (offsetexplorer_64bit.exe) to check whether the data has arrived in Kafka
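As a command-line alternative to the GUI tool, the console consumer that ships with Kafka can read a few messages from the topic. The sketch below only prints the command by default so that it can be reviewed first. `DRY_RUN` and `peek_topic` are made-up names, and the install path is the one from section 2.

```shell
#!/bin/sh
KAFKA_HOME=${KAFKA_HOME:-/usr/local/kafka}
DRY_RUN=${DRY_RUN:-1}    # set DRY_RUN=0 to actually run the consumer

# Read up to 5 messages from the start of the given topic.
peek_topic() {
    topic="$1"
    # Build the command as positional parameters so it can be printed or executed as-is.
    set -- "$KAFKA_HOME/bin/kafka-console-consumer.sh" \
        --bootstrap-server 192.168.2.26:9092 \
        --topic "$topic" --from-beginning --max-messages 5
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

peek_topic nginx-access
```

Each message should be a JSON document produced by filebeat, with the raw nginx line in its `message` field.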

5. Deploy logstash to ship Kafka data to the Elasticsearch cluster

5.1 Download the logstash package

wget https://artifacts.elastic.co/downloads/logstash/logstash-7.15.1-amd64.deb

5.2 Install

dpkg -i logstash-7.15.1-amd64.deb

5.3 Write the logstash configuration for processing nginx logs

Configuration file path: /etc/logstash/conf.d/kafka-to-es.conf

input {

  kafka {

    bootstrap_servers => "192.168.2.26:9092,192.168.2.27:9092,192.168.2.28:9092"

    auto_offset_reset => "latest"

    topics => ["nginx-access"]

    consumer_threads => 2

    decorate_events => "true"

  }

}

#=============================================================================

filter {

  # Decode the JSON event written by filebeat; its "message" field holds the raw nginx line

  json {

    source => "message"

  }

  mutate {

    # Split the raw line on "|" and map each position to a named field

    split => ["message","|"]

    add_field => { "time_local" => "%{[message][0]}" }

    add_field => { "client_ip" => "%{[message][1]}" }

    add_field => { "domain_name" => "%{[message][2]}" }

    add_field => { "request" => "%{[message][3]}" }

    add_field => { "status" => "%{[message][4]}" }

    add_field => { "body_bytes_sent" => "%{[message][5]}" }

    add_field => { "request_time" => "%{[message][6]}" }

    add_field => { "bytes_sent" => "%{[message][7]}" }

    add_field => { "request_length" => "%{[message][8]}" }

    add_field => { "upstream_response_time" => "%{[message][9]}" }

    add_field => { "http_referer" => "%{[message][10]}" }

    add_field => { "user_agent" => "%{[message][11]}" }

    remove_field => ["message", "log", "file", "offset", "ecs", "version", "path", "agent", "beat", "@version", "input", "prospector", "source"]

  }

  date {

    match => [ "time_local","dd/MMM/YYYY:HH:mm:ss Z" ]

    target => "@timestamp"

  }

}

#=============================================================================

# For debugging

output {

  stdout { codec => rubydebug }

}

# For production use

#output {

#  elasticsearch {

#    hosts => [ "http://192.168.2.26:9200","http://192.168.2.27:9200","http://192.168.2.28:9200"]

#    index => "%{tags}-%{+YYYY.MM.dd}"

#  }

#}
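The split on `|` and the positional `add_field` entries in the filter above can be tried outside Logstash. The sketch below splits one of the access-log lines shown in section 4 with `awk` and prints the same field names. It mirrors only the splitting step, not the JSON decoding or the date parsing, and `split_access_line` is a made-up name.

```shell
#!/bin/sh
# Split a "|"-delimited nginx access-log line into name=value pairs,
# using the field names and order from the mutate filter.
split_access_line() {
    awk -F'|' '{
        n = split("time_local client_ip domain_name request status body_bytes_sent request_time bytes_sent request_length upstream_response_time http_referer user_agent", names, " ")
        for (i = 1; i <= n; i++) printf "%s=%s\n", names[i], $i
    }'
}

line='29/Oct/2021:06:51:43 +0000|127.0.0.1|localhost|GET / HTTP/1.1|200|612|0.000|905|73|-|-|curl/7.64.0'
printf '%s\n' "$line" | split_access_line
```

Note that awk fields are 1-based while the Logstash references `[message][0]` to `[message][11]` are 0-based, so `$1` here corresponds to `[message][0]`.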

5.4 Test the configuration file

root@zk-kfk-1:/etc/logstash/conf.d# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/kafka-to-es.conf -t

Using bundled JDK: /usr/share/logstash/jdk

OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and will likely be removed in a future release.

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[INFO ] 2021-10-29 19:05:14.181 [main] runner - Starting Logstash {"logstash.version"=>"7.15.1", "jruby.version"=>"jruby 9.2.19.0 (2.5.8) 2021-06-15 55810c552b OpenJDK 64-Bit Server VM 11.0.12+7 on 11.0.12+7 +indy +jit [linux-x86_64]"}

[INFO ] 2021-10-29 19:05:14.293 [main] writabledirectory - Creating directory {:setting=>"path.queue", :path=>"/usr/share/logstash/data/queue"}

[INFO ] 2021-10-29 19:05:14.330 [main] writabledirectory - Creating directory {:setting=>"path.dead_letter_queue", :path=>"/usr/share/logstash/data/dead_letter_queue"}

[WARN ] 2021-10-29 19:05:15.140 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2021-10-29 19:05:18.207 [LogStash::Runner] Reflections - Reflections took 166 ms to scan 1 urls, producing 120 keys and 417 values

[WARN ] 2021-10-29 19:05:20.035 [LogStash::Runner] plain - Relying on default value of `pipeline.ecs_compatibility`, which may change in a future major release of Logstash. To avoid unexpected changes when upgrading Logstash, please explicitly declare your desired ECS Compatibility mode.

Configuration OK

5.5 Run logstash in debug mode, printing the processed events to the screen

root@zk-kfk-1:/etc/logstash/conf.d# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/kafka-to-es.conf

...

5.6 Generate a few new requests inside the nginx container to test

root@40dff03b2e4a:/# curl 127.0.0.1

5.7 Check whether logstash prints the corresponding events

{

"request" => "GET / HTTP/1.1",

"client_ip" => "127.0.0.1",

"status" => "200",

"@timestamp" => 2021-10-29T11:07:16.000Z,

"request_length" => "73",

"http_referer" => "-",

"domain_name" => "localhost",

"user_agent" => "curl/7.64.0",

"bytes_sent" => "905",

"request_time" => "0.000",

"time_local" => "29/Oct/2021:11:07:16 +0000",

"fields" => {

"log_topics" => "nginx-access"

},

"host" => {

"name" => "40dff03b2e4a"

},

"upstream_response_time" => "-",

"tags" => [

[0] "nginx-access"

],

"body_bytes_sent" => "612"

}

5.8 Once the test passes, change the logstash configuration to write to the ES cluster, then start logstash as a service

# Edit kafka-to-es.conf

#output {

#  stdout { codec => rubydebug }

#}

output {

  elasticsearch {

    hosts => [ "http://192.168.2.26:9200","http://192.168.2.27:9200","http://192.168.2.28:9200"]

    index => "%{tags}-%{+YYYY.MM.dd}"

  }

}

# systemctl start logstash.service

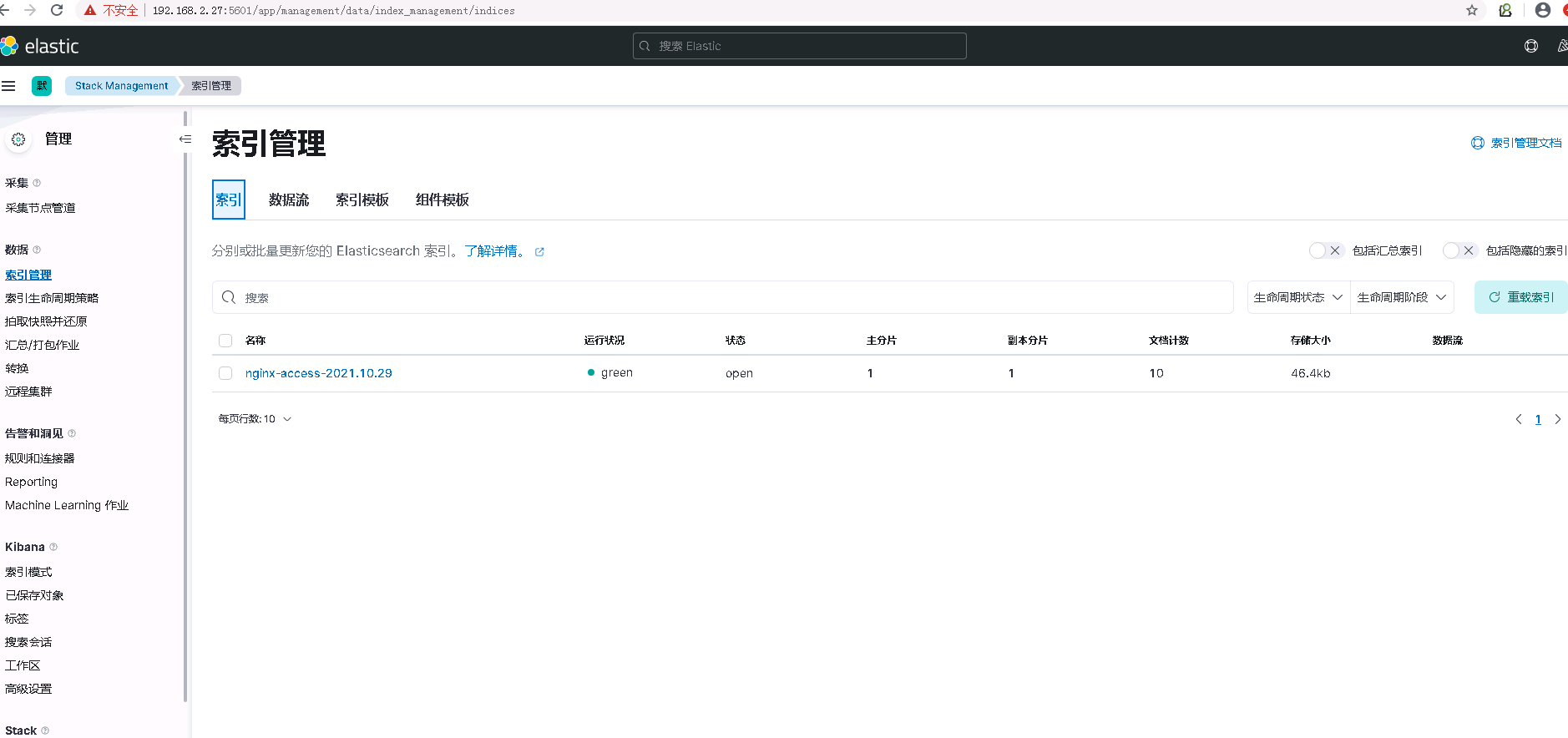

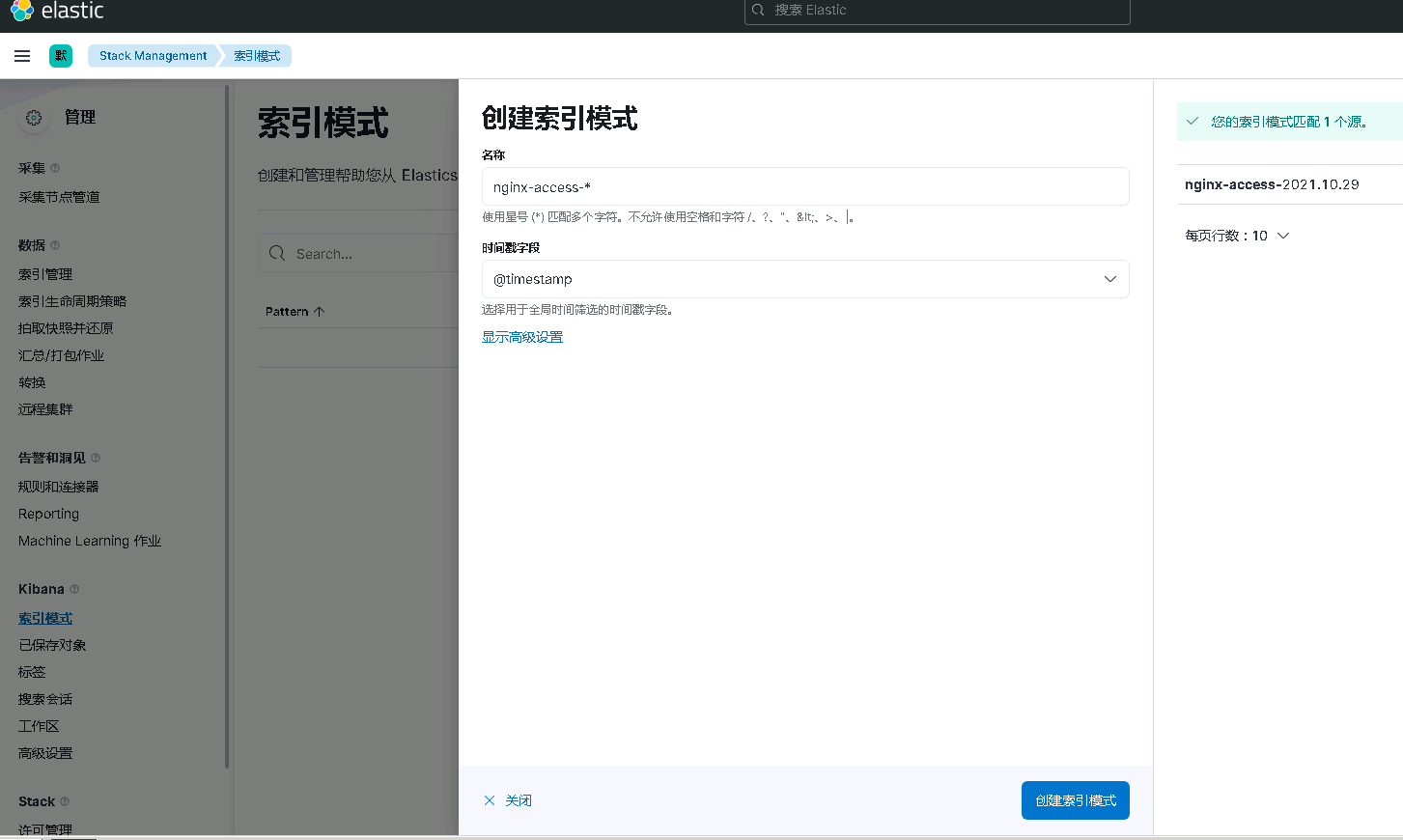

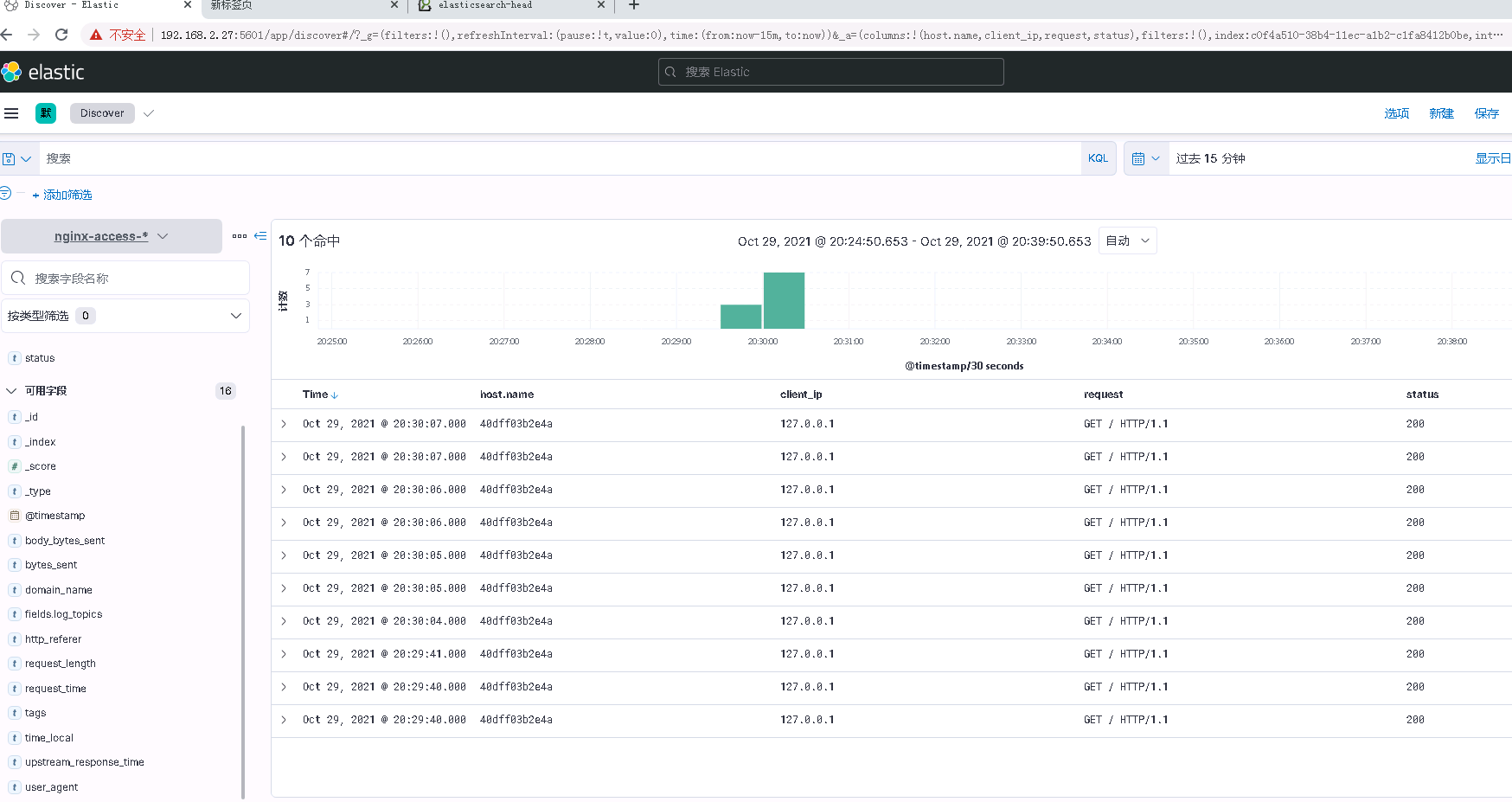
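With the elasticsearch output above, each event goes to an index named after its tag and the date of its `@timestamp` (the `%{+YYYY.MM.dd}` part is rendered from `@timestamp`, which is in UTC). The sketch below computes the expected index name for the sample event from step 5.7 so that it can be looked up in elasticsearch-head. `index_name` is a made-up helper, and it assumes a single tag per event, as in this setup.

```shell
#!/bin/sh
# Build "<tag>-YYYY.MM.dd" from a tag and an ISO 8601 UTC timestamp.
index_name() {
    tag="$1"
    ts="$2"    # e.g. 2021-10-29T11:07:16.000Z
    # Keep the date part (first 10 characters) and turn dashes into dots.
    day=$(printf '%s' "$ts" | cut -c1-10 | sed 's/-/./g')
    printf '%s-%s\n' "$tag" "$day"
}

index_name nginx-access 2021-10-29T11:07:16.000Z    # prints nginx-access-2021.10.29

# To list matching indices on a live cluster (assumes the node is reachable):
#   curl -s 'http://192.168.2.26:9200/_cat/indices/nginx-access-*?v'
```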

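6. Deploy Kibana and verify the data

6.1 Download and install the package

wget https://artifacts.elastic.co/downloads/kibana/kibana-7.15.1-amd64.deb

dpkg -i kibana-7.15.1-amd64.deb

6.2 Set the following parameters in the configuration file

# Configuration file: /etc/kibana/kibana.yml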

server.port: 5601

server.host: "192.168.2.27"

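# 192.168.2.29 is not one of the ES nodes configured in section 3 (192.168.2.26 to 192.168.2.28); unless a proxy or VIP sits at that address, point this at the ES nodes instead

elasticsearch.hosts: ["http://192.168.2.29:9200"]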

i18n.locale: "zh-CN"

6.3 Start Kibana and check its listening port

# systemctl start kibana.service

root@zk-kfk-2:~# netstat -ntlp |grep 5601

tcp 0 0 192.168.2.27:5601 0.0.0.0:* LISTEN 31969/node

6.4 Open Kibana in a browser and test
